Why TypeScript for real-time data transformation?

Using TypeScript for transformations allows us to prevent many of the common types of pipeline failures that might otherwise slip through the cracks.

Estuary Flow is a platform for creating real-time data pipelines. In addition to schlepping data from Point A to Point B, Flow is also able to reshape, filter, and join data in real time. We call this feature a derivation because its output is a Flow collection that is derived from one or more source collections via (optionally) stateful transformations. Flow currently supports two types of transforms in derivations: remote transforms using webhooks and native TypeScript transforms.

For remote transforms, Flow will call a provided HTTP(S) endpoint for each document in the source collection. But that means that you need to take care of testing, deploying, and monitoring the code that’s handling your webhook, and ensuring that it’s all in sync with the rest of the catalog. That’s fine for some use cases, but it would be nice if you didn’t have to deal with all that for every single transform. That’s why Flow has native support for running TypeScript transforms, where you get deployment, testing, and monitoring all built in.

A question we often get from people at this point is:

“Why did you choose TypeScript as the first natively supported language for transformations?”

It’s a reasonable question, especially given the prevalence of Python and Java for analytics and data pipelines. So the rest of this post will go into detail about why TypeScript made sense. This post ended up being pretty long and winding because there’s just so much to unpack here. But the short answer is that using TypeScript allows us to prevent many of the common types of pipeline failures that might otherwise slip through the cracks.

Context: Building Reliable Data Pipelines

Despite all the progress that’s been made since the days of cron jobs and system logs, reliability remains a key challenge with existing data pipelines. And when you’re building streaming data pipelines that process data within milliseconds of it being ingested, the stakes become much higher. If a streaming data pipeline outputs incorrect results, the effects could be immediate, and it could take hours to rebuild data products from their original sources once the problem is discovered. And there’s no guarantee that the incorrectness would ever be discovered, so you could be making important business decisions based on incorrect data, without even knowing it.

So when we designed Flow, we knew we had to give users a better set of tools for managing their data pipelines (called “Data Flows” in Flow-speak), so they can sleep well knowing that their pipelines are operational and outputting correct results.

Why are data pipelines so unreliable?

To understand how to improve reliability of pipelines, you need to first understand why existing data pipelines are so unreliable. Pipelines are often described in terms of an interconnected graph of processing tasks, which each read from one or more data sources and output to one or more destinations. These graphs can get pretty large, and are sometimes highly interconnected. A failure in one processing task can cause a cascading failure of many other tasks that are (often only transitively) depending on its output.

The more tasks you have, the more likely it is that at least one of them will fail. And the more connected your task dependency graph is, the more likely it is for a failure in any single task to have a huge impact on the system as a whole.
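To make the "blast radius" idea concrete, here is a minimal sketch that walks a task graph and collects everything that transitively depends on a failed task. The task names and the `readers` map are made up for illustration; this is not how Flow represents its catalog internally.

```typescript
// Hypothetical task graph: each key is a task, and each value lists
// the tasks that read that task's output directly.
const readers: Record<string, string[]> = {
  "capture-transfers": ["balances", "fraud-scores"],
  "balances": ["monthly-statements"],
  "fraud-scores": ["alerts"],
  "monthly-statements": [],
  "alerts": [],
};

// Breadth-first walk that collects every task depending on `task`,
// whether directly or only transitively.
function downstreamOf(task: string, graph: Record<string, string[]>): Set<string> {
  const affected = new Set<string>();
  const queue: string[] = [task];
  while (queue.length > 0) {
    const current = queue.shift();
    if (current === undefined) break;
    for (const next of graph[current] ?? []) {
      if (!affected.has(next)) {
        affected.add(next);
        queue.push(next);
      }
    }
  }
  return affected;
}

// A failure in the capture reaches all four other tasks,
// even though only two of them read from it directly.
console.log(Array.from(downstreamOf("capture-transfers", readers)));
```

In this toy graph, only two tasks read from the capture directly, but a failure there touches every other task.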

But it’s not just about the number of tasks that are affected by a failure. It matters how they fail. An incorrect database password will usually bring things to a halt. The impact might be huge if you have lots of tasks transitively depending on that database. But you can almost always just start things up again once you fix the configuration. The real danger is having your pipeline output incorrect results!

Outputting incorrect results is the worst thing a data pipeline could do.

– Abraham Lincoln (probably)

To really understand the impact of failures, we need to consider the difference between several failure modes. By far the most common causes of failures are:

- Misconfiguration; for example, an incorrect database password

- A bug in the code that’s processing the data

- A change in the source data; for example, a database column that used to be NOT NULL starts allowing null values

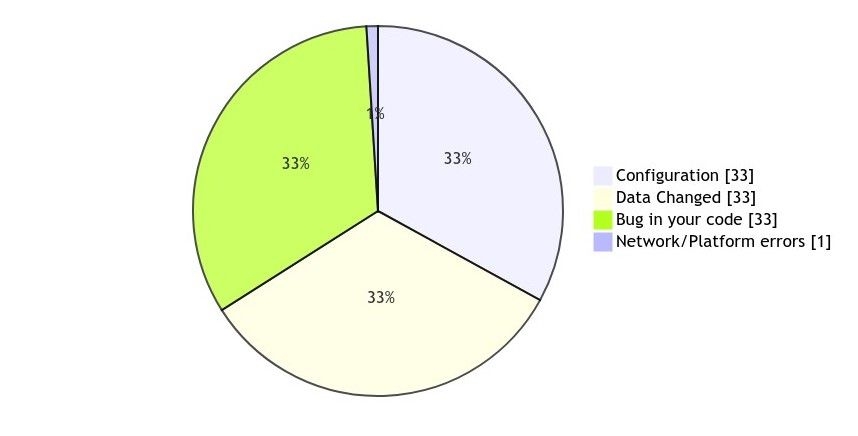

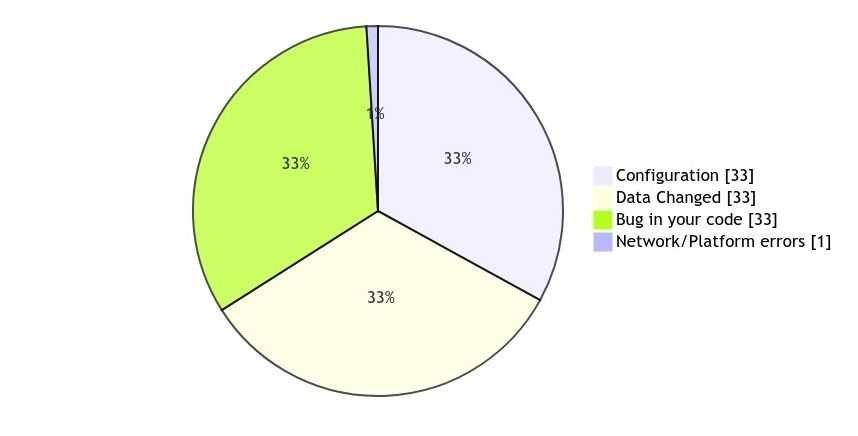

Here’s a completely made-up graph showing the relative frequency of each cause:

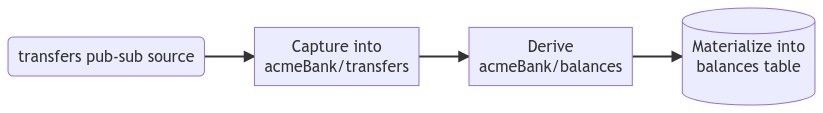

The point I’m trying to illustrate here is that in a large proportion of cases (Data Changed + Bug in your code), failures have the potential to cause incorrect outputs. If we want to make pipelines more reliable, we need better tools for preventing bugs in production code and for dealing with changing data sources. Those specific areas are so important because they are not only relatively common, but also more costly. Let’s look at a simple example pipeline taken from the Flow repo, which we’ll use throughout this post:

This pipeline starts with a pub-sub topic with events representing transfers between accounts. They look something like: {"id": 1, "sender": "alice", "recipient": "bob", "amount": 20.0}. There’s a task that captures those events and writes them to some durable storage in case we ever need to re-process them.

Now, we want to derive, or transform, them into total balance amounts for each account. We can do this with a derivation. In the end, we’ll have a table of account balances in our database that looks something like:

```plaintext
| account | balance |
+---------+---------+
| "alice" | 8847.50 |
| "bob"   | 9014.20 |
```

Testing data transformations isn't enough

Flow has built-in support for testing the data transformations that comprise your derivations. It’s one of Flow’s “killer features,” and in my opinion no other platform does it better. Flow tests work in terms of inputs to source collections and assertions on the fully-reduced output derivations.

Here’s a Flow test case for that example transformation:

```plaintext
tests:
  acmeBank/test/balancers-from-transfers:
    - ingest:
        collection: acmeBank/transfers
        documents:
          - { id: 1, sender: alice, recipient: bob, amount: 32.50 }
          - { id: 2, sender: bob, recipient: carly, amount: 10.75 }
    - verify:
        collection: acmeBank/balances
        documents:
          - { account: alice, amount: -32.50 }
          - { account: bob, amount: 21.75 }
          - { account: carly, amount: 10.75 }
```

This test adds two transfer events, and then verifies that we get the proper balances as a result. But notice what it doesn’t cover. It doesn’t cover the cases where any of the fields are null, undefined, or any other JSON type. So what happens if sender is null, or the amount is a string instead of a number? We could add test cases to verify the behavior in these cases, but it’s obviously unrealistic for anyone to write test cases for all the possible permutations of all types for all fields, even for a simple example like this.
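To see why those untested cases matter, here is a rough sketch of what untyped aggregation code does with such inputs. The `applyTransfers` function is a hypothetical stand-in that folds transfers into balances in one go. It is not Flow's reduction machinery, and the `any` type is used on purpose to mimic code with no type information.

```typescript
type Balances = Record<string, number>;

// Hypothetical, deliberately untyped aggregation: `any` switches off
// the checks that would normally reject malformed transfers.
function applyTransfers(transfers: any[]): Balances {
  const balances: Balances = {};
  for (const t of transfers) {
    // A transfer removes from the sender and adds to the recipient.
    balances[t.sender] = (balances[t.sender] ?? 0) - t.amount;
    balances[t.recipient] = (balances[t.recipient] ?? 0) + t.amount;
  }
  return balances;
}

// The two documents from the test case produce the expected balances.
const ok = applyTransfers([
  { id: 1, sender: "alice", recipient: "bob", amount: 32.5 },
  { id: 2, sender: "bob", recipient: "carly", amount: 10.75 },
]);
console.log(ok); // { alice: -32.5, bob: 21.75, carly: 10.75 }

// A null sender and a string amount do not crash anything. They quietly
// create an account named "null" and turn bob's balance into the string "010".
const bad = applyTransfers([
  { id: 3, sender: null, recipient: "bob", amount: "10" },
]);
console.log(bad); // { null: -10, bob: "010" }
```

Nothing throws in the second call, which is exactly the failure mode to worry about: the pipeline keeps running and publishes nonsense.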

Tests are an essential tool for any type of software development, but they aren’t enough to make your pipeline reliable. The main drawback of testing is that it falls on you to define all the test cases. It’s really hard to think of all the significant edge cases, and even for relatively simple transformation logic it can be extremely labor intensive to test them all.

Consider the transformation from above that aggregates a stream of account transfer events into account balances. If you wanted to test all the permutations of the basic JSON types and undefined, you’d end up with (4 fields X 7 possible types =) 28 separate test cases, which still doesn’t include other important cases such as empty strings, negative numbers, etc.
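The arithmetic can be sketched as follows. The count of 28 corresponds to varying one field at a time; if you truly wanted every combination of types across all four fields, the number grows much faster. The field and type names below simply restate the example.

```typescript
const fields = ["id", "sender", "recipient", "amount"];
// The six basic JSON types, plus undefined for a missing field.
const kinds = ["null", "boolean", "number", "string", "array", "object", "undefined"];

// One test case per (field, kind) pair, varying a single field at a time.
const singleFieldCases: Array<{ field: string; kind: string }> = [];
for (const field of fields) {
  for (const kind of kinds) {
    singleFieldCases.push({ field, kind });
  }
}
console.log(singleFieldCases.length); // 28

// Every combination of kinds across all four fields at once.
const allCombinations = Math.pow(kinds.length, fields.length);
console.log(allCombinations); // 2401
```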

Validating data transformations isn’t enough, either

Flow collections each have an associated JSON schema. All data must validate against that schema in order to be written, and it’s validated again whenever it’s read. This JSON schema effectively represents the “type” of documents in the collection. This means that Flow derivations don’t need to have test cases for all permutations of JSON types, because the type of each field can simply be constrained by the collection’s JSON schema.

Here’s the specification for the acmeBank/transfers collection, which contains its schema:

```plaintext
acmeBank/transfers:
  schema:
    type: object
    properties:
      id: { type: integer }
      sender: { type: string }
      recipient: { type: string }
      amount: { type: number }
    required: [id, sender, recipient, amount]
  key: [/id]
```

This schema says that the sender property must be a string, and that it’s required to be present (i.e. it cannot be undefined). Because Flow validates your data before invoking the transformation function, there’s simply no need to write all those tests covering scenarios where sender is a boolean, or amount is a string. Those cases are disallowed by the schema, and the system has safe and well-defined behavior when a document doesn’t match those expectations.
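As a rough illustration of what that validation buys you, here is a hand-written check that mirrors the constraints in the schema above. Flow does this with a real JSON schema validator; the `Transfer` interface and `isTransfer` function are hypothetical names used only for this sketch.

```typescript
// Hypothetical shape matching the acmeBank/transfers schema.
interface Transfer {
  id: number;
  sender: string;
  recipient: string;
  amount: number;
}

// Hand-rolled stand-in for schema validation: all four properties
// are required and each must have the type the schema declares.
function isTransfer(doc: unknown): doc is Transfer {
  if (typeof doc !== "object" || doc === null || Array.isArray(doc)) {
    return false;
  }
  const d = doc as Record<string, unknown>;
  return (
    typeof d["id"] === "number" && Number.isInteger(d["id"]) &&
    typeof d["sender"] === "string" &&
    typeof d["recipient"] === "string" &&
    typeof d["amount"] === "number"
  );
}

console.log(isTransfer({ id: 1, sender: "alice", recipient: "bob", amount: 20.0 })); // true
console.log(isTransfer({ id: 1, sender: null, recipient: "bob", amount: 20.0 })); // false
console.log(isTransfer({ id: 1, sender: "alice", recipient: "bob", amount: "20" })); // false
console.log(isTransfer({ id: 1, sender: "alice", amount: 20.0 })); // false
```

Once a document has passed a check like this, the transformation code never has to consider a boolean sender or a string amount.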

Data validation, like testing, is an essential part of reliable data pipelines. But it also isn’t quite enough to give us reliable pipelines. The issues here are more apparent when you consider changes to the source data over time.

For example, let’s say that the shape of the transfers events needed to change slightly in order to represent cash withdrawals. The team that’s responsible for the acmeBank/transfers data product updates the recipient schema to be { type: ["string", "null"] }, and a null recipient is used to represent a cash withdrawal from the sender’s account. Bueno! But how do they know whether any downstream consumers of the transfer events might be affected by the change? In our example, the transform that’s publishing account balances would need to be updated in order to account for the recipient property allowing null values.

But the tests for that wouldn’t be able to tell us that! The tests would still pass since the test inputs don’t have any documents where the property is null. In small, simple scenarios, the answer might be to have whoever changes the transfers schema manually identify and look over any consumers. But doing so is error-prone and labor intensive. We can do better!

TypeScript: the Best Language for Data Transformations?

We want to identify all downstream transforms that read from acmeBank/transfers and check whether those transforms are prepared to handle the shape of that data. And we want the ability to re-run these checks whenever the source data changes, so that we can identify any issues before deploying the changes to production.

This is where TypeScript comes in! Flow automatically generates TypeScript classes for each collection that’s used in a derivation. These generated classes are imported by your TypeScript module, and are used in the function signatures for all transformation functions in the derivation. Here’s the TypeScript function for our example:

```plaintext
import { IDerivation, Document, Register, FromTransfersSource } from 'flow/acmeBank/balances';

// Implementation for derivation examples/acmeBank.flow.yaml#/collections/acmeBank~1balances/derivation.
export class Derivation implements IDerivation {
    fromTransfersPublish(source: FromTransfersSource, _register: Register, _previous: Register): Document[] {
        return [
            // A transfer removes from the sender and adds to the recipient.
            { account: source.sender, amount: -source.amount },
            { account: source.recipient, amount: source.amount },
        ];
    }
}
```

The import statement at the top is pulling in TypeScript classes that were generated by Flow, based on the JSON schemas that are associated with each collection. Whenever a collection’s schema is changed, Flow uses its thorough knowledge of the task graph to identify all the tasks, including derivations, that read from that collection. It re-generates the TypeScript classes and re-runs the TypeScript compiler to see if there are any issues.

The FromTransfersSource class name is taken from the name of the transform in the Flow derivation specification, and the class properties are generated from the JSON schema of the source collection. The Document class is generated from the JSON schema of the derivation.
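To give a feel for how a schema can be turned into a type, here is a toy generator that handles only flat objects with primitive property types. It is a sketch under those assumptions, not Flow's actual code generator; `generateInterface` and the schema types are invented for this example.

```typescript
type JsonType = "string" | "number" | "integer" | "boolean" | "null";

interface PropertySchema {
  type: JsonType | JsonType[];
}

interface ObjectSchema {
  type: "object";
  properties: Record<string, PropertySchema>;
  required?: string[];
}

// Map a JSON schema primitive type onto the TypeScript type that holds it.
function tsTypeFor(t: JsonType): string {
  switch (t) {
    case "integer":
    case "number":
      return "number";
    case "string":
      return "string";
    case "boolean":
      return "boolean";
    case "null":
      return "null";
  }
}

// Emit the source text of an interface for a flat object schema.
// Properties that are not listed as required become optional.
function generateInterface(name: string, schema: ObjectSchema): string {
  const required = new Set(schema.required ?? []);
  const lines = Object.keys(schema.properties).map((prop) => {
    const declared = schema.properties[prop].type;
    const types = Array.isArray(declared) ? declared : [declared];
    const union = types.map(tsTypeFor).join(" | ");
    const optional = required.has(prop) ? "" : "?";
    return `  ${prop}${optional}: ${union};`;
  });
  return `export interface ${name} {\n${lines.join("\n")}\n}`;
}

// The transfers schema after recipient is changed to allow null.
const transfersSchema: ObjectSchema = {
  type: "object",
  properties: {
    id: { type: "integer" },
    sender: { type: "string" },
    recipient: { type: ["string", "null"] },
    amount: { type: "number" },
  },
  required: ["id", "sender", "recipient", "amount"],
};

console.log(generateInterface("FromTransfersSource", transfersSchema));
```

The generated text declares recipient as `string | null`, which is what lets the compiler notice code that still assumes a plain string.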

What’s the end result?

Whenever any schema is changed, all connected derivations are type-checked using the newly generated TypeScript classes.

Even if the graph of derivation tasks is large and complex, Flow can type check your data flows from end to end. If a change to a schema causes type checking to fail for a derivation, you can choose to either update the transform code to fix the issue, or disable the derivation. If you choose to update the transform code, then you can make those changes as part of the same draft, so that the tasks are all updated and deployed at the same time. If you choose to disable the derivation, then you can always fix the code and re-enable it later, and it will pick back up right where it left off. Both of those sound like way better options than letting your pipeline output incorrect results!
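Here is a minimal sketch of the "update the transform code" option for the cash-withdrawal change described earlier. The two interfaces are hand-written stand-ins for the types Flow would generate, the function is shown outside its Derivation class to keep things short, and the error text assumes the compiler runs with strict null checks enabled.

```typescript
// Hypothetical stand-ins for the generated types, after the recipient
// schema has been changed to { type: ["string", "null"] }.
interface TransferSource {
  id: number;
  sender: string;
  recipient: string | null;
  amount: number;
}

interface BalanceDocument {
  account: string;
  amount: number;
}

// The original body no longer compiles against the new source type:
//   { account: source.recipient, amount: source.amount }
//   error TS2322: Type 'string | null' is not assignable to type 'string'.
// The updated version handles the null case explicitly.
function fromTransfersPublish(source: TransferSource): BalanceDocument[] {
  // Every transfer, including a withdrawal, removes from the sender.
  const docs: BalanceDocument[] = [
    { account: source.sender, amount: -source.amount },
  ];
  // A null recipient is a cash withdrawal, so there is no account to credit.
  if (source.recipient !== null) {
    docs.push({ account: source.recipient, amount: source.amount });
  }
  return docs;
}

console.log(fromTransfersPublish({ id: 3, sender: "alice", recipient: null, amount: 50 }));
// [ { account: "alice", amount: -50 } ]
```

The point is that the compiler forces a decision about the null case before anything is deployed, instead of leaving it to be discovered in production.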

Certainly TypeScript isn’t the only language that supports static type checking. Ultimately, our vision for Flow is that it should support writing derivations in any language. We also plan to support static type checking and generating class/struct definitions for languages where it makes sense. But today, our team’s priority is getting Flow off the ground in a way that’s the most user-friendly and guards against potential errors. That’s why we chose to start with a single language. TypeScript seemed like a natural fit for “typed JSON transformations.” It’s performant and relatively easy to sandbox (lookin’ at you, Deno). Combined with its support for type checking to prevent errors, it was the obvious choice.

So that’s where we started, and so far it’s been successful at enabling end-to-end fully type-checked data pipelines. We’re not sure which language Flow will support next for derivations, but we’d love to hear from you if you have opinions or suggestions.

2023 Note: Estuary Flow now supports native SQL transformations! While we don't get all the benefits of TypeScript, SQL offers an easy-to-use alternative.
