Software platforms today are expected to be agile and efficient, and because most of them are data-driven, there’s no room for downtime or delays in moving data. This is where automated data pipelines come into play.

Most businesses need automated data pipelines to reach and sustain a rapid pace. A stable, robust data pipeline architecture is crucial for software and applications to work as intended and for information to flow reliably to data users.

By the end of this post, you’ll have seen real-world examples of automated data pipelines and how you can use the technology to replace traditional, manual methods.

Let’s get right to it.

What Is A Data Pipeline?

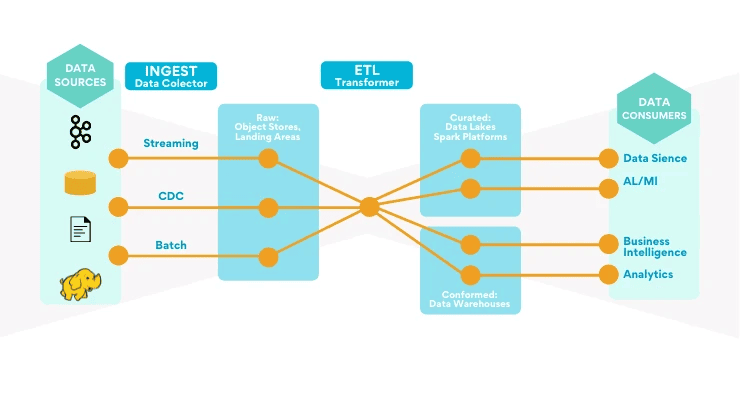

A data pipeline is a series of data processing steps in a system. Its primary purpose is to move data from an origin (source) to a destination. Data pipelines may include intermediary steps (as ETL pipelines do), but they all serve the same function.

Data transfer continues until the pipeline process is terminated. In some data integration and management platforms, independent steps may be run in parallel to each other.

Every modern data pipeline has three important parts:

- Source

- Processing steps

- Destination
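To make the three parts concrete, here is a minimal sketch in Python. The records, the two processing steps, and the list standing in for a warehouse are all hypothetical; a real pipeline would read from a system such as a CRM API and write to an actual destination.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List, Optional

Record = Dict[str, Any]
# A processing step takes a record and returns a transformed record, or None to drop it.
Step = Callable[[Record], Optional[Record]]


def source() -> Iterator[Record]:
    """Hypothetical source: stands in for a CRM export, IoT feed, or database table."""
    yield {"id": 1, "amount": "19.99", "country": "us"}
    yield {"id": 2, "amount": "", "country": "de"}  # missing amount
    yield {"id": 3, "amount": "5.00", "country": "fr"}


def parse_amount(rec: Record) -> Optional[Record]:
    # Drop records with no amount; otherwise convert the text amount to a float.
    if not rec["amount"]:
        return None
    return {**rec, "amount": float(rec["amount"])}


def normalize_country(rec: Record) -> Optional[Record]:
    # Standardize country codes to upper case.
    return {**rec, "country": rec["country"].upper()}


def run_pipeline(records: Iterable[Record], steps: List[Step], destination: List[Record]) -> List[Record]:
    """Push each record from the source through every step, then into the destination."""
    for rec in records:
        current: Optional[Record] = rec
        for step in steps:
            current = step(current)
            if current is None:  # a step filtered the record out
                break
        if current is not None:
            destination.append(current)
    return destination


warehouse = run_pipeline(source(), [parse_amount, normalize_country], [])
# warehouse now holds records 1 and 3, with float amounts and upper-case country codes
```

Each step is an ordinary function, so steps can be added, removed, or reordered without touching the source or the destination.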

The primary purpose of every data pipeline is easy to understand: it moves data between disparate systems, for example:

- From a SaaS product, such as a CRM, to a data warehouse.

- From a data lake into an operational system, like a payment processing system.

- From a transactional database to an analytical database.

Put simply, every time data moves between two systems, a data pipeline is needed between them. That is why most companies’ data infrastructure includes numerous pipelines.

ETL Vs Data Pipelines

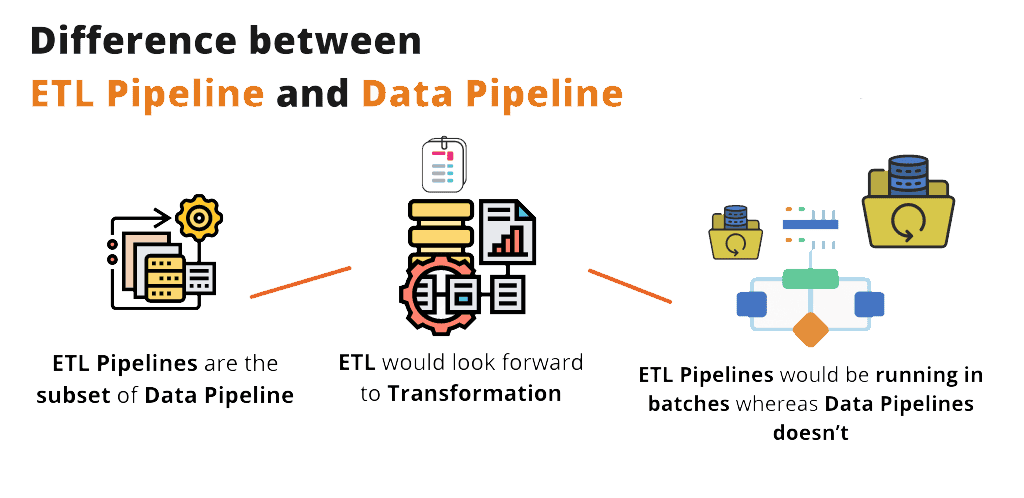

You may have heard the term “ETL” used interchangeably with “data pipeline.” ETL pipelines are a subset of data pipelines, so it’s important to make the distinction.

ETL breaks down the pipeline process into three steps. These are:

- Extract – Acquiring new data from the source systems.

- Transform – Moving data to temporary storage and then transforming it to the desired formats for additional processing.

- Load – Writing the reformatted data to its final storage destination.

ETL pipelines are fairly common but aren’t the only method for data integration. Not every pipeline requires a transformation stage, and alternatives such as ELT exist. In the ELT framework, you transform data after loading it into the destination.
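The difference between ETL and ELT comes down to where the transform step runs. The sketch below uses Python’s built-in `sqlite3` module as a stand-in destination; the table names and sample rows are hypothetical. The ETL function converts types in Python before loading, while the ELT function loads the raw text first and transforms it with SQL inside the destination.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical extracted rows: (order_id, amount), both still raw text from the source.
RAW_ROWS: List[Tuple[str, str]] = [("1", "20.50"), ("2", "5.25")]


def etl(conn: sqlite3.Connection) -> None:
    # Transform in the pipeline first (text -> typed values), then load the clean rows.
    cleaned = [(int(order_id), float(amount)) for order_id, amount in RAW_ROWS]
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", cleaned)


def elt(conn: sqlite3.Connection) -> None:
    # Load the raw text as-is, then transform inside the destination using SQL.
    conn.execute("CREATE TABLE raw_orders (id TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", RAW_ROWS)
    conn.execute(
        "CREATE TABLE orders AS "
        "SELECT CAST(id AS INTEGER) AS id, CAST(amount AS REAL) AS amount FROM raw_orders"
    )


etl_db = sqlite3.connect(":memory:")
elt_db = sqlite3.connect(":memory:")
etl(etl_db)
elt(elt_db)
QUERY = "SELECT id, amount FROM orders ORDER BY id"
# Both destinations end up with [(1, 20.5), (2, 5.25)]
```

Both approaches produce the same `orders` table. In the ELT version the untouched `raw_orders` table also stays in the destination, so the transformation can be changed and re-run later without extracting the data again.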

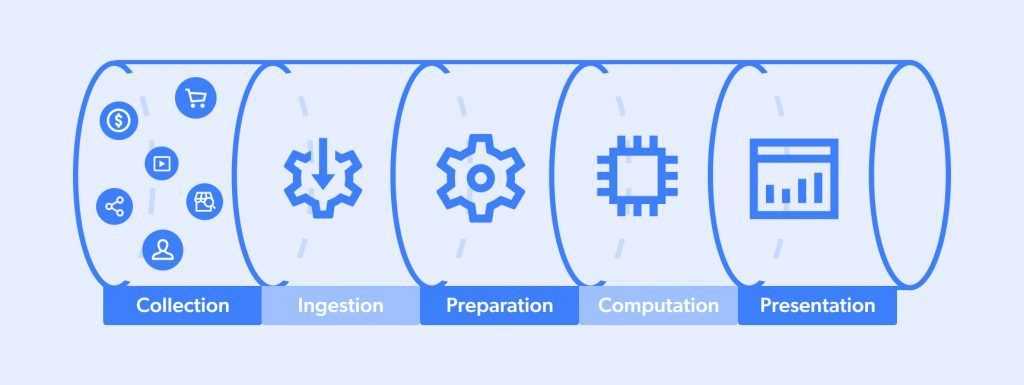

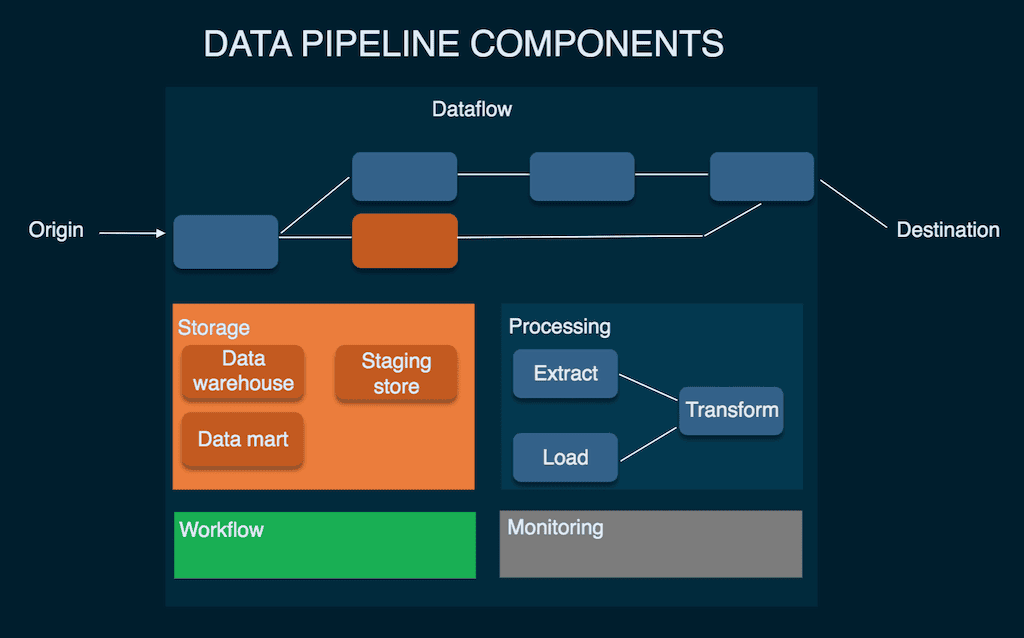

6 Components Of A Data Pipeline

When you automate a data pipeline, you’re essentially setting up a series of processes that run in order, each of which can be broken down further into components. Automation also covers background tasks such as monitoring.

While acronyms like “ETL” summarize what a pipeline does, there’s a lot more involved under the surface. Let’s walk through a more detailed conceptual model.

i. Origin

The origin is the point of data entry in the pipeline. These origins are also known as data sources and can be anything from transaction processing applications and IoT devices to social media, APIs, or public datasets.

Storage systems such as data warehouses or data lakes can also act as origins. These storage systems form the baseline of a company’s reporting and analytical data environment.

ii. Destination

The destination is the final point to which processed or transformed data is sent. It varies from pipeline to pipeline: it could be a data visualization or BI tool, or a storage system such as a data lake or data warehouse.

iii. Dataflow

Dataflow refers to the movement of data from the origin to its destination. The term also covers the changes and transformations the data undergoes along the way.

iv. Storage

Storage in a data pipeline refers to the systems where data is kept and preserved at different stages. Some data platforms come with their own storage; in other cases, users must choose their own storage systems based on factors such as the volume of data, how frequently it arrives, and the volume of queries the system must serve.

Cloud storage buckets or data lakes are a common type of storage system used to back data pipelines.

v. Processing

Data processing refers to the steps and activities required to ingest data from sources, transform it, and deliver it to a destination. While it is related to dataflow, data processing focuses specifically on the computational tasks that carry out the movement.

vi. Monitoring

Monitoring checks the status of the data pipeline and ensures all of its stages are working correctly. It also covers maintaining efficiency as the data load grows, verifying that data stays accurate through each processing stage, and confirming that no information is lost along the way.
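One simple monitoring check for lost information is row-count reconciliation: every row that enters a stage should either leave it or be explicitly rejected. The sketch below assumes each stage reports those three counts; the `StageReport` structure and the 5% rejection threshold are illustrative assumptions, not part of any specific tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StageReport:
    """Hypothetical per-stage counters emitted by a pipeline run."""
    name: str
    rows_in: int
    rows_out: int
    rows_rejected: int = 0


def check_stage(report: StageReport, max_reject_ratio: float = 0.05) -> List[str]:
    """Return alert messages for one stage; an empty list means the stage looks healthy."""
    alerts: List[str] = []
    # Every input row should be either passed on or explicitly rejected.
    unaccounted = report.rows_in - report.rows_out - report.rows_rejected
    if unaccounted > 0:
        alerts.append(f"{report.name}: {unaccounted} rows lost")
    elif unaccounted < 0:
        alerts.append(f"{report.name}: {-unaccounted} unexpected extra rows")
    # A spike in rejected rows can signal an upstream data-quality problem.
    if report.rows_in and report.rows_rejected / report.rows_in > max_reject_ratio:
        alerts.append(f"{report.name}: rejection rate above {max_reject_ratio:.0%}")
    return alerts
```

In an automated pipeline, a check like this would run after every stage, with any non-empty result routed to an alerting channel.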

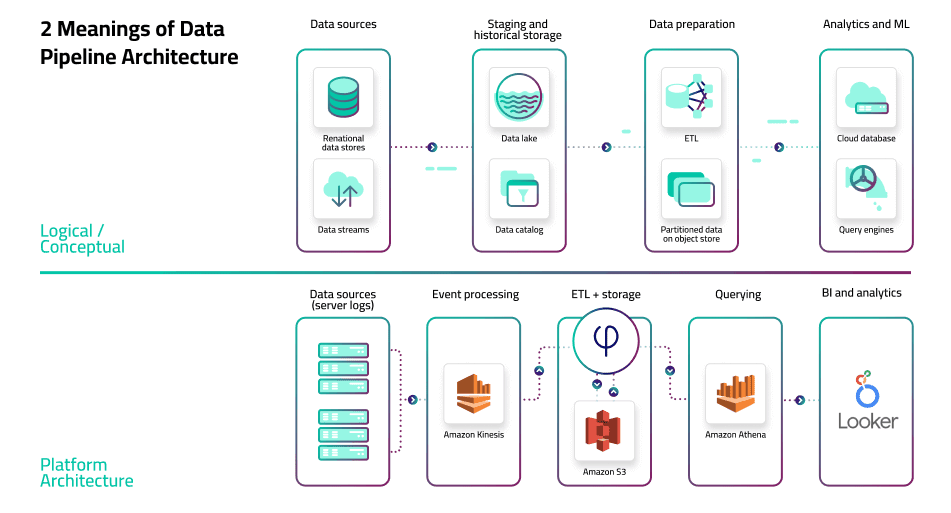

What Are The 3 Different Types Of Data Pipeline Architectures?

A data pipeline architecture determines the specific arrangement of the components of a pipeline to enable the extraction, processing, and delivery of information. Businesses today have various options to choose from. Some of these include:

1. Batch Data Pipeline

Traditional data analytics deals with historical data gathered over time to help decision-making processes. It is heavily dependent on business intelligence (BI) tools and batch data pipelines. Batch data pipelines refer to the collection, processing, and publishing of data in large blocks (batches), at one time or on regular schedules.

Once all the data is ready for access, software or users query the batch for data visualization and exploration. Pipeline execution can be short or long depending on the size of a batch: a run could take a few minutes, several hours, or even days to complete.

This form of processing is a staple among businesses with large datasets in non-time-sensitive projects. However, batch processing may not be the ideal choice for real-time insights. For those, it is better to opt for architectures enabling streaming analytics.
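As a rough illustration of batch processing, the hypothetical job below aggregates a full day of accumulated sales events in one run, the way a nightly scheduled pipeline might. The event shape and store IDs are made up for the example.

```python
from collections import defaultdict
from datetime import date, datetime
from typing import Dict, List, Tuple

# Hypothetical event shape: (timestamp, store_id, sale_amount)
Event = Tuple[datetime, str, float]


def run_daily_batch(events: List[Event], day: date) -> Dict[str, float]:
    """Process one whole day's batch at once and return total sales per store."""
    totals: Dict[str, float] = defaultdict(float)
    for ts, store_id, amount in events:
        if ts.date() == day:  # only events that belong to this batch window
            totals[store_id] += amount
    return dict(totals)


events: List[Event] = [
    (datetime(2024, 3, 1, 9, 15), "store-1", 10.0),
    (datetime(2024, 3, 1, 17, 40), "store-1", 2.5),
    (datetime(2024, 3, 1, 12, 0), "store-2", 4.0),
    (datetime(2024, 3, 2, 8, 0), "store-1", 99.0),  # belongs to the next day's batch
]
report = run_daily_batch(events, date(2024, 3, 1))
# {'store-1': 12.5, 'store-2': 4.0}
```

Nothing is available until the whole batch has been processed, which is exactly why batch pipelines suit non-time-sensitive work.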

2. Streaming Data Pipeline

Streaming data pipelines derive insights from real-time, continuous flows of data within seconds or milliseconds. They work in the opposite way to batch processing: data is sequenced and processed as it arrives, and reports, metrics, and summary statistics are updated progressively.

With real-time analytics, companies get up-to-date information about their operations and can react without delay. Streaming also enables smart monitoring of infrastructure performance. Companies that cannot afford delays in their data processing should opt for this method.
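The key difference from batch processing is that a streaming pipeline updates its results incrementally, doing a small, constant amount of work per event instead of waiting for a full batch. This sketch keeps running statistics over a stream of values; the choice of metrics (count, mean, maximum) is only an example.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple


@dataclass
class RunningStats:
    count: int = 0
    total: float = 0.0
    maximum: float = float("-inf")

    def update(self, value: float) -> None:
        # O(1) work per event: no need to revisit earlier events.
        self.count += 1
        self.total += value
        self.maximum = max(self.maximum, value)

    @property
    def mean(self) -> float:
        return self.total / self.count if self.count else 0.0


def process_stream(values: Iterable[float]) -> Iterator[Tuple[int, float, float]]:
    """Emit an updated (count, mean, max) snapshot after every incoming event."""
    stats = RunningStats()
    for value in values:
        stats.update(value)
        yield stats.count, stats.mean, stats.maximum
```

Because `process_stream` is a generator, it works equally well on a finite list or on an unbounded source such as a message-queue consumer.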

3. Change Data Capture Pipeline

Change Data Capture (CDC) pipelines are a form of streaming, real-time data pipeline for relational databases. What sets them apart is that they are specifically designed to detect change events (inserts, updates, and deletes) in relational databases.

These pipelines maintain consistency across multiple systems and are typically used to keep large datasets up to date. Rather than transferring the entire database, they transfer only the data that has changed since the last sync. This makes them important in cloud migration projects, where multiple systems must hold the same datasets.
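Production CDC tools typically capture changes by reading the database’s transaction log (for example, PostgreSQL’s write-ahead log or MySQL’s binlog). The sketch below covers only the other half: applying already-captured change events to a destination replica. The `ChangeEvent` fields, including the log sequence number `lsn` used to skip events from an earlier sync, are hypothetical names chosen for illustration.

```python
from typing import Dict, List, NamedTuple, Optional


class ChangeEvent(NamedTuple):
    lsn: int             # hypothetical position of the change in the source's log
    op: str              # "insert", "update", or "delete"
    key: int             # primary key of the changed row
    row: Optional[dict]  # new row values; None for deletes


class Replica:
    """Destination copy of one source table, kept in sync by applying change events."""

    def __init__(self) -> None:
        self.rows: Dict[int, dict] = {}
        self.last_lsn = 0  # highest log position applied so far

    def apply(self, events: List[ChangeEvent]) -> int:
        """Apply only changes newer than the last sync; return how many were applied."""
        applied = 0
        for ev in sorted(events, key=lambda e: e.lsn):
            if ev.lsn <= self.last_lsn:
                continue  # already applied during a previous sync
            if ev.op == "delete":
                self.rows.pop(ev.key, None)
            elif ev.op in ("insert", "update"):
                self.rows[ev.key] = dict(ev.row)
            else:
                raise ValueError(f"unknown op: {ev.op}")
            self.last_lsn = ev.lsn
            applied += 1
        return applied
```

Only the changed rows travel to the destination, and re-delivered events are ignored, so repeated syncs leave the replica consistent with the source.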

What Are Automated Data Pipelines?

Automation in data pipelines ensures that none of the processes have to be run and managed manually. Most platforms are automated out of the box but require some initial configuration.

Automated data pipelines work by automating responses to events. This is especially useful in real-time data pipelines, where automation is necessary to process every new data event as soon as it arrives.

Batch data pipelines, by contrast, are automated to run on a regular schedule: once configured, they process data at predefined points in time.
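The two automation styles described above can be sketched as two small functions: a schedule check for batch pipelines and an event dispatcher for real-time ones. The function names and the in-memory `deque` standing in for a message queue are assumptions for illustration; real platforms handle this with their own schedulers and queue consumers.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Callable, Deque


def batch_is_due(last_run: datetime, interval: timedelta, now: datetime) -> bool:
    """Schedule-driven automation: run the batch once the configured interval has elapsed."""
    return now - last_run >= interval


def dispatch_events(queue: Deque[dict], handler: Callable[[dict], None]) -> int:
    """Event-driven automation: hand each pending event to the pipeline as soon as it is seen."""
    processed = 0
    while queue:
        handler(queue.popleft())
        processed += 1
    return processed
```

For example, with an hourly interval, `batch_is_due` returns `False` thirty minutes after the last run and `True` once a full hour has passed, while `dispatch_events` drains whatever has arrived regardless of the clock.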

Automation eliminates the need for manual pipeline tweaks and makes cloud migration simpler. It also gives data-driven enterprises a more secure platform to build on.

3 Use Cases Of Automated Data Pipelines

There are three main reasons to implement automated data pipelines:

A. Better BI And Analytics

Most businesses lack data maturity and struggle to get the full value from their data. This can keep them from important insights that would improve business performance, efficiency, and profitability.

By providing non-technical users with no-code platforms and democratizing access to data throughout the whole organization, business intelligence solutions help businesses become more data-driven.

But even the most intuitive BI platform is useless without current data. Fortunately, most automated data pipeline tools offer an equally user-friendly way to manage and schedule data pipelines and connect them with cloud-native databases and business software. That keeps BI tools supplied with the up-to-date data businesses need to reach their goals.

By equipping employees with the tools necessary to extract value from data, businesses can build a whole data-driven culture from the ground up.

B. Real-Time And More Granular Analytics

As discussed earlier, automated data pipeline management means users don’t have to repeatedly hand-code and reformat data to run transformations. Automating these transformations, in turn, enables real-time analytics and more granular insights.

Automated data pipelines also bring a few other benefits to business intelligence:

- Improvements in efficiency, performance, and productivity

- Business decision-making becomes vastly more effective and timely

- Greater visibility into the customer experience and journey as a whole

C. Creating A Data-Driven Company Culture

Despite rapid advancements in digital media and technology, most businesses have yet to fully leverage their data. Collecting data is only part of the picture; what a business does with it afterward matters just as much.

In the past, IT personnel and data engineers were the primary data stakeholders, but today, stakeholders are spread across the whole company. When that full breadth of stakeholders can contribute to data infrastructure, a company is more likely to meet its goals, empower its employees, and have more meaningful conversations about its data. Approachable low-code tools make this possible.

Data collection lets businesses measure their assumptions and outcomes. But if the goal is to build a tech-first culture throughout the organization, data-driven practices cannot stay confined to highly technical teams.

Data Pipeline Tools You Can Leverage

Data pipeline tools provide the infrastructure behind most components of a data pipeline. Companies have a variety of options at their disposal, and the right choice depends on many factors, such as company size and how the data will be used.

I. Estuary

The Estuary Flow platform is a real-time data integration solution based on the DataOps philosophy. Estuary combines the ease of use of popular ELT tools with the scalability and efficiency of data streaming, along with user-friendly features.

Estuary Flow is built from the ground up to make automated real-time data pipelines less challenging for most engineers, helping companies save costs and scale seamlessly as their data grows.

With automation powering real-time transformations and support for both a CLI and a UI, the platform enables faster data pipeline creation than tools such as Kafka, Google Cloud Dataflow, and Kinesis.

II. Matillion

Matillion is another low-code option for your data pipeline management requirements. At its core, it is a cloud-ready data integration platform that helps orchestrate and transform data. It also allows for quick ingestion of data from any source and offers connectors and built-in pipelines that are easy to deploy.

Matillion’s Change Data Capture also helps streamline data pipeline management, and batch loading can be handled from a single control panel. The software also rolls data lakes, data preparation, and analytics into one platform, making it one of the more versatile options available today.

III. Qlik Integrate

Qlik Data Integration is a modern platform you can leverage for data pipeline management. The software delivers real-time, analytics-ready data to any environment, including tools such as Tableau and Power BI.

Using CDC, the platform streams data in real time, extending enterprise data into live streams. It can also design, build, deploy, and manage purpose-built cloud data warehouses without manual coding.

Data Pipeline Examples

The biggest brands in the world have already adopted automated data pipelines in their corporate processes. Here are two examples:

1. Sales Forecasting At Walmart

Walmart is a massive U.S. retailer, and every time a customer makes a purchase, retail data is generated. In its sales forecasting project, the retail dataset from the previous year is used to forecast sales revenue for the next year.

One major challenge in this process is that the raw data must be cleaned and preprocessed before it can be used. Walmart addressed this by building a data pipeline that performs data cleaning and preprocessing steps, such as checking for missing values, normalizing unbalanced data, and removing outliers, to ready the dataset for model building.
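The three preprocessing steps named above could look roughly like this on a single column of sales figures. The source doesn’t specify Walmart’s exact methods, so the choices here are assumptions: missing values are dropped rather than imputed, outliers are detected with a z-score threshold, and “normalizing” is interpreted as min-max scaling.

```python
import statistics
from typing import List, Optional


def preprocess(sales: List[Optional[float]], z_threshold: float = 3.0) -> List[float]:
    """Clean one column of sales values: drop missing, drop outliers, min-max scale."""
    # 1. Missing values: drop None entries (imputation is a common alternative).
    present = [s for s in sales if s is not None]
    if not present:
        return []
    # 2. Outliers: drop points more than z_threshold population std devs from the mean.
    mean = statistics.mean(present)
    std = statistics.pstdev(present)
    kept = [s for s in present if std == 0 or abs(s - mean) / std <= z_threshold]
    if not kept:
        return []
    # 3. Normalization (assumed to mean min-max scaling): map values into [0, 1].
    lo, hi = min(kept), max(kept)
    if hi == lo:
        return [0.0 for _ in kept]
    return [(s - lo) / (hi - lo) for s in kept]
```

For example, `preprocess([10, 12, 11, 13, 12, 100, None], z_threshold=2.0)` drops the missing value and the spike at 100, then scales the remaining five values between 0 and 1. On small samples a z-score rule can miss outliers, so the threshold is a parameter rather than a fixed constant.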

Since the end goal is forecasting, time series models such as ARIMA and SARIMAX can help predict sales. Prophet, an open-source time series forecasting library from Facebook, is another option.

Once the forecasting model is trained, you can evaluate the accuracy of its predictions. The model can then predict future sales values when a new Walmart sales dataset with different time series data is introduced.
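The source doesn’t say which accuracy metric was used, so the sketch below assumes two common choices, mean absolute error (MAE) and mean absolute percentage error (MAPE), plus a chronological train/test split. The forecasts themselves would come from a model such as ARIMA, SARIMAX, or Prophet; here they are just plain lists.

```python
from typing import List, Sequence, Tuple


def chronological_split(series: Sequence[float], horizon: int) -> Tuple[List[float], List[float]]:
    """Hold out the last `horizon` points; time series must not be shuffled before splitting."""
    if not 0 < horizon < len(series):
        raise ValueError("horizon must be between 1 and len(series) - 1")
    return list(series[:-horizon]), list(series[-horizon:])


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error, in the same units as the data (e.g. dollars of sales)."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent; undefined if any actual value is 0."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)
```

The model is fit on the first part of the split and scored on the held-out tail, which mimics predicting a period it has never seen.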

2. Uber’s Real-Time Feature Computation

Uber is one of the most popular mobility and taxi service providers in the world. The platform uses machine learning to enable dynamic pricing: the model adjusts rates based on real-time data and then calculates the estimated time of arrival and fare for the customer.

Since Uber requires insights in real-time, it needs pipelines that can ingest current data from driver and passenger apps and churn out actionable and accurate results.

The company uses Apache Flink, a stream processing framework, to let its machine learning models generate predictions in real time. Uber also uses batch processing to identify medium-term and long-term trends.
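Real-time features of this kind are often computed over sliding time windows. The sketch below counts events per key over a trailing window in plain Python; it is not the Apache Flink API, and the feature itself (ride requests per zone over the last 60 seconds) is a hypothetical example. Unlike Flink, it assumes events arrive in timestamp order and keeps all state in memory.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SlidingWindowCounter:
    """Count events per key over the trailing `window_seconds` (in-order input assumed)."""

    def __init__(self, window_seconds: float) -> None:
        self.window = window_seconds
        self.events: Dict[str, Deque[float]] = defaultdict(deque)

    def _evict(self, q: Deque[float], now: float) -> None:
        # Drop timestamps that are no longer inside the window (now - window, now].
        while q and q[0] <= now - self.window:
            q.popleft()

    def add(self, key: str, ts: float) -> int:
        """Record one event (e.g. a ride request in a zone) and return the updated count."""
        q = self.events[key]
        q.append(ts)
        self._evict(q, ts)
        return len(q)

    def count(self, key: str, now: float) -> int:
        """Current feature value for a key, e.g. requests in a zone over the last window."""
        q = self.events[key]
        self._evict(q, now)
        return len(q)
```

A pricing model could read `count(zone, now)` at prediction time, while a separate batch job computes the longer-term trends mentioned above.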

Conclusion

Automated data pipelines are the future of data-driven technology, as manual pipelines become obsolete. Data pipeline automation has enabled many companies to expand their processing capabilities and deliver real-time solutions to their customers and teams.

We hope this guide has helped you understand the importance of automation in the data integration space and the many ways it can boost your business processes. With examples from real companies to boot, the technology should feel all the more approachable moving forward.

Want to try Estuary Flow? Register for the web app – it’s free.

About the author

With over 15 years in data engineering, the author is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing offers insights to help companies scale efficiently and effectively in an evolving data landscape.
