Understanding the modern data stack, and why open-source matters

What is the modern data stack? This guide covers its evolution, cloud-based architecture, essential tools, and the role of open-source options in enabling faster, scalable data insights.

Every organization’s data goes through a complex journey each day. It’s collected, moved, transformed, and analyzed to support business decisions. Along the way, data must be safeguarded against errors and checked for quality.

To manage this journey, there’s an ecosystem of platforms and tools designed for each step. The “modern data stack” is one popular framework that conceptualizes how these data tools work together.

But there’s more to it than that.

In this post, we’ll explore what a modern data stack is, what makes it “modern,” and how it will continue to evolve.

We’ll also cover an important decision you’ll need to make as you design your stack: whether to use open-source components.

What is the Modern Data Stack?

Why has the modern data stack become the go-to framework for data-driven organizations? To understand it, let’s take a quick tour through the evolution of data management technology.

From Tech Stack to Data Stack

We begin with the overarching concept: the technology stack (or tech stack). A tech stack is a combination of various technology systems used to build a product or service. Typically applied to software development, tech stacks can also be tailored to specific functional areas.

Next, we have the data stack, a type of tech stack explicitly designed for managing data—storing it, accessing it, and preparing it for use in analytics and business intelligence.

Defining the Modern Data Stack

So, what exactly makes a data stack “modern”? While definitions may vary, a common characteristic is the cloud-based architecture of its components. The modern data stack primarily relies on cloud data warehouses and SaaS (Software as a Service) models, which allow for flexibility, scalability, and real-time access.

Earlier data stacks faced significant limitations in terms of speed and scalability. Analytics were often slow because they depended on data storage systems not optimized for querying large volumes of data. Data pipelines that connected various systems were ad hoc, prone to errors, and introduced latency. And most critically, everything was on-premises, requiring physical servers and local storage that restricted flexibility.

Cloud-Based Architecture: The Key to Modernization

The concept of the modern data stack emerged in the mid-2010s as cloud technology became the industry standard. This shift wasn’t instant. Cloud platforms like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure first made general-purpose cloud storage widely accessible. Later, specialized analytics solutions like Vertica offered self-hosting options either on-premises or in the cloud.

The next major leap was fully cloud-native platforms, beginning with Amazon Redshift, the first cloud-native OLAP (Online Analytical Processing) database. A 2020 article from dbt's blog credits Redshift's launch as a turning point that allowed other tools to thrive and defined the “modern data stack” as we know it today. The result? An ecosystem of cloud-native or SaaS-based data solutions that work seamlessly together.

Combining all of this, we can finally define the modern data stack.

In summary, a modern data stack is a cloud-based suite of tools and systems that organizations use to store, access, and manage data efficiently. This infrastructure allows for rapid, scalable data operations and supports real-time insights, making it ideal for analytics and business intelligence.

The modern data stack is still an evolving space: new tools are actively in development, and other desirable characteristics, like enhanced interoperability and data democratization, continue to emerge. We’ll discuss these innovations in more detail shortly.

Key Components of a Modern Data Stack

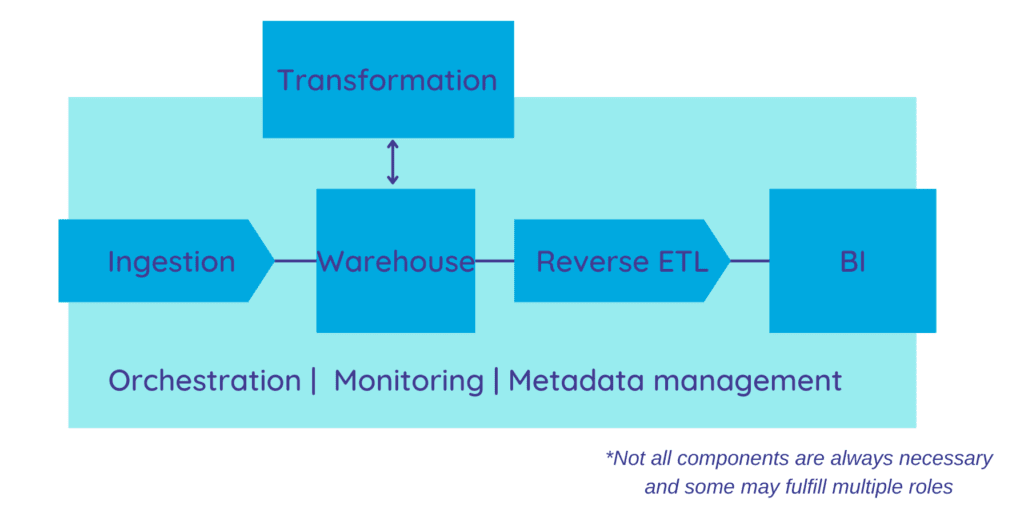

Modern data stack tools cover a variety of functions, but there isn’t a set checklist of roles each piece must fulfill. The systems a given organization uses will depend on its goals for its data and business.

What’s more, data technology is a dynamic and evolving space. Sometimes, a single system can fulfill multiple roles. For example, an organization that uses a real-time data integration platform to ingest data may not need a data orchestration system.

For this reason, we’ll break down the essential categories of modern data stack tools: things for which you’ll likely have a dedicated system. Then, we’ll cover other important components that are more likely to be covered by other systems, or can be omitted in certain cases.

The essentials:

- Data warehouse: Your cloud-based, OLAP storage designed to power analytics.

  - Popular options: Redshift, BigQuery, Snowflake

  - Popular option with similar characteristics: Databricks

- Ingestion: Connects data sources to the data warehouse and other components of the stack. You can think of this as the “EL” in “ELT.”

  - Best options: Estuary Flow, Fivetran

  - Other options: Airbyte and Meltano

- Transformation: Applies queries, joins, and similar modifications to raw data. This creates a user-friendly version of your data that’s ready to be operationalized, or leveraged. Sometimes, this functionality is included in your ingestion system, but it helps to have another environment for exploratory analysis.

  - Popular option: dbt

- Business intelligence or data visualization: Where data is analyzed to produce business-applicable insights in the form of metrics, visuals, and reports.

  - Popular options: Looker, Mode, Tableau, Preset, Superset, Thoughtspot
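To make the split between ingestion (the “EL”) and transformation (the “T”) more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: an in-memory SQLite database stands in for a cloud data warehouse, and the source records, table names, and function names are hypothetical. A real stack would use a dedicated ingestion tool and a warehouse like those listed above.

```python
import sqlite3

# Hypothetical source records, standing in for rows pulled from a SaaS API
# or an application database.
SOURCE_ORDERS = [
    (1, "alice", 120.0),
    (2, "bob", 80.0),
    (3, "alice", 40.0),
]
SOURCE_CUSTOMERS = [("alice", "EU"), ("bob", "US")]


def extract_and_load(conn):
    """The "EL" step: copy raw source rows into the warehouse unchanged."""
    conn.execute("CREATE TABLE raw_orders (id INTEGER, customer TEXT, amount REAL)")
    conn.execute("CREATE TABLE raw_customers (name TEXT, region TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", SOURCE_ORDERS)
    conn.executemany("INSERT INTO raw_customers VALUES (?, ?)", SOURCE_CUSTOMERS)


def transform(conn):
    """The "T" step: join and aggregate raw tables into an analytics-ready table."""
    conn.execute(
        """
        CREATE TABLE revenue_by_region AS
        SELECT c.region AS region, SUM(o.amount) AS revenue
        FROM raw_orders AS o
        JOIN raw_customers AS c ON c.name = o.customer
        GROUP BY c.region
        """
    )


def run_elt():
    """Run load, then transform, and return the table a BI tool would query."""
    conn = sqlite3.connect(":memory:")  # assumption: SQLite as a stand-in warehouse
    extract_and_load(conn)
    transform(conn)
    rows = conn.execute(
        "SELECT region, revenue FROM revenue_by_region ORDER BY region"
    ).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    print(run_elt())
```

The raw tables are loaded first and left untouched, and the transformation runs inside the warehouse afterward. That ordering is what distinguishes ELT from older ETL approaches, and it is why the transformation layer can be a separate tool from the ingestion layer.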

Additional components include:

- Operationalization (Reverse ETL): Moves transformed data into BI or other SaaS tools, where it is put to work. The tool used for ingestion may already cover this.

  - Popular options: Census, Hightouch, Rudderstack

- Observability and monitoring: Tracks logs and metrics to give your team insights into data health, system behavior, and, sometimes, the path data takes through your stack.

  - Popular options: Monte Carlo, Observe.ai, Splunk, Datadog, Datakin

- Orchestration: Executes jobs; manages the data lifecycle and movement throughout different components of the stack.

  - Popular options: Airflow, Prefect, Dagster, Astronomer

- Metadata management: Maintains a central repository of metadata across the stack, which other components can pull from.

  - Popular options: OpenMetadata, Informatica, MANTA

Note that the above is not meant to be an exhaustive list of valuable modern data stack companies and tools! It’s simply a list of some names you might recognize.
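As a rough illustration of what the orchestration layer does, the sketch below models a pipeline as a set of jobs with dependencies and runs each job only after the jobs it depends on. The job names and the `run_pipeline` helper are hypothetical, and this uses Python's standard-library `graphlib` rather than any of the orchestrators named above, which add scheduling, retries, and monitoring on top of the same basic idea.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each job maps to the set of jobs it depends on.
PIPELINE = {
    "ingest": set(),
    "transform": {"ingest"},
    "dashboard_refresh": {"transform"},
    "reverse_etl": {"transform"},
}


def run_pipeline(pipeline, run_job):
    """Run every job after its dependencies and return the execution order."""
    order = []
    # static_order() yields jobs so that dependencies always come first.
    for job in TopologicalSorter(pipeline).static_order():
        run_job(job)
        order.append(job)
    return order


if __name__ == "__main__":
    run_pipeline(PIPELINE, lambda job: print(f"running {job}"))
```

Here, ingestion always runs first and transformation second; the dashboard refresh and reverse ETL jobs both wait on transformation but do not depend on each other.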

Below is a visual of how a stack’s components might interact. Again, this varies widely, so it’s just an example.

Future Trends Shaping the Modern Data Stack

We’re entering a new wave of innovation for the data stack. Platforms and solutions are proliferating at a dizzying pace.

We’ve more or less solved the problem of big data storage, and SaaS is a common business model. Now, new platforms can refine the basics and further specialize.

Looking into all these new platforms can get overwhelming. As you research, focus on the themes of change in the industry — specifically, how they affect your organization and how a given tool can help you incorporate positive change.

Here are a couple of these important industry trends:

- Real-time data integration: Most of the current data ingestion, reverse ETL, and orchestration tools rely on a batch data paradigm. That is, they run at an interval and introduce some amount of time lag. However, data delays are becoming less acceptable with every passing year, and at the same time, the tools for painless real-time data integration are finally within reach. As time goes on, we’ll see real-time become the standard.

- Data democratization: Working with data systems has always required a high degree of technical expertise. As a result, data traditionally lived in a silo, where only a small group of specialists were able to meaningfully manage it. As data becomes more important across organizations, it should be usable and manageable by different types of professionals and different user groups. That’s what data democratization is about: data as a shared resource, accessible to all. Getting to this point is the industry’s next frontier.
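The time lag that batch tools introduce can be estimated with some simple arithmetic. The sketch below is a simplified model, not a benchmark: it assumes batch runs fire at fixed multiples of an interval and complete instantly, and the event timestamps and function names are hypothetical.

```python
import math


def batch_delivery_lag(event_time, interval):
    """Seconds an event waits until the next scheduled batch run picks it up.

    Assumes runs fire at t = 0, interval, 2 * interval, ... and take zero time.
    """
    next_run = math.ceil(event_time / interval) * interval
    return next_run - event_time


def average_lag(event_times, interval):
    """Mean delivery lag, in seconds, across a list of event timestamps."""
    lags = [batch_delivery_lag(t, interval) for t in event_times]
    return sum(lags) / len(lags)


if __name__ == "__main__":
    events = [10, 900, 1800, 3590]  # hypothetical event times, in seconds
    print("hourly batch:", average_lag(events, 3600))
    print("one-minute batch:", average_lag(events, 60))
```

Under these assumptions, shrinking the interval shrinks the lag, but only a system that processes each event as it arrives removes the interval from the equation entirely.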

To read more about these trends, see our posts on Estuary’s real-time vision and data mesh.

Open-Source vs. Closed-Source in the Modern Data Stack

Building a modern data stack often involves choosing between open-source and closed-source tools. Here’s a quick look at the pros and cons of each:

Advantages of open-source:

- It’s free! You won’t be paying a license fee to use it.

- More contributors add a range of features and cover more use-cases.

- Enhancements won’t get bottlenecked by a single engineering team.

- The tool isn’t dependent on the welfare or survival of a specific company.

Advantages of closed-source:

- You get what you pay for: closed-source products can be more user-friendly. Compared with open-source tools, you’re less likely to incur a hidden cost of engineering time on your side of the equation. And you probably won’t have to pay for self-hosting, either.

- Quality guarantees: closed-source products are sometimes seen as more stable and trustworthy.

Fortunately, there’s a way to get the best of both worlds, and that’s what we’re seeing in many of the new data systems today.

Many of these tools are built and overseen by a company, but are at least partially open-source. This can mean certain components are open-source, but other features are paid. Often, the code is open-source for anyone who’s able to host the platform on their own infrastructure, but paid, hosted options are also available.

Some companies also use a Business Source License (BSL). This means that the code is open to external contributors, but can only be used in non-production environments. That is, you’re free to change things yourself, but if you want to use the code for your own business, you still have to pay.

For instance:

- dbt offers an open-source, self-hosted version of their product, as well as paid tiers.

- Estuary Flow’s runtime is licensed under BSL. Its plug-in connectors are completely open-source and compatible with Airbyte, a platform known for its many open-source connectors.

Let’s take another look at all the popular products listed above. The bolded products are either completely open-source or have some open-source component.

- Data warehouse: Redshift, BigQuery, Snowflake, Databricks

- Ingestion: Fivetran, Airbyte, Meltano, Estuary Flow

- Transformation: dbt

- Business intelligence or data visualization: Mode, Tableau, Preset, Superset, Thoughtspot

- Operationalization, or “reverse ETL”: Census, Hightouch, Rudderstack

- Observability and monitoring: Monte Carlo, Observe.ai, Splunk, Datadog, Datakin

- Orchestration: Airflow, Prefect, Dagster, Astronomer

- Metadata management: OpenMetadata, Informatica, MANTA

This means you can more or less build a high-quality, completely open-source modern data stack, with one notable exception: none of the popular data warehouses are open-source (except for Databricks’ Delta Lake, which isn’t actually a warehouse). For an example of how you might build a completely open-source stack, see this article in Towards Data Science.

It’s unlikely you have that specific goal, though. The important thing here is that the varied approaches to open-sourcing allow flexibility and cost savings across your stack.

You can save money where it makes sense for your team by leveraging open-source offerings in that area. For other functionalities, paid options will save you valuable time, as well as the trouble of self-hosting. And a community of open-source contributors working on the codebases that underlie many products makes those products more feature-rich and valuable.

Flexibility and Scalability for Your Data Needs

The “modern data stack” isn’t something that’s easy to define. It’s an umbrella term with a few key characteristics:

- Allows you to store, access, and manage data.

- Made up of mix-and-match components, so it’s flexible.

- Has a cloud-based architecture, so it’s scalable.

A modern data stack can be open-source, paid, or a combination. Though technical, it’s a business tool, so design your stack with organizational goals in mind.

If a real-time data foundation is one of those goals, but it’s proved too challenging in the past, Estuary Flow was designed for you.

Flow is a DataOps platform that provides end-to-end data integration. It covers many of the functionalities we’ve mentioned: ingestion, transformation, and reverse ETL.

To learn more about building a flexible, modern data stack, and to explore how Estuary Flow can support your data needs, visit our website, docs, or GitHub.

To try Estuary Flow for your open-source modern data stack, sign up for the free tier here.
