Streaming data processing has rapidly emerged as a critical aspect of the big data and analytics landscape. Its ability to handle and analyze data in real time is invaluable for a wide variety of industries, from finance to healthcare and logistics.

However, implementing streaming data processing in an organization is not a straightforward task. It requires a clear understanding of what it entails, its key characteristics, and the differences between stream and batch processing.

In this guide, we’ll shed light on these elements and explore the core components of stream processing architectures, alongside the leading frameworks and platforms. We’ll also take you through the practical steps for implementing data stream processing using Estuary Flow and Apache Spark and examine real-world case studies of stream data processing.

By the end of this guide, you will have a solid grasp of streaming data processing and a roadmap for its implementation.

What Is Streaming Data Processing?

Stream processing is a technique for handling continuous data streams as the data arrives. Instead of storing data first and analyzing it later, it analyzes each event in real time or near real time and delivers insights immediately.

4 Key Characteristics Of Stream Processing

Here are some important features of stream processing:

- Concurrent Operations: Stream processing can perform multiple operations simultaneously. It can filter, aggregate, enrich, and transform data all at once.

- Continuous Data Ingestion: Stream processing is designed to handle a constant flow of data from various sources. By continuously ingesting this data, it ensures that no valuable insights are missed.

- Scalability: Stream processing is inherently scalable. As your business expands and the volume of data increases, stream processing efficiently handles larger data streams without compromising on speed or efficiency.

- Real-Time Analysis: The stream processing paradigm allows for immediate data analysis as the data arrives. This provides real-time insights which can be crucial for time-sensitive decision-making in various industries.
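As a rough illustration of these characteristics, the following plain-Python sketch filters, enriches, and aggregates events one at a time as they are read. The `process_stream` function, the event fields, and the threshold of 100 are hypothetical and chosen only for this example; a real deployment would read from a live source rather than a list.

```python
from typing import Dict, Iterable, Iterator


def process_stream(events: Iterable[Dict]) -> Iterator[Dict]:
    """Filter, enrich, and aggregate events one at a time as they arrive."""
    running_total = 0.0
    for event in events:
        # Filter: drop events with a non-positive amount.
        if event["amount"] <= 0:
            continue
        # Enrich: flag large amounts (the threshold is an assumption).
        enriched = {**event, "large": event["amount"] >= 100}
        # Aggregate: update the running total incrementally.
        running_total += event["amount"]
        yield {**enriched, "running_total": running_total}


# Hypothetical sample events standing in for a continuous source.
sample = [
    {"id": 1, "amount": 40.0},
    {"id": 2, "amount": -5.0},
    {"id": 3, "amount": 120.0},
]
results = list(process_stream(sample))
```

Because the function is a generator, each result becomes available as soon as its input event has been read, without waiting for the rest of the input.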

Now that you have a basic understanding of streaming data processing, let's look at how stream and batch processing differ. Understanding the complexity and resource requirements of each will help you design an efficient and scalable data processing system.

Stream Vs. Batch Processing: Understanding The Differences

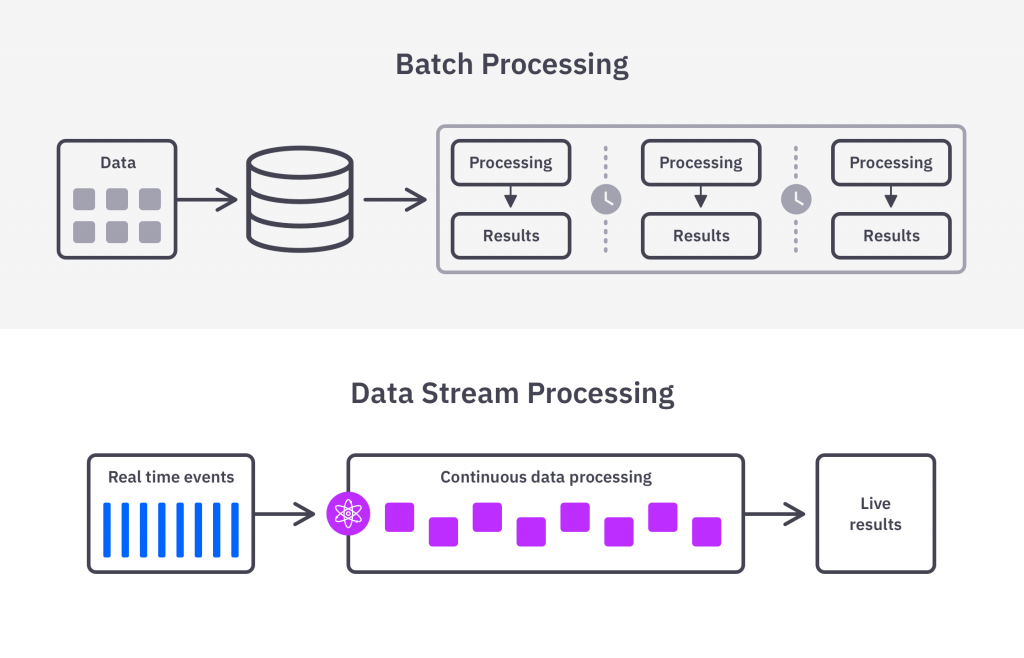

Batch processing is the more traditional approach to data processing. In it, data is gathered over a period and processed all at once, typically at set intervals. This method shines in situations where you’re dealing with hefty volumes of data that don't require immediate attention.

Let’s break down the major differences between these two approaches.

Accumulation & Processing

Batch processing collects data over a period of time and processes it in large, scheduled batches, often during periods of low demand or at pre-set intervals. In contrast, stream processing handles data continuously, in real time, as it arrives.
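The difference can be sketched in a few lines of plain Python. In this minimal example, `batch_average` and `streaming_average` are hypothetical helpers: the first needs the complete dataset before it can answer, while the second emits an updated answer after every reading.

```python
from typing import Iterable, Iterator, List


def batch_average(readings: List[float]) -> float:
    """Batch style: wait for the full dataset, then compute once."""
    return sum(readings) / len(readings)


def streaming_average(readings: Iterable[float]) -> Iterator[float]:
    """Stream style: emit an updated average after every reading."""
    count, total = 0, 0.0
    for value in readings:
        count += 1
        total += value
        yield total / count


readings = [10.0, 20.0, 30.0]
batch_result = batch_average(readings)             # one answer at the end
stream_results = list(streaming_average(readings))  # one answer per reading
```

Both approaches end at the same value, but the streaming version has a usable intermediate result after each reading.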

Latency

Batch processing typically has higher latency because data waits to accumulate before it is processed. Stream processing, on the other hand, has low latency because each event is handled shortly after it arrives.

Data Volume & Resource Management

Batch processing handles large, bounded volumes of data well. However, it can be resource-intensive in terms of both storage and computational power, since data must be stored until it is processed and then processed all at once. Stream processing, conversely, is optimized for high-volume continuous data flows and uses resources efficiently by processing data incrementally.

Use Cases

Batch processing is a go-to for tasks where urgency isn’t a priority but processing large volumes of data is. This includes scenarios such as generating quarterly sales reports or calculating monthly payroll. In contrast, stream processing is best suited to situations that require real-time data analysis, such as fraud detection.

Processing Environment

While batch processing is better suited for offline data processing, stream processing is designed for real-time scenarios. Batch processing is scheduled and can be adjusted to run during low-demand periods, while stream processing is continuous, responding to data events as they occur.

To make stream processing more effective, it's important to learn about the main elements of stream processing architectures and how they work together. Let's take a look.

4 Essential Components Of Stream Processing Architectures

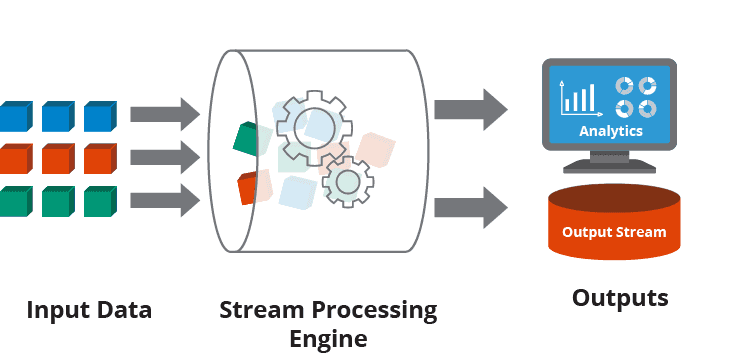

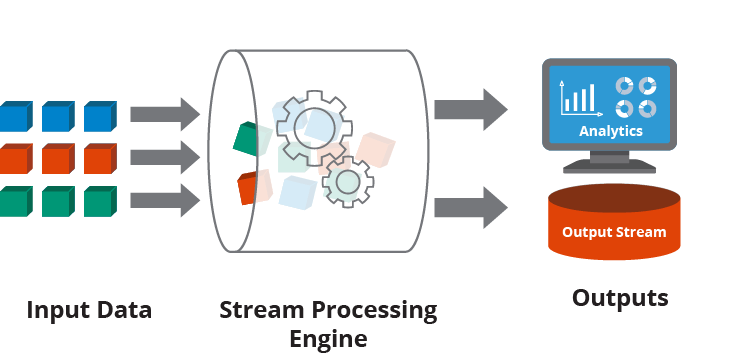

Stream processing architectures are composed of 4 key components that work together to manage and process the incoming event data streams. Here’s a breakdown of each component:

Message Broker Or Stream Processor

Stream processors are your data’s first point of contact in the streaming architecture. Their job is to gather data from various sources, convert it into a standard message format, and then dispatch the data continuously. Other components within the system can then listen in and consume these messages.

Real-Time ETL Tools

The next stop for your data is the ETL (Extract, Transform, Load) stage. Real-time ETL tools take the raw, unstructured data from the message broker, aggregate and transform it, and structure it into a format that’s ready for analysis.

Data Analytics Engine Or Serverless Query Engine

After your data is transformed and structured, it’s time to analyze it. The data analytics engine processes structured data, identifying patterns, trends, and valuable insights. These findings can then be used to help make business decisions and strategies.

Streaming Data Storage

Lastly, we have storage. This is where your processed stream data resides, housed in a secure and accessible environment. Depending on your organization’s needs, you can opt for various types of storage systems such as cloud-based data lakes or other scalable storage solutions.
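To show how the four components fit together, here is a toy, in-process sketch. It makes several simplifying assumptions: a `queue.Queue` stands in for the message broker, a `Counter` for the analytics engine, and a Python list for storage. The function names and message fields are hypothetical.

```python
import json
import queue
from collections import Counter

broker = queue.Queue()  # stands in for the message broker
storage = []            # stands in for streaming data storage


def publish(raw: str) -> None:
    """Source side: hand a raw message to the broker."""
    broker.put(raw)


def transform(raw: str) -> dict:
    """Real-time ETL step: parse the raw message and normalize its fields."""
    record = json.loads(raw)
    return {"user": record["user"].strip().lower(), "action": record["action"]}


def run_pipeline() -> Counter:
    """Drain the broker, transform each message, analyze it, and store it."""
    action_counts = Counter()  # stands in for the analytics engine
    while not broker.empty():
        record = transform(broker.get())
        action_counts[record["action"]] += 1
        storage.append(record)
    return action_counts


publish('{"user": " Alice ", "action": "click"}')
publish('{"user": "BOB", "action": "view"}')
publish('{"user": "alice", "action": "click"}')
counts = run_pipeline()
```

In a production architecture each stage would be a separate, independently scalable system, but the flow of data from broker to ETL to analytics to storage follows the same order.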

Now that we have a grasp of the 4 essential components of stream processing architectures, it's time to put that knowledge into practice. Let’s look at how to implement data stream processing in greater detail.

Implementing Data Stream Processing To Unlock The Power of Real-Time Insights

Let’s look at how you can implement streaming data processing in real-world scenarios. We will focus on 2 methods: using Estuary Flow and Apache Spark.

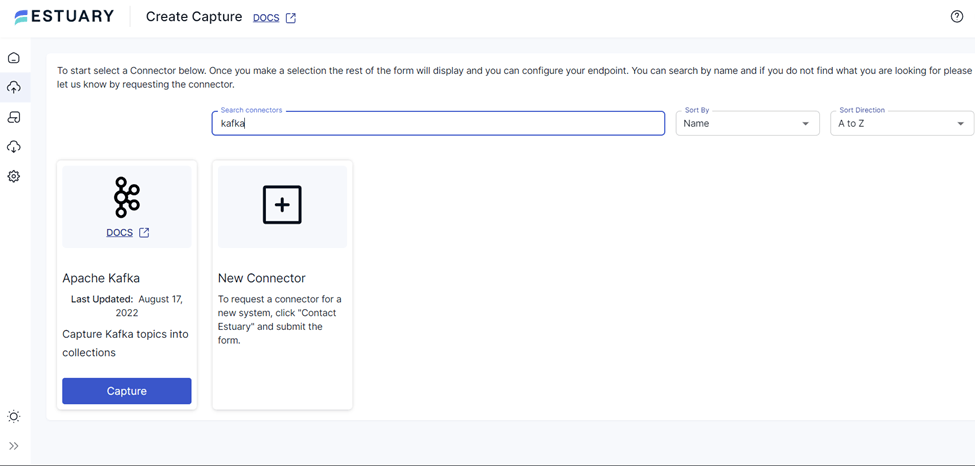

Using Estuary Flow

With Flow’s robust real-time capabilities, you can extract, transform, and load data streams into various destinations. Let’s see an example of taking a data stream from Apache Kafka, transforming it, and sending it to Snowflake, all using Estuary Flow.

Creating A New Capture

- On the Flow web app, navigate to the ‘Captures’ tab and click on ‘New capture’.

- From the list of available connectors, select the Kafka Connector.

- Fill in the required properties, including your Kafka broker details and any specific topic configurations.

- Name your capture.

- Click ‘Next’.

- In the Collection Selector, select the collections you want to capture from Kafka.

- Once you're satisfied with the configuration, click ‘Save and publish’.

Transforming Captured Data

If you need to transform your data before sending it to its destination, you can use Flow’s derivations feature. These allow you to apply transformations to your collections on the fly, filter documents, aggregate data, or even apply business logic.

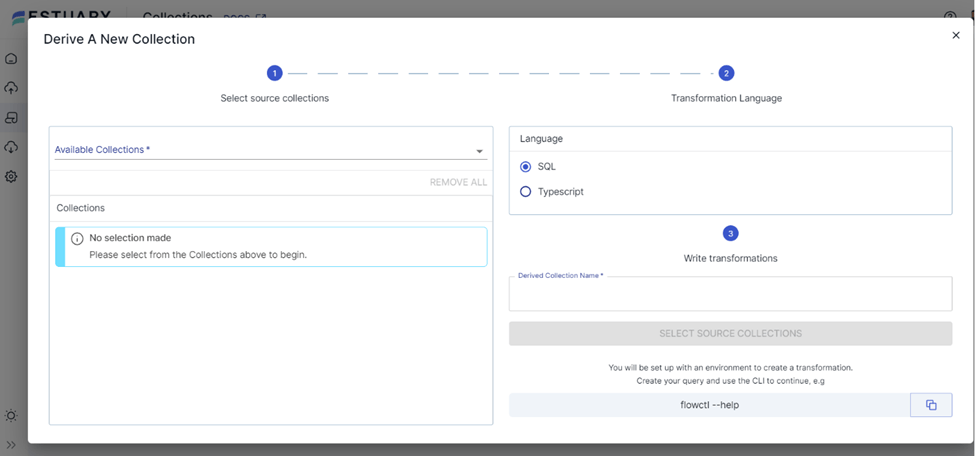

Here’s how you can create a derivation:

- Navigate to the ‘Collections’ tab and click on ‘New transformation’.

- Select the Collection you wish to transform.

- Name your new derived collection.

- Define your transformation logic. Flow currently enables you to write transformations using either SQL or TypeScript.

Creating A New Materialization

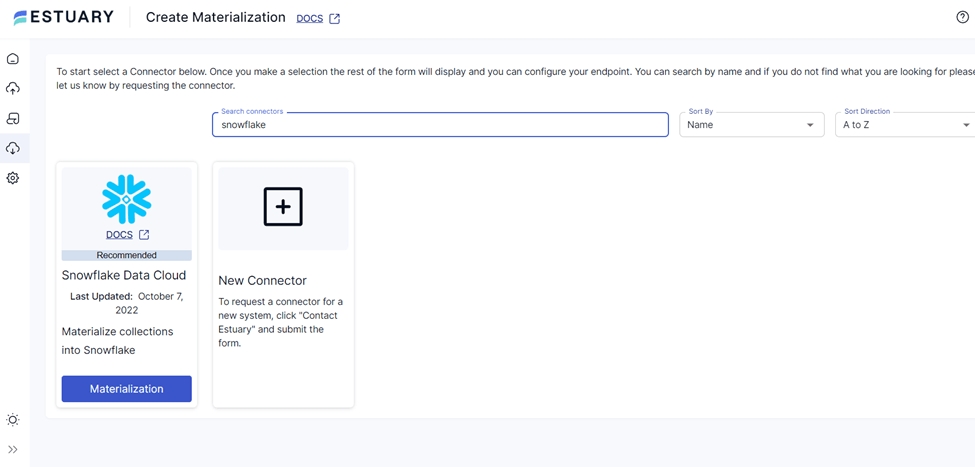

- After your Kafka data is captured into Flow collections, navigate to the ‘Materializations’ tab and click on ‘New materialization’.

- Choose the ‘Snowflake Connector’ from the available connectors.

- Fill in the required properties. This will typically include your Snowflake account details, database name, and other specific configuration properties.

- Name your materialization.

- Click ‘Next’.

- The Collection Selector will now display the collections you captured from Kafka.

- Once you’re satisfied with the configuration, click ‘Save and publish’. You'll receive a notification when the Snowflake materialization publishes successfully.

Using Apache Spark

Spark Streaming operates by dividing live input data streams into batches which are then processed by the Spark engine. This enables Spark to process real-time data with the same API and robustness as batch data. Here are 2 key concepts that you should be familiar with:

- Batch Duration: When creating a streaming application, you need to specify the batch duration. This is the time interval at which new batches of data are created and processed.

- DStream (Discretized Stream): A DStream is a high-level abstraction provided by Spark Streaming that represents a continuous data stream. DStreams can be created either from streaming data sources like Kafka, Flume, and Kinesis or by applying high-level operations to other DStreams.
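To make the batch duration concrete, here is a minimal plain-Python sketch of how arriving records can be grouped into fixed-length batches. It is not Spark's implementation; the `micro_batches` function and the sample events are hypothetical, and arrival times are given in seconds.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def micro_batches(
    events: List[Tuple[float, str]], batch_duration: float
) -> Dict[int, List[str]]:
    """Group (arrival_time_seconds, payload) pairs into fixed-length batches."""
    batches: Dict[int, List[str]] = defaultdict(list)
    for arrival_time, payload in events:
        # Records arriving within the same interval land in the same batch.
        batch_index = int(arrival_time // batch_duration)
        batches[batch_index].append(payload)
    return dict(batches)


# Hypothetical arrivals at 0.2s, 0.9s, 1.1s, and 2.5s with a 1-second batch duration.
events = [(0.2, "a"), (0.9, "b"), (1.1, "c"), (2.5, "d")]
batches = micro_batches(events, batch_duration=1.0)
```

A shorter batch duration lowers latency but produces more, smaller batches to schedule; a longer one does the opposite.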

Implementing data stream processing using Apache Spark involves 5 major steps. Each step below includes a code snippet that demonstrates Apache Spark’s streaming functionality, using the example of a real-time word count on data received from a TCP socket.
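Before the Spark version, it may help to see the word count logic applied to a single batch in plain Python. This is only a sketch: `word_count` is a hypothetical helper, and the comments note which DStream operation each stage loosely mirrors. Unlike a bare `split(" ")`, it also skips the empty strings produced by repeated spaces.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def word_count(batch_lines: List[str]) -> Dict[str, int]:
    """Count words in one batch of text lines."""
    # Loosely mirrors flatMap: one list of words from many lines.
    words: List[str] = [
        word for line in batch_lines for word in line.split(" ") if word
    ]
    # Loosely mirrors map: pair each word with a count of 1.
    pairs: List[Tuple[str, int]] = [(word, 1) for word in words]
    # Loosely mirrors reduceByKey: sum the counts for each distinct word.
    counts: Dict[str, int] = defaultdict(int)
    for word, n in pairs:
        counts[word] += n
    return dict(counts)


batch = ["hello world", "hello spark"]
counts = word_count(batch)
```

In Spark Streaming the same three stages run on every batch and are distributed across the cluster.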

Step 1

First, import the SparkContext and StreamingContext classes. A SparkContext object represents the connection to a Spark cluster and can be used to create RDDs (Resilient Distributed Datasets) and broadcast variables on that cluster.

```python
# Core Spark entry point and the streaming entry point built on top of it
from pyspark import SparkContext
from pyspark.streaming import StreamingContext
```

Step 2

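Create a SparkContext, then use it to create a StreamingContext object, which is the entry point for Spark Streaming functionality. When creating a StreamingContext, specify a batch interval. Spark Streaming will divide the data into batches of this interval and process them. In this example, we set the batch interval to 1 second. The master is set to local[2] rather than local because the socket receiver occupies one thread, so at least one more thread is needed to process the data.

```python
# "local[2]" runs Spark locally with two threads: one for the receiver, one for processing
sc = SparkContext("local[2]", "First App")
# The second argument is the batch interval in seconds
ssc = StreamingContext(sc, 1)
```

Step 3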

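Create a DStream (Discretized Stream), which represents a stream of data. Here, it is the stream of text lines received from a TCP socket on localhost, port 9999.

```python
# Each record in this DStream is one line of text read from the socket
lines = ssc.socketTextStream("localhost", 9999)
```

Step 4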

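Apply transformations and output operations to DStreams. Transformations produce new DStreams from existing ones, while output operations such as pprint() trigger the computation and emit the results. At least one output operation must be registered before the streaming context is started.

```python
words = lines.flatMap(lambda line: line.split(" "))  # split each line into words
pairs = words.map(lambda word: (word, 1))            # pair each word with a count of 1
wordCounts = pairs.reduceByKey(lambda x, y: x + y)   # sum the counts per word in each batch
wordCounts.pprint()                                  # output operation: print the first elements of each batch
```

Step 5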

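Finally, start the streaming computation and wait for it to terminate. To try the example locally, you can send text to port 9999 with a utility such as netcat (for example, nc -lk 9999).

```python
ssc.start()             # start the computation
ssc.awaitTermination()  # wait for the computation to terminate
```

3 Real-World Case Studies Of Stream Processing: Illuminating Success Stories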

Stream processing has become an essential tool for companies dealing with vast amounts of data in real time. Let’s look at some real-world case studies to understand its importance.

Thomson Reuters

Thomson Reuters, a world-renowned information provider, serves a wide range of markets, including legal, financial, risk, tax, accounting, and media. They needed a solution to capture, analyze, and visualize analytics data from their varied offerings.

For this, they built their analytics solution called Product Insight on AWS. Some key components of the solution include:

- Amazon S3 for permanent data storage.

- AWS Lambda for data loading and processing.

- Elasticsearch cluster for real-time data availability.

- An initial event ingestion layer comprising Elastic Load Balancing and customized NGINX web servers.

- A stream processing pipeline composed of Amazon Kinesis Streams, Amazon Kinesis Firehose, and AWS Lambda serverless compute.

Thomson Reuters’ Product Insight system showcases several benefits of using streaming data processing:

- Reliability: Thanks to its robust failover architecture, the system has had no data loss since the inception of Product Insight.

- Scalability: The system can handle up to 4,000 events per second and is projected to manage over 10,000 in the future.

- Real-time Analysis: The streaming data architecture, powered by Amazon Kinesis, ensures that new events are delivered to user dashboards in less than 10 seconds.

- Security: Using AWS Key Management Service, Thomson Reuters ensured the encryption of data both in transit and at rest, meeting internal and external compliance requirements.

Live Sports Streaming Platform

Live Sports, a leading sports streaming provider in the US and Western Europe, was trying to expand its live streaming services beyond horse racing. The main challenge was to revitalize an outdated system to incorporate a variety of sports, attract a wider audience, and generate new revenue sources.

To do so, they completely overhauled the system’s core, integrating more data providers and sports data, and developing a central engine for data aggregation and processing.

They adopted Kafka streams to reduce the database’s burden and increase efficiency. This new approach to data stream processing had significant benefits:

- The system now covers multiple sports, catering to diverse fan interests.

- The platform can manage an average of 10,000 sporting events daily, working with over 20 third-party providers.

- Fans can enjoy real-time and archived streams, live game visualizations, and race statistics, significantly enhancing user engagement.

Shippeo

Shippeo, a supply chain visibility provider, uses data stream processing to generate accurate ETA predictions and performance insights. The company faced challenges around data replication at scale, including running out of disk space and snapshotting that could take days to finish.

To solve this, they leveraged a variety of technologies including:

- Kafka: Used to consume the events in near real time, sent from Debezium.

- Snowflake, PostgreSQL, BigQuery: Downstream systems where the data is pushed by Kafka Sink Connectors.

- Debezium: An open-source tool for log-based Change Data Capture (CDC), used to track and stream all changes in a database as events.

Using data stream processing, Shippeo greatly enhanced its data management. Real-time data processing ensures accurate, timely decision-making, while scalable data replication accommodates growing data needs.
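To illustrate what consuming log-based CDC events involves, here is a simplified plain-Python sketch that applies a stream of change events to an in-memory replica. The event shape is loosely modeled on Debezium's change event envelope, which carries an `op` code plus `before` and `after` row images; the `apply_change` helper, the table contents, and the field names are hypothetical and are not taken from Shippeo's system.

```python
from typing import Dict


def apply_change(replica: Dict[int, dict], event: dict) -> None:
    """Apply one change event to a replica keyed by primary key."""
    op = event["op"]
    if op in ("c", "r", "u"):
        # Create, snapshot read, or update: store the new row image.
        row = event["after"]
        replica[row["id"]] = row
    elif op == "d":
        # Delete: remove the row identified by the old row image.
        replica.pop(event["before"]["id"], None)


# Hypothetical change events for a shipments table, in commit order.
events = [
    {"op": "c", "before": None, "after": {"id": 1, "status": "planned"}},
    {"op": "u", "before": {"id": 1, "status": "planned"},
     "after": {"id": 1, "status": "in_transit"}},
    {"op": "c", "before": None, "after": {"id": 2, "status": "planned"}},
    {"op": "d", "before": {"id": 2, "status": "planned"}, "after": None},
]

replica: Dict[int, dict] = {}
for event in events:
    apply_change(replica, event)
```

Applying events in order leaves the replica with only the rows that still exist in the source, each in its latest state.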

6 Best Stream Processing Frameworks & Platforms

For quick and efficient implementation, using a ready-made stream processing framework or cloud platform is your best option. These can enable you to quickly ingest, analyze, and act on real-time data. Let’s look at some important ones:

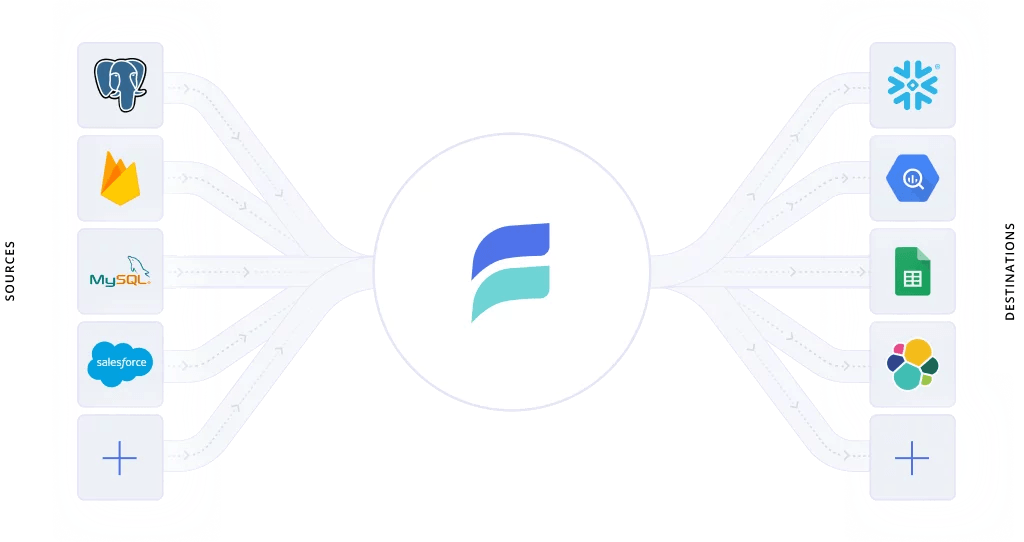

Estuary Flow

Estuary Flow is our real-time data operations platform that’s built on a real-time streaming broker called Gazette. Flow allows the flexible integration of SQL, batch, and millisecond-latency streaming processing paradigms. It provides real-time data streaming capabilities and focuses on high-scale technology systems like databases, filestores, and pub-sub.

Pros Of Estuary Flow

- Flexibility: Both web UI and CLI support are available.

- Scalability: Flow can easily scale up as your business's data needs grow.

- Event-Based Architecture: This architecture provides better performance and pricing at scale.

Apache Kafka

Apache Kafka, an open-source platform, is a popular tool for streaming data processing. It was originally developed at LinkedIn for real-time data processing and supports the shift from batch to real-time streaming.

Pros Of Apache Kafka

- Fault-Tolerance: Kafka replicates data across different brokers in the cluster, minimizing data loss.

- Integration Capabilities: Kafka can be integrated with various data processing frameworks like Hadoop and Spark.

- High-Throughput and Scalability: Kafka can process millions of messages per second and can scale up by adding more machines to increase capacity.

Apache Spark

Apache Spark is an open-source unified analytics engine and is renowned for processing vast amounts of data in near-real-time. With Apache Spark, you can perform various operations on streaming data, such as filtering, transforming, and aggregating, to derive valuable insights.

Pros Of Apache Spark

- Batch Processing: Spark simplifies batch processing, making it easier to handle large data sets.

- Advanced Analytics: Spark supports advanced analytics, including machine learning and graph processing.

- Fault Tolerance: It can recover from failures and continue to process data streams without losing any information.

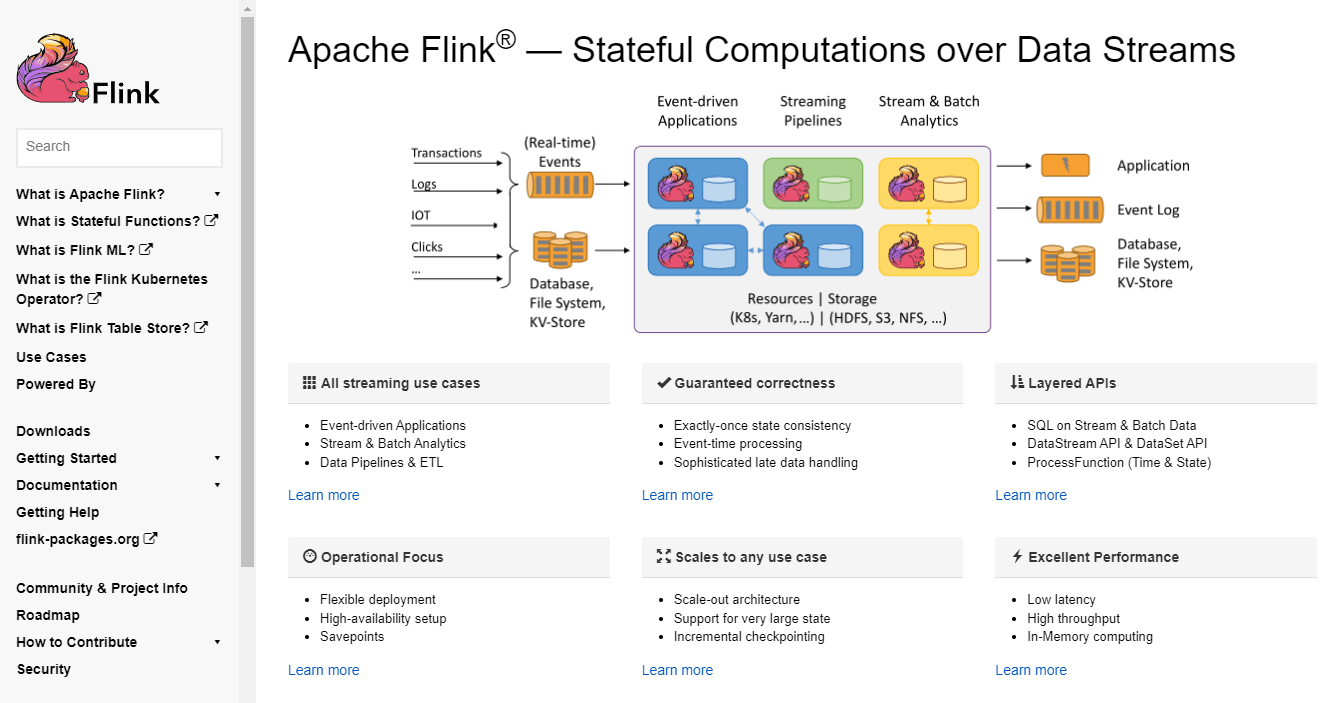

Apache Flink

Apache Flink is an open-source framework designed primarily for stream processing, though it also supports batch processing. It can run computations over both bounded and unbounded data streams from a multitude of sources.

Pros Of Apache Flink

- Intuitive UI: Flink’s user interface is easy to navigate and understand.

- Dynamic Task Optimization: Flink can analyze and optimize tasks dynamically, enhancing efficiency.

- Event-Time Processing: It can handle out-of-order events and provide accurate results by considering event timestamps.
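The idea behind event-time processing can be sketched in plain Python. This is not Flink's API: `tumbling_window_counts` is a hypothetical helper that assigns each event to a fixed-size window based on the timestamp carried by the event itself, so events that arrive out of order are still counted in the correct window. Flink additionally uses watermarks to decide when a window can be closed, which this sketch leaves out.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def tumbling_window_counts(
    events: List[Tuple[int, str]], window_seconds: int
) -> Dict[int, int]:
    """Count events per window using each event's own timestamp."""
    counts: Dict[int, int] = defaultdict(int)
    for event_time, _payload in events:
        # The window is chosen by event time, not by arrival order.
        window_start = (event_time // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(counts)


# Hypothetical (event_time_seconds, payload) pairs listed in arrival order;
# the events stamped 3 and 7 arrive after later events.
arrivals = [(12, "a"), (3, "b"), (14, "c"), (7, "d"), (21, "e")]
counts = tumbling_window_counts(arrivals, window_seconds=10)
```

Grouping by arrival order instead would spread the late events across the wrong windows, which is the inaccuracy that event-time processing avoids.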

Apache Storm

Apache Storm is a distributed stream processing computation framework written in Clojure and Java. It’s designed to process vast amounts of data in real time, making it an excellent choice for live data streams.

Pros Of Apache Storm

- Simple API: The simplicity of Storm’s API makes it easy for developers to use and understand.

- High Throughput: Storm can process millions of records per second, making it suitable for high-volume real-time processing.

- Horizontal Scalability: You can add more computing resources as your stream processing needs grow, so you can handle larger volumes of data without sacrificing performance.

Amazon Kinesis Data Streams

Amazon Kinesis Data Streams is a fully managed service offered by Amazon Web Services (AWS) that’s designed for real-time data streaming. It allows you to ingest, process, and analyze large volumes of data in real time.

Pros Of Amazon Kinesis Data Streams

- Scalability: It scales to handle large and fluctuating volumes of streaming data, which makes it suitable for the dynamic nature of real-time data.

- Easy to Set Up and Maintain: As a fully managed service, Kinesis Data Streams simplifies the setup and maintenance process for developers.

- Integration: It integrates well with Amazon’s big data toolset, like Amazon Kinesis Data Analytics, Amazon Kinesis Data Firehose, and AWS Glue Schema Registry.

Conclusion

The ability to process data in real time sets successful businesses apart. Streaming data processing allows you to analyze and act on live data, providing advantages in operational efficiency, insights, and decision-making.

Finance, eCommerce, IoT, and social media only scratch the surface of what streaming data processing can achieve. It's up to you to explore how this powerful technology can transform your organization's data analytics.

By implementing the right tools, leveraging the right techniques, and staying up-to-date with advancements in the field, you can benefit from real-time insights and stay ahead in today's fast-paced data-driven landscape.

If you are looking for a platform that offers flexibility, scalability, and ease of use, there is none better than Estuary Flow. With our platform, you can effortlessly integrate various data sources and destinations and create robust real-time DataOps solutions that can transform your business’s data analytics capabilities. Sign up today to try Estuary Flow for free or contact us to discuss how we can help.

