If you're working with SQL databases, you're likely already familiar with how important SQL Change Data Capture (CDC) is for monitoring changes to your database tables in real time. Your database is a treasure trove of valuable data that fuels your business decisions. Missing even minor updates, inserts, or deletes can jeopardize the integrity and security of your data, not to mention the damage it can do to your business reputation.

While CDC is undoubtedly important, choosing the right CDC technique for your SQL database can be a challenge, because some methods suit certain situations better than others. Choose the wrong one and you may miss important changes to your data or waste resources on unnecessary monitoring.

In today’s guide, we address these concerns. We’ll discuss how CDC works with various SQL databases, including Microsoft SQL Server, MySQL, and PostgreSQL, and introduce you to the top CDC tools available on the market. By the end of this guide, you’ll be familiar with industry best practices for implementing CDC in your organization and know how to keep your data up-to-date, accurate, and ready for action.

What Is Change Data Capture (CDC)? Understanding Its Capabilities & Benefits

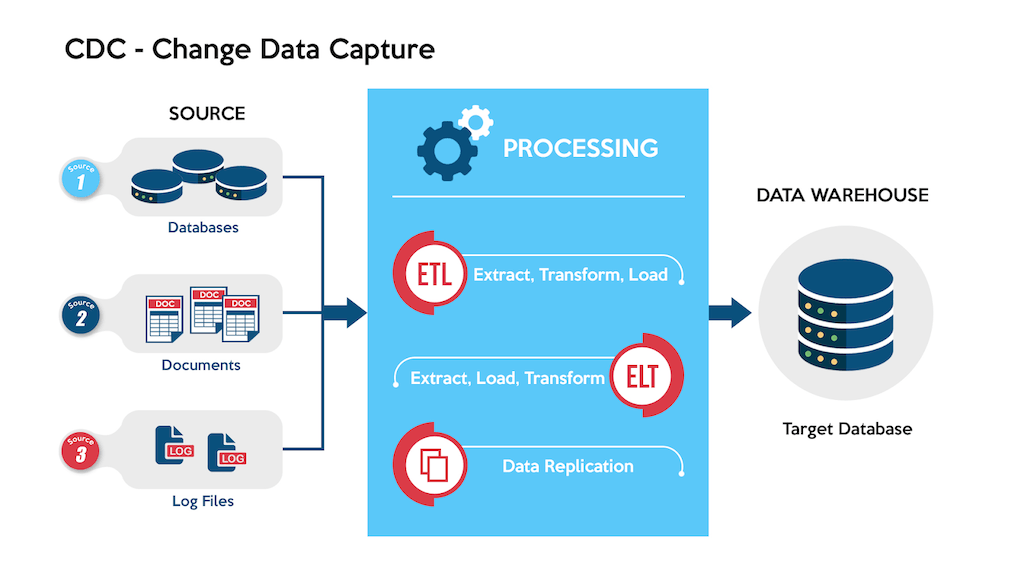

Change Data Capture is a process that identifies and tracks changes to data within your SQL databases and then transfers those changes to downstream systems or processes in real-time or near-real-time. It ensures that your data is always up-to-date and accessible whenever you need it.

There are plenty of scenarios where CDC comes in handy. For instance, it's a game changer for data warehousing and business intelligence because it keeps your warehouse data up-to-date and accurate. It’s also useful for data migration, system integration, and maintaining audit trails for compliance purposes.

CDC is also a crucial component of streaming ETL pipelines. ETL pipelines move data from various sources into a data warehouse, and CDC keeps the warehouse updated in real time or near real time, which is essential for reporting and analytics.

Implementing CDC in your organization can have many benefits. Let’s talk about some of them.

9 Real-World Benefits Of Change Data Capture (CDC)

Here are some important benefits of implementing CDC in your SQL databases:

- Real-time data updates: CDC allows you to capture and process data changes as they happen so you can always have up-to-date information at your fingertips.

- Stream processing compatibility: CDC works great with stream processing solutions like Apache Kafka, opening up new possibilities for your data processing workflows.

- Efficient resource usage: By transferring data in small increments, CDC minimizes the impact on your system resources and makes your processes leaner and meaner.

- No need for batch windows: With CDC, you can forget about those cumbersome batch windows and bulk loading. It’s all about real-time operations now.

- Zero-downtime migrations: CDC enables database migrations with little or no downtime by keeping the new database in sync while the old one continues to serve traffic. This keeps your operations running smoothly and continuously.

- Synchronized data systems: Keep your data consistent across multiple systems with CDC’s real-time synchronization capabilities. No more worrying about outdated information.

- Perfect for the cloud: CDC’s efficiency in moving data across wide area networks makes it an ideal solution for cloud-based environments.

- Improved reliability and availability: By keeping your data in sync across various systems, CDC enhances your data’s reliability and accessibility, which is crucial for business continuity.

- Fresh insights: With constantly updated data in your warehouses, you can enjoy accurate, up-to-date insights through Business Intelligence tools or AI/machine learning pipelines.

Should You Use Change Data Capture?

Before deciding to use change data capture (CDC), consider these questions to help you make an informed decision:

- Do you need real-time data loading and connectivity?

If your organization handles large amounts of data across multiple databases and requires real-time data loading, CDC can provide the necessary speed and connectivity.

- Are you experiencing disruptions in production workloads due to slow data processing?

CDC processes data quickly and efficiently, minimizing disruptions to your production workloads. If you're struggling with slow data processing, CDC could be the solution.

- Are faster reporting and better business intelligence important to your organization?

Building on the above question, CDC can greatly accelerate data collection for quicker reporting and improved business intelligence capabilities. If timely decision-making based on up-to-date information is important for your business, CDC provides the necessary speed and insights.

- Is your company’s master data management system in need of improvement?

If you're looking to enhance your organization's master data management system, CDC can help by continuously updating critical data from multiple sources. This keeps your master data consistent and up-to-date.

- Do you need to integrate apps with incompatible databases?

CDC is one way to integrate apps that may otherwise be incompatible with your in-house database systems. It provides you with flexibility in choosing the right business applications for your company.

- Is the pressure on your operational databases affecting overall performance?

If your operational databases are under stress, CDC helps by replicating their data to other systems, so read-heavy workloads such as reporting and analytics can run there instead of on the production database. This leads to better performance and stability.

While it's helpful to know what CDC is, it's equally important to understand the various types available to determine which one aligns best with your business model. So let's take a closer look at the different types.

4 Types Of CDC Methods: Weighing Pros & Cons Of Each

Now that we’ve set the stage, let’s dive into the various CDC methods. We’ll explore each type, discuss their pros and cons, and figure out the best scenarios to use them.

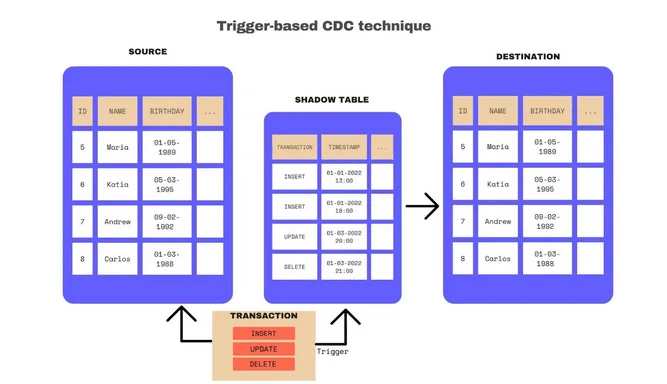

A. Trigger-Based CDC

This method relies on database triggers that fire on every insert, update, or delete and record the change in shadow tables. It operates at the SQL level, and most relational databases support triggers natively. Its main strength is real-time data capture, and the shadow tables provide a detailed log of every transaction.

However, there are downsides too. Trigger-based CDC can significantly reduce database performance because every row insert, update, or delete also triggers a write to the shadow table within the same transaction. Triggers also have to be maintained as applications and schemas change, which adds management overhead.

This method suits applications where a detailed change log is crucial and where the impact on performance is manageable.

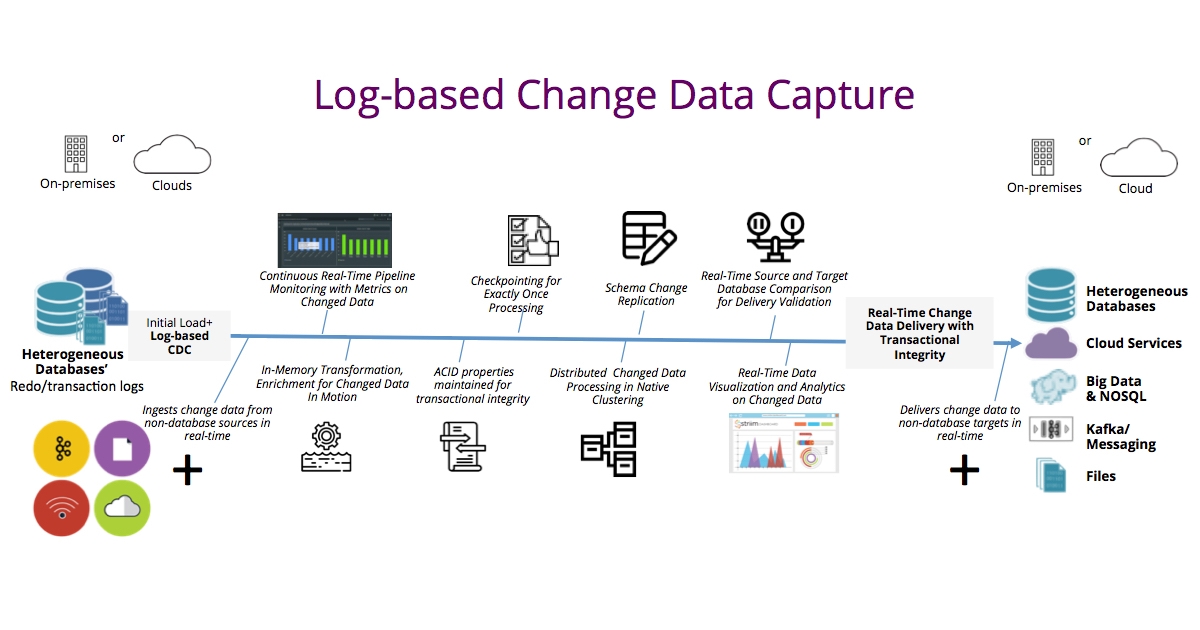

B. Log-Based CDC

Log-based CDC reads the source database's transaction log to capture new transactions, including inserts, updates, and deletes. The transaction log goes by a different name in some relational databases, but it does more or less the same thing in all of them. For example, it’s called the write-ahead log (WAL) in PostgreSQL and the binary log (binlog) in MySQL.

Performance is much less of a concern here. Log-based CDC is less intrusive than trigger-based CDC and has minimal impact on the production database, since it doesn’t require scanning operational tables or making application-level changes.

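However, parsing internal logging formats can be complex, and you may need to update your parsing logic with each new database release. You may also need to raise the database's logging level so the log contains enough detail to reconstruct each change (for example, setting wal_level to logical in PostgreSQL or using row-based binary logging in MySQL), and you need a way to manage change event metadata, such as each consumer's position in the log.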

This type of CDC is ideal when you need minimal impact on the source system and want downstream systems to receive committed transactions in the order they were committed, preserving ACID guarantees across multiple systems.
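Real log-based CDC depends on database-specific machinery. In PostgreSQL, for instance, once wal_level is set to logical you can create a slot (here named my_slot) with pg_create_logical_replication_slot('my_slot', 'test_decoding') and read decoded changes with pg_logical_slot_get_changes('my_slot', NULL, NULL). The Python sketch below does not parse any real log format. It simulates the log with an in-memory list (LogEntry and LogReader are hypothetical names) to show the core mechanics: the reader remembers the last log sequence number (LSN) it processed, resumes from there, and never queries the source tables.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class LogEntry:
    lsn: int                        # log sequence number, strictly increasing
    op: str                         # 'insert', 'update' or 'delete'
    key: int                        # primary key of the affected row
    row: Optional[Dict[str, Any]]   # new row image; None for deletes


@dataclass
class LogReader:
    """Tails a simulated transaction log and replays it into a replica."""
    last_lsn: int = 0               # resume position, like a replication slot
    replica: Dict[int, Dict[str, Any]] = field(default_factory=dict)

    def poll(self, log: List[LogEntry]) -> int:
        """Apply every entry newer than last_lsn; return how many were applied."""
        applied = 0
        for entry in log:
            if entry.lsn <= self.last_lsn:
                continue            # already applied on an earlier poll
            if entry.op == "delete":
                self.replica.pop(entry.key, None)
            else:                   # inserts and updates carry the full new row
                self.replica[entry.key] = dict(entry.row or {})
            self.last_lsn = entry.lsn
            applied += 1
        return applied


if __name__ == "__main__":
    log = [
        LogEntry(1, "insert", 1, {"status": "new"}),
        LogEntry(2, "insert", 2, {"status": "new"}),
        LogEntry(3, "update", 1, {"status": "shipped"}),
        LogEntry(4, "delete", 2, None),
    ]
    reader = LogReader()
    print(reader.poll(log), reader.replica)   # 4 {1: {'status': 'shipped'}}
    print(reader.poll(log))                   # 0 (nothing new since LSN 4)
```

A real reader would persist last_lsn durably (PostgreSQL replication slots and MySQL binlog positions play this role) and seek straight to it instead of scanning the log from the start.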

C. Polling-Based CDC

In this approach, the source database is polled at regular intervals; the changes found on each poll are extracted, stored in a separate table, and then propagated to the target system. It’s a relatively simple approach that’s easy to implement, as it doesn’t require any triggers or log parsing.

However, polling-based CDC adds latency and may miss some changes if the polling interval is too long. For example, if each poll compares table snapshots, a row that is updated several times, or inserted and then deleted, between two polls only shows its final state. It’s best suited for applications with low-frequency data changes where near-real-time updates aren’t a priority.
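One common way to implement polling is to read the source table on every poll and compare it with the previous snapshot. The sketch below uses plain Python dicts as stand-ins for table snapshots (diff_snapshots is a hypothetical helper) and illustrates the blind spot described above.

```python
from typing import Any, Dict, List, Tuple

Snapshot = Dict[int, Dict[str, Any]]   # primary key -> row contents


def diff_snapshots(prev: Snapshot, curr: Snapshot) -> List[Tuple[str, int]]:
    """Derive (operation, key) events by comparing two consecutive polls."""
    events = [("insert", k) for k in sorted(curr.keys() - prev.keys())]
    events += [
        ("update", k)
        for k in sorted(prev.keys() & curr.keys())
        if prev[k] != curr[k]
    ]
    events += [("delete", k) for k in sorted(prev.keys() - curr.keys())]
    return events


if __name__ == "__main__":
    poll_1 = {1: {"status": "new"}}
    # Between the two polls, order 1 went new -> paid -> shipped,
    # order 2 was inserted and then deleted, and order 3 was inserted.
    poll_2 = {1: {"status": "shipped"}, 3: {"status": "new"}}
    print(diff_snapshots(poll_1, poll_2))
    # [('insert', 3), ('update', 1)]
    # The 'paid' state and order 2 never reach the target.
```

Shortening the interval narrows this window but never closes it, which is why log-based CDC is preferred when every intermediate change matters.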

D. Query-Based CDC

Query-based CDC involves running queries to detect changes in the database. It relies on a column such as a last-modified timestamp or version number, so each extraction retrieves only the rows that have changed since the previous one. It’s flexible and can work with most databases.

But this method can be resource-intensive, since the queries run against operational tables, and it might not be the fastest option. It can also be error-prone and cause data consistency issues: hard deletes leave no row to query, and updates that don’t touch the tracking column are missed. Query-based CDC works well when you can build it with native application logic and don’t require external tools.
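The sketch below shows the usual watermark pattern with Python's sqlite3 module, assuming a hypothetical orders table whose application sets an integer updated_at value on every write (a real system would typically use a timestamp or a rowversion-style column). It also shows the delete problem: once a row is gone, no query can return it.

```python
import sqlite3
from typing import List, Tuple


def extract_changes(conn: sqlite3.Connection, watermark: int) -> Tuple[List[tuple], int]:
    """Fetch rows modified after `watermark`; return them plus the new watermark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # Assumption: the application bumps updated_at on every write.
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, "
        "updated_at INTEGER NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 100), (2, "new", 101)]
    )
    rows, wm = extract_changes(conn, 0)
    print(rows, wm)   # both rows, watermark 101

    conn.execute("UPDATE orders SET status = 'shipped', updated_at = 102 WHERE id = 1")
    conn.execute("DELETE FROM orders WHERE id = 2")   # hard delete
    rows, wm = extract_changes(conn, wm)
    print(rows, wm)   # [(1, 'shipped', 102)] 102: the delete of id 2 is invisible
```

Another subtle risk: if a transaction with an earlier updated_at value commits after the watermark has already moved past it, that row is skipped, so many implementations re-read a small overlap window.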

Knowing the different types of CDC methods is important, but choosing the right CDC tool is even more important, since the tool largely determines the efficiency and accuracy of your CDC process. Let’s discuss the leading options in greater detail.

5 Best CDC Tools For SQL Databases That Guarantee Efficiency

There are many CDC tools available in the market that can help you set up CDC from a SQL database like Microsoft SQL Server, Postgres, or MySQL quickly and efficiently. We will discuss the 5 most popular ones below.

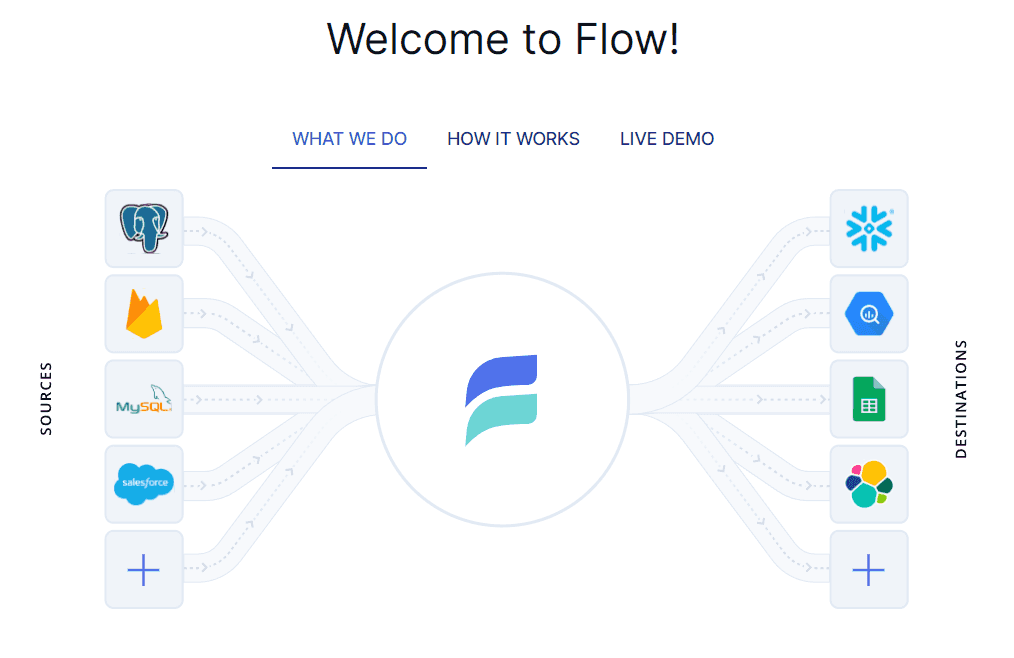

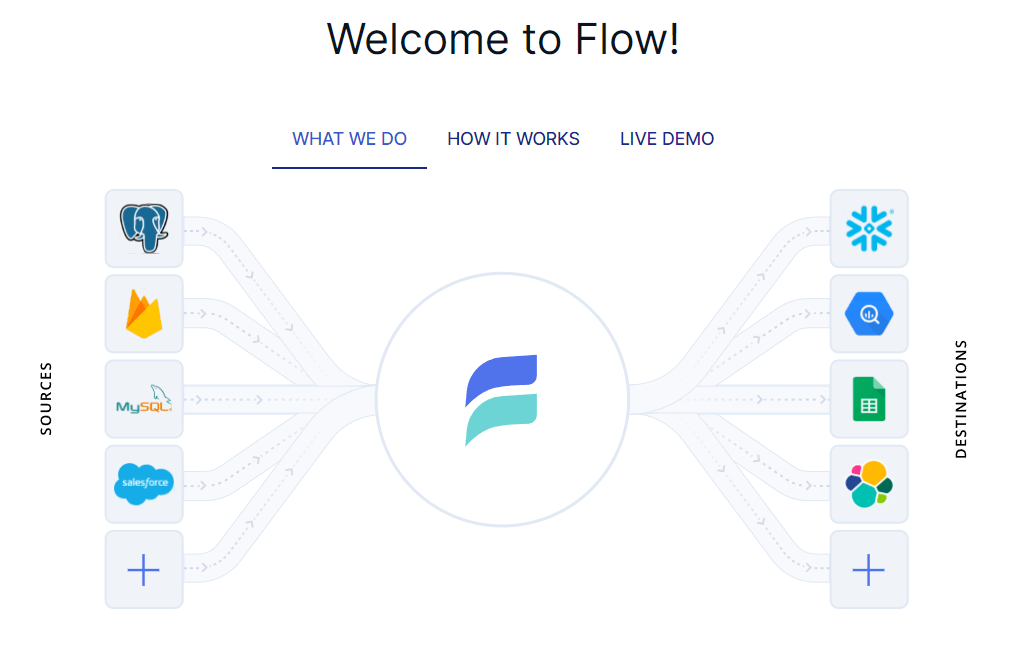

Estuary Flow

Estuary Flow is a cutting-edge DataOps platform designed to streamline data integration and processing. It is built around real-time CDC, which enables the platform to efficiently identify and capture changes in the source data. After capturing data, you can use Flow to transform it on the fly and move it to a destination system in real time. Because it uses stream processing, Estuary Flow minimizes data latency and keeps your data up-to-date.

Estuary Flow offers 200+ connectors for smooth integration with tons of data sources and targets. Its user-friendly interface makes creating and managing data workflows a breeze. The platform simplifies complex data operations and allows users to focus on deriving valuable insights from their data rather than being bogged down by technicalities.

Fivetran

Fivetran is another great DataOps platform with efficient CDC functionality that ensures your data is always fresh and arrives at the destination on time. What sets Fivetran apart is its library of 50+ pre-built data models for common scenarios like finance and digital marketing.

It also has 200+ pre-built connectors for easy integration with various data sources and destinations. Fivetran’s pricing is affordable as well: you only pay for the rows processed each month (billed as monthly active rows).

Airbyte

Airbyte is an open-source data integration platform that simplifies ELT data pipeline creation. It can efficiently capture changes in source data, ensuring up-to-date information with minimal latency.

Some of Airbyte’s features include a user-friendly interface and in-memory processing before pushing data to the destination. Because it is open source, users can even contribute improvements and new features. Airbyte has over 300 connectors and charges users based on the amount of data synced.

Hevo Data

Hevo Data is an end-to-end data pipeline platform that streamlines data integration and transformation. It features over 150 connectors for data sources and destinations, along with the ability to create no-code data pipelines, efficient data transformation for analytics, and operational intelligence delivery to business tools.

While it’s easy to use, it does limit customization and monitoring compared to more complex platforms. Hevo Data has pricing options for different business sizes, including a free plan and paid plans.

Talend

Talend provides an enterprise-grade data replication solution that works across hybrid and multi-cloud environments. It also supports CDC for real-time data replication, so your data stays fresh and reliable.

Talend offers many connectors that allow you to integrate pretty much any data type from any source to any destination, whether it’s on-premises or in the cloud.

Their cloud-native Talend Data Fabric has a whole suite of tools, like Pipeline Designer for creating, deploying, and reusing data pipelines, and Data Inventory for easy data discovery and sharing.

Knowing the tools is only half the picture. To get the most out of real-time data synchronization and easy tracking of data changes, you also need to know how to implement CDC with different SQL databases. Let’s take a look.

Prepare Your SQL Database For A CDC Pipeline

Setting up different types of SQL databases for Change Data Capture (CDC) involves several steps. These steps may vary depending on the specific database system you are using, but generally include enabling CDC, configuring database-specific settings, and setting up jobs or processes for managing your CDC tasks.

Let’s explore these steps in a broader context using Microsoft SQL Server as an example.

Enabling CDC For Your SQL Database

To get started with CDC, you first need to enable Change Data Capture on your SQL database.

For Microsoft SQL Server, this is a two-step process: you first enable CDC at the database level and then enable it for the specific tables you want to track. Let’s discuss each step in detail.

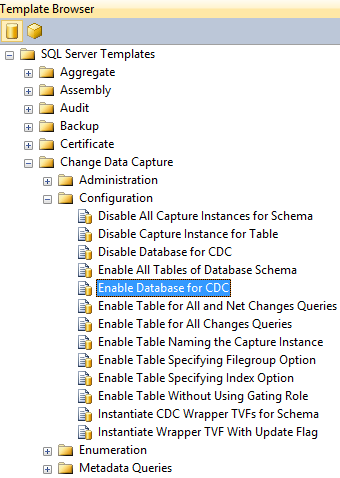

Step I.1. Enabling CDC At The Database Level

To enable CDC at the database level in Microsoft SQL Server, you need to be a member of the sysadmin fixed server role.

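Then run the system stored procedure sys.sp_cdc_enable_db within the database context. The capture and cleanup jobs that CDC relies on run under SQL Server Agent, so make sure the Agent service is running as well.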

Here's how to do it using SQL Server Management Studio:

- Open SQL Server Management Studio.

- Navigate to View > Template Explorer > SQL Server Templates.

- Select the Change Data Capture sub-folder to access the templates.

- Use the "Enable Database for CDC" template and run it within your desired database.
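If you prefer scripting over the SSMS template, the statements are short. Because this article's examples use Python, the sketch below only builds the T-SQL batches as strings (the function names and the SalesDB database name are hypothetical). Run them in SSMS separated by GO, or send each one with a separate cursor.execute() call through a driver such as pyodbc. The final query checks the is_cdc_enabled column of sys.databases.

```python
from typing import List


def quote_ident(name: str) -> str:
    """Bracket-quote a SQL Server identifier, escaping any ']'."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Render an N'...' Unicode string literal, doubling embedded quotes."""
    return "N'" + value.replace("'", "''") + "'"


def enable_db_cdc_batches(database: str) -> List[str]:
    """T-SQL batches that enable CDC on `database` and then verify it."""
    return [
        f"USE {quote_ident(database)};",
        "EXEC sys.sp_cdc_enable_db;",   # requires sysadmin membership
        "SELECT name, is_cdc_enabled FROM sys.databases "
        f"WHERE name = {quote_literal(database)};",
    ]


if __name__ == "__main__":
    for batch in enable_db_cdc_batches("SalesDB"):   # placeholder database name
        print(batch)
        print("GO")
```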

Step I.2. Enabling CDC For Specific Tables

Before you can enable CDC for specific tables, make sure it’s already enabled at the database level.

If it is, you can enable CDC at the table level as a member of the db_owner fixed database role. Here’s how:

- Use the "Enable a Table Specifying Filegroup Option" template within your desired database.

- Edit the parameters as per your requirements.

- Run the procedure to enable CDC for your desired table.
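The table-level template boils down to a call to sys.sp_cdc_enable_table. This hypothetical Python helper renders that call from a few options. The parameter names in the generated T-SQL (@source_schema, @source_name, @role_name, @filegroup_name, @captured_column_list, @supports_net_changes) belong to the procedure itself, while the schema, table, filegroup, and column names in the example are placeholders.

```python
from typing import Optional, Sequence


def quote_literal(value: str) -> str:
    """Render an N'...' Unicode string literal, doubling embedded quotes."""
    return "N'" + value.replace("'", "''") + "'"


def enable_table_cdc_sql(
    schema: str,
    table: str,
    role_name: Optional[str] = None,
    filegroup: Optional[str] = None,
    captured_columns: Optional[Sequence[str]] = None,
    supports_net_changes: bool = False,
) -> str:
    """Build an EXEC sys.sp_cdc_enable_table statement."""
    params = [
        f"@source_schema = {quote_literal(schema)}",
        f"@source_name = {quote_literal(table)}",
        # @role_name must always be passed; NULL means no gating role.
        f"@role_name = {quote_literal(role_name) if role_name else 'NULL'}",
    ]
    if filegroup:
        params.append(f"@filegroup_name = {quote_literal(filegroup)}")
    if captured_columns:
        # Capture only what you need; include the table's key column(s).
        params.append(
            f"@captured_column_list = {quote_literal(', '.join(captured_columns))}"
        )
    if supports_net_changes:
        # Needs a primary key or unique index on the source table.
        params.append("@supports_net_changes = 1")
    return "EXEC sys.sp_cdc_enable_table\n    " + ",\n    ".join(params) + ";"


if __name__ == "__main__":
    print(enable_table_cdc_sql(
        "dbo", "Orders",
        filegroup="CDC_FG",                    # placeholder filegroup
        captured_columns=["OrderID", "Status"],
        supports_net_changes=True,
    ))
```

Leaving out filegroup and captured_columns falls back to the procedure's defaults: the database's default filegroup and all columns of the table.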

You’ve now successfully enabled CDC for your SQL Server database.

Note that the steps provided above are specific to Microsoft SQL Server. For other SQL databases, the steps may differ. Check out our guides for enabling CDC in MySQL and PostgreSQL for more details.

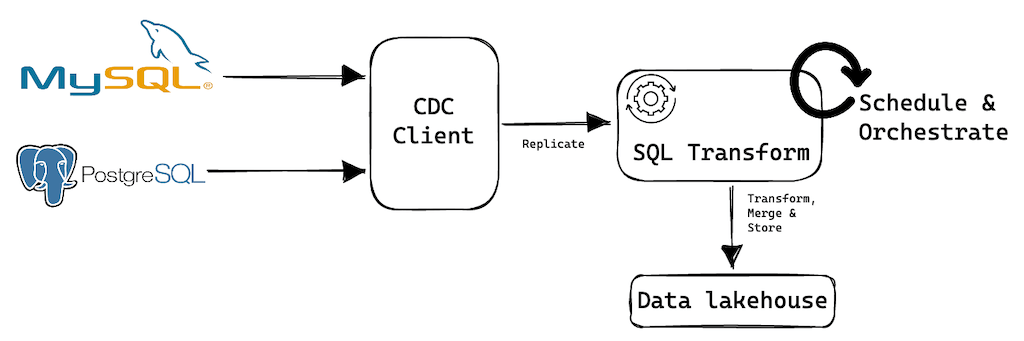

Setting Up Estuary Flow For SQL CDC

With CDC enabled in your SQL database, it is time to connect your CDC tool to your database. Estuary Flow is a modern data integration platform that enables you to easily build real-time data pipelines.

Let’s walk through the steps for setting up CDC from a SQL database with Estuary Flow. Before you get started, make sure you meet the prerequisites. You will need to:

- Enable CDC in your SQL database, as discussed in Step I.

- Register for an Estuary account.

- Satisfy database-specific requirements. You can check these in the Estuary Flow documentation for MySQL, PostgreSQL, and SQL Server.

A basic CDC Data Flow has the following three parts:

- Capture: pulls data from the SQL database source

- Collections: where Flow stores the captured data in a real-time cloud data lake

- Materialization: pushes data to an external destination

Both capture and materialization need connectors, which are components that link Flow with data storage.

To start, log in to the Estuary Flow dashboard.

Step 1 - Create A New SQL Capture

- Click Captures, then New Capture.

- Pick the appropriate SQL database Connector.

- Name your capture, like myOrg/myFirstSQLCapture.

- Complete the required fields and click Next.

- Flow lists the tables in the database; it will ingest each one as a collection.

- Next, click Save and publish. Once the dialog box shows the operation was successful, click Materialize collections to push the CDC data to a destination.

Step 2 - Create An SQL CDC Materialization

The "CDC" part is technically done, but now you have to connect your pipeline of real-time change data to your destination of choice.

- If you missed the button after completing your capture, no worries: just click Materializations, then New Materialization.

- Choose the Connector for your data destination, such as a data warehouse like Snowflake or BigQuery.

- Fill out Endpoint Configuration properties. These depend on the system you chose.

- Name your materialization, e.g., myOrg/myFirstMaterialization.

- Click Next to let Flow connect to the destination.

- If necessary, adjust collections before materializing.

- Click Save and publish. You'll be notified when the Data Flow is published successfully.

Capturing Change Data From SQL Database

With SQL CDC enabled and Estuary Flow set up, you can now start capturing change data from your SQL database. Here’s a brief overview of how this process works:

- As changes occur in your source database, they are recorded in its transaction log. In SQL Server, a capture job reads the log and stores the change data in dedicated change tables; with PostgreSQL and MySQL, the CDC connector reads the transaction log (WAL or binlog) directly.

- Estuary Flow processes the captured change data, transferring it to your data warehouse or other downstream systems.

- You can then access the change data in your data warehouse for analysis, reporting, or other business intelligence purposes.
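To see what a connector does with SQL Server's change tables under the hood, the sketch below builds the standard query (sys.fn_cdc_get_min_lsn, sys.fn_cdc_get_max_lsn, and the generated cdc.fn_cdc_get_all_changes_<capture_instance> function) and turns the returned rows into simple change events using the __$operation codes: 1 = delete, 2 = insert, 3 = update before image, 4 = update after image. It is an illustration under assumptions, not how Estuary Flow is implemented. The capture instance name dbo_Orders follows SQL Server's default schema_table naming, and the sample rows are made up.

```python
from typing import Any, Dict, Iterable, List

# Meaning of the __$operation column in SQL Server change rows.
OPERATIONS = {1: "delete", 2: "insert", 3: "update_before", 4: "update_after"}


def get_all_changes_sql(capture_instance: str) -> str:
    """Build T-SQL that reads every change currently held for a capture instance."""
    if not capture_instance.isidentifier():
        # Conservative guard: the name is spliced into a function name below.
        raise ValueError(f"unexpected capture instance name: {capture_instance!r}")
    return (
        f"DECLARE @from_lsn binary(10) = sys.fn_cdc_get_min_lsn(N'{capture_instance}');\n"
        "DECLARE @to_lsn binary(10) = sys.fn_cdc_get_max_lsn();\n"
        f"SELECT * FROM cdc.fn_cdc_get_all_changes_{capture_instance}"
        "(@from_lsn, @to_lsn, N'all');"
    )


def to_events(rows: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Convert change rows (as dicts from any DB driver) into simple events."""
    events = []
    for row in rows:
        op = OPERATIONS[row["__$operation"]]
        if op == "update_before":
            continue  # keep only the after image of each update
        data = {k: v for k, v in row.items() if not k.startswith("__$")}
        events.append({
            "op": "update" if op == "update_after" else op,
            "lsn": row["__$start_lsn"],
            "data": data,
        })
    return events


if __name__ == "__main__":
    print(get_all_changes_sql("dbo_Orders"))
    # Made-up rows; real LSNs are binary(10). An operation-3 row only appears
    # with the N'all update old' option, and to_events skips it either way.
    sample_rows = [
        {"__$start_lsn": b"\x01", "__$operation": 2, "OrderID": 1, "Status": "new"},
        {"__$start_lsn": b"\x02", "__$operation": 3, "OrderID": 1, "Status": "new"},
        {"__$start_lsn": b"\x02", "__$operation": 4, "OrderID": 1, "Status": "shipped"},
        {"__$start_lsn": b"\x03", "__$operation": 1, "OrderID": 1, "Status": "shipped"},
    ]
    for event in to_events(sample_rows):
        print(event)
```

An incremental reader would store the last LSN it processed and start the next read from sys.fn_cdc_increment_lsn of that value rather than from the minimum LSN.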

With this, you can keep your data warehouse up-to-date and accurate with data coming from your SQL Server or other SQL database.

Change Data Capture Best Practices For Efficient & Secure Data Integration

When implementing CDC in your organization, following industry best practices helps ensure the work is done efficiently. Here are some crucial best practices to consider:

Asynchronous Change Propagation

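Opt for a CDC solution that supports asynchronous, pub/sub-style change propagation to consumers. Because the capture process doesn't wait for consumers, a slow consumer won't hold up capture or other consumers, which improves the scalability and performance of your data integration processes.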
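Here is a minimal in-process sketch of the pattern using Python's asyncio: each subscriber gets its own queue, so the publisher never waits on a slow consumer. ChangeBus and the consumer names are hypothetical; in production this role is usually played by a durable broker such as Apache Kafka rather than in-memory queues.

```python
import asyncio
from typing import Any, List, Tuple


class ChangeBus:
    """Minimal in-process pub/sub: every subscriber gets its own queue."""

    def __init__(self) -> None:
        self._queues: List[asyncio.Queue] = []

    def subscribe(self) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._queues.append(queue)
        return queue

    def publish(self, event: Any) -> None:
        # put_nowait never blocks, so capture is not slowed by any consumer.
        for queue in self._queues:
            queue.put_nowait(event)


async def consume(name: str, queue: asyncio.Queue, delay: float,
                  seen: List[Tuple[str, Any]]) -> None:
    while True:
        event = await queue.get()
        if event is None:           # sentinel: no more events
            return
        await asyncio.sleep(delay)  # simulate per-event processing time
        seen.append((name, event))


async def main() -> List[Tuple[str, Any]]:
    bus = ChangeBus()
    seen: List[Tuple[str, Any]] = []
    consumers = [
        asyncio.create_task(consume("warehouse", bus.subscribe(), 0.01, seen)),
        asyncio.create_task(consume("cache", bus.subscribe(), 0.0, seen)),
    ]
    for lsn in range(3):
        bus.publish({"lsn": lsn, "op": "insert"})  # returns immediately
    bus.publish(None)
    await asyncio.gather(*consumers)
    return seen


if __name__ == "__main__":
    for name, event in asyncio.run(main()):
        print(name, event)
```

The queues here are unbounded for simplicity; a real system needs bounded buffers or durable storage so a consumer that falls far behind does not exhaust memory.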

Preserve Change Order

Ensure that your CDC solution maintains the order of changes so that data can be accurately propagated to the target system in the same sequence as the source.
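If events can arrive out of order (for example, over parallel network paths), the consumer can restore the source order with a small reorder buffer. This Python sketch assumes each event carries a gap-free sequence number assigned by the pipeline; real log positions such as LSNs are ordered but not contiguous, so they would need extra bookkeeping. OrderedApplier is a hypothetical name.

```python
from typing import Any, Dict, List


class OrderedApplier:
    """Applies events strictly in sequence order, holding back early arrivals."""

    def __init__(self, first_seq: int = 1) -> None:
        self.next_seq = first_seq
        self._pending: Dict[int, Any] = {}
        self.applied: List[Any] = []   # stand-in for writes to the target system

    def receive(self, seq: int, event: Any) -> None:
        if seq < self.next_seq or seq in self._pending:
            return                     # duplicate delivery: ignore it
        self._pending[seq] = event
        # Drain every event that is now contiguous with what was applied.
        while self.next_seq in self._pending:
            self.applied.append(self._pending.pop(self.next_seq))
            self.next_seq += 1


if __name__ == "__main__":
    applier = OrderedApplier()
    for seq, event in [(2, "update #1"), (1, "insert #1"), (3, "delete #1")]:
        applier.receive(seq, event)
        print(seq, applier.applied)
```

Event 2 is held until event 1 arrives, so the target always sees insert, update, delete in that order.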

Limit Captured Columns

To optimize performance, only capture the columns you truly need to track. By specifying the necessary columns in the configuration (in SQL Server, through the @captured_column_list parameter of sys.sp_cdc_enable_table), you minimize the amount of data to process and improve efficiency.

Avoid Frequent Large Updates

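Large update transactions can negatively impact CDC performance, because every modified row produces change records that the capture process must read and propagate (SQL Server, for example, records both the before and after image of each updated row). If possible, limit the use of CDC on tables that experience frequent large updates.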
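When a large update is unavoidable, one common mitigation is to split it into smaller transactions, so the capture process handles a steady stream of modest batches instead of one huge transaction. The total number of change records stays the same. This sqlite3 sketch assumes a hypothetical orders table with an integer id primary key starting at 1.

```python
import sqlite3


def update_in_batches(conn: sqlite3.Connection, new_status: str,
                      batch_size: int = 1000) -> int:
    """Update every row in key-range batches, committing each batch separately."""
    (max_id,) = conn.execute("SELECT COALESCE(MAX(id), 0) FROM orders").fetchone()
    batches = 0
    for start in range(1, max_id + 1, batch_size):
        with conn:  # one bounded transaction per key range
            conn.execute(
                "UPDATE orders SET status = ? WHERE id >= ? AND id < ?",
                (new_status, start, start + batch_size),
            )
        batches += 1
    return batches


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    conn.executemany(
        "INSERT INTO orders (id, status) VALUES (?, 'new')",
        [(i,) for i in range(1, 2501)],
    )
    conn.commit()
    print(update_in_batches(conn, "archived"))  # 3 transactions instead of 1
```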

Monitor Transaction Logs

Parse transaction logs in relational database management systems to extract changes. This method is often more efficient than trigger-based CDC, as it adds little overhead while data changes are being made.

Choose The Right CDC Tool

Select a CDC tool that fits your organization’s needs and integrates well with your existing systems. Consider factors such as compatibility, scalability, and ease of use.

Plan For Data Consistency

Implement data validation and reconciliation processes to ensure data consistency between the source and target systems. This helps maintain the integrity of your data throughout the CDC process.
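A simple reconciliation pass compares keys and per-row hashes between source and target. In this Python sketch (reconcile and row_digest are hypothetical names), rows are tuples with the primary key in a known position, and hashing lets each side compute compact digests close to its own system before comparing. It assumes both sides return identical values and types; a Decimal and a float with the same value, for example, would hash differently.

```python
import hashlib
from typing import Dict, Iterable, List


def row_digest(row: tuple) -> str:
    """Stable digest of one row; both sides must produce identical values/types."""
    return hashlib.sha256(repr(row).encode("utf-8")).hexdigest()


def digests(rows: Iterable[tuple], key_index: int) -> Dict[object, str]:
    """Map each row's primary key to the digest of the whole row."""
    return {row[key_index]: row_digest(row) for row in rows}


def reconcile(source_rows: Iterable[tuple], target_rows: Iterable[tuple],
              key_index: int = 0) -> Dict[str, List[object]]:
    """Report keys missing from, extra in, or different in the target."""
    src = digests(source_rows, key_index)
    tgt = digests(target_rows, key_index)
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


if __name__ == "__main__":
    source = [(1, "new"), (2, "shipped"), (3, "new")]
    target = [(1, "new"), (2, "new"), (4, "new")]
    print(reconcile(source, target))
    # {'missing_in_target': [3], 'extra_in_target': [4], 'mismatched': [2]}
```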

Conclusion

Many factors play a role in choosing the right SQL Change Data Capture technique. Evaluate the size and complexity of your database, the frequency and volume of changes, and the latency requirements for your business.

If you're looking for a powerful, flexible, and easy-to-use CDC tool for a database like Microsoft SQL Server, PostgreSQL, or MySQL, Estuary is a clear choice. With Estuary, you can easily capture and replicate changes to your SQL database in real time, providing up-to-the-second visibility into your data.

Estuary provides a wide range of data integration and transformation capabilities that enable you to easily move data between different systems and formats. Our user-friendly web-based interface makes it easy to set up, monitor, and manage your CDC workflows. If you are interested in knowing more about Estuary Flow’s potential, sign up for free now.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
