Data ingestion forms the foundation for data-driven decision-making, analytics, and reporting. So it's only natural that it's an extremely important step in ELT and ETL pipelines, particularly when it comes to loading data into Snowflake, a leading cloud-based data warehousing platform. Snowflake real-time data ingestion capabilities ensure that data from various sources is efficiently captured, processed, and stored in a centralized data warehouse.

While Snowflake is a powerful tool, organizations can still face challenges in terms of its accuracy, formatting to fit the schema, and ability to manage the complexity of multiple data sources. So how do you find a way around it?

This is what our today’s guide aims to do – to provide practical solutions for addressing these challenges.

We will also discuss Snowflake’s features and its real-time data ingestion capabilities. By the end of this guide, you’ll be familiar with 3 different approaches for real-time data ingestion into Snowflake and its practical use cases that will demonstrate how companies across different sectors are leveraging Snowflake to enhance their decision-making processes.

Exploring The Snowflake Data Cloud For Maximizing Business Insights & Efficiency

As the importance of data analysis in business grows, organizations are turning to cloud data platforms to handle the sheer scale and speed of the tremendous amount of data. One such platform is Snowflake Data Cloud, a powerful tool that enables businesses to load data into Snowflake efficiently, supporting multi-cloud infrastructure environments.

Let’s take a deeper look at Snowflake’s data platform and explore what makes it so popular for loading data into Snowflake.

What Is Snowflake?

Snowflake Data Platform is a fully managed warehousing solution designed to store and process massive amounts of data. Snowflake provides near real-time data ingestion, data integration, and querying at a large scale.

One of Snowflake's key features is its unique architecture that separates the compute and storage layers. This enables users to scale resources independently and pay only for what they use.

Snowflake supports various data types and sources, including structured, semi-structured, and unstructured data. It also integrates very well with SaaS applications, various APIs, and data lakes which makes it extremely versatile.

3 Main Components Of The Snowflake Platform

The Snowflake platform is built upon three foundational components, which together form the basis of its cloud data platform:

- Cloud Services: Snowflake's cloud services layer coordinates activities across the platform, handling tasks like authentication, infrastructure, metadata, and access control. The platform takes care of data security and encryption and holds certifications like PCI DSS and HIPAA.

- Query Processing: Snowflake uses independent "virtual warehouses" for query execution. Each Snowflake data warehouse is formed as a separate cluster. This prevents competition for computing resources and ensures that warehouses don’t impact each other's performance.

- Database Storage: Snowflake databases can store structured and semi-structured data for processing and analysis. The platform takes care of managing every aspect of data storage such as organization, structure, file size, compression, metadata, and statistics. Access to this stored data is exclusively available through SQL query operations within Snowflake, ensuring security and control.

6 Key Features & Benefits Of Snowflake

What makes Snowflake popular are its unique features and the many benefits it provides. Some important ones are:

- Unique Architecture: Snowflake’s unique approach of separating compute and storage components of DataOps allows users to scale resources independently and pay only for what they use. Its multi-cloud approach and highly parallel design ensure efficient data processing and increase the reliability of the system.

- Data Type and Source Support: Snowflake can handle a variety of data types including:

- Unstructured data (e.g., images, text files)

- Structured data (e.g., SQL databases)

- Semi-structured data (e.g., JSON, AVRO, or XML)

- Unstructured data (e.g., images, text files)

It integrates with various data sources, including SaaS applications, APIs, and a data lake.

- Scalability: Snowflake's architecture enables easy scaling for handling large datasets and sudden spikes in data volume.

- Performance: The platform's design allows for fast and efficient query execution.

- Ease of Use: Snowflake offers a user-friendly interface for creating, managing, and querying data.

- Security: Advanced security features like multi-factor authentication, encryption, and role-based access control are provided.

4 Popular Use Cases Of Snowflake

Snowflake is a diverse platform offering a range of services. This allows organizations to leverage their power in a variety of ways. Below are the 4 most important ones:

- Data warehousing: Snowflake is ideal for handling large amounts of structured and semi-structured data.

- Analytics: The platform's architecture and data support make it suitable for data visualization and machine learning applications.

- Data sharing: It offers built-in secure and efficient data sharing between departments or organizations.

- ETL processes: Users can easily extract data from different sources, transform it into the desired format, and load it into Snowflake for analysis.

Now that we understand Snowflake, its components, and its features, let’s take a deeper look into data ingestion and more specifically, real-time data ingestion to understand how Snowflake leverages it.

Understanding Real-Time Data Ingestion For Unlocking Actionable Insights

Data ingestion refers to the process of collecting large volumes of data from various types of sources and transferring them to a destination where they can be stored and analyzed. These target destinations may include databases, data warehouses, document stores, or data marts. Data ingestion often consolidates data from multiple sources at once, such as web scraping, spreadsheets, SaaS platforms, and in-house applications.

Now let’s talk about what real-time data ingestion is and why you need it.

Real-Time Data Ingestion

Real-time data ingestion is the process of collecting and processing data in real-time or near real-time. This approach is crucial for time-sensitive use cases where up-to-date information is essential for decision-making.

This approach focuses on collecting data as it is generated and creating a continuous output stream, making it an invaluable tool for businesses across various industries.

Let’s look at a couple of use cases:

- In eCommerce and retail, real-time transactional data ingestion allows companies to accurately forecast demand, maintain just-in-time inventory, or adjust pricing more rapidly.

- In manufacturing, real-time analytical data ingestion can provide IoT sensor alerts and maintenance data, reducing factory floor downtime and optimizing production output.

Comparing Data Ingestion Methods: Batch Processing Vs. Real-time Ingestion

There are 2 primary types of data ingestion methods: real-time and batch-based.

Batch processing collects data over time and processes it all at once. This method is suitable for situations where data types and volumes are predictable long-term.

In contrast, real-time ingestion is vital for teams that need to handle and analyze data as it is produced, especially in time-sensitive scenarios.

With a solid understanding of real-time data ingestion, it's time to see how you can ingest real-time data into Snowflake.

How Can You Load Real-Time Data into Snowflake? 3 Proven Methods

Several methods for real-time data ingestion into Snowflake cater to diverse use cases and requirements. Let’s look at 3 different approaches for ingesting real-time data into Snowflake.

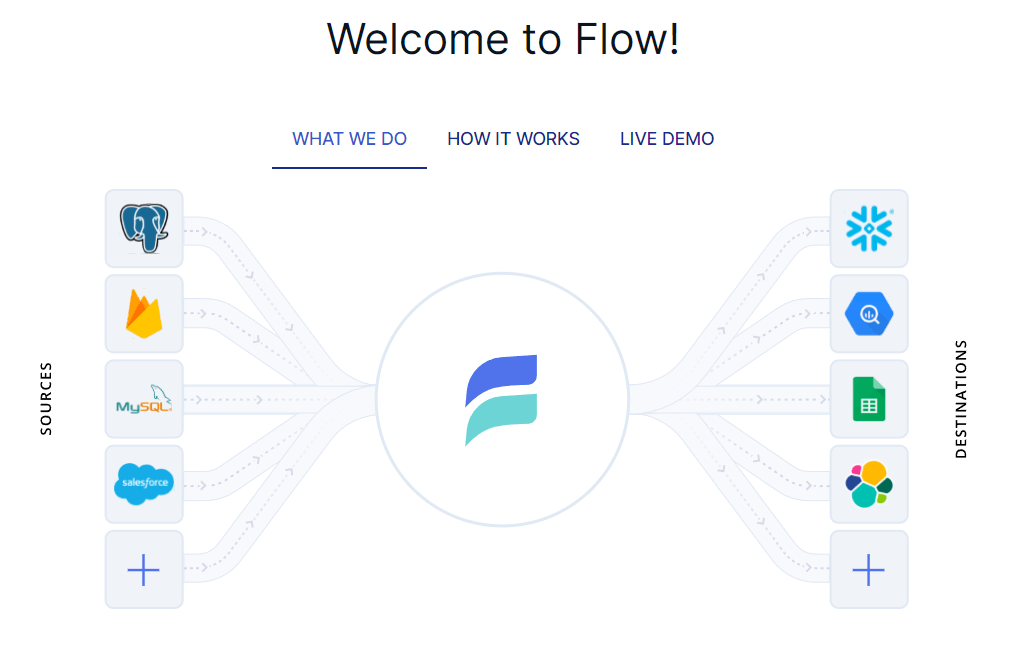

Estuary Flow: A Powerful Tool for Loading Data into Snowflake

Estuary Flow’s real-time data operations platform is designed to handle high-throughput data with low latency, making it a leading choice for businesses that require real-time insights.

The best part about Estuary Flow is its scalability. Whether you're dealing with a small data inflow of 100MB/s or a massive inflow of 5GB/s, Flow can handle it all efficiently. Also, you can easily backfill large volumes of historical data from your source systems in a matter of minutes. Estuary Flow can also set up pipelines for both historical and real-time data in just a few minutes.

It can continuously capture new data from data sources such as SQL or NoSQL databases and immediately write it to destinations such as Snowflake. Flow connects to Snowflake using the Snowflake connector that can materialize Flow collections into tables in a Snowflake database.

To use this connector, you need a Snowflake account, a target database, schema, a virtual warehouse, a user with an assigned role, and knowledge of your Snowflake account's host URL.

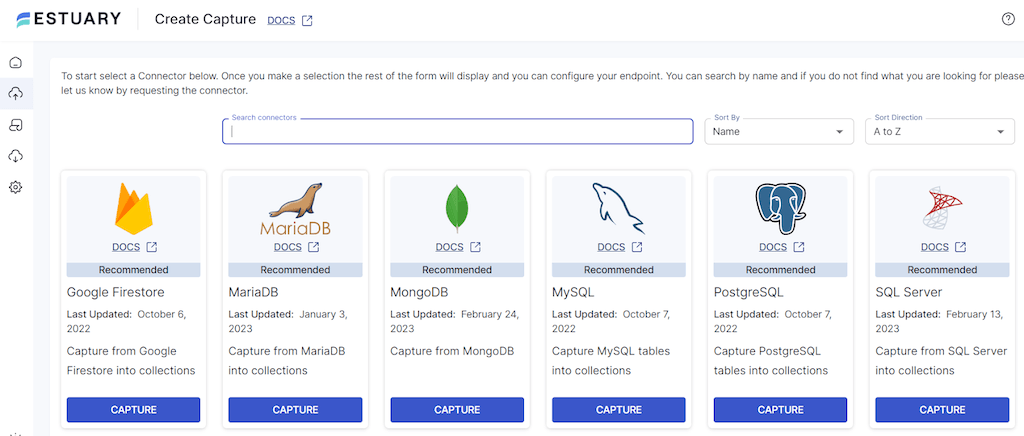

Here are the steps you need to follow to set up your pipeline for real-time data ingestion into Snowflake:

- First, create a free Estuary account using your GitHub or Gmail account. You'll then see a welcome page showing the features of Flow.

- Click "Captures" and then "New capture" to connect to your data source (see a list of supported sources here). Provide the required details like server address and credentials. Flow will then connect to your source to verify the information you provided.

- Choose the collections you want to capture, modify their properties if needed, and click "Save and publish."

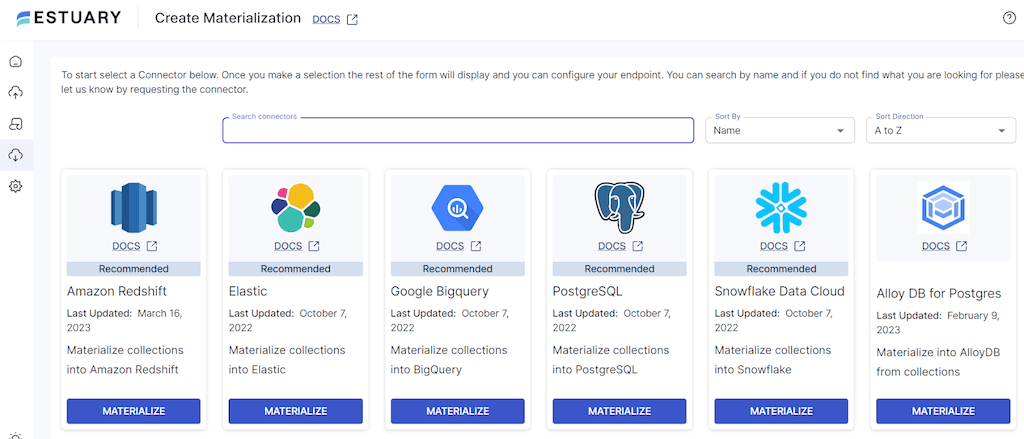

- Next, click the "Materialize collections" button to move your captured data to Snowflake. Select "Snowflake Data Cloud" as the connector and provide the necessary information for the Endpoint Configuration.

- Once you're satisfied with the configuration, click "Save and publish" and Flow will start to capture your real-time data and move it into Snowflake.

Refer to the technical documentation of the Snowflake connector and this guide to set up a basic data flow in Estuary Flow before getting started.

Using Snowpipe to Load Data into Snowflake in Real-Time

If you're looking to ingest real-time data into Snowflake, Snowpipe is a popular option. Snowpipe enables loading data stored in files directly into Snowflake tables.

Let’s see how to use it to get your data into Snowflake with helpful code snippets along the way.

Step 1: Setting Up Your Stage

First, you'll need to set up a stage for your data files. A stage is a storage location where Snowpipe can find your data. You can choose from Amazon S3, Google Cloud Storage, or Microsoft Azure as your stage location.

plaintext-- Create an Amazon S3 stage

CREATE STAGE my_s3_stage

URL = 's3://my-bucket/path/'

CREDENTIALS = (AWS_KEY_ID='my_key_id', AWS_SECRET_KEY='my_secret_key');Step 2: Creating A Pipe

Next, you need to create a pipe. A pipe is a named object in Snowflake that holds a COPY statement. The COPY statement tells Snowpipe where to find your data (in the stage) and which target table to load it into.

Remember, Snowpipe supports all data types, including semi-structured ones like JSON and Avro.

plaintext-- Create the target table

CREATE TABLE my_target_table (data VARIANT);

-- Create the pipe with a COPY statement

CREATE PIPE my_pipe AS

COPY INTO my_target_table(data)

FROM (SELECT $1 FROM @my_s3_stage)

FILE_FORMAT = (TYPE = 'JSON');Step 3: Detecting Staged Files

Now, you'll need a way for Snowpipe to detect when new files are available in your stage. You have two main options here:

- Automate Snowpipe using cloud messaging services, such as Amazon S3 Event Notifications or Azure Event Grid. Configure the messaging service to send notifications to a Snowpipe REST endpoint.

- Call Snowpipe REST endpoints directly to notify it of new files in the stage. You can use a script, a REST client, or any tool that can make HTTP requests.

Choose the method that best fits your use case and infrastructure.

Step 4: Continuous Data Loading

With everything set up, Snowpipe will now load data in near real-time. As new files become available in your stage, Snowpipe will ingest them into the target table according to the COPY statement in the pipe.

Step 5: Monitoring Your Data Ingestion

Keep an eye on your data ingestion process by monitoring the Snowpipe history and logs. This will help you spot any issues and ensure that your real-time data is being ingested smoothly.

plaintext-- Query the history of your pipe

SELECT *

FROM TABLE(INFORMATION_SCHEMA.PIPE_USAGE_HISTORY('my_pipe'))

ORDER BY START_TIME DESC;The process is complete. You are now ingesting real-time data into Snowflake using Snowpipe.

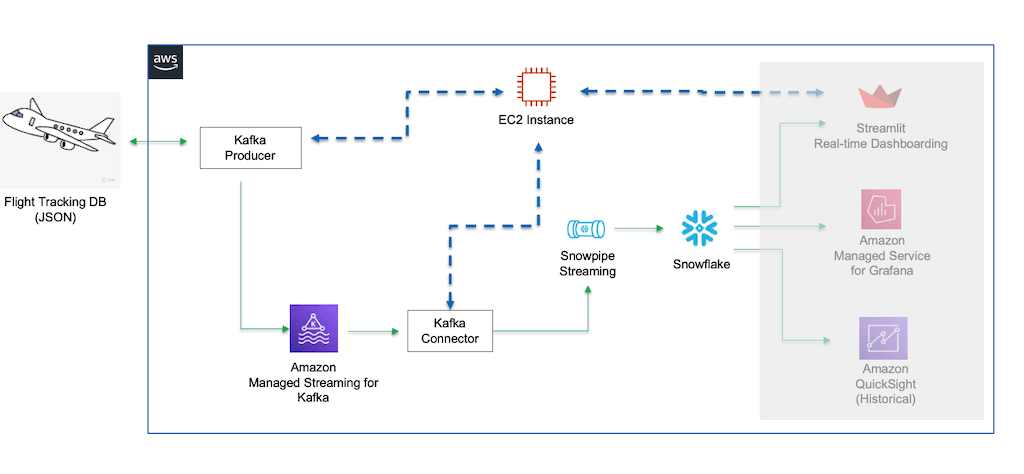

Ingesting Real-Time Data With Snowpipe Streaming API

Snowpipe Streaming is a new addition to Snowflake's data loading capabilities which was released in March 2023 and is currently available as a preview feature. This new feature is designed to allow users to load and process streaming data from Kafka directly into Snowflake database tables. This results in a faster and more efficient alternative to Snowpipe for handling high volumes of real-time data.

To use this new option to ingest streaming data into Snowflake, follow the below steps:

Step 1: Install Apache Kafka, Snowflake Kafka Connector & OpenJDK

- Start by installing Apache Kafka on your local machine as it will serve as the streaming platform for data ingestion.

- Also, make sure to download the Snowflake Kafka Connector which bridges the gap between Kafka and Snowflake.

- Ensure that OpenJDK is installed as it's required for running Apache Kafka.

Step 2: Configure Snowflake & Local Setup

To configure Snowflake, follow these steps:

- Create a new Snowflake database and schema for storing Kafka data.

- Generate a Snowflake user with the necessary permissions for data ingestion.

- Create a Snowflake storage integration object for Kafka, allowing access to your Snowflake account.

Next, configure your local Kafka setup:

- Set up Kafka's properties file to define topics, partitions, and other settings.

- Configure the Snowflake Kafka Connector by specifying the Snowflake account, user credentials, database, schema, and other relevant information.

Step 3: Start The Environment

Once everything is installed and configured, start Apache Kafka and create the Kafka topic. Then launch the Snowflake Kafka Connector to start ingesting data from Kafka to Snowflake using Snowpipe.

Step 4: Test The System

After setting up the environment, run tests to ensure that data is flowing seamlessly from Kafka to Snowflake. You can do this by:

- Publishing sample data to the Kafka topic.

- Verifying that the Snowflake Kafka Connector processes the data and ingests it into Snowflake.

You can find more details on setting up Snowpipe Streaming along with code examples here.

Now that we have learned about 3 different methods for real-time data ingestion into Snowflake, let’s take a look at some of its examples to understand it better.

Real-time Data Ingestion Examples With Snowflake

Let’s discuss in detail how various companies utilize Snowflake with real-time data.

Yamaha Corporation

Yamaha Corporation leverages Snowflake's multi-cluster shared data architecture and flexible scaling to handle real-time data. This eliminates resource bottlenecks, enables fresher data imports, and speeds up visualization rendering in Tableau.

Snowflake-powered machine learning models are helping Yamaha take data analytics to new heights. This is expected to open up exciting new avenues of data utilization for the company. For example, showing a dealer's likelihood to buy in Tableau can help the sales team spot revenue opportunities more easily.

Sainsbury

Snowflake has streamlined Sainsbury’s data consolidation and data science workloads by providing a single source of truth (SSOT) across all its brands. Using Snowflake Streams and Tasks, Sainsbury's processes transaction and click-stream data in real-time. This has helped the company democratize data access and foster innovation.

Petco

Petco is a health and wellness company that specializes in improving the lives of pets. Snowflake’s data platform supports Petco by offering a scalable data pipeline for real-time data ingestion, processing, and utilization.

Petco's data engineering team has built an advanced real-time analytics platform based on Snowflake. This has helped simplify data warehouse administration for the company and free up resources for crucial tasks like democratizing data analytics.

AMN Healthcare

AMN Healthcare is a leading healthcare staffing solutions provider in the US. AMN Healthcare utilizes the Snowflake platform to quickly fulfill clients' ad-hoc reporting requests during critical moments in near-real-time.

Using Snowflake virtual warehouses, AMN Healthcare achieves a 99.9% pipeline success rate and a 75% reduction in data warehouse runtime. By writing over 100 GB of data and replicating 1,176 tables to Snowflake daily, AMN Healthcare consistently meets data replication service level agreements (SLAs).

Pizza Hut

Snowflake's near-real-time analytics allows Pizza Hut to make swift decisions during high-impact events like the Super Bowl. The platform’s instant elasticity supports virtually unlimited computing power for any number of users while separating storage and compute offers performance stability and cost visibility.

Pizza Hut also uses the Snowflake Data Marketplace for easy access to weather and geolocation data, and Snowflake Secure Data Sharing for direct data access from partners.

Conclusion

When considering the significance of real-time data ingestion for your organization, it’s essential to understand how crucial it is to have instant access to fresh data for making informed decisions. By leveraging real-time data ingestion, you can load data into Snowflake efficiently, resulting in improved business efficiency, more accurate forecasting, and better overall performance.

If loading data into Snowflake in real-time is a priority for your organization, it's important to find an effective solution that meets your DataOps requirements. Whether you're using Snowpipe, Estuary Flow, or Snowpipe Streaming, these methods provide reliable and scalable options to load data into Snowflake in real-time.

For those seeking a low-code platform to access the power of real-time data at scale, Estuary Flow might be an excellent fit. With Estuary Flow, you can seamlessly integrate various data sources and extract real-time insights, enabling quick and informed decision-making. Flow’s capabilities can help your business streamline its data analytics processes, gain valuable insights rapidly, and stay ahead of the competition. Sign up for free and start exploring.

If you're interested in loading data from one source to Snowflake, check out our related guides:

- How to Load from SQL Server to Snowflake

- How to Load from Postgres to Snowflake

- How to Load from MySQL to Snowflake

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles