Data can be immense, complex, and even intimidating. Yet that same data drives innovation, decision-making, and business success. Staring at heaps of it won’t get you there, though. You need the tools, strategies, and technology to make it not only manageable but genuinely effective, and Snowflake integration is one way to get the most out of it.

When you first look at it, Snowflake integration might seem like a simple task of transferring information from point A to point B. But there's a whole lot more going on beneath the surface.

In this guide, you’ll learn best practices for Snowflake integration, techniques for sourcing and managing data efficiently, and the best tools for the job.

What Is Snowflake?

Snowflake is a cloud data platform that stores, retrieves, and analyzes vast amounts of data without the hassle of managing infrastructure. It runs on major cloud providers, including Amazon Web Services, Microsoft Azure, and Google Cloud, and its architecture separates compute from storage so that each can scale independently.

Let us look at the most notable Snowflake features:

- Real-time data sharing: Snowflake shares live data instantly, without copying it, which improves collaboration within companies. Teams can quickly tap into designated data repositories and get insights immediately.

- Broad data integration tool support: Snowflake works with many third-party data integration tools, which simplifies migrating data from existing structured systems into Snowflake.

- Elastic scalability: Snowflake’s cloud data warehouse can scale its resources both up and down based on demand. Whether you’re integrating data or analyzing it, Snowflake adjusts its resources for optimal performance.

- No resource contention: Businesses often run multiple workloads, from data preparation to business intelligence. With Snowflake, you can deploy multiple virtual warehouses, each dedicated to a different team or task, so workloads don’t compete for the same compute resources.

- Simplified data management: Given that Snowflake is a cloud solution, you are freed from the burdens of selecting, installing, and maintaining hardware and software. This is a huge win if your business doesn't have the time or resources for extensive IT commitments.

- Efficient cost management: Unlike traditional data marts or warehouses that require upfront investment and often result in under-utilization, Snowflake’s on-demand model means you only pay for the resources you use. This further brings down your data warehouse implementation costs.

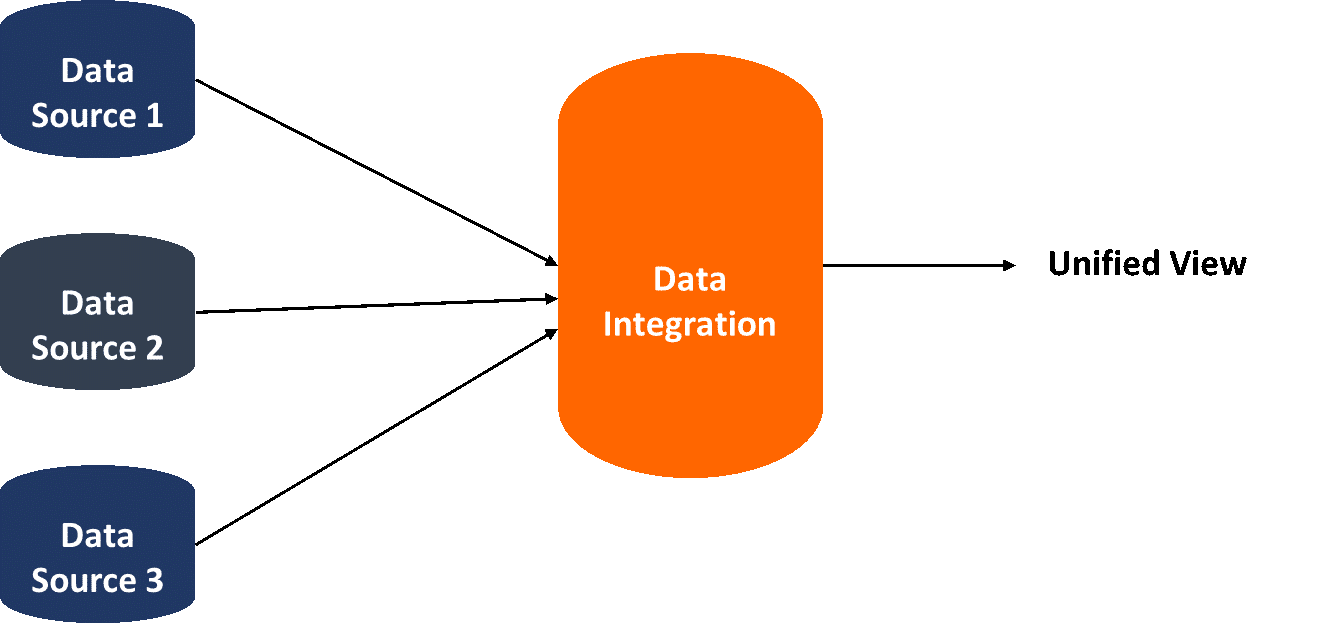

What Is Data Integration?

Data integration is the process of combining data from multiple sources into a unified view. It involves ingesting data from diverse internal and external sources and consolidating it into a single, coherent dataset.

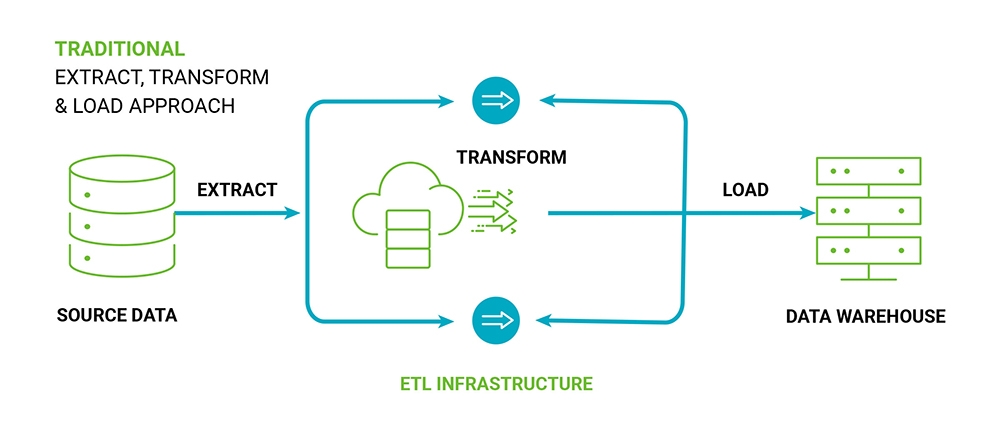

The key components of data integration include:

- Data sources like databases, files, and software applications

- Data transformation tools like ETL (extract, transform, load) that prepare and map the data

- Target database or data warehouse where the integrated data is stored

Data integration provides users and applications with a consistent, unified view of an organization’s data for reporting, analytics, and decision-making. With it, you can:

- Efficiently prepare data for analysis

- Access data across systems and locations

- Reduce errors and rework through data quality checks

- Provide clean and consistent data across the organization

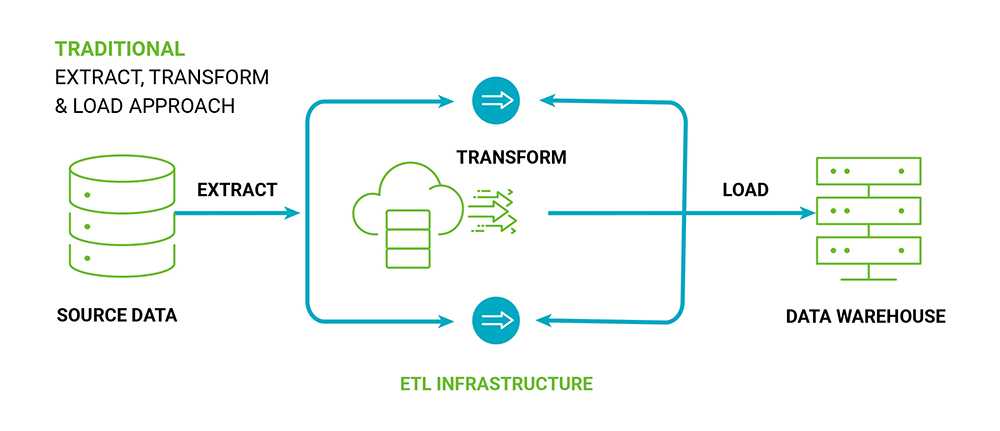

ETL Tools & Snowflake Integration

ETL tools keep data flowing into Snowflake's data warehouse, whether you're moving it from Postgres, SQL Server, or another system. Here's how ETL tools and Snowflake integration work hand in hand:

- Data Extraction: ETL tools extract data from different source systems like on-premises databases, cloud-based applications, external APIs, and more. They connect to these sources, retrieve data, and prepare it for further processing.

- Data Transformation: After extracting data, ETL tools apply transformations to ensure that it conforms to the structure and schema required by Snowflake. This involves data cleansing, data enrichment, data aggregation, and format conversion.

- Data Loading: Once the data is transformed, ETL tools load it into Snowflake's data warehouse. Snowflake provides a variety of connectors and drivers (for example, for Python, Spark, and Kafka) that simplify data integration and streamline transfers between platforms.

- Automation & Scheduling: ETL tools often offer scheduling and automation capabilities, which let you run extraction, transformation, and loading on a regular basis. This keeps data in Snowflake up to date and ready for analysis.

- Data Integration: ETL tools consolidate data from multiple sources into Snowflake, giving you a unified view for analytics and reporting that supports data-driven decision-making and insight generation.
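The five steps above can be sketched end to end in a few lines of Python. This is a minimal illustration, not how any particular ETL tool works: the two sources are hypothetical inline CSV and JSON strings, and an in-memory SQLite database stands in for Snowflake so the example runs without credentials.

```python
"""Minimal ETL sketch: extract from two sources, transform to one schema, load.

Assumption: sqlite3 (in-memory) stands in for the Snowflake target so the
example runs anywhere; a real pipeline would load through a Snowflake connector.
"""
import csv
import io
import json
import sqlite3

# Extract: two hypothetical sources with different shapes and field names.
CRM_CSV = "id,full_name,signup\n1,Ada Lovelace,2024-01-05\n2,Alan Turing,2024-02-11\n"
APP_JSON = '[{"user_id": 3, "name": "grace hopper", "created": "2024-03-20"}]'


def extract():
    crm_rows = list(csv.DictReader(io.StringIO(CRM_CSV)))
    app_rows = json.loads(APP_JSON)
    return crm_rows, app_rows


def transform(crm_rows, app_rows):
    # Map both sources onto one target schema: (customer_id, name, signup_date).
    unified = []
    for row in crm_rows:
        unified.append((int(row["id"]), row["full_name"].strip().title(), row["signup"]))
    for row in app_rows:
        unified.append((int(row["user_id"]), row["name"].strip().title(), row["created"]))
    return unified


def load(conn, rows):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    # SQLite's INSERT OR REPLACE keeps reruns idempotent for the same customer_id.
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    crm_rows, app_rows = extract()
    load(conn, transform(crm_rows, app_rows))
    return conn.execute("SELECT * FROM customers ORDER BY customer_id").fetchall()


if __name__ == "__main__":
    print(run_pipeline(sqlite3.connect(":memory:")))
```

Scheduling `run_pipeline` on a timer would correspond to the automation step; making the load idempotent is what makes such reruns safe.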

Discover the best ETL tools for Snowflake that can optimize your data workflows.

6 Data Integration Platforms for Seamless Snowflake Integration

Snowflake’s compatibility with leading data integration platforms showcases its commitment to seamless data transfers and real-time operations. These platforms not only support efficient data transformations and transfers but also bring advanced functionalities that enhance the overall data integration process. Let us look into some of the best tools available for this purpose.

1. Estuary Flow

Estuary Flow is our no-code data integration platform offering real-time ETL and CDC functions. It stands out with its superior data pipeline tools and an easy-to-use interface for efficient data transfers. Flow integrates batch and real-time data from sources like databases, SaaS applications, and file stores.

Estuary Flow lets you transform and enrich your data so it stays accurate and ready for analysis. Strong security measures, including encryption and authentication, protect your data.

Flow is designed for businesses of every size, and its intuitive web app makes data flow management easy, even for users with limited technical proficiency. It’s the go-to choice if you need to synchronize data across systems quickly, and its extensive connector support makes it compatible with many different data sources.

Estuary Flow Features

- A cost-effective solution that supports 100+ data sources and destinations

- Efficient ETL capabilities for various data formats

- Top-tier data security, especially for sensitive data

- Real-time transformations using SQL and TypeScript

- GUI-driven web application for simple pipeline management

- Enhanced data security through encryption and tight access controls

- Live reporting tools for monitoring data integration and spotting issues

- Capable of handling large data volumes with a capacity of up to 7 GB/s

- Advanced real-time data replication and database migration capabilities

- Automated schema management and reduced data duplication during integration

- Real-time change data capture for different database systems, improving integration speed

2. Qlik

Qlik is an integrated data solution that provides both cloud and on-premises services. This platform streamlines real-time data delivery with a special focus on automating data transformation processes. Qlik automatically updates your data, removing the need for manual scheduling or scripting.

Qlik Features

- User-friendly point-and-click pipeline configuration

- Comprehensive insight and monitoring tools for the entire data lifecycle

- Efficient real-time data movement from a wide range of sources to major cloud platforms

- Intuitive no-code interface that simplifies data transformation and workflow creation processes

3. Informatica PowerCenter

Informatica PowerCenter is a comprehensive, GUI-driven platform for data integration, migration, and validation tasks. Its zero-code data integration feature combines diverse business data into a unified, trustworthy view.

Informatica PowerCenter Features

- Ability to seamlessly scale in alignment with Big Data demands

- Cutting-edge serverless deployment that reduces the associated overhead

- Enhanced pipeline partitioning and push-down optimizations for efficient data processing

- A built-in debugger for identifying potential issues in data mappings

4. Talend

Talend is a well-known name in data integration and management. It has two primary products:

- Stitch: A data pipeline tailored for analytical tasks

- Talend Data Fabric: A unified platform for trustworthy data

Talend also offers an open-source alternative, Talend Open Studio, aimed at introductory data integration and ETL projects.

Talend Features

- An easy-to-use interface to visualize data pipelines effectively

- Capacity to handle simple to complex data transformations with ease

- Over 800 connectors for extensive data and business endpoint integrations

- Tools and functionalities that help in designing both scalable ELT and ETL data pipelines

5. Hevo Data

Hevo Data is a robust data pipeline platform with an accessible, GUI-based web application for building and managing data pipelines. Its wide range of connectors makes integration with many data sources and destinations hassle-free.

Hevo Data Features

- Comprehensive integration of ELT, ETL, and Reverse ETL capabilities

- Automated pipelines support real-time transformations without the need for coding

- Strong emphasis on data governance and security measures to protect sensitive data

- A versatile platform design caters to businesses from small-scale to enterprise-level operations

6. SnapLogic

SnapLogic is an Intelligent Integration Platform with self-service functionality. Its browser-based interface and over 500 adaptable connectors, known as 'Snaps', simplify data integration and make it easy even for those without a technical background.

SnapLogic Features

- Continuous data quality checks maintain data integrity

- Straightforward integration from a data source to its destination

- An innovative AI assistant designed to help in seamless platform integrations

- Comprehensive visual tools like graphs and charts to monitor ETL job progress

Snowflake's Approach to Data Integration: A Detailed Overview

As a process, Snowflake integration offers a simplified way to merge data. Because Snowflake works with many leading data integration tools, it reduces reliance on older methods, such as Electronic Data Interchange, that data marts have traditionally depended on.

This flexibility is especially visible in Snowflake's data conversion functions for semi-structured data. With native JSON support through the VARIANT data type, you can load raw JSON and prepare it for data integration projects.
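Snowflake stores JSON in VARIANT columns and can expand nested arrays into rows with the FLATTEN table function. The Python sketch below only mimics that idea on a single hypothetical order document: it emits one flat row per line item and repeats the parent fields, which is the shape of result a LATERAL FLATTEN query over the `items` array would produce.

```python
import json

# One semi-structured record, like a single value in a VARIANT column (hypothetical data).
RAW = """{"order_id": 1001, "customer": {"id": 7, "country": "DE"},
          "items": [{"sku": "A-1", "qty": 2}, {"sku": "B-9", "qty": 1}]}"""


def flatten_order(doc):
    """Emit one flat row per element of the nested 'items' array.

    Parent fields are repeated on every row, and item_index plays the role of
    FLATTEN's INDEX column. Orders with no items produce no rows.
    """
    rows = []
    for index, item in enumerate(doc.get("items", [])):
        rows.append({
            "order_id": doc["order_id"],
            "customer_id": doc["customer"]["id"],
            "country": doc["customer"]["country"],
            "item_index": index,
            "sku": item["sku"],
            "qty": item["qty"],
        })
    return rows


rows = flatten_order(json.loads(RAW))
for row in rows:
    print(row)
```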

If your organization faces changing demands, Snowflake's ability to scale compute resources instantly becomes valuable. It integrates with programming tools like Spark and Python to make data integration smoother, and it connects with business intelligence tools, extending what your data systems can do.

Snowflake integration stands out for its data transformation capability. Snowflake distinguishes between data integration and data ingestion: integration transforms data as it is loaded so that it fits the target table's existing structure, while ingestion moves data directly into storage, such as a data warehouse, without modifying it.

So, whether it’s exporting data, managing data migration, or implementing data warehouse solutions, Snowflake integration has every angle covered.

Snowflake’s Data Integration Mechanism: Everything You Need to Know

Snowflake's data integration mechanism lets you transform data while it is being loaded. Let’s get into the details.

1. Loading & Transforming Data

In Snowflake, data can be transformed as it is loaded.

- It loads data in bulk with the COPY INTO command from staged files, whether they were uploaded from local files or already sit in cloud storage.

- It supports different file formats like CSV, JSON, Avro, Parquet, and XML.

- Helpful data conversion functions assist during the import process.
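To make the transform-on-load idea concrete, here is a small Python helper that assembles a COPY INTO statement whose SELECT reads staged CSV columns by position ($1, $2, ...) and casts them as they load. It is a sketch under stated assumptions: the table, stage path, and column names are hypothetical, it only generates SQL text that you would run through a Snowflake client, and because Snowflake supports only a subset of SQL functions inside a load-time SELECT, the example sticks to simple casts.

```python
def build_copy_into(table, stage, select_exprs, target_columns, skip_header=1):
    """Build a COPY INTO statement that transforms CSV columns while loading.

    select_exprs refer to staged file columns positionally ($1, $2, ...).
    All names passed in are placeholders chosen by the caller.
    """
    if len(select_exprs) != len(target_columns):
        raise ValueError("each target column needs exactly one select expression")
    cols = ", ".join(target_columns)
    exprs = ", ".join(select_exprs)
    return (
        f"COPY INTO {table} ({cols})\n"
        f"  FROM (SELECT {exprs} FROM @{stage})\n"
        f"  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = {skip_header})"
    )


sql = build_copy_into(
    table="customers",
    stage="raw_stage/customers/",
    select_exprs=["$1::NUMBER", "$2::VARCHAR", "$3::DATE"],
    target_columns=["customer_id", "name", "signup_date"],
)
print(sql)
```

Keeping the casts in the COPY statement means the load and the type conversion happen in a single pass, without an intermediate staging table.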

2. Types of Data & Their Handling

Snowflake can manage different data types.

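- It handles numeric, string, date and time, and logical (BOOLEAN) data, as well as semi-structured types such as VARIANT, ARRAY, and OBJECT.

- It also supports specialized types such as geospatial data (GEOGRAPHY and GEOMETRY) and binary data (BINARY). Snowflake has no BLOB or CLOB types; large binary and character objects are stored as BINARY and VARCHAR instead.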
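Migrating from another database means mapping each source type to a Snowflake type. The sketch below shows one possible mapping for a handful of PostgreSQL types and uses it to generate CREATE TABLE DDL. The specific choices (for example, TIMESTAMP_TZ for `timestamp with time zone`, BINARY for `bytea`, a VARCHAR default for unknown types, and a FLOAT fallback for unconstrained `numeric`) are assumptions for illustration; integration tools make their own choices.

```python
# Illustrative, non-exhaustive PostgreSQL -> Snowflake type mapping.
# The choices below are assumptions for this sketch; tools may map differently.
PG_TO_SNOWFLAKE = {
    "smallint": "NUMBER(38,0)",
    "integer": "NUMBER(38,0)",
    "bigint": "NUMBER(38,0)",
    "boolean": "BOOLEAN",
    "text": "VARCHAR",
    "varchar": "VARCHAR",
    "character varying": "VARCHAR",
    "date": "DATE",
    "timestamp": "TIMESTAMP_NTZ",
    "timestamp without time zone": "TIMESTAMP_NTZ",
    "timestamptz": "TIMESTAMP_TZ",
    "timestamp with time zone": "TIMESTAMP_TZ",
    "json": "VARIANT",
    "jsonb": "VARIANT",
    "bytea": "BINARY",
}


def map_column(pg_type: str) -> str:
    """Map a PostgreSQL type name to a Snowflake type (VARCHAR if unknown)."""
    base, paren, rest = pg_type.lower().strip().partition("(")
    base = base.strip()
    modifier = paren + rest  # e.g. "(10,2)" or ""
    if base in ("numeric", "decimal"):
        # A bare NUMBER in Snowflake means NUMBER(38,0), which would drop
        # decimals, so keep explicit precision/scale and otherwise use FLOAT.
        return f"NUMBER{modifier}" if modifier else "FLOAT"
    if base in ("varchar", "character varying") and modifier:
        return f"VARCHAR{modifier}"
    return PG_TO_SNOWFLAKE.get(base, "VARCHAR")


def create_table_ddl(table: str, columns: list[tuple[str, str]]) -> str:
    """Build a Snowflake CREATE TABLE statement from (name, pg_type) pairs."""
    body = ",\n  ".join(f"{name} {map_column(pg_type)}" for name, pg_type in columns)
    return f"CREATE TABLE {table} (\n  {body}\n)"


print(create_table_ddl("events", [("id", "bigint"), ("payload", "jsonb"),
                                  ("created_at", "timestamp with time zone")]))
```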

3. Using Stored Procedures With Snowflake

In Snowflake, you can write stored procedures in JavaScript that embed SQL statements; Snowflake Scripting, Python, Java, and Scala are supported as well. With stored procedures:

- Error management is streamlined

- Procedures can run with either the owner’s or the caller’s rights

- You can create and execute SQL statements dynamically

- It supports procedural elements like branching and looping
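The capabilities listed above can be illustrated with a stored procedure handler. The original text describes JavaScript procedures; this sketch swaps in Python, which Snowflake also supports through Snowpark, where the handler receives a Session object as its first argument. The table names, the `<table>_archive` naming scheme, and the `created_at` column are hypothetical.

```python
import re
from datetime import date

# Plain (unquoted) Snowflake identifier: letter/underscore, then letters, digits, _ or $.
_SAFE_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")


def archive_old_rows(session, table_names, cutoff_date):
    """Handler for a Python stored procedure (sketch).

    Snowflake passes a Snowpark Session as the first argument. The tables,
    the <table>_archive naming scheme, and the created_at column are
    hypothetical.
    """
    # Validate the date up front so it is safe to embed in SQL text.
    cutoff = date.fromisoformat(cutoff_date).isoformat()
    results = []
    for table in table_names:                     # looping
        if not _SAFE_NAME.fullmatch(table):       # branching
            results.append(f"{table}: skipped (invalid name)")
            continue
        # Dynamic SQL assembled at run time.
        stmt = (f"INSERT INTO {table}_archive SELECT * FROM {table} "
                f"WHERE created_at < '{cutoff}'")
        try:                                      # error management
            session.sql(stmt).collect()
            results.append(f"{table}: archived")
        except Exception as exc:  # report per-table failures and keep going
            results.append(f"{table}: failed ({exc})")
    return "\n".join(results)
```

To deploy it, you would register the function with CREATE PROCEDURE ... LANGUAGE PYTHON, specifying RUNTIME_VERSION, PACKAGES = ('snowflake-snowpark-python'), and HANDLER = 'archive_old_rows'. Because the handler only calls `session.sql(...).collect()`, you can also exercise it locally with a stub session.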

4. Streams & Change Data Capture

Snowflake’s streams feature records the data changes (DML) made to a table. A stream:

- Recognizes operations such as inserts, updates, and deletes.

- Returns the changed rows with the table’s columns plus metadata columns (METADATA$ACTION, METADATA$ISUPDATE, and METADATA$ROW_ID) that indicate the specific type of change, which makes Change Data Capture (CDC) efficient.
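The logic of consuming a stream can be sketched in plain Python. In Snowflake you would typically apply stream rows to a target table with a MERGE statement; this illustration instead applies a list of hypothetical change rows to an in-memory dictionary, relying on the documented behavior that an update appears as a DELETE/INSERT pair with METADATA$ISUPDATE set to TRUE.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def apply_stream_changes(target: Dict[Any, Row], changes: List[Row], key: str = "id") -> Dict[Any, Row]:
    """Apply stream-style change rows to a copy of `target`, keyed by `key`.

    Each change row carries the table columns plus METADATA$ACTION
    ('INSERT' or 'DELETE') and METADATA$ISUPDATE. An update shows up as a
    DELETE of the old row plus an INSERT of the new one, so applying all
    deletes before all inserts yields the new table state.
    """
    result = dict(target)
    for action in ("DELETE", "INSERT"):
        for change in changes:
            if change["METADATA$ACTION"] != action:
                continue
            # Drop the metadata columns to get back the plain table row.
            row = {k: v for k, v in change.items() if not k.startswith("METADATA$")}
            if action == "DELETE":
                result.pop(row[key], None)
            else:
                result[row[key]] = row
    return result


target = {1: {"id": 1, "status": "new"}, 2: {"id": 2, "status": "new"}}
changes = [
    {"id": 1, "status": "new", "METADATA$ACTION": "DELETE", "METADATA$ISUPDATE": True},
    {"id": 1, "status": "shipped", "METADATA$ACTION": "INSERT", "METADATA$ISUPDATE": True},
    {"id": 2, "status": "new", "METADATA$ACTION": "DELETE", "METADATA$ISUPDATE": False},
    {"id": 3, "status": "new", "METADATA$ACTION": "INSERT", "METADATA$ISUPDATE": False},
]
print(apply_stream_changes(target, changes))
```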

5. Automating With CI/CD Pipelines

Snowflake’s capabilities help automate data ingestion. For instance:

- After a pipeline is deployed to production through CI/CD, it can ingest data on a regular schedule.

- Tasks and their dependencies can be arranged into a task graph, so that starting the root task triggers all the associated tasks in order.
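Task ordering is a dependency-graph problem. The sketch below uses Python's standard `graphlib` module to compute the order in which a hypothetical task graph would run; in Snowflake itself, the root task holds the schedule and each child task names its predecessors with the AFTER clause of CREATE TASK. The task names are made up for illustration.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical task graph: each task maps to the tasks it runs AFTER.
TASK_GRAPH = {
    "load_raw": set(),                     # root task (carries the schedule)
    "clean_orders": {"load_raw"},
    "clean_customers": {"load_raw"},
    "build_sales_mart": {"clean_orders", "clean_customers"},
}


def run_order(graph):
    """Return one valid execution order; raises graphlib.CycleError on cycles."""
    return list(TopologicalSorter(graph).static_order())


def parallel_batches(graph):
    """Group tasks into batches whose members do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


print(parallel_batches(TASK_GRAPH))
```

Tasks in the same batch have no dependency on each other, which is why a scheduler may run them concurrently, while a task in a later batch waits for all of its predecessors.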

Setting Up Snowflake Integrations: A Step-by-Step Guide

Snowflake’s interoperability with third-party services centers around its integration system. Through specialized objects called integrations, Snowflake creates vital connections that facilitate the consistent flow and interaction of data across platforms. Let’s see how these integrations are created, managed, modified, and even deleted.

1. Creating an Integration

To establish a new integration or replace an existing one, you can use the CREATE INTEGRATION command. The syntax is as follows:

```sql
CREATE [ OR REPLACE ] <integration_type> INTEGRATION [ IF NOT EXISTS ] <object_name>
  [ <integration_type_params> ]
  [ COMMENT = '<string_literal>' ]
```

Here, ‘<integration_type_params>’ varies depending on the specific integration type you’re working with, be it API, Notification, Security, or Storage. The ‘OR REPLACE’ and ‘IF NOT EXISTS’ clauses are mutually exclusive and shouldn’t be used in the same statement.

Replacing an integration with the ‘CREATE OR REPLACE’ clause is an atomic operation: the previous integration is deleted and the new one is created in a single transaction.
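The general CREATE syntax, including the rule that OR REPLACE and IF NOT EXISTS cannot be combined, can be captured in a small statement builder. This is a hypothetical helper, not part of any Snowflake library; type-specific properties are passed in as pre-formatted strings because each integration type accepts different ones. The usage example renders an API integration with properties Snowflake documents for AWS API Gateway (API_PROVIDER, API_AWS_ROLE_ARN, API_ALLOWED_PREFIXES, ENABLED); the role ARN and URL prefix are placeholders.

```python
VALID_TYPES = {"API", "NOTIFICATION", "SECURITY", "STORAGE"}


def create_integration_sql(integration_type, name, params, comment=None,
                           or_replace=False, if_not_exists=False):
    """Render a CREATE ... INTEGRATION statement following the general syntax.

    `params` holds type-specific properties as already formatted
    'PROPERTY = value' strings; which properties are valid depends on the type.
    """
    if or_replace and if_not_exists:
        # The two clauses are mutually exclusive in Snowflake's syntax.
        raise ValueError("OR REPLACE and IF NOT EXISTS are mutually exclusive")
    kind = integration_type.upper()
    if kind not in VALID_TYPES:
        raise ValueError(f"unsupported integration type: {integration_type}")
    parts = ["CREATE"]
    if or_replace:
        parts.append("OR REPLACE")
    parts += [kind, "INTEGRATION"]
    if if_not_exists:
        parts.append("IF NOT EXISTS")
    parts.append(name)
    lines = [" ".join(parts)] + [f"  {p}" for p in params]
    if comment is not None:
        # Escape single quotes inside the string literal by doubling them.
        escaped = comment.replace("'", "''")
        lines.append(f"  COMMENT = '{escaped}'")
    return "\n".join(lines)


print(create_integration_sql(
    "api", "my_api_int",
    ["API_PROVIDER = aws_api_gateway",
     "API_AWS_ROLE_ARN = '<iam_role_arn>'",
     "API_ALLOWED_PREFIXES = ('<https_endpoint_prefix>')",
     "ENABLED = TRUE"],
    comment="Gateway for external functions",
    or_replace=True,
))
```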

2. Modifying an Integration

There are instances when you need to alter the properties of an already set-up integration. For such purposes, the ‘ALTER INTEGRATION’ command is used:

```sql
ALTER <integration_type> INTEGRATION <object_name> <actions>
```

In this context, ‘<actions>’ are specific to the type of integration you’re dealing with.

3. Viewing Integrations

If you want to review the integrations for which you have access rights within your account, you can use the ‘SHOW INTEGRATIONS’ command. The command fetches integration metadata and properties, offering insights like the integration’s name, type, category, status (enabled/disabled), comments, and the timestamp of its creation. Use the following syntax:

```sql
SHOW [ { API | NOTIFICATION | SECURITY | STORAGE } ] INTEGRATIONS [ LIKE '<pattern>' ]
```

4. Describing an Integration

For a more granular view of an integration’s properties, the ‘DESCRIBE INTEGRATION’ (or its shorthand ‘DESC’) comes in handy:

```sql
DESC[RIBE] [ { API | NOTIFICATION | SECURITY | STORAGE } ] INTEGRATION <name>
```

This command gives a breakdown of the chosen integration’s attributes.

5. Deleting an Integration

When you want to remove an integration from your Snowflake account, the ‘DROP INTEGRATION’ command is used. Here’s its syntax:

```sql
DROP [ { API | NOTIFICATION | SECURITY | STORAGE } ] INTEGRATION [ IF EXISTS ] <name>
```

The ‘<name>’ parameter specifies the identifier for the integration you want to remove. If this identifier contains spaces, special characters, or mixed-case characters, you should enclose the entire string in double quotes. Remember that identifiers wrapped in double quotes are case-sensitive.

The optional type keyword (API, Notification, Security, or Storage) limits the command to that kind of integration. If you’re unsure whether an integration exists and want to avoid an error when attempting to drop it, you can use the ‘IF EXISTS’ clause.

Once deleted, integrations cannot be retrieved. If you want to use them again, recreate them from scratch.
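The quoting rules above are easy to get wrong when integration names come from configuration files. A hypothetical helper like the one below applies them before building a DROP statement: names that are already plain uppercase identifiers stay unquoted, and everything else is double-quoted, with embedded double quotes doubled. It assumes the name you pass is exactly how the integration is stored, and it does not check reserved keywords.

```python
import re

# Unquoted Snowflake identifiers start with a letter or underscore and contain
# only letters, digits, underscores, and dollar signs; they resolve to uppercase.
_UNQUOTED = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")


def quote_identifier(stored_name):
    """Return `stored_name` in a form that refers to exactly that object.

    Plain, already-uppercase names stay unquoted. Anything with spaces,
    special characters, or lowercase letters is wrapped in double quotes
    (embedded double quotes are doubled), which makes the match case-sensitive.
    Reserved keywords are not checked here.
    """
    if _UNQUOTED.fullmatch(stored_name) and stored_name == stored_name.upper():
        return stored_name
    return '"' + stored_name.replace('"', '""') + '"'


def drop_integration_sql(name, integration_type=None, if_exists=True):
    """Build a DROP [<type>] INTEGRATION [IF EXISTS] <name> statement."""
    parts = ["DROP"]
    if integration_type:
        parts.append(integration_type.upper())
    parts.append("INTEGRATION")
    if if_exists:
        parts.append("IF EXISTS")
    parts.append(quote_identifier(name))
    return " ".join(parts)


print(drop_integration_sql("Sales API", "api"))
```

Since a dropped integration cannot be recovered, the IF EXISTS default here only suppresses the "does not exist" error; it adds no safety net against dropping the wrong object.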

6. API Integration

An API integration in Snowflake stores the configuration needed to connect Snowflake to external Application Programming Interfaces (APIs), such as the cloud API gateways that external functions call. It’s especially useful when you want real-time interaction between Snowflake and external applications.

Commands specific to API integrations like ‘CREATE API INTEGRATION’, ‘ALTER API INTEGRATION’, and others allow for the creation, modification, and management of these API connections.

Get Integrated: Use Flow

Setting up and maintaining these integrations by hand takes effort, and this is where Estuary Flow shines. Its code-free interface, extensive connector library, efficient ETL, and automation features make integration hassle-free, and Flow handles high volumes of real-time data transfer with top-notch security.

Sign up today for free to get started with simplified integration workflows tailored to your business needs. You can also reach out to the Flow team to learn more.

Frequently Asked Questions

Whether you're just starting out or looking to fine-tune your Snowflake data integration strategy, we have answers to keep you on the right track.

What Is Snowflake Integration?

Snowflake integration is the process of connecting Snowflake, a cloud-based data warehousing platform, with other systems, applications, or tools to efficiently manage and analyze large volumes of data. It spans several phases, such as data sourcing, data modeling, data insertion, and data management, so you can benefit from Snowflake's advanced capabilities for data storage, processing, and analytics.

What Are the Types of Data Integration Tools?

There are several types of data integration tools:

- ETL (Extract, Transform, Load) Tools: ETL tools are used to extract data from various sources, transform it into a structured format, and load it into a target database or data warehouse.

- ELT (Extract, Load, Transform) Tools: ELT tools extract data and load it into a target storage system first, and then perform transformations as needed.

- Data Integration Platforms: These comprehensive platforms provide different integration capabilities, including data cleansing, data transformation, and data mapping.

- Data Replication Tools: These tools focus on replicating data from one source to another in real-time or near-real-time, ensuring data consistency across systems.

- Data Integration as a Service (DIaaS): DIaaS solutions are cloud-based services that offer data integration capabilities without the need for on-premises infrastructure.

Why Should You Use a Data Integration Tool?

Using a data integration tool has many benefits:

- Efficiency: Data integration tools automate the process of collecting, transforming, and loading data, which reduces manual effort and potential errors.

- Data Quality: These tools often include data cleansing and validation features for improving data quality and accuracy.

- Real-time or Batch Processing: Data integration tools can handle both real-time and batch data integration to cater to various data processing needs.

- Scalability: They can scale to handle large volumes of data and accommodate growing data requirements.

- Consistency: Data integration tools ensure data consistency and coherence across multiple data sources.

- Cost-Effective: These tools streamline data integration processes, which results in cost savings through better resource utilization and reduced maintenance effort.

- Business Intelligence: Effective data integration is important for generating meaningful insights and supporting business intelligence and analytics initiatives.

How Is Snowflake Used In ETL?

Snowflake is commonly used in ETL processes to efficiently handle and analyze large volumes of data. Here's how Snowflake is typically used in ETL:

- Data Extraction: Snowflake can act as both the source and destination for ETL processes. Data is extracted from various sources and loaded into Snowflake for further processing and analysis. It supports various data ingestion methods, including bulk loading, Snowpipe (continuous data ingestion), and connecting to external data sources using Snowflake connectors.

- Data Transformation: Snowflake offers powerful transformation capabilities through SQL that let data engineers and analysts apply various data transformations directly within the Snowflake data warehouse. Complex transformations, data cleaning, aggregation, filtering, and enrichment can be performed using SQL queries, stored procedures, and user-defined functions.

- Data Loading: Once data has been extracted and transformed, it is loaded back into Snowflake or other target systems, depending on the ETL workflow requirements. Snowflake's scalable architecture and efficient loading mechanisms allow for the rapid loading of transformed data into the data warehouse for subsequent analysis.

Is Snowflake ETL or Data Warehouse?

Snowflake is primarily a data warehouse, but it is also used in ETL processes. Snowflake's core functionality revolves around data storage, management, and analytics. It provides a scalable, cloud-based data warehousing solution that lets you store and analyze large volumes of data.

How to Choose the Right Snowflake Integration Tool?

Here are some key factors to consider when picking a Snowflake integration tool:

- Data Source Compatibility: Ensure that the integration tool supports the data sources you use in your organization.

- Ease of Use: Look for an integration tool that has a user-friendly interface and is easy to set up and configure.

- Data Transformation Capabilities: Assess the tool's ability to transform and manipulate data as it moves from source to Snowflake. It should support data cleansing, enrichment, and transformation tasks.

- Scalability: It should handle growing data volumes and workloads as your organization expands.

- Performance: Evaluate the tool's performance when loading data into Snowflake. It should efficiently utilize Snowflake's features like parallel loading to optimize data transfer.

- Monitoring and Logging: Ensure that the tool provides comprehensive monitoring and logging capabilities.

- Cost: Consider the total cost of ownership, including licensing fees, maintenance, and any additional infrastructure requirements.

- Support and Documentation: Look for a tool with a strong support system, including documentation, user forums, and responsive customer support.

What Are the Key Strategies for Maximizing Snowflake's Integration Potential?

To get the most out of Snowflake for integrating and managing large data ecosystems, consider implementing these four proven strategies:

- Data Sourcing: In this phase, gather data from various sources, including eCommerce platforms, legacy databases, cloud repositories, applications, and IoT devices. Best practices include choosing batch or continuous data streaming modes based on your data needs and using Snowflake-native mechanisms like Snowflake Data Sharing for efficient data sharing.

- Data Modeling: After collecting data, transform it into a consistent, structured format ready for analysis. Adopt flexible data modeling techniques, keep the original raw data so you can regenerate data models later, and leverage Snowflake's features designed for different data types and structures.

- Data Insertion: Migrate prepared data into Snowflake using commands like 'PUT' and 'COPY INTO.' Familiarize yourself with these commands, stage your data in suitable locations, and select between batch or continuous processing depending on your needs.

- Data Management: Focus on coordinating data tasks and ensuring compliance during data operations. Identify and categorize data types, automate routine tasks, and strengthen your automation strategy while mapping out your processes.

About the author

With over 15 years in data engineering, the author is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
