Your business is a dynamic entity powered by data. Snowflake connectors and drivers are the invisible gears that keep the machinery running smoothly, just like the other data integration tools covered in Top ETL Tools for Data Integration. If these tools are misaligned, outdated, or incompatible, your data efficiency suffers: data silos form, insights are delayed or inaccurate, and operational bottlenecks slow down processes.

Incorporating Snowflake connectors and drivers into your data strategy isn't just about adding another tool to the toolbox. With data creation expected to reach more than 180 zettabytes by 2025, the importance of Snowflake connectors and drivers becomes even more pronounced. The challenge? How to pinpoint the ones that generate the best results.

In this guide, we’ll give you a clear overview of Snowflake connectors and drivers and guide you through the key things to consider when looking for the perfect fit.

Understanding Snowflake: The Native Data Cloud Platform

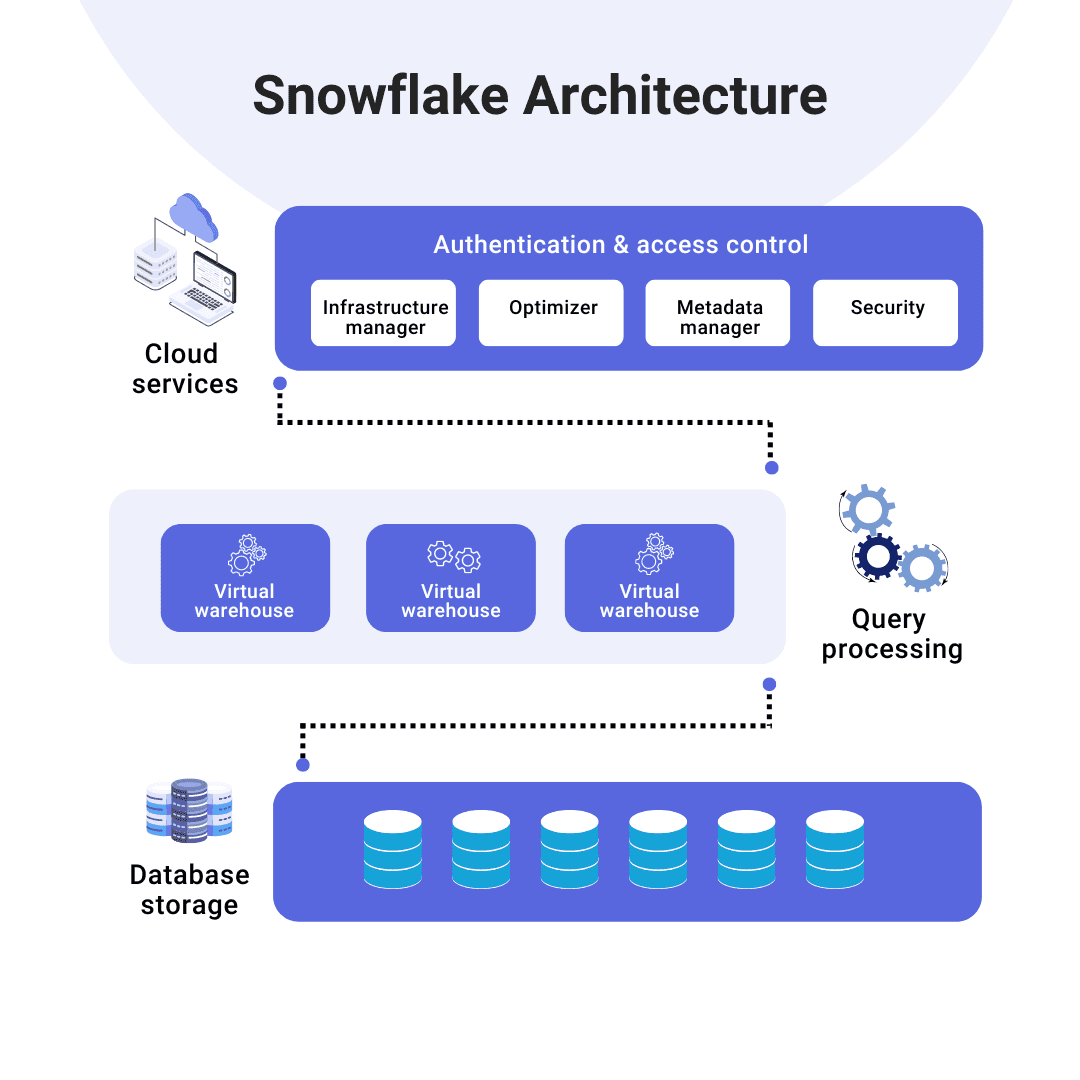

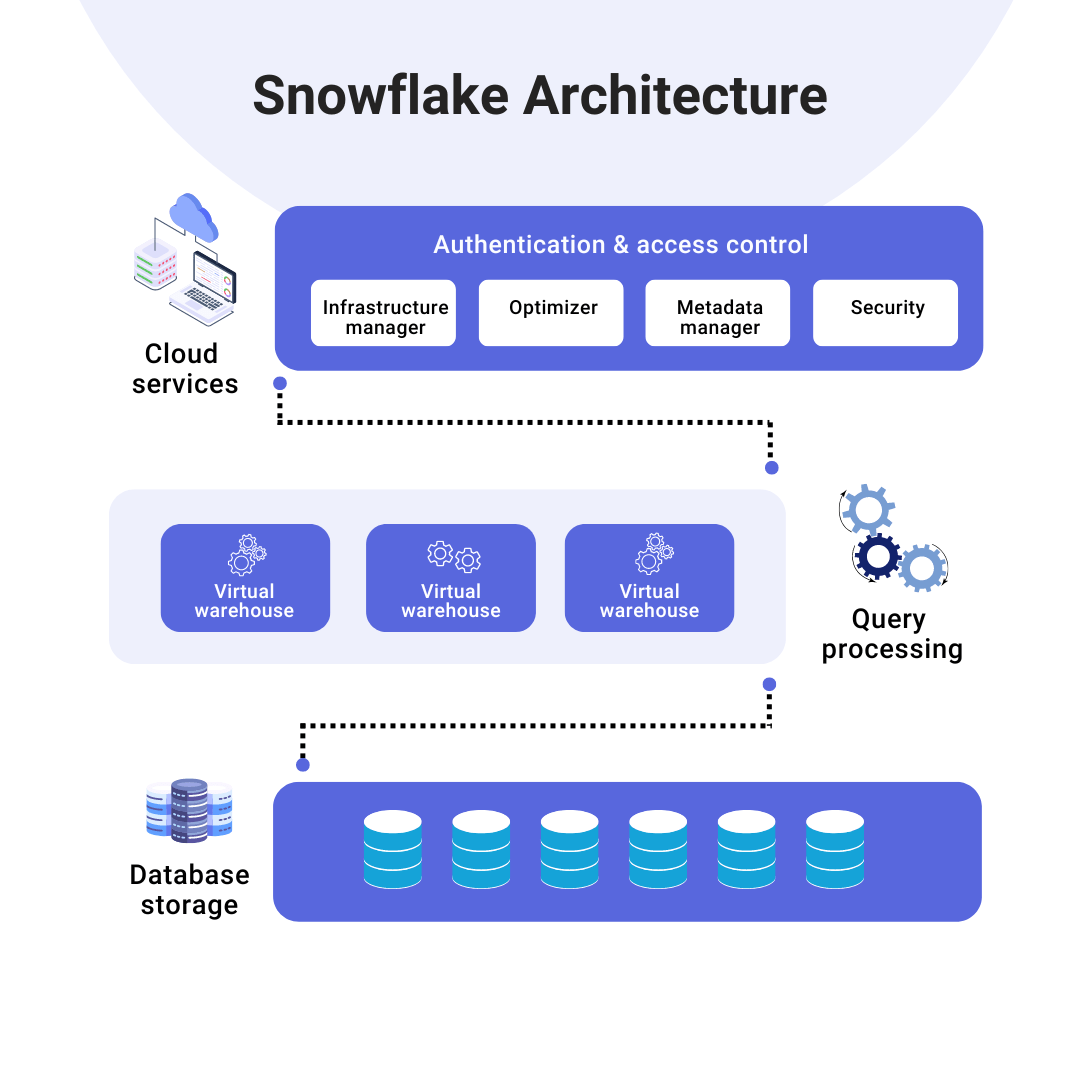

Snowflake stands out as a unique native data cloud platform that offers multiple features ranging from data warehousing and data lakes to advanced data security. Unlike other cloud services, Snowflake manages both software and hardware components so you don’t have to get into the complexities of setup and maintenance.

This means there's no need to set up separate data warehousing, data lake, or data security solutions. Snowflake takes care of all data storage aspects to ensure efficient big data management and flexibility.

The Snowflake Ecosystem Explained: Components, Features, and Benefits

The Snowflake ecosystem is a vast network with various components. These include:

- Certified Partners: These are entities that have developed both cloud and on-premises solutions to integrate seamlessly with Snowflake.

- Snowflake-provided Clients: Snowflake offers its own set of clients to facilitate direct interactions. These include SnowSQL, Connectors, and Drivers.

- Third-party Tools & Technologies: Many external tools and technologies can connect with Snowflake, which extends its functionality.

Key Features of the Snowflake Ecosystem

- SQL Development & Management: Snowflake provides native SQL development interfaces like Snowflake Worksheets and SnowSQL. It also supports third-party SQL tools, ranging from Aginity to SQL Workbench/J.

- Native Programmatic Interfaces: Snowflake supports application development across various programming languages and platforms. Connectors and drivers facilitate interactions with programmatic interfaces like Go, JDBC, .NET, Node.js, PHP, and Python.

- Business Intelligence (BI): BI tools within the Snowflake ecosystem give you access to detailed data analysis and generate insights, recommendations, and comprehensive reports. Tools like Adobe and TIBCO present data visually through dashboards and charts.

- Partners & Technologies: Snowflake is compatible with a wide range of third-party technologies and tools, from Adobe to Zepl. However, you should ensure full compatibility as not all features from other technologies might be supported and can potentially cause issues.

- Security & Governance: Snowflake's ecosystem supports the integration of data governance and security tools that protect data against unauthorized access. Tools ranging from Acryl Data to TRUSTLOGIX can be connected with Snowflake.

- ML & Data Science: The ecosystem also includes machine learning and data science tools. These tools focus on predictive modeling and identify patterns in vast datasets to forecast future trends and derive actionable insights. Advanced analytics tools like Alteryx and Apache Spark can be integrated with Snowflake.

- Data Integration: At the heart of Snowflake's data integration lies the ETL method – Extract data, Transform data, and Load data. This process involves exporting data, converting it into a compatible format, and then importing it into target databases. Various data integration tools, from Ab Initio to Workato, can be integrated with Snowflake.

Snowflake Connectors & Drivers: Your Key to Seamless Data Integration

Snowflake connectors and drivers are specialized software tools that establish secure, reliable connections between Snowflake and external systems, allowing for efficient data integration. These tools enable operations like reading, writing, importing metadata, and performing bulk data loading. If you’re looking to explore tools that complement these connectors, take a look at some of the best Snowflake ETL tools to enhance your data integration strategy.

Key Differences Between Snowflake Connectors and Drivers:

1. Snowflake Connectors:

- Primarily used for integrating Snowflake with applications and frameworks, such as programs written in Python.

- Support real-time data processing and include extra features like compatibility with Python libraries (e.g., Pandas).

- The Python connector implements the Python Database API Specification (PEP 249), so it exposes the familiar connection and cursor objects for data transfer.

2. Snowflake Drivers:

- Used for Java and C-based applications via JDBC (Java) and ODBC (C).

- Provide standardized methods for these programming languages to communicate with the Snowflake platform.

- Typically lack some of the advanced features found in the Python connector but are essential for broader compatibility across platforms.
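
Because the Python connector follows the Python Database API Specification, code written against it uses the same connect, cursor, execute, and fetch pattern as any other DB-API driver. The sketch below illustrates that pattern with the standard-library `sqlite3` module standing in for Snowflake so it runs anywhere; the `fetch_all` helper and the `orders` table are hypothetical. With the Snowflake connector you would pass in a connection returned by `snowflake.connector.connect(...)` instead, and its default bind marker is `%s` rather than `?`.

```python
import sqlite3
from contextlib import closing


def fetch_all(conn, sql, params=()):
    """Run a query on any DB-API 2.0 connection and return all rows."""
    with closing(conn.cursor()) as cur:
        cur.execute(sql, params)
        return cur.fetchall()


# sqlite3 is only a stand-in here: it exposes the same DB-API shape as the
# Snowflake Python connector, so the helper above would not need to change.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)])

rows = fetch_all(conn, "SELECT id, amount FROM orders WHERE amount > ?", (10,))
print(rows)
```

Keeping query helpers written against the DB-API rather than a specific driver makes them easy to exercise locally before pointing them at a warehouse.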

Core Functions of Snowflake Connectors and Drivers

- Data Integration: Enable seamless data transfers and synchronization between Snowflake and external platforms.

- Bulk Data Loading: Allow for efficient bulk loading of large datasets into Snowflake tables.

- Metadata Importing: Simplify the process of importing metadata from external sources into Snowflake.

- Real-Time Data Access: Facilitate real-time data reads and writes for continuous data operations.

By choosing the appropriate Snowflake connector or driver based on your application needs, you can optimize data flows and ensure high performance in your data integration tasks.

Top 5 Use Cases for Snowflake Connectors

Snowflake connectors and drivers are versatile and change how you interact with the Snowflake data cloud. Their main uses are to:

- Efficiently load data in vast amounts into Snowflake tables.

- Retrieve data from tables within the Snowflake data warehouse.

- Read and write data directly from and to the Snowflake data warehouse.

- Use the multiple input links feature to push data in high volume into several tables simultaneously.

- Use tools like InfoSphere Metadata Asset Manager (IMAM) to import metadata from the Snowflake cloud data warehouse.
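
As a rough illustration of the bulk-loading use case, a load from a local CSV file usually comes down to two SQL statements: `PUT` to upload the file to a stage and `COPY INTO` to load it into the table. The helper below only builds those statements; the function name, file path, and table name are assumptions made for this sketch, and the inputs are interpolated directly, so they should come from trusted configuration rather than user input.

```python
def bulk_load_statements(local_path, table, field_delimiter=","):
    """Return the PUT and COPY INTO statements for a CSV bulk load.

    Uses the table's own stage (@%table), so no named stage is required.
    """
    put_sql = f"PUT file://{local_path} @%{table} AUTO_COMPRESS=TRUE"
    copy_sql = (
        f"COPY INTO {table} FROM @%{table} "
        f"FILE_FORMAT = (TYPE = CSV FIELD_DELIMITER = '{field_delimiter}' SKIP_HEADER = 1)"
    )
    return [put_sql, copy_sql]


# Hypothetical file and table; run each statement through a client that
# supports PUT, such as SnowSQL or the Python connector.
for statement in bulk_load_statements("/tmp/orders.csv", "orders"):
    print(statement)
```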

Choosing the Right Snowflake Connector: A Step-by-Step Guide

Picking the right Snowflake connector affects how smooth and effective your data operations turn out. Here's a guide to help you understand the options and choose a suitable connector.

SnowSQL Connector: Direct Access for Power Users

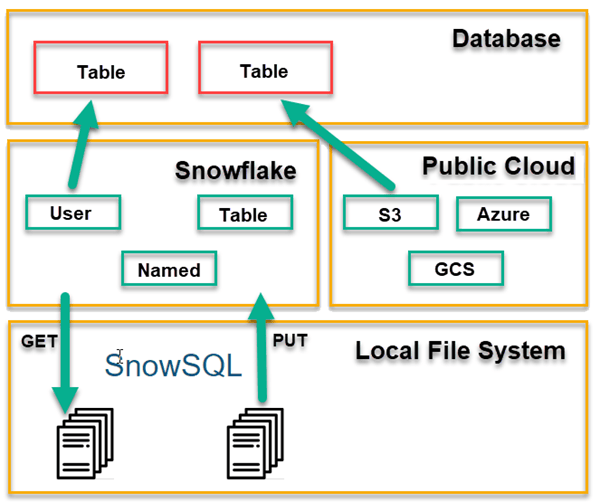

SnowSQL is Snowflake's proprietary command-line client that offers a direct interface to interact with Snowflake. It's adept at executing SQL queries and handling different DDL and DML operations.

Why Pick SnowSQL?

SnowSQL provides a hands-on approach for those comfortable with command-line interfaces. Its versatility in handling tasks like data loading and unloading makes it a preferred choice for many.

How to Choose SnowSQL

- Compatibility: Ensure compatibility with your operating system since Snowflake offers platform-specific versions.

- Scripting & Automation: SnowSQL can run queries and script files non-interactively, so if you plan to automate tasks or run batch processes, check that its scripting options cover what you need.

- Task Nature: Evaluate the nature of tasks you frequently perform. If they include consistent data loading and unloading, SnowSQL is the one to pick.

- Querying Features: Consider the querying capabilities of SnowSQL. Look for features like support for SQL syntax, stored procedures, user-defined functions, and transaction management.

- Error Handling & Logging: A connector that provides clear error messages, logging capabilities, and troubleshooting resources can save time and effort during development and maintenance.

- Performance Optimization: Features such as result caching, query profiling, and query history belong to the Snowflake service rather than to the client, but you can still benefit from them when working through SnowSQL. Review these features when you tune query performance.
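
To make the scripting and automation point concrete, here is a minimal sketch of driving SnowSQL from a scheduled Python job. It relies on SnowSQL's `-a`, `-u`, `-d`, `-w`, `-f`, and `-o` command-line options and on the `SNOWSQL_PWD` environment variable for the password; the account, user, warehouse, and script names are placeholders, and the two helper functions are inventions of this example.

```python
import os
import subprocess


def snowsql_command(account, user, sql_file, database=None, warehouse=None):
    """Build an argv list for a non-interactive SnowSQL run."""
    cmd = ["snowsql", "-a", account, "-u", user, "-f", sql_file]
    if database:
        cmd += ["-d", database]
    if warehouse:
        cmd += ["-w", warehouse]
    # Stop at the first failing statement and skip the welcome/goodbye text.
    cmd += ["-o", "exit_on_error=true", "-o", "friendly=false"]
    return cmd


def run_script(cmd, password):
    """Run SnowSQL, passing the password through SNOWSQL_PWD (not argv)."""
    env = dict(os.environ, SNOWSQL_PWD=password)
    return subprocess.run(cmd, env=env, capture_output=True, text=True, check=True)


# Placeholder identifiers; only the command is built here, nothing is executed.
cmd = snowsql_command("myorg-myaccount", "etl_user", "nightly_load.sql", warehouse="LOAD_WH")
print(" ".join(cmd))
```

Building the argument list separately from running it keeps the command easy to log and to check before it touches a real account.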

Spark Snowflake Connector: Integrating with the Apache Spark Ecosystem

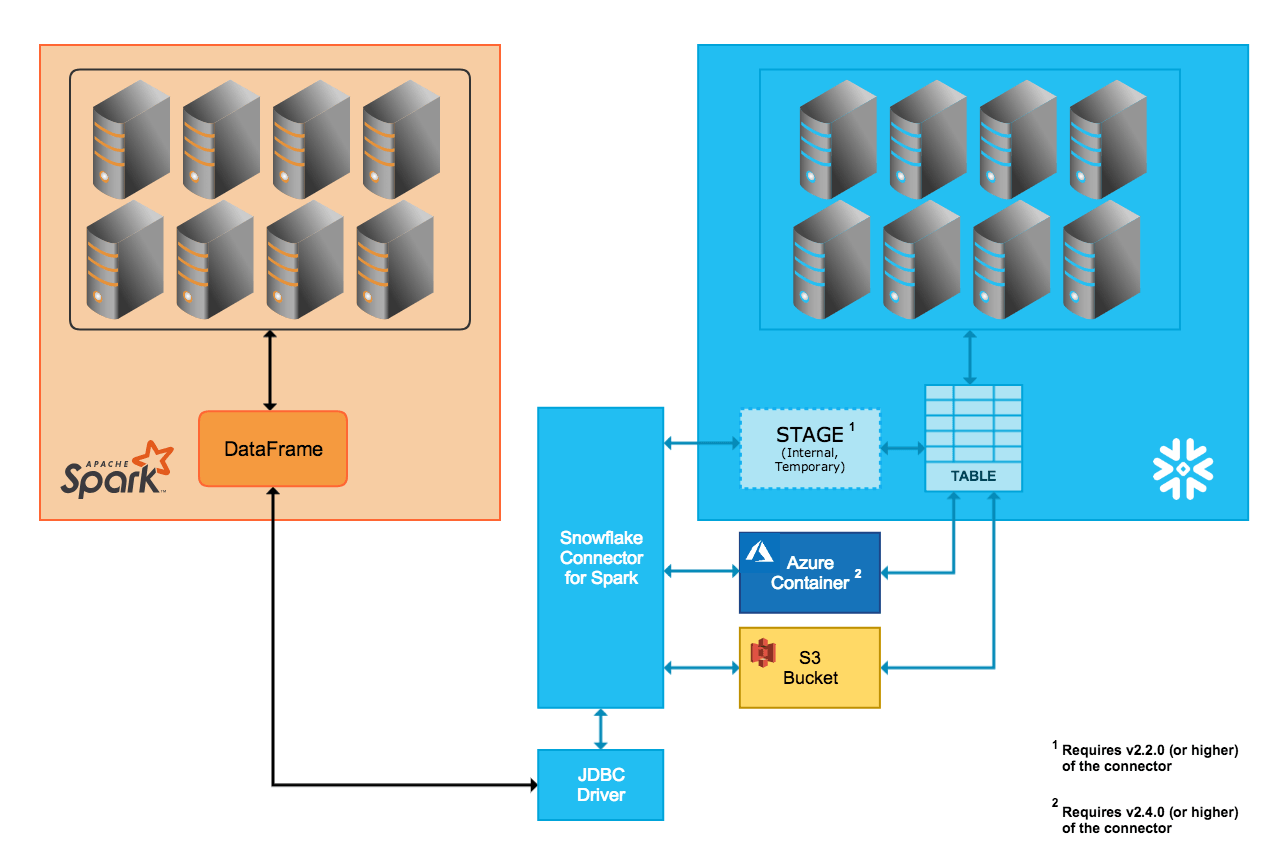

This connector integrates Snowflake with the Apache Spark ecosystem. It lets Spark read data from Snowflake and write data back to it.

Why Pick Spark Snowflake Connector?

If you are already deep into the Spark ecosystem, this connector provides a familiar and integrated experience. It ensures smooth data operations especially when dealing with diverse data sources.

How to Choose the Spark Snowflake Connector?

- Data Types & Compatibility: Ensure that the Spark Snowflake Connector supports the data types and schema structures used in your data processing tasks.

- Connector Versions Compatibility: Make sure the connector version matches your Spark version, since Snowflake publishes separate connector builds for different Spark versions.

- Configuration Flexibility: Evaluate the level of configuration flexibility. You might need to fine-tune various parameters related to connection pooling, partitioning, and query optimization.

- Security & Authentication: Review the security and authentication options that the connector offers. Snowflake supports authentication methods like username/password, OAuth, and SSO.

- Data Movement Strategy: The Spark Snowflake Connector supports two data transfer modes: internal transfer, which uses a temporary stage that Snowflake creates and manages, and external transfer, which uses a storage location that you create and manage. Consider your data movement requirements and choose the appropriate mode.

- Performance Requirements: The Spark Snowflake Connector provides various options for performance optimization like pushdown predicates, query parallelism, and partition pruning. Choose a connector version and configuration that aligns with your performance needs.
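
A minimal PySpark sketch of reading from and writing to Snowflake might look like the following. The `sfURL`, `sfUser`, `sfPassword`, `sfDatabase`, `sfSchema`, `sfWarehouse`, and `dbtable` option names and the `net.snowflake.spark.snowflake` source name come from the connector; the helper functions and all identifier values are assumptions of this example, and the read and write helpers need a live `SparkSession` with the connector on the classpath.

```python
SNOWFLAKE_SOURCE_NAME = "net.snowflake.spark.snowflake"


def snowflake_options(account, user, password, database, schema, warehouse):
    """Build the option map that the Spark connector expects."""
    return {
        "sfURL": f"{account}.snowflakecomputing.com",
        "sfUser": user,
        "sfPassword": password,
        "sfDatabase": database,
        "sfSchema": schema,
        "sfWarehouse": warehouse,
    }


def read_table(spark, options, table):
    """Load a Snowflake table as a Spark DataFrame."""
    return (
        spark.read.format(SNOWFLAKE_SOURCE_NAME)
        .options(**options)
        .option("dbtable", table)
        .load()
    )


def write_table(df, options, table, mode="append"):
    """Write a Spark DataFrame back to a Snowflake table."""
    (
        df.write.format(SNOWFLAKE_SOURCE_NAME)
        .options(**options)
        .option("dbtable", table)
        .mode(mode)
        .save()
    )


# Placeholder credentials; in practice load them from a secret store.
opts = snowflake_options("myorg-myaccount", "spark_user", "secret", "ANALYTICS", "PUBLIC", "SPARK_WH")
print(sorted(opts))
```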

Python Snowflake Connector: A Native Experience for Python Developers

Designed for Python developers, this connector offers a dedicated interface to let Python applications communicate easily with Snowflake.

Why Pick Python Snowflake Connector?

It stands out for its native Python experience. Unlike other connectors that might rely on ODBC or JDBC dependencies, the Python Snowflake Connector has been engineered to directly interact with Snowflake using Python-native code.

How to Choose the Python Snowflake Connector?

- Connection Management: Evaluate how the connector handles connection pooling and reusability.

- Installation & Dependencies: Check the installation process and dependencies. Make sure the installation is straightforward and aligns with your development environment.

- Data Type Support: Ensure that the connector supports Snowflake's data types and allows for seamless conversion between Python data types and Snowflake data types.

- SQL Execution: Check the capabilities of the connector for executing SQL queries and statements. Look for support for parameterized queries, batch execution, and handling query results.

- Error Handling & Logging: Consider the error handling and logging capabilities of the connector. A connector that provides clear error messages, logging options, and effective error-handling mechanisms can simplify troubleshooting.

- Performance Optimization: Evaluate any performance optimization options that the connector offers. Options like query optimization, fetching strategies, and data compression impact the efficiency of data retrieval and manipulation.
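
The SQL execution and error handling points above can be sketched as follows. The example uses `snowflake.connector.connect`, a parameterized `cursor.execute`, and the connector's `ProgrammingError`; the `SNOWFLAKE_*` environment variable names, the helper functions, and the `created_at` column are conventions invented for this sketch. The connector is imported lazily so the configuration helper can be used even where the package is not installed.

```python
import logging
import os

log = logging.getLogger("snowflake_example")


def connection_params(env=os.environ):
    """Collect connect() keyword arguments from environment variables.

    The SNOWFLAKE_* variable names are a convention of this sketch.
    """
    params = {
        "account": env["SNOWFLAKE_ACCOUNT"],
        "user": env["SNOWFLAKE_USER"],
        "password": env["SNOWFLAKE_PASSWORD"],
    }
    for key in ("warehouse", "database", "schema"):
        value = env.get(f"SNOWFLAKE_{key.upper()}")
        if value:
            params[key] = value
    return params


def count_rows_since(params, table, since):
    """Run one parameterized query and log connector errors before re-raising."""
    import snowflake.connector  # third-party; imported lazily

    conn = snowflake.connector.connect(**params)
    try:
        cur = conn.cursor()
        try:
            # %s is the connector's default bind style; the table name is
            # interpolated, so it must come from trusted configuration.
            cur.execute(f"SELECT COUNT(*) FROM {table} WHERE created_at >= %s", (since,))
            return cur.fetchone()[0]
        except snowflake.connector.ProgrammingError as exc:
            log.error("Query failed: errno=%s sqlstate=%s msg=%s", exc.errno, exc.sqlstate, exc.msg)
            raise
        finally:
            cur.close()
    finally:
        conn.close()
```

Binding values as parameters instead of formatting them into the SQL string avoids quoting bugs and injection risks.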

Kafka Snowflake Connector: Real-Time Data Synchronization with Apache Kafka

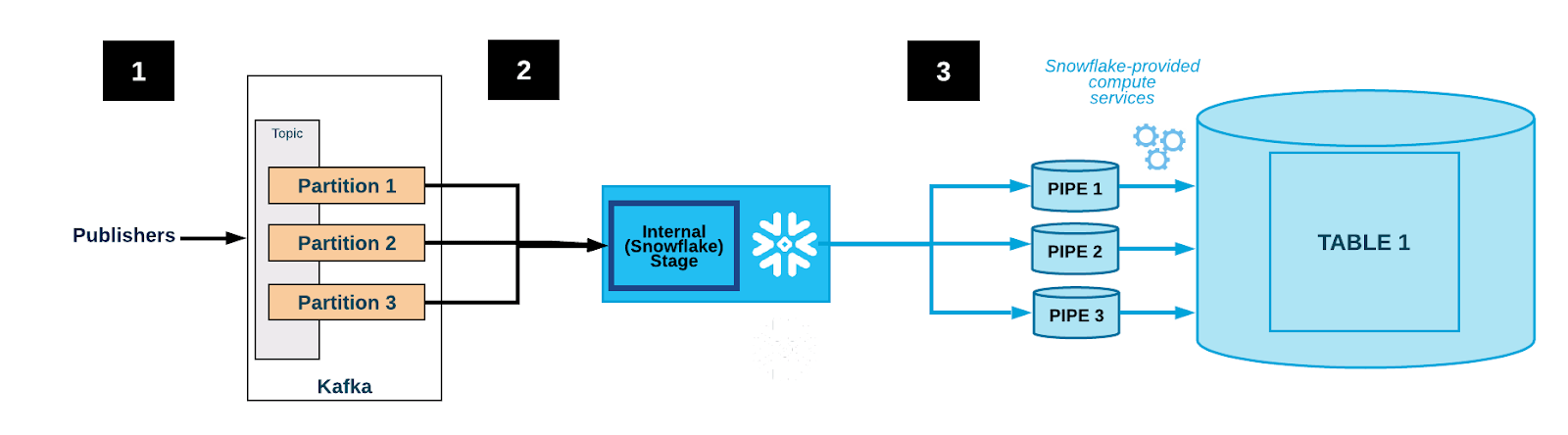

Tailored for the Apache Kafka ecosystem, this connector channels data from Kafka topics directly into Snowflake. The Kafka Snowflake Connector is configured to read data from specific Kafka topics and transform it into a format that Snowflake can understand.

Why Pick Kafka Snowflake Connector?

If you are looking for real-time or near-real-time data synchronization between your streaming pipelines and data warehousing systems, the Kafka Snowflake connector is the best way to go about it. It provides a consistent and efficient data flow which makes it important for those who heavily rely on real-time operations within the Kafka ecosystem.

How to Choose the Kafka Snowflake Connector?

- Concurrency & Parallelism: Consider if the connector can handle multiple Kafka partitions concurrently and manage parallelism efficiently.

- Compatibility: Ensure that the Kafka Snowflake Connector version is compatible with your versions of Apache Kafka, Snowflake, and any other related technologies you're using.

- Data Partitioning & Sharding: If your Kafka topics have multiple partitions, make sure that the connector can effectively map Kafka topics and their partitions to Snowflake tables for optimal performance.

- Data Serialization & Formats: Verify that the connector supports the data serialization formats used in your Kafka messages, like JSON, Avro, or others. Make sure it can efficiently deserialize and process the data.

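- Connector Source & Sink Modes: In Kafka Connect terms, a sink connector streams data from Kafka into an external system, while a source connector streams data from an external system into Kafka. The Kafka Snowflake Connector is a sink connector, so confirm that loading data from Kafka into Snowflake is the direction you need.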
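
The Snowflake connector for Kafka runs as a Kafka Connect sink and is configured through connector properties. The sketch below builds such a configuration as a Python dictionary that could be posted to the Kafka Connect REST API. The property names and class names are the connector's own; the helper function, topic names, table names, and credentials are placeholders assumed for this example.

```python
import json


def snowflake_sink_config(name, topic_to_table, account_url, user, private_key,
                          database, schema, tasks_max=4):
    """Build a Kafka Connect configuration for the Snowflake sink connector."""
    return {
        "name": name,
        "config": {
            "connector.class": "com.snowflake.kafka.connector.SnowflakeSinkConnector",
            "tasks.max": str(tasks_max),
            "topics": ",".join(topic_to_table),
            # Explicit topic-to-table mapping, formatted as topic:table pairs.
            "snowflake.topic2table.map": ",".join(
                f"{topic}:{table}" for topic, table in topic_to_table.items()
            ),
            "snowflake.url.name": account_url,
            "snowflake.user.name": user,
            "snowflake.private.key": private_key,
            "snowflake.database.name": database,
            "snowflake.schema.name": schema,
            "key.converter": "org.apache.kafka.connect.storage.StringConverter",
            "value.converter": "com.snowflake.kafka.connector.records.SnowflakeJsonConverter",
        },
    }


# Placeholder values throughout; the private key would come from a secret store.
cfg = snowflake_sink_config(
    "snowflake-sink",
    {"orders": "ORDERS_RAW", "clicks": "CLICKS_RAW"},
    "myorg-myaccount.snowflakecomputing.com:443",
    "kafka_loader",
    "<private-key>",
    "ANALYTICS",
    "KAFKA",
)
print(json.dumps(cfg, indent=2))
```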

These are some of the most popular Snowflake connectors out there. Before we explore the different Snowflake drivers, it’s worth mentioning data pipeline tools that simplify data management and integration when you’re looking for a scalable yet cost-effective solution.

Integrating DataOps Platforms Like Estuary Into Your Snowflake Data Strategy

If you're considering the best Snowflake connectors and drivers, using a no-code, data pipeline solution like Estuary Flow can dramatically improve your data operations. Let's look at how Estuary’s reliable data management solution complements Snowflake's tools.

Facilitating Large Volume Data Operations

Estuary Flow can manage high volumes of data. The synergy between Estuary and Snowflake ensures that large-scale data tasks become more manageable and improve your operational efficiency.

Streamlining Data Integration

Flow’s compatibility with Snowflake's connectors and drivers makes it easy to integrate data from different sources. This efficient coordination ensures a seamless data flow between Snowflake and other platforms and helps avoid the common challenges associated with data integration.

Automating & Monitoring Data Management

Estuary Flow automates data pipelines, tracks data lineage, and provides real-time data monitoring. These features work in tandem with Snowflake's connectors and drivers to make data management more straightforward and less time-consuming.

Boosting Data Security

Data security is a crucial concern in any data strategy and this is another area where Estuary shines. Estuary provides an added layer of protection against unauthorized access that complements Snowflake's security measures to ensure the safety and integrity of your data.

Choosing the Right Snowflake Driver: Optimizing Your Application Connections

Now, let’s explore the various Snowflake drivers to help you make the right choice.

#1. Go Snowflake Driver

The Go Snowflake Driver is a software library that provides a programmatic interface between Go applications and Snowflake databases. This driver allows Go developers to establish connections to Snowflake, execute queries, retrieve results, and manage various data-related operations without handling low-level communication or database protocols themselves.

Why Pick Go Snowflake Driver?

If you are developing applications using the Go programming language and need to interact with Snowflake databases, the Go Snowflake Driver enables this interaction. A dedicated driver optimized for Go can perform better than generic drivers or interfaces. However, it has historically supported a narrower set of operations than some other clients, for example lacking PUT and GET in older versions, so check what your driver version supports.

How to Choose the Go Snowflake Driver?

- SQL Execution: Look for support for parameterized queries, batch execution, and handling query results.

- Performance Optimization: Evaluate if the driver offers any performance optimization options like query optimization, fetching strategies, and data compression.

- Data Type Support: Make sure that the driver supports Snowflake's data types and allows for seamless conversion between Go data types and Snowflake data types.

- Authentication Methods: Review the authentication methods that the driver supports. Choose the one that is in sync with your organization's security policies and infrastructure.

- Connection Pooling: Evaluate the driver's connection pooling capabilities. Efficient connection pooling reuses established connections to improve the performance of your Go applications.

- Error Handling & Logging: Consider if the driver provides clear error messages, logging options, and effective error-handling mechanisms that can simplify troubleshooting.

#2. JDBC Driver

Snowflake's JDBC driver facilitates communication between Java applications and Snowflake databases. It implements the JDBC API and provides a standardized way for Java programs to connect to Snowflake and perform various database tasks.

The driver translates Java calls into Snowflake-specific communication protocols to ensure seamless interaction between Java applications and Snowflake's data warehousing services.

Why Pick JDBC Driver?

When you're developing Java applications that need to interact with Snowflake databases, the Snowflake JDBC driver lets you establish the connection and perform data-related tasks. For applications that need to synchronize or transfer data between Snowflake and other systems, the JDBC driver also supports those data integration tasks.

How to Choose the JDBC Driver?

- Snowflake Compatibility: Make sure that the version of the Snowflake JDBC driver you're considering is compatible with the version of Snowflake you're using.

- Performance & Efficiency: Evaluate the driver's performance characteristics, including connection pooling, data fetch strategies, and query execution optimizations.

- Feature Support: Check if the driver supports advanced features that Snowflake provides like Snowflake-specific data types, query optimization, and features related to Snowflake's architecture.

- Driver Type: Snowflake offers a Type 4 JDBC driver which is a pure Java driver that communicates directly with Snowflake's service over the network. This type is recommended for most scenarios because of its performance, simplicity, and platform independence.
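
JDBC clients reach Snowflake through a connection URL of the form `jdbc:snowflake://<account>.snowflakecomputing.com/?<parameters>`. As a small illustration, kept in Python for consistency with the other examples in this guide, the helper below assembles such a URL; the function name and the account, database, and warehouse values are placeholders, while `db`, `schema`, and `warehouse` are connection parameters the driver accepts.

```python
from urllib.parse import urlencode


def snowflake_jdbc_url(account, **params):
    """Build a Snowflake JDBC URL; keyword arguments become URL parameters."""
    base = f"jdbc:snowflake://{account}.snowflakecomputing.com/"
    return f"{base}?{urlencode(params)}" if params else base


# Placeholder identifiers; credentials are usually passed separately as
# driver properties rather than embedded in the URL.
url = snowflake_jdbc_url("myorg-myaccount", db="ANALYTICS", schema="PUBLIC", warehouse="REPORT_WH")
print(url)
```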

#3. .NET Driver

Snowflake's .NET driver serves as a bridge between .NET applications and Snowflake databases. It implements the necessary functionality to connect to Snowflake using the .NET programming framework. The driver handles the translation of .NET calls into Snowflake-specific communication protocols for smooth data exchange.

Why Pick .NET Driver?

If you're developing applications using the .NET framework (C#, VB.NET, etc.) and need to work with Snowflake databases, the .NET driver is crucial for establishing connections and performing data-related tasks. It provides secure connections to Snowflake so that the data transferred between your .NET application and Snowflake remains encrypted and secure.

How to Choose the .NET Driver?

- Connection Resilience: Evaluate how well the driver handles connection failures, network interruptions, and reconnects to ensure the stability of your application.

- Data Type Mapping: Check if the driver provides an accurate mapping between Snowflake's data types and .NET data types to prevent data loss or conversion issues.

- Concurrency & Multithreading: If your application requires concurrent database operations or multithreading, make sure that the driver is capable of handling these scenarios effectively.

- Customization & Extensibility: Assess whether the driver allows for customization and extensibility, like custom connection pooling settings, logging mechanisms, or query execution strategies.

- Performance Testing: Conduct thorough performance testing using your application's typical workload to ensure that the driver can handle the expected data volumes and transaction rates efficiently.

- Deployment & Maintenance: Consider the ease of deployment and maintenance of the driver within your application infrastructure. This includes version upgrades, configuration changes, and compatibility with your deployment tools.

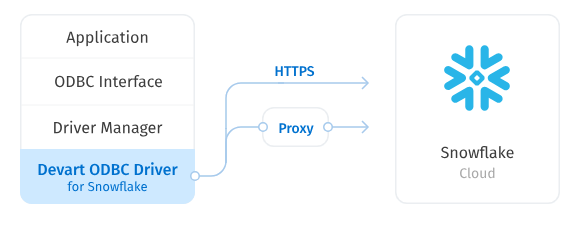

#4. ODBC Driver

Snowflake's ODBC driver allows applications to connect to the Snowflake cloud data platform using the ODBC standard. With this driver, you can develop and deploy applications that interact with Snowflake, retrieve data from Snowflake databases, and perform various operations like querying, updating, and managing data.

Why Pick ODBC Driver?

If you're building custom applications, business intelligence tools, or other software that needs to access and work with data stored in Snowflake, you would use the ODBC driver to establish the connection. You can also use it to connect your ETL scripts to Snowflake when building custom ETL processes that move data between other data sources and Snowflake.

How to Choose the ODBC Driver?

- Driver Manager Compatibility: Consider whether the ODBC driver is compatible with popular ODBC driver managers like unixODBC and iODBC.

- Compatibility with ODBC Versions: Ensure that the Snowflake ODBC driver is compatible with the version of the ODBC standard your application is using.

- Performance & Efficiency: Evaluate the driver's performance characteristics, including connection pooling, data fetch strategies, and query execution optimizations.

- Documentation & Resources: Evaluate the quality of Snowflake's documentation for the ODBC driver. Well-documented features, examples, and usage guidelines can simplify the integration process.

- Feature Support: Check if the ODBC driver supports advanced features that Snowflake provides, like Snowflake-specific data types, query optimization, and features related to Snowflake's architecture.
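
For a sense of what an ODBC connection looks like from application code, here is a hedged sketch that uses the third-party `pyodbc` package. The driver name `SnowflakeDSIIDriver` is the name the Snowflake ODBC driver is commonly registered under, but it depends on how the driver was installed on your machine; the helper functions and all credential values are assumptions of this example.

```python
def odbc_connection_string(account, user, password, database=None, warehouse=None,
                           driver="SnowflakeDSIIDriver"):
    """Assemble a DSN-less ODBC connection string for Snowflake."""
    parts = {
        "Driver": "{" + driver + "}",
        "Server": f"{account}.snowflakecomputing.com",
        "UID": user,
        "PWD": password,
    }
    if database:
        parts["Database"] = database
    if warehouse:
        parts["Warehouse"] = warehouse
    # Values containing ';' would need extra escaping, which this sketch skips.
    return ";".join(f"{key}={value}" for key, value in parts.items())


def query_one(conn_str, sql):
    """Open a connection, run one statement, and return the first row."""
    import pyodbc  # third-party; imported lazily

    conn = pyodbc.connect(conn_str)
    try:
        return conn.cursor().execute(sql).fetchone()
    finally:
        conn.close()


# Placeholder credentials; only the string is built here.
conn_str = odbc_connection_string("myorg-myaccount", "report_user", "secret", database="ANALYTICS")
print(conn_str)
```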

#5. Node.js Driver

This driver provides a set of functions, methods, and tools that make it easier to establish connections, execute queries, and manage data within Snowflake databases using Node.js applications. The Snowflake Node.js driver leverages the capabilities of Snowflake's cloud data warehouse and is tailored to the asynchronous and event-driven nature of Node.js.

Why Pick Node.js Driver?

Node.js is known for its asynchronous and event-driven architecture. The Snowflake Node.js driver uses these features so you can handle multiple concurrent requests efficiently. This helps in real-time processing, web applications, and other scenarios where responsiveness and concurrency matter.

How to Choose the Node.js Driver?

- Official Snowflake Driver: Snowflake publishes one official Node.js driver, distributed on npm as the snowflake-sdk package:

- Snowflake SDK: The Snowflake SDK for Node.js is a native JavaScript driver that offers a direct connection to Snowflake. It is suitable for applications that require deep integration with Snowflake and need access to its features.

- Snowflake Data Marketplace: There is no separate Node.js driver for the Snowflake Data Marketplace. Marketplace data appears as shared databases in your account, so if your primary use case is consuming external data sources from the Marketplace, you query them through the same SDK.

- Performance: Consider the performance requirements of your application. The Snowflake SDK offers options to tune performance, such as streaming large result sets instead of buffering them in memory.

- Features & Capabilities: The Snowflake SDK is designed for general-purpose connectivity to Snowflake. Evaluate whether its features align with your project requirements.

Final Thoughts

The strength of Snowflake lies not just in its architecture but also in the data connectors and drivers that bridge the gap between your data and the platform. Considering the speed at which data moves nowadays, choosing connectors and drivers that can keep up means you get answers quickly, a crucial factor whether you're running a store online or taking care of patients in a hospital.

While Snowflake is already a powerful platform on its own, the potential it offers can be expanded even further by integrating more tools. Estuary Flow is one such tool that acts as a catalyst for process simplification and operational enhancement. Flow is designed to work seamlessly with Snowflake to bridge gaps and extend functionalities.

If you're keen on maximizing your Snowflake experience, Estuary Flow is worth exploring. Sign up for free or connect with our team to learn more about it.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
