Today, businesses are striving to efficiently leverage vast amounts of data for valuable insights. To achieve this, they centralize data in a data warehouse to perform complex analytics. With expanding and diverse data sources, it is crucial to move data between platforms securely. SFTP and FTP are two commonly used reliable methods for moving data across networks. Connecting SFTP/FTP to Redshift will help businesses centralize and analyze their data better.

The robust architecture and real-time data analysis capabilities of Redshift will help unlock the full potential of critical data. Let’s explore the different methods to move data from SFTP/FTP to Redshift.

SFTP/FTP: An Overview

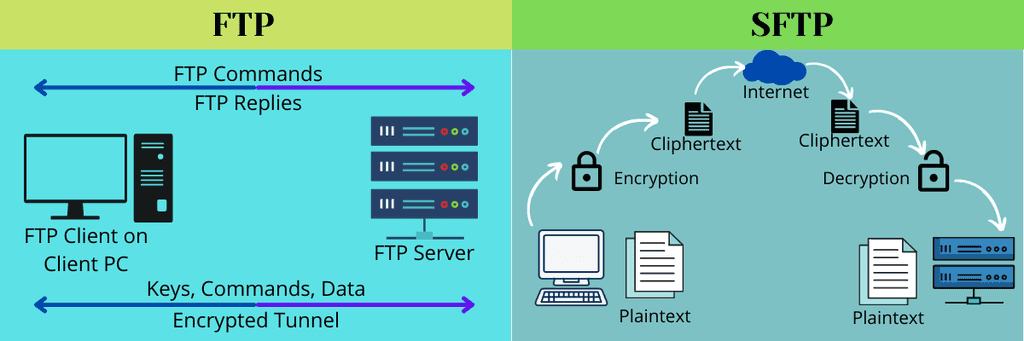

FTP (File Transfer Protocol) is one of the most widely used protocols for uploading or downloading files from a server. It operates on the client-server model, where a client initiates a connection with an FTP server for file transfers. Any remote host running an FTP can connect to a local host machine through an Internet connection, allowing for seamless file transfer.

Data is transmitted in plain text, including usernames and passwords. As a result, there is no encryption for data transfers, making it a less secure option, especially for sensitive data.

On the other hand, SFTP (Secure File Transfer Protocol) is based on the SSH (Secure Shell) protocol, providing secure and encrypted data transfer over the network. It encrypts data during transmission, ensuring even the most sensitive data is secure from potential threats.

Amazon Redshift: An Overview

Amazon Redshift is a cloud-based data warehousing service provided by Amazon Web Services (AWS). Launched in 2012, Redshift has become a popular choice for organizations seeking a high-performance and scalable data warehousing solution. It is a fully-managed service, with AWS handling all the routine maintenance tasks, such as backups, software updates, and hardware provisioning.

Redshift is designed to handle petabyte-scale data analytics workloads with reduced query execution time. It accomplishes this through the following features:

- Distributed Architecture: Redshift has a distributed architecture, separating the storage and compute nodes. This is beneficial for businesses to scale independently based on the requirements.

- Columnar Storage: Redshift uses a columnar storage format to store its data, organizing data in columns rather than in rows. For a given query, Redshift will only read the necessary columns. It optimizes the data scanning process to improve query performance.

- Massively Parallel Processing: Redshift architecture involves multiple nodes and slices with its distributed architecture. Queries are executed across these nodes in parallel, allowing for faster query processing even on large datasets.

Methods to Move Data from SFTP/FTP to Redshift

There are two different methods that you can use to move data from SFTP/FTP to Amazon Redshift:

- Method #1: Using custom code

- Method #2: Using no-code tools like Estuary Flow

Method #1: Using Custom Code to Move Data from SFTP/FTP to Amazon Redshift

To load data into Redshift, you need to first load it into Amazon S3. Then, you can use the Redshift COPY command to move data from S3 to Redshift.

Here are the steps involved in loading data from SFTP/FTP to Redshift:

Step 1: Download SFTP/FTP to a Local Server

Download the FTP/SFTP data to a local system with the CURL utility:

plaintextcurl -u user:password 'ftp://<ftp_url>/data/products.csv' -o ~/Downloads/products.csvIf the server supports SFTP, you can change the URL as follows:

plaintextcurl -u user:password 'sftp://<ftp_url>/data/products.csv' -o ~/Downloads/products.csvStep 2: Use AWS CLI to Load the File to Amazon S3

After the file is downloaded to your local machine, use the AWS CLI to load it into S3. You must install and configure AWS CLI before you start loading the data. Then, use the following command:

plaintextaws s3 cp products.csv s3://my-data-bucket/products/Step 3: Connect to Redshift

Before loading data into Redshift, you must first connect to the Redshift instance with this command:

plaintextpsql -h mycluster.12345672922.us-west-2.redshift.amazonaws.com -U user -d dbname -p 5439When you’re prompted for the password, enter the password for the specific user.

Step 4: Load the Data to Redshift

Ensure you have a table in Redshift to load the data and that the data file schema is in line with that of the Redshift table.

Use the Redshift COPY command with CLI to load data from the S3 bucket to Redshift:

plaintextcopy products from 's3://my-data-bucket/products/products.csv' credentials 'aws_access_key_id=<AWS-Access-Key-ID>;aws_secret_access_key=<AWS-Secret-Access-Key>' csv;For more information on the Redshift COPY command, refer to the AWS documentation.

This completes the process of loading data from SFTP/FTP to Redshift. There are some limitations associated with this method, including:

- The custom code method is suitable only for a first-time bulk load. By default, the COPY command appends data to the existing rows. If you’re loading incremental data, you need to write additional logic.

- The implicit data type mapping of the Redshift COPY command may result in inaccurate data in Redshift. For example, if the target column’s data type specification is an integer, Redshift converts a decimal value to an integer by automatically truncating the value.

Method #2: Using No-Code Alternatives like Estuary Flow to Integrate SFTP to Redshift

To overcome the drawbacks associated with the custom code method, consider using no-code ETL tools.

Estuary Flow is one such SaaS tool that simplifies the process of moving data from SFTP/FTP to Redshift. Its easy-to-use interface and a range of readily-available built-in connectors allow you to set up a data integration pipeline in a few clicks. Once deployed, the fully-managed pipeline will operate in real time without any manual effort.

To start using Estuary Flow, log in to your Estuary account. If you don’t have one yet, register for a free account. Here are the steps to move data from SFTP/FTP to Redshift using Estuary Flow:

Step 1: Configure SFTP/FTP as the Data Source

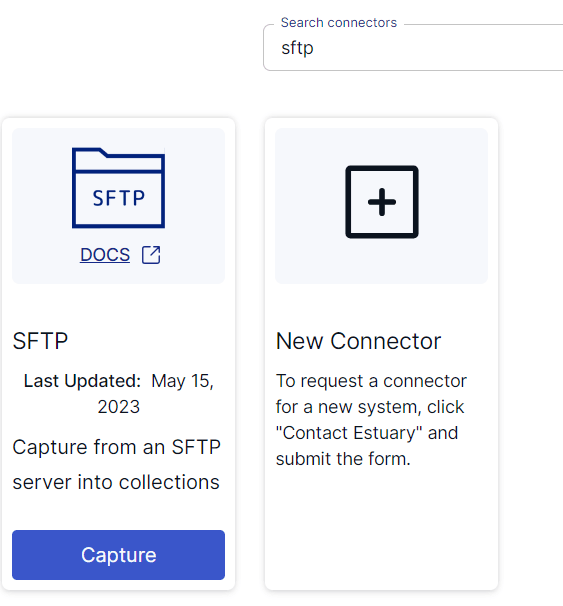

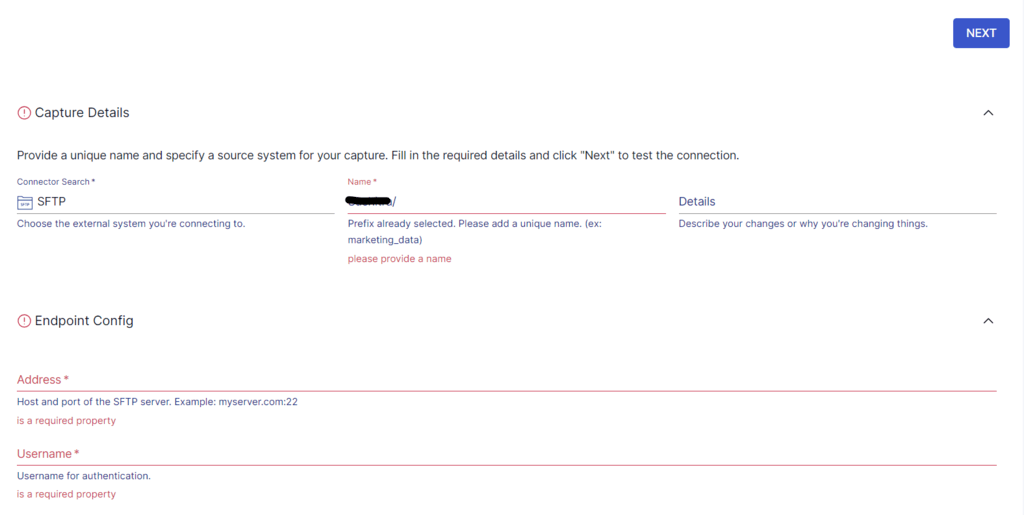

After logging in to your Estuary account, you will be redirected to the Estuary dashboard. Click on Sources on the left-side pane of the dashboard. Then, click on the + NEW CAPTURE button. Scroll down and look for the SFTP connector or search for SFTP in the Search connectors box. Once you locate the connector, click on its Capture button.

Prerequisite: An SFTP server that can accept connections from the Estuary IP address 34.121.207.128, with password authentication.

On the SFTP connector page, fill in the required details like a Name for the connector, Address, Username, and Password. Click on the NEXT button and then click on Save and Publish. The connector will successfully capture data from the SFTP server.

Step 2: Configure Redshift as the Destination

The next step is to set up the destination end of your pipeline. To do this, you can click on Materialize Connections in the pop-up that appears after a successful capture. Alternatively, you can navigate to the Estuary dashboard and click on Destinations in the left-side pane.

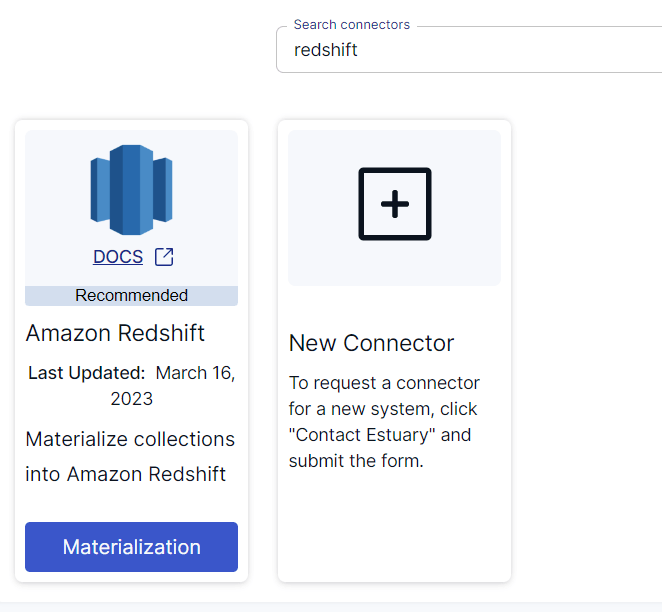

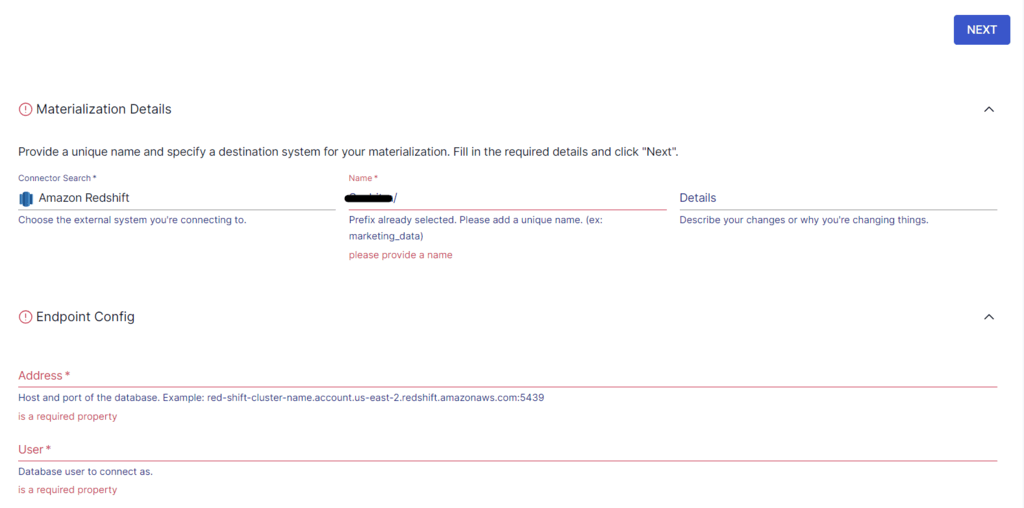

Then, click on + NEW MATERIALIZATION, and search for Redshift in the Search connectors. The search results will show the Amazon Redshift connector. Click on the Materialization button of the connector to navigate to the Amazon Redshift connector page.

Before proceeding, you must set up Redshift to connect with Flow. Learn how you can do this here.

On the connector page, fill in the required information, including a Name for the connector, User, Password, Address, and S3 bucket-related information. After specifying the details, click on NEXT.

If the data captured from SFTP wasn’t filled in automatically, you can add the data from the Source Collections section. Then, click on Save and Publish.

The connector will materialize Flow collections into tables in an Amazon Redshift database, using an S3 bucket as a temporary staging area for storage and retrieval.

For more information on the integration process, refer to the Estuary documentation

Conclusion

Moving data from SFTP/FTP to Redshift can provide real-time insights for informed decision-making, helping your business gain a competitive edge.

The two methods to perform this integration are using custom code and using no-code ETL tools like Estuary Flow. However, the custom code method can be time-consuming, resource-intensive, and error-prone. This is where no-code tools stand out. By creating a real-time data pipeline between SFTP/FTP and Redshift, SaaS alternatives like Estuary Flow enable you to channel valuable resources for innovation and growth.

Estuary Flow provides a range of connectors to help you easily establish a real-time connection between different platforms. Register for an Estuary account to start building data pipelines.

Here are some other integrations involving Redshift as the data destination:

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles