Migrating data from Amazon Redshift, a fully managed service, to Google BigQuery, a serverless data warehouse, presents a compelling opportunity with numerous advantages. Leveraging BigQuery’s serverless architecture can lead to improved scalability, performance, and accessibility.

The migration process begins with assessing your existing data architecture within Redshift, creating a comprehensive migration strategy, and executing an effortless transfer of your data to BigQuery.

In this guide, we will explore the most effective methods to achieve data migration from Amazon Redshift to Google BigQuery.

If you're familiar with both platforms and want to skip directly to the methods, click here.

Amazon Redshift — The Source

Amazon Redshift is a cloud-based, fully managed solution offered by Amazon Web Services (AWS). It is designed to handle huge volumes of data and perform complex queries with high performance and scalability. With its Massively Parallel Processing (MPP) architecture and columnar storage, Redshift delivers high-performance query execution, enabling rapid analysis of large datasets.

Top features of Amazon Redshift:

- Automated Allocation: Automated allocation is the capability of managing and optimizing resources within the Redshift cluster. This feature automates the allocation of resources such as CPU and memory across the cluster’s nodes based on workflow demands and performance requirements.

- Column-based Storage: Redshift stores data in columns rather than rows, which optimizes data retrieval for analytical purposes and improves performance, especially when dealing with massive data warehouse workloads.

- Machine Learning for Optimal Performance: Amazon Redshift provides advanced ML capabilities that contribute to high performance and scalability. Using sophisticated algorithms, it predicts incoming queries based on specific factors, enabling the prioritization of critical workloads.

- Parallel Processing: Redshift splits extensive data jobs into smaller tasks, distributing them across multiple processors. This methodology ensures that various tasks are handled simultaneously and efficiently.

Google BigQuery — The Destination

Google BigQuery is a serverless cloud data warehouse and a fully managed analytical platform provided by Google Cloud Platform (GCP). With its columnar storage and SQL compatibility, you can efficiently execute complex queries and aggregations by eliminating the typical delays encountered in traditional databases.

For comprehensive analytics, BigQuery supports various features, such as geospatial analysis, machine learning, window function, and BigQuery ML. This makes it an excellent solution for large-scale data analysis and insights.

Key features of Google BigQuery include:

- Scalability and Performance: By executing queries in the same region where the data is stored, BigQuery facilitates better performance and generates faster insights. It is also designed to scale data efficiently and handle large workloads.

- Machine Learning models: You can use simple SQL queries to execute BigQuery ML models such as Linear regression, Logistic regression, Matrix factorization, Time series, and more.

- Omni Service: BigQuery Omni service is a serverless service that does not need to provision resources or manage clusters. It allows you to execute analytical queries on foreign platforms, making it valuable for cross-cloud analysis, including AWS and Microsoft Azure.

- Real-time Data Processing: Big Query enables real-time data processing, facilitating up-to-date insights for applications, especially those dealing with dynamic and fast-changing datasets.

Methods to Migrate Data from Redshift to BigQuery

This section explores two effective methods for connecting Redshift to BigQuery.

- Method 1: Using Estuary Flow to Migrate Data from Redshift to BigQuery

- Method 2: Manual Data Migration from Redshift to BigQuery

Method 1: Using Estuary Flow to Migrate Data from Redshift to BigQuery

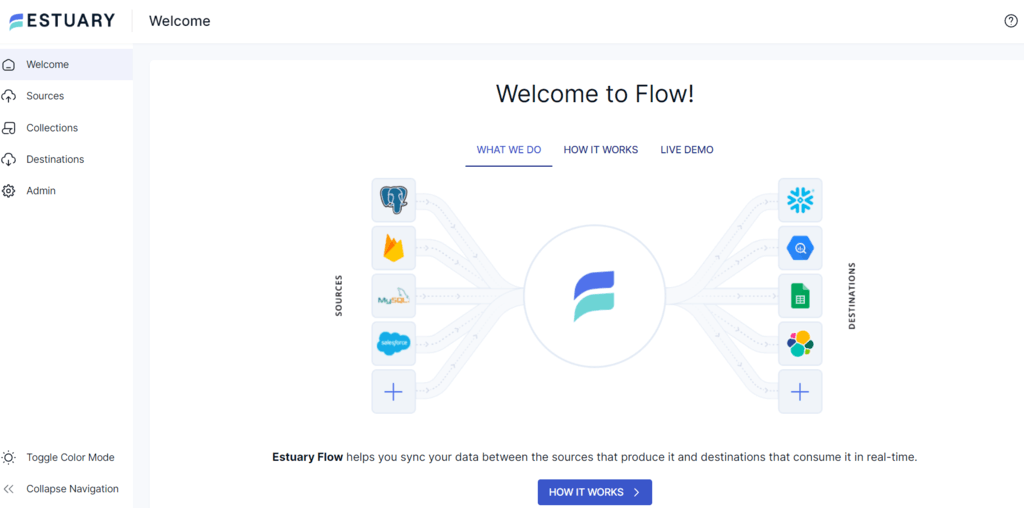

Reliable data pipeline solutions like Estuary Flow offer enhanced scalability and integration capabilities. Its intuitive user interface and 200+ readily available connectors streamline the setup process, facilitating effortless data migration. Flow can efficiently handle complex workflows, synchronization, and achieve near real-time data transfer between various sources and destinations.

Benefits of Using Estuary Flow:

- Scalability: Estuary Flow is designed to handle massive data volumes to scale with growing needs. Whether handling small datasets or managing complex data pipelines, it offers scalability to support evolving requirements.

- Streamlined Workflow: Estuary Flow's streamlined workflow facilitates the seamless coordination of various data sources, providing real-time monitoring and enhancing efficiency. This streamlined approach reduces errors and enables you to focus on deriving meaningful insights from your data.

- Change Data Capture (CDC): CDC pipelines capture changes to data as they occur, which lets you access the most frequent data for analysis and decision-making. It also enhances data integration and synchronization processes to improve accuracy.

Now, let’s go through the step-by-step process to migrate Redshift to BigQuery.

Prerequisites

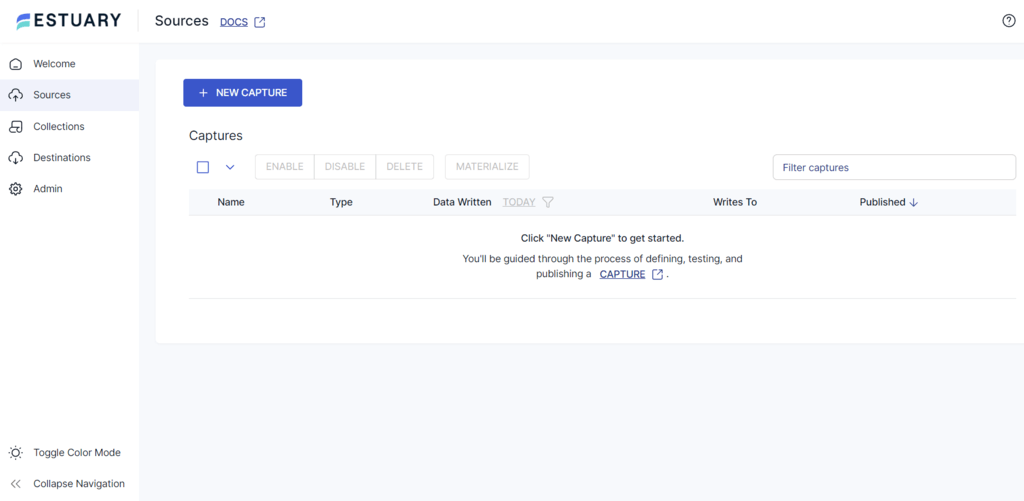

Step 1: Connect to the Redshift Data Source

- Sign in to your Estuary account to access the dashboard.

- To configure Redshift as a source, click the Sources button on the left navigation pane of the dashboard. Then, click the + NEW CAPTURE button at the top of the Sources page.

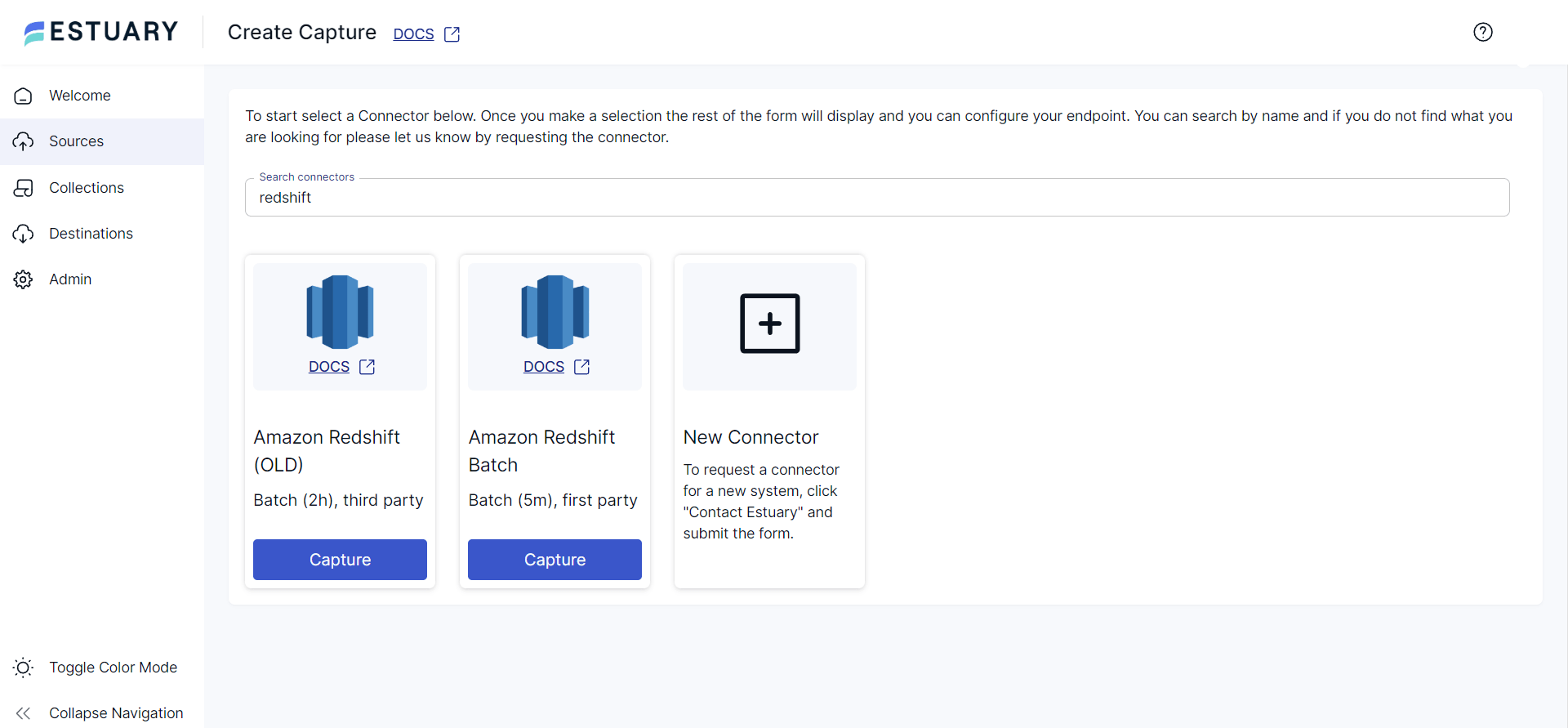

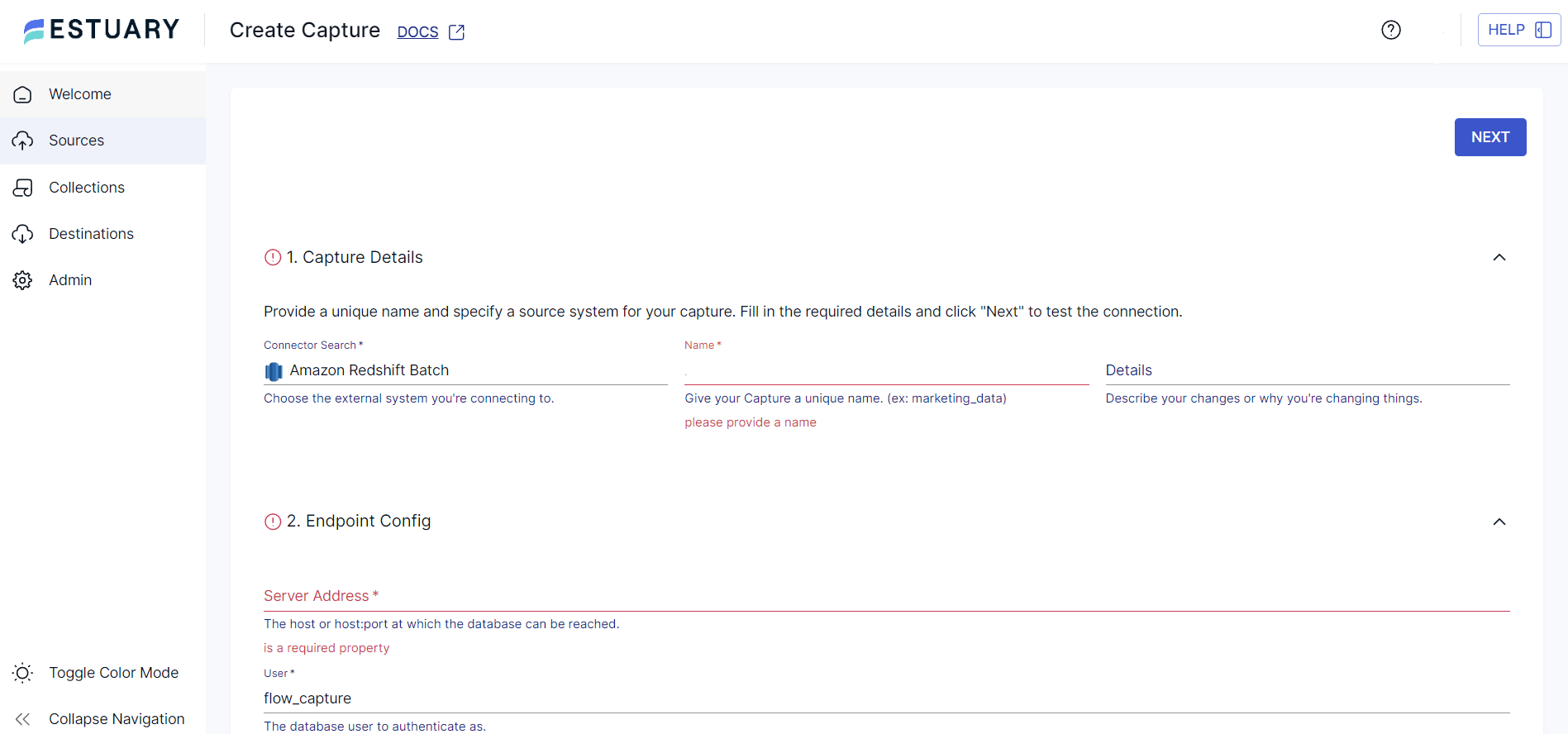

- Type Redshift in the Search connectors field; choose Amazon Redshift Batch from the search results and click its Capture button.

- On the Create Capture page, fill in the details like Name, Server Address, User, and Password.

- Click on NEXT > SAVE AND PUBLISH to configure the Redshift connector as the source end of the data integration pipeline. The connector will capture and convert your Amazon Redshift cluster data into Flow collections.

Step 2: Connect BigQuery as the Destination

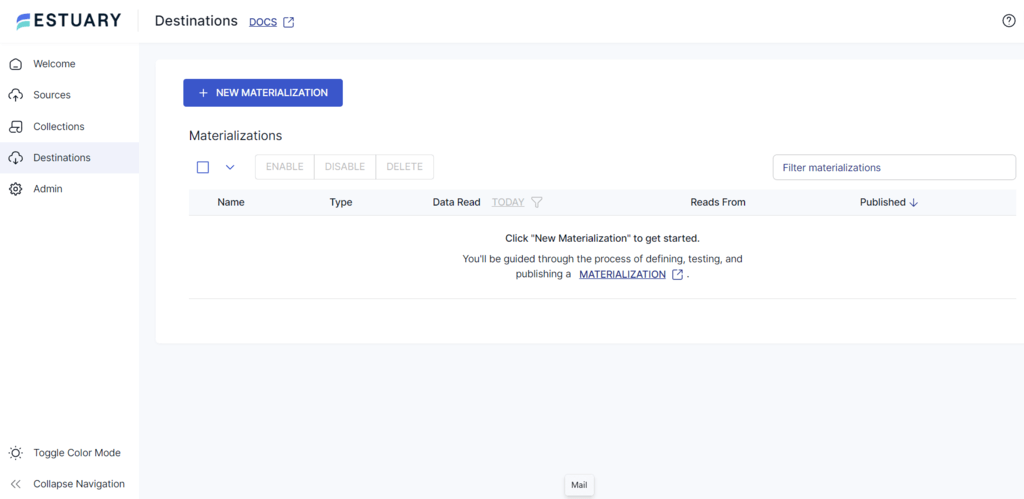

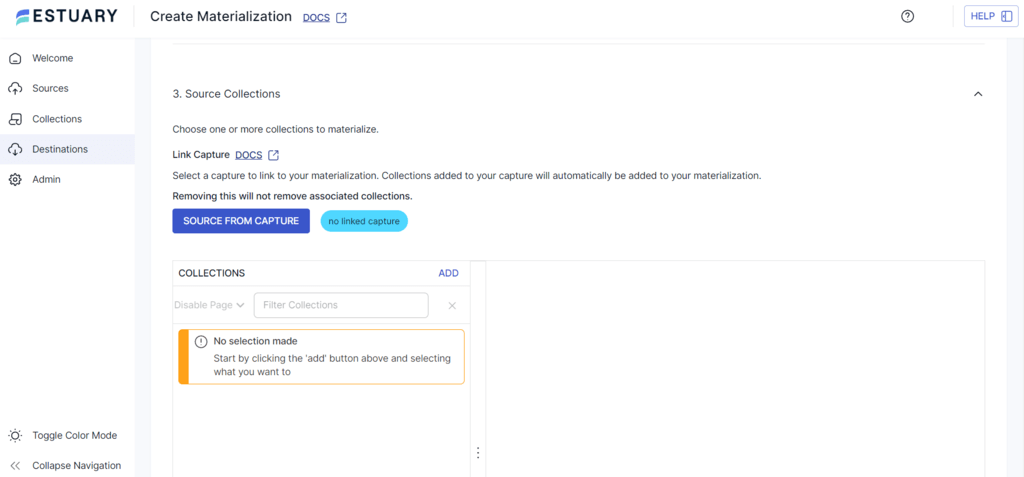

- Click the Destinations option on the left navigation pane on the Estuary dashboard.

- On the Destinations page, click on the + NEW MATERIALIZATION button.

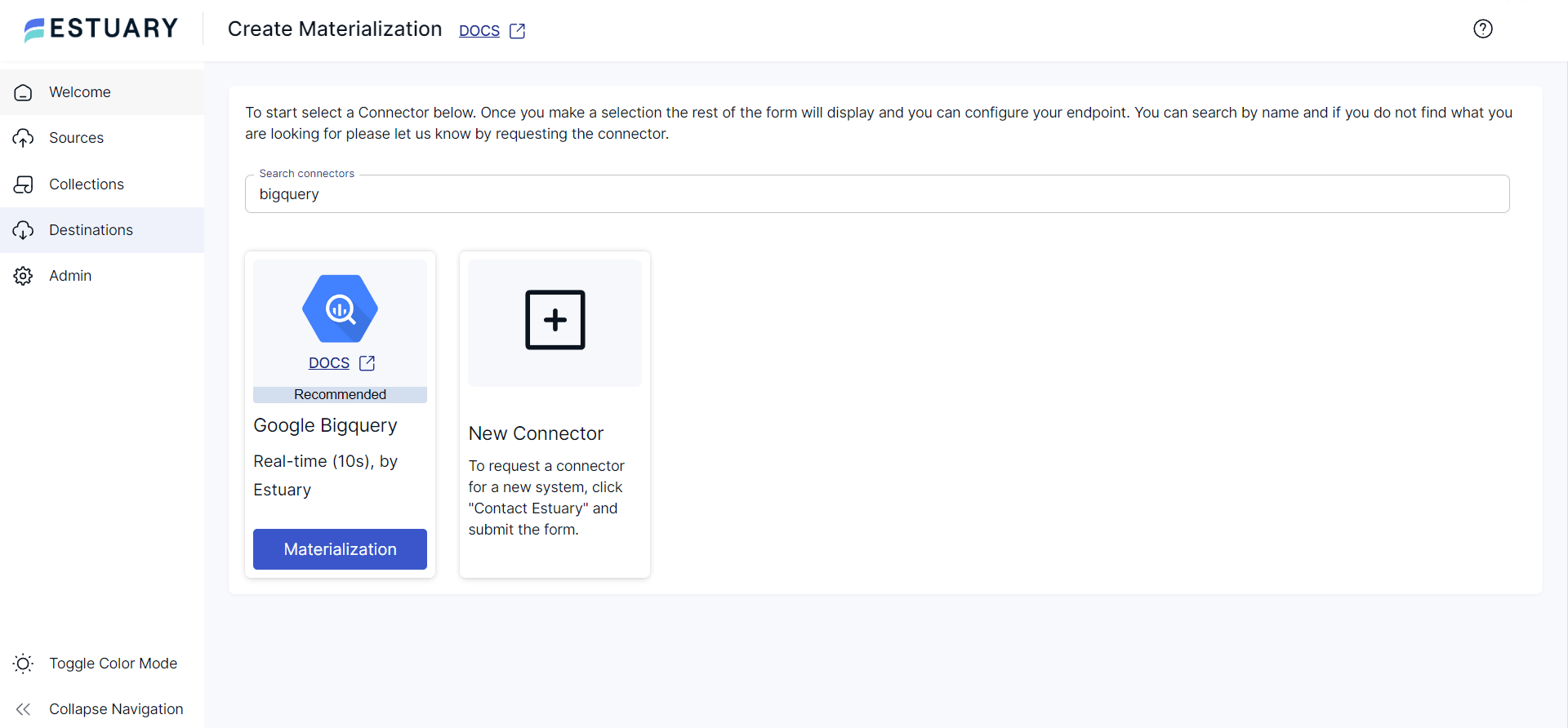

- On the Create Materialization page, search for the BigQuery connector. From the options you see in the search results, click on the Google BigQuery Materialization button.

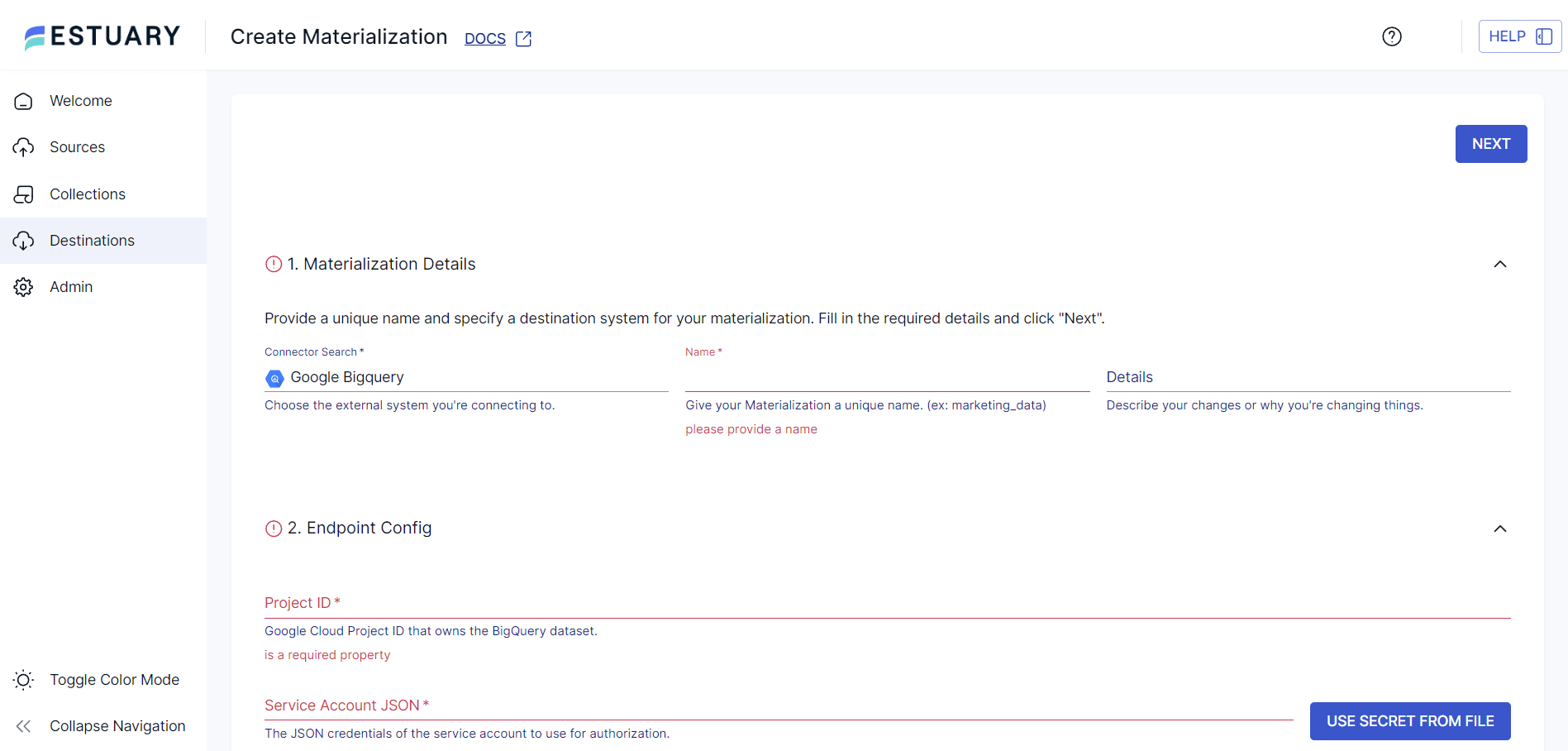

- You will now be redirected to the BigQuery Create Materialization page. Fill in the details such as Name, Project ID, Service Account JSON, Region, Dataset, and Bucket.

- You can also select collections to materialize by clicking the SOURCE FROM CAPTURE button.

- Finally, click NEXT > SAVE AND PUBLISH to complete the BigQuery destination configuration. The connector will materialize Flow collections into Google BigQuery tables.

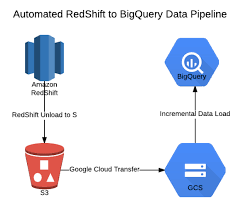

Method 2: Manual Data Migration from Redshift to BigQuery

Manual migration involves exporting data from Redshift and importing it into BigQuery. While more complex, it offers full control over the migration process.

- For Google BigQuery, the necessary permissions include:

- Permission to create the transfer: bigquery.transfers.update

- Permissions for the target dataset: bigquery.datasets.get and bigquery.datasets.update

These permissions fall under bigquery.admin predefined within the IAM (Identity and Access Management) role. Learn more about IAM roles here.

- For Amazon Redshift transfer, ensure the AWS access key pair is acquired. Learn more about how to acquire the access key pair here.

To proceed further with the migration, follow these steps:

- Sign in to your Google Cloud account and navigate the Google Cloud Console. Create a new project or use the existing one using the SELECT PROJECT or CREATE PROJECT.

- Enable the BigQuery Data Transfer Service API by clicking the Enable button.

- Next, create a BigQuery dataset to store data.

Note: For the Redshift cluster, you must allow the specific IP addresses associated with your dataset’s location. A comprehensive list of these IP addresses can be found here.

The migration process can be done as follows:

Step 1: Access the BigQuery page within the Google Cloud Console.

Step 2: Click Data transfers within the Analysis section on the left.

Step 3: Click CREATE A TRANSFER.

Step 4: Choose Amazon S3 as the Source and provide a name for the migration in the Transfer config name field. For Schedule options, choose between Start now and Start at set time.

Step 5: Specify the data set ID in the Destination settings box and proceed by entering the Data source details like

- JDBC connection URL for Amazon Redshift

- Username and Password for your database

- Access key ID and secret access key

- Amazon S3 URI

- Amazon Redshift Schema that you are migrating

- Mention a table name pattern to match the schema

- Leave VPC and reserved IP range blank

Step 6 (Optional): Enable notifications to receive email alerts in case of transfer failures. Click Save to continue.

Step 7: Upon successful execution, the Google Cloud Console will provide all the transfer setup details, including the Resource name.

Limitations of Using Custom Data Migration Model

- The custom data migration process requires a thorough understanding of Redshift and BigQuery and their migration strategies. If you’re a new user of either of these platforms, there is an increased chance of errors.

- This process is time-consuming due to the delays involved in manually extracting data from Redshift and loading it into BigQuery. As a result, it isn’t suitable for real-time integration or data analysis.

Use Cases for Migrating from Redshift to BigQuery

Migrating from Redshift to BigQuery can offer significant advantages for businesses across various industries and use cases. Some of the most common scenarios where this migration proves beneficial include:

- Cost Optimization: BigQuery's serverless pricing model eliminates the need for upfront infrastructure investment and allows you to pay only for the resources you use. This is particularly advantageous for businesses with fluctuating workloads or those looking to reduce their data warehousing costs.

- Scalability: BigQuery's flexible architecture can easily handle massive data volumes and scale effortlessly to accommodate growing needs. This makes it an ideal choice for businesses experiencing rapid data growth or those dealing with large and complex datasets.

- Performance Improvement: BigQuery's optimized query engine and columnar storage enable faster and more efficient data analysis, allowing businesses to gain insights and make data-driven decisions more quickly.

- Advanced Analytics: BigQuery offers built-in machine learning and geospatial analysis capabilities, empowering businesses to uncover deeper insights and unlock new opportunities for innovation.

- Cloud Consolidation: Migrating to BigQuery allows businesses to consolidate their data infrastructure within Google Cloud Platform, simplifying management, reducing complexity, and enhancing integration with other Google services.

Conclusion

To migrate data from Amazon Redshift to Google BigQuery, you can either use Estuary Flow or go about it the manual way. Estuary Flow provides a user-friendly interface and enhanced automation capabilities to simplify the entire process. It reduces operational complexities by scaling, automatic partitioning, and schema management.

Manual data migration is also a reliable method, but it is a complex process that demands thorough planning and expertise in both Redshift and BigQuery systems. Understanding of the source and destination systems is required to ensure seamless data transfer with minimal operational errors.

Are you looking to migrate your data to a different platform? Estuary Flow offers a comprehensive solution for all your data integration needs. Get started by signing up for a free account or logging in today!

If you have any questions or need assistance along the way, our community Slack channel is always open and ready to help you succeed!

Related Guide to Load Redshift Data to Other Platforms:

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles