The impact of Real-Time Data Replication goes beyond corporate boardrooms and touches the core of our daily lives – from eCommerce transactions that occur in the blink of an eye to vital healthcare information that saves lives within milliseconds. Data replication acts as a safety net – promoting high data availability and ensuring smooth operations even if the primary system experiences an outage.

Unplanned downtime incidents can strike at any time, hitting businesses where it hurts the most.

Believe it or not, around 4 out of 5 companies have gone through at least one of these incidents in a 3-year period, causing immense data loss. This makes real-time data replication all the more important.

But do you know what is even more important? Knowing the right strategies and tools to implement real-time data replication. In this article, we will take you through 5 easy strategies and introduce this year’s best real-time data replication tools.

What Is Real-Time Data Replication?

Real-time data replication is the near-instantaneous transfer of data from a source to a target storage system. The target could be a data warehouse, data lake, or another database. The purpose is to ensure that any modifications made in the source database are mirrored in the destination database with minimal delay, keeping the data in both locations consistently synchronized.

3 Components Of Real-Time Data Replication

The 3 major components of real-time data replication include:

Data Ingestion

The initial stage is about gathering and moving data from the original source to the destination. This acts as the stepping stone in the data replication process to ensure that data is effectively assembled for duplication.

Data Integration

The next phase is consolidating data from varied sources into a unified view. This helps eliminate discrepancies and establish uniformity in the data across different platforms, particularly when dealing with multi-source data.

Data Synchronization

This is the crux of real-time data replication. The data between the source and the target is continuously harmonized. This ongoing process guarantees that any changes at the source are instantly reflected at the target location for a constantly updated and accurate dataset.
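To make the three components concrete, here is a minimal sketch of how they can fit together. Everything in it is hypothetical and chosen for illustration: the `crm` and `shop` source names, their field names, and the in-memory dictionary that stands in for the target system.

```python
def ingest(sources):
    """Data ingestion: gather raw records from every source."""
    for source_name, records in sources.items():
        for record in records:
            yield source_name, record


def integrate(source_name, record):
    """Data integration: map source-specific fields onto one unified shape."""
    if source_name == "crm":
        return {"id": record["customer_id"], "email": record["mail"].lower()}
    return {"id": record["id"], "email": record["email"].lower()}


def synchronize(target, unified):
    """Data synchronization: upsert so the target mirrors the latest state."""
    target[unified["id"]] = unified


# Hypothetical sources with different field names for the same customer data.
sources = {
    "crm": [{"customer_id": 1, "mail": "Ada@Example.com"}],
    "shop": [
        {"id": 2, "email": "linus@example.com"},
        {"id": 1, "email": "ada@example.com"},
    ],
}

target = {}
for name, raw in ingest(sources):
    synchronize(target, integrate(name, raw))

print(target)
```

In a real pipeline each stage would run continuously against live systems rather than over a fixed dictionary, but the division of responsibilities stays the same.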

Mastering Real-Time Data Replication: 5 Simple Strategies You Need To Know

Real-time data replication is an integral part of today’s data management practices. It is the backbone that keeps businesses up and running, provides valuable data analysis, and acts as a safety net for disaster recovery. But putting it into action can be quite a challenge – there are all sorts of technological and logistical hurdles to overcome.

To simplify this process, we’ve put together 5 straightforward strategies that effectively address this challenge. No matter what type of database you're dealing with or how big your data is, these methods give you all the right tools to make real-time data replication smooth.

Strategy 1: Using Transaction Logs

Many databases store transaction logs to observe ongoing operations. Transaction logs contain extensive data about actions like data definition commands, updates, inserts, and deletes. They even monitor specific points where these tasks take place.

To implement real-time data replication, you can scan the transaction logs and identify the changes. A queuing mechanism then gathers these changes and applies them to the destination database. However, a custom script or specific code may be needed to transfer transaction log data and make the required changes in the target database.
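The scan, queue, and apply steps can be sketched roughly as follows. This is only an illustration under simplifying assumptions: the log is a hypothetical list of `(sequence number, operation, key, value)` tuples, and the target is an in-memory dictionary. Real transaction logs are vendor-specific and usually need a connector or parser to read.

```python
from collections import deque

# Hypothetical, simplified log format: (log sequence number, operation, key, value).
transaction_log = [
    (1, "insert", "user:1", {"name": "Ada"}),
    (2, "insert", "user:2", {"name": "Linus"}),
    (3, "update", "user:1", {"name": "Ada L."}),
    (4, "delete", "user:2", None),
]


def scan_log(log, after_lsn):
    """Return log entries newer than the last position already replicated."""
    return [entry for entry in log if entry[0] > after_lsn]


def apply_change(target, entry):
    """Apply one change to the target and return its log position."""
    lsn, op, key, value = entry
    if op in ("insert", "update"):
        target[key] = value
    elif op == "delete":
        target.pop(key, None)
    return lsn


def replicate(log, target, last_lsn=0):
    """Queue unseen changes, then apply them to the target in log order."""
    queue = deque(scan_log(log, last_lsn))
    while queue:
        last_lsn = apply_change(target, queue.popleft())
    return last_lsn


target_db = {}
position = replicate(transaction_log, target_db)
print(target_db, position)
```

Keeping track of the last applied position means a later call to `replicate` only picks up entries added since the previous run, so changes are not applied twice.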

Considerations When Using Transaction Logs

- Connector requirements and limitations: Accessing transaction logs requires special connectors for real-time data replication. These connectors, whether open-source or licensed, might not function as needed or can be costly.

- Labor and time-intensive: Using transaction logs is laborious and time-consuming. If an appropriate connector is not found, you’ll need to write a parser to extract changes from the logs and replicate the data to the destination database in real time. This strategy can be helpful, but it may take considerable effort to get up and running.

Strategy 2: Using Built-In Replication Mechanisms

Built-in mechanisms use a process known as "log reading," a key part of change data capture functionality. This method copies data to a matching replica database to provide a convenient and reliable way to back up data.

They come integrated into many transactional databases like Oracle, MySQL, and PostgreSQL. These tools are dependable, user-friendly, and have comprehensive documentation. This makes it quite easy for database administrators to set up real-time data replication.

Considerations When Using Built-In Mechanisms To Replicate Data

- Cost implications: When you use built-in mechanisms, keep the costs in mind. Some vendors, like Oracle, will ask you to purchase software licenses for using their built-in data replication mechanisms.

- Compatibility issues: Built-in replication mechanisms' performance largely depends on the versions of the source and destination databases. Databases running on different software versions face difficulties during the replication process.

- Cross-platform replication limitations: They work best when both the source and destination databases are from the same vendor and share a similar technology stack. Replicating data across different platforms can be technically challenging.

Strategy 3: Using Trigger-Based Custom Solutions

Many popular databases, like Oracle and MariaDB, have the built-in capacity to create triggers on tables and columns. Triggers are a form of custom solution for real-time data replication and fire when certain predefined changes occur in the database.

When these changes match the specified criteria, the triggers record them into a 'change' table, like an audit log. This table stores all updates that should be made to the destination database. This approach does not rely on a timestamp column but needs a tool or platform that can appropriately apply the change data to the destination.
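Here is a minimal sketch of the idea, using Python's built-in `sqlite3` module so it runs without a database server. SQLite stands in for the databases named above purely as an assumption for illustration, and trigger syntax differs between vendors. The `customers` and `customers_changes` tables and the `apply_changes` helper are hypothetical names.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

-- Change table that the triggers write to, similar to an audit log.
CREATE TABLE customers_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT NOT NULL,
    id INTEGER NOT NULL,
    email TEXT
);

CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('I', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('U', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('D', OLD.id, NULL);
END;
""")

destination = sqlite3.connect(":memory:")
destination.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")


def apply_changes(src, dest, last_change_id=0):
    """Replay recorded changes on the destination in the order they occurred."""
    rows = src.execute(
        "SELECT change_id, op, id, email FROM customers_changes "
        "WHERE change_id > ? ORDER BY change_id",
        (last_change_id,),
    ).fetchall()
    for change_id, op, row_id, email in rows:
        if op == "D":
            dest.execute("DELETE FROM customers WHERE id = ?", (row_id,))
        else:
            dest.execute(
                "INSERT OR REPLACE INTO customers (id, email) VALUES (?, ?)",
                (row_id, email),
            )
        last_change_id = change_id
    dest.commit()
    return last_change_id


source.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")
source.execute("INSERT INTO customers (id, email) VALUES (2, 'b@example.com')")
source.execute("UPDATE customers SET email = 'a2@example.com' WHERE id = 1")
source.execute("DELETE FROM customers WHERE id = 2")
source.commit()

last_applied = apply_changes(source, destination)
replicated = destination.execute("SELECT id, email FROM customers ORDER BY id").fetchall()
print(replicated)
```

Note that the ordering comes from the change table's auto-incrementing key rather than from a timestamp column, which matches the approach described above.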

Considerations When Using a Trigger-Based Custom Solution

- Limited operational scope: Triggers can’t be used for all database operations. They are typically tied to a limited set of operations like calling a stored procedure, inserting rows, or updating rows. As a result, for comprehensive change data capture (CDC) coverage, you have to pair trigger-based CDC with another method.

- Potential for additional load: Triggers can add an extra burden on source databases that impacts overall system performance. For example, if a trigger is associated with a particular transaction, that transaction will be on hold until the trigger is successfully executed. This can lock the database and block subsequent changes until the trigger finishes executing.

Strategy 4: Using Continuous Polling Methods

With continuous polling, you write custom code snippets that copy or replicate data from the source database to the destination database. The polling mechanism then actively monitors the data source for any changes.

These custom code snippets are designed to detect changes, format them, and finally update the destination database. Continuous polling mechanisms use queuing techniques that decouple the process in cases where the destination database cannot reach the source database or requires isolation.
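As a rough sketch, a single polling pass might look like the snippet below. It assumes a hypothetical `orders` table with an `updated_at` column that changes on every write, uses in-memory SQLite databases for both sides, and leaves out the queuing layer for brevity. The `poll_once` helper is an illustrative name, not part of any library.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)"
)
destination = sqlite3.connect(":memory:")
destination.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)"
)


def poll_once(src, dest, last_seen):
    """Copy rows modified after last_seen and return the new high-water mark."""
    rows = src.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    ).fetchall()
    for row in rows:
        dest.execute(
            "INSERT OR REPLACE INTO orders (id, status, updated_at) VALUES (?, ?, ?)",
            row,
        )
        last_seen = max(last_seen, row[2])
    dest.commit()
    return last_seen


# Simulated writes; updated_at is a logical clock here to keep the run deterministic.
source.execute("INSERT INTO orders VALUES (1, 'new', 100)")
source.execute("INSERT INTO orders VALUES (2, 'new', 101)")
watermark = poll_once(source, destination, last_seen=0)

source.execute("UPDATE orders SET status = 'shipped', updated_at = 102 WHERE id = 1")
watermark = poll_once(source, destination, last_seen=watermark)

result = destination.execute("SELECT id, status FROM orders ORDER BY id").fetchall()
print(result)
```

In practice you would call `poll_once` in a loop with a short sleep between passes. One limitation worth knowing: a query like this only sees rows that still exist, so rows deleted from the source are not detected.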

Considerations When Using Continuous Polling Mechanisms

- Monitoring fields required: To use continuous polling methods, you need a specific field in the source database. Your custom code snippets will use this field to monitor and capture changes. Typically, these are timestamp-based columns that are updated when the database undergoes any modifications.

- Increased database load: Implementing a polling script causes a higher load on the source database. This additional strain affects the database’s performance and response speed, particularly on a larger scale. Therefore, continuous polling methods should be carefully planned and executed while considering their potential impact on database performance.

Strategy 5: Using Cloud-Based Mechanisms

Many cloud-based databases that manage and store business data already come with robust replication mechanisms. These mechanisms effortlessly replicate your company's data in real time. The advantage of using these cloud-based mechanisms is that they can replicate data with minimal or no coding. They achieve this by integrating database event streams with other streaming services.

Considerations When Using Cloud-Based Replication Mechanisms

- Compatibility issues with different databases: If the data source or destination belongs to a different cloud service provider or any third-party database service, the built-in data replication method becomes difficult to use.

- Custom code for transformation-based functions: If you want to use transformation-based functions in your replication process, you’ll need custom coding. This additional step is necessary to manage the transformations effectively.

Real-Time Data Replication Made Easy: 5 Best Tools You Shouldn't Miss

Real-time data replication tools maintain the consistent flow and synchronization of data across various platforms and systems. These tools not only help in preserving the integrity and reliability of data but also let businesses react proactively to evolving trends and changes. Let’s look into the 5 leading real-time data replication tools available in 2023.

Estuary Flow

Estuary Flow is our innovative platform for efficient real-time data processing. Flow has an advanced set of features for diverse data management needs. From data replication and migration to real-time analytics and data sharing, it makes your data readily available, exactly when it's needed.

Estuary Flow Features

- Schema inference: Estuary Flow transforms unstructured data into structured data. This simplifies further data processing and lets you gain meaningful insights from your data.

- Real-time materializations: It facilitates real-time views across various systems and gives swift access to the most up-to-date information for a bird’s-eye view of your data landscape.

- Built-in testing and reliability: Flow provides built-in unit tests to verify data accuracy as your pipelines evolve. It’s built to weather any failure, offering you reliability and peace of mind.

- Consistent data delivery: It gives you transactional consistency for an accurate view of your data. With Flow, you can trust that your data will stay consistent, accurate, and reliable.

- Extensibility: Flow supports the addition of connectors via an open protocol. With this feature, it can be integrated with your existing systems with ease, minimizing disruption and enhancing synergy.

- Data transformations: It excels in real-time SQL and JavaScript transformations, giving you a flexible and efficient way to manipulate data. With it, you can customize your data streams in ways that best suit your needs.

- Low system load: It is designed to minimize the strain on your systems since it uses low-impact CDC to pull data once. This means you can use your data across all your systems without re-syncing which reduces the system load.

- Scalability and first-rate performance: Flow scales with your data and has been tested at high data volumes. It can quickly backfill large data volumes without affecting your system’s performance. Regardless of your data volume or demands, it is built to scale and perform.

- Data capture capabilities: Flow collects data from different sources, including databases and SaaS applications. It has a robust Change Data Capture (CDC) functionality, integrating with real-time SaaS applications and even linking to batch-based endpoints.

Qlik

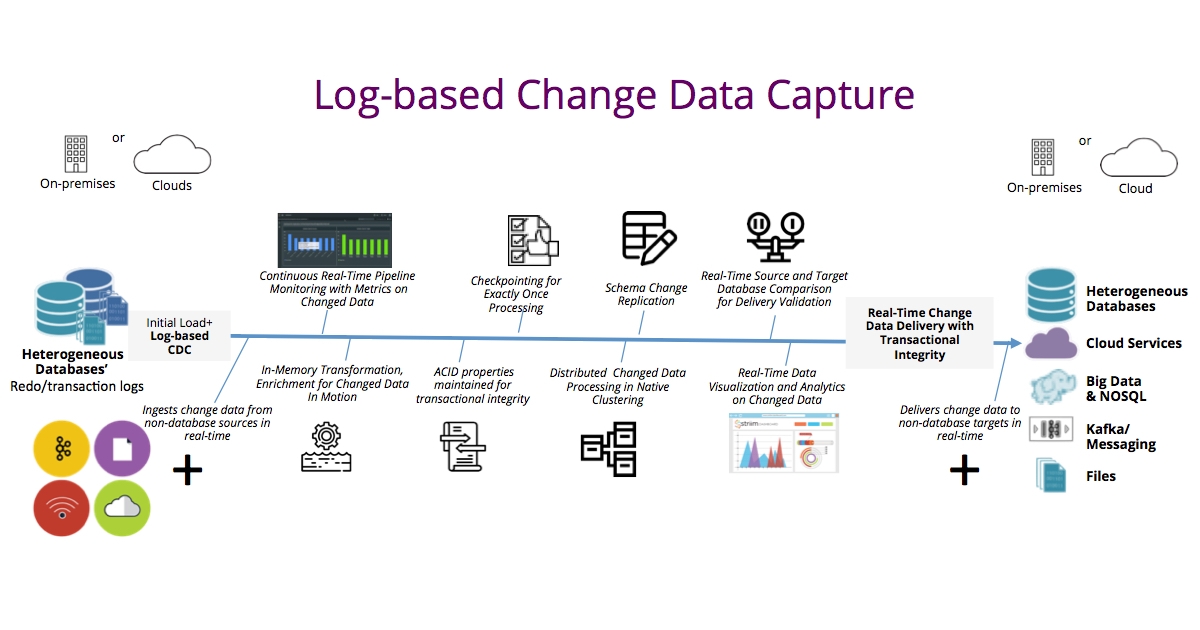

Qlik efficiently delivers large volumes of real-time, analytics-ready data into streaming and cloud platforms, data warehouses, and data lakes. With an agentless and log-based approach to change data capture, your data is always current without impacting source systems.

Qlik Features

- Real-time change data capture: Qlik moves data in real-time from source to target, all managed through a simple graphical interface that completely automates end-to-end replication.

- Log-based change data capture: Its log-based change data capture benefits from a cloud-optimized, zero-footprint architecture that keeps system impact to a minimum.

- Broad support for sources, targets, and platforms: It supports a wide range of sources and targets so you can load, ingest, migrate, distribute, consolidate, and synchronize data on-premise and across cloud or hybrid environments.

- Enterprise-wide monitoring and control: Its command center lets you set up, execute, and oversee integration tasks across the organization. This facility helps scale your operations easily and monitor data flow in real time.

- Flexible deployment: As a data integration platform, Qlik is impartial to cloud and analytics vendors. This gives you a wide range of choices and flexibility when deciding where to store, transform, and analyze your data.

IBM Data Replication Software

IBM's data replication solution provides real-time synchronization between heterogeneous data stores. This modern data management platform is offered both as on-premise software and as a cloud-based solution. IBM Data Replication synchronizes multiple data stores in near-real time and tracks only the changes to data to keep the impact minimal.

IBM Data Replication Software Features

- Data integration: It provides up-to-the-second operational data to event processing and analytics applications for direct data support and event integration.

- Resilience against unplanned outages: The software’s automatic failover capabilities effectively minimize the impact of unexpected system interruptions and database migrations.

- Wide-ranging data sourcing: It is compatible with various database types and delivers the changes to multiple platforms — including databases, message queues, and Big Data and ETL platforms.

- Data accessibility and governance: IBM Data Replication goes beyond mere data synchronization; it also makes hard-to-reach data more accessible and manageable. This enhances governance and empowers better decision-making within organizations.

Hevo Data

Hevo Data is a no-code data pipeline that provides a fully automated solution for real-time data replication. This tool integrates with more than 100 data sources, including different data warehouses. Once set up, it requires zero maintenance, which makes it an ideal choice for businesses looking to enhance their real-time data replication efforts.

Hevo Data Replication Features

- Fault-tolerance: Its architecture detects data anomalies to protect your workflow from potential disruptions.

- Bulk data integration: Hevo integrates bulk data from diverse sources like databases, cloud applications, and files to provide up-to-date data.

- Data capture: This feature captures database modifications from various databases, making it easy to deliver these changes to other databases or platforms.

- Automated data mapping: Intelligent mapping algorithms are employed for automatic data mapping from various sources to enhance the efficiency of the integration process.

- Uninterrupted operations: Hevo creates standby databases for automatic switch-over. This minimizes the impact of unplanned disruptions and maintains high data availability.

- Scalability: Designed to handle millions of records per minute without latency, it is built to deal with large data volumes and perform data replication without compromising system performance.

Resilio Connect

Resilio Connect is a reliable file sync and share platform that elevates real-time data replication and employs innovative and scalable data pipelines. It uses an advanced P2P sync architecture and proprietary WAN acceleration technology to secure low-latency transport of massive data volumes without impacting the operational network.

Resilio Connect Features

- No single point of failure: Its unique P2P architecture removes the single point of failure commonly found in other data replication solutions.

- Robust scalability: Its peer-to-peer architecture supports rapid data replication which is easily scalable to accommodate growing data demands.

- Transactional integrity: Resilio Connect accurately replicates every file change across all devices to ensure end-to-end data consistency at the transaction level.

- Automatic file block distribution: Resilio Connect effectively auto-distributes file blocks. It detects and aligns file changes across all devices to simplify the replication process.

- Guaranteed data consistency: With its optimized checksum calculations and real-time notification system, it maintains data consistency across all devices and eliminates discrepancies or inaccuracies.

- Accelerated replication without custom coding: Resilio Connect lets users replicate data easily without writing any custom code. You can configure it using a user-friendly interface. Comprehensive support and documentation further ease the deployment experience.

Conclusion

The importance of real-time data replication goes beyond simple redundancy. It ensures the utmost data integrity and guarantees that decisions are made based on the latest information available. Whether it's for mission-critical applications or big data analytics, it lets you act with speed and precision and respond to market changes and customer demands in real time.

Real-time data replication can be complex, but with the right data replication strategy and tools, you can maintain data consistency and coherence across multiple locations and applications. Estuary Flow is built to manage real-time data replication. It guarantees that your systems always have accurate and consistent data.

With Estuary Flow, you can sync data across various applications and locations, making your business operations run seamlessly. You shouldn’t have to worry about data mismatches – Estuary Flow empowers you with real-time insights and ensures your data is reliable and up-to-date.

If you are looking to enhance your replication processes, try Estuary Flow at no cost by signing up today. If you need further support, feel free to reach out to our team and we’ll be thrilled to help you out.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
