The digital era has experienced relentless growth in data generation during the last decade. This growth is primarily driven by factors like the rise of the Internet of Things (IoT), social media, and the widespread adoption of smart devices. With this explosion of data comes the need for efficient and scalable real-time data ingestion architectures that can handle the vast volumes of data generated every second.

In today’s fiercely competitive market, businesses cannot afford to overlook the value of real-time insights for making well-informed decisions. Enter real-time data ingestion architecture, an approach that helps you keep up with the fast-paced world of big data.

In this article, we’ll look at what real-time data ingestion is and why it matters in the world of big data. By the end of this guide, you’ll understand the importance of real-time data ingestion, the main real-time data ingestion architectures, and the tools used to build them.

Understanding Real-Time Data Ingestion In Big Data

To understand the real-time data ingestion process in the context of Big Data, it is important to understand what Big Data is. Big Data is defined by the "3 Vs":

- Volume: The amount of data generated is very large and difficult to analyze with traditional tools.

- Velocity: Data is generated at a high speed. Corresponding data ingestion and processing tools must match the speed of data generation.

- Variety: Big Data encompasses a variety of data types, ranging from structured to unstructured.

Now let's consider what real-time data ingestion is.

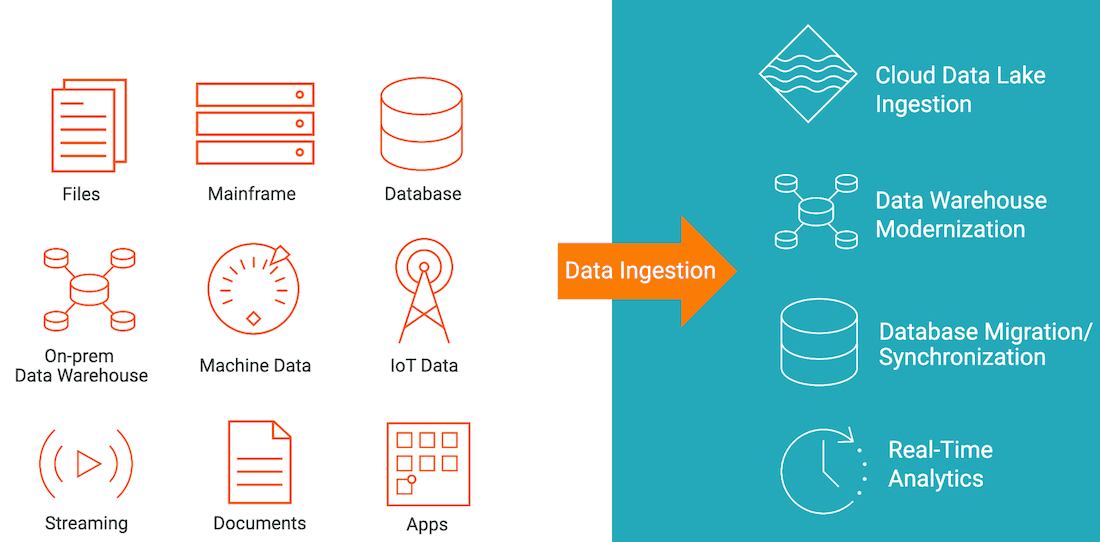

Real-time data ingestion involves actively capturing, integrating, and processing data as organizations generate or receive it. This method ensures that businesses base their decisions on the latest information available.

Real-time ingestion gathers data from diverse sources like sensors, social media platforms, and web applications, and converts unstructured data into a structured form that is suitable for storage and analysis.

How Does Real-time Ingestion Differ From Traditional Data Ingestion?

Traditional data ingestion usually involves collecting data in batches at scheduled intervals as part of an ETL pipeline. Real-time data ingestion, on the other hand, ingests data continuously in real time or near real time, which reduces latency and keeps the data warehouse stocked with the most up-to-date information.
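To make the contrast concrete, here is a minimal Python sketch. The function names, the record shape, and the in-memory list standing in for a data warehouse are all assumptions made for illustration; a real pipeline would write to an actual store.

```python
from typing import Any, Dict, Iterable, List

Record = Dict[str, Any]  # hypothetical record shape


def ingest_batch(records: Iterable[Record], sink: List[Record], batch_size: int) -> int:
    """Buffer records and write to the sink only when a batch fills up.

    Returns the number of writes (flushes) performed.
    """
    buffer: List[Record] = []
    flushes = 0
    for record in records:
        buffer.append(record)
        if len(buffer) >= batch_size:
            sink.extend(buffer)
            buffer.clear()
            flushes += 1
    if buffer:  # flush the final, partially filled batch
        sink.extend(buffer)
        flushes += 1
    return flushes


def ingest_streaming(records: Iterable[Record], sink: List[Record]) -> int:
    """Write every record to the sink as soon as it arrives.

    Returns the number of writes performed.
    """
    writes = 0
    for record in records:
        sink.append(record)
        writes += 1
    return writes
```

In the batch version, a record is invisible to downstream queries until its batch is flushed. In the streaming version, each record is available right away, at the cost of more frequent writes.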

So why should you care about real-time data ingestion?

Because it can help you with:

- Flexibility: Real-time ingestion allows organizations to adapt and respond to changes more quickly, maintaining a competitive edge and capitalizing on new opportunities as they arise.

- Infrastructure: Real-time ingestion can often run on smaller storage and compute resources because it processes data on the fly, whereas traditional methods store and process large batches at once.

- Decision-making: With real-time ingestion, business leaders access and analyze the most current and relevant information available, allowing for timely and accurate decision-making. Traditional methods, by contrast, mean waiting for data batches to be collected, processed, and analyzed, which delays access to valuable information.

Real-life Examples & Applications

Real-time data ingestion plays an important role in different industries. Here are some examples of real-life use cases:

- Social media platforms: Companies like Twitter and Facebook use real-time data ingestion tools to keep users informed of the latest posts, trending topics, and user interactions.

- IoT devices and smart home systems: These systems rely on real-time data ingestion to provide users with accurate, up-to-date information about their connected devices and environment.

- Finance industry: Banks and financial institutions ingest data in real-time to monitor transactions, detect fraudulent activities, and adjust stock trading algorithms according to market conditions.

- Transportation and logistics: Real-time data ingestion helps monitor traffic patterns, vehicle locations, and delivery times, enabling companies to optimize routes and improve efficiency.

- eCommerce: Online retailers use real-time data ingestion to update product inventory, track customer behavior, and provide personalized recommendations based on browsing history and preferences.

While it's crucial to understand how real-time data ingestion works and where it applies, it's equally important to know the different types of real-time data ingestion architecture so you can identify the ideal approach for your organization's specific requirements. Let’s take a look.

4 Different Types Of Real-Time Data Ingestion Architecture

Let's take a closer look at 4 distinct types of real-time data ingestion architecture and explore their advantages.

Streaming-Based Architecture

A streaming-based architecture is all about ingesting data streams continuously as they arrive. Tools like Apache Kafka are used to collect, process, and distribute data in real time.

This approach is perfect for handling high-velocity and high-volume data while ensuring data quality and low-latency insights.

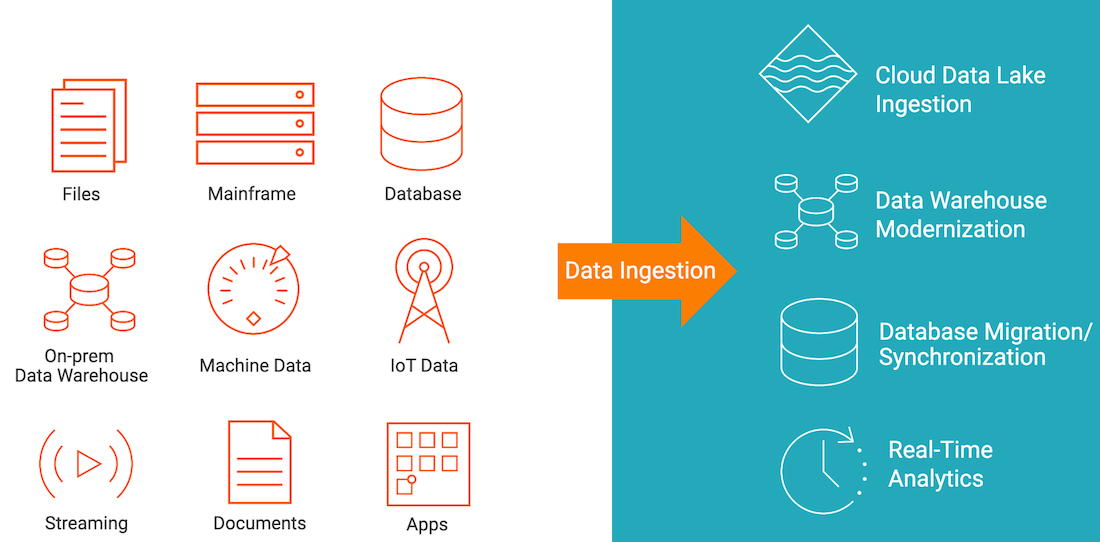

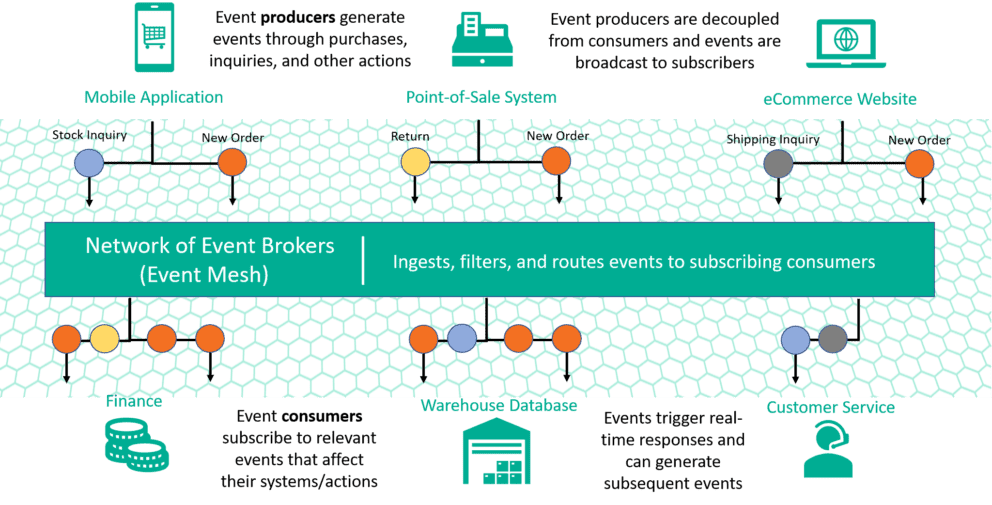

Event-Driven Architecture

An event-driven architecture reacts to specific events or triggers within a system. It ingests data as events occur and allows the system to respond quickly to changes.

This architecture is highly scalable and can efficiently handle large volumes of data from various sources, which makes it a popular choice for modern applications and microservices.
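The publish/subscribe pattern behind event-driven systems can be sketched in a few lines of Python. The `EventBus` class and the event names used here are hypothetical and run in a single process; production systems usually put a message broker between publishers and subscribers.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """A toy in-process event bus: handlers react to named events."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register a handler to run whenever event_type is published."""
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        """Deliver payload to each subscribed handler; return how many ran."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

Publishers do not need to know who is listening, so new consumers can be added by subscribing another handler without changing the code that emits events.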

Lambda Architecture

Lambda architecture is a hybrid approach that combines the best of both worlds – batch and real-time data ingestion.

It consists of two parallel data pipelines: a batch layer for processing historical data and a speed layer for processing real-time data. This architecture provides low-latency insights while ensuring data accuracy and consistency, even in large-scale, distributed systems.
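Here is a minimal sketch of how the two layers might be combined at query time, assuming a simple page-view count as the metric. The function names are illustrative, and both views are plain in-memory counters rather than real batch or stream jobs.

```python
from collections import Counter
from typing import Iterable


def build_view(events: Iterable[str]) -> Counter:
    """Count events per key. Both layers use this for simplicity; in
    practice each layer usually has its own processing code."""
    return Counter(events)


def query(batch_view: Counter, speed_view: Counter, key: str) -> int:
    """Answer a query by merging the batch view with the speed view."""
    return batch_view[key] + speed_view[key]
```

The batch view covers everything up to the last batch run, and the speed view covers only the events that arrived since then. When the next batch run completes, the speed view can be reset.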

Kappa Architecture

Kappa architecture is a streamlined version of Lambda architecture that focuses solely on real-time data processing.

It simplifies the data ingestion pipeline by using a single stream processing engine, like Apache Flink or Apache Kafka Streams, to handle both historical and real-time data. This approach reduces complexity and maintenance costs while still providing fast, accurate insights.
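The core idea, one code path for both historical and new data, can be sketched as follows. The `StreamJob` class and the list used as an append-only log are assumptions made for illustration, not the API of Apache Flink or Kafka Streams.

```python
from typing import Dict, List, Tuple

LogEntry = Tuple[str, float]  # (key, amount): hypothetical entry shape


class StreamJob:
    """One processing path used for both replayed and live events."""

    def __init__(self) -> None:
        self.offset = 0  # position of the next unread log entry
        self.totals: Dict[str, float] = {}

    def process_available(self, log: List[LogEntry]) -> int:
        """Consume every entry from the current offset; return the count."""
        start = self.offset
        for key, amount in log[start:]:
            self.totals[key] = self.totals.get(key, 0.0) + amount
        self.offset = len(log)
        return self.offset - start
```

Reprocessing history is then just a matter of starting a fresh `StreamJob` at offset 0 and letting it read the same log, which is why no separate batch layer is needed.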

Why Real-Time Data Ingestion Matters

The value of real-time data ingestion cannot be overstated in a world where information is constantly evolving. Embracing real-time insights allows organizations to seize opportunities, address challenges proactively, and drive innovation across various industries.

Let's explore the key benefits of real-time data ingestion and how it can make a difference for your business.

Faster Decision-Making

It's all about staying one step ahead of the competition. By processing data as it streams in, organizations can quickly spot trends and issues, which leads to faster and better decisions. For instance:

- eCommerce companies can adjust prices in real time based on supply and demand.

- Financial institutions can respond to market fluctuations and make timely investment decisions.

- Manufacturing firms can optimize production schedules to minimize downtime and reduce costs.

- Transportation companies can adjust routes and schedules in response to traffic conditions and demand.

Improved Customer Experience

Another perk of real-time data ingestion is its ability to enhance the customer experience. With the power to analyze and respond to streaming data, businesses can personalize interactions and offers based on current customer behavior. The result – happier and more loyal customers.

Some applications include:

- Providing real-time customer support via chatbots or live agents.

- Recommending products or content based on a user's browsing history.

- Tailoring promotional offers to match customer preferences and purchase history.

- Sending real-time notifications and alerts based on customer preferences, like price drops or restocked items.

Incorporating CRM with conversational bots can further streamline these processes, making interactions even more efficient and personalized.

Enhanced Fraud Detection & Prevention

By keeping an eye on transactions and user behavior 24/7, businesses can identify suspicious activities and take action instantly. This helps minimize financial losses and protect the company's reputation.

Here are a few examples:

- eCommerce platforms can detect fake accounts and prevent fraudulent purchases.

- Banks can monitor transactions for unusual patterns and block suspicious activities in real time.

- Telecommunication companies can track unusual call patterns to identify and block spam or fraud attempts.

- Insurance companies can detect fraudulent claims by analyzing patterns and inconsistencies in real time.
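One simple rule of this kind is a velocity check, which flags an account that makes too many transactions within a short time window. The sketch below is a simplified illustration with hypothetical names and thresholds, and it assumes timestamps arrive in non-decreasing order. Real fraud systems combine many signals.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityCheck:
    """Flag accounts with more than max_events in the last window_seconds."""

    def __init__(self, max_events: int, window_seconds: float) -> None:
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)

    def is_suspicious(self, account_id: str, timestamp: float) -> bool:
        """Record a transaction and report whether the account is over the limit."""
        recent = self._recent[account_id]
        recent.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while recent and recent[0] <= timestamp - self.window_seconds:
            recent.popleft()
        return len(recent) > self.max_events
```

Since each transaction is evaluated as it arrives, a suspicious burst can be blocked while it is happening rather than discovered in a later batch report.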

Real-Time Analytics & Insights

Real-time data ingestion fuels real-time analytics, providing businesses with up-to-the-minute insights for better decision-making. This means organizations can stay agile and responsive, adjusting to market shifts and customer needs as they happen.

Some real-life applications include:

- Monitoring social media sentiment to gauge brand perception and respond to customer concerns.

- Real-time inventory tracking and management to prevent stockouts and overstock situations.

- Tracking website traffic and user engagement to optimize marketing campaigns and web design in real time.

- Analyzing streaming IoT data from sensors to optimize energy consumption, machine performance, and maintenance.

Now that we know the benefits of real-time data ingestion, let’s take a closer look at the 5 best tools for the job to get a better understanding of how they work and see which is the best fit for your organization.

Data Ingestion Made Easy: 5 Best Real-Time Data Ingestion Tools To Simplify Your Workflow

A high-quality real-time data ingestion tool can streamline your data workflow and ensure that your data is ingested, processed, and analyzed in real time, allowing you to quickly identify patterns, trends, and anomalies. Here are our top 5 picks for the best data ingestion tools:

Estuary Flow

Estuary Flow is our DataOps platform that is focused on making real-time data ingestion user-friendly. Built on top of Gazette, an open-source Kafka-like streaming broker, Estuary Flow aims to accommodate everyone on your team, not just engineers.

It offers seamless integration with platforms like Google Firestore, Microsoft SQL Server, and Snowflake. This enables businesses to work with multiple data platforms at the same time, using each one for its best use case.

Key Benefits & Features

Some standout features of Estuary Flow are:

- Fully integrated pipelines: This helps streamline data workflows, reduce errors, and improve overall efficiency.

- Enhanced data security and compliance: Keeps sensitive data protected with encryption, access controls, and auditing capabilities.

- Powerful data transformation: Estuary Flow ensures data quality and consistency with built-in data validation, transformation, and enrichment features.

- Variety of pre-built connectors: This makes it easy to synchronize data between Firestore and other systems like ElasticSearch, Snowflake, or BigQuery.

Flow Vs. Other Real-time Data Ingestion Tools

What sets Estuary Flow apart from other data ingestion tools is its user-friendly web application. This allows data analysts and other user groups to actively participate in and manage data pipelines.

Flow offers a powerful no-code interface for setting up real-time data pipelines. This reduces the need for expert engineers and makes real-time data ingestion accessible to everyone.

On top of this, Flow combines the best practices of DevOps, Agile, and data management to help maintain data quality and consistency across systems.

Ideal Use Cases & Target Audience

Estuary Flow is perfect for businesses looking to:

- Create, manage, and monitor data pipelines with ease.

- Maintain high data quality and consistency across various platforms.

- Reduce the time and effort required to build and maintain custom connectors.

- Get instant metrics for crucial events, allowing teams to react quickly and make informed decisions.

- Reduce the cost and execution time of repeated OLAP queries to data warehouses by optimizing performance.

Estuary Flow provides a powerful, user-focused solution for real-time data ingestion, ensuring a smooth and efficient experience for your entire team.

Want a comparison of Estuary Flow with other tools? Check out our Airbyte vs Fivetran vs Estuary comparison to make your decision easier.

Apache Kafka

Apache Kafka is a widely used open-source tool for real-time data streaming. Its high-throughput capabilities, ability to integrate with various systems, and robust architecture make it an excellent choice for handling vast amounts of data from diverse sources.

Here are some key features of Apache Kafka:

- Durability: It persists data to disk, so data can be recovered in the event of a failure.

- Scalability: You can easily scale it horizontally by adding more brokers to a cluster, increasing its capacity as needed.

- Low-latency: With its low-latency processing, you can analyze and act upon data almost immediately after it’s generated.

- Fault-tolerance: Even if some of its components fail, it can continue to operate. This keeps data available and processing running during partial outages.

- High-throughput: By handling millions of events per second, it’s ideal for applications that require real-time processing of large amounts of data.
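Much of Kafka's scalability comes from splitting each topic into partitions, each of which is an append-only log that consumers read by offset. The toy model below illustrates that idea only. It keeps everything in memory, and it uses a CRC32 hash as a stand-in for Kafka's own partitioner, so the class and its methods are illustrative assumptions rather than Kafka's API.

```python
import zlib
from typing import List, Tuple

Entry = Tuple[str, str]  # (key, value)


class PartitionedLog:
    """A toy, in-memory model of a topic split into append-only partitions."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: List[List[Entry]] = [[] for _ in range(num_partitions)]

    def append(self, key: str, value: str) -> Tuple[int, int]:
        """Append a record; return the (partition, offset) it was written to."""
        # Hash the key so records with the same key land in the same partition.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1

    def read(self, partition: int, offset: int) -> List[Entry]:
        """Return every record in a partition starting at the given offset."""
        return self.partitions[partition][offset:]
```

Records with the same key keep their relative order within a partition, and different partitions can be served and consumed in parallel.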

Apache NiFi

Apache NiFi is a user-friendly data ingestion tool that streamlines ingesting and transforming data from multiple sources. Its web-based interface lets users create and manage data flows with little to no coding. NiFi supports both batch and real-time processing, making it a versatile option.

Let’s see the key benefits that make it so efficient.

- Scalability: It can scale to handle large amounts of data and complex data flows.

- Extensibility: You can extend it with custom processors to support specific use cases.

- Security: With features such as encryption and access control, it ensures that your data is secure.

- Ease of Use: With its user-friendly web-based interface, you can easily design and manage data flows.

- Data Provenance: It tracks data from its source to its destination, providing a clear record of where data came from and how it was processed.

AWS Kinesis

Amazon Web Services offers AWS Kinesis, a managed service for real-time data streaming and processing. It takes the hassle out of building and managing data ingestion pipelines so businesses can focus on analyzing their data. With its scalability, durability, and low-latency processing, Kinesis is perfect for handling large volumes of data quickly.

Here are some of its many benefits:

- Dedicated Throughput: Up to 20 consumers can attach to a Kinesis data stream, each with its own dedicated read throughput.

- Low Latency: Data is available within 70 milliseconds of collection for real-time analytics, Kinesis Data Analytics, or AWS Lambda.

- High Availability & Durability: Data is synchronously replicated across three Availability Zones and can be retained for up to 365 days, providing multiple layers of protection against data loss.

- Serverless: Amazon Kinesis Data Streams eliminates the need to manage servers, since on-demand mode automatically scales capacity during increased workload traffic.

Google Pub/Sub

Google Pub/Sub is a messaging service from the Google Cloud Platform that enables real-time data ingestion and distribution. It guarantees at-least-once message delivery and is designed for scalability. Pub/Sub can handle millions of events per second, which makes it ideal for high-throughput data streams.

Some additional key features of Google Pub/Sub include:

- Global Routing: Publish messages anywhere in the world and have them persist in the nearest region for low latency.

- Cost-Optimized Ingestion: Pub/Sub Lite offers a low-cost option for high-volume event ingestion with regional or zonal storage.

- Synchronous Replication: Offers cross-zone message replication and per-message receipt tracking to ensure reliable delivery.

- Native Integration: Integrates with other Google Cloud services such as Dataflow for reliable and expressive processing of event streams.
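At-least-once delivery means a subscriber may occasionally receive the same message more than once, so consumers are often written to be idempotent. The sketch below shows one common approach, deduplicating by message ID. The `IdempotentConsumer` class is hypothetical and does not use the Pub/Sub client library, and its in-memory set of seen IDs would need a bounded or persistent store in practice.

```python
from typing import Callable, Set


class IdempotentConsumer:
    """Run the handler once per message ID and skip redelivered duplicates."""

    def __init__(self, handler: Callable[[bytes], None]) -> None:
        self._handler = handler
        self._seen: Set[str] = set()

    def receive(self, message_id: str, data: bytes) -> bool:
        """Handle a message; return False if it was a duplicate and skipped."""
        if message_id in self._seen:
            return False
        self._handler(data)
        # Mark the ID only after the handler succeeds, so a failed
        # attempt can still be retried on redelivery.
        self._seen.add(message_id)
        return True
```

With this in place, a redelivered message is acknowledged without repeating its side effects, such as charging a customer twice.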

Conclusion

For organizations aiming to excel in the modern era, real-time data ingestion is no longer a luxury but a necessary part of their data strategy. The ever-increasing volume, variety, and velocity of data make real-time data ingestion essential for staying competitive.

However, it is not enough to simply implement real-time data ingestion. You must also carefully choose the right tools and architectures that suit your organization’s data requirements, including data sources, data formats, and data volumes.

If you are on the lookout for a new data ingestion tool, give Estuary Flow a try. Flow is an excellent solution to get started with. It is a powerful and efficient real-time data ingestion tool that can help you streamline data collection and processing.

With its real-time streaming ability, Estuary Flow enables you to process and analyze data in real time and provides you with the latest and most accurate insights into your business operations. Sign up today and see the difference it can make.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
