Python is the de facto language of the data world. Developers use it for data extraction, transformation, and integration. As a general-purpose language, Python fits these use cases well, and its widespread adoption means developers can find a library for most common problems.

Python has many ETL tools which help data engineers manage data pipelines. When picking a tool, consider scalability, ease of use, and complexity. Python tools work for both small tasks and large, distributed systems. The best tool depends on your specific needs, such as handling big data, connecting different sources, or ensuring real-time processing.

Python’s flexibility makes it easy to manage both small and complex workflows. Let’s look at the top Python ETL tools, their strengths, and how to choose the best one for you.

9 Best Python ETL Tools

Here are 9 popular Python ETL tools in 2024:

1. Apache Airflow

Apache Airflow is a popular Python ETL tool used for managing and scheduling complex workflows. It defines ETL pipelines as Directed Acyclic Graphs (DAGs), in which each task declares the upstream tasks it depends on. This ensures that tasks run in the right order. Airflow orchestrates many kinds of tasks, such as extracting, transforming, and loading data into different systems.

Key Features:

- Orchestration of Complex Workflows: Airflow’s DAG system lets you build workflows where tasks depend on each other. Each task can be a Python script or another type of operator.

- Monitoring and Logging: Airflow provides a web-based UI to track the progress of workflows. You can easily monitor task status, check for failures, and rerun jobs if needed.

- Scalability: Airflow scales well, managing both small and large data processing pipelines. It's suitable for enterprise-level data needs.

- Extensibility and Flexibility: Airflow works with cloud platforms, databases, and APIs. This makes it useful for many ETL tasks, like loading data into cloud storage or processing large datasets across clusters.
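The dependency ordering that a DAG provides can be illustrated without Airflow itself. The following is a minimal sketch using Python's standard-library `graphlib`, not Airflow's API. The task names are hypothetical, and the sketch only shows how upstream dependencies determine a valid run order, not how Airflow schedules or executes tasks.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each key maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_tables": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_tables"},
}


def run_order(deps):
    """Return one valid execution order for the task graph."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)
```

Both extract tasks have no dependencies, so either may come first; the only guarantee is that every task appears after the tasks it depends on.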

2. Luigi

Luigi, created by Spotify, is a Python ETL tool designed to manage complex workflows. Like Airflow, it handles pipelines where tasks depend on each other, ensuring that each task runs only after the tasks it depends on have finished. This makes Luigi great for managing large workflows with many connected tasks.

Key Features:

- Task Dependency Management: Luigi handles workflows with task dependencies effectively. Each task is part of a broader pipeline, and Luigi ensures that the tasks follow the correct execution order.

- Task Progress Visualization: Luigi makes it easy to track task progress with visual tools. These tools help data engineers monitor tasks, spot errors, and rerun any failed steps.

- Integration Capabilities: Luigi works well with systems like Hadoop, Spark, and SQL databases.

- Simplified Task Execution: Luigi breaks down tasks into smaller, modular components. This makes it easier to test, troubleshoot, and maintain workflows.

3. PySpark

PySpark is a powerful Python ETL tool. Apache Spark, its underlying compute engine, is designed to process large datasets using distributed computing. PySpark is the Python API for Spark, so it lets you use Spark’s data processing power from Python. This makes it well suited to big data tasks. It also integrates well with machine learning libraries, making it a good fit for advanced workflows.

Key Features:

- Distributed Data Processing: PySpark divides large datasets into smaller parts. It processes them at the same time, which makes data processing much faster.

- Big Data Processing: PySpark uses Resilient Distributed Datasets (RDDs). RDDs split data across multiple nodes, making processing faster and ensuring that any failures don’t stop the entire workflow.

- Integration with Machine Learning: PySpark works with Spark’s MLlib, a machine learning library. This makes it a good fit for ETL tasks that involve machine learning.

- SQL-Like Operations: With PySpark’s DataFrame API, you can perform SQL-like queries on large datasets. This makes transforming and querying data easier and faster.

4. Polars

Polars is a fast DataFrame library written in Rust with a Python API, making it an efficient choice for data manipulation and ETL processes. Polars combines a memory-efficient design with multi-threaded operations, which helps it process large datasets quickly. With its DataFrame API, it supports many SQL-like operations and excels at handling complex transformations.

Key Features:

- Speed and Efficiency: Polars is optimized for speed, using Rust to handle data operations faster than traditional tools. It leverages multi-threading, allowing for high performance even on large datasets.

- Low Memory Usage: Polars has an efficient memory footprint, ideal for managing large datasets without overwhelming memory.

- Lazy Evaluation: Polars processes data in a lazy manner, optimizing computations to reduce processing time and resource usage.

- Flexible and Feature-Rich API: It includes SQL-like queries, joins, aggregations, and more, making complex data manipulation straightforward.

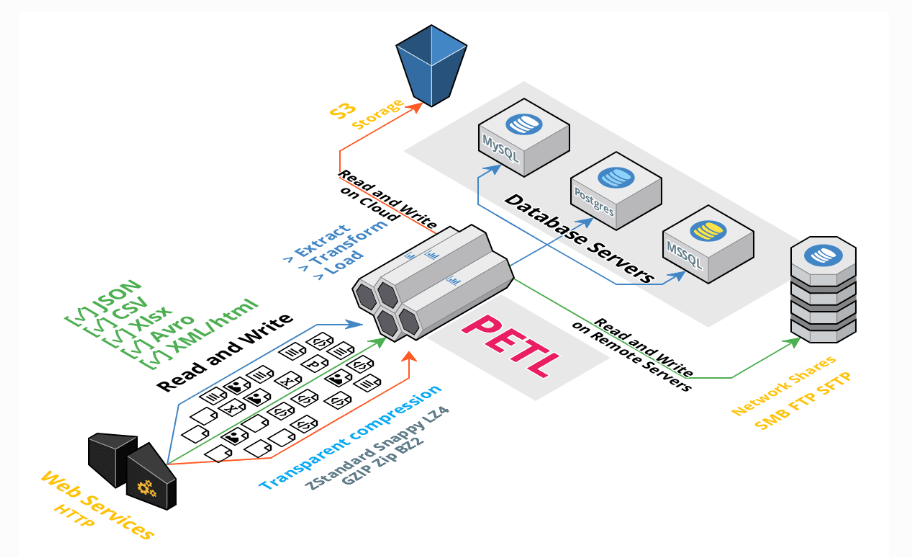

5. petl

petl is a lightweight Python library used for data extraction, transformation, and loading tasks. It works well with large datasets and uses memory efficiently. petl only processes data when needed, making it great for simple ETL tasks that have limited resources.

While it doesn’t have advanced features like other ETL frameworks, petl is fast and effective for table manipulation. It’s perfect for users who need to prioritize low memory usage.

Key Features:

- Lazy Loading: petl processes data only when needed. This helps it manage large datasets without using too much memory.

- Low Memory Usage: petl uses less memory. It works well with large datasets and doesn’t need complex systems.

- Extendable: petl can be expanded to support different data formats. This makes it adaptable to various ETL tasks.

- Simple API for Table Manipulation: petl provides a simple API to filter, sort, and transform data. It works well for basic ETL tasks involving structured data. You can use it without the need for large frameworks.
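The lazy, row-at-a-time idea described above can be sketched with plain Python generators. This is not petl's API; it is a standard-library illustration that assumes a small CSV with hypothetical `name` and `qty` columns.

```python
import csv
import io

# Hypothetical CSV content; in practice this would be a file on disk.
RAW = "name,qty\nwidget,3\ngadget,0\ngizmo,7\n"


def read_rows(text):
    """Yield one dict per CSV row instead of building a full list."""
    yield from csv.DictReader(io.StringIO(text))


def in_stock(rows):
    """Keep only rows whose quantity is positive."""
    return (row for row in rows if int(row["qty"]) > 0)


def with_int_qty(rows):
    """Convert the qty field from text to int."""
    for row in rows:
        yield {**row, "qty": int(row["qty"])}


# Building the pipeline does no work; rows flow only when iterated.
pipeline = with_int_qty(in_stock(read_rows(RAW)))
result = list(pipeline)
print(result)
```

Only one row is held in flight at a time until `list()` materializes the result, which is why this style keeps memory usage low on large inputs.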

6. Bonobo

Bonobo is a simple and lightweight Python ETL framework. It helps developers build ETL pipelines with reusable components. Bonobo’s modular design makes it perfect for smaller ETL tasks. It also supports parallel processing to boost performance. Although it lacks the complexity of bigger ETL tools, Bonobo is great for simple and maintainable workflows. It is an excellent choice for developers who need something easy to read and test.

Key Features:

- Atomic Pipeline Design: Bonobo breaks each pipeline into smaller, reusable parts, making it easier to test and maintain.

- Parallel Processing: Bonobo can run multiple tasks at the same time, improving performance.

- Flexibility: It comes with built-in connectors for common tasks. You can also customize it for more complex workflows.

- Ease of Use: Bonobo's simple API lets developers build ETL pipelines with minimal effort. It’s a good option for smaller projects or proof-of-concept pipelines.

7. Odo

Odo is a versatile Python ETL tool made for data conversion. It is perfect for data engineers who work with many data formats. Odo's main strength is its ability to quickly and efficiently convert data between formats. This makes it ideal for ETL pipelines that move data across different environments.

Key Features:

- Wide Data Format Support: Odo works with many data formats. It can convert data between NumPy arrays, Pandas DataFrames, SQL databases, CSV files, cloud storage, and even Hadoop.

- High Performance: Odo is built to handle large datasets quickly. It converts data into different formats efficiently.

- Efficient Data Loading: Odo is great at loading large datasets into databases or other storage systems with little delay.

- Ease of Integration: Odo integrates well with other Python libraries and tools. It fits easily into existing ETL pipelines. Whether you work with file-based data, databases, or cloud storage, Odo simplifies data conversion and loading.

8. dltHub

dlt (data load tool), developed by dltHub, is an open-source Python library designed to simplify data extraction, transformation, and loading tasks with a focus on ease of use and scalability. It enables data engineers to create low-code ETL pipelines that ingest data from multiple sources like APIs, databases, or files and then load it into data warehouses or lakes. With automation for error handling and retry mechanisms, it’s a strong choice for reliable and efficient data pipelines.

Key Features:

- Incremental Data Loading: dltHub supports incremental loading, updating only new or modified data to save processing time and resources.

- Built-in Error Handling and Retry Mechanisms: Automates retries and error handling, ensuring robust and reliable pipeline execution.

- Broad Integration Support: It is compatible with destinations such as BigQuery, Snowflake, and PostgreSQL, making it versatile for various data storage needs.

- Low-Code, Developer-Friendly API: dltHub's simple API reduces setup complexity, allowing data engineers to deploy and maintain ETL workflows quickly.
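The idea behind incremental loading can be sketched with a high-water mark. The example below does not use the library's own API; it is a standard-library illustration with `sqlite3`, using a hypothetical `events` table and `updated_at` column, and it covers newly appended rows only (not modified ones).

```python
import sqlite3


def load_incremental(conn, rows):
    """Insert only rows newer than the highest updated_at already stored."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, updated_at TEXT)"
    )
    # The high-water mark is the latest timestamp loaded so far (None on first run).
    (watermark,) = conn.execute("SELECT MAX(updated_at) FROM events").fetchone()
    new_rows = [r for r in rows if watermark is None or r[1] > watermark]
    conn.executemany("INSERT INTO events (id, updated_at) VALUES (?, ?)", new_rows)
    conn.commit()
    return len(new_rows)


conn = sqlite3.connect(":memory:")
# Hypothetical source snapshots; ISO-8601 date strings sort chronologically.
first_batch = [(1, "2024-01-01"), (2, "2024-01-02")]
second_batch = first_batch + [(3, "2024-01-03")]

first_count = load_incremental(conn, first_batch)
second_count = load_incremental(conn, second_batch)
print(first_count, second_count)
```

The second run sees all three source rows but inserts only the one that is newer than the stored watermark, which is the saving incremental loading is meant to provide.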

9. Bytewax

Bytewax is a Python-based stream processing framework designed for building scalable data pipelines that handle real-time data. Its event-driven architecture allows developers to process streaming data from various sources, making it an ideal choice for real-time analytics, ETL processes, and event-driven systems. Bytewax enables stateful computations on streaming data, allowing for complex transformations and processing.

Key Features:

- Stream Processing: Bytewax excels at processing continuous data streams, making it perfect for real-time ETL workflows.

- Scalable Architecture: Designed for distributed processing, Bytewax can scale across clusters to efficiently handle large volumes of data.

- Stateful Processing: It allows users to maintain state across data events, enabling advanced use cases like sessionization and real-time anomaly detection.

- Integration with Data Sources: Bytewax integrates with various sources like Kafka, WebSockets, and databases, making it versatile for different ETL tasks.

- Pythonic API: Bytewax provides an easy-to-use API, making it accessible to Python developers who want to build complex real-time workflows without learning a new framework.
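Stateful processing, where results depend on events seen earlier in the stream, can be illustrated with a small generator. This is a standard-library sketch of the concept rather than Bytewax's API; the event shape `(user, amount)` is an assumption made for the example.

```python
from collections import defaultdict


def running_totals(events):
    """Yield (key, total_so_far) for each event, keeping state per key."""
    state = defaultdict(int)  # per-key state that persists across events
    for key, value in events:
        state[key] += value
        yield key, state[key]


# Hypothetical event stream of (user, amount) pairs.
stream = iter([("alice", 5), ("bob", 2), ("alice", 3), ("bob", 10)])
output = list(running_totals(stream))
print(output)
```

A stream processing framework adds what this sketch leaves out, such as distributing keys across workers and recovering state after a failure.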

How to Use Python for ETL?

Python is one of the best programming languages for ETL processes. This is because of its simplicity, large number of libraries, and ability to handle complex data workflows. To perform ETL with Python, you can follow these core steps:

- Extract Data:

- Python allows you to extract data from many sources. These include databases like MySQL and PostgreSQL, APIs, and file formats such as CSV, JSON, and Excel.

- You can use libraries like pandas for working with CSV and Excel files. For APIs, requests is commonly used. For database connections, sqlalchemy or psycopg2 are popular choices.

Example using pandas:

```python
import pandas as pd

data = pd.read_csv('data.csv')
```

- Transform Data:

- Data transformation includes cleaning, enriching, and reshaping the extracted data. Python libraries like pandas and NumPy make it easy to manipulate data. They allow users to filter, aggregate, and join data with simple commands.

- You can also apply functions, handle missing data, and modify data types.

Example transformation with pandas:

```python
data['new_column'] = data['existing_column'].apply(lambda x: x * 2)

transformed_data = data.dropna()  # Remove missing values
```

- Load Data:

- Once the data is transformed, it needs to be loaded into the desired destination, such as databases, data lakes, or cloud storage.

- You can use libraries like sqlalchemy to load data into SQL databases or boto3 to send data to AWS S3.

Example loading data to a SQL database:

```python
from sqlalchemy import create_engine

engine = create_engine('postgresql://user:password@localhost/dbname')

transformed_data.to_sql('table_name', engine, if_exists='replace')
```

Python Libraries to Streamline ETL:

- Pandas: For quick data extraction, transformation, and loading tasks involving structured data.

- SQLAlchemy: This is used to connect to databases and perform SQL operations.

- Airflow: For scheduling and orchestrating complex ETL workflows.

- PySpark: For processing large datasets with distributed computing.

- Bonobo: For modular and maintainable ETL pipelines.

- Odo: For efficiently converting and loading data across different formats.

Python has many libraries to help you build ETL pipelines. These pipelines can scale to fit your data needs. You can use Python for simple file transformations or complex workflows. It gives you the right tools to automate the process and make it easier.
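The three steps can be combined into one self-contained sketch. To keep it runnable without external services, it reads a hypothetical in-memory CSV instead of a file and loads into an in-memory SQLite database from the standard library instead of PostgreSQL; the column and table names are illustrative only.

```python
import io
import sqlite3

import pandas as pd

# Hypothetical CSV content standing in for a real source file.
RAW_CSV = "id,price\n1,10.0\n2,\n3,7.5\n"

# Extract: read the CSV into a DataFrame.
data = pd.read_csv(io.StringIO(RAW_CSV))

# Transform: drop rows with missing values, then derive a new column.
clean = data.dropna().copy()
clean["price_doubled"] = clean["price"] * 2

# Load: write to an in-memory SQLite database and read it back to verify.
conn = sqlite3.connect(":memory:")
clean.to_sql("prices", conn, if_exists="replace", index=False)
loaded = pd.read_sql_query("SELECT * FROM prices ORDER BY id", conn)
print(loaded)
```

Dropping missing values before deriving the new column avoids carrying empty results into the destination table.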

How do you choose the right Python ETL tool?

Choosing the right ETL tool depends on several factors, such as:

- Data Volume: PySpark is better suited to large-scale data processing, and an orchestrator like Apache Airflow can schedule and coordinate those jobs.

- Complexity of Transformations: Tools like Pandas and petl are great for simpler tasks. Airflow and Luigi handle more complex workflows.

- Deployment Needs: If you need flexibility for deployment (like using Docker or Kubernetes), tools like Airflow or Bonobo may be a better fit.

Conclusion

In this article, we explored nine essential Python ETL tools for a range of data engineering needs. Tools like Apache Airflow and Luigi excel in managing complex workflows, while PySpark is ideal for processing large-scale datasets. Lightweight options like Pandas and petl are well-suited for smaller, simpler tasks.

As data demands shift towards real-time processing, traditional batch ETL tools often can’t keep up. Estuary Flow addresses this with a real-time, low-code platform that continuously syncs data between sources and destinations, removing much of the complexity found in traditional ETL processes.

By combining Estuary Flow with Python ETL tools, data engineers can handle both batch and real-time processing and keep insights accurate and up to date.

The right tool depends on your project’s needs. Combining traditional ETL tools with modern solutions like Estuary can improve workflows, increase scalability, and prepare your data pipelines for the future.

FAQs

1. What is the best Python ETL tool for big data?

The best Python ETL tool for big data is PySpark. It is built for distributed computing, which helps in processing large datasets across many systems. PySpark also integrates well with machine learning libraries. This makes it ideal for advanced workflows that require data analytics and transformations.

2. Can I use Python ETL tools for real-time data processing?

Yes. Most Python ETL tools handle batch processing, but stream processing frameworks like Bytewax are built for real-time data. Estuary Flow also provides real-time data synchronization, which makes it a good fit for continuous data streaming and quick updates.

3. What Python ETL tool should I choose for small tasks?

For smaller ETL tasks, petl is a great choice. This lightweight tool is easy to use and allows for memory-efficient data manipulation, making it a good fit for tasks involving structured data like CSV or Excel files. petl offers straightforward functions for filtering, sorting, and transforming data, so simpler ETL workflows don’t need a complex setup.

About the author

Dani is a data professional with a rich background in data engineering and real-time data platforms. At Estuary, Daniel focuses on promoting cutting-edge streaming solutions, helping to bridge the gap between technical innovation and developer adoption. With deep expertise in cloud-native and streaming technologies, Dani has successfully supported startups and enterprises in building robust data solutions.
