Given the ever-changing field of data management, companies are driven to investigate specialized databases in order to find the best answers to their changing needs.

The need for real-time analytics, similar searches, and personalized content distribution grows as businesses manage the complexity of contemporary applications. By combining Pinecone’s vector processing with PostgreSQL’s dependability in managing structured data, this integration should allow for more advanced data processing options. Moving your data from Postgres to Pinecone can help you with efficient vector search for applications such as document and image retrieval, accurate recommendation systems, machine learning models, and more.

This post explores the process of integrating Pinecone, a cloud-based vector database intended for high-dimensional vector data, with PostgreSQL, a reliable relational database.

Overview of PostgreSQL

PostgreSQL is an open-source object-relational database management system that builds upon the SQL language and incorporates numerous capabilities to securely store and handle even the most complex data demands. Because of its well-established design, dependability, data integrity, extensive feature set, and extensibility, PostgreSQL has gained a solid reputation.

Today, PostgreSQL is appropriate for a wide range of applications because it offers strong transactional support and sophisticated capabilities like built-in replication and concurrency control. However, standard relational databases are not up to the task of searching through large datasets for comparable things, particularly those requiring complex comparisons or intricate relationships.

Some essential characteristics of PostgreSQL are:

- Adaptability: With Postgres, users can design their own operators, functions, aggregates, and data types to cater to different application requirements.

- Adherence to ACID: PostgreSQL ensures the dependability and integrity of transactions, even during system failures, by adhering to the Atomicity, Consistency, Isolation, and Durability (ACID) principles.

- Types of Data: It supports several built-in data types such as a character, date and time, numeric, boolean, JSON, and more.

- Superior Indexing: Numerous indexing techniques, such as B-tree, hash, GIN (Generalised Inverted Index), and GiST (Generalized Search Tree), are available in PostgreSQL, enabling effective data retrieval and query optimization.

- Full Text Search: It provides strong support for full-text search, allowing users to conduct intricate text searches and sort results according to relevancy.

- Features of Security: PostgreSQL provides strong security features, including user roles and privileges, SSL certificates, and encryption choices to protect the confidentiality and integrity of data.

Introduction to Pinecone

Pinecone is a managed, cloud-native vector database with a straightforward API. It is designed to simplify the provision of long-term memory for high-performance AI applications and to efficiently handle high-dimensional vector data, allowing for lightning-fast search and retrieval of comparable items. It stands out in scenarios like recommendation systems and personalized content distribution through optimized storage and querying for embeddings.

Key features of Pinecone include:

- Analytics in Real Time: Pinecone is designed for real-time analytics on vector data, making it ideal for applications requiring immediate insights and answers.

- Search for Similarities: The database excels at similarity searches, allowing users to identify vectors that are similar to a given query vector.

- Cloud Computing Service: Pinecone is available as a cloud-based service, assisting you with scalability, dependability, and accessibility without requiring you to maintain the underlying infrastructure.

- Vector Operations API: Pinecone provides a strong vector operations API, making it user-friendly for developers and allowing for smooth integration into applications.

Best Ways to Integrate PostgreSQL to Pinecone

There are two approaches for integrating PostgreSQL with Pinecone, which are described here.

- Method 1: Using Estuary Flow to connect PostgreSQL to Pinecone

- Method 2: Manually connecting PostgreSQL to Pinecone

Method 1: Using Estuary Flow to Connect PostgreSQL to Pinecone

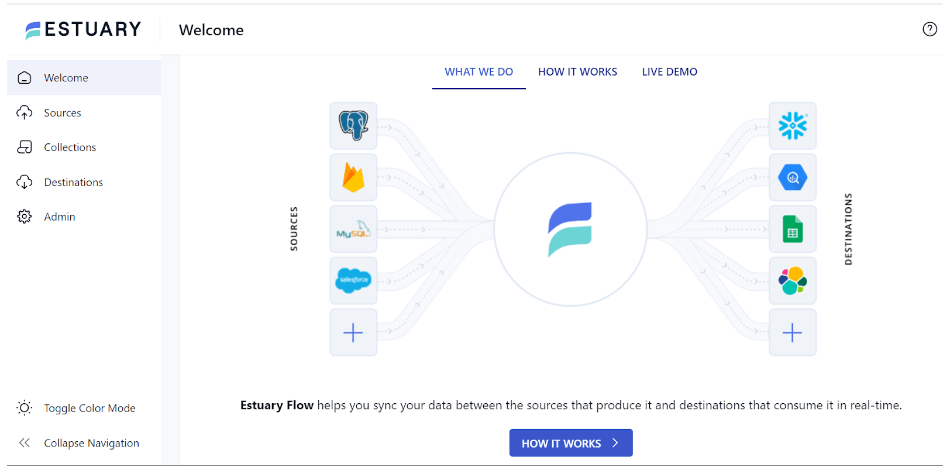

Estuary Flow is a cutting-edge data integration tool designed to streamline the process of connecting various data sources, such as PostgreSQL databases, to advanced analytics engines like Pinecone. With Flow, users can easily build data pipelines to seamlessly migrate data from PostgreSQL to Pinecone, eliminating the need for manual intervention and complex scripts. By leveraging Flow's intuitive user interface and powerful real-time capabilities, organizations can simplify their workflows and unlock the full potential of their data.

Here’s a step-by-step tutorial for connecting PostgreSQL with Pinecone utilizing Estuary Flow, a powerful, real-time extract, transform, load (ETL) tool.

Step 1: Configure PostgreSQL as the Source

- Visit Estuary’s official website and sign in with your existing credentials or create a new one.

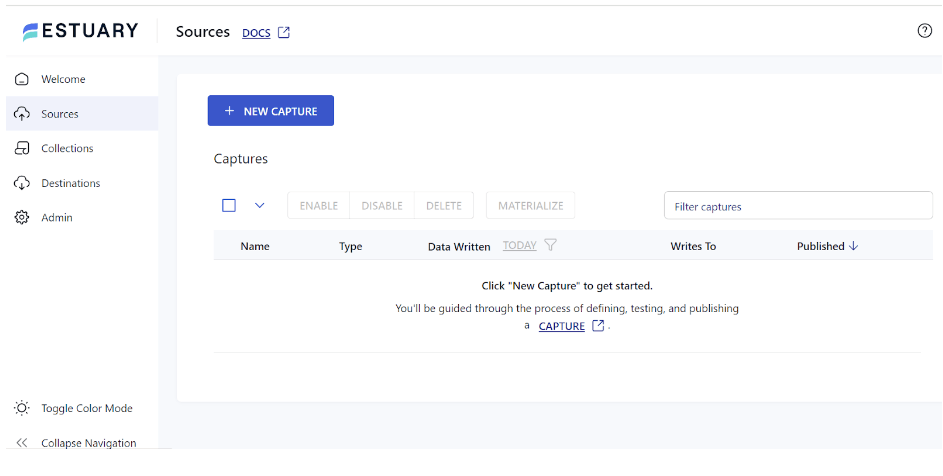

- Navigate to the main dashboard and select the Sources option from the left-side pane.

- On the Sources page, select the + NEW CAPTURE option in the upper left.

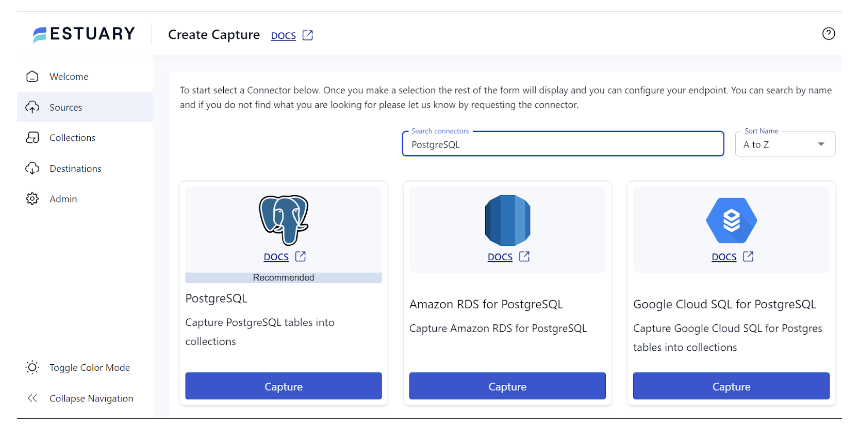

- To identify the connector, type PostgreSQL into the Search connectors box. When you find the PostgreSQL connector in the search, click the Capture button.

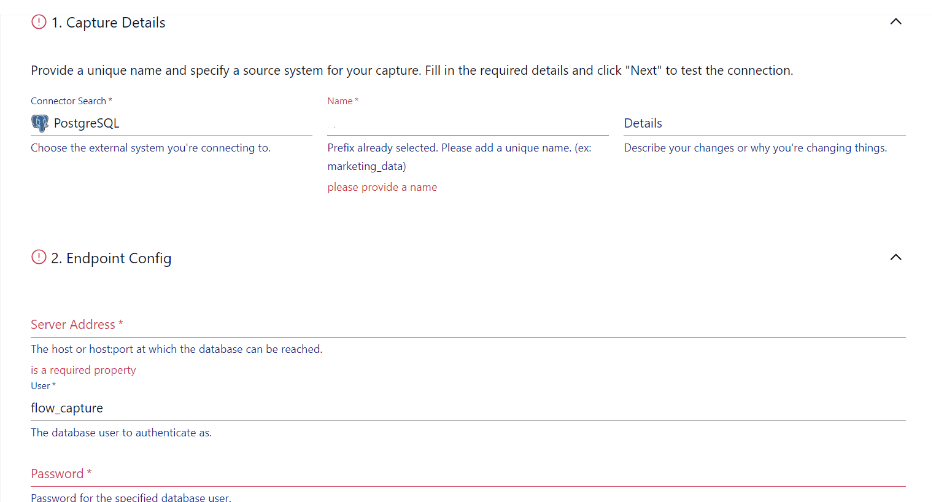

- On the Create Capture screen, provide Name, Server Address, Login Username, and Password.

- To finish configuring PostgreSQL as your source, click NEXT > SAVE AND PUBLISH.

Step 2: Configure Pinecone as the Destination

- After configuring the source, go to the left-side pane and look for the Destinations option.

- Locate and click the + NEW MATERIALIZATION button on the Destinations page.

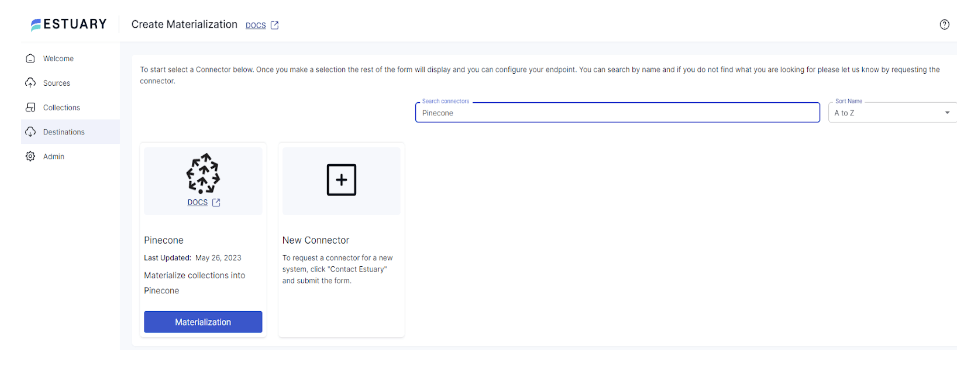

- Use the Search connectors search bar to find the Pinecone connector. When it displays in search results, click the Materialization option.

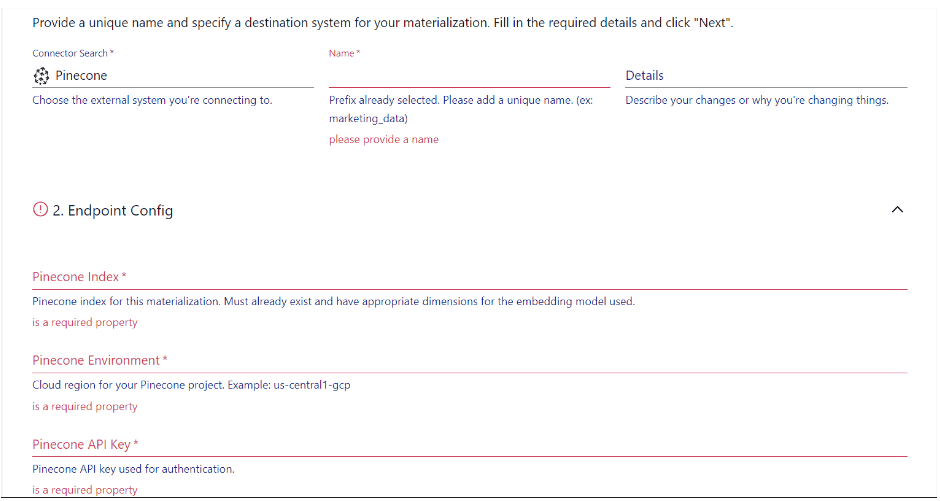

- You will be taken to the Create Materialization page. Fill in the required details, including Name, Pinecone Index, Pinecone Environment, and Pinecone API Key.

- Then, click on NEXT, followed by SAVE AND PUBLISH. This will complete the integration of PostgreSQL and Pinecone using Estuary Flow.

To get a better idea of how Estuary Flow works, check out the following documentation:

Integrating PostgreSQL to Pinecone Using Estuary Flow Offers Several Benefits:

Data Synchronisation: Pinecone and PostgreSQL can easily synchronize data thanks to Estuary Flow. This implies that Pinecone will automatically reflect any modifications or updates made to the PostgreSQL database without the need for human interaction.

Real-time Indexing: You can index data from PostgreSQL in Pinecone in real time by using Estuary Flow. This lets you keep your search indexing updated all the time, so you can always search for the most recent data.

Flexibility and Scalability: You will benefit from Estuary’s scalability and manage increased volumes of data without compromising performance or availability by merging the two databases.

Method 2: Manually Connecting PostgreSQL to Pinecone

There are several steps to integrating PostgreSQL with Pinecone. Here’s an outline of the procedure, along with sample code snippets:

Step 1: Install the essential dependencies:

- Install the ‘psycopg2’ library to interact with PostgreSQL.

- Install the Pinecone Python client library.

Step 2: Establish a connection to PostgreSQL

pythonimport psycopg2

conn = psycopg2.connect(

host= “your_host”,

dbname= “your_database”,

user= “your_user”,

password= “your_password”

)The code connects to a PostgreSQL database using the Python psycopg2 package. For authentication, you need to provide details like host, database name, username, and password.

Step 3: Fetch the data from PostgreSQL

pythoncursor =conn.cursor()

cursor.execute(“SELECT* FROM your_table;”)

records = cursor.fetchall()In the code above, a database connection is made, a SQL query is run to select all records from a table called “your_table”, and all of the records are then fetched and placed into the “records” variable.

Step 4: Transform the data into vectors using Pinecone

pythonfrom pinecone import Index

Index = Index(index_name= “your_index_name”)

For record in records:

vector = transform_to_vector(record)

index.upsert(ids=[record.id],vectors=[vector])The code first creates an index with Pinecone, then upserts records by converting them into vectors linked to their IDs in the index.

Step 5: Perform searches with Pinecone

pythonquery_vector = transform_to_vector(query)

result = index.query(queries=[query_vector], top_k=10)

for hit in result.hits:

print(hit)By converting a query into a vector representation, the code queries a certain index using the vector to obtain the top 10 hits, and then the output is generated.

Step 6: Terminate the connection to PostgreSQL

pythonconn.close()You can modify the above code depending on your individual configuration and requirements.

Limitations of Integrating PostgreSQL to Pinecone Manually

- Data synchronization: Manually synchronizing data between PostgreSQL and Pinecone can be time-consuming and inefficient. You would be required to build several procedures that involve writing custom scripts, queries, or workflows to ensure data consistency between the two systems.

- Real-time updates: If you require continuous changes to your search index, directly integrating PostgreSQL with Pinecone may not be the most effective solution. Keeping the search index in sync with the database in real-time can be difficult without additional technology.

- Performance: Pre-built connectors offer optimization and scalability that manual integrations sometimes lack. Performance bottlenecks may result from this, particularly as your data volume increases.

- Complex searches: Manually connecting PostgreSQL with Pinecone may require significant work to ensure you can effectively do complex queries such as full-text search or advanced filtering. While PostgreSQL has significant querying capabilities, implementing them effectively in a search engine requires complex design and customization.

- Maintenance and operational overhead: Manually connecting Postgres to Pinecone requires managing and maintaining continuously. This includes database optimization, backups, monitoring data pipelines, and upgrades, which might add to operational overhead.

To address these constraints, consider adopting a dedicated data integration platform such as Estuary Flow to connect PostgreSQL with Pinecone or look into other data storage choices that better meet the needs of your search use case.

Get Integrated

The integration of PostgreSQL with Pinecone is not just a simple database change. It is a deliberate step towards improved real-time analytics, similar searches, and the effective management of high-dimensional vector data. Using the best features of both platforms, this integration will enable businesses to operate efficiently around analytics requirements.

When manually moving data from PostgreSQL to Pinecone, thorough attention to data formats, vectorization strategies, and application code adjustments is required.

We recommend exploring the integration process with SaaS tools like Estuary Flow for a seamless and effective switch from PostgreSQL to Pinecone.

Register to get integrated with Flow or contact our team for more information.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles