Both PostgreSQL (referred to as Postgres) and Aurora are popular relational database management systems (RDBMS) known for their performance and reliability.

However, as your data needs grow, you might need to migrate Postgres to Aurora for better scalability, availability, cost-efficiency and overall performance.

If you're considering making the switch, this comprehensive guide will walk you through three proven methods for migrating your PostgreSQL data to Amazon Aurora.

PostgreSQL Overview

PostgreSQL is a powerful, open-source RDBMS built in 1986 at UC Berkeley. It can store and manage structured data in tabular format. Postgres can also handle semi-structured data like JSON and XML documents. Postgres also supports storing, indexing, and querying geospatial data with powerful add-ons like PostGIS geospatial database extender. Therefore, it has become widely popular for location-based applications.

Amazon Aurora Overview

Aurora is a fully organized relational database engine on the Amazon Relational Database Service (Amazon RDS). It includes a high-performing storage subsystem and offers simplicity and cost-effectiveness over traditional RDBMS.

Aurora offers a highly scalable database solution that scales the storage as required up to a maximum size of 128 tebibytes (TiB). This helps organizations optimize cost efficiencies and avoid overspending when less storage is required.

When to Consider Migrating from PostgreSQL to Amazon Aurora?

- High Performance Needs: If your PostgreSQL database is struggling to keep up with increasing workloads or demanding applications, Aurora's highly scalable architecture can deliver up to 5x the throughput.

- Scalability Requirements: Aurora automatically scales storage and compute resources as needed, accommodating rapid growth without manual intervention.

- High Availability Concerns: Aurora provides built-in fault tolerance and self-healing capabilities, minimizing downtime and ensuring data durability.

- Cost Optimization: Aurora's Serverless option can automatically scale capacity up or down based on demand, potentially reducing costs compared to provisioning for peak usage.

- Simplified Management: Aurora is a fully managed service, offloading tasks like backups, patching, and upgrades to AWS, freeing up your team to focus on other priorities.

3 Ways to Migrate Postgres to Aurora

Let’s look at three reliable ways you can use to transfer your data from PostgreSQL to Amazon Aurora:

Method 1: Using a No-Code ETL Tool to Migrate Postgres to Aurora

Method 2: Using Publisher and Subscriber to Migrate Postgres to Aurora

Method 3: Using pg_dump and pg_restore to Migrate Postgres to Aurora

Method 1: Using a No-Code ETL Tool to Migrate Postgres to Aurora

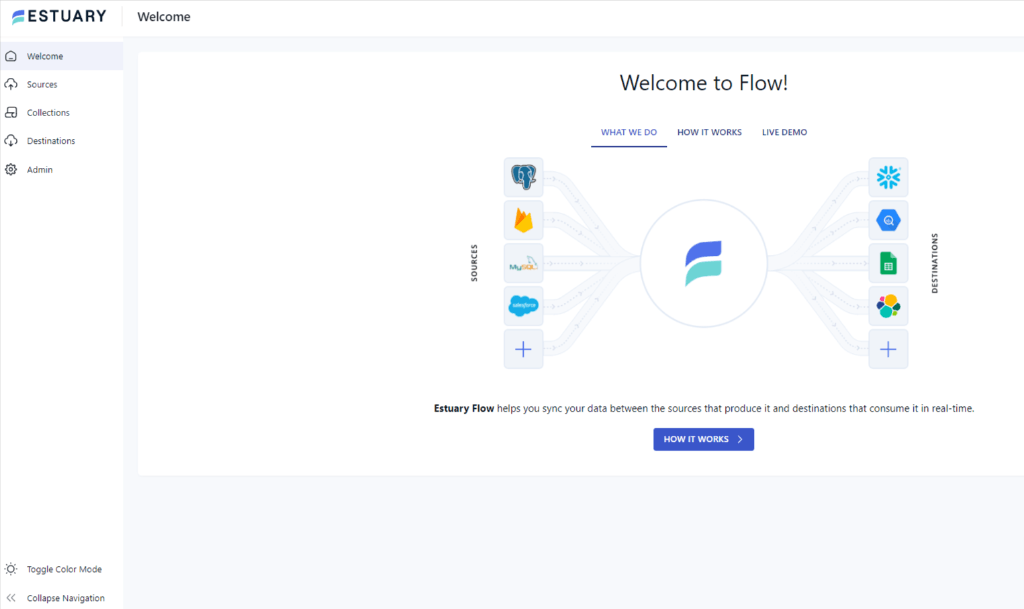

No-code ETL (extract, transform, load) and data integration tools provide efficient data migration from PostgreSQL to AWS Aurora with automated data migration and require little to no technical expertise. One such effective data integration platform is Estuary Flow.

Estuary Flow is a SaaS tool that guarantees decreased latency during data migration by using real-time streaming pipelines. It supports real-time data processing applications, especially for fluctuating data volumes and demanding performance needs.

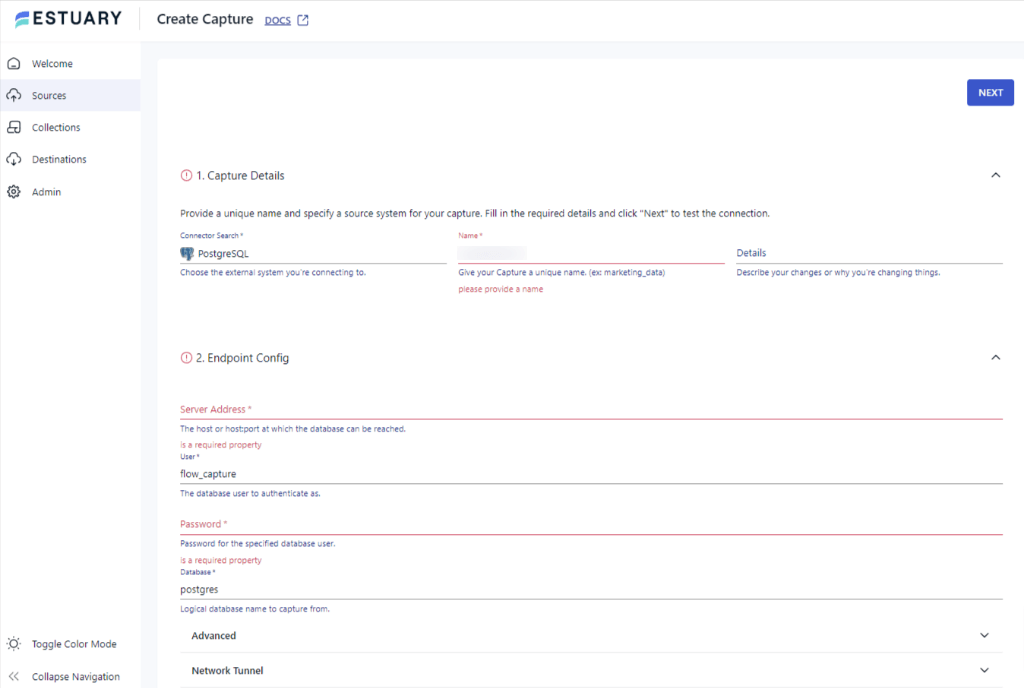

Step 1: Set Up Postgres as the Source Connector

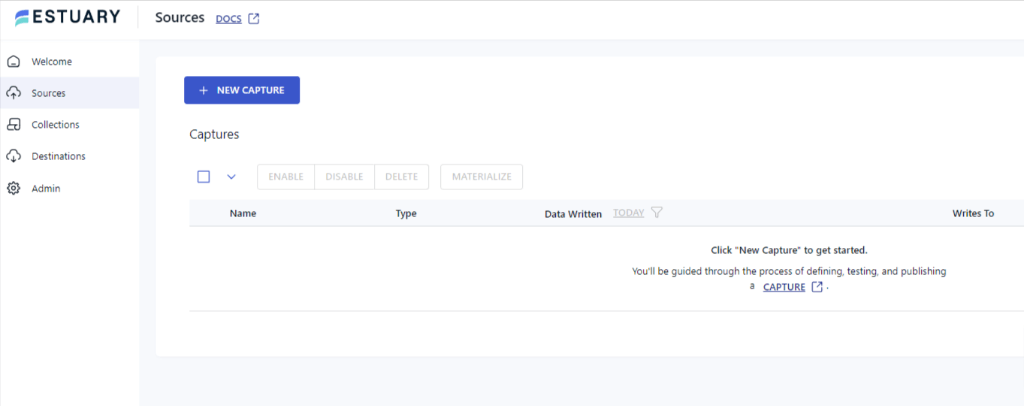

- To migrate Postgres to Aurora, sign in to your Estuary Flow account or create a free one.

- After logging in, click on Sources on the dashboard’s left pane.

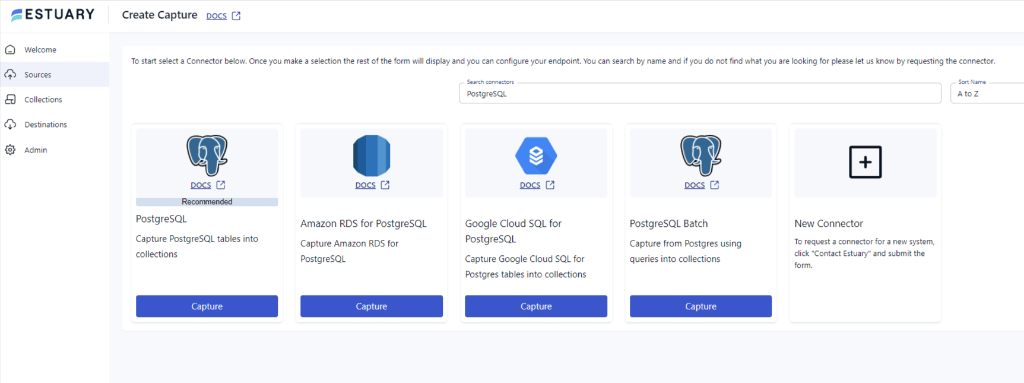

- On the Sources page, click on + NEW CAPTURE and search for PostgreSQL in the Search connectors box.

- Click on the Capture button once you see the PostgreSQL connector.

- On the Create Capture page, enter the required details like Name, Server Address, Username, Password, and Database information.

- After filling in all the details, click on NEXT > SAVE AND PUBLISH.

This will capture the data from PostgreSQL to Estuary Flow Collections.

Step 2: Set Up Amazon Aurora as the Destination

After successfully capturing PostgreSQL, a pop-up window with capture details will appear. Click on MATERIALIZE CONNECTIONS in the pop-up window to set up the pipeline's destination.

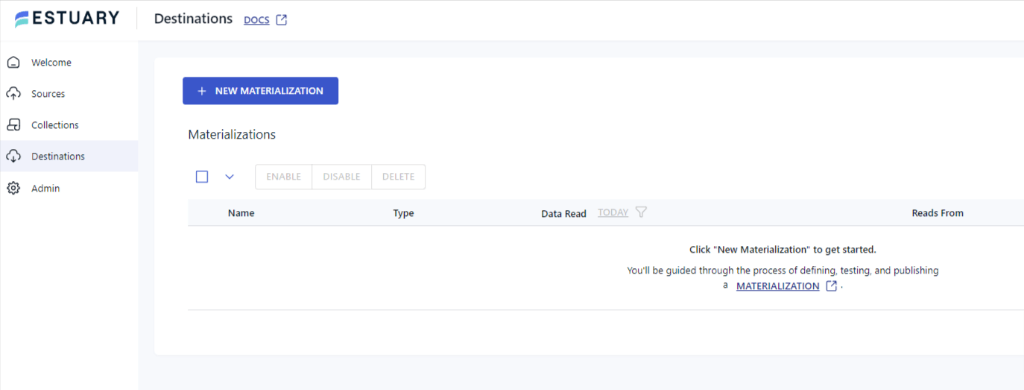

Another way to configure the source is by clicking on the Destinations option on the left-side pane of the dashboard.

- Once redirected to the Destinations page, click on the + NEW MATERIALIZATION

button.

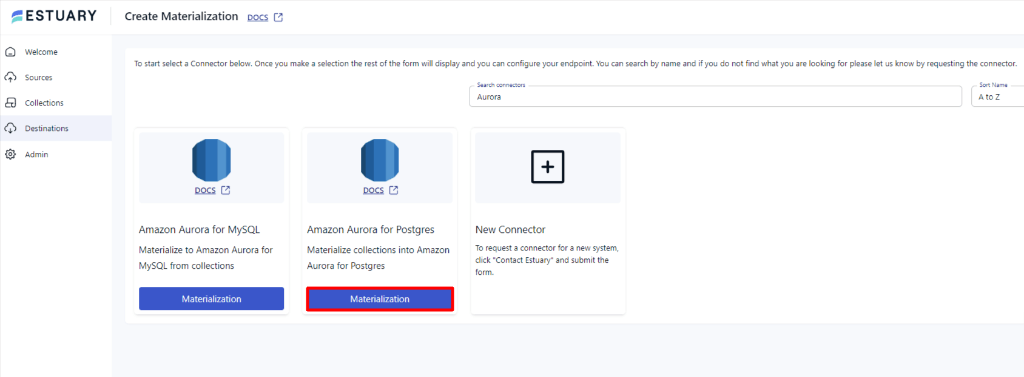

- Search for Aurora in the Search connectors on the Create Materialization page.

- Select Amazon Aurora for Postgres by clicking on the Materialization button.

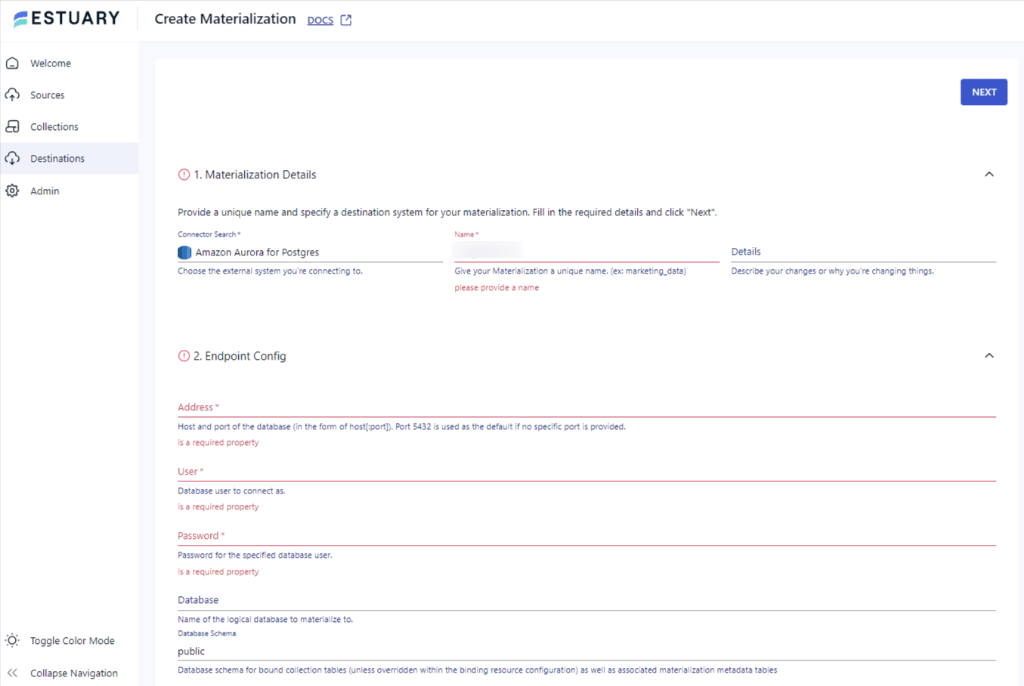

- Enter the required Materialization Details and Endpoint Config details, such as Name, Address, User, Password, and Database information.

- Then click on NEXT > SAVE AND PUBLISH to finish the migration process.

Benefits of Using No-Code ETL Tool to Migrate Postgres to Aurora

- Scalability: With a fully managed enterprise-grade system, Estuary Flow supports flows of up to 7 GB/s+.

- Built-in Connectors: Estuary Flow offers simplified data migration using 200+ built-in connectors, reducing errors during the data migration.

- Automation: Estuary Flow automates the entire migration process, from extraction to loading, requiring only a few clicks.

Estuary Flow offers a range of pricing plans to suit different needs, including a free tier. For detailed pricing information and to explore their flexible options, visit pricing page.

Method 2: Using Publisher and Subscriber to Migrate Postgres to Aurora

You can use logical replication models such as Publisher and Subscriber to migrate Postgres to Aurora. In the pub/sub model, subscribers subscribe to one or more publications on a Publisher node.

The following guide shows how to migrate all tables from a public schema.

Step 1: Configuring the Source Database

- Edit the postgresql.conf file in the source database and add the below parameters.

pythonwal_level = 'logical'

max_replication_slots = 10

max_wal_senders = 10 pythonRestart the source instance to apply these parameters and use the following command to ensure the parameters are configured correctly in your database.

psql -h <hostname> -p 5432 -U <username> -d <database_name>

-c "select name, setting from pg_settings where name in ('wal_level','max_worker_processes','max_replication_slots','max_wal_senders','shared_preload_libraries');"- Enter the name of the source server at <hostname> and the name of the user present on the server at <username>. Enter the name of the database present on the server.

Step 2: Setting up the Logical Replication

- Create the publication on your source database server using the following command.

pythonCREATE PUBLICATION my_publication FOR ALL TABLES;- You can only specify the tables that you want to publish and limit the changes that will be published. Now, you can create a database on Amazon Aurora using the following command.

pythonpsql -h <hostname> -p 5432 -U <username> -d -c "create database migrated_database;"- Create the subscription on your target database using the following command.

pythonCREATE SUBSCRIPTION <subscription_name>

CONNECTION 'host=<host> port=<port_number> dbname=<database_name> user=<username> password=<password>' PUBLICATION <publication_name> WITH copy_data=true;Step 3: Capture Publisher and Subscriber Values and DDrop Artifacts

- Check the replication status of your database by running the following command.

pythonSELECT * FROM pg_stat_replication;- Stop the replication process once the data syncs between the source and the target using the following command.

pythonALTER SUBSCRIPTION <subscription_name> DISABLE;- Capture the slot name created on the target database by running the following command.

pythonselect subslotname from pg_subscription where subname like 'subsription_name';- Run the following command to fetch the confirmed_flush_lsn from the replication slot.

pythonSELECT slot_name, confirmed_flush_lsn from pg_replication_slots where slot_name like 'replication_slot_name'; - Finally, drop the subscription and publication artifacts using the following commands.

pythonDROP SUBSCRIPTION <subscription_name>;

DROP PUBLICATION <publication_name>;Limitations of Using Publisher and Subscriber to Migrate Postgres to Aurora

- Metadata Migration: Migrating the database’s metadata is not supported by the Publisher and Subscriber model.

- Version Compatibility: Publisher and Subscriber model is only compatible with PostgreSQL database version 10.x and lower.

- Schema Replication: This model doesn’t support the replication of schema, DDL, and sequences, which can result in loss of information.

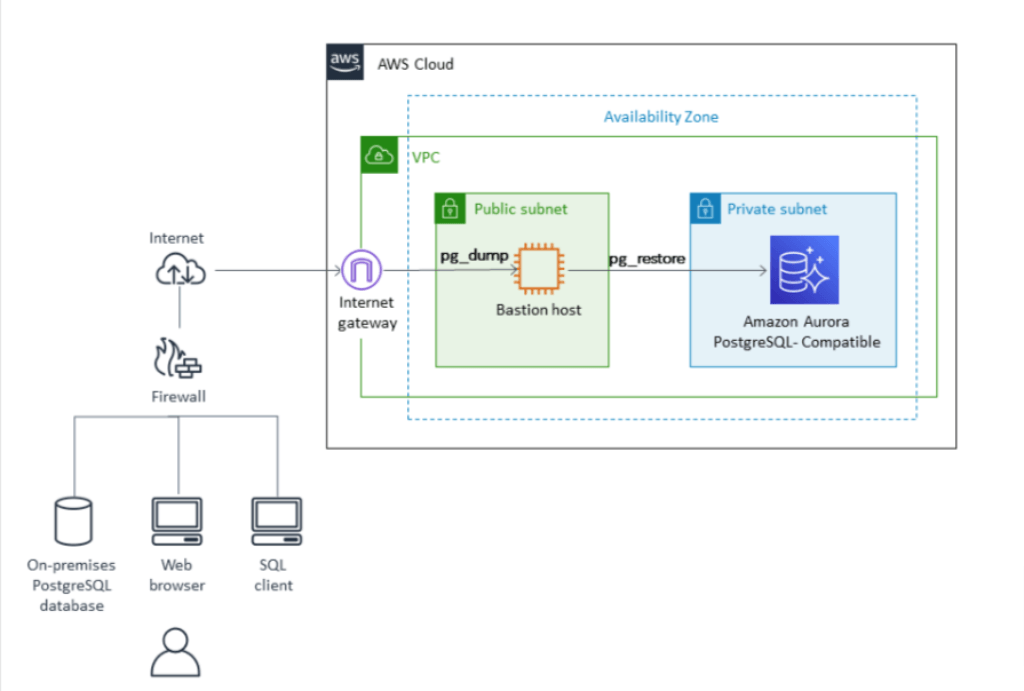

Method 3: Using pg_dump and pg_restore to Migrate Postgres to Aurora

You can use PostgreSQL native utilities like pg_dump and pg_restore to migrate data from a self-managed PostgreSQL database to Amazon Aurora. These utilities create a set of SQL commands to reproduce the original database.

Prerequisites:

Step 1: Export Data to Dump Files

- You can create dump files for your source database using the following command in the PostgreSQL terminal.

pythonpg_dump -h <hostname> -p 5432 -U <username> -Fc -b -v -f <dumpfilelocation.sql> -d <database_name>Here, hostname refers to the name of your self-managed Postgres server, and username refers to the name of the user present on the source server. Enter the dump file path at <dumpfilelocation.sql>.

Step 2: Create a DB on Your Target Instance

- Login to your database server using the following command.

pythonpsql -h <hostname> -p 5432 -U <username> -d <database_name>- Enter the name of the Aurora server you want to migrate your database to at <hostname> and the name of the user present on the target server at <username>. Enter the name of the database present on the target at <database_name>

- Create the database using the following command.

pythoncreate database migrated_database;Step 3: Import the Dump Files to Aurora

- Use the following command to import the dump files to Amazon Aurora.

pythonpg_restore -v -h <hostname> -U <username> -d <database_name> -j 2 <dumpfileloacation.sql>- Enter the name of the Amazon Aurora server at <hostname> and the name of the database you created in Step 2 at <database_name>.

Limitations of Using pg_dump and pg_restore to Migrate Postgres to Aurora

- DB Size Restriction: pg_dump and pg_restore only support database migration up to 100 GB; any database larger than 100 GB will require splitting into smaller files.

- Downtime: The downtime is higher in the pg_dump and pg_restore methods compared to any other method.

- Technical Expertise: Using this method to migrate Postgres to Aurora requires a strong understanding of PostgreSQL native utilities and Amazon Web Services.

The Takeaway

Seamless migration from PostgreSQL to Amazon Aurora can be achieved with three different methods. The right migration method for you depends on several factors, including your technical expertise, database size, and tolerance for downtime.

- No-Code ETL (Estuary Flow): By using Estuary Flow, you can achieve Postgres and Aurora integration with just a few clicks. It's also a great choice if you need real-time data streaming or have large databases that can't be easily dumped.

- Logical Replication (Publisher/Subscriber): You can use manual methods such as Logical Replication. However, this manual methods are more time-consuming, require technical expertise, and have compatibility issues with the RDBMS version.

- pg_dump/pg_restore: This manual method is a reliable option for smaller databases or when budget constraints are a major factor. However, it requires technical expertise and can lead to longer downtime during the data transfer.

These methods are reliable and will help you to migrate your data, but there are limitations that are important to consider when comparing the options to transfer data to Aurora from Postgres. Ultimately, the best approach will be the one that aligns with your specific needs and resources.

Interested in other PostgreSQL migrations? Explore our guides on:

Ready to elevate your database experience? Sign up for Estuary Flow today to migrate data seamlessly across multiple platforms. You can also migrate Postgres RDS to Aurora and many more with the 200+ inbuilt connectors of Flow.

Start your free trial of Estuary Flow and experience hassle-free data transfer today!

FAQs

What is the difference between Postgres and Aurora Postgres?

A difference between PostgreSQL and Aurora PostgreSQL is in cost and management complexity. Aurora PostgreSQL charges for compute and storage, with costs scaling linearly. The overall ownership cost is lower due to reduced operational overhead. However, PostgreSQL involves lower initial costs with pay-per-use instance pricing; it requires dedicated personnel to manage backups and replication, increasing long-term operational costs.

Is Amazon Aurora compatible with PostgreSQL?

Yes, Amazon Aurora is fully compatible with PostgreSQL. It allows existing applications and tools built for PostgreSQL to run without any modifications. Aurora also offers significant performance and scalability improvements over standard PostgreSQL, with additional benefits like automatic failover and fault tolerance.

Is Aurora faster than RDS?

Aurora is generally a good choice for high-performance workloads requiring high throughput and low latency. It can be up to 3x faster than PostgreSQL and 5x faster than MySQL. While RDS can also be used for high-performance workloads, it isn’t as fast as Aurora.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles