With recent technological advancements, there has been an exponential rise in the generated volumes of data through apps, interactions, and digital transactions. Organizations are constantly looking for a scalable, secure, and flexible solution to store, process, and analyze this vast information.

From the time of its introduction, the Oracle Database has remained a popular choice for organizational data storage and management. This enterprise database has impressive capabilities to manage large volumes of complex data. However, Oracle’s associated licensing costs and proprietary nature can present financial challenges

Moving from Oracle to PostgreSQL could be just the answer to these challenges. The extensibility, scalability, and open-source nature make Postgres a suitable option for high-performance software applications.

This guide explores the key features of both databases and provides step-by-step instructions to simplify your Oracle to PostgreSQL migration.

An Overview of Oracle

Oracle Database is a relational database management system that allows the storage and querying of relational data. It is a high-performing data system well-suited for OLTP (Online Transaction Processing) workloads, data warehousing operations, and advanced analytics.

The Oracle database has a client/server architecture with database server and client connections running in separate processes. While the server helps in data and resource management, the client connections interact with servers to access and manipulate data.

Oracle also supports Change Data Capture (CDC), a method for tracking and capturing changes in data for replication purposes, making it ideal for situations that require continuous data synchronization. To learn more about this, check out our Oracle CDC guide

A database and database instance are essential components of the Oracle database server. The database comprises a set of files located on a disk that contains user data. After the launch of Oracle Database 21c, ‘database’ implies data files of multitenant container database (CDB) architecture. This can host one or more pluggable databases (PDB) and an optional application container.

On the other hand, an instance is a set of memory structures that enable you to manage database files. It consists of a shared memory area known as a system global area (SGA) and a set of background processes. An instance can function independently of database files.

To accommodate increasing data volumes, you can scale the Oracle Database horizontally using sharding and partitioning techniques. These methods enable you to distribute the additional data load across multiple servers or nodes. As a result, Oracle facilitates the effective management of large datasets and can be used in software applications that utilize big data.

An Overview of PostgreSQL

PostgreSQL is an open-source object-relational database system known for its high performance and advanced features. You can use it to store and query different data types, such as numeric, boolean, date/time, and JSON. This makes it a versatile option for varied data storage needs.

Postgres supports several SQL functions and operators, including aggregate operators, for effective querying of the data stored in its tables. The database also allows you to retrieve data faster using various indexing methods such as B-Tree, hash, GiST, SP-GiST, GIN, and BRIN.

Here are some important features of PostgreSQL:

- Extensibility: You can expand the functionality of PostgreSQL with the help of extensions. It supports various extensions such as PostGIS, which helps you manage spatial data, and pg_stat_, which enables tracking of query statistics.

- Concurrency Control: PostgreSQL supports a Multiversion Concurrency Control (MVCC) model. This model provides a snapshot of the database at the beginning of the transaction in case of concurrent operations. This facilitates the simultaneous usage of Postgres by multiple users without affecting data integrity.

- ACID-Compliance: PostgreSQL is ACID (Atomicity, Consistency, Isolation, Durability)-compliant, ensuring data integrity and reliable transaction processing in case of system failures. This makes Postgres an optimal choice in critical sectors such as healthcare, finance, and telecommunications.

- Robust Security Mechanism: You can ensure data security in Postgres through security features, such as encryption and role-based access control. It also supports authentication mechanisms, including trust, password, and GSSAPI authentication.

While PostgreSQL offers flexibility and cost efficiency, some organizations consider other options like migrating from Oracle to Snowflake, especially if they need optimized cloud-based data warehousing solutions.

Why Should You Migrate from Oracle to PostgreSQL?

Migrating data from Oracle to PostgreSQL can be helpful for organizations that prioritize cost efficiency, ease of use, and better flexibility.

Here are some reasons why you should make the move from Oracle to PostgreSQL:

- Flexibility: Oracle is a proprietary database and is less flexible in terms of customizability. While you can use Oracle’s extensions to expand its capabilities, they are expensive.

On the other hand, PostgreSQL is open-source, and you can modify its source code. The wide array of extensions in Postgres allows you to expand its functionality considerably. This makes Postgres more flexible than Oracle.

- Cost Efficiency: Oracle's licensing fees can be expensive and vary according to features and deployment options.

Contrarily, PostgreSQL is open source, involves no licensing costs, and can be used freely for commercial and individual purposes.

- Multi-cloud Compatibility: You can deploy Oracle databases on cloud platforms, such as AWS, Azure, or GCP. However, deploying them on external cloud platforms is complex and expensive, mainly due to licensing, the need for specific configurations, and higher operational management overhead.

PostgreSQL offers multi-cloud support with greater flexibility. As an open-source database, you can easily deploy it on several cloud provider platforms, including AWS, GCP, and Azure. You need not worry about any licensing constraints as with Oracle.

How to Migrate Data From Oracle to PostgreSQL

- The Automated Method: Oracle to PostgreSQL Migration Using Estuary Flow

- The Manual Method: Using Manual Methods to Transfer Data from Oracle to PostgreSQL

- Using Foreign Data Wrapper to Load Data from Oracle to PostgreSQL

- Using CSV Export/Import to Move Data from Oracle to PostgreSQL

Method 1: Oracle to PostgreSQL Migration Using Estuary Flow

Using automated data transfer tools is an effective way to minimize errors and reduce downtime while building data pipelines. Estuary Flow, a real-time ETL solution, is one such platform that helps you simplify data migration with automation. It offers an extensive library of 200+ pre-built connectors that enable you to collect and consolidate data from various sources to your desired destination system.

Here are some key features of Estuary Flow:

- Change Data Capture (CDC): Estuary Flow supports CDC for real-time data synchronization. It facilitates an end-to-end latency of less than 100 milliseconds between capturing source data changes and reflecting them at the destination. Estuary facilitates the streaming of transaction logs and incremental backfill to deliver the changes to the destination system.

- Private Deployments: You can deploy Estuary Flow in your private network in order to make sure your data never leaves your control. Flow supports multiple deployment modes such as BYOC (Bring Your Own Cloud) and fully Private Deplyoments in order to conform to any enterprise environment.

- Efficient Transformations: Estuary Flow supports transformations, termed derivations. You can use SQLite or TypeScript for streaming and batch pipelines. Whether you want to aggregate data from many documents, unpack an array nested inside, or add calculations to certain documents, Flow derivations can help out.

- No-code Configuration: Estuary enables you to easily integrate data between various sources and destinations. Anybody in your organization, with or without detailed technical knowledge, can start moving data effortlessly with Estuary Flow.

Let’s look into the details of how you can use Estuary Flow to move your data from Oracle to PostgreSQL.

Prerequisites

- An Estuary Flow account.

- OracleDB 11g or above.

- Enable connections from Estuary Flow to the Oracle database.

- Create a read-only Estuary Flow user with access to all tables required for replication.

- A PostgreSQL account with database and user credentials.

- At least one Flow collection.

Step 1: Set Up Oracle Database as Source

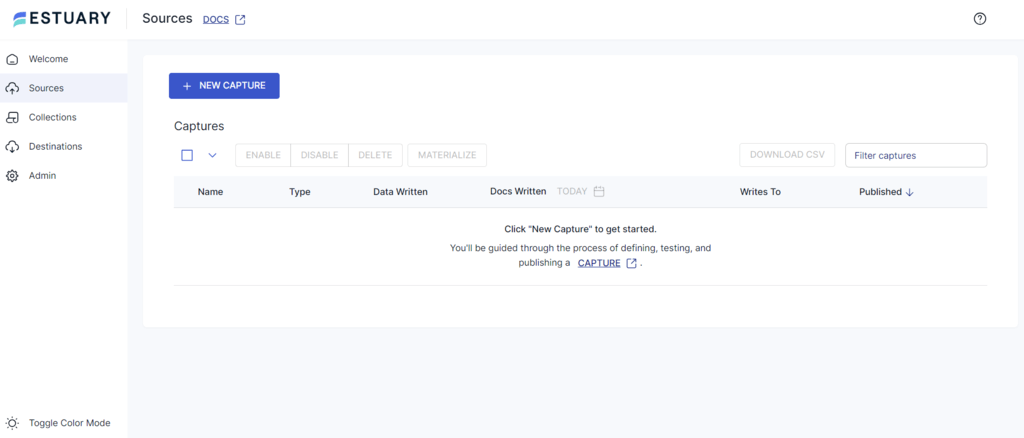

- Sign in to your Estuary Flow account.

- Select Sources from the left-side pane of the dashboard. You will be redirected to the Sources page.

- Click the + NEW CAPTURE button to proceed to the Create Capture page.

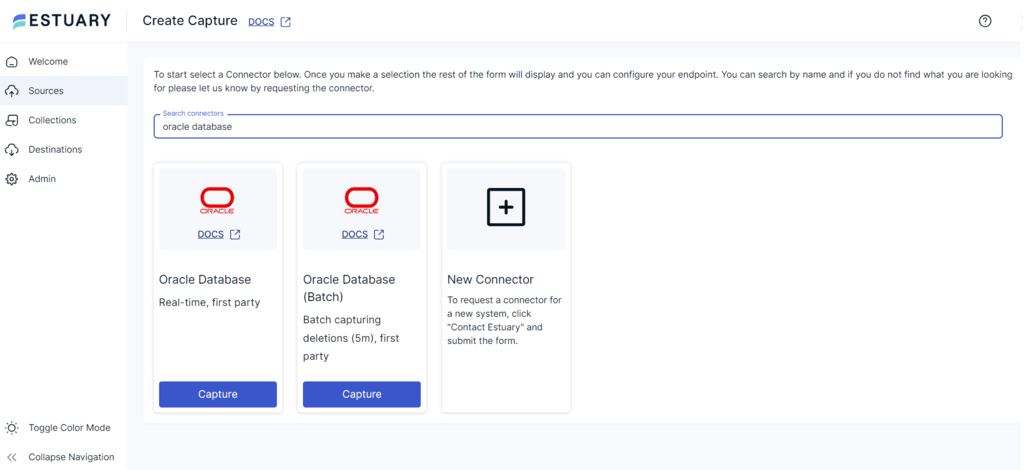

- Use the Search connectors field to find the connector for Oracle Database.

- You will see two Oracle Database connector options: Real-time and Batch. Select the one that is suitable for your requirements and click the connector’s Capture button.

Let’s proceed with the Oracle real-time connector for this tutorial.

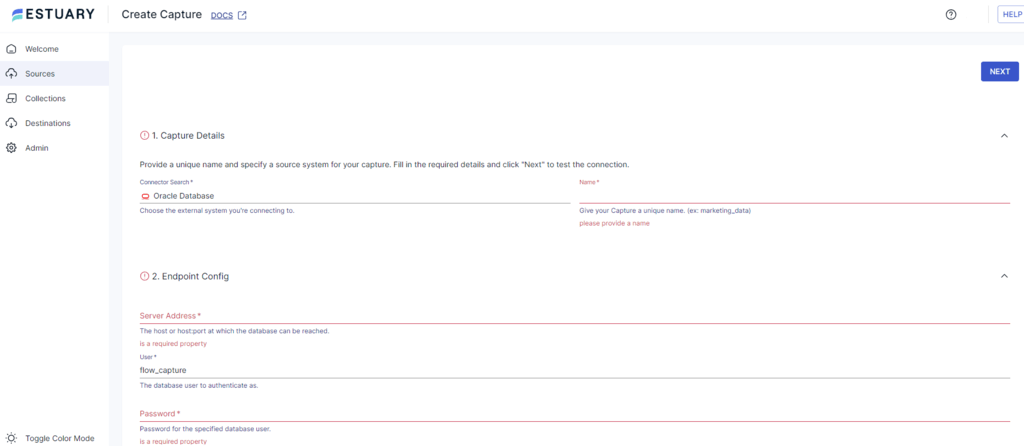

- You will be redirected to the connector’s configuration page. Enter all the required fields, including:

- Name: Provide a unique capture name.

- Server Address: This is the host:port at which you can connect to your database.

- User: Enter your Oracle database user name for authentication.

- Password: Provide the password for the specified database user.

- After entering all the details, click NEXT and then SAVE AND PUBLISH.

This completes your source connector configuration. The connector will capture your OracleDB data into a Flow collection.

Step 2: Set Up PostgreSQL as a Destination

- To set up PostgreSQL as a destination, click the MATERIALIZE COLLECTIONS button on the pop-up that follows a successful capture.

Alternatively, navigate to the dashboard and click Destinations > + NEW MATERIALIZATION.

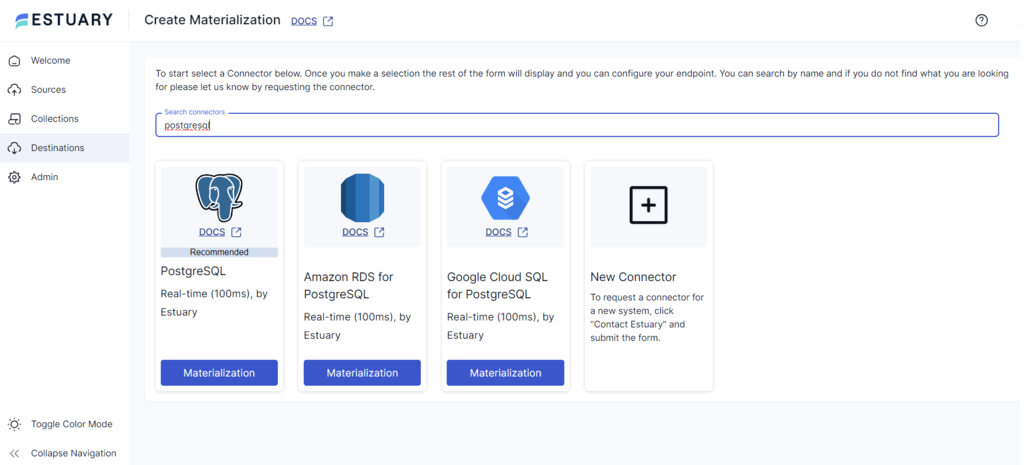

- Search for the PostgreSQL connector using the Search connectors field.

- Click the Materialization button of the PostgreSQL connector in the search results.

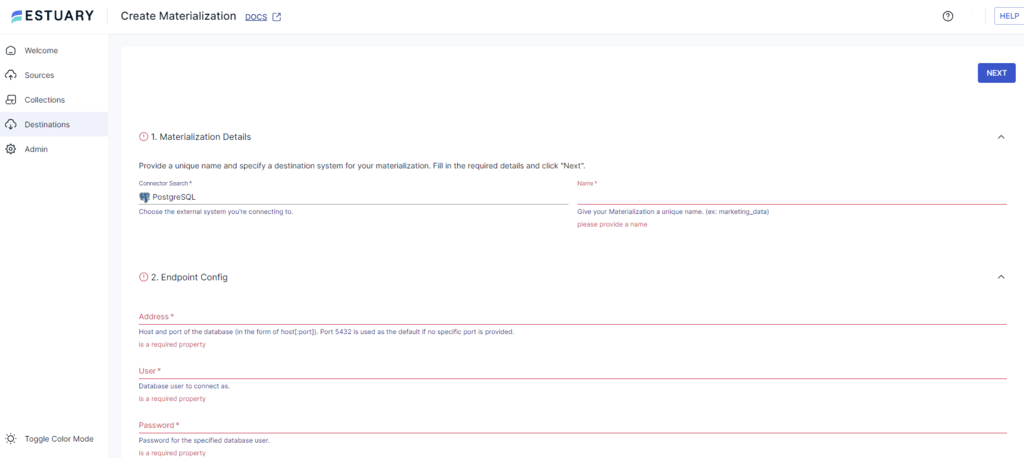

- On the Create Materialization page, enter all the necessary details, including:

- Name: Give your materialization a unique name

- Address: Enter the host and port of the database. Port 5432 is used by default if you don’t provide a specific port.

- User: Provide the database user you want to connect as.

- Password: Mention the password for the specified database user.

- Check if your data captured from the Oracle Database is added to your materialization. If not, you can click the SOURCE FROM CAPTURE button in the Source Collections section to link the capture to your materialization.

- Click on NEXT > SAVE AND PUBLISH to complete the destination connector configuration.

This connector will materialize the Flow collections of your Oracle data into PostgreSQL tables.

Ready to simplify your Oracle to PostgreSQL migration? Get started with Estuary Flow today, and also join the Slack community. It’s the easiest way to get support!

Method 2: Oracle to PostgreSQL Migration Using Foreign Data Wrappers

A Foreign Data Wrapper (FDW) in PostgreSQL is a component that enables you to integrate and query data from external sources, such as Oracle databases. Oracle_fdw is a PostgreSQL extension that supports FDW functionality, allowing you to access data from Oracle databases. It allows you to access and modify data stored in Oracle databases as if it were a native PostgreSQL table.

Here are the steps to execute this data transfer:

- Log in to your PostgreSQL account.

- Ensure the Oracle client is installed and properly configured on your system.

- Connect to Oracle using SQL*Plus with the following command.

plaintextsqlplus orauser/orapwd@//dbserver.mydomain.com:1521/ORADBConsider an example table in Oracle you’re looking to access:

plaintextSQL> DESCRIBE oratab

Name Null? Type

------------------------------- -------- ------------

ID NOT NULL NUMBER(5)

TEXT VARCHAR2(30)

FLOATING NOT NULL NUMBER(7,2)- Create and configure the oracle_fdw extension and define the Oracle server to which you want to connect.

plaintextpgdb=# CREATE EXTENSION oracle_fdw;

pgdb=# CREATE SERVER oradb FOREIGN DATA WRAPPER oracle_fdw

OPTIONS (dbserver '//dbserver.mydomain.com:1521/ORADB');- oradb: Name of the Oracle server.

- dbserver: Oracle database connection string for a remote database.

- Next, allow the Postgres user, pguser, to access the Oracle server.

plaintextpgdb=# GRANT USAGE ON FOREIGN SERVER oradb TO pguser;- Following this, create a user mapping to connect pguser in PostgreSQL to orauser in Oracle.

plaintextpgdb=> CREATE USER MAPPING FOR pguser SERVER oradb

OPTIONS (user 'orauser', password 'orapwd');- orauser: Oracle user name

- orapwd: Oracle password

- Finally, create a foreign table in Postgres that links to the Oracle table (oratab) using the following command:

plaintextpgdb=> CREATE FOREIGN TABLE oratab (

id integer OPTIONS (key 'true') NOT NULL,

text character varying(30),

floating double precision NOT NULL

) SERVER oradb OPTIONS (schema 'ORAUSER', table 'ORATAB');You can now query this data from the oratab table in Postgres, as if it were a local table.

Limitations of Using Foreign Data Wrappers for Oracle to Postgres Migration

- Data Security: The use of foreign data wrappers without proper security mechanisms can expose external data sources, such as Oracle databases. This can lead to increased risks of unauthorized access and data breaches.

- Limited Functionality: Foreign data wrappers may not be suitable for complex querying. This is mainly because Postgres can fail to send complex queries or operations dealing with large datasets efficiently to the Oracle database.

- Complexity: Installing and managing the performance of foreign data wrappers can be complex. This can reduce the efficiency of the data migration process, contributing to latency. You can train your current employees or hire an expert for this, but it can be expensive.

Method 3: Oracle to PostgreSQL Migration Using CSV Method

An alternative method for loading data from Oracle to PostgreSQL is through CSV files. You can extract data from the Oracle database as a CSV and transfer the data in this file to PostgreSQL.

Here are the steps for this migration:

Step 1: Export Data from Oracle as CSV

There are two ways to export data from Oracle to CSV as follows:

Method 1: Export Data from Oracle as CSV Using Oracle SQL Developer

Here are the steps to export your Oracle data in CSV format using SQL Developer, a command line interface supported by Oracle.

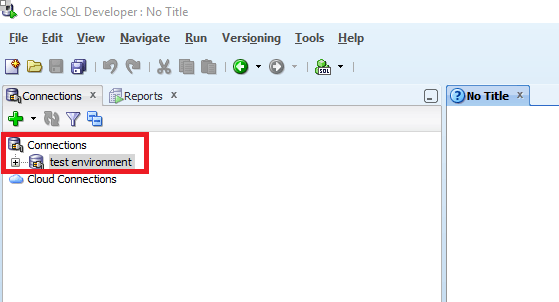

- Log in to Oracle SQL Developer and connect to the database that you want to export.

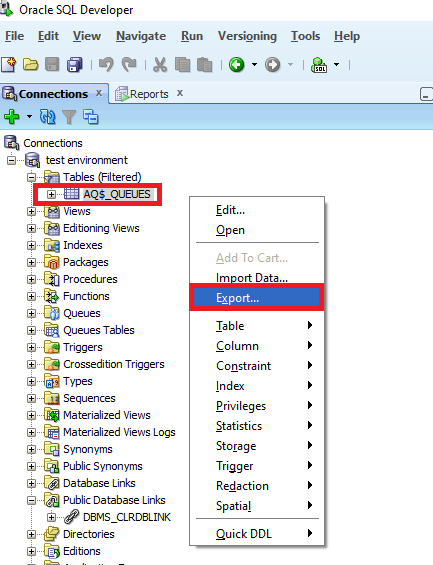

- Select the table you want to export and right-click on it to open the context menu. Then, click on Export.

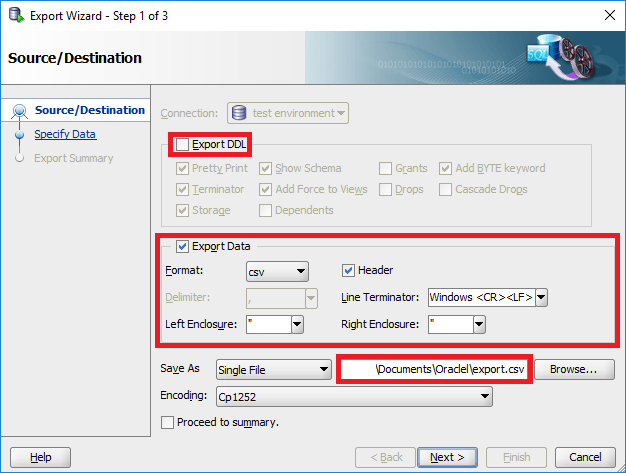

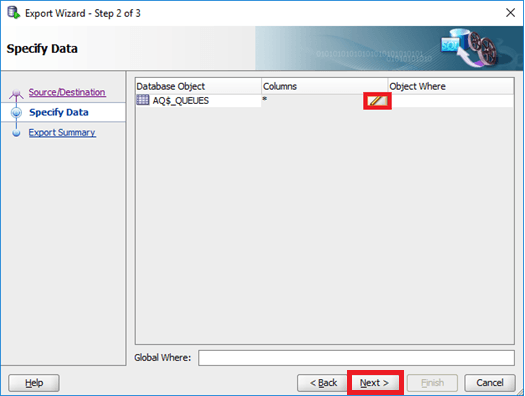

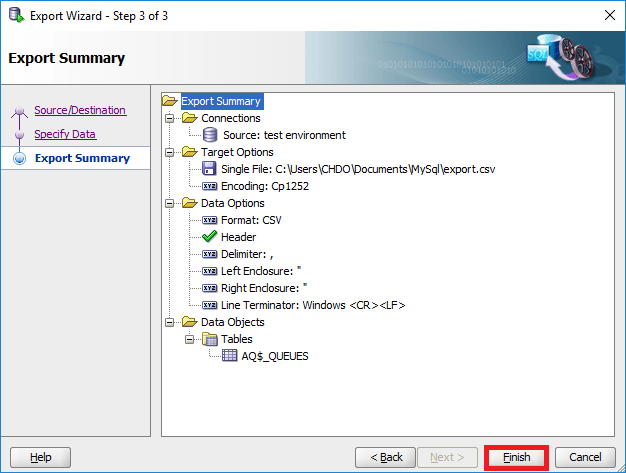

- You will be redirected to the Export Wizard page, where you need to deselect Export DDL. Change the Format to CSV and set the path to export file. Then click Next.

- Specify the columns you want to export. If you want to change the settings, use the edit icon; else, click Next.

- You will receive a summary of your export. Ensure that the settings are valid, and then click Finish to complete the data transfer.

The data extracted from the Oracle database in CSV format gets stored in your local system.

Method 2: Export Data from Oracle as CSV Using SQLcl

SQLcl is a command line interface for Oracle Database. You can use it to execute SQL and PL/SQL queries.

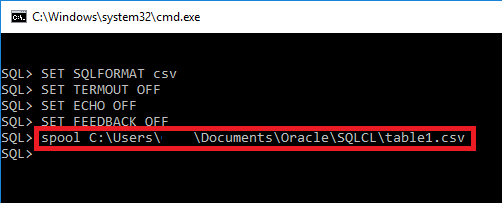

Here are the steps to export your Oracle data to CSV using SQLcl:

- First, install SQLcl from the official site and connect to the Oracle database you want to export.

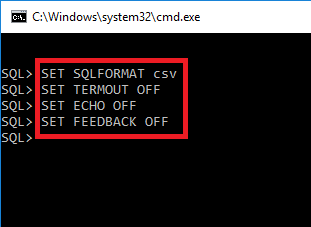

- You can change the following parameters of SQLcl:

- SET SQLFORMAT CSV for conversion into CSV format.

- SET TERMOUT OFF to suppress output on display.

- SET FEEDBACK OFF to avoid displaying the number of records returned by a query.

- To finally export the data, you can use the spool command with the appended file path to direct the output.

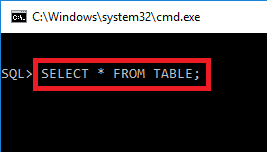

- SQLcl writes the result of your SELECT statement to table1.csv.

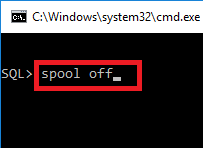

- Use the command spool off to finish the export; otherwise, you will not be able to access the exported CSV file.

You can store this CSV file containing data from the Oracle database in your local system.

Step 2: Import Data From CSV to PostgreSQL

You can create a table in Postgres and then use the COPY command to import data from CSV to Postgres.

Here’s a sample command to do this:

plaintextCOPY table_name(column1, column2, column3)

FROM '/file_path/file_name.csv'

DELIMITER 'your_delimiter'

CSV HEADER;You can use these steps as an Oracle to PostgreSQL converter and transfer your data to Postgres for further data-related operations.

Limitations of Using Manual Data Transfer Method

- Lack of Real-time Synchronization Capabilities: Using foreign data wrappers or CSV methods can result in latency in transferring data from Oracle to Postgres. This is inefficient, especially if your organization deals with real-time operations.

- Time-consuming and Effort-intensive: The manual method of data transfer is time-intensive, particularly if you are dealing with extensive datasets. It also requires you to perform the same process repeatedly, which is complex and error-prone.

Summing It Up

Oracle and PostgreSQL are two high-performing databases with distinctive features. However, when you connect Oracle to PostgreSQL, you achieve enhanced versatility and flexibility.

For an Oracle to PostgreSQL data migration, you can use a manual approach. This could be data transfer using a foreign data wrapper or export/import via CSV. However, both techniques are associated with challenges, including lack of real-time sync, time- and effort-intensive, and security issues.

The alternative approach to loading data from Oracle to PostgreSQL is an automated method that involves the use of Estuary Flow. It offers a set of pre-built connectors that enable you to transfer data quickly in real-time. You can utilize Estuary Flow to improve the efficiency of your organizational workflow and promote revenue growth.

Sign up for Estuary Flow today to build real-time data pipelines for effective data analytics!

FAQs

How to get data from Oracle to Postgres?

To transfer data from Oracle to Postgres, you can use a foreign data wrapper extension in Postgres to access data in the Oracle database. Alternatively, you can export data from the Oracle database as a CSV file using SQL Developer or SQLcl. You can then copy this data to a Postgres table using the COPY statement.

What are the challenges of an Oracle to PostgreSQL migration?

Some of the major challenges of Oracle to PostgreSQL migration include latency, lower performance, lack of data governance, and technical expertise. To overcome this, you can optimize your network connections, tune both databases and frame clear data governance policies. For troubleshooting, you can get assistance from community forums and technical consultants.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles