As companies explore sophisticated applications such as recommendation systems, similarity search, and personalization, the need for efficient and scalable vector databases becomes increasingly vital.

Migrating from MongoDB to Pinecone is a deliberate move toward a database built for high-dimensional vectors. It offers several advantages, including optimized handling of high-dimensional vector data, low-latency real-time queries, and improved similarity search. For applications with demanding data processing requirements, Pinecone's specialized features and managed cloud service open up many new possibilities.

This article explains how to migrate from MongoDB to Pinecone, which methods you can use to reliably move your data, and what’s needed to get it done quickly and painlessly. So, let's get started!

Introduction to MongoDB

Launched in 2009, MongoDB is a popular NoSQL database that stores data as flexible, JSON-like documents. It is designed to manage vast amounts of semi-structured data and is a common choice for applications with flexible, evolving schemas.

MongoDB provides high availability through replication and horizontal scaling through automatic sharding, in which the database distributes data across several shards (partitions). It is typically used for general-purpose data storage and retrieval and supports complex queries and rich indexing.

Some key features of MongoDB include:

- Replication and Sharding: MongoDB uses replica sets for data replication and sharded clusters for horizontal partitioning, ensuring high availability and efficient hardware utilization.

- Ad-hoc Queries: Ad-hoc queries are queries constructed on the fly, often from runtime values, rather than defined in advance. MongoDB supports ad-hoc queries by field, range, and regular expression, allowing for real-time analytics.

- Load Balancing: Because of its horizontal scaling capabilities, MongoDB can manage numerous concurrent read and write requests.
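To make the ad-hoc query feature concrete, here is a minimal Python sketch that builds a MongoDB filter document at runtime from whichever criteria the caller supplies, combining a range query on one field with a regular expression on another. The `price` and `name` fields and the `build_product_filter` helper are hypothetical; `$gte`, `$lte`, `$regex`, and `$options` are standard MongoDB query operators, and PyMongo's `find()` accepts the resulting dictionary as its filter.

```python
import re


def build_product_filter(min_price=None, max_price=None, name_prefix=None):
    """Build a MongoDB filter document from optional, runtime-supplied criteria.

    The 'price' and 'name' field names are hypothetical examples.
    """
    query = {}
    price = {}
    if min_price is not None:
        price["$gte"] = min_price
    if max_price is not None:
        price["$lte"] = max_price
    if price:
        query["price"] = price  # range query on a single field
    if name_prefix:
        # Anchored, case-insensitive regular expression; re.escape stops
        # user input from being interpreted as regex syntax.
        query["name"] = {"$regex": "^" + re.escape(name_prefix), "$options": "i"}
    return query


# With PyMongo, the result is passed straight to find(), for example:
# collection.find(build_product_filter(min_price=10, name_prefix="pine"))
```

Because the filter is plain data, the same function can serve many different queries without any predefined views or stored procedures.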

What Is Pinecone?

Pinecone is a popular vector database used in the development of LLM-powered apps. It is adaptable and scalable for high-performance artificial intelligence applications. Its core approach is Approximate Nearest Neighbor (ANN) search, which quickly finds and ranks the closest matches in huge datasets by trading a small amount of accuracy for speed.

Pinecone is ideal for scenarios that require storing and querying large numbers of vectors with low latency, allowing developers to build responsive, user-friendly applications.
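ANN search is easiest to understand against the exact search it approximates. The sketch below is plain Python, not Pinecone's internal algorithm: it scores every stored vector against a query with cosine similarity and returns the top-k matches. This brute-force approach is exact but costs one comparison per stored vector, which is precisely the work ANN indexes avoid at scale. Cosine similarity is an assumption here; Pinecone indexes can also be configured to use Euclidean or dot-product metrics.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def exact_top_k(query, vectors, k):
    """Brute-force search: score every stored vector and return the k best (id, score) pairs."""
    scored = [(vec_id, cosine_similarity(query, vec)) for vec_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; real ones typically have hundreds of dimensions.
vectors = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.9, 0.1, 0.0],
    "doc-c": [0.0, 1.0, 0.0],
}
top_two = exact_top_k([1.0, 0.0, 0.0], vectors, k=2)  # doc-a first, then doc-b
```

An ANN index typically returns nearly the same ranking while examining only a fraction of the stored vectors.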

Here are some key features of Pinecone:

- Vector Storage: Pinecone stores data as vectors, which are dense numerical representations of objects. This enables efficient and compact storage, as well as lightning-fast similarity searches.

- Real-time Search: The indexing and retrieval capabilities of Pinecone have been optimized for real-time use cases. It can search millions or even billions of vectors in milliseconds, making it ideal for applications requiring low latency.

- Scalability: Like MongoDB, Pinecone is designed to scale horizontally across numerous instances, allowing seamless scalability and high availability.

- Simplicity: Pinecone offers a simple API and works with embeddings produced by popular machine learning frameworks such as TensorFlow and PyTorch, making it easy to integrate into existing systems.

2 Ways to Migrate Data from MongoDB to Pinecone

There are two different methods that you can use to migrate data from MongoDB to Pinecone.

- The Automated Method: Using SaaS tools like Estuary Flow to connect MongoDB to Pinecone

- The Manual Method: Using code-based integration to connect MongoDB and Pinecone

The Automated Method: Leveraging Estuary Flow to Connect MongoDB to Pinecone

Estuary Flow is a user-friendly, fully managed Extract, Transform, Load (ETL) tool that requires no programming, so even users without deep technical know-how can transfer data efficiently.

Here’s a step-by-step guide on migrating data from MongoDB to Pinecone with Estuary Flow, a software-as-a-service platform.

Step 1: Configure MongoDB as the Source

- Visit Estuary’s website and log in using your details, or register for a new account.

- Go to the main dashboard and select Sources from the pane on the left side.

- Click + NEW CAPTURE at the top left of the Sources page.

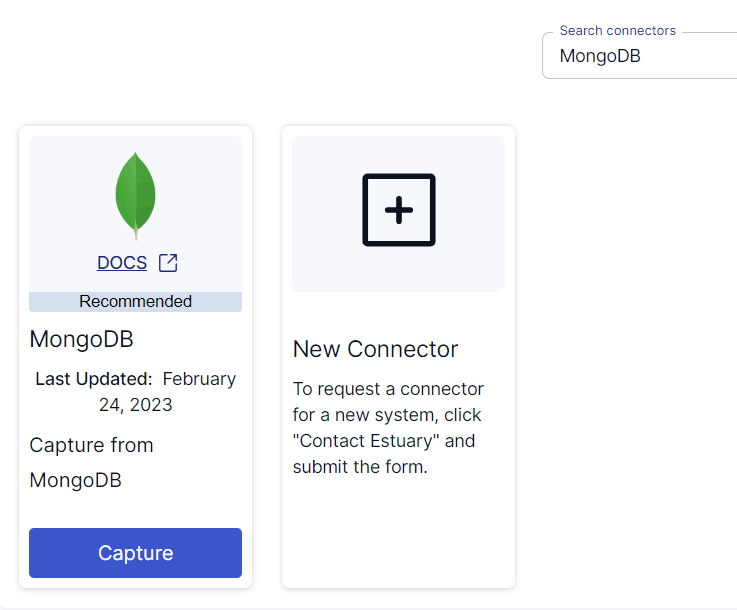

- Use the Search connectors box to find the MongoDB connector. When you see the connector in the search results, click on its Capture button.

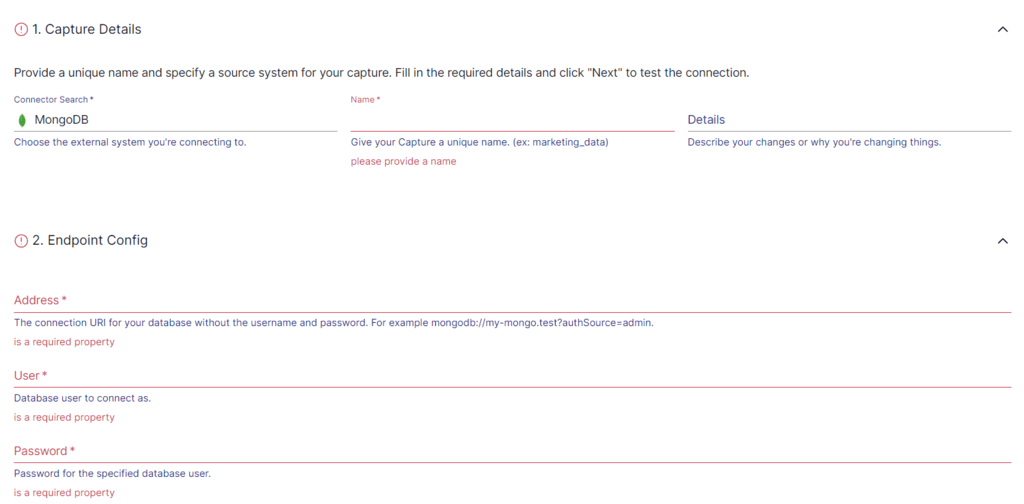

- This will redirect you to the MongoDB connector page. On the Create Capture page, enter a unique Name for the capture, along with the Address, User, and Password of your MongoDB deployment.

- Click on NEXT > SAVE AND PUBLISH.

This will capture data from MongoDB into Flow collections.

Step 2: Configure Pinecone as Destination

Following a successful capture, the capture details will be displayed in a pop-up window, where you can click MATERIALIZE COLLECTIONS to begin configuring the pipeline's destination end.

Alternatively, after configuring the source, select the Destinations option on the left side of the dashboard. You will be redirected to the Destinations page.

- Click the + NEW MATERIALIZATION button on the Destinations page.

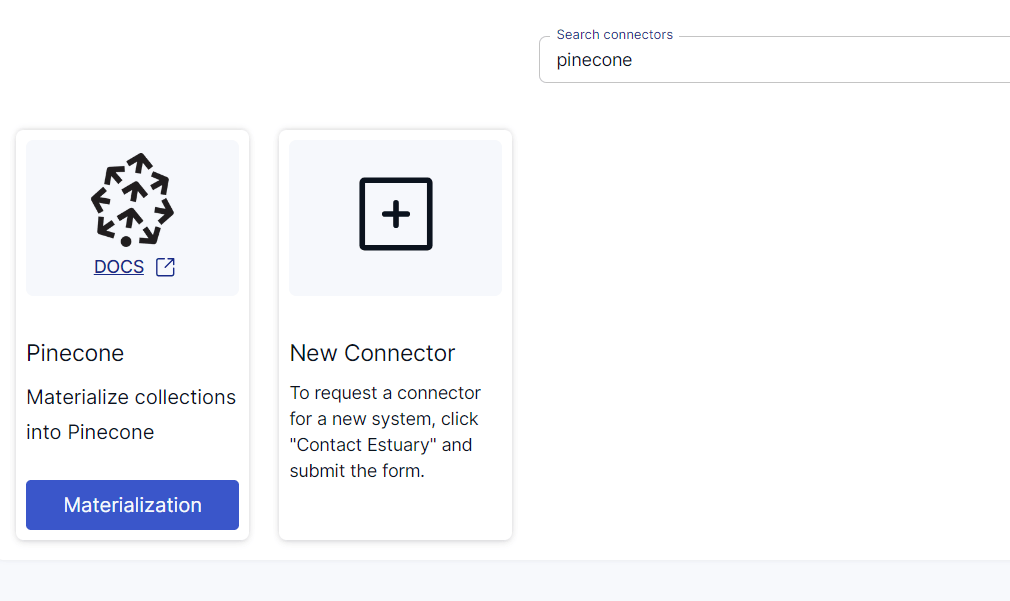

- In the Search connectors field, type Pinecone. Click on the connector’s Materialization button when you see it in the search results.

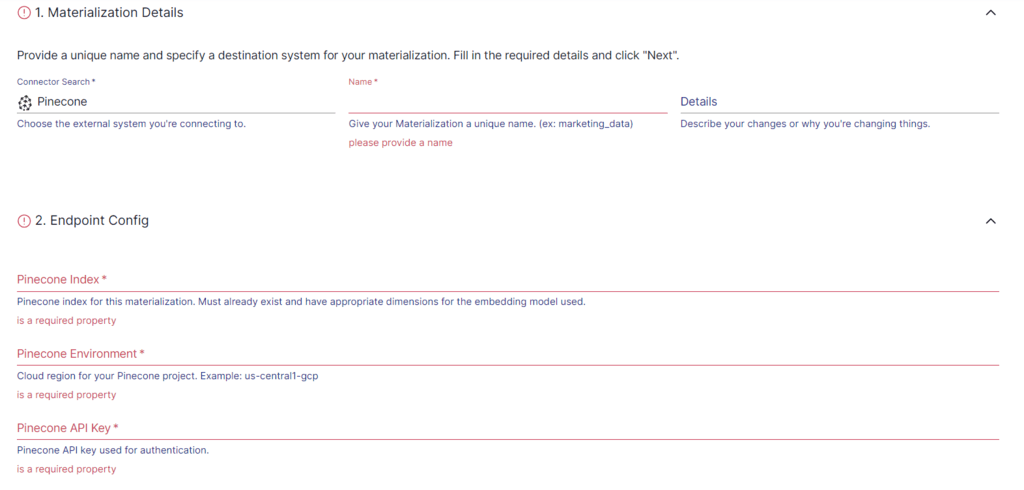

- On the Create Materialization page, fill in the required fields, such as Pinecone Index, Environment, API Key, and OpenAI API Key. Then, click on NEXT > SAVE AND PUBLISH.

Data from the Flow collections will be materialized into Pinecone. With just two simple steps, Estuary Flow considerably simplifies integrating MongoDB with Pinecone.

To get a better idea of how Estuary Flow works, please check out the following documentation:

Benefits of Using Estuary Flow

- Pre-Built Connectors: Flow provides more than 200 pre-built connectors to help connect to various databases. Automation of the entire process helps avoid common data migration issues and enables you to effortlessly create data integration pipelines.

- Minimal Technical Skill: All it takes is a few clicks in Estuary Flow to complete the migration from MongoDB to Pinecone, so people without major technical expertise can easily build a robust data pipeline to move their data from MongoDB into Pinecone.

- Data Synchronization: Estuary Flow provides seamless data synchronization between MongoDB and Pinecone. Any updates or changes made in the MongoDB database are automatically reflected in Pinecone without manual intervention. At the source, Flow uses log-based change data capture (CDC) to collect granular data changes as they happen, which helps reduce latency and preserve data integrity.
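Flow handles CDC for you, but it helps to see what log-based capture means for this particular pipeline. MongoDB exposes its change log through change streams (available on replica sets and sharded clusters), and each change event carries an `operationType`, a `documentKey`, and, for inserts and replacements, a `fullDocument`. The following sketch is not Flow's implementation; it only shows the translation a hand-built pipeline would need, turning inserts and updates into Pinecone upserts and deletes into Pinecone deletes. The `embedding` field name and the `change_to_operation` helper are hypothetical.

```python
def change_to_operation(event):
    """Translate one MongoDB change stream event into a Pinecone action.

    Returns ("upsert", record), ("delete", id), or None when the event is ignored.
    Assumes each document stores its vector in a hypothetical 'embedding' field.
    """
    op = event.get("operationType")
    if op in ("insert", "replace", "update"):
        # For "update" events, fullDocument is only included when the stream
        # is opened with full_document="updateLookup".
        doc = event.get("fullDocument")
        if doc is None or "embedding" not in doc:
            return None
        return ("upsert", {"id": str(doc["_id"]), "values": doc["embedding"]})
    if op == "delete":
        # Deleted documents only carry their _id in documentKey.
        return ("delete", str(event["documentKey"]["_id"]))
    return None  # e.g. "drop" or "invalidate" events need separate handling


# Wiring it up with PyMongo and the Pinecone SDK might look like this:
# with collection.watch(full_document="updateLookup") as stream:
#     for event in stream:
#         action = change_to_operation(event)
#         if action is None:
#             continue
#         kind, payload = action
#         if kind == "upsert":
#             index.upsert(vectors=[payload])
#         else:
#             index.delete(ids=[payload])
```

A production pipeline would also need to persist the stream's resume token so it can restart without missing changes, which is part of what a managed CDC tool takes care of.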

The Manual Method: Using Code-Based Integration to Integrate MongoDB and Pinecone

To manually migrate data from MongoDB to Pinecone, follow these steps:

Step 1: Install the necessary libraries

- Install the MongoDB driver: `pip install pymongo`

- Install the Pinecone client: `pip install pinecone` (older releases of the SDK were published as `pinecone-client`)

Step 2: Connect to your MongoDB database

Create a connection to MongoDB using a connection URI, and select the database and collection that hold your data.

```python
from pymongo import MongoClient

# Connect to your MongoDB instance
mongo_client = MongoClient('<mongodb_uri>')

# Select the database and collection containing your data
db = mongo_client['<database_name>']
collection = db['<collection_name>']
```

Step 3: Retrieve the data from MongoDB

Retrieve all documents from the MongoDB collection and build a list of dictionaries, extracting the fields Pinecone needs from each one.

```python
# Find all documents in the collection
documents = collection.find()

# Iterate over the documents and extract the necessary fields
data = []
for doc in documents:
    # Build one Pinecone record per document: Pinecone expects an 'id'
    # and a 'values' key holding the vector
    data_point = {
        'id': str(doc['_id']),       # Use the ObjectId, as a string, as the Pinecone ID
        'values': doc['embedding'],  # Field containing the embedding data
    }
    data.append(data_point)
```

Step 4: Set up Pinecone

- Sign up for a Pinecone account.

- Create an index to store the embeddings. The index dimension must match the length of your embedding vectors.
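Creating the index can also be scripted. The sketch below assumes the Pinecone Python SDK (v3 or later) and a serverless index; the `aws`/`us-east-1` cloud and region, the cosine metric, and the helper names `infer_dimension` and `ensure_index` are illustrative assumptions. Deriving the dimension from the data itself guards against a common setup error: an index whose dimension does not match the embeddings.

```python
def infer_dimension(embeddings):
    """Return the length shared by all embedding vectors, or raise if they disagree."""
    dims = {len(vector) for vector in embeddings}
    if len(dims) != 1:
        raise ValueError(f"expected one embedding dimension, found {sorted(dims)}")
    return dims.pop()


def ensure_index(pc, index_name, dimension, metric="cosine"):
    """Create a serverless index with the given dimension if it does not exist yet."""
    # Imported inside the function so infer_dimension works without the SDK installed.
    from pinecone import ServerlessSpec

    if index_name not in pc.list_indexes().names():
        pc.create_index(
            name=index_name,
            dimension=dimension,
            metric=metric,
            # Placeholder cloud and region: choose the ones your project uses.
            spec=ServerlessSpec(cloud="aws", region="us-east-1"),
        )


# Example usage, assuming pc = Pinecone(api_key=...) and a list of vectors
# taken from the 'embedding' field of your MongoDB documents:
# ensure_index(pc, "<pinecone_index_name>", infer_dimension(vectors))
```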

Step 5: Initialize the Pinecone client and insert data

- Create a Pinecone client instance with your API key. The client handles all subsequent requests to the Pinecone service.

- Use the client to open your target index by name, then upsert (insert or update) the records built from your MongoDB data into it.

```python
from pinecone import Pinecone

# Initialize the Pinecone client
pinecone_client = Pinecone(api_key='<pinecone_api_key>')

# Open the index created in Step 4
index = pinecone_client.Index('<pinecone_index_name>')

# Upsert the records into Pinecone; each record needs 'id' and 'values' keys
index.upsert(vectors=data)
```

Step 6: Verify the migration

- Fetch a few records from Pinecone and compare record counts with MongoDB to confirm that the data migration was successful.

The code samples above provide a basic overview of the manual migration process. Replace the placeholder values with values specific to your setup.
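The verification step can be partly automated. This sketch separates a pure checking function from a thin wrapper that gathers its inputs using `collection.count_documents({})` from PyMongo and `describe_index_stats()` and `fetch()` from the Pinecone Python SDK (v3 or later). The helper names are hypothetical. Treat a count mismatch as a prompt to investigate rather than a definitive failure: the index total covers all namespaces, serverless index statistics can lag briefly behind recent writes, and documents without an embedding are never migrated.

```python
def find_problems(mongo_count, pinecone_count, sample_ids, fetched_ids):
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if mongo_count != pinecone_count:
        problems.append(
            f"count mismatch: MongoDB has {mongo_count}, Pinecone has {pinecone_count}"
        )
    missing = [doc_id for doc_id in sample_ids if doc_id not in fetched_ids]
    if missing:
        problems.append(f"missing from Pinecone: {missing}")
    return problems


def verify_migration(collection, index, sample_ids):
    """Gather counts and a sample fetch from live MongoDB and Pinecone handles."""
    mongo_count = collection.count_documents({})
    pinecone_count = index.describe_index_stats().total_vector_count
    fetched = index.fetch(ids=list(sample_ids)).vectors  # dict keyed by record ID
    return find_problems(mongo_count, pinecone_count, sample_ids, set(fetched))
```

Sample IDs can come from a handful of MongoDB documents, for example `[str(d['_id']) for d in collection.find().limit(5)]`.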

Your MongoDB data will be accessible in Pinecone for further analysis after the data migration process is complete.

Limitations of Migrating Data Manually from MongoDB to Pinecone

- Data Volume: For large datasets, manual migration may be time-consuming and error-prone. Handling massive volumes of data by hand can be difficult and may result in inconsistent or lost data.

- Schema Differences: Pinecone is designed for vector search, whereas MongoDB is a document-oriented database, so their data models and indexing systems differ. Each Pinecone record consists of an ID, a vector, and optional flat metadata, so for a manual migration to be accurate, you must carefully map your document schema onto that structure.

- Real-time Updates: If your MongoDB database is updated often, manual migration may not be a suitable choice, since you need to set up and operate additional tooling to capture changes in real time. Managing these extra components can make it difficult to guarantee data consistency between the source and destination.
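The first two limitations can be eased in code. This sketch assumes the `embedding` field used in Step 3 and the Pinecone Python SDK's `Index.upsert(vectors=...)`. It streams documents from a PyMongo cursor instead of loading them all into a list, converts each one into a Pinecone record whose metadata keeps only the value types Pinecone metadata accepts (strings, numbers, booleans, and lists of strings), and upserts the records in fixed-size batches. The batch size of 100 is an assumption meant to stay within Pinecone's per-request size limits; tune it for your vector dimension and metadata size. All helper names are hypothetical.

```python
from datetime import datetime


def to_metadata(doc, skip=("_id", "embedding")):
    """Keep only values Pinecone metadata accepts; convert dates to ISO-8601 strings."""
    meta = {}
    for key, value in doc.items():
        if key in skip or value is None:
            continue  # null values are not valid metadata
        if isinstance(value, (bool, int, float, str)):
            meta[key] = value
        elif isinstance(value, datetime):
            meta[key] = value.isoformat()
        elif isinstance(value, list) and all(isinstance(v, str) for v in value):
            meta[key] = value
        # Nested documents, ObjectIds, and other types are dropped here;
        # flatten or stringify them first if you need to filter on them.
    return meta


def to_record(doc):
    """Build one Pinecone record from one MongoDB document."""
    record = {"id": str(doc["_id"]), "values": doc["embedding"]}
    metadata = to_metadata(doc)
    if metadata:
        record["metadata"] = metadata
    return record


def batched(items, size):
    """Yield lists of up to `size` items without materializing the whole input."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def migrate(collection, index, batch_size=100):
    """Stream documents that have an embedding and upsert them batch by batch."""
    cursor = collection.find({"embedding": {"$exists": True}})
    total = 0
    for batch in batched((to_record(doc) for doc in cursor), batch_size):
        index.upsert(vectors=batch)
        total += len(batch)
    return total
```

Python 3.12 adds `itertools.batched`, which yields tuples; the small generator here keeps the sketch working on older versions.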

The Takeaway

Moving data from MongoDB to Pinecone is a strategic step: a shift beyond mere database conversion toward unlocking the full capabilities of vector-based applications. With a careful, methodical approach, manual data migration lets you discover new possibilities for handling high-dimensional data.

Manual migration of data from MongoDB to Pinecone would require you to manage data structures, vectorization strategies, and application code modifications. The manual migration of large datasets can be tedious and error-prone. While this approach may provide more control, it requires careful preparation and execution.

For larger data volumes, consider using SaaS tools like Estuary Flow to migrate data from MongoDB to Pinecone. Flow's intuitive interface and ready-to-use connectors remove the manual effort from the migration process, and the platform is built to scale with your data.

Estuary Flow is your one-stop solution to build real-time data pipelines between a range of sources and destinations. Register for Estuary Flow or sign in to get started!

Frequently Asked Questions (FAQs)

What is the difference between Pinecone and MongoDB Atlas?

The primary difference between Pinecone and MongoDB Atlas lies in their data handling capabilities. Pinecone specializes in managing high-dimensional vector data and rapid similarity searches, making it suitable for real-time AI applications that require high precision and speed. MongoDB Atlas, on the other hand, is a general-purpose managed document database that also offers capabilities such as Atlas Vector Search for building AI-powered applications, including those built on large language models.

How much memory does MongoDB allocate per connection?

Each MongoDB connection can use up to about 1 megabyte of RAM. To optimize memory usage, monitor and limit the number of open connections, close unused connections, and adjust the `maxPoolSize` option to manage your connection pool size effectively.

Which is faster, MongoDB or Pinecone?

MongoDB is generally faster at handling a wide variety of data types and complex queries. Pinecone, on the other hand, is faster at performing similarity searches over high-dimensional vector data, which is common in AI and ML applications.

About the author

With over 15 years in data engineering, the author is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing offers insights to help companies scale efficiently and effectively in an evolving data landscape.
