Change data capture has been a very important strategy to solve the problem of keeping data in sync. With MongoDB CDC, you are able to get data from the source database and stream the data into a destination in near real-time.

Looking for something else? Check out our CDC Guides for other systems:

In a situation where you want the destination system to be up to date with the data in MongoDB, the system is constantly listening for changes in the source. These changes are populated into the target system immediately. There are different methods that have been developed, but in general, all of these methods require connecting to the database or a source system to retrieve database operations like inserts, deletes, and updates, and populate those changes into the target system.

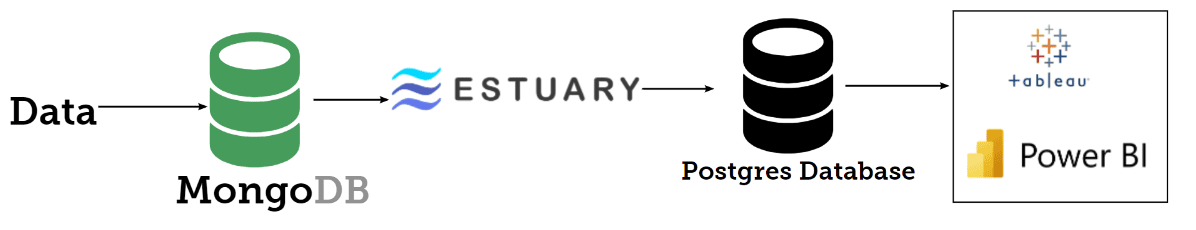

In this post, we’ll look at enabling change data capture for a MongoDB database sitting on the Atlas cloud. We’ll also look at some of the tools and methods that have been developed and we will go a step further to showcase the change in data capture from a MongoDB to a Postgres database sitting on AWS.

The same method will work for your MongoDB database irrespective of where you are running your MongoDB database: be it Atlas, Digital Ocean, IBM Cloud, or in ScaleGrid. For the most part, you will only need the database connection string and the credential to enable change data capture on your database.

In this post, we will delve into using Estuary Flow to efficiently capture and monitor data changes originating from MongoDB CDC and streaming them to your Postgres database in less than five minutes. This is very interesting because it is relatively easy, all you need is to connect your MongoDB to Estuary using your credentials, then Estuary will do its thing.

There are different reasons why you might want to capture data changes from your database, but in general, CDC helps to provide solutions to problems of data in sync.

Why is Change Data Capture so Important?

Data Analytics

Change Data Capture (CDC) is a crucial technique in data analytics: CDC helps to accelerate reporting and business intelligence capabilities. It helps with real-time tracking and insight into how a business is performing.

For example, in our case, MongoDB is not suitable for analytical workloads; MongoDB will not be able to support complex SQL queries that will be sent across from Tableau or PowerBI dashboards to the data. But we need to be able to analyze the data in our microservices with great KPIs to provide insight into the company's growth.

The best thing to do here is to enable Change Data Capture to a PostgreSQL database that supports all of these functionalities. This would let you leverage Postgres support for a wide range of SQL functions and window functions, handle large datasets with high performance, scale horizontally to handle more data, and leverage large-scale data processing

All of these are achieved by capturing every modification to the data, which in turn enables the identification of trends, patterns, and anomalies, leading to optimized business decisions and gaining a competitive advantage. CDC is particularly important in scenarios where traditional batch processing may not be feasible or fast enough to make informed decisions. In this case, CDC can be implemented to ensure data is always up-to-date and accurate, allowing analysts and data scientists to make informed decisions based on the most current information.

Synchronizing On-Premises Data to the Cloud

Change Data Capture (CDC) is important in synchronizing on-premise data to the cloud because it allows applications sitting in the cloud to have access to data in the on-premise data stores. This ensures that the data in the cloud is always up-to-date and consistent with the on-premise data.

Build an Audit Log

CDC is very helpful in building an audit log because it captures and records every modification made to a data store in real time. With CDC, you create an audit trail of all operations of the data stores. This allows you to create a detailed and accurate log of all changes to the data, including who made the changes, when they were made, and what was modified.

Information from the logs is very important for different purposes. We can use it to understand user behaviors, identify errors, and monitor the performance for queries that are taking longer time.

As regards security, it helps to enforce compliance with regulatory requirements and helps organizations identify and address security breaches and other unauthorized changes to the data. Interestingly, this in turn enables more efficient and accurate data recovery in the event of system failures or data loss. In fact, this information can be sent to systems like Elasticsearch for further processing.

Easing Operational Database Pressure

CDC supports the offloading of certain data-intensive tasks to secondary systems, which can help to reduce the burden on the primary operational database.

In the era of big data, data volume in businesses is growing faster, doubling the amount of data we manage each year. Of course, big data will surely improve decision-making across the business, it would also help to drive insights into customers' behavior, preferences, and needs.

Interestingly, an increase in the volume of data in our database would on the other hand cause data to stress transaction processing by slowing things down. One of the strategies to manage this stress is to stream data into the upstream data store and free up space in the primary database using the principle of change data capture.

This can lead to:

- Improved scalability

- Increased throughput

- Faster response times

This helps you handle large volumes of data, optimize resource use and reduce costs.

Application Integration

Change Data Capture (CDC) is important in application integration because it enables real-time tracking and integration of changes to data across multiple applications.

One of the use cases of CDC is data replication or propagation, you might be in a situation where you want to make your data available in other regions to solve latency and performance issues. Also, you might want to make sure the data in your data warehouse is up to date. With CDC you can capture these changes in the data stores and stream the data into your data warehouse using CDC.

Another use case is making data available to your customers, vendors, or your partners. In some cases, you don't want to give absolute accessibility to your database. In this case, you can capture data change from your own database and provide this data to the partner's database.

By capturing and propagating data changes in real time, CDC supports seamless data integration, improving data accuracy, and consistency across multiple systems. For example, CDC also supports the implementation of data integration architectures, such as event-driven and microservices applications.

With the help of these architectures, you can:

- Create more flexible and scalable systems

- Reduce development and maintenance costs

- Improve time to market

Methods of Change Data Capture

Log-based Change Data Capture

Log-based CDC is a technique used to capture and replicate changes made to a database by analyzing the transaction log, also known as the database's redo log.

You should consider this technique if you need to optimize performance: this method only leverages the database logs to retrieve the data changes from the database which does not affect the database processing capability. This technique is the best CDC method when you are looking to develop your CDC application, you can handle high-volume and high-velocity data streams easily.

The transaction log contains a record of all modifications made to the database, including inserts, updates, and deletes. These changes are then extracted and replicated to a target system, such as a data warehouse or another database.

Advantages of this technique: It’s non-intrusive and doesn’t require modifications to the source database, making it easier to implement and maintain.

Disadvantages of this technique: It requires access to the transaction log, which may not always be available for all databases or may require special permissions.

Trigger-based Change Data Capture

Trigger-based CDC is a technique used to capture and replicate changes made to a database by creating triggers on database tables of interest. A trigger is a special type of stored procedure that is executed automatically in response to certain database events, such as inserts, updates, or deletes.

Trigger-based CDC works by creating triggers on the tables to be monitored for changes. When a change is made to the monitored table, the trigger is activated, and the details of the change are captured and stored in a separate table or sent to a target system.

One thing to note about developing your application using Trigger-based CDC is that it is difficult to scale because it relies on database triggers.

Trigger-based CDC development needs special attention because they are more complex to develop. There is always a limit to accessing database tables because more attention are always been placed on performance and security.

Disadvantages of this technique: It can slow down the source database if not properly managed and can be more complex to implement and maintain than other CDC techniques. It may also require additional development effort to customize triggers for each table being monitored.

Timestamp-based Change Data Capture

Timestamp-based CDC helps to capture and replicate changes made to a database by tracking the time when a change was made.

Timestamp-based CDC is one of the easiest to develop of all the methods, you can relatively get started quickly. You can easily vary the amount of data you stream across to the other data systems giving you more control of the system, even if there is huge data to be captured.

Interestingly, you can timestamp back to previous dates or timestamp to capture data changes that interest you. The timestamp-based method always leverages system-generated records, such as the transaction start and end time to determine which records have been modified since the last check.

The process stores the timestamp of the last change made to a database row or table, and then periodically compares the timestamp to the current state of the row or table to identify any changes. The timestamp is stored in a separate table or database, along with the primary key of the row or table being monitored. Then, at regular intervals, the timestamp is compared to the current state of the row or table to identify any changes made since the last timestamp was captured. When changes are identified, usually using a repeated query, the details of the changes are captured and replicated to a target system.

Advantage of this technique: It’s relatively straightforward to set up with SQL.

Disadvantage of this technique: Tables must have a timestamp column, and delete events aren’t captured.

Difference-based / Snapshot-based Change Data Capture

Difference-based or Snapshot-based CDC is a technique used to capture changes made to a database by comparing two snapshots of the data and identifying the differences between them. Snapshot-based CDC works by periodically taking a full snapshot of the data in a database table, either by extracting data directly from the source system or by using a database backup or export. The snapshot is then compared to the previous snapshot to identify any changes. Unfortunately, difference-based CDC can be resource-intensive, especially if snapshots are taken frequently or for large datasets.

Change Data Capture Tools

CDC with Debezium

Debezium is an open-source distributed platform for change data capture. It monitors databases in order to be able to immediately react to changes in the database. Not only insert, update, and delete events, but also schema changes for example can be detected. Typically Debezium architecture revolves around connectors. The connectors help in capturing data changes as streams from the source system and sync the data into the target system.

CDC With Oracle GoldenGate

Oracle GoldenGate is a licensed software from Oracle used for real-time change data capture and replication in enterprise-level database environments. Oracle GoldenGate creates trail files that contain the most recently changed data from the source database and then pushes these files to the destination database. You can use Oracle GoldenGate to perform minimal downtime in production data migration and you can also use it for nearly continuous data replication tasks.

IBM InfoSphere Change Data Capture

InfoSphere CDC is a replication solution that captures database changes as they happen and delivers them to message queues, target databases, or an extract, transform, load (ETL) solution such as InfoSphere DataStage. This process is based on table mappings that are configured in the management console's graphical user interface.

The replication solution can be designed to work as an orchestration tool, where you can define the InfoSphere CDC subscription to run on a schedule. It can be used for dynamic data warehousing solution, application integration or migration, service-oriented architecture projects, and operational business intelligence.

Fivetran

Fivetran is a modern data integration solution. The platform provides more than 200 pre-built connectors. Fivetran’s connectors are also optimized with CDC technology, ensuring efficient, high-volume data movement for various deployment options.

Fivetran is fault tolerant to service interruptions and incidents. If there should be an interruption in the data capture process, Fivetran will automatically resume syncing process exactly where it left off once the issue is resolved, even if days or weeks after, as long as the logs are still present.

For the database connectors, Fivetran primarily uses log-based replication and a replication strategy that offers complete snapshots of databases with a speed that is close to that of log-based systems. After the first sync of your historical data, Fivetran will move to incremental updates of any new or modified data from your source database. Interestingly, each database uses a different data capture mechanism in Fivetran.

However, Fivetran uses a batch-based mechanism to move data to destination systems. This adds latency to your data pipeline, even if you ingested the data with CDC.

StreamSets CDC

StreamSets is an enterprise data integration platform with multiple CDC connectors to databases such as Microsoft SQL Server, Oracle, MySQL, and PostgreSQL. StreamSets Data Collector offers origins that are CDC-enabled right out of the box.

StreamSets supports different source-destination configurations. It supports change data capture enabled origin with CRUD-enabled destinations, change data capture enabled with non-CRUD destinations, and non-CDC origin to CRUD destinations.

With some simple configuration, you can set up a CDC operation within minutes and start to stream data into your database. With SteamSet’s data pipelines, you can build highly customizable and fully functioning data pipelines leveraging CDC via a drag-and-drop interface.

Estuary Flow

Estuary Flow is a DataOps platform that integrates all of the systems you use to produce, process, and consume data. Estuary Flow unifies today's batch and streaming paradigms so that systems both current and future are synchronized and updated in milliseconds.

How to use Estuary Flow to Capture Data from MongoDB to Postgres Database

With Estuary, you can connect to your databases and stream your data into your target system in real-time without needing to set up infrastructures/resources on-premise or in the cloud. You and your data team can focus more on developing the business application, which is very good for the business both now and in the future.

As part of this, you don't have to reinvent the wheel when there is a solid tool like Estuary Flow that can help you to achieve the same purpose with a few clicks.

Estuary Flow supports a lot of different database systems. You can use CDC on databases like MongoDB, PostgreSQL, MySQL, and Microsoft SQL Server.

Right now, Estuary Flow supports more than 50 data sources, and more sources are being added. One of the advantages of using Estuary over other CDC tools is the ease of use. With Estuary, you can easily provide the name of your database, the endpoint, ports, and the name of the table to capture, and Estuary will do its thing. Yes, it’s as simple as that. Also, in my experience using Estuary data capture from the source system to the target system is fast.

Implementing MongoDB Change Data Capture

Let's quickly look at how we can capture data changes from our MongoDB into our Postgres database sitting on AWS. There are different reasons why we might want to capture data changes to a new database, this might be for data replication for reliability and high availability. It might be that we are trying to keep the data in our data warehouse in sync so that other systems and applications can get the updated data from our data warehouse.

Note that this method can be used to send your data to a variety of destinations: for example, moving data from MongoDB to Snowflake.

For example, we might be interested in analyzing the data produced from our microservice application and we want to be able to make an informed decision about sales in real-time.

In fact, we can go a step further to connect our data in the Postgres database to a Tableau /Power BI dashboard to see how sales are performing. To get data into the Postgres database, we are going to perform the following:

- Create an account on Estuary

Head on to the Estuary Flow web app and create an account: make sure you activate your account. After this, you are all set to start performing change data capture of your database.

- Create an account on Altlas on MongoDB Atlas

If you don't have a MongoDB cluster to test things out, you can go to the MongoDB Atlas page to create a MongoDB cluster for free. Make sure to provide the necessary permissions needed to connect to your database from the internet. You can check out this blog post to see how you can create a MongoDB cluster on Atlas cloud.

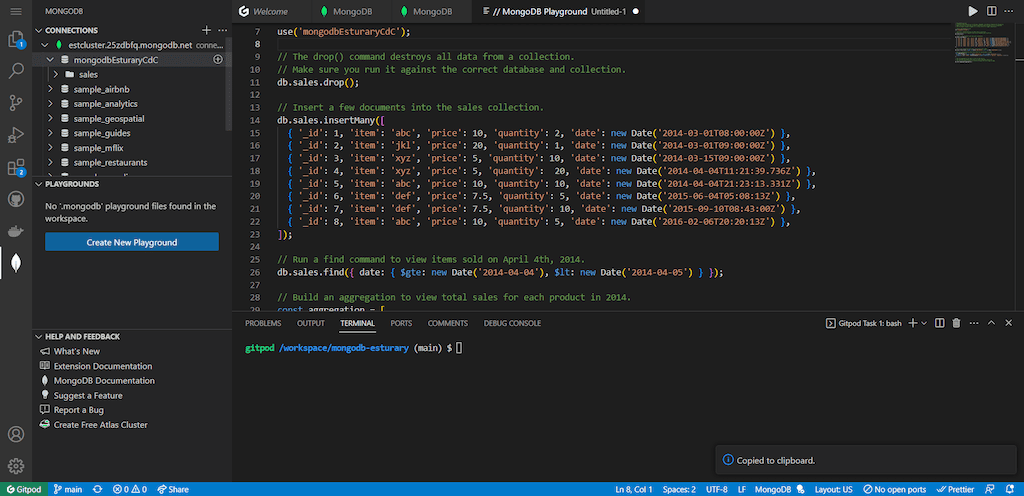

- Use MongoDB Visual Studio Code Extension to access MongoDB

With the MongoDB cluster up and running, now head on to VSC to download the MongoDB extension provided by Atlas. Install the extension: this extension would allow you to develop on MongoDB easily. Also, make sure to provide the connection string needed to connect to the MongoDB cluster you created in the previous step from the extension.

- Insert data into your MongoDB cluster using the VSC extension

Use the VSC extension to insert data into your MongoDB database. In my case, I have created a database called mongodbEsturaryCdC and a collection called sales.

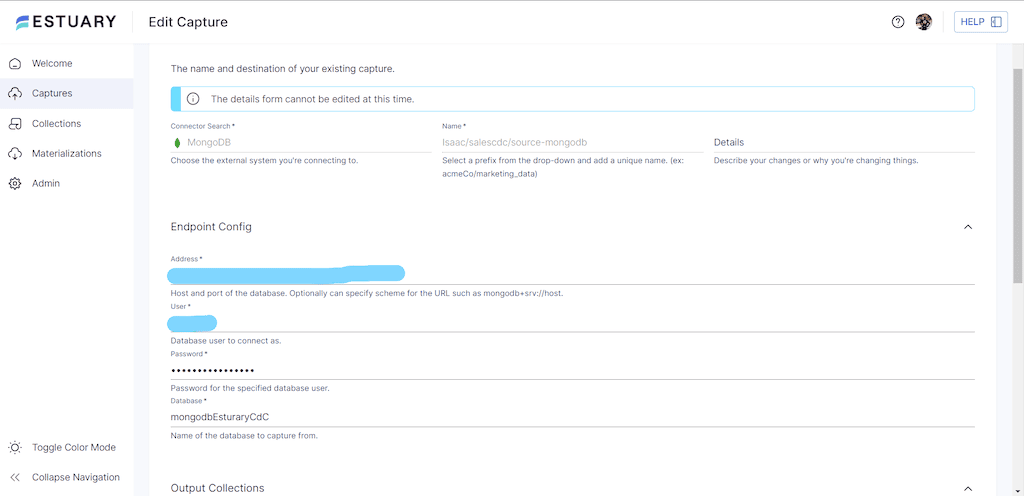

- Use Estuary to capture data in your MongoDB cluster

Now you need to go to Estuary to connect to the MongoDB cluster and make sure to specify the right database and collection for Estuary Flow to capture. In my case, I have specified mongodbEsturaryCdC and sales as my database and collection. Click on Next and test the connection, make sure you see a success message before you Save and Publish the CDC capture.

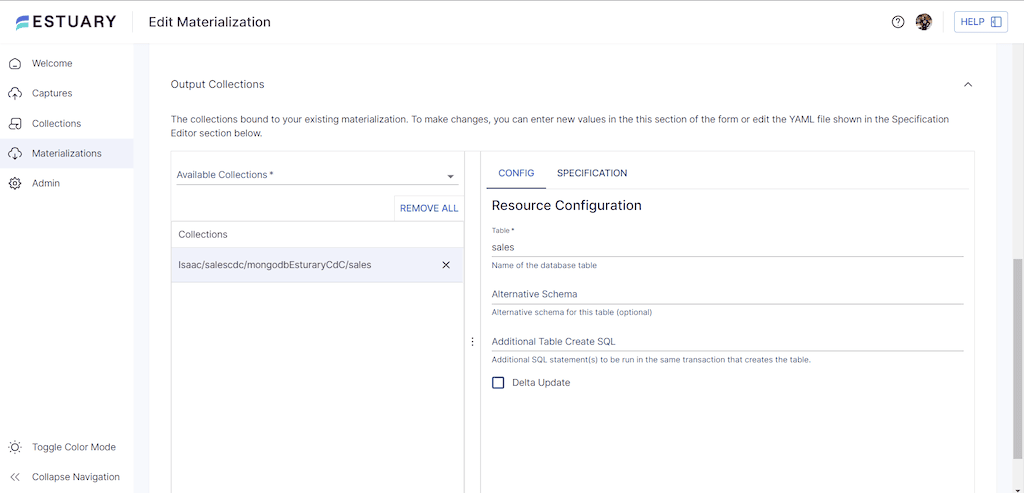

- Create a Materialization connection for the Postgres database to capture the Sales data in MongoDB

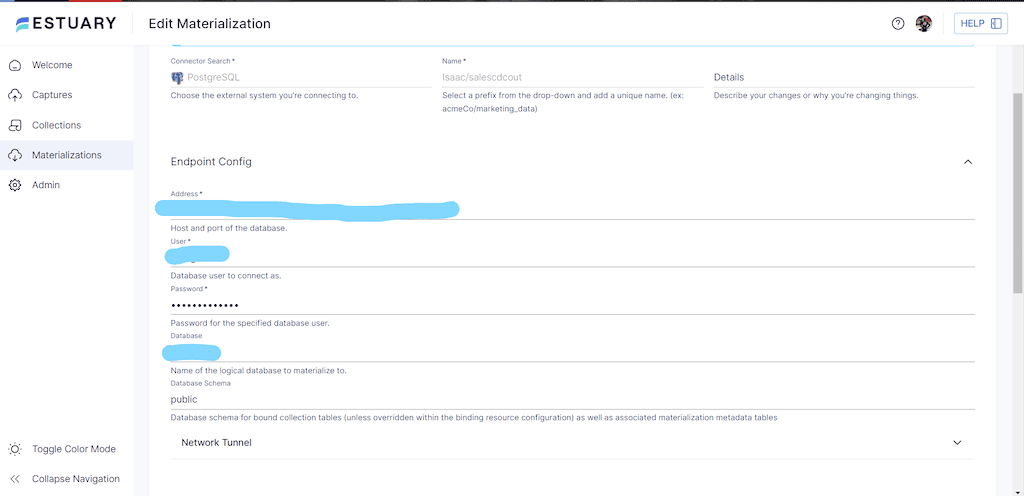

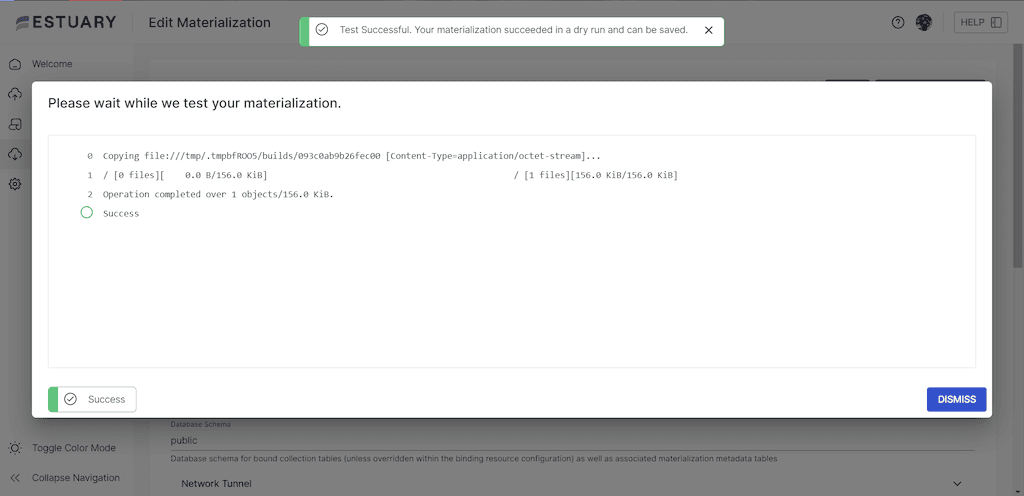

Now we are ready to materialize data from Estuary Flow into our Postgres database. First, we need to configure our connection setting to our Postgres database on Estuary. Also, we must make sure we are able to connect to our database from your network. In my case, my Postgres database is running on Amazon Web Services, and I have set the public accessibility to true. My connection setting looks like the one below. Again, make sure you test the connection before you move forward.

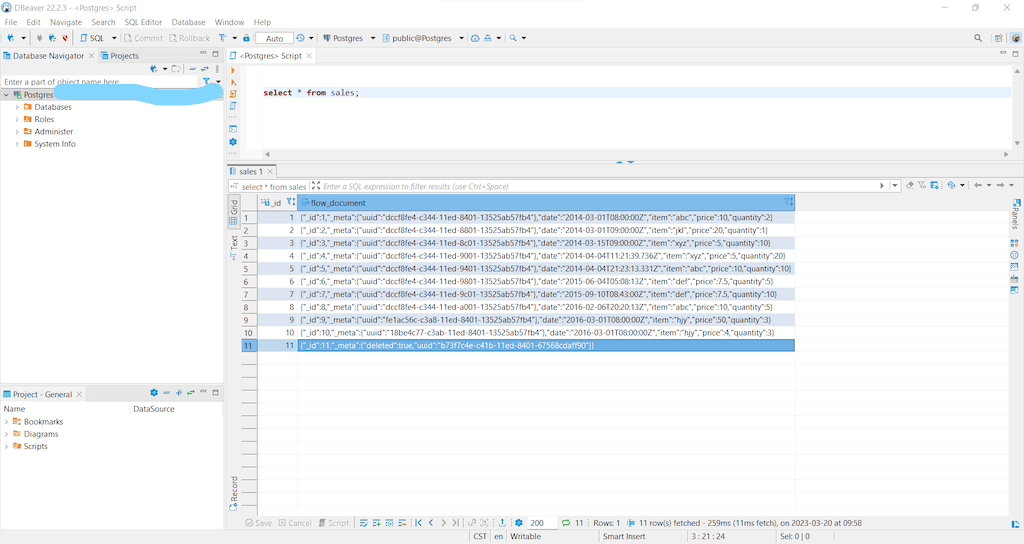

- Go into your Postgres Database to confirm that your data is present

At this point, our data have gotten to the Postgres database using Estuary Flow as the CDC tool. Interestingly, the data from MongoDB only took less than a second to get to the Postgres database. We can further use Data Build Tool (dbt) to further transform the data for the analytical dashboards.

For more details on this process, see our docs on:

Conclusion

Recently, there has been a lot of work on change data capture. Different modern data tools have been developed that can handle CDC, but using an ultra-simple tool like Estuary is very important and a game changer. Using Estuary would make the development process much faster and more seamless. You would be able to concentrate on delivering value and insight to the business instead of worrying about managing data infrastructures.

Are you an engineer looking for more info on how Estuary approached our CDC solutions? Check out the code on GitHub or talk directly to our engineering team!

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles