Originally published on TDWI.

Adhering to global privacy regulations and governance standards within the enterprise has become exponentially more complex over the past several years. On the one hand, there’s an ever-increasing swath of data an enterprise must deal with. On the other hand, regions and localities regularly impose new requirements governing the use of their citizens’ personal information.

Starting with the GDPR and more recently the CCPA, we’ve seen that despite their good intentions to keep their citizens’ data private and safe, governing bodies don’t make it easy for companies to comply. For example, privacy laws don’t align with each other. The GDPR and CCPA define personally identifiable information (PII) differently. The two regulations impose different requirements for company interaction with users who decide they don’t want to be tracked. Furthermore, the CCPA takes a broader view of PII by including browsing information (among other things) that falls outside of the GDPR’s definition. Meanwhile, companies need to ensure their data is safe internally in order to minimize chances for internal misuse.

Although no new privacy law implementations are currently on the horizon, there’s precedent for countries and states to implement their own requirements. We must plan as if it’s only a matter of time before more do.

Data Privacy and Governance: Implications for the Enterprise

Businesses need to treat privacy and governance as first-class citizens. When I worked at Google nearly 10 years ago, we implemented a process and policy to ensure that every product release went through privacy and legal review. At the time, that seemed like a ton of overhead, especially when making an innocuous change such as adding a new button in a user interface. Although requiring additional review for a button may seem extreme, in today’s climate, most enterprises should at least implement a flavor of extra privacy and governance review.

Understanding Privacy Laws

Privacy needs to be more than just a checkbox; to adhere to today’s complex laws as well as tomorrow’s inevitable ones, our systems must be architected from the ground up with it in mind. Privacy laws tend to share common features, but they differ in their precise definitions.

Both the GDPR and the CCPA share common high-level requirements that all companies must meet:

- Provide users the ability to opt in and out

- Delete all information about a specific person upon request

- Stop tracking users

- Send any user a copy of the data kept about them upon request

- Provide controls and transparency about third parties and what data will be shared

Users can additionally demand different information based on the law that governs them. California residents may legally request more detail about what data a company stores about them than European citizens can. To accommodate this, systems need to be designed flexibly.

Instead of designing a system that complies with a specific law, a privacy-forward company must create services designed to enforce each attribute of the regulations. Doing so provides future flexibility to implement new laws without rearchitecting a system.
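One way to picture this is a small sketch in which each shared requirement is a capability implemented once, and each law is only a configuration entry listing the capabilities it needs. Everything here is hypothetical: the `Capability` names, the `REQUIRED` mapping, the placeholder handlers, and `EXAMPLE_LAW` are illustrations, not a statement of what any regulation actually requires.

```python
from enum import Enum, auto


class Capability(Enum):
    """Hypothetical capabilities mirroring the shared requirements listed above."""
    OPT_IN_OUT = auto()
    DELETE = auto()
    STOP_TRACKING = auto()
    EXPORT_COPY = auto()
    THIRD_PARTY_TRANSPARENCY = auto()


# Assumed mapping of law -> capabilities it requires (illustrative only).
REQUIRED = {
    "GDPR": set(Capability),
    "CCPA": set(Capability),
}

# One placeholder handler per capability, written once and shared by every law.
HANDLERS = {
    Capability.OPT_IN_OUT: lambda user_id: f"updated consent for {user_id}",
    Capability.DELETE: lambda user_id: f"deleted data for {user_id}",
    Capability.STOP_TRACKING: lambda user_id: f"stopped tracking {user_id}",
    Capability.EXPORT_COPY: lambda user_id: f"exported data for {user_id}",
    Capability.THIRD_PARTY_TRANSPARENCY: lambda user_id: f"listed third parties for {user_id}",
}


def handle_request(law: str, capability: Capability, user_id: str) -> str:
    """Dispatch a user request if the governing law requires that capability."""
    if capability not in REQUIRED[law]:
        raise ValueError(f"{law} does not require {capability.name}")
    return HANDLERS[capability](user_id)


# Supporting a new (made-up) law is a configuration change, not a redesign.
REQUIRED["EXAMPLE_LAW"] = {Capability.DELETE, Capability.EXPORT_COPY}
```

Under these assumptions, a new regulation only changes the `REQUIRED` table; the handlers that do the actual work stay untouched.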

Designing for Data Governance: Access Control

Upholding governance becomes much simpler when a company’s most sensitive information is stored in a single, centralized repository where access can be limited. For example, it’s important to restrict who has access to revenue metrics at public companies to prevent insider trading and potential information leaks. Access control can ensure that only a subset of users with known, specific roles are privy to sensitive data.
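As a minimal sketch of role-based access, assume each dataset in the central repository lists the roles allowed to read it, and anything not listed is denied. The dataset names, role names, and the `can_read` helper are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical datasets and the roles permitted to read each one.
DATASET_ROLES = {
    "revenue_metrics": {"finance", "data_engineering"},
    "aggregate_reports": {"finance", "data_engineering", "marketing", "sales"},
}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)


def can_read(user: User, dataset: str) -> bool:
    """Allow access only when the user holds a role listed for the dataset."""
    # Deny by default: a dataset missing from the table has no allowed roles.
    allowed = DATASET_ROLES.get(dataset, set())
    return bool(user.roles & allowed)


engineer = User("avery", {"data_engineering"})
marketer = User("blake", {"marketing"})
```

Here `can_read(marketer, "revenue_metrics")` is false while `can_read(marketer, "aggregate_reports")` is true, which matches the idea that most of the company works from safe, aggregated reports.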

Each company will inevitably decide which personas require access to such sensitive information, but the general rule is that the fewer entities that have access, the better. Engineers build aggregate reports that render otherwise-risky information safe for the rest of the company, so they’re one group that requires access. To best serve the masses with those reports, engineers need systems that make it easy and fast to support services that fulfill business tasks without compromising company secrets.

Such considerations need to be reviewed early in a project because they’re at odds with traditional engineering design. Common best practices for engineers center around creating open, accessible systems.

Designing for Data Governance: Privacy

A core principle of system design is to minimize “surface area.” When applying this principle to privacy, surface area directly correlates to the number of places that PII is stored because PII is precisely what privacy laws regulate. People can request a copy of what PII the enterprise holds as well as mandate the data’s deletion. Storing that information in as few places as possible is a necessary tactic to address this requirement.

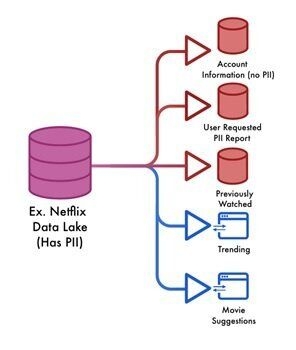

As with governance, minimizing the surface area of sensitive data starts with employing a single (primary) data lake. That data lake should feed information to fulfill use cases and business requirements. Whenever possible, data that leaves the lake should be aggregated or summarized to the point that it’s no longer personally identifiable and doesn’t contain sensitive information.

As a concrete example, when I log into Netflix and access my account information, the UI doesn’t show my full credit card details. It only shows me the minimal (and “safe”) level of detail that is enough for me to complete my task. If I want to know which credit card I’m using to pay, all I need is the last four digits of that card. Netflix most likely isn’t pulling my data from the same place that my PII is stored when they display these digits — it would be too risky from a security or privacy standpoint.

Instead, they likely create a derivative table that’s populated by their data lake and holds only the necessary information, rolled up as much as possible. In the case of account information, the data may still be personally identifiable, but it is more secure. This methodology would also be employed when building other services, such as movie suggestions, in which case it may be possible to completely remove all PII.
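A derivative record of this kind might be produced along these lines. This is only a sketch: the field names, the sample record, and the `to_account_view` helper are assumptions made for illustration, not a description of how Netflix or any other company does it.

```python
def last_four(card_number: str) -> str:
    """Return only the last four digits of a card number."""
    digits = [c for c in card_number if c.isdigit()]
    if len(digits) < 4:
        raise ValueError("card number has fewer than four digits")
    return "".join(digits[-4:])


def to_account_view(record: dict) -> dict:
    """Build the reduced record that an account page would read from."""
    # Only the fields the UI needs are copied; everything else stays in the lake.
    return {
        "account_id": record["account_id"],
        "plan": record["plan"],
        "card_last4": last_four(record["card_number"]),
    }


# Hypothetical full record as it might sit in the data lake.
lake_record = {
    "account_id": "acct-001",
    "email": "user@example.com",
    "plan": "standard",
    "card_number": "4111 1111 1111 1234",
}

account_view = to_account_view(lake_record)
```

The view keeps the account identifier, so it is still tied to a person, but the full card number and email never leave the lake.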

The Ideal System

Data lake design is critical for governance and privacy. Some elements need to be architected up front:

- Control of aggregations

    - It should be simple to create reports and data streams that contain aggregate metrics with strong controls around how to populate individual fields

    - This includes having the ability to obscure values or columns by encrypting, deleting, or transforming them (e.g., full account number to last four digits for credit cards)

- Accessibility

    - Services will be dependent on data, so data needs to be easily accessible to any service

    - The data lake should connect seamlessly to databases, SaaS tools, and warehouses, providing aggregated views described in “Control of aggregations” above

    - Privacy and legal teams must define how data must be aggregated prior to going to third parties; these restrictions must be enforced in the data lake

- Speed

    - The data lake should never be a bottleneck that a user needs to circumvent to fulfill a purpose; data in the lake must be accessible quickly, with instant access to new data upon request
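To make the aggregation requirement concrete, here is a minimal sketch of rolling per-user rows up to a regional summary before the data leaves the lake. The row shape (`user_id`, `region`, `watch_minutes`) and the `aggregate_by_region` helper are hypothetical.

```python
from collections import defaultdict


def aggregate_by_region(rows: list) -> dict:
    """Summarize per-user rows into per-region totals with no user identifiers."""
    buckets = defaultdict(lambda: {"users": set(), "minutes": 0})
    for row in rows:
        bucket = buckets[row["region"]]
        bucket["users"].add(row["user_id"])
        bucket["minutes"] += row["watch_minutes"]
    # Only counts and totals are emitted; the user IDs are dropped here.
    return {
        region: {"user_count": len(b["users"]), "total_minutes": b["minutes"]}
        for region, b in buckets.items()
    }


# Hypothetical rows as they might be stored in the data lake.
rows = [
    {"user_id": "u1", "region": "CA", "watch_minutes": 30},
    {"user_id": "u2", "region": "CA", "watch_minutes": 45},
    {"user_id": "u1", "region": "CA", "watch_minutes": 15},
    {"user_id": "u3", "region": "NY", "watch_minutes": 60},
]

summary = aggregate_by_region(rows)
```

Very small groups can still point to an individual, so a real export policy would likely also set a minimum group size; that rule is left out of this sketch.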

It’s possible to accomplish these design needs by cobbling together tools that exist today, but it is challenging. For example, today’s data lakes don’t support true real-time access and require a lambda architecture to fulfill those use cases.

As systems grow in complexity, so does the difficulty of enforcing privacy and governance. Imagine a California user requests a copy of their data, which the enterprise stores in 10 places. For most companies using microservices, 10 places wouldn’t be inconceivable, but fulfilling the user’s request is going to be time-consuming and error-prone.
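The cost of scattered PII shows up clearly in a sketch of the request itself. Assume every store that holds PII exposes the same two operations and is registered in one list; the `InMemoryStore` class and both helper functions are hypothetical stand-ins for real services.

```python
class InMemoryStore:
    """Stand-in for any service or table that holds rows keyed by user_id."""

    def __init__(self, name: str, rows: list):
        self.name = name
        self.rows = rows

    def find(self, user_id: str) -> list:
        return [r for r in self.rows if r["user_id"] == user_id]

    def delete(self, user_id: str) -> int:
        before = len(self.rows)
        self.rows = [r for r in self.rows if r["user_id"] != user_id]
        return before - len(self.rows)


def export_user_data(stores: list, user_id: str) -> dict:
    """Collect everything held about a user, grouped by store name."""
    return {store.name: store.find(user_id) for store in stores}


def delete_user_data(stores: list, user_id: str) -> dict:
    """Delete a user's rows everywhere and report how many were removed per store."""
    return {store.name: store.delete(user_id) for store in stores}


# Two hypothetical stores; a real system might have many more.
stores = [
    InMemoryStore("billing", [{"user_id": "u1", "card_last4": "1234"}]),
    InMemoryStore("events", [
        {"user_id": "u1", "page": "/home"},
        {"user_id": "u2", "page": "/home"},
    ]),
]
```

Any store missing from the list is silently skipped, so each additional location is one more place where a request can be fulfilled incompletely.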

Getting There Incrementally

Most companies have data that resides in multiple places and don’t have the luxury of designing their systems from the ground up. These principles can be applied to existing systems on a go-forward basis even if PII resides in multiple locations. Here are the steps to get there:

- Review the current state of PII in your system. Understand each and every service that generates it and where it flows.

- Create a data lake and flow all sources of data containing PII into it.

- Grant permission only to users who have roles that require access. The data lake should have properties similar to the ideal system discussed previously.

- Ensure that all new services and reports you create are based on data from that data lake.

- Slowly transition historical services and reports to receive their data from it.

These steps can help transition companies with legacy systems to a flexible, modern architecture that adheres to privacy and governance standards.

A Final Word

Following these best practices may not be easy and will likely be time-consuming. However, when compared to the consequences of a GDPR or CCPA violation, the investment is well worth the effort.

We’re building Estuary Flow with the principle that one accessible data lake should be able to ensure that privacy and governance are upheld downstream while simultaneously fulfilling all business use cases. Read more about Estuary Flow here.

About the author

David Yaffe is a co-founder and the CEO of Estuary. He previously served as the COO of LiveRamp and the co-founder / CEO of Arbor, which was sold to LiveRamp in 2016. He has an extensive background in product management, serving as head of product for DoubleClick Bid Manager and Invite Media.
