Integrating Kafka with DynamoDB is an attractive option for real-time data streaming, processing, and distribution. Moving streaming data continuously from Kafka topics into DynamoDB tables makes near-instant, actionable insights possible.

Some of the use cases of a Kafka-DynamoDB integration include real-time inventory management in e-commerce, fraud detection in finance, and monitoring and analytics in IoT. If you’re looking for efficient and reliable ways to integrate Apache Kafka, a distributed streaming platform, with Amazon DynamoDB, a fully managed NoSQL database service, you’ve come to the right place.

Right after a quick overview of both platforms, we’ll explore the best ways to migrate from Kafka to DynamoDB to help you achieve a reliable data workflow.

Overview of Kafka

Apache Kafka is a distributed event streaming platform that stores records in durable, append-only logs and delivers them as data streams. Originally developed at LinkedIn to handle its internal messaging, Kafka became an open-source project after it was donated to the Apache Software Foundation.

Streaming data implies that information is continuously being streamed by hundreds of data sources, and this information needs to be processed sequentially. With Kafka, you can subscribe, process, store, and publish these data records in real time.

Kafka operates on a hybrid messaging model that combines queuing and publish-subscribe systems. Queuing enables data processing by distributing the data through various consumers.

However, traditional queues are not multi-subscriber. And while the publish-subscribe approach is multi-subscriber, it cannot be used to distribute work across subscribers because every message goes to every subscriber.

To get both behaviors, Kafka uses a partitioned log model. Each topic is an ordered log of records that is split into partitions. These partitions are then distributed among the subscribers (consumers) in a group, so that each partition is read by exactly one consumer in that group.
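As a rough illustration of the partitioned log model, the sketch below maps record keys to partitions and spreads partitions across the consumers of one group. The function names and the CRC32 hash are assumptions made for this example; Kafka's Java client uses a murmur2 hash for keyed records, and real group assignment is handled by Kafka itself.

```python
from zlib import crc32


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition using a stable hash.

    Illustrative only: CRC32 stands in for the hash a real Kafka client uses.
    """
    return crc32(key.encode("utf-8")) % num_partitions


def assign_partitions(num_partitions: int, consumers: list) -> dict:
    """Spread partitions round-robin across the consumers of one group.

    Every partition ends up with exactly one owner inside the group.
    """
    assignment = {consumer: [] for consumer in consumers}
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment
```

With six partitions and three consumers, `assign_partitions(6, ["a", "b", "c"])` gives each consumer two partitions. Because the same key always hashes to the same partition, records for one key are processed in order by a single consumer.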

Important features of Apache Kafka:

- Publish-Subscribe Messaging: Producers publish data to a topic, and consumers subscribe to the topics they are interested in. This decoupling lets producers and consumers operate independently of each other.

- Scalability: It can effectively handle large volumes of data. When your data volume increases, you can add more servers to the cluster through horizontal scaling. The partitions are small units that enable the distribution of data across multiple brokers.

- Distributed Architecture: This allows Kafka to manage and process data across multiple servers, enhancing fault tolerance. With data replication and distributed processing, there’s the benefit of high availability.

Overview of DynamoDB

Amazon DynamoDB is a fully managed NoSQL database service with optimized performance and high scalability. It supports both document and key-value data models. DynamoDB can help you create, store, and retrieve data irrespective of volume. It lightens the burden of tasks such as hardware provisioning, cluster scaling, and software patching. It also encrypts your data at rest.

DynamoDB stores data in tables. Each table consists of items, and each item is made up of attributes. Every table needs a primary key, which is either a single attribute (the partition key) or a combination of a partition key and a sort key, and every item in the table is identified by it.
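To make the primary key options concrete, here is a small sketch that builds a table definition in the shape that boto3's `create_table` call accepts. The helper name, table name, and attribute names are hypothetical, and on-demand billing is just one possible choice.

```python
def build_table_definition(table_name, partition_key, sort_key=None):
    """Build keyword arguments shaped for boto3's DynamoDB create_table.

    partition_key and sort_key are (attribute_name, attribute_type) tuples,
    where the type is "S" (string), "N" (number) or "B" (binary).
    """
    key_schema = [{"AttributeName": partition_key[0], "KeyType": "HASH"}]
    attribute_definitions = [
        {"AttributeName": partition_key[0], "AttributeType": partition_key[1]}
    ]
    if sort_key is not None:
        # A composite primary key adds a sort (RANGE) key to the partition key.
        key_schema.append({"AttributeName": sort_key[0], "KeyType": "RANGE"})
        attribute_definitions.append(
            {"AttributeName": sort_key[0], "AttributeType": sort_key[1]}
        )
    return {
        "TableName": table_name,
        "KeySchema": key_schema,
        "AttributeDefinitions": attribute_definitions,
        "BillingMode": "PAY_PER_REQUEST",  # assumed: on-demand capacity
    }


# Hypothetical table keyed by customer, with orders sorted by timestamp.
definition = build_table_definition(
    "orders", ("customer_id", "S"), ("order_ts", "N")
)
# With AWS credentials configured, this could be passed on as:
#   boto3.client("dynamodb").create_table(**definition)
```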

The tables in DynamoDB are not hosted on one server but are spread across multiple servers. This avoids routing every query through a single database host and keeps access fast. DynamoDB also supports secondary indexes, which let you query and order items by attributes other than the table's primary key.

DynamoDB suits applications that need predictable read and write performance. However, it may be a poor fit if your software runs outside the AWS cloud, as the added network distance can lead to latency issues.

Key features of DynamoDB include:

- NoSQL-based: DynamoDB is a NoSQL database that stores data in key-value pairs. This enables faster reading and writing, particularly for large datasets, and helps with real-time responsiveness.

- High Performance: DynamoDB's main strength is speed. It uses memory caching and solid-state drives to optimize performance for important applications. DynamoDB automatically scales the storage and processing power according to the volume of your application. For this, it provides provisioned as well as on-demand capacity.

- Flexible Data Schema: DynamoDB has an adaptable data schema to store data in various formats. This makes it flexible, unlike relational databases with rigid structures. However, you have to design the schema carefully, keeping in mind how you will access and query your data.

2 Easy Ways to Migrate Data from Kafka to DynamoDB

- The Automated Way: Using Estuary Flow to Migrate from Kafka to DynamoDB

- The Manual Approach: Using Custom Scripts to Migrate from Kafka to DynamoDB

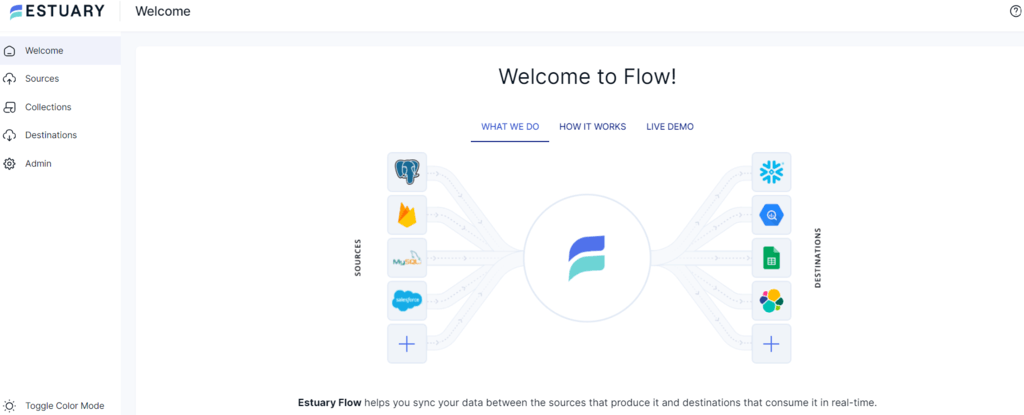

The Automated Way: Using Estuary Flow to Migrate From Kafka to DynamoDB

Estuary Flow is a powerful real-time data integration platform that provides you access to several connectors for data migration. It supports continuous streaming and synchronization of data to help with efficient analytics. Estuary Flow, with its intuitive interface, change data capture (CDC) support, and many-to-many integrations, makes an impressive choice for your varied data integration needs.

Here are the steps to migrate data from Kafka to DynamoDB using Estuary Flow.

Prerequisites

Before you get started with Flow, ensure the following:

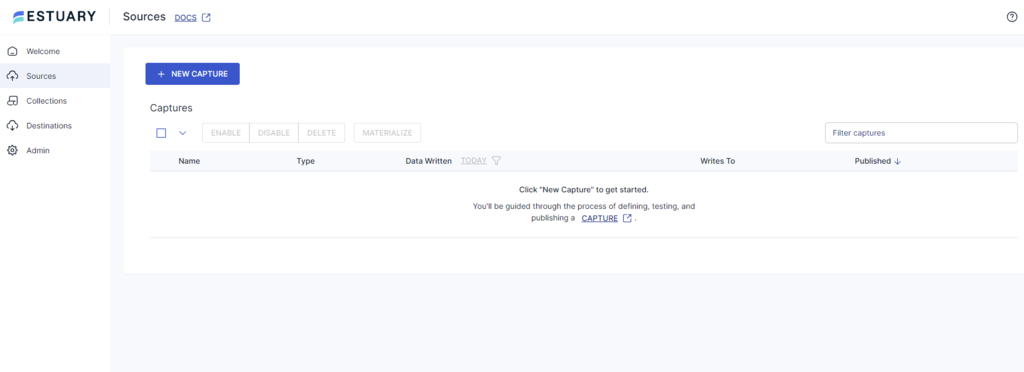

Step 1: Configure Kafka as a Source

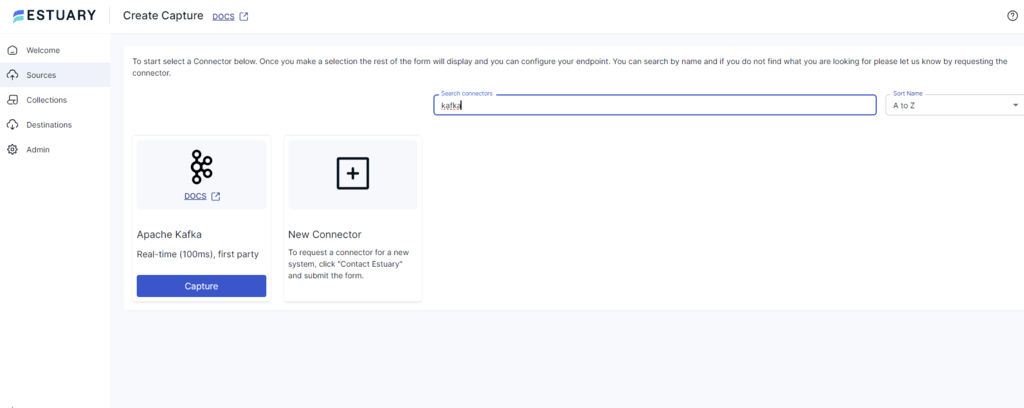

- Sign in to your Estuary account and click Sources on the dashboard.

- Click the + NEW CAPTURE button on the Sources page.

- Type Apache Kafka in the Search connectors box. When you see the Kafka connector in the search results, click on its Capture button.

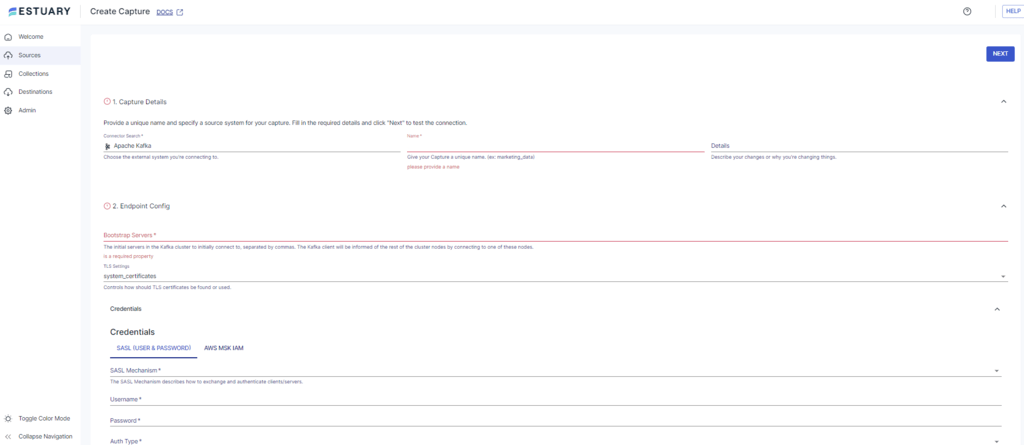

- On the Kafka Create Capture page, enter credentials such as Bootstrap Servers, Username, Password, and Auth Type. Then, click on NEXT > SAVE AND PUBLISH.

The connector will capture streaming data from Kafka topics. It supports Kafka messages that contain JSON data, and the Flow collections will store data as JSON, too. So, before you deploy the connector, ensure you modify the schema of the Flow collections you’re creating to reflect the structure of your JSON Kafka messages.
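Flow collection schemas are written as JSON Schema, so one way to draft a starting point is to derive a rough schema from a sample message. The sketch below is only a drafting aid; `infer_schema` is a hypothetical helper and not part of Flow, and the sample message is invented for illustration.

```python
import json


def infer_schema(value):
    """Derive a rough JSON Schema fragment from one sample JSON value."""
    if isinstance(value, bool):  # check bool before int: bool is an int subclass
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if isinstance(value, str):
        return {"type": "string"}
    if value is None:
        return {"type": "null"}
    if isinstance(value, list):
        # Use the first element as a guess for the item type.
        if value:
            return {"type": "array", "items": infer_schema(value[0])}
        return {"type": "array"}
    if isinstance(value, dict):
        return {
            "type": "object",
            "properties": {k: infer_schema(v) for k, v in value.items()},
            "required": sorted(value),
        }
    raise TypeError(f"Unsupported JSON value: {value!r}")


# Invented sample of a JSON Kafka message.
sample = json.loads('{"order_id": "o1", "amount": 9.5, "paid": true}')
print(json.dumps(infer_schema(sample), indent=2))
```

A schema inferred from a single message treats every field as required, so review it against more samples before relying on it.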

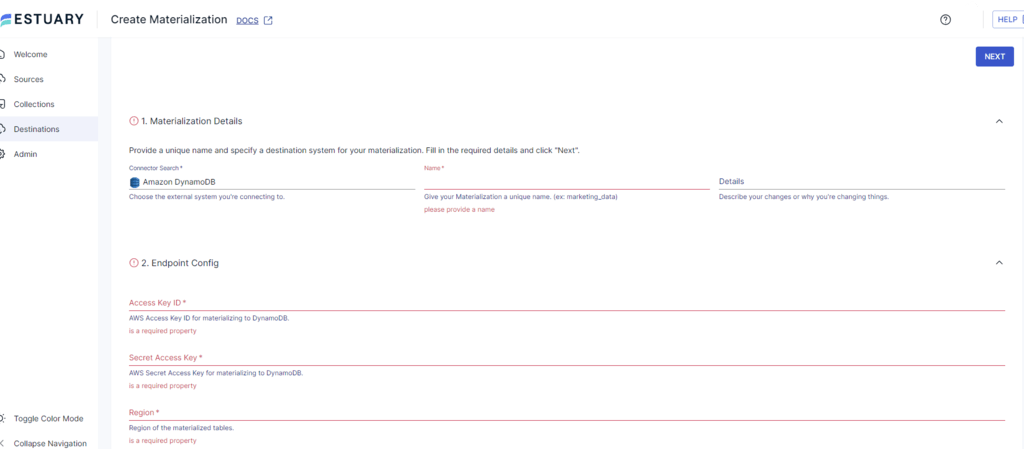

Step 2: Configure DynamoDB as the Destination

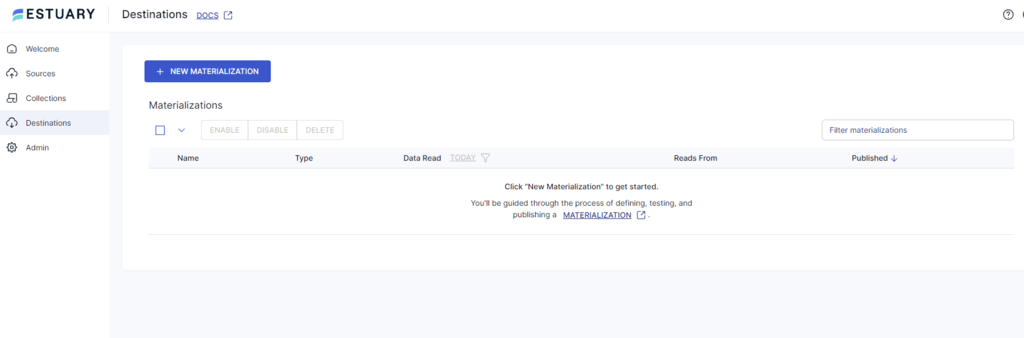

- To configure DynamoDB as the destination end of the data integration pipeline, navigate to the dashboard. Then, click on Destinations > + NEW MATERIALIZATION.

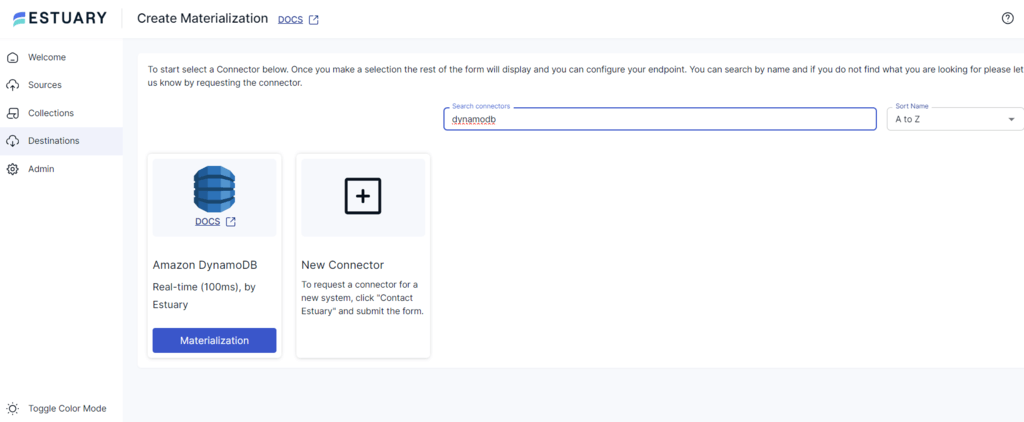

- In the Search connectors box, type DynamoDB. Click on the Materialization button of the connector.

- On the DynamoDB configuration page, enter the Access Key ID, Secret Access Key, and Region.

- Use the Source Collections section to link a capture to the materialization. Then click on NEXT > SAVE AND PUBLISH.

The DynamoDB connector materializes Flow collections of your Kafka data into tables in an Amazon DynamoDB instance.

Benefits of Using Estuary Flow

Let’s look at some impressive features of Estuary Flow that make it a popular choice for ETL (extract, transform, load) needs.

- Scalability: Estuary Flow’s distributed system enables the processing of large volumes of data. It can scale up to 7GB/s for change data capture from databases of any size.

- Connectors: Estuary Flow has a vast repository of connectors that can be used to integrate data between different sources and destinations. It also provides you with real-time updates, making your workflow less time-consuming.

- Improved Data Quality: Estuary Flow has a built-in data validation feature that detects any discrepancies in the incoming data. After identifying them, it filters them to prevent further errors. It enables data enrichment and transformation, helping build an effective data pipeline with better data quality.

- Change Data Capture: CDC helps ensure data freshness. Through CDC, Estuary Flow pipelines only capture the recent changes to the source data since the last capture. This ensures that you are working with updated information.

- Cost Effectiveness: It offers flexible pricing plans for varied needs. This makes Estuary an affordable ETL tool for your organization, irrespective of its size.

The Manual Approach: Using Custom Script to Migrate Data From Kafka to DynamoDB

In this method, custom Python scripts first export data from Kafka topics to CSV files and then load those files into DynamoDB. Here are the steps to do so.

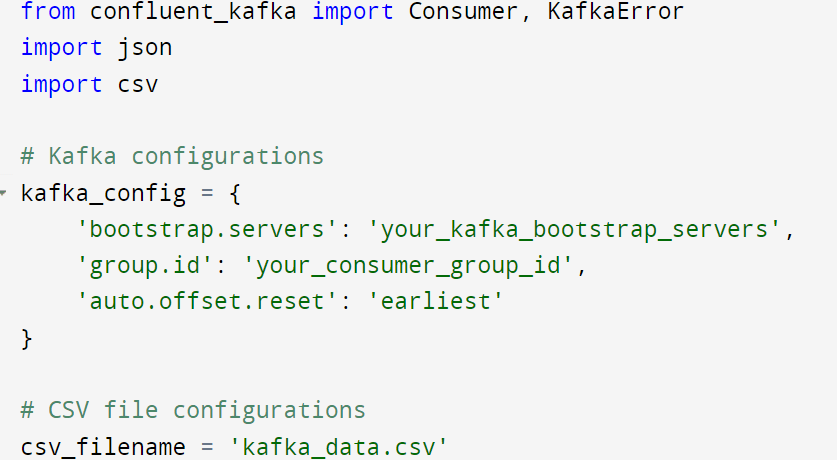

Step 1: Import Libraries and Set Configurations

First, import the necessary libraries, set up the configuration for connecting to Kafka, and specify the CSV file in which the data will be stored.

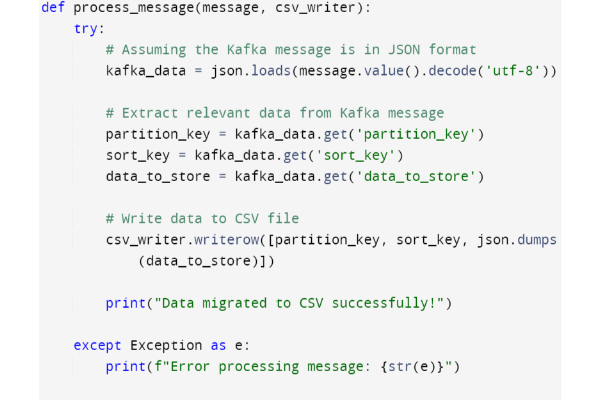

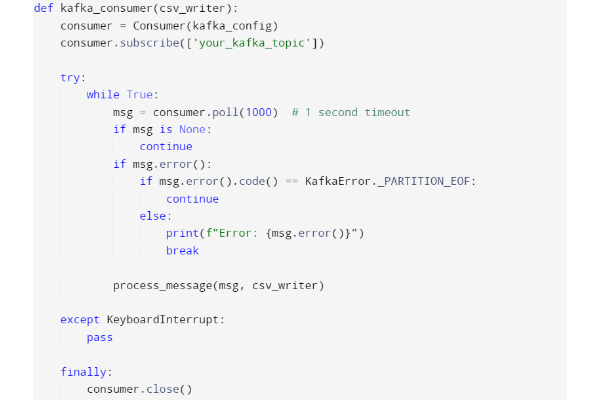
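A minimal sketch of this step might look like the following. The broker address, consumer group, topic name, CSV path, and field names are placeholder assumptions, and the configuration keys follow the confluent-kafka Python client, which is only one of several clients you could use.

```python
import csv
import os

# Assumed connection settings: replace with the values for your own cluster.
KAFKA_CONFIG = {
    "bootstrap.servers": os.environ.get("KAFKA_BOOTSTRAP_SERVERS", "localhost:9092"),
    "group.id": "kafka-to-csv-export",   # hypothetical consumer group name
    "auto.offset.reset": "earliest",     # start from the oldest available record
}
KAFKA_TOPIC = "orders"                   # hypothetical topic name

# Assumed output file and columns; the columns must match your message fields.
CSV_PATH = "kafka_export.csv"
CSV_FIELDS = ["order_id", "customer_id", "amount"]


def init_csv(path=CSV_PATH, fields=CSV_FIELDS):
    """Create the CSV file with a header row if it is missing or empty."""
    if not os.path.exists(path) or os.path.getsize(path) == 0:
        with open(path, "w", newline="", encoding="utf-8") as f:
            csv.DictWriter(f, fieldnames=fields).writeheader()
```

Writing the header once up front matters later, because the upload step assumes the CSV file has a header row.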

Step 2: Define Message Processing and Kafka Consumer

In this step, define two functions: one that processes each Kafka message and one that runs the Kafka consumer.

The process_message function extracts the data from a Kafka message and writes it to the CSV file.

The kafka_consumer function subscribes to the Kafka topic, polls for new messages, and invokes the processing function for each message it receives.

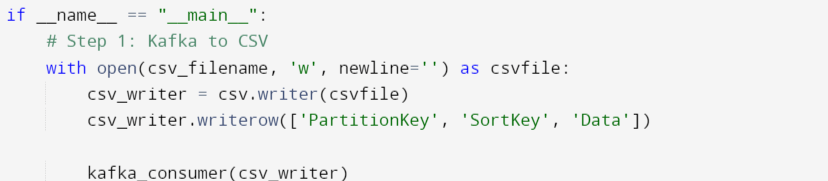

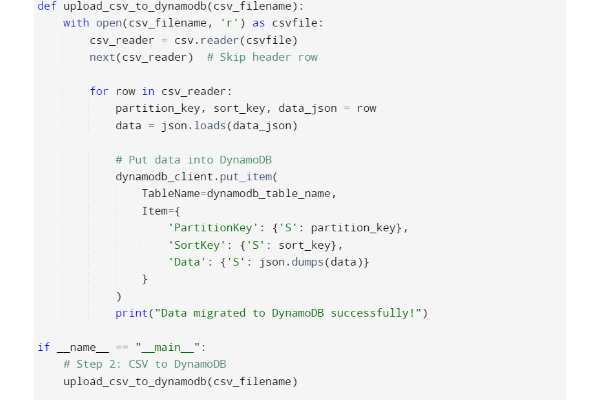
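The two functions could be sketched as follows. This assumes JSON-encoded message values and the confluent-kafka client (imported inside `kafka_consumer` so the rest of the code works without it); the field names and the `max_messages` parameter are hypothetical additions for the example.

```python
import csv
import json

CSV_FIELDS = ["order_id", "customer_id", "amount"]  # assumed message fields


def process_message(raw_value, csv_path, fields=CSV_FIELDS):
    """Decode one JSON message value and append it to the CSV file as a row."""
    record = json.loads(raw_value)
    # Keep only the expected columns; missing fields become empty cells.
    row = {name: record.get(name, "") for name in fields}
    with open(csv_path, "a", newline="", encoding="utf-8") as f:
        csv.DictWriter(f, fieldnames=fields).writerow(row)
    return row


def kafka_consumer(config, topic, csv_path, max_messages=None):
    """Subscribe to a topic, poll for messages and process each one."""
    from confluent_kafka import Consumer  # third-party: pip install confluent-kafka

    consumer = Consumer(config)
    consumer.subscribe([topic])
    handled = 0
    try:
        while max_messages is None or handled < max_messages:
            msg = consumer.poll(1.0)  # wait up to one second for a message
            if msg is None:
                continue  # nothing arrived within the timeout
            if msg.error():
                print(f"Consumer error: {msg.error()}")
                continue
            process_message(msg.value(), csv_path)
            handled += 1
    finally:
        consumer.close()  # leave the group cleanly
    return handled
```

Opening the file for every message keeps the sketch simple; a higher-volume export would likely keep the file open and write rows in batches.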

Step 3: Upload CSV Data to DynamoDB

You can upload the CSV data to DynamoDB using the following custom script.

Import libraries for connecting to DynamoDB. Define the function upload_csv_to_dynamodb, which reads data from CSV and uploads it to DynamoDB. It assumes that the CSV file has a header row and extracts data from each row for insertion in DynamoDB.
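One possible shape for this function is sketched below, using boto3's table resource and its batch writer. The region, the numeric column name, and the `row_to_item` helper are assumptions for illustration; AWS credentials and an existing table are also assumed.

```python
import csv
from decimal import Decimal


def row_to_item(row, numeric_fields=("amount",)):
    """Convert one CSV row (a dict of strings) into a DynamoDB item.

    Empty cells are skipped, and numeric columns become Decimal because
    boto3 does not accept Python floats for DynamoDB numbers.
    """
    item = {}
    for name, value in row.items():
        if value == "":
            continue
        item[name] = Decimal(value) if name in numeric_fields else value
    return item


def upload_csv_to_dynamodb(csv_path, table_name, region_name="us-east-1"):
    """Read a CSV file with a header row and write each row to DynamoDB."""
    import boto3  # third-party: pip install boto3

    table = boto3.resource("dynamodb", region_name=region_name).Table(table_name)
    count = 0
    with open(csv_path, newline="", encoding="utf-8") as f:
        # batch_writer groups the puts into batched requests automatically.
        with table.batch_writer() as batch:
            for row in csv.DictReader(f):
                batch.put_item(Item=row_to_item(row))
                count += 1
    return count
```

Every row must contain the table's primary key attributes, so the CSV columns need to include them.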

This completes the method of connecting Kafka to DynamoDB using a custom script.

Limitations of Using Custom Script for Data Migration from Kafka to DynamoDB

Let’s look into the limitations of using a custom script to migrate data from Kafka to DynamoDB.

- Complexity: Custom code for data migration is associated with several complexities. It requires sound knowledge of coding, debugging, and troubleshooting. Additionally, customizing the code becomes complicated when the data volumes increase.

- Error-prone: The use of custom scripts is error-prone, as bugs can be difficult to spot and fix. As a result, the migrated data may be inaccurate.

- Cost: Using a custom script for data migration has high associated costs in terms of personnel, infrastructure, and maintenance.

To overcome such limitations, you can use data migration tools like Estuary Flow to automate your data pipeline creation.

Conclusion

Data migration can be complex and challenging, with multiple solutions to execute the process. You explored two methods to migrate data from Apache Kafka to DynamoDB. While one method uses custom code to manually migrate Kafka data to DynamoDB, the other one uses an efficient data migration tool.

Estuary Flow, a real-time data integration tool, helps overcome the challenges of using custom code. You can leverage its features, such as real-time updates, scalability, intuitive interface, and affordability to get better analytical insights into your data.

Looking to integrate data from multiple sources into a single destination? Estuary Flow, with 200+ connectors, can help you achieve this almost effortlessly. Sign up for Flow right away to build efficient ETL data pipelines!

FAQs

- What data types does DynamoDB support?

DynamoDB supports three main categories of data types:

- Scalar Types: These represent exactly one value: number, string, binary, Boolean, and null.

- Document Types: These represent complex data structures with nested attributes: list and map.

- Set Types: These represent multiple scalar values: string set, number set, and binary set.
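To show how these categories look in practice, the sketch below converts Python values into the typed attribute format used by DynamoDB's low-level API (for example `{"S": "text"}` or `{"N": "42"}`). `to_attribute_value` is a hypothetical helper written for this example, and binary types are left out for brevity.

```python
def to_attribute_value(value):
    """Convert a Python value into DynamoDB's typed attribute format."""
    # Scalar types
    if value is None:
        return {"NULL": True}
    if isinstance(value, bool):  # check bool before numbers: bool is an int subclass
        return {"BOOL": value}
    if isinstance(value, (int, float)):
        return {"N": str(value)}  # numbers are sent as strings
    if isinstance(value, str):
        return {"S": value}
    # Set types: one element type per set, and never empty
    if isinstance(value, (set, frozenset)):
        if not value:
            raise ValueError("DynamoDB sets cannot be empty")
        if all(isinstance(v, str) for v in value):
            return {"SS": sorted(value)}
        if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in value):
            return {"NS": sorted(str(v) for v in value)}
        raise TypeError("Set elements must be all strings or all numbers")
    # Document types
    if isinstance(value, list):
        return {"L": [to_attribute_value(v) for v in value]}
    if isinstance(value, dict):
        return {"M": {k: to_attribute_value(v) for k, v in value.items()}}
    raise TypeError(f"Unsupported type: {type(value).__name__}")
```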

- What is the difference between DynamoDB and DynamoDB streams?

DynamoDB is a database, while DynamoDB Streams captures a time-ordered sequence of the item-level changes made in a DynamoDB table. In that sense, it works like a database trigger: you can use it to capture specific changes and take action on them.
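As an illustration of acting on captured changes, the sketch below groups the keys of changed items by event type. It assumes the record layout that DynamoDB Streams delivers to consumers such as AWS Lambda (`Records`, `eventName`, `dynamodb.Keys`); the function name and the sample event are invented.

```python
def summarize_stream_records(event):
    """Group the keys of changed items by stream event type."""
    summary = {"INSERT": [], "MODIFY": [], "REMOVE": []}
    for record in event.get("Records", []):
        event_name = record["eventName"]
        keys = record["dynamodb"]["Keys"]
        summary.setdefault(event_name, []).append(keys)
    return summary


# Invented sample event with one inserted and one removed item.
sample_event = {
    "Records": [
        {"eventName": "INSERT", "dynamodb": {"Keys": {"order_id": {"S": "o1"}}}},
        {"eventName": "REMOVE", "dynamodb": {"Keys": {"order_id": {"S": "o2"}}}},
    ]
}
changes = summarize_stream_records(sample_event)
```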

- What are the uses of Kafka?

Apache Kafka is a stream processing system used to build real-time streaming data pipelines and applications. It is also used for website tracking, logging, messaging, and event sourcing.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
