Sales and support analytics provide a window into customer behavior, letting you uncover trends, spot patterns, and make smarter decisions. Analytics holds the key to a more personalized experience, and in a world that’s getting more mechanical by the minute, it’s personalization FTW.

So, you already have a tool taking care of sales and support analytics for you: Intercom. To analyze that data properly, you need to consolidate it in a central data repository, like a data warehouse.

You landed on this article, so I’m guessing you’re looking for easy ways to move your data from Intercom to Redshift. Let’s dive in!

What is Intercom?

Intercom specializes in providing you with a customer service platform that ensures your customers keep coming back.

With Intercom’s AI-powered bot, Fin, you can easily answer support questions without providing any training to it beforehand. Since the need of the hour is automation to increase productivity, you can build powerful no-code automation with triggers, conditions, bots, and rules using Intercom’s workflows.

Here are three ways Intercom stands out from the pack:

- Article Suggestions: Using machine learning, you can instantly recommend helpful content to your customers right inside your product.

- AI-powered Inbox: Intercom can also summarize conversations for handoff to other agents, generate replies, and help you create valuable new support articles.

- Conversation Topics: With Intercom’s conversation topics, you get AI-powered analysis of support conversations. You can use these analyses to serve your customers better.

Intercom makes it ludicrously simple to integrate customer data across your tech stack, giving your team a comprehensive view of each customer’s recent history.

Now, what kind of data are we talking about here?

- Behavioral Data: You can target customers based on the actions they take or don’t take with your product.

- Conversation Data: Route and prioritize customer conversations based on attributes like urgency or topic.

- Company Data: Intercom provides you with a complete picture of every customer in a single place, including their business type, location, spending, and any other details you feel are pertinent to your business.

- Custom Data: You can track custom data that’s distinct to your business like the product type or the pricing plans you offer.

You can use Intercom’s 200+ reports to better understand and measure your customer engagement, lead generation, and customer support. Here are a few examples of the reports we guess you’re looking to move to Redshift for analysis purposes:

- Sales Report: This report will show you the following information:

  - Revenue influenced by Intercom (pipeline, opportunities, and closed won).

  - Meetings booked through Intercom.

  - Time to close opportunities.

  - Deals influenced by Intercom.

  - Conversions by page and message.

  - Teammate’s sales performance.

- Conversation Report: You can use this report to get a better understanding of your team’s workload, and the state of your inboxes. You can take a peek at the following data organized by topic, team, channel, and more:

  - Conversations replied to and distinct replies sent.

  - New conversations (inbound, and in reply to bots or messages).

  - Busiest periods for new conversations.

  - Open, closed, and snoozed conversations.

What is Redshift?

Amazon Redshift is one of the most widely used cloud-based data warehouses on the market today, offering a reliable and secure service that, according to AWS, delivers up to 5X better price performance than competing cloud data warehouses.

Here are a few features that make analyzing your data in Redshift a breeze:

- Data Sharing: Amazon Redshift allows you to extend the performance, ease of use, and cost benefits of a single cluster to multi-cluster deployments while sharing data. It gives you immediate access to data across clusters without having to move or copy it.

- Federated Query: Redshift’s federated queries allow you to reach into your operational relational databases. You can easily join data from your Redshift data warehouses, data in your operational stores, and data in your data lakes to make better data-driven decisions.

- Redshift ML: Redshift ML makes it simple for data practitioners to train, create, and deploy Amazon SageMaker models through SQL. You can use these models for financial forecasting, churn detection, risk scoring, and personalization in your queries and reports.

- Streaming Ingestion: Data analysts, data engineers, and big data developers are using real-time streaming engines to amp up customer responsiveness. With the streaming ingestion capability, you can use SQL in Redshift to directly connect to and ingest data from Amazon Managed Streaming for Apache Kafka (MSK) and Amazon Kinesis Data Streams. Streaming ingestion in Redshift makes it easy to create and manage downstream pipelines by letting you create materialized views on top of streams. You can refresh the materialized views to get the most recent streaming data.

What are the Benefits of Migrating Data from Intercom to Redshift?

- You can analyze every point of customer interaction to uncover the channels that are yielding the best dividends for you at each stage to keep your customers moving down your sales funnel.

- By integrating Intercom with Redshift, you can easily keep track of how every channel is performing when it comes to lead generation. It’ll also be easier for you to analyze customer communication habits, marketing ROI, and product usage behavior in a single customer view.

- You can analyze every marketing channel touchpoint to identify and segment the most engaged customers for your business. This’ll be simpler in a single data repository as compared to analyzing data present in different data silos.

Setting up Intercom to Redshift Integration: 2 Methods

In this section, we'll explore two distinct approaches for integrating Intercom with Redshift:

Method 1: Connecting Intercom to Redshift using Intercom APIs

The main components of the Intercom API are:

- Leads

- Users

- Tags

- Segments

- Companies

- Notes

- Counts

- Events

- Conversations

However, you can’t pull all of the above entities from the Intercom API. Events, for instance, can only be pushed inside Intercom and it isn’t possible to extract them again.

Step 1: Say you want to pull data about your users. You can run the following curl command:

```bash
curl https://api.intercom.io/users \
  -u pi3243fa:da39a3ee5e6b4b0d3255bfef95601890afd80709 \
  -H 'Accept: application/json'
```

Step 2: You can then extract the conversations held on Intercom with the following command. The URL is quoted so that the shell doesn't interpret the `&` characters in the query string:

```bash
curl 'https://api.intercom.io/conversations?type=admin&admin_id=25&open=true' \
  -u pi3243fa:da39a3ee5e6b4b0d3255bfef95601890afd80709 \
  -H 'Accept: application/json'
```

Step 3: Now that you have your JSON file handy, you need to move it to an S3 bucket for staging before the data is moved to Redshift. Once the file is in S3, Redshift's COPY command can load the JSON data using one of the following options:

- Load from JSON data using the ‘auto’ option

- Load from JSON data using the ‘auto ignorecase’ option

- Load from JSON data using a JSONPaths file

- Load from JSON arrays using a JSONPaths file
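Whichever option you pick, COPY expects the staged data file to contain a sequence of JSON objects (or arrays) rather than one wrapper object around a list, so an API response usually needs a little reshaping first. Here's a minimal Python sketch of that preparation step. The column names and paths in `COLUMN_PATHS` are hypothetical and only illustrate the shape of a JSONPaths file; adjust them to match your own table and the fields your Intercom responses actually contain.

```python
import json
from typing import Any, Dict, Iterable, List, Tuple

# Hypothetical mapping of Redshift columns to JSONPath expressions.
# The order must match the column order of the target table.
COLUMN_PATHS: List[Tuple[str, str]] = [
    ("id", "$['id']"),
    ("created_at", "$['created_at']"),
    ("updated_at", "$['updated_at']"),
    ("open", "$['open']"),
    ("assignee_id", "$['assignee']['id']"),
]


def build_jsonpaths(column_paths: List[Tuple[str, str]]) -> str:
    """Return the contents of a JSONPaths file: one path per table column."""
    return json.dumps({"jsonpaths": [path for _, path in column_paths]}, indent=2)


def to_json_lines(records: Iterable[Dict[str, Any]]) -> str:
    """Serialize records as one compact JSON object per line."""
    return "".join(json.dumps(r, separators=(",", ":")) + "\n" for r in records)


if __name__ == "__main__":
    # Stand-in for the list of conversations pulled from the API.
    sample = [{"id": "1", "open": True}, {"id": "2", "open": False}]
    print(build_jsonpaths(COLUMN_PATHS))
    print(to_json_lines(sample), end="")
```

You would write the two strings to separate files and upload both to your S3 bucket, for example with the AWS CLI or an SDK of your choice.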

Step 4: Let’s take an example of how you can load JSON data using a JSONPaths file. This option can be used to map JSON elements to columns when your JSON data objects don’t correspond directly to column names. Say your data file is named conversations_object_paths.json and your JSONPaths file is named conversations_jsonpath.json. To load the data, run the following command:

```sql
copy conversations
from 's3://mybucket/conversations_object_paths.json'
iam_role 'arn:aws:iam::0123456789012:role/MyRedshiftRole'
json 's3://mybucket/conversations_jsonpath.json';
```

Limitations of using Intercom APIs

- Rate Limitation: If you send too many requests in a short period without respecting the rate limits, the API responds with HTTP 429 (Too Many Requests) errors. Your application logic needs to read the response headers, which report how many requests you have left, and throttle itself accordingly.

- Pagination: Intercom APIs are paginated, which means that for large amounts of data, your application also needs logic to keep track of the current page and request the remaining pages in turn.
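To give a sense of what that extra logic looks like, here's a rough Python sketch that follows pages and backs off when it hits a rate limit. It rests on a few assumptions you should check against the Intercom API version you're using: that a throttled request returns status 429 with an `X-RateLimit-Reset` header holding a Unix timestamp, that each response carries the URL of the next page in `pages.next`, and that the list of records sits under a key such as `users`. The helper names (`http_get`, `wait_seconds`, `iter_items`) are made up for this example.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterator, Tuple

# (HTTP status, lower-cased headers, parsed JSON body)
Response = Tuple[int, Dict[str, str], dict]


def http_get(url: str, auth_header: str) -> Response:
    """Fetch one page using only the standard library."""
    request = urllib.request.Request(
        url,
        headers={"Authorization": auth_header, "Accept": "application/json"},
    )
    try:
        with urllib.request.urlopen(request) as resp:
            headers = {k.lower(): v for k, v in resp.headers.items()}
            return resp.status, headers, json.load(resp)
    except urllib.error.HTTPError as err:
        headers = {k.lower(): v for k, v in err.headers.items()}
        return err.code, headers, {}


def wait_seconds(headers: Dict[str, str], now: float) -> float:
    """Seconds to pause before retrying (assumes a Unix-timestamp reset header)."""
    reset = headers.get("x-ratelimit-reset")
    if reset is None:
        return 1.0  # no hint from the server: fall back to a short pause
    return max(0.0, float(reset) - now)


def iter_items(
    first_url: str,
    items_key: str,
    fetch: Callable[[str], Response],
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.time,
    max_retries: int = 5,
) -> Iterator[dict]:
    """Yield every record across all pages, pausing whenever a 429 comes back."""
    url, retries = first_url, 0
    while url:
        status, headers, body = fetch(url)
        if status == 429:
            if retries >= max_retries:
                raise RuntimeError(f"still rate limited after {retries} retries")
            retries += 1
            sleep(wait_seconds(headers, clock()))
            continue  # retry the same page
        if status != 200:
            raise RuntimeError(f"unexpected status {status} for {url}")
        retries = 0
        yield from body.get(items_key, [])
        # Assumption: the next page is given as a full URL, or is absent/None.
        url = (body.get("pages") or {}).get("next")


# Example usage (needs real credentials, so it is left commented out):
# fetch = lambda url: http_get(url, "Bearer <your-access-token>")
# for user in iter_items("https://api.intercom.io/users", "users", fetch):
#     print(user)
```

Passing `fetch`, `sleep`, and `clock` in as parameters keeps the paging logic separate from the network call, which makes it easy to test without touching the real API.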

Method 2: Using Estuary Flow to Connect Intercom to Redshift

You can set up a migration flow from Intercom to Amazon Redshift using Estuary Flow in two simple steps.

Step 1: Configure Intercom as a Source

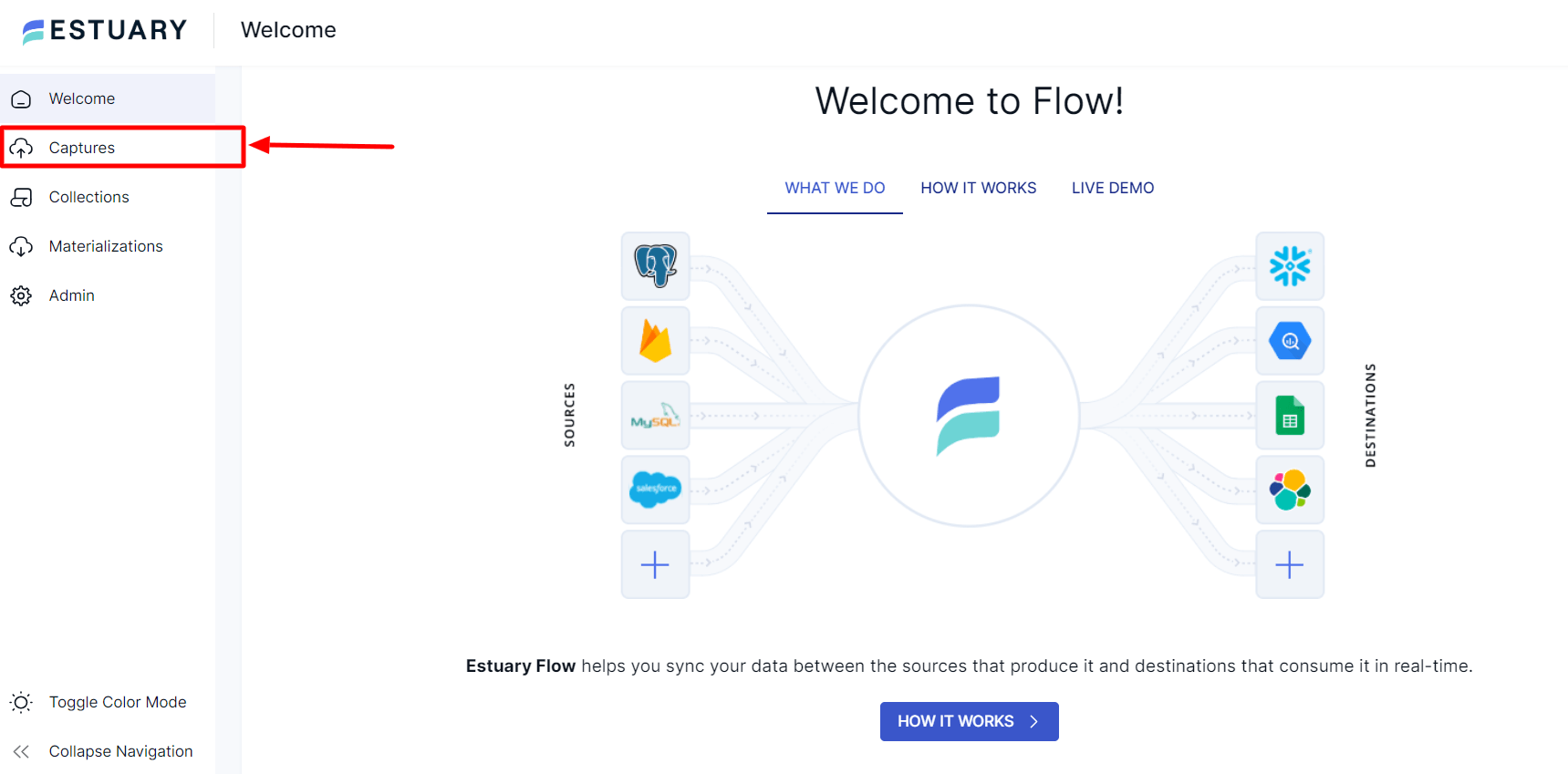

- You can either sign in to your Estuary account or sign up for free. Once you’ve signed in, click on Captures.

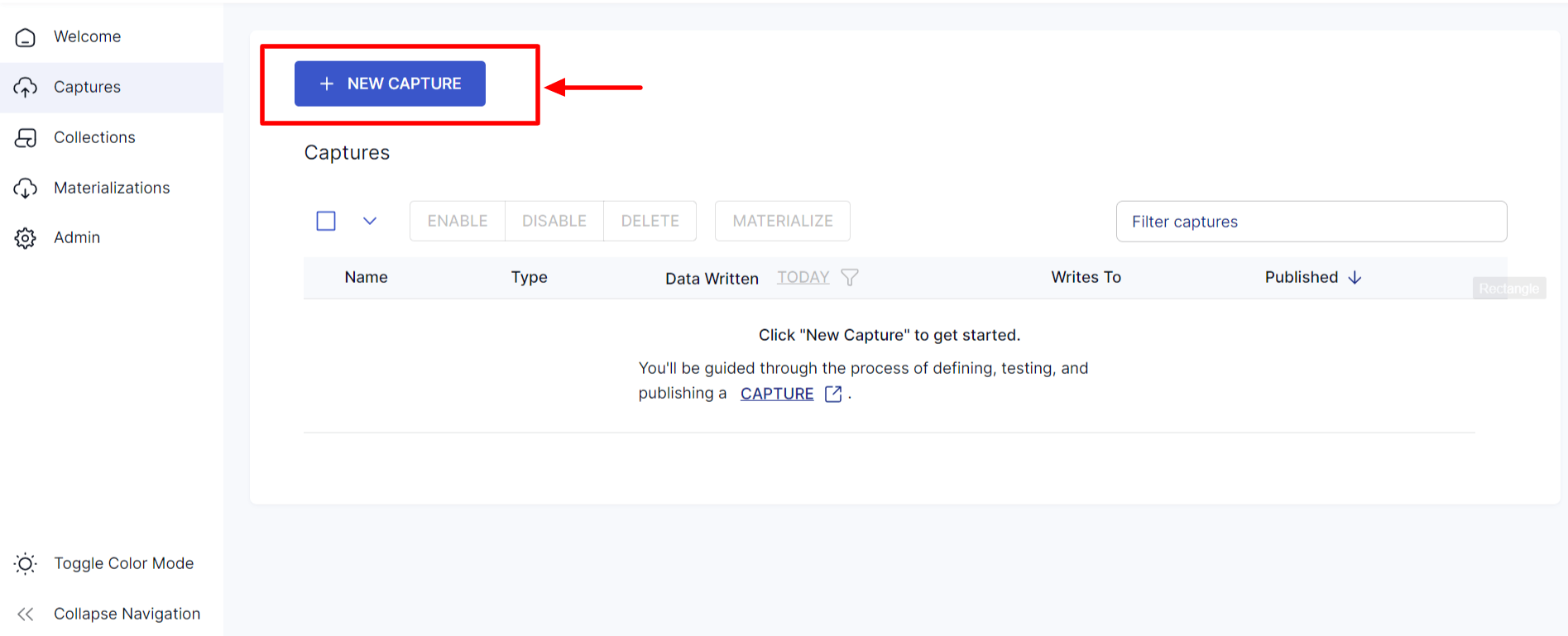

- Click on the + NEW CAPTURE button to get started.

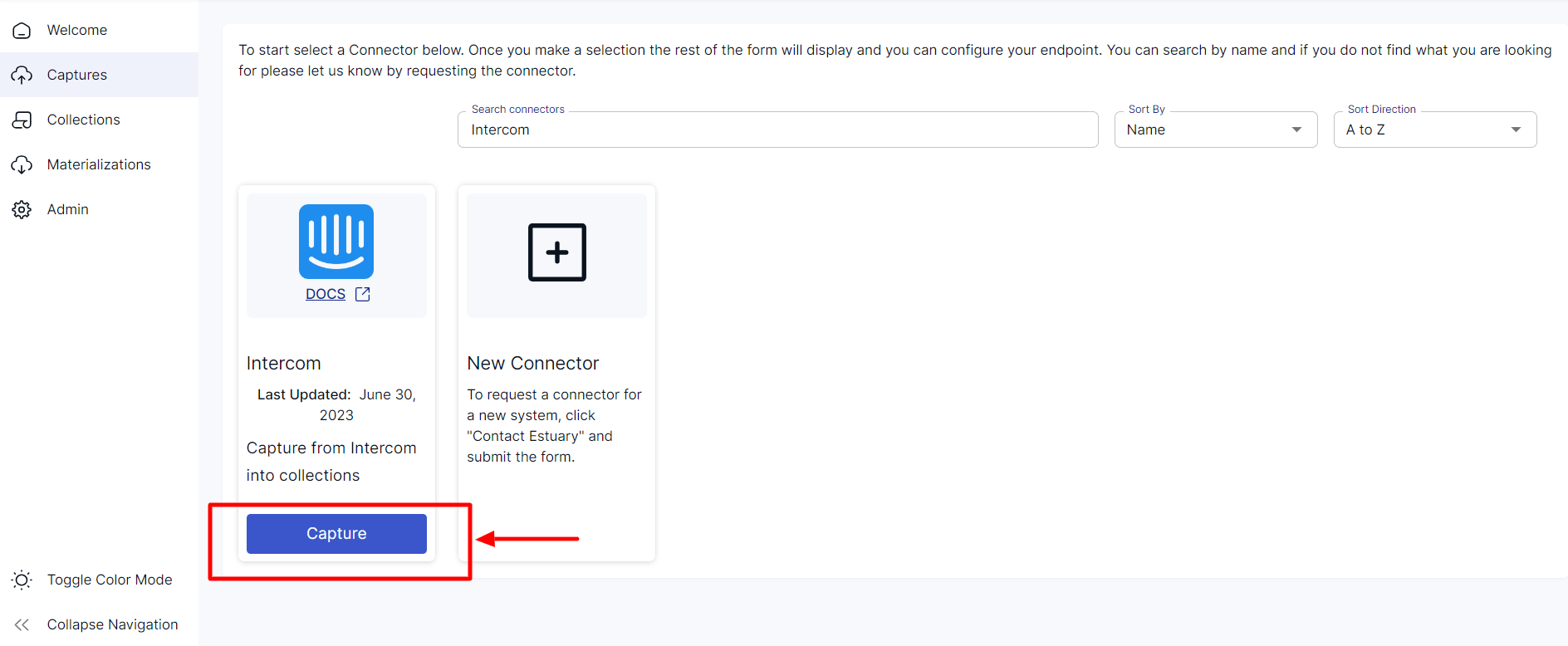

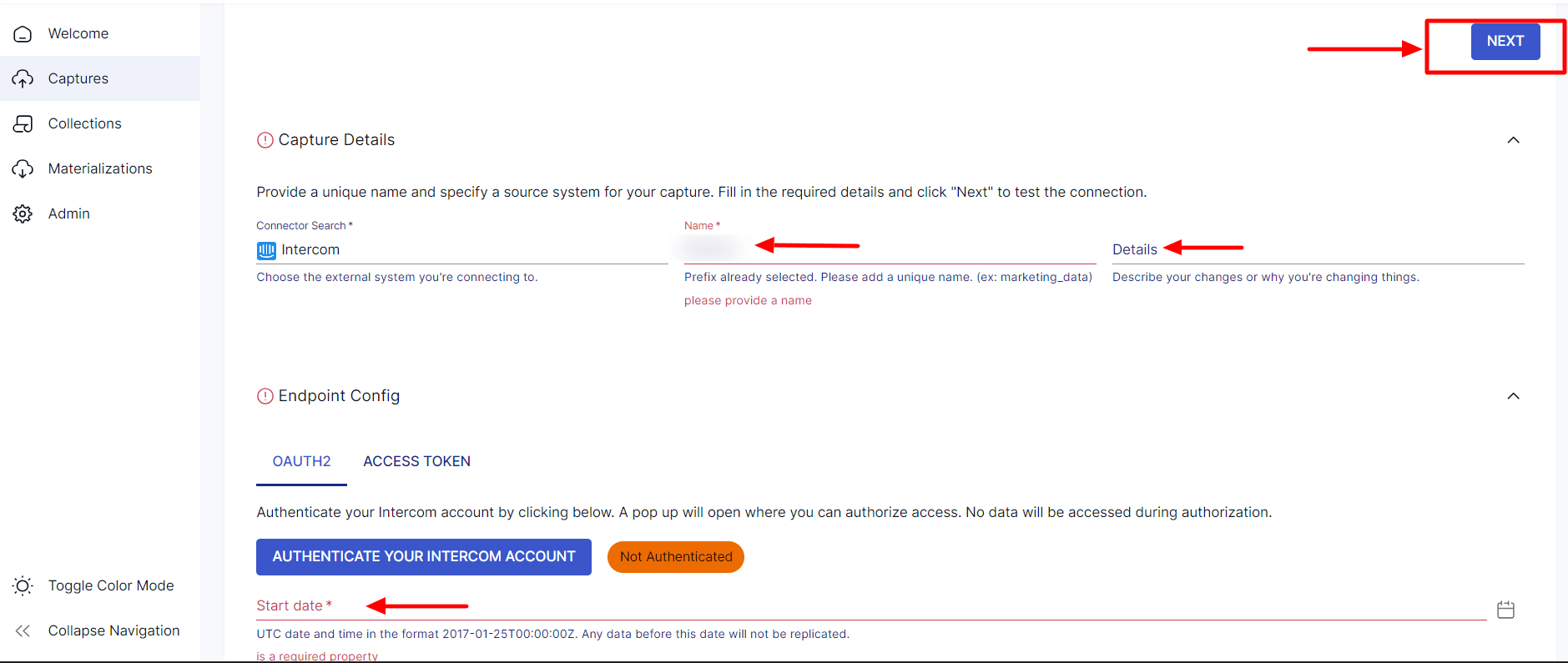

- On the Capture page, look for Intercom and click on Capture.

- Give the capture a name and fill in all the details like Name, Details, and Start Date. Once you’ve filled in all the details, click on Next. Estuary Flow will set up a connection to your Intercom account and pinpoint the data tables.

- Click Save and Publish to save the capture configuration.

Step 2: Configure Redshift as a Destination

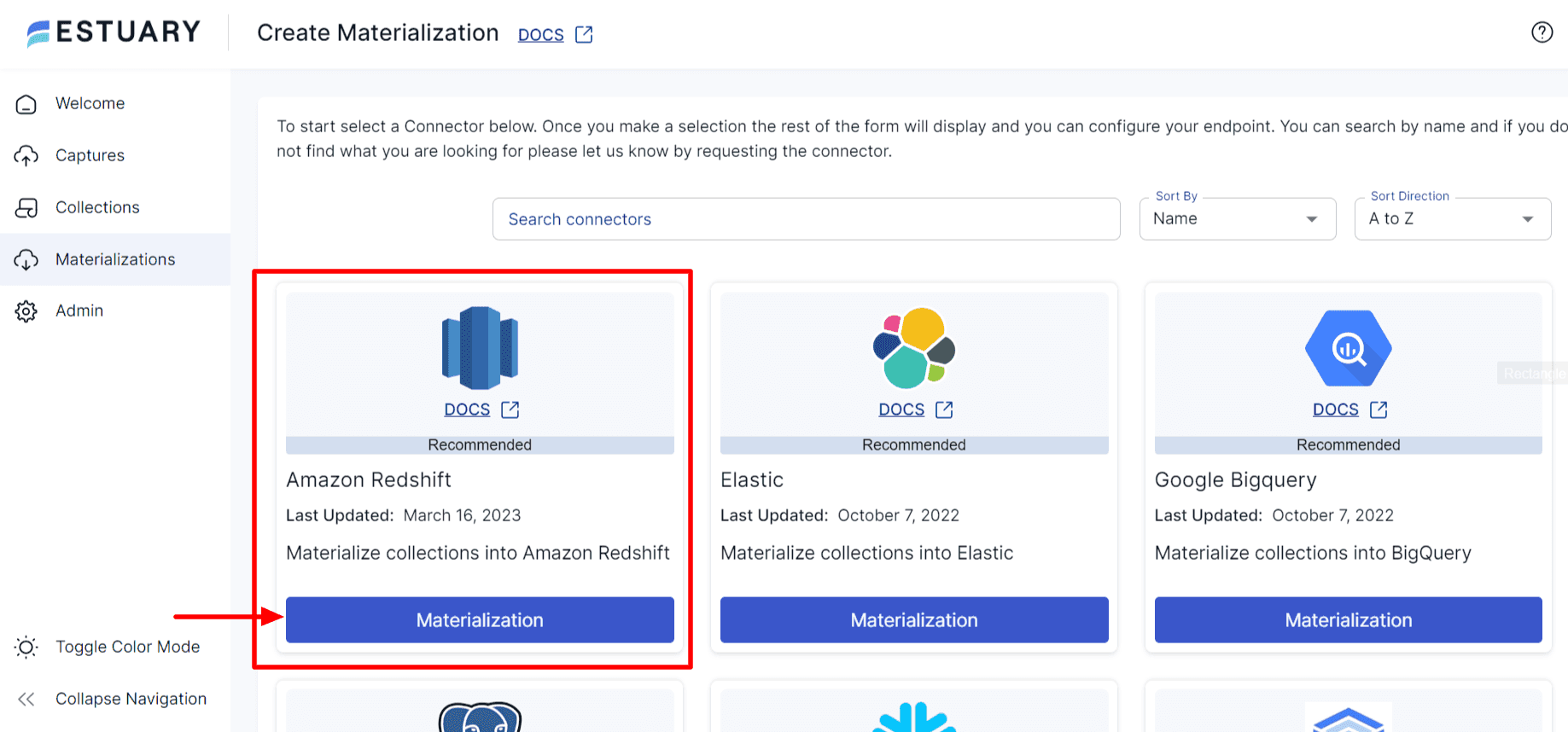

- Once you’ve captured data from Intercom, you need to configure Redshift as your destination. To do this, click on Materialize Connections in the popup or navigate to the Estuary dashboard and click on Materializations in the left pane.

- Click on +NEW MATERIALIZATION to get started.

- In the Materialization window, look for Amazon Redshift and click on Materialization.

- Give your materialization a suitable name and fill in all the Materialization Endpoint Config details like Address, User, Password, Database Name among others. Click on Next when you’ve filled those out.

- Choose the Source Collections from Intercom if you haven’t done that already.

- Click on Save and Publish and you’re done with the setup. Now, you can seamlessly move data from Intercom to Amazon Redshift for carrying out data analyses.

What makes Estuary Flow different from the other tools in the market?

Estuary’s Intercom connector supports all the fields, including custom fields that some competing tools don’t support. If you wanna capture “Custom Attributes” from your Intercom dataset, Estuary Flow provides out-of-the-box support to do just that.

You can use Estuary Flow to bring real-time data for analysis into your data warehouse without any prior coding knowledge. Estuary Flow is a platform built for data architects, and here’s why you should try it for your data migration needs:

- Streaming ELT: With Estuary Flow, you can centralize data with real-time replication from a vast multitude of sources to a destination of your choice.

- Customer 360: Estuary Flow provides you with a unified view of your customers while your teams interact with them. Enjoy seamless access to real-time and historical data.

- Data Modernization: Estuary Flow allows you to easily connect old systems to a cloud setup that can be expanded as needed.

Conclusion

In this article, we discussed two potential methods for you to move data from Intercom to Amazon Redshift for your analysis. You can opt for Intercom APIs if the data you’re planning to analyze isn’t voluminous. However, it's important to consider that even then, pagination and rate limits may hurt efficiency and slow down data extraction.

For these scenarios, choosing third-party tools like Estuary Flow can be a good way to ensure seamless analysis. Its code-free streaming data operations platform gives you access to real-time data with history and the integrations you need.

You can try Estuary Flow for free today to make working with real-time data more cost-effective and just as simple as working with batch data! Head to the web app to start (no credit card needed)!

Interested in exploring how to transfer Intercom data to BigQuery? Dive into our guide on Intercom to BigQuery for detailed insights and strategies.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.