Companies need to be experts at their core business, so it’s rare that they can also lead in other areas. Imagine the problems that a large bank or airline deals with daily. Their attention should be squarely on hedging financial risk to beat the market or handling logistics for their fleet and customers, not on designing next-generation data systems, HR systems or recruiting software. For most non-core business applications, companies don’t feel the need to reinvent the wheel, but when it comes to tech infrastructure like data processing and integrations, the enterprise hasn’t yet been able to take advantage of experts and innovators: the option of “buy” vs. “build” doesn’t yet truly exist.

Paying someone else to build only works for the few

To leverage an expert, a business can either outsource or work with a vendor. Outsourcing poses serious privacy and security risks by handing the keys to the castle to a third party. I’ve seen firsthand that engagements with even the best of them (e.g., McKinsey) lead to a combination of 7+ figure bills and B- deliverables and technology, which makes that route less than ideal.

Vendor Integrations: SaaS Requires Trust

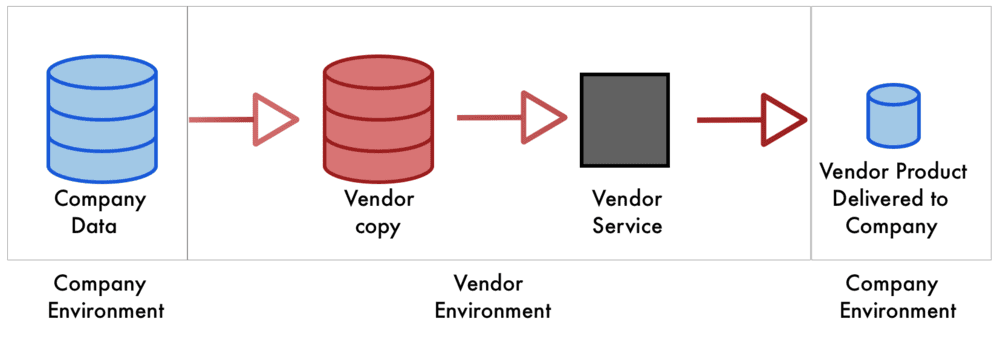

The majority of companies can’t afford McKinsey and therefore need to either build or work with a vendor, both of which have suboptimal prospects. Most data processing and orchestration vendors operate under a SaaS model, where the state of the art involves copying the customer’s data, sending it to the vendor, having the vendor process it, and sending it back to its owner along with derived assets, the “product” (e.g., reports or machine learning models). For the enterprise, this can be prohibitive: contracts and policies likely prevent the data from being sent externally. Even if they don’t, copying data limits what you can do with it, since its lineage can’t be preserved once it has been copied. Finally, security risks increase each time data is provisioned to an external company. When data is copied and stored in a second location, there’s immediately twice the surface area for cyber attacks.

Imagine a large public bank provisioning its account data to a small startup that could help it gather insights faster: highly unlikely. Relying on a startup to manage the complex privacy and regulatory landscape in an arena with zero tolerance for mistakes would essentially be a non-starter, not to mention the added security risk of copying data to a third party.

On-Prem Solutions?

This is a space with few, if any, on-prem alternatives. As a result, an entire class of tools hasn’t been available to the security- and privacy-conscious enterprise, since anything that can’t be hosted internally is unlikely to garner usage. Technologies that haven’t yet broken through to the enterprise include ELT providers (Fivetran, Stitch), most transformation tools (Snowflake, dbt) and machine learning tools (Alteryx, BigSquid), to name just a few; the same is true of essentially any company in the data processing and pipelining space whose product can’t be self-hosted.

Enterprises waste time away from their core business

Importantly, this means that the enterprise hasn’t been able to take advantage of experts in these fields. Instead of using custom-built solutions to powerfully and thoughtfully address problems, companies are forced to become experts themselves in selecting, composing, combining, tuning and operating the myriad open source stacks required to replicate existing solutions. Realistically, nearly every company would be better served focusing on its core business than reinventing the wheel.

Kubernetes can enable the ease of the cloud with the privacy and security of on-prem

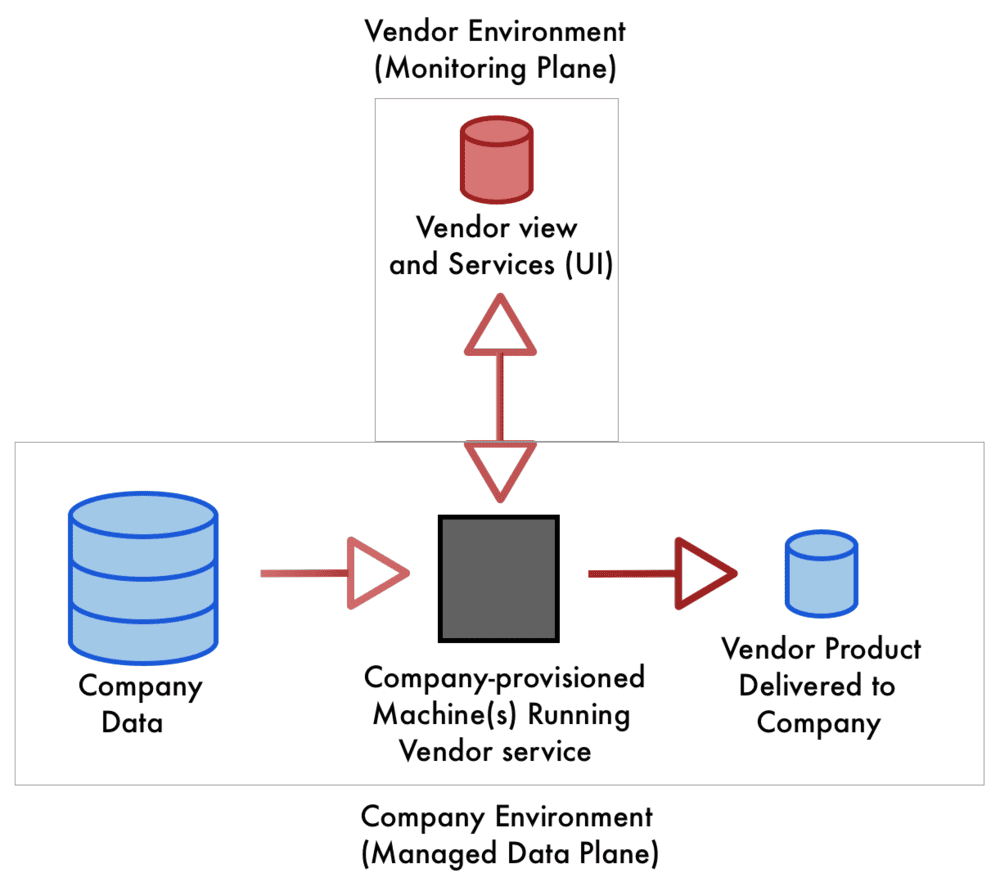

Vendor integrations have historically come in two varieties: (a) SaaS, where the vendor copies data from the client and works on it in the vendor’s environment, or (b) on-prem, where the vendor ships an application that runs on the customer’s premises (e.g., GitHub Enterprise). From a technical standpoint, only recently has new technology made it feasible to gain the monitoring, control and visibility that companies get from (a) while preserving the privacy and security of (b). For example, Kubernetes allows vendors to manage a service that exists and runs on company servers: all data is kept solely in an environment that is entirely controlled and secured by your company, while the vendor still has rich visibility and monitoring.

For the first time, vendors with data-processing expertise could package, deliver, monitor and maintain a managed service: a data plane that runs within the company’s Kubernetes environment. All company data could reside within, and never leave, the managed data plane. On top of this, the vendor can still provide the upsides of typical cloud-managed SaaS through a control plane that it runs as an external service. Companies would still enjoy a polished UI and consolidated management, because the control plane pairs with the customer-installed data plane, allowing for a customer experience nearly identical to today’s industry standards.
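To make the control-plane/data-plane split more concrete, here is a minimal Python sketch. Everything in it is hypothetical and illustrative, not Estuary’s implementation or any Kubernetes API: `DataPlane` stands in for vendor software running in the customer’s cluster, `local_store` stands in for customer-owned storage, and `HealthReport` is assumed to be the only payload that crosses over to the vendor’s control plane. The idea it shows is that the vendor can see counts and status without ever receiving a record.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable

# Hypothetical sketch: names and structure are illustrative, not a real product API.


@dataclass(frozen=True)
class HealthReport:
    """Telemetry sent to the vendor's control plane: counters and status only."""
    plane_id: str
    records_processed: int
    errors: int
    status: str


@dataclass
class DataPlane:
    """Vendor-managed processing that runs inside the customer's own cluster."""
    plane_id: str
    transform: Callable[[dict], dict]
    # Stand-in for customer-owned storage; its contents are never reported out.
    local_store: list = field(default_factory=list)
    processed: int = 0
    errors: int = 0

    def process_batch(self, records: Iterable[dict]) -> None:
        """Transform records locally, counting failures instead of stopping."""
        for record in records:
            try:
                self.local_store.append(self.transform(record))
                self.processed += 1
            except (KeyError, TypeError, ValueError):
                self.errors += 1

    def health_report(self) -> HealthReport:
        """Build the only payload that leaves the customer's environment."""
        status = "ok" if self.errors == 0 else "degraded"
        return HealthReport(self.plane_id, self.processed, self.errors, status)


# Usage: the second record is malformed, so it is counted as an error.
plane = DataPlane(
    "bank-prod-1",
    transform=lambda r: {**r, "amount_cents": int(r["amount"] * 100)},
)
plane.process_batch([{"account": "A1", "amount": 12.5}, {"account": "A2"}])
report = plane.health_report()
print(report)
# HealthReport(plane_id='bank-prod-1', records_processed=1, errors=1, status='degraded')
```

In a real deployment the report would presumably travel over an authenticated channel to the control plane. Keeping its schema limited to counters and status strings is one simple way to make a “data never leaves” promise easier for the customer to audit.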

The benefits of using this Kubernetes-fueled approach to create a managed data plane are many:

- Cost and time: Instead of paying for expensive and time-consuming transfers of data outside your infrastructure to a third party, process it where it sits. Data transfer is often one of the biggest line items in a cloud bill.

- Privacy and security: All data resides within a company’s infrastructure and never leaves it. The enterprise can rest easy knowing that it has full control over the security and privacy of its data.

- Engineering time: Instead of becoming experts in operating complex, distributed processing engines, companies can now outsource that work to an expert.

- Infrastructure agnostic: Solutions built in this manner work regardless of infrastructure, in both self-hosted and cloud environments.

Furthermore, Kubernetes opens new possibilities for low-latency applications that run on your infrastructure. Imagine being able to plug in new services (managed machine learning, reporting or analytics) that respond immediately because they run next to your data, without the expense of transferring it over the internet. Testing out vendors becomes simple and painless.

There are challenges with the approach. For vendors, this operating model requires thoughtful approaches to application instrumentation and consolidated monitoring. Rather than having one or a few vendor-hosted multi-tenant environments to manage, the vendor must have scalable processes to manage and monitor thousands of external customer data planes. All good paradigm shifts have some complexity to work through.
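As a rough illustration of what managing thousands of external data planes might involve, the sketch below assumes each data plane periodically sends a small heartbeat (an ID, a timestamp and a status) to the vendor’s control plane. `Heartbeat`, `planes_needing_attention` and the 300-second threshold are hypothetical choices made for this example, not a description of any particular product.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List

# Hypothetical sketch of fleet monitoring in a vendor control plane.


@dataclass(frozen=True)
class Heartbeat:
    plane_id: str
    timestamp: float  # seconds since the epoch, as reported by the data plane
    status: str       # e.g. "ok" or "degraded"


def planes_needing_attention(
    expected_planes: Iterable[str],
    heartbeats: Iterable[Heartbeat],
    now: float,
    max_silence_s: float = 300.0,
) -> List[str]:
    """Return sorted IDs of planes that are silent, stale, or not reporting 'ok'."""
    # Keep only the most recent heartbeat per data plane.
    latest: Dict[str, Heartbeat] = {}
    for hb in heartbeats:
        seen = latest.get(hb.plane_id)
        if seen is None or hb.timestamp > seen.timestamp:
            latest[hb.plane_id] = hb

    # Flag planes with no heartbeat at all, a stale one, or a non-"ok" status.
    flagged = []
    for plane_id in expected_planes:
        hb = latest.get(plane_id)
        if hb is None or now - hb.timestamp > max_silence_s or hb.status != "ok":
            flagged.append(plane_id)
    return sorted(flagged)


beats = [
    Heartbeat("cust-a", 1000.0, "ok"),
    Heartbeat("cust-a", 1290.0, "ok"),
    Heartbeat("cust-b", 900.0, "ok"),
    Heartbeat("cust-c", 1280.0, "degraded"),
]
print(planes_needing_attention(["cust-a", "cust-b", "cust-c", "cust-d"], beats, now=1300.0))
# ['cust-b', 'cust-c', 'cust-d']
```

Tracking only the latest heartbeat per plane keeps the check linear in the number of heartbeats, and listing the expected planes explicitly means a customer deployment that has gone completely silent is flagged rather than quietly ignored.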

Undoubtedly, the next generation of tech infrastructure vendors will leverage tools like Kubernetes to build technologies that even the biggest enterprises can use without operational or business risk. The only real question is which industries and vendors will move first to enable this new paradigm. Estuary is committed to applying these design principles for privacy, security and customer confidence.

About the author

David Yaffe is a co-founder and the CEO of Estuary. He previously served as the COO of LiveRamp and as the co-founder and CEO of Arbor, which was sold to LiveRamp in 2016. He has an extensive background in product management, having served as head of product for DoubleClick Bid Manager and Invite Media.
