Modern applications generate large amounts of data daily, making robust data management necessary for optimal performance. Real-time data synchronization is one such technique that is essential for dynamic data management within your applications.

Google Firestore, a scalable NoSQL database, provides real-time data syncing, making it a strong choice for dynamic applications. However, while Firestore is well suited to real-time operations and scalability, it is not designed for large-scale, complex data analysis.

This is where Google BigQuery steps in. BigQuery is engineered to handle high-concurrency analytics workloads with ease, enabling you to perform complex queries and derive actionable insights that drive better business decisions.

Let's dive into a quick overview of both platforms and explore the various methods for ingesting data from Firestore into BigQuery, empowering you to harness the full potential of your data.

If you’re a seasoned data engineer who is already familiar with these tools, feel free to skip to the implementation section to learn how to quickly ingest Firestore data into BigQuery.

Firestore – The Source

Firestore is a NoSQL cloud database built on Google Cloud infrastructure to support web, mobile, and server applications. Its flexible, hierarchical data model organizes data into documents, which are grouped into collections; a document can in turn contain its own subcollections.
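To make the hierarchy concrete, here is a minimal Python sketch of how a document path alternates between collection IDs and document IDs. The function name and the sample path are hypothetical and used only for illustration; this is not part of any Firestore client library.

```python
from typing import List, Tuple


def parse_document_path(path: str) -> List[Tuple[str, str]]:
    """Split a Firestore-style document path into (collection, document) pairs.

    Document paths alternate collection IDs and document IDs, so a valid
    document path has an even, non-zero number of non-empty segments.
    """
    segments = path.strip("/").split("/")
    if len(segments) % 2 != 0 or any(not s for s in segments):
        raise ValueError(f"not a document path: {path!r}")
    # Pair up segments: (collection, document), (subcollection, document), ...
    return list(zip(segments[0::2], segments[1::2]))


# Hypothetical path: an order document in a subcollection of a user document.
pairs = parse_document_path("users/alice/orders/order-1001")
print(pairs)
```

A path with an odd number of segments, such as `users`, names a collection rather than a document, which is why the sketch rejects it.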

One of Firestore's standout features is its offline support. This functionality enables your applications to access and modify data even without an internet connection, ensuring that your users experience uninterrupted performance. Once connectivity is restored, Firestore automatically synchronizes any offline changes across multiple client apps, maintaining data consistency and enhancing your application's responsiveness regardless of network conditions.

With Firestore, you can build dynamic, responsive applications that perform reliably in both connected and disconnected environments.

BigQuery – The Destination

Google BigQuery is a powerful, fully managed data warehouse and analytics platform that streamlines the process of analyzing large datasets. With its robust built-in features for machine learning, business intelligence, and geospatial analysis, BigQuery enables you to derive insights efficiently and effectively.

BigQuery’s architecture is divided into two key components:

- Storage Layer: This layer is where data is ingested, stored, and optimized. Utilizing columnar storage, BigQuery ensures efficient data storage and retrieval by accessing only the necessary columns during queries. This design enhances performance and reduces costs.

- Compute Layer: Separate from the storage layer, this layer provides the analytics capabilities. You can dynamically allocate computing resources as needed without impacting storage operations, allowing for flexible and scalable data processing.
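The benefit of columnar storage can be illustrated with a small Python sketch. This is a conceptual toy with made-up data, not a description of how BigQuery stores data internally: it pivots row records into one list per column and shows that an aggregate over one column never touches the others.

```python
from typing import Dict, List

# Row-oriented layout: each record keeps all of its fields together.
rows = [
    {"user_id": 1, "country": "DE", "amount": 20.0},
    {"user_id": 2, "country": "US", "amount": 35.5},
    {"user_id": 3, "country": "US", "amount": 12.25},
]


def to_columns(records: List[dict]) -> Dict[str, list]:
    """Pivot row records into one list of values per column."""
    columns: Dict[str, list] = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# A query such as SUM(amount) reads only the "amount" column;
# "user_id" and "country" are never scanned.
total_amount = sum(columns["amount"])
print(total_amount)
```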

Let’s look at some of BigQuery's key capabilities.

- Scalability: BigQuery is engineered for effortless scalability, handling data volumes from gigabytes to petabytes seamlessly. Its robust infrastructure supports rapidly growing datasets and intensive data analysis without any performance degradation, ensuring that your analytical needs are met as your data expands.

- SQL Querying: BigQuery uses standard SQL, a language familiar to many developers and data analysts. This familiarity allows you to write queries for data analysis using syntax you already know, eliminating the learning curve associated with new languages and accelerating the adoption of BigQuery within your team.

- BigQuery ML: Typically, implementing machine learning or AI on large datasets requires extensive knowledge of programming machine learning frameworks. BigQuery ML simplifies this process by enabling you to create and run machine learning models using standard SQL queries. This user-friendly approach significantly speeds up the development of predictive models, allowing for faster and more efficient decision-making.

- Security and Compliance: BigQuery provides robust security features, including data encryption at rest and in transit, fine-grained access controls, and compliance with various regulatory standards. These features ensure that your data is secure and meets industry compliance requirements.
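As an illustration of the BigQuery ML point above, the following Python sketch renders a `CREATE MODEL` statement for a logistic regression model. The dataset, table, and column names are hypothetical, and the helper only builds the SQL text; you would still run the statement in BigQuery yourself.

```python
from typing import List


def create_model_sql(model: str, source_table: str, label: str, features: List[str]) -> str:
    """Render a BigQuery ML CREATE MODEL statement for logistic regression."""
    selected = ", ".join(features + [label])
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = 'logistic_reg', input_label_cols = ['{label}'])\n"
        f"AS SELECT {selected}\n"
        f"FROM `{source_table}`"
    )


# Hypothetical names: a churn model trained on a users table.
sql = create_model_sql(
    model="analytics.churn_model",
    source_table="analytics.users",
    label="churned",
    features=["sessions_last_30d", "plan"],
)
print(sql)
```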

Why Connect Firestore to BigQuery?

- Scalability: BigQuery's robust scalability allows you to easily manage large volumes of incoming Firestore data. You can store and maintain this data in a central repository for comprehensive analysis.

- Complex Querying: As your application grows, it will generate more data, requiring intensive BI analysis. BigQuery helps you perform advanced analysis on your Firestore data, using machine learning and predictive analytics to generate valuable insights for improved decision-making.

- Performance Optimization: BigQuery optimizes performance with columnar storage, which organizes data in columns instead of rows. This storage method enhances data compression and enables efficient data distribution across servers.

How to Stream Data from Firestore to BigQuery

You can stream data from Firestore to BigQuery in two ways:

The Automated Way: Using Estuary Flow

Estuary Flow offers a streamlined and automated solution for streaming data from Firestore to BigQuery. With Estuary Flow, in just a few minutes you can set up a continuous data pipeline that ensures real-time data synchronization between the two platforms. This method not only reduces manual intervention but also guarantees that your data is always current, allowing you to focus on generating insights rather than managing data transfers. Estuary Flow's user-friendly interface and robust features make it the optimal choice for maintaining a seamless data flow.

The Manual Way: Using Google Cloud Storage

While it's possible to move data from Firestore to BigQuery manually via Google Cloud Storage, this method is a batch process rather than true streaming, and it involves multiple steps and considerable manual effort. The process requires exporting Firestore data to Cloud Storage and then importing it into BigQuery. This approach can be time-consuming and prone to errors, making it less efficient and harder to keep data consistently up to date.

Let's explore both methods to sync Firestore data to BigQuery:

Method 1: Using Estuary Flow to Stream Firestore to BigQuery

Estuary Flow is a powerful CDC (Change Data Capture) and ETL (Extract, Transform, Load) platform that enables real-time integration of data between various sources and destinations. With Estuary Flow, you can build and test your data pipelines to streamline data flow across multiple systems, including cloud storage, databases, APIs, and more.

Estuary Flow simplifies the process of synchronizing data between Firestore and BigQuery, making your data readily accessible for comprehensive analysis using BigQuery's robust capabilities.

Here’s a detailed rundown on using Estuary Flow to stream Firestore to BigQuery:

Prerequisites

Step 1: Configure Firestore as Your Source

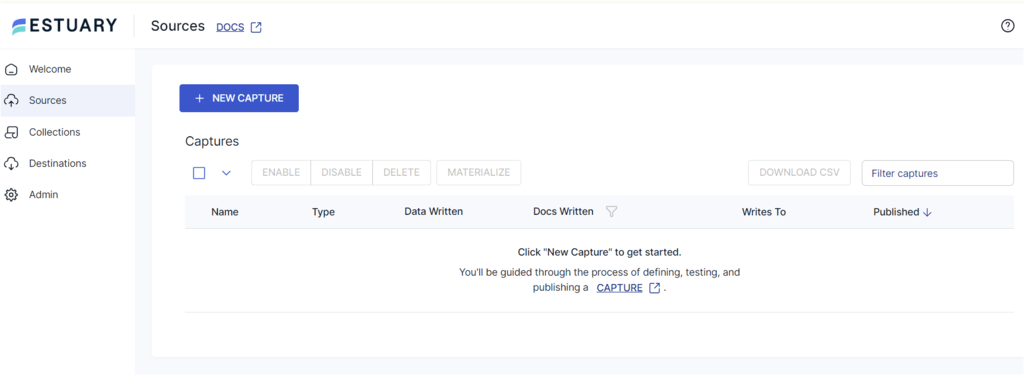

- Sign in to your Estuary account.

- Select Sources from the sidebar and click on the + NEW CAPTURE button.

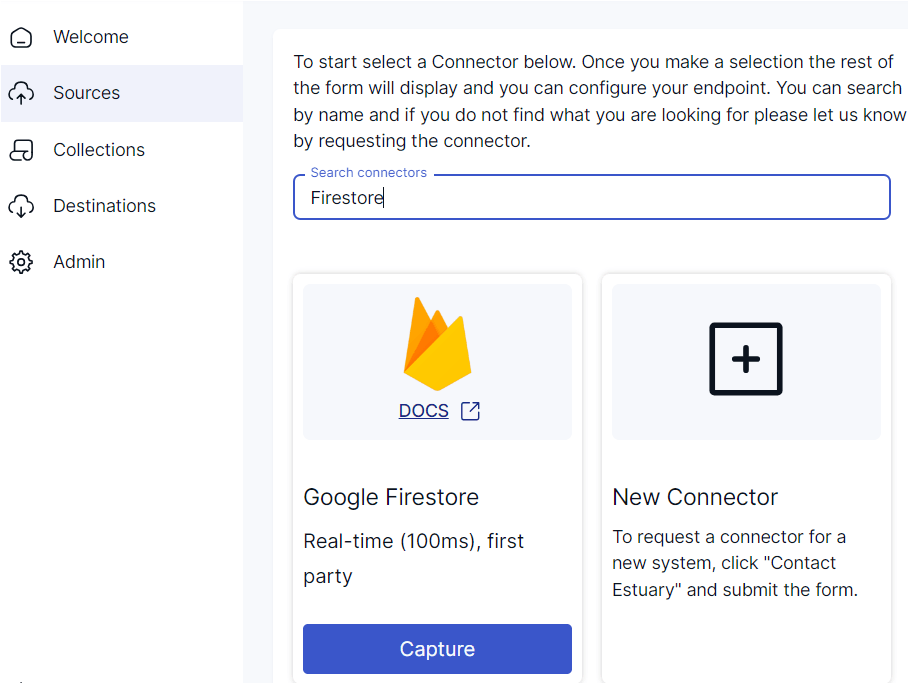

- Search for Firestore using the Search connectors field and select Google Firestore as your source by clicking its Capture button.

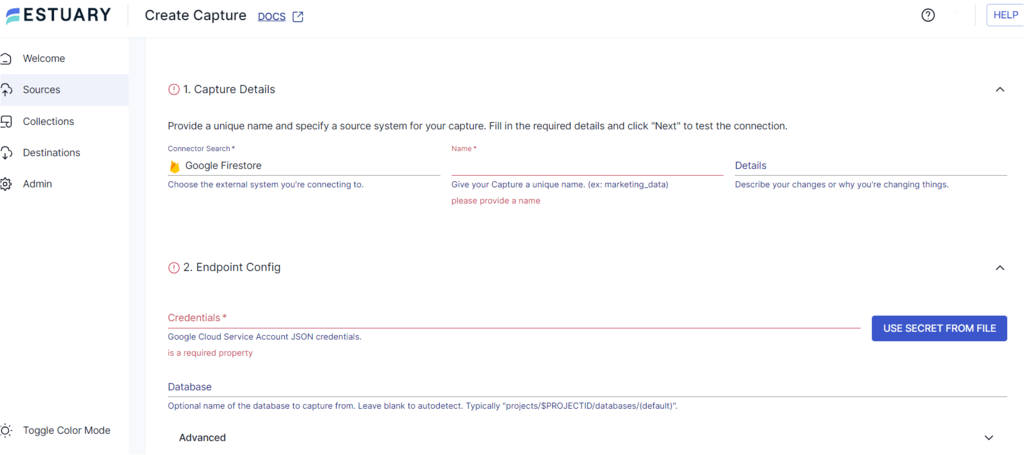

- On the Firestore connector configuration page, specify the following:

- Insert a unique Name for your capture.

- Add the JSON Credentials for your Google Cloud Service account.

- Click NEXT. Then, click SAVE AND PUBLISH. The connector will capture the data from your Google Firestore collections into an Estuary Flow collection.

Step 2: Configure BigQuery as Your Destination

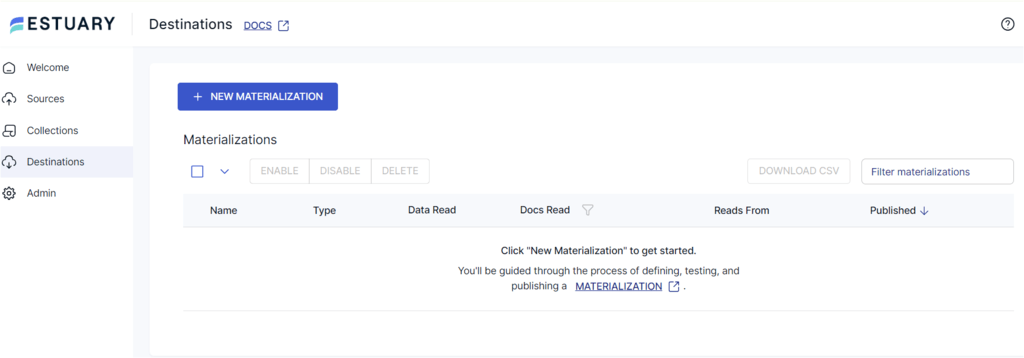

- Select Destinations from the sidebar on the Estuary dashboard. Then, click the + NEW MATERIALIZATION button.

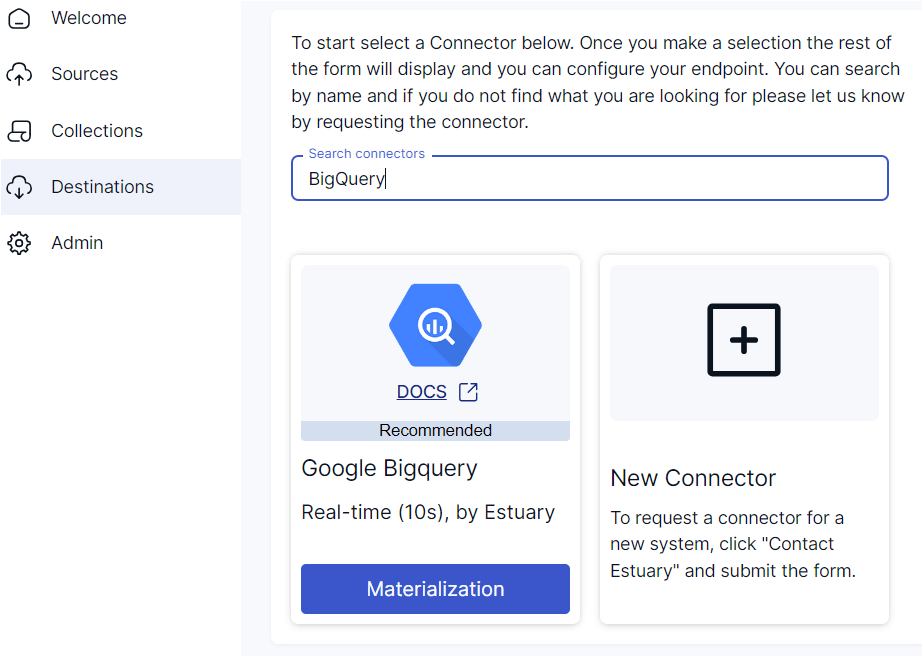

- Search for BigQuery using the Search connectors field and select Google BigQuery as your destination by clicking its Materialization button.

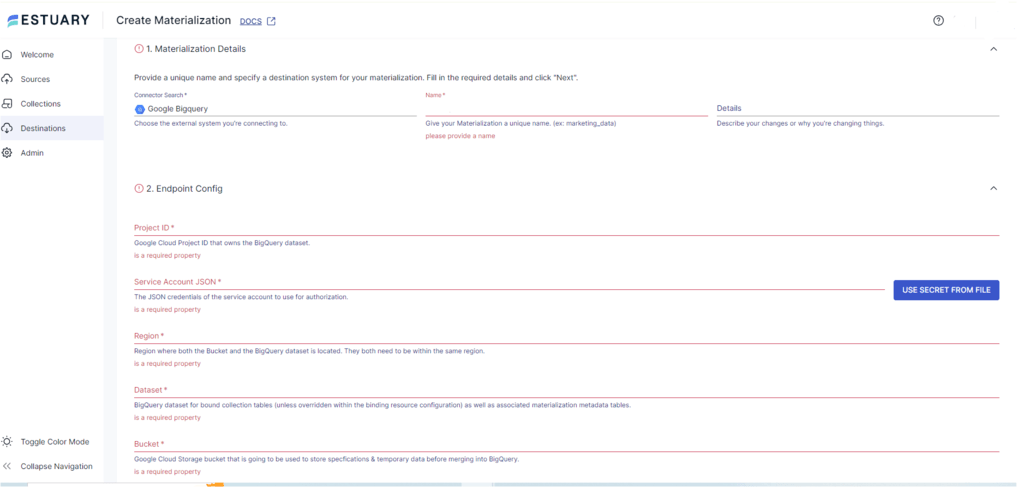

- On the BigQuery connector page, specify the mandatory details, including:

- A unique name for your materialization.

- For Endpoint Configuration:

- Project ID: This is the Google Project ID, which contains the BigQuery dataset.

- Service Account JSON: Enter the JSON credentials for your Google service account. It will be used for authorization.

- Region: Specify the region where the GCS bucket and BigQuery dataset are located (both must be in the same region).

- Dataset: Specify the BigQuery dataset in which the materialized tables and the materialization metadata tables will be created.

- Bucket: This is the GCS bucket where the data will be temporarily stored.

NOTE: The Flow collection from your capture is linked to the materialization automatically, but you can also add collections manually through the Source Collections section.

- Once you specify all the mandatory fields, click NEXT > SAVE AND PUBLISH. The connector will then materialize Flow collections of your Firestore data into BigQuery tables.

Benefits of Using Estuary Flow

- Change Data Capture (CDC) Support: Estuary Flow utilizes Change Data Capture (CDC) to integrate your data in real-time. This feature is particularly beneficial for performing real-time analytics on your Firestore data using BigQuery, enhancing operational efficiency and enabling timely decision-making based on the most current data.

- Efficient Data Transformation: Estuary Flow supports both SQL and TypeScript transformations. The inclusion of TypeScript enhances data pipeline reliability by enabling type-check features during data transformation. This ensures your data transformations are accurate and consistent, reducing errors and improving data quality.

- Automated Backfills: Estuary Flow offers automated backfills for your historical data, ensuring that your data pipeline includes comprehensive past records. Once the historical data backfill is complete, you can seamlessly transition to real-time data streaming, maintaining a continuous and up-to-date data flow.
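To show what change data capture means in practice, here is a minimal Python sketch that replays a stream of change events against a destination table keyed by document ID. The event shape (`op`, `id`, `doc`) is an assumption made for illustration and is not Estuary Flow's actual event format or implementation.

```python
from typing import Dict, Iterable


def apply_changes(table: Dict[str, dict], events: Iterable[dict]) -> Dict[str, dict]:
    """Apply insert, update, and delete events to a table keyed by document ID."""
    for event in events:
        key = event["id"]
        if event["op"] == "delete":
            # Remove the row if it exists; ignore deletes for unknown keys.
            table.pop(key, None)
        else:
            # Inserts and updates are both treated as upserts.
            table[key] = event["doc"]
    return table


# Hypothetical change stream captured from a source collection.
events = [
    {"op": "insert", "id": "u1", "doc": {"name": "Ada"}},
    {"op": "insert", "id": "u2", "doc": {"name": "Grace"}},
    {"op": "update", "id": "u1", "doc": {"name": "Ada L."}},
    {"op": "delete", "id": "u2"},
]
destination = apply_changes({}, events)
print(destination)
```

Because only the changes are shipped, the destination stays current without re-exporting the whole collection each time.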

Method 2: Using Google Cloud Storage to Stream Data from Firestore to BigQuery

This method involves exporting Firestore data to a Google Cloud Storage (GCS) bucket, from which you can subsequently load the data into BigQuery. While this approach requires more manual steps and is less efficient compared to automated solutions like Estuary Flow, it provides a flexible way to manage your data transfer process in a pinch.

Here are the steps to perform Firestore export to BigQuery using Google Cloud Console:

Prerequisites

- Enable Billing: Ensure that billing is enabled for your Google Cloud Project to use the export functionality.

- Create a GCS Bucket: Set up a Google Cloud Storage (GCS) bucket in a location that is geographically close to your Firestore database. This minimizes latency and enhances data transfer efficiency.

- Permissions: Verify that you have the necessary permissions to access and export data from the Firestore database. This includes permissions for Firestore, Google Cloud Storage, and BigQuery. See an outline below of the specific permissions needed to do this:

- Firestore Permissions:

- datastore.databases.export: Required to export data from Firestore.

- datastore.databases.import: Required if you plan to import data back into Firestore.

- datastore.operations.get: Allows you to check the status of the long-running export operation.

- Google Cloud Storage Permissions:

- storage.buckets.create: Required to create a GCS bucket if you don’t have one.

- storage.objects.create: Allows you to upload objects (exported Firestore data) to the GCS bucket.

- storage.objects.get: Required to read objects from the GCS bucket during the BigQuery import process.

- storage.objects.list: Allows listing objects within the GCS bucket.

- BigQuery Permissions:

- bigquery.tables.create: Required to create new tables in BigQuery.

- bigquery.tables.updateData: Allows inserting data into BigQuery tables.

- bigquery.jobs.create: Required to run load jobs to import data from GCS to BigQuery.

- Service Account Permissions:

- If using a service account to perform these actions, ensure the service account has the above permissions assigned through the relevant IAM roles:

- roles/datastore.importExportAdmin

- roles/storage.admin

- roles/bigquery.admin
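If you grant these roles from the command line, the bindings could look like the commands produced by the sketch below. It only builds `gcloud projects add-iam-policy-binding` command strings and does not run them; the project ID and service account email are placeholders. The broad admin roles are the ones listed above, and you may prefer narrower roles in production.

```python
import shlex
from typing import List

# The roles listed above.
ROLES = [
    "roles/datastore.importExportAdmin",
    "roles/storage.admin",
    "roles/bigquery.admin",
]


def binding_commands(project_id: str, service_account_email: str) -> List[str]:
    """Build one gcloud IAM binding command per role for a service account."""
    commands = []
    for role in ROLES:
        argv = [
            "gcloud", "projects", "add-iam-policy-binding", project_id,
            f"--member=serviceAccount:{service_account_email}",
            f"--role={role}",
        ]
        commands.append(shlex.join(argv))
    return commands


# Placeholder project and service account, for illustration only.
for command in binding_commands(
    "my-project", "exporter@my-project.iam.gserviceaccount.com"
):
    print(command)
```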


Step 1: Export Data from Your Cloud Firestore Database

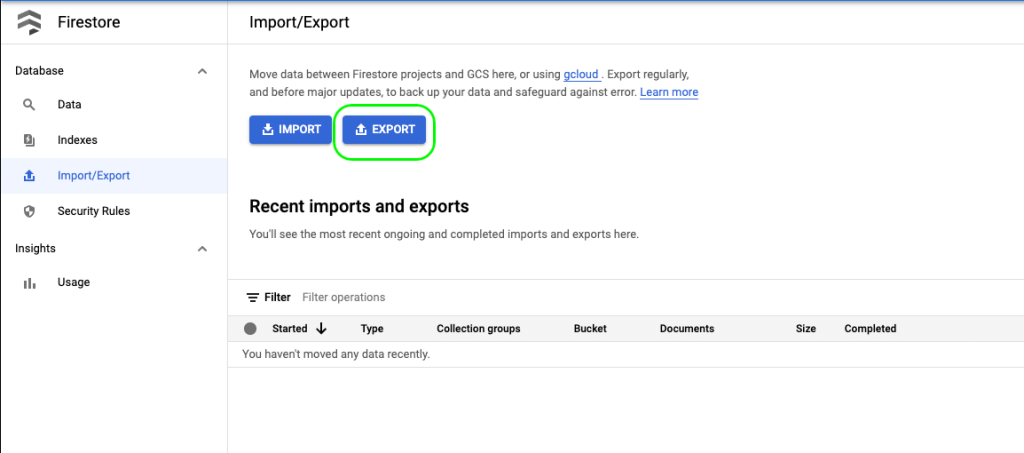

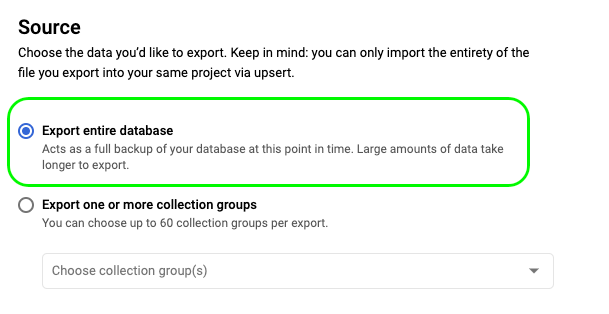

- Navigate to the Cloud Firestore Import/Export page on the Google Cloud Platform Console.

- Click on Export.

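- Choose what to export. The Export entire database option covers everything, but if you plan to load the export into BigQuery, choose the option to export specific collection groups instead, because BigQuery cannot load whole-database exports.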

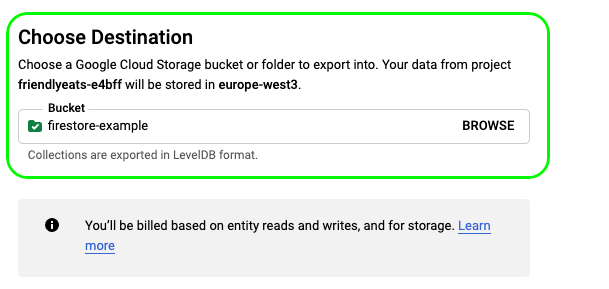

- In Choose Destination, either enter the name of a Google Cloud Storage bucket or click on the BROWSE option to select an existing bucket.

- After selecting your bucket, click on Export to begin the export process.

Once you click on Export, it will create a folder within your GCS bucket that will contain the following:

- An export_metadata file for each specified collection.

- Files containing your exported Firestore collections.

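BigQuery can load a Firestore export directly: choose Cloud Datastore Backup as the file format and point it at a collection's export_metadata file. If you need a different layout, you can instead transform the exported data into CSV or JSON before loading.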
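The console export also has a command-line form, `gcloud firestore export`. The Python sketch below only assembles that command as a string. The bucket name, prefix, and collection IDs are placeholders, and the `--collection-ids` flag is included because BigQuery loads only exports that were scoped to specific collections.

```python
import shlex
from typing import List


def export_command(bucket: str, prefix: str, collection_ids: List[str]) -> str:
    """Build a gcloud command that exports selected collections to GCS."""
    argv = ["gcloud", "firestore", "export", f"gs://{bucket}/{prefix}"]
    if collection_ids:
        # Scope the export to specific collections.
        argv.append("--collection-ids=" + ",".join(collection_ids))
    return shlex.join(argv)


# Placeholder bucket, prefix, and collection names.
command = export_command("my-bucket", "exports/2024-06-01", ["users", "orders"])
print(command)
```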

Step 2: Import GCS Data into BigQuery Table

The next step is to import your Firestore data in the GCS bucket into BigQuery tables.

- In your BigQuery Console, navigate to a project and either create a dataset or select an existing one.

- For the chosen dataset, select Create table.

- In the Create table form:

- For Create table from, select Google Cloud Storage as your source from the dropdown menu.

- Click on BROWSE to select the file generated during the export process.

- For the file format, choose Cloud Datastore Backup to load the Firestore export directly, or CSV or JSON if you converted the exported files first.

- Provide a table name for your destination table.

- Then, click on CREATE TABLE.

Your data from the selected GCS bucket will load into the newly created BigQuery table.
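The same load can be run with the `bq` command-line tool using the `DATASTORE_BACKUP` source format. The sketch below builds the command as a string rather than executing it. The bucket, prefix, dataset, and table names are placeholders, and the metadata path follows the layout Firestore exports typically use, so verify it against the actual objects in your bucket.

```python
import shlex


def metadata_uri(bucket: str, prefix: str, collection_id: str) -> str:
    """Return the assumed GCS URI of one collection's export_metadata file."""
    return (
        f"gs://{bucket}/{prefix}/all_namespaces/kind_{collection_id}/"
        f"all_namespaces_kind_{collection_id}.export_metadata"
    )


def load_command(dataset: str, table: str, uri: str) -> str:
    """Build a bq load command that reads a Firestore export directly."""
    argv = ["bq", "load", "--source_format=DATASTORE_BACKUP", f"{dataset}.{table}", uri]
    return shlex.join(argv)


# Placeholder names, for illustration only.
uri = metadata_uri("my-bucket", "exports/2024-06-01", "users")
command = load_command("analytics", "users", uri)
print(command)
```

Each collection has its own export_metadata file, so loading several collections means one load job per collection.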

Limitations of Using Google Cloud Storage to Stream Data from Firestore to BigQuery

- Export costs: Firestore charges one read operation per document exported, so repeatedly exporting large datasets can become expensive.

- Time-consuming and prone to errors: This method, while functional in urgent situations, isn't scalable and will create technical debt. Engineers and analysts should focus on developing new features rather than repetitive data upload tasks. Consider the effort required to perform this multiple times daily or hourly.

- Complex and requires detailed planning: Manual data uploads can become a bottleneck, tying up engineers with tedious tasks. This process diverts attention from strategic projects and often relies heavily on one person. If that person is unavailable, the entire data pipeline becomes stale and downstream decision-making can come to a standstill.

- Risk to data quality and security: Manual processes involving human intervention are susceptible to errors. During the stages of exporting data from Firestore and importing it into BigQuery, there's a greater risk of data being accidentally altered or exposed to unauthorized users. Such mistakes can compromise the integrity and security of your data, often without being immediately detected.
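The cost point above lends itself to a quick back-of-the-envelope estimate. The Python sketch below counts the read operations that repeated full exports generate and multiplies by a price per 100,000 reads. The price used here is a placeholder assumption, not a quoted rate, so check the current Firestore pricing for your region.

```python
def export_read_ops(documents: int, exports_per_day: int, days: int = 30) -> int:
    """Total read operations: one read per document for every export."""
    return documents * exports_per_day * days


def estimated_cost(read_ops: int, price_per_100k_reads: float) -> float:
    """Estimate cost from a read count and a price per 100,000 reads."""
    return read_ops / 100_000 * price_per_100k_reads


# Hypothetical workload: 1 million documents exported hourly for 30 days.
reads = export_read_ops(documents=1_000_000, exports_per_day=24)

# ASSUMPTION: placeholder price per 100,000 reads; real prices vary by region.
cost = estimated_cost(reads, price_per_100k_reads=0.06)
print(f"{reads:,} reads, estimated cost {cost:.2f}")
```

The estimate scales linearly with export frequency, which is why frequent full exports compare poorly with approaches that ship only changes.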

Conclusion

Integrating Firestore with BigQuery offers significant benefits by combining effective data synchronization with advanced analytics capabilities. This integration enhances the scalability of your data operations and helps you derive valuable insights from large datasets.

While it is possible to migrate your Firestore data into BigQuery using Google Cloud Storage (GCS), this method has significant drawbacks, including a lack of real-time integration and potentially high costs. A more efficient and cost-effective alternative is using a user-friendly ETL tool like Estuary Flow.

Estuary Flow enables faster and seamless data migration from Firestore to BigQuery, ensuring real-time synchronization and minimizing manual effort. By leveraging Estuary Flow, you can maintain up-to-date data in BigQuery, allowing for timely and accurate analysis.

If you haven’t yet explored Estuary Flow, sign up today and start creating efficient, automated data migration pipelines that will transform your data operations.

FAQs

How do I transfer data to BigQuery?

To transfer data to BigQuery, you can choose between batch-loading or streaming individual records. The data transfer can be executed using Google Cloud Console, the command-line tool bq, or third-party services such as Estuary Flow. Estuary Flow, in particular, offers an automated, no-code solution for seamless data integration.

How to transfer data from Firestore to BigQuery?

You can use Estuary Flow to transfer data from Firestore to BigQuery. Its automated, no-code data pipeline ensures a smooth and efficient integration between the two platforms, allowing real-time data synchronization and analysis.

What is the difference between Firestore and BigQuery?

Firestore is a NoSQL database designed for real-time data syncing and optimizing operational workloads, making it ideal for web, mobile, and server applications. In contrast, BigQuery is a fully managed data warehouse that specializes in analyzing large datasets using SQL queries, providing powerful tools for business intelligence, machine learning, and geospatial analysis.


About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WSO2, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.
