ETL vs ELT: Key Differences, Benefits, and When to Use Each

Discover the key differences in ETL vs ELT, including benefits, use cases, and how to choose the right data integration method for your business.

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two primary methods for data integration that help organizations streamline workflows and derive insights.

The main difference between ETL and ELT lies in the sequence of data transformation and loading. ETL transforms data before loading it into a storage system, while ELT loads data first, transforming it within the storage system itself. Each approach impacts performance, scalability, and cost differently, making it essential to choose the right one based on your data needs.

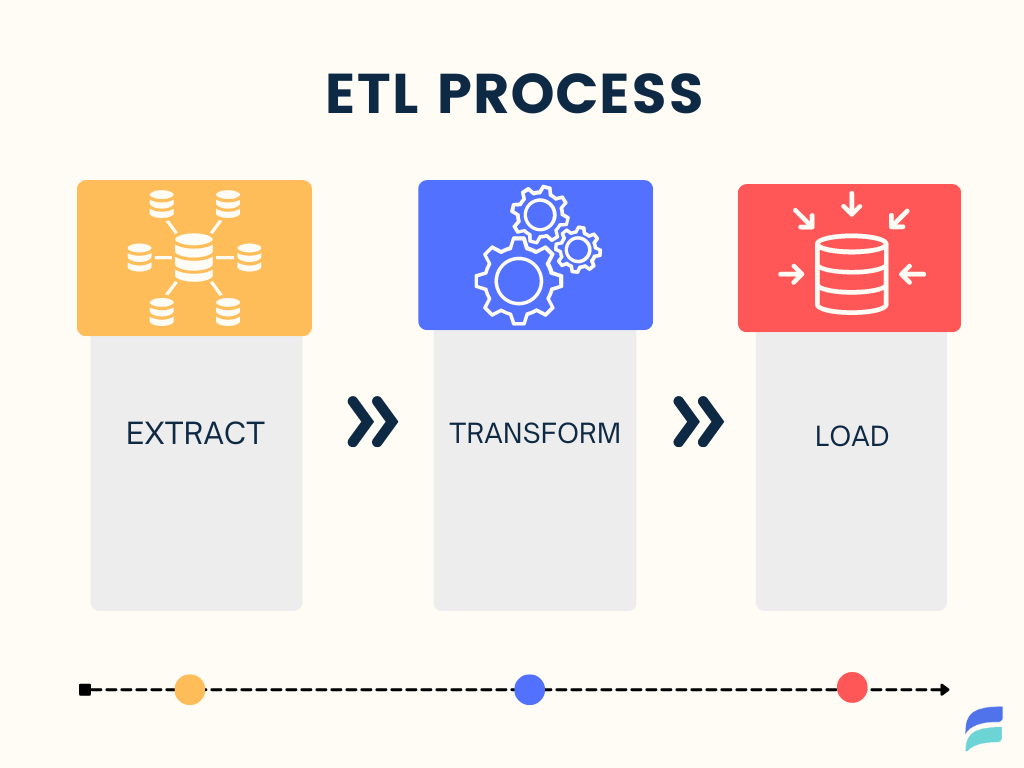

What is ETL (Extract, Transform, Load)?

ETL, short for Extract, Transform, Load, is a three-step data integration process that has been foundational in data management for decades. It’s commonly used to consolidate data from multiple sources into a centralized destination, such as a data warehouse, for streamlined analysis and reporting.

ETL Process

- Extract: Data is collected from various sources like APIs, applications, databases, and sensors.

- Transform: In this stage, data is cleaned, formatted, and restructured to fit the requirements of the target storage system, ensuring data quality and consistency.

- Load: The transformed data is then loaded into a data warehouse or other final storage systems, making it ready for analysis and reporting.
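The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the sample records, the `customers` table, and the cleaning rules are all hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import sqlite3

# Hypothetical source records; in practice these would come from APIs, apps, or databases.
RAW_RECORDS = [
    {"name": "  Ada Lovelace ", "email": "ADA@Example.com", "spend": "120.50"},
    {"name": "Alan Turing", "email": "alan@example.com ", "spend": "80"},
]


def extract():
    """Extract: collect records from the source (here, an in-memory list)."""
    return list(RAW_RECORDS)


def transform(records):
    """Transform: clean and restructure records before they reach storage."""
    return [
        (r["name"].strip(), r["email"].strip().lower(), float(r["spend"]))
        for r in records
    ]


def load(conn, rows):
    """Load: write the already-clean rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (name TEXT, email TEXT, spend REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run the steps in ETL order: extract, then transform, then load."""
    load(conn, transform(extract()))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
    run_etl(conn)
    print(conn.execute("SELECT name, email, spend FROM customers").fetchall())
```

Note that the storage system only ever sees rows that have already passed through `transform`.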

Real-World Example of ETL

Imagine a company gathering customer data from multiple applications, social media, and online surveys. This data needs cleansing and formatting before being stored in a data warehouse to be used in a marketing analysis. ETL performs these transformations before loading the data, allowing data analysts to access clean, structured data for immediate analysis.

Advantages of ETL

ETL has been around longer than ELT, as its advantages were initially non-negotiable in data integration. Though data integration technologies have evolved, ETL remains essential, especially for structured data use cases. Here’s why:

- Data Quality Assurance: ETL pipelines validate messy data and convert it into the required format before loading. If a conversion fails, the process typically halts, preventing corrupt or unusable data from entering the destination system.

- Ensures Readiness for Analysis: In many organizations, multiple teams rely on a single source of truth to conduct analyses. ETL ensures all data in the warehouse is pre-processed, so there’s no ambiguity about what’s clean and ready for use.

- Efficient Use of Storage and Compute Resources: By pre-aggregating and filtering data, less of it needs to be stored in the data warehouse. Transforming data in a separate environment saves on warehouse query costs, and in some cases, transforming data outside the warehouse can be more cost-effective.
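The data quality point above, where a failed transformation stops the pipeline before anything is stored, might look like the following sketch. The `ValidationError` class, the `orders` table, and the validation rules are hypothetical and chosen only for illustration; real pipelines often route bad records to a separate error queue instead of halting.

```python
import sqlite3


class ValidationError(ValueError):
    """Raised when a record cannot be converted to the target schema."""


def to_row(record):
    """Convert one raw record to (email, amount), or raise ValidationError."""
    email = str(record.get("email", "")).strip().lower()
    if "@" not in email:
        raise ValidationError(f"bad email: {record!r}")
    try:
        amount = float(record["amount"])
    except (KeyError, TypeError, ValueError) as exc:
        raise ValidationError(f"bad amount: {record!r}") from exc
    return (email, amount)


def etl_orders(conn, records):
    """Transform the whole batch first; load only if every record is valid."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (email TEXT, amount REAL)")
    rows = [to_row(r) for r in records]  # raises before any row is written
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)
```

Because the whole batch is transformed before the first insert, one malformed record keeps the entire batch out of the destination table.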

Disadvantages of ETL

While ETL remains valuable, it has limitations in the ETL vs ELT landscape, especially for handling modern, high-volume data environments.

- Limited Scalability: Traditional ETL processes can struggle to handle high data volumes, especially in real-time scenarios, where transformations can create performance bottlenecks.

- Adds Latency: Transformation always adds processing time, which can delay data availability. This latency increases at scale, especially without real-time transformation capabilities.

- Restricts Analytical Flexibility: By transforming data before loading, some raw data characteristics are lost, limiting the potential for certain types of analysis that might require the raw, untransformed data.

ETL Use Cases and Examples

When is ETL most commonly used?

- Highly Unstructured Data with Known Format Needs: ETL is useful when incoming data is unstructured, but the final data format is well-defined. For example, if you’re using a webhook to collect user data across online stores, ETL ensures that your analysis team can work with a predictable structure for marketing research.

- Latency Is Not a Primary Concern: ETL suits situations where data doesn’t need to be available immediately. For example, it works well when you update models weekly for long-term planning rather than for real-time decision-making.

- Strict Data Governance Requirements: Enterprises with strict data management standards often favor ETL, as it ensures that every dataset meets specific schema requirements before it’s stored.

Not all organizations fit neatly into these categories. With the rise of cloud computing, data needs have changed, prompting the development of new methods like ELT to meet complex, large-scale transformation requirements.

What is ELT (Extract, Load, Transform)?

ELT stands for Extract, Load, Transform and is a modern data integration method that flips the order of operations found in ETL. Many organizations leverage ELT tools to streamline this process and optimize data transformation.

ELT Process Breakdown:

- Extract: Data is collected from sources such as databases, APIs, and real-time sensors.

- Load: Data is loaded directly into a cloud data lake or flexible data warehouse. This stage leverages the scalability of cloud storage to handle large data volumes.

- Transform: After loading, data is transformed as needed within the storage system using SQL or other processing tools, allowing transformations to happen on-demand for specific use cases.
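For contrast with ETL, the steps above can be sketched as follows. This is an illustrative example under stated assumptions: the `raw_events` table and sample rows are hypothetical, and an in-memory SQLite database stands in for a cloud warehouse or data lake. The raw data lands untouched, and the cleanup happens later as SQL inside the storage system.

```python
import sqlite3

# Hypothetical raw events, loaded exactly as received (everything kept as text).
RAW_EVENTS = [
    ("  Ada@Example.com ", "120.50", "2024-01-03"),
    ("alan@example.com", "80", "2024-01-03"),
    ("ada@example.com", "19.50", "2024-01-04"),
]


def extract_and_load(conn, events):
    """Extract + Load: land the raw data untouched in a raw table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_events (email TEXT, amount TEXT, day TEXT)"
    )
    conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", events)
    conn.commit()


def transform_in_store(conn):
    """Transform: run SQL inside the storage system, on demand."""
    query = """
        SELECT LOWER(TRIM(email)) AS customer_email,
               SUM(CAST(amount AS REAL)) AS total_spend
        FROM raw_events
        GROUP BY LOWER(TRIM(email))
        ORDER BY customer_email
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a warehouse or lake
    extract_and_load(conn, RAW_EVENTS)
    print(transform_in_store(conn))
```

The raw rows stay available after the transformation runs, so another team could later write a different query against the same `raw_events` table.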

Real-World Example of ELT

Consider a media company gathering vast amounts of social media data, including text, images, and videos. This data is loaded in its raw form into a data lake, where it can be accessed by data scientists and transformed as required for machine learning and analytics purposes.

Advantages of ELT

- Speed of Loading: Data can be loaded immediately, making it accessible for near-real-time analysis.

- Flexibility for Analysis: Raw data is available in its entirety, allowing different teams to transform data according to their needs.

- Simplified Architecture: Without the need for a staging area, ELT simplifies data integration architecture.

Disadvantages of ELT

- Data Quality Risks: Since raw data is loaded first, there’s a risk of creating a “data swamp” with unstructured, hard-to-manage data.

- Post-Load Transformation Costs: Transforming data within a cloud environment can become costly, especially if repetitive transformations are needed.

ELT Use Cases and Examples

You’ll likely run into ELT when…

- The source and destination system are the same, or the source is highly structured. For example, moving data between two SQL databases.

- You’re performing big data analytics.

- The destination is a less-structured storage type, like a NoSQL database.

Key Differences Between ETL and ELT

Use cases aside, let’s take a look at the tangible differences between ETL vs ELT processes and components.

| Feature | ETL | ELT |
|---|---|---|
| Order of Operations | Extract -> Transform -> Load | Extract -> Load -> Transform |
| Transformation | Before loading, in a separate processing environment. | After loading, within the data warehouse or data lake itself. |
| Data Processing | Often batch-oriented, may be real-time. Requires a staging area, can be complex and expensive to manage. | Typically real-time or near-real-time. Leverages the processing power of the data warehouse, often using SQL. |
| Data Storage | Designed for structured data stores like relational databases and traditional data warehouses. | Suited for flexible, scalable storage like cloud data lakes and modern data warehouses. |
| Schema Flexibility | Strict adherence to schemas, data must be transformed to fit before loading. | More flexible schema requirements, can handle unstructured and semi-structured data. |
| Scalability | Less scalable, transformations can be a bottleneck. | Highly scalable, leverages the scalability of cloud storage and processing. |
| Latency | Can introduce latency due to pre-load transformations. | Typically low latency, as data is loaded immediately and transformed later. |
| Cost | Can be expensive due to separate processing environment and storage requirements. | Can be cost-effective if the data warehouse or data lake is optimized for transformations. |
| Use Cases | Ideal for structured data with well-defined schemas and when data warehouse resources are limited. | Suitable for big data analytics, unstructured data, and when flexibility and speed are paramount. |
| Examples | Webhook data collection for marketing analysis, updating models weekly for long-term planning. | Machine learning model training on social media data, data transfer between SQL databases. |

Order of Operations: ETL vs. ELT

In ETL, transformation occurs before loading the data into storage. In ELT, transformation happens after data is loaded. The other differences are byproducts of this distinction.

Data Processing: ETL vs. ELT Approaches

In an ETL pipeline, processing happens before loading. This means that the processing component is completely independent of your storage system. ETL transformation can be batch or real-time. Either way, it needs its own staging area, which you either build or get from an ETL vendor.

Common processing agents include Apache Spark and Flink. These are powerful systems, but they can be expensive and require experienced engineers to operate. Alternatively, an ETL vendor might provide you with processing tools.

By contrast, ELT processing happens on top of the data storage platform — the data warehouse or data lake. This simpler architecture doesn’t require a staging area for transformations, and you can transform data with a much simpler method, SQL.

Often in ELT, what we call operational transformations (joins, aggregations, and cleaning — the “T” in ELT) happen at the same time as analytical transformations (complex analysis, data science, etc). In other words, you’re adding a prerequisite step to any analytical workflow, but if you set this up well, it can be fairly straightforward for analysts to do.

The “T” in ELT can come in other forms, too. For instance, you might clean up data with a pre-computed query and use the resulting view to power analysis. Be careful, though: this can get expensive in a data warehouse environment!
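As a rough illustration of using a cleanup query as a view, the sketch below defines a view over a raw table and runs an analytical query against it. The table, view, and column names are hypothetical, and SQLite stands in for a warehouse. SQLite views are plain views that re-run the cleanup query on every read; warehouses that offer materialized views store the result instead, and how either option is billed depends on the platform.

```python
import sqlite3


def make_demo_warehouse():
    """Create an in-memory database holding a hypothetical raw table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_orders (customer TEXT, amount TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?)",
        [(" Ada ", "10.5"), ("ada", "4.5"), ("Alan", None), ("Alan", "3")],
    )
    conn.commit()
    return conn


def create_clean_view(conn):
    """Operational transformation: the cleanup query, saved as a view."""
    conn.execute("""
        CREATE VIEW IF NOT EXISTS clean_orders AS
        SELECT LOWER(TRIM(customer)) AS customer,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE amount IS NOT NULL
    """)


def spend_per_customer(conn):
    """Analytical transformation: built on the view, not on the raw table."""
    return conn.execute(
        "SELECT customer, SUM(amount) FROM clean_orders "
        "GROUP BY customer ORDER BY customer"
    ).fetchall()
```

Analysts query `clean_orders` without repeating the cleanup logic, while the raw table remains unchanged underneath.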

Data Storage Requirements: ETL vs. ELT

ETL usually requires less data storage, and offers you more control and predictability over the amount of data stored. That’s why ETL was the go-to method in the days before cloud storage. ETL is designed for more traditional, rigid data storage that will only accept data adhering to a schema. Traditional relational databases and data warehouses are common storage systems, but more modern, flexible data storage systems can be used, too.

ELT requires a large, highly scalable storage solution, so cloud storage is pretty much the only option. It must also be permissive and flexible. Data lakes, certain warehouses, and hybrid architectures are popular in ELT pipelines.

When to Choose ETL vs. ELT

Choosing Between ETL and ELT: Decision-Making Factors

Deciding whether to use ETL or ELT depends on several factors, including the nature of your data, compliance requirements, infrastructure, and budget.

- Data Structure: If data is highly structured, ETL ensures it’s clean and ready to use before loading. For unstructured data or large data lakes, ELT may be a better fit.

- Technology Stack: Organizations with on-premises or traditional databases often use ETL. In contrast, cloud-based systems with scalable storage favor ELT.

- Compliance: ETL may be preferred in industries with strict data quality and governance needs, as data is cleansed and structured before storage.

- Latency Requirements: For real-time or near-real-time data access, ELT allows faster access, loading data without immediate transformation.

- Cost Considerations: ELT can become costly in terms of storage and transformation within the cloud, while ETL can save costs by transforming data outside the storage environment.

ETL and ELT Use Cases

Common ETL Use Cases:

- Financial Reporting: Ensuring data is clean, consistent, and ready for regulatory reporting.

- Customer Data Integration: Aggregating data from multiple sources to create a single view of the customer.

- Legacy Systems: ETL is often used with older systems that don’t support cloud storage.

Common ELT Use Cases:

- Real-Time Analytics: Data is available immediately for analysis and is transformed as needed.

- Machine Learning Pipelines: For machine learning models that require access to raw data stored in cloud lakes.

- Big Data Environments: ELT is ideal when working with large volumes of unstructured data that don’t require immediate transformation.

Hybrid Solutions: Combining ETL and ELT

With advancements in data integration, some platforms now offer hybrid solutions that combine the best of both ETL and ELT. These solutions allow organizations to pre-process certain data elements while loading others directly into the target storage, providing flexibility, speed, and data quality.
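To make the hybrid idea concrete, here is a generic sketch that is not tied to any particular platform's API. It assumes a hypothetical `signups` feed in which one sensitive field (an email address) is pre-processed before loading, while the remaining fields are loaded raw and cleaned later in SQL. Hashing is used purely for illustration and is not a complete anonymization strategy.

```python
import hashlib
import sqlite3


def mask_email(email):
    """Pre-load step: replace an email with a SHA-256 digest of its normalized form."""
    return hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()


def hybrid_load(conn, records):
    """Mask the sensitive field before loading; load everything else as-is."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS signups (email_hash TEXT, plan TEXT, seats TEXT)"
    )
    conn.executemany(
        "INSERT INTO signups VALUES (?, ?, ?)",
        [(mask_email(r["email"]), r["plan"], r["seats"]) for r in records],
    )
    conn.commit()


def seats_by_plan(conn):
    """Post-load step: the remaining cleanup happens in SQL inside the store."""
    return conn.execute(
        "SELECT LOWER(TRIM(plan)) AS plan_name, SUM(CAST(seats AS INTEGER)) "
        "FROM signups GROUP BY LOWER(TRIM(plan)) ORDER BY plan_name"
    ).fetchall()
```

The split is a design choice: only the fields that must never land in raw form pay the pre-load transformation cost, and everything else keeps the loading speed and flexibility of ELT.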

For instance, Estuary Flow enables both real-time ingestion and transformation, allowing users to capture data, apply schema, and load it directly into a destination with optional transformation steps. This hybrid approach ensures data is analysis-ready without the complexities of traditional ETL.

Future of Data Integration: Beyond ETL and ELT

As data integration needs continue to evolve, modern platforms increasingly blur the lines between ETL and ELT. With cloud-native solutions and machine learning, data integration tools now offer customizable workflows that adapt to specific data needs.

Key Trends to Watch:

- Real-Time Data Integration: More organizations are moving towards real-time ingestion and transformation to support streaming data applications.

- Self-Service Transformation: With the rise of SQL-based transformation tools, data analysts can perform transformations independently.

- Integrated Data Governance: As regulations tighten, solutions that maintain data integrity and compliance across ETL and ELT processes are gaining traction.

Conclusion

Both ETL and ELT offer unique advantages, and the right choice depends on your specific organizational needs. ETL provides data governance, quality, and efficiency, making it ideal for highly structured data in compliance-heavy industries. ELT, on the other hand, supports flexibility, scalability, and faster loading times, making it the go-to choice for cloud-native and big-data environments.

For businesses looking to leverage the benefits of both, hybrid solutions like Estuary Flow provide a balanced approach that offers scalability without sacrificing data quality. By assessing your data needs, storage capabilities, and budget, you can select the best data integration method to support your organization’s goals. To learn more, check out Estuary Flow’s docs or browse the code; we develop in the open.
