Choosing the right ETL tool is more important than ever. Organizations now have many options, each with its own strengths and specialties. If you are new to data engineering or looking to change your current tools, this guide is for you. It covers the 25 best ETL tools for 2024.

This guide serves two key audiences:

- Organizations evaluating their first ETL software: Discover the best options available to streamline your data processes and lay a strong foundation for future growth.

- Organizations considering a switch from their current ETL solution: Explore alternatives that might better suit your evolving needs, ensuring your data pipelines are efficient, scalable, and future-proof.

We have assembled a list of 25 ETL tools for 2024, including both old and new tools.

We also highlight the key features that matter. This will help you make a thorough comparison.

Why 25? Because each tool on this list is unique in important ways. Knowing their strengths and weaknesses will help you make the best choice for your needs.

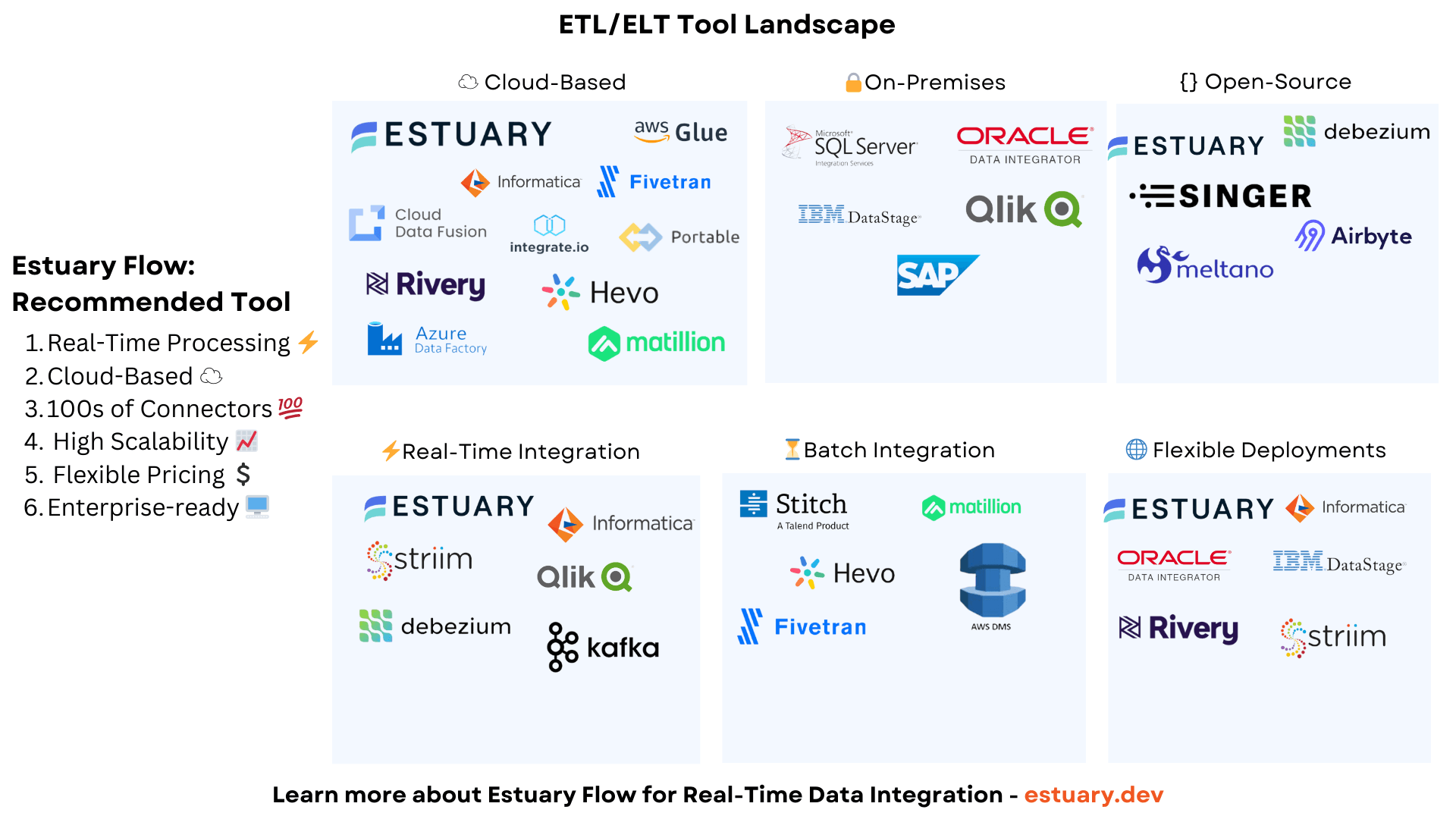

Here are the ETL tools featured in this guide:

- Estuary

- Informatica

- Talend

- Microsoft SQL Server Integration Services (SSIS)

- IBM InfoSphere DataStage

- Oracle Data Integrator (ODI)

- SAP Data Services

- AWS Glue

- Azure Data Factory

- Google Cloud Data Fusion

- Fivetran

- Matillion

- Stitch

- Airbyte

- Hevo Data

- Portable

- Integrate.io

- Rivery

- Qlik Replicate

- Striim

- Amazon DMS

- Apache Kafka

- Debezium

- SnapLogic

- Singer

By reading through this guide, you’ll gain insights into the key features and use cases of each ETL tool, along with considerations for how they align with both your current and future needs. By the end, you will have the knowledge to choose an ETL solution that meets your current needs and helps you succeed in the changing world of data integration.

What is ETL?

Extract, Transform, Load (ETL) is the process of moving data from a source, usually applications and other operational systems, into a data lake, lakehouse, data warehouse, or other type of unified store. ETL tools help gather data from different sources. They change the data to meet operational or analytical needs. Finally, they load the data into a system for further analysis.

Historically, it has been used to support analytics, data migration, or operational data integration. More recently, it’s also being used for data science, machine learning, and generative AI.

- Extract - The first step in the ETL process is extraction, where data is collected from various sources. These data sources might include databases, on-premises or SaaS applications, flat files, APIs, and other systems.

- Transform - Once the data is extracted, the next step is to transform it. This is where data is cleansed, formatted, merged, and enriched to meet the requirements of target systems. While this used to be done with proprietary tools, increasingly, people are using SQL, the #1 language of data, Python, and even JavaScript to write transforms.

Transformation is the most complex part of the ETL process, requiring careful planning to ensure that the data is not only accurate but also optimized for performance in the target environment.

- Load - The final step is loading the transformed data into the target data storage system, which could be a data warehouse, a data lake, or another type of data repository. During this phase, the data is written in a way that supports efficient querying and analysis. Depending on the requirements, loading can be done in batch mode (periodically moving large volumes of data) or in real-time (continuously updating the target system as new data becomes available).
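The three steps can be sketched in a few lines of Python. This is a minimal illustration, not how any tool in this guide is implemented: the CSV text, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this comes from a database, API, or file.
RAW_CSV = """id,email,amount
1, Alice@Example.com ,10.50
2,bob@example.com,not_a_number
3,carol@example.com,7
"""

def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cleanse and format rows, dropping ones that fail validation."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount is not numeric
        cleaned.append((int(row["id"]), row["email"].strip().lower(), amount))
    return cleaned

def load(rows, conn):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(id INTEGER PRIMARY KEY, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse or lake
load(transform(extract(RAW_CSV)), conn)
```

Here the transform runs in the pipeline, before anything reaches the target, which is the defining trait of ETL.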

ETL was originally the preferred approach for building data pipelines. But when cloud data warehouses started to become popular, people started to adopt newer ELT tools. The decoupled storage-compute architecture of cloud data warehouses made it possible to run any amount of compute, including SQL-based transforms. Today you need to consider both ETL and ELT tools in your evaluation.
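To make the ETL versus ELT distinction concrete, here is a small sketch of the ELT pattern: raw data is loaded first, and the transform runs as SQL inside the destination. SQLite stands in for a cloud data warehouse, and the table and column names are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Extract + Load: land the source rows as-is in a raw table.
conn.execute("CREATE TABLE raw_orders (id INTEGER, email TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, " Alice@Example.com ", "10.50"), (2, "bob@example.com", "7")],
)

# Transform: run SQL inside the destination, using the destination's compute.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT id,
           LOWER(TRIM(email)) AS email,
           CAST(amount AS REAL) AS amount
    FROM raw_orders
    """
)
```

The raw table stays available, so transforms can be rewritten and rerun without extracting from the source again.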

ETL or ELT isn’t just about transforming data. It’s a core part of effective data management involving data integration processes for business intelligence and other data needs in the modern enterprise.

Types of ETL Tools

On-premises ETL Tools

A company uses on-premises ETL tools in its data centers or private clouds. This setup gives full control over data processing and security. These tools tend to be highly customizable and designed for large enterprises with advanced data governance requirements and existing investments in on-premises infrastructure.

Cloud-Based ETL or ELT Tools

Cloud-based ETL tools are popular due to their scalability and ease of use. These tools are hosted on public or private cloud platforms. Their cloud-native design provides elastic scalability and ease of deployment. Some start with pay-as-you-go pricing, making them cost-effective for companies that are starting small and using cloud data warehouses. Others have more complex pricing and sales processes. They are often the cloud replacement for older on-premises ETL tools.

Open-source ETL Tools

Open-source ELT and ETL tools offer a more affordable choice for organizations with skilled technical teams. They provide flexibility but often come with higher development and administration costs. Examples of open-source ETL tools include Airbyte, Singer, and Meltano.

Real-Time ETL Tools

Real-time ETL tools process data as it arrives, enabling up-to-the-second insights and decision-making. These tools are essential for business processes where timely data is critical. There aren’t real-time ELT tools, which will become a little clearer later.

Batch ETL and ELT Tools

Batch ELT and ETL tools process large volumes of data at scheduled intervals rather than in real time. This approach works well for environments where data does not need to be processed instantly, such as where sources only support batch. Batch ingestion can also help save money with cloud data warehouses, which often end up costing more for low-latency data integration.
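The difference between real-time and batch loading can be illustrated by counting how often a pipeline writes to its destination. The sketch below is a simplified model, with a hypothetical `micro_batches` helper and made-up events; real tools also batch by time window and handle failures.

```python
from typing import Iterable, Iterator, List

def micro_batches(events: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Group an event stream into fixed-size batches; the last one may be smaller."""
    batch = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch

events = [{"id": i} for i in range(10)]

# Real-time style: one write to the destination per event.
realtime_writes = sum(1 for _ in micro_batches(events, batch_size=1))

# Batch style: events are accumulated and written together.
batch_writes = sum(1 for _ in micro_batches(events, batch_size=4))
```

With these ten events, the real-time style performs ten writes and the batch style performs three. Fewer, larger writes are one reason batch ingestion can cost less on a cloud data warehouse, at the price of higher latency.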

Hybrid ELT and ETL Tools

Hybrid ETL and ELT tools combine the capabilities of on-premises, private cloud, and public cloud-based tools. They can sometimes support both real-time and batch processing. They offer versatility and scalability, making them suitable for organizations with complex data environments or those transitioning between on-premises and cloud infrastructures.

Top 25 ETL Tools in 2024

Here is the list of top ETL tools:

Estuary

Estuary Flow is a real-time ETL, ELT, and Change Data Capture (CDC) platform that supports any combination of batch and real-time data pipelines. Its intuitive, user-friendly interface makes it possible to create new no-code data pipelines in minutes across databases, SaaS applications, file stores, and other data sources. Whether you're managing streaming data or large-scale batch processes, Flow offers the ease of use, flexibility, performance, and scalability needed for modern data pipelines.

Pros

- Real-time and Batch Integration: Flow perfectly combines real-time and batch processing within a single pipeline, making it the only vendor that supports streaming from real-time sources and loading a data warehouse in batch, for example.

- ETL and ELT Capabilities: Flow supports both ETL and ELT, offering the best of both worlds. You can perform in-pipeline transformations (ETL) using streaming SQL and TypeScript, and in-destination (ELT) transformations using dbt.

- Schema Evolution: Flow automatically handles schema changes as data flows from source to destination, significantly reducing the need for manual intervention. This automated schema evolution ensures that your data pipelines stay up and running even as your data source schemas change.

- Multi-destination Support: Flow can load data into multiple destinations concurrently from a single pipeline. Multi-destination support makes sure data is consistent without overloading sources or the network with multiple pipelines.

- High Scalability: Estuary is proven to scale, with one pipeline in production exceeding 7 GB/sec, 100x the scale of other public benchmarks and numbers from ELT vendors. These numbers are more in line with messaging, replication, or the top ETL vendors.

- Deployment Options: Support for public cloud, private cloud, and self-hosted open source.

- Low Cost: Estuary has among the lowest pricing of all the commercial vendors, especially at scale, making it an ideal choice for high-volume data integration.
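The idea behind automated schema evolution can be shown with a small sketch. This is not Estuary's implementation; it is a generic illustration in which a hypothetical `evolve_and_insert` function widens a SQLite table whenever a record arrives with a field the table does not have yet.

```python
import sqlite3

def evolve_and_insert(conn, table, record):
    """Add any columns the record has that the table lacks, then insert the record.

    The table and column names are interpolated into SQL, so this sketch
    assumes they come from a trusted source.
    """
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    for column in record:
        if column not in existing:
            # New source field: widen the destination table instead of failing.
            conn.execute(f'ALTER TABLE {table} ADD COLUMN "{column}"')
    columns = ", ".join(f'"{c}"' for c in record)
    placeholders = ", ".join("?" for _ in record)
    conn.execute(
        f"INSERT INTO {table} ({columns}) VALUES ({placeholders})",
        list(record.values()),
    )

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER)")
evolve_and_insert(conn, "users", {"id": 1})
evolve_and_insert(conn, "users", {"id": 2, "plan": "pro"})  # source gained a "plan" field
```

Rows written before the change simply hold `NULL` in the new column, so the pipeline keeps running without a manual migration.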

Cons

- Connectors: Estuary has 150+ connectors, which is more than some and less than some others. But they can also support 500+ Airbyte, Meltano, and Stitch connectors. As with all tools, you need to evaluate whether the vendor has all the connectors you will need and whether they have all the features that meet all your needs.

Pricing

- Free Plan: Includes up to 2 connectors and 10 GB of data per month.

- Cloud Plan: Starts at $0.50 per GB of change data moved, plus $100 per connector per month.

- Enterprise Pricing: Custom pricing tailored to specific organizational needs.
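As a rough worked example of the Cloud Plan figures above, the following sketch estimates a monthly bill. The function name and the usage numbers are hypothetical, and because the plan "starts at" these rates, actual pricing may differ.

```python
def estimate_monthly_cost(
    gb_moved: float,
    connectors: int,
    price_per_gb: float = 0.50,          # from the Cloud Plan listing above
    price_per_connector: float = 100.0,  # from the Cloud Plan listing above
) -> float:
    """Data charge plus per-connector charge for one month."""
    return gb_moved * price_per_gb + connectors * price_per_connector

# Hypothetical usage: 200 GB of change data moved through 3 connectors.
cost = estimate_monthly_cost(gb_moved=200, connectors=3)
```

For this usage the estimate is 200 × $0.50 + 3 × $100 = $400 per month.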

If you're looking for the best tools for ETL in 2024, Estuary offers scalability, low cost, and user-friendliness.

Informatica

Informatica is one of the leading ETL platforms designed for enterprise-level data integration. It is known for its on-premises and cloud data integration and data governance, including data quality and master data management. The trade-off for these advanced features is that it is more complex to learn and use, and more costly.

Informatica provides advanced data transformation capabilities, which make it one of the best ETL tools for enterprise-level needs. However, it lacks the simplicity and some of the features of modern data pipelines: it does not have advanced schema inference or evolution and does not support SQL for transformations.

Pros

- Comprehensive Data Transformation: Offers advanced data transformation capabilities, including support for slowly changing dimensions, complex aggregations, lookups, and joins.

- Real-time ETL: Informatica has good real-time CDC and data pipeline support.

- Scalability: Engineered to support large-scale data integration pipelines, making it suitable for enterprise environments with extensive data processing needs.

- Data Governance: Includes integrated data quality and master data management to support advanced data governance needs.

- Workflow Automation: Supports complex workflows, job scheduling, monitoring, and error handling, which are all crucial for managing large-scale mission-critical data pipelines.

- Deployment Options: Support for Public and Private Cloud deployments.

- Extensive Connectivity: Provides connectors for a wide range of databases, applications, and data formats, ensuring seamless integration across diverse environments.

Cons

- Harder to Learn: Informatica offers many features and works well for larger teams, but Informatica Cloud takes more time to learn than modern ELT and ETL tools.

- High Cost: Informatica Cloud (and PowerCenter) is more expensive than most other tools.

Pricing

Informatica Pricing is usually tailored to the size and needs of the organization. It often includes license fees and charges based on usage.

Talend

Talend, now owned by Qlik, has two main products: Talend Data Fabric and Stitch. Talend acquired Stitch, which is discussed in the section on Stitch. In case you’re interested in Talend Open Studio, it was discontinued following the acquisition by Qlik.

Talend Data Fabric is a broader data integration platform, not just ETL. It also offers data quality and data governance features, ensuring that your data is not only integrated but also accurate.

Pros

- ETL platform: Data Fabric provides robust transformation, data mapping, and data quality features that are essential for constructing efficient data pipelines.

- Real-time and batch: The platform supports both real-time and batch processing, including streaming CDC. While the technology is mature, it still offers true real-time capabilities.

- Data Quality Integration: Offers built-in data profiling and cleansing capabilities to ensure high-quality data throughout the integration process.

- Strong monitoring and analytics: Similar to Informatica, Talend offers robust visibility into operations and provides strong monitoring and analytics capabilities.

- Scalable: Talend supports some large deployments and has evolved into a scalable solution.

Cons

- Learning curve: While Talend Data Fabric is drag-and-drop, its UI is older, and requires some time to master, much like other more traditional ETL tools. Developing transformations can also be time-consuming.

- Limited connectors: Talend claims 1000+ connectors. But it lists 50 or so databases, file systems, applications, messaging, and other systems it supports. The rest are Talend Cloud Connectors, which you create as reusable objects.

- High costs: There is no pricing listed, but it ends up costing more than most pay-as-you-go tools.

Pricing

Pricing for Talend is available upon request. This suggests that Talend's costs are likely to be higher than many pay-as-you-go ELT vendors, with the exception of Fivetran.

Microsoft SQL Server Integration Services (SSIS)

Microsoft SQL Server Integration Services (SSIS) is a platform for data integration. It helps manage ETL processes. SSIS supports both on-premises and cloud-based data environments.

Microsoft first introduced this platform in 2005 as part of the SQL Server suite to replace Data Transformation Services (DTS). It is widely used for general-purpose data integration, enabling organizations to extract, transform, and load data between various sources efficiently.

Pros

- Tight Integration with the Microsoft Ecosystem: SSIS integrates seamlessly with other Microsoft products, including SQL Server, Azure, and Power BI, making it an ideal choice for organizations using Microsoft technologies.

- Advanced Data Transformations: Supports a wide range of data transformation tasks, including data cleaning, aggregation, and merging, which are essential for preparing data for analysis.

- Custom Scripting: Allows for custom scripting using C# or VB.NET, providing data engineers with the flexibility to implement complex logic.

- Robust Error Handling: Includes features for monitoring, logging, and error handling, ensuring that data pipelines are reliable and resilient.

Cons

- Limited connectors: SSIS has 20+ built-in connectors (some, like Oracle, SAP BI, and Teradata, need to be downloaded). It’s limited compared to many other tools.

- Steep Learning Curve for Custom Scripting: While SSIS allows for custom scripting in C# or VB.NET, this requires advanced technical expertise, which can be a barrier for teams without strong programming skills.

- On-Premises Focus: SSIS is traditionally an on-premises tool, and while it supports cloud environments, its native cloud integration is not as seamless as some modern cloud-native ETL tools. Azure Data Factory is recommended for cloud deployments.

- Limited Cross-Platform Support: SSIS is tightly integrated into the Microsoft ecosystem, which limits its flexibility for organizations using a variety of non-Microsoft platforms or databases.

- Scalability: SSIS is still a single-threaded 32-bit app, which means it has its limits and is not suitable for all enterprise-level data integration tasks.

- No Native Real-Time Data Integration: SSIS is primarily a batch-processing ETL tool and does not natively support real-time data integration, which may be a drawback for businesses that need real-time data replication or processing.

Pricing

SSIS is included with SQL Server licenses, with additional costs based on the SQL Server edition and deployment options.

IBM InfoSphere DataStage

IBM InfoSphere DataStage is an enterprise-level ETL tool that is part of the IBM InfoSphere suite. It is engineered for high-performance data integration and can manage large data volumes across diverse platforms. With its parallel processing architecture and comprehensive set of features, DataStage is ideal for organizations with complex data environments and stringent data governance needs.

Key Features

- Parallel Processing: DataStage is built on a parallel processing architecture, which enables it to handle large datasets and complex transformations efficiently.

- Scalability: Designed to scale with large enterprises' needs, DataStage can easily manage extensive data integration workloads.

- Data Quality and Governance: Features built-in data quality and governance tools, ensuring data remains accurate, consistent, and adherent to industry regulations.

- Real-time Data Integration: Supports real-time data integration scenarios, making it suitable for businesses that require up-to-date data for decision-making.

Cons

- Multiple data integration tools: While DataStage is IBM's main data integration tool, IBM recently acquired StreamSets. The question is, what will happen with both going forward?

- Nearly 100 connectors: While DataStage is a great option for on-premises deployments, including on-premises sources and destinations, it has slightly fewer than 100 connectors and limited SaaS connectivity.

- High Cost and Complex Pricing: IBM InfoSphere DataStage comes with a high cost, with pricing that is typically customized based on the organization’s scale and requirements. This can be prohibitive for smaller businesses or those with limited budgets.

- Steep Learning Curve: The tool’s powerful features come with a steep learning curve, especially for smaller teams or those without extensive experience in enterprise-level ETL tools.

- Complex Setup and Maintenance: Deploying and maintaining DataStage can be time-consuming, requiring specialized technical skills to configure and optimize its performance in large, multi-platform environments.

Pricing

IBM InfoSphere DataStage pricing is customized based on the organization’s specific needs and deployment scale. Typically, it involves a combination of licensing fees and usage-based costs, which can vary depending on the complexity of the integration environment.

Oracle Data Integrator (ODI)

Oracle Data Integrator (ODI) is a data integration platform designed to support high-volume data movement and complex transformations. Unlike traditional ETL tools, ODI uses an ELT architecture, executing transformations directly within the target database to enhance performance. Although it works seamlessly with Oracle databases, ODI also offers broad connectivity to other data sources, making it a versatile solution for enterprise-level data integration needs.

Pros

- ELT Architecture: ODI executes transformations directly within the target database, which enhances performance.

- High-Volume Data Movement: Designed to support high-volume data movement and complex transformations.

- Oracle Integration: Works seamlessly with Oracle databases.

- Broad Connectivity: Offers connectivity to many other data sources beyond Oracle, though not always out of the box.

Cons

- Connectivity: While ODI can integrate with many different technologies, it’s not out of the box. Even with Snowflake, you need to download and configure a driver. For applications, it relies on Oracle Fusion application adapters.

- Complexity for Non-Oracle Users: While ODI integrates seamlessly with Oracle databases, it may be less intuitive or complex to set up and use for organizations not heavily invested in Oracle products.

- Costly Licensing: As part of Oracle’s enterprise suite, ODI can come with significant licensing and infrastructure costs, which might not be ideal for smaller businesses or teams with limited budgets.

- Limited Non-Oracle Features: While ODI supports a broad range of data sources, its features and functionality are optimized for Oracle environments, making it less versatile for non-Oracle-centric organizations.

Pricing

ODI is part of Oracle’s data management suite, with pricing typically customized based on the organization’s deployment scale and specific needs. Pricing includes licensing fees and potential usage-based costs, depending on the size and complexity of the integration environment.

SAP Data Services

SAP Data Services is a mature data integration tool. In 2002, Business Objects acquired Acta and integrated it into the Business Objects Data Services Suite.

SAP acquired Business Objects in 2007, and it became SAP Data Services. It is designed to manage complex data environments, including SAP systems, but it also supports non-SAP systems, cloud services, and extensive data processing platforms. With its focus on data quality, advanced transformations, and scalability, SAP Data Services is an enterprise-ready solution for large-scale data integration projects.

Pros

- Data Quality Integration: This includes robust data profiling, cleansing, and enrichment capabilities, ensuring high-quality data throughout the integration process.

- Advanced Transformations: Provides a rich set of data transformation functions, allowing for complex data manipulations and calculations.

- Scalability: Built to handle large-scale data integration projects, making it suitable for enterprise environments.

- Tight Integration with SAP Ecosystem: SAP Data Services is deeply integrated with other SAP products, such as SAP HANA and SAP BW, ensuring seamless data flows within SAP environments.

Cons

- Connectivity: Offers native connectors to nearly 50 data sources, including SAP and non-SAP systems, cloud services, big data platforms, and 20+ targets. However, given its limited SaaS connectors, it is better suited for on-premises-based systems than SaaS applications.

- High Cost: SAP Data Services is generally priced at the enterprise level, which can be expensive for smaller organizations or those with budget constraints.

- SAP-Centric Features: While it supports non-SAP environments, its strongest integration and functionality are tailored toward SAP products, which may make it less suitable for businesses outside of the SAP ecosystem.

Pricing

SAP Data Services is priced based on usage and deployment size, with enterprise-level agreements tailored to each organization's specific needs. This makes it a costlier solution but one that provides extensive capabilities for large-scale enterprise use.

AWS Glue

AWS Glue is a fully managed serverless ETL service from Amazon Web Services (AWS) designed to automate and simplify the data preparation process for analytics. Its serverless architecture eliminates the need to manage infrastructure. As part of the AWS ecosystem, it is integrated with other AWS services, making it a go-to choice for cloud-based data integration for AWS-centric data engineering shops.

Pros

- Serverless Architecture: AWS Glue is serverless, removing the need for infrastructure management with its automatic scaling to match workload demands.

- Integrated Data Catalog: Features an integrated data catalog that discovers and organizes data schema using a crawler (which you configure for each source).

- Broad Connectivity within AWS: Supports a wide range of AWS sources and destinations, making it a great choice for AWS-centric IT organizations.

- Python-based Transformations: Allows users to write custom ETL scripts in Python, Scala, and SQL.

- Tight Integration with AWS Ecosystem: AWS Glue seamlessly integrates with other AWS services like Amazon S3, Redshift, and Athena, ensuring a streamlined data processing workflow.

Cons

- Limited Connectivity Outside AWS: While AWS Glue offers excellent integration within the AWS ecosystem, it provides fewer than 20 connectors to other systems.

- Complexity with Large Jobs: For extremely large-scale jobs, AWS Glue’s performance can sometimes become slower or require careful tuning of Data Processing Units (DPUs), adding complexity to scaling efficiently.

- Not for Real-Time ETL: AWS Glue is primarily designed for batch ETL jobs, and while it can handle frequent updates, it is not optimized for real-time data streaming or low-latency use cases.

- Cost Control: Although AWS Glue offers pay-as-you-go pricing, costs can rise quickly depending on the complexity and duration of ETL jobs, making it essential to monitor Data Processing Unit (DPU) usage carefully.

Pricing

AWS Glue is priced based on the number of Data Processing Units (DPUs) used and the duration of your ETL jobs. This usage-based pricing model allows for flexibility but requires careful monitoring of job duration to control costs, especially for larger workloads.
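The pricing model described above reduces to a simple product: DPUs × job duration × price per DPU-hour. The sketch below shows that arithmetic. The function name is hypothetical and the rate is an assumed placeholder, not a quoted price, so check the current AWS rate for your region before relying on it.

```python
def glue_job_cost(dpus: int, runtime_hours: float, rate_per_dpu_hour: float) -> float:
    """Usage-based cost: DPUs x job duration in hours x price per DPU-hour."""
    return dpus * runtime_hours * rate_per_dpu_hour

# Assumed rate for illustration only; look up the current rate for your region.
ASSUMED_RATE = 0.44

short_job = glue_job_cost(dpus=10, runtime_hours=0.5, rate_per_dpu_hour=ASSUMED_RATE)
long_job = glue_job_cost(dpus=10, runtime_hours=2.0, rate_per_dpu_hour=ASSUMED_RATE)
```

Because cost scales linearly with duration, a job that runs four times longer on the same DPUs costs four times as much, which is why monitoring job duration matters.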

Azure Data Factory

Azure Data Factory (ADF) is a cloud-based ETL and ELT service from Microsoft Azure that enables users to create, schedule, and orchestrate data pipelines. It is the cloud equivalent of SSIS, though they are not really compatible. Its seamless integration with other Azure services makes it a good choice for Microsoft-centric IT organizations.

Pros

- Hybrid Data Integration: ADF supports integration across on-premises, cloud, and multi-cloud environments, making it highly versatile for modern data architectures.

- Visual Pipeline Design: Provides a visual interface for designing, deploying, and monitoring data pipelines, simplifying the creation of complex workflows.

- Wide Range of Connectors: Offers hundreds of connectors to data sources and destinations, including Azure services, databases, and third-party SaaS applications.

- Data Movement and Transformation: Supports data movement and transformation, including complex ETL/ELT processes.

- Integration with Azure Services: ADF integrates tightly with other Azure services, such as Azure Synapse Analytics, Azure Machine Learning, and Azure SQL Database, enabling end-to-end data workflows.

Cons

- Complex Pricing Model: Azure Data Factory’s pricing can become complex based on several factors, including the number of activities, integration runtime hours, and data movement volumes. This may make cost forecasting more difficult for larger-scale projects.

- Limited Non-Azure Optimization: While ADF offers multi-cloud support, it is most optimized for Azure services. Organizations operating primarily outside the Azure ecosystem may find limited advantages in using ADF.

- Batch-oriented: While ADF now supports real-time CDC extraction, it is still batch-based and unsuitable for real-time data.

- No Native Data Quality Features: Unlike other ETL tools, ADF lacks built-in data quality and governance capabilities, requiring additional third-party tools or custom configurations to manage data quality.

Pricing

Azure Data Factory uses a pay-as-you-go pricing model based on several factors, including the number of activities performed, the duration of integration runtime hours, and data movement volumes. This flexible pricing allows for scaling based on workload but can lead to complex cost structures for larger or more complex data integration projects.

Google Cloud Data Fusion

Google Cloud Data Fusion is a fully managed cloud service that helps users quickly build and manage ETL/ELT data pipelines. Leveraging Google Cloud’s infrastructure, Data Fusion offers robust scalability and reliability, making it an ideal solution for businesses that require seamless data integration across various sources and destinations.

Pros

- Visual Interface: Features a visual drag-and-drop interface for building data pipelines, making it accessible for users with varying technical expertise.

- Native Google Cloud Integration: Integrates seamlessly with Google Cloud services like BigQuery, Google Cloud Storage, and Pub/Sub, enabling streamlined data workflows.

- Real-time Data Integration: Supports real-time data processing and streaming, allowing up-to-date data availability across your pipelines.

- Scalability: Built on Google Cloud's infrastructure, Data Fusion provides automatic scaling to efficiently handle large volumes of data.

Cons

- Google Cloud-Centric: While Data Fusion excels within the Google Cloud environment, organizations using multi-cloud or hybrid infrastructures may find it less versatile than other data integration tools offering broader cross-platform support.

- Limited Connectors: While the list looks long, perhaps 50 different sources are supported, which is low compared to several other vendors. You need to make sure everything that you need is supported.

- Complexity for Non-Technical Users: Despite the visual interface, more complex data transformations may require advanced technical knowledge, potentially presenting a learning curve for non-technical users.

- Cost Monitoring Required: Although Data Fusion offers flexible pricing based on data processing hours and the type of instance selected, costs can rise quickly for large-scale data operations, requiring careful monitoring to avoid unexpected expenses.

- Limited Transformation Capabilities: While suitable for many common data integration tasks, Data Fusion’s transformation capabilities may be less extensive than those offered by dedicated ETL platforms with a deeper focus on complex data transformations.

Pricing

Google Cloud Data Fusion’s pricing is based on the number of data processing hours and the type of Data Fusion instance selected (Basic or Enterprise). It uses an on-demand pricing model, which offers flexibility but requires careful cost management to ensure it remains within budget.

Fivetran

Fivetran is a leading cloud-based ELT tool that focuses on seamless data extraction and loading into your data warehouse. Developed for simplicity and efficiency, Fivetran eliminates the need for extensive manual setup, making it an ideal choice for teams that require quick and reliable data pipeline solutions.

Pros

- Ease of Use: Fivetran’s user-friendly interface enables users to set up data pipelines with minimal coding effort. This makes it accessible for teams with varying levels of technical expertise, from data engineers to business analysts.

- Pre-built Connectors: Offers nearly 300 pre-built connectors for diverse data sources and another 300+ lite connectors that call APIs, enabling quick and seamless data integration without extensive development effort.

- dbt Integration: Fivetran has integrated the open-source dbt Core (data build tool) into its UI and has done other work, such as building dbt Core-compatible data models for the more common connectors, to make it easier to get started. dbt Core runs separately within the destination, such as a data warehouse.

- Scalability: Fivetran is proven to scale with your data needs, whether you're dealing with small data streams or large volumes of complex data. It supports multiple data sources simultaneously, making it easy to manage diverse data environments.

Cons

- High costs: Fivetran’s pricing model, based on Monthly Active Rows (MAR), makes it one of the most expensive modern ELT vendors, often 5-10x the alternatives. Fivetran measures MARs based on its internal representation of data. Costs are especially high with connectors that need to download all source data each time or that have non-relational data, because Fivetran converts it into highly normalized data that ends up having a lot of MARs.

- Unpredictable costs: Fivetran’s pricing can also be unpredictable because it’s hard to predict how many rows will change at least once a month, especially when Fivetran counts MARs based on its own internal representation, not each source’s version.

- Limited Control Over Transformations: Fivetran writes into destinations based on its own internal data representation. It does not give you much control over how data is written. This may be an issue for organizations that need more control over in-pipeline transformations.

- Limited Customization: While Fivetran offers a wide array of prebuilt connectors, it provides less flexibility for custom integrations or workflows than more customizable ETL/ELT tools.

- No Real-Time Streaming: Fivetran is optimized for batch processing and does not support real-time data streaming, which can be a drawback for businesses needing low-latency, real-time data updates.

Pricing

Fivetran uses a pricing model based on Monthly Active Rows (MAR), meaning that costs are determined by the number of rows in your source that change each month. Pricing can vary significantly depending on usage patterns, with costs ranging from a few hundred to several thousand dollars per month, depending on the size and frequency of data updates.
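
To make the MAR idea concrete, here is a minimal Python sketch that counts how many distinct rows changed at least once in a given month. The event shape (`table`, `primary_key`, `changed_at`) and the function name `monthly_active_rows` are hypothetical, and Fivetran counts MAR on its own internal representation of the data, so real figures may differ.

```python
from datetime import date

def monthly_active_rows(change_events, year, month):
    """Count distinct (table, primary_key) pairs that changed at least once in the month."""
    active = set()
    for event in change_events:
        changed = event["changed_at"]
        if changed.year == year and changed.month == month:
            # A row updated many times in the month is still counted once.
            active.add((event["table"], event["primary_key"]))
    return len(active)

# Hypothetical change log for illustration only.
events = [
    {"table": "orders", "primary_key": 1, "changed_at": date(2024, 3, 2)},
    {"table": "orders", "primary_key": 1, "changed_at": date(2024, 3, 9)},
    {"table": "orders", "primary_key": 2, "changed_at": date(2024, 3, 9)},
    {"table": "users", "primary_key": 1, "changed_at": date(2024, 3, 15)},
    {"table": "orders", "primary_key": 3, "changed_at": date(2024, 4, 1)},
]

print(monthly_active_rows(events, 2024, 3))
```

Because repeated updates to the same row count only once, the number that matters is how many different rows are touched, which is why the figure is hard to forecast from update volume alone.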

Matillion

Matillion is a comprehensive ETL tool initially developed as an on-premises solution before cloud data warehouses gained prominence. Today, while Matillion retains its strong focus on on-premises deployments, it has also expanded to work effectively with cloud platforms like Snowflake, Amazon Redshift, and Google BigQuery. The company has introduced the Matillion Data Productivity Cloud, which offers cloud-based options, including Matillion Data Loader, a free replication tool. However, the Data Loader lacks the full capabilities of Matillion ETL, particularly in terms of data transformation features.

Pros

- Advanced (on premises) Data Transformations: Matillion supports a wide range of transformation options, from drag-and-drop functionality to code editors for complex transformations, offering flexibility for users with different levels of technical expertise.

- Orchestration: The tool provides advanced workflow design and orchestration capabilities through an intuitive graphical interface, allowing users to manage multi-step data processes with ease.

- Pushdown Optimization: Matillion allows transformations to be pushed down to the target data warehouse, optimizing performance and reducing unnecessary data movement.

- Reverse ETL: Matillion supports reverse ETL, enabling data to be extracted from a source, processed, and then reinserted into the original source system after cleansing and transformation.

Cons

- On-Premises Focus: While Matillion’s main product, Matillion ETL, is designed for on-premises deployment, it does offer the Matillion Data Loader as a free cloud service for replication. However, migrating from Data Loader to the full ETL tool requires moving from the cloud back to a self-managed environment.

- Mostly Batch: Matillion ETL had some real-time CDC based on Amazon DMS that has been deprecated. The Data Loader does have some CDC, but overall, the Data Loader is limited in functionality, and if it’s based on DMS, it will have the limitations of DMS as well.

- Limited Free Tier: The free Matillion Data Loader lacks support for data transformations, making it difficult for users to fully evaluate the platform's capabilities before committing to a paid plan.

- Limited SaaS capabilities: Matillion ETL is more functional than Data Loader. For example, while Matillion ETL does support dbt and has robust data transformation capabilities, neither of these features is in the cloud.

- Schema Evolution Limitations: While Matillion supports essential schema evolution, such as adding or deleting columns in destination tables, it does not automate more complex schema changes, like adding new tables, which requires modifying the pipeline and redeploying.

Pricing

Matillion's pricing starts at $1,000 monthly for 500 credits, each representing a virtual core hour. Costs increase depending on the number of tasks and resources used. With each task consuming two cores per hour, the minimum cost can easily rise to thousands of dollars monthly, making it a pricier option for larger data workloads.
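
The arithmetic behind that estimate can be sketched in a few lines of Python. The plan figures come from the paragraph above; the assumption that extra credits cost the same $2 each as the credits in the entry plan is ours for illustration, not a published rate.

```python
PLAN_PRICE_USD = 1_000   # monthly entry price quoted above
PLAN_CREDITS = 500       # credits included; 1 credit = 1 virtual core hour
CORES_PER_TASK = 2       # each running task consumes two cores per hour

def task_hours_included(credits=PLAN_CREDITS, cores_per_task=CORES_PER_TASK):
    """Hours of task runtime covered by the included credits."""
    return credits / cores_per_task

def monthly_cost(task_hours, cores_per_task=CORES_PER_TASK,
                 price_per_credit=PLAN_PRICE_USD / PLAN_CREDITS):
    """Estimated cost, assuming a flat per-credit price (an assumption)."""
    return task_hours * cores_per_task * price_per_credit

print(task_hours_included())   # task-hours covered by the entry plan
print(monthly_cost(24 * 30))   # one task running around the clock for 30 days
```

Under these assumptions the entry plan covers 250 task-hours, and a single always-on task would need 1,440 credits, or roughly $2,880 a month.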

Also Read: Matillion vs Informatica: Which ETL Tool Should You Choose?

Stitch

Stitch is a SaaS-based batch ELT tool originally developed as part of the Singer open-source project within RJMetrics. After its acquisition by Talend in 2018, Stitch has continued to provide a straightforward, cloud-native solution for automating data extraction and loading into data warehouses. Although branded as an ETL tool, Stitch operates primarily as a batch ELT platform, moving raw data from sources to targets without real-time capabilities.

Pros

- Open-Source Foundation: Built on the Singer framework, Stitch allows users to leverage open-source taps, providing flexibility for data integration projects across multiple platforms like Meltano, Airbyte, and Estuary.

- Log Retention: Stitch offers up to 60 days of log retention, allowing users to store encrypted logs and track data movement, a benefit that surpasses many other ELT tools.

- Qlik Integration: For users already in the Qlik ecosystem, Stitch offers seamless integration with other Qlik products, providing a more cohesive data management solution.

Cons

- Batch-Only Processing: Stitch operates solely in batch mode, with a minimum interval of 30 minutes between data loads, lacking real-time processing capabilities.

- Limited Connectors: Stitch supports just over 140 data sources and 11 destinations, which is fewer than other platforms. While there are over 200 Singer taps in total, their quality varies.

- Scalability Issues: Stitch (cloud) supports only one connector running at a time, meaning if one connector job doesn’t finish on time, the next scheduled job is skipped.

- Limited DataOps Features: It lacks automation for handling schema drift or evolution, which can disrupt pipelines without manual intervention.

- Price Escalation: Pricing can escalate quickly, with the advanced plan costing $1,250+ per month and the premium plan starting at $2,500 per month.

Pricing

- Basic Plan: $100/month for up to 3 million rows.

- Advanced Plan: $1,250/month for up to 100 million rows.

- Premium Plan: $2,500/month for up to 1 billion rows.

Airbyte

Airbyte, founded in 2020, is an open-source ETL tool that offers cloud and self-hosted data integration options. Originally built on the Singer framework, Airbyte has since evolved to support its own protocol and connectors while maintaining compatibility with Singer taps. As one of the more cost-effective ETL tools, Airbyte is an attractive option for organizations seeking self-hosting flexibility and open-source control over their data integration processes.

Pros

- Open-Source Flexibility: Airbyte's open-source nature allows users to customize and expand its functionality, giving organizations more control over their data integration processes.

- Broad Connector Support: Airbyte supports over 60 managed connectors and hundreds of community-contributed connectors, offering extensive coverage for data sources.

- Compatibility with Singer: While it has evolved beyond the Singer framework, Airbyte still maintains support for Singer taps, giving users access to a wide array of existing connectors.

Cons

- Batch Processing Latency: Airbyte operates with batch intervals of 5 minutes or more, which can increase latency, particularly for CDC connectors. This makes it less suitable for real-time data needs.

- Reliability Concerns: Airbyte’s reliance on Debezium for most CDC connectors means data is delivered at least once, requiring deduplication at the target. Additionally, there is no built-in staging or storage, so pipeline failures can halt operations without state preservation.

- Limited Transformation Support: Airbyte is primarily an ELT tool and relies on dbt Cloud for transformations within the data warehouse. It does not support in-pipeline transformations outside of the warehouse.

- Limited DataOps Features: Airbyte lacks an "as code" mode, meaning there are fewer options for automation, testing, and managing schema evolution within the pipeline.
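
At-least-once delivery means the same change can arrive more than once, so the target has to deduplicate. The sketch below shows the idea in plain Python: keep one record per primary key, preferring the highest log position. The field names `id` and `lsn` are hypothetical, and in a real warehouse this would more likely be a merge or window-function query than application code.

```python
def deduplicate(records, key="id", order="lsn"):
    """Keep one record per key: the one with the highest ordering value."""
    latest = {}
    for rec in records:
        current = latest.get(rec[key])
        # Replace on ties too, so an exact redelivery is simply absorbed.
        if current is None or rec[order] >= current[order]:
            latest[rec[key]] = rec
    return sorted(latest.values(), key=lambda r: r[key])

# Hypothetical batch with one redelivered change.
delivered = [
    {"id": 1, "lsn": 100, "status": "new"},
    {"id": 2, "lsn": 101, "status": "new"},
    {"id": 1, "lsn": 100, "status": "new"},   # duplicate delivery
    {"id": 1, "lsn": 105, "status": "paid"},
]

for row in deduplicate(delivered):
    print(row)
```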

Pricing

Airbyte Cloud pricing starts at $10 per GB of data transferred, with discounts available for higher volumes. The open-source version is free, though hosting and maintenance costs will apply.

Also read:

- See how Airbyte compares with Fivetran in this in-depth comparison

- Looking for other options? Check out these Airbyte alternatives

Hevo Data

Hevo Data is a cloud-based ETL/ELT service that allows users to build data pipelines easily. Launched in 2017, Hevo provides a low-code platform, giving users more control over mapping sources to targets and performing simple transformations using Python scripts or a drag-and-drop editor (currently in Beta). While Hevo is ideal for beginners, it has some limitations compared to more advanced ETL/ELT tools.

Pros

- Low-Code Flexibility: Hevo’s platform enables users to create pipelines with minimal coding and includes support for Python-based transformations and a drag-and-drop editor for ease of use.

- ELT and ETL Support: While primarily an ELT tool, Hevo offers some ETL capabilities for simple transformations, providing versatility in processing data.

- Reverse ETL: Hevo supports reverse ETL, allowing users to return processed data to the source systems.

- Wide Connectivity: Hevo offers over 150 pre-built connectors, ensuring good coverage for various data sources and destinations.

Cons

- Limited Connectors: With just over 150 connectors, Hevo offers fewer options compared to other platforms, which may impact future projects if new data sources are required.

- Batch-Based Latency: Hevo’s data connectors operate primarily in batch mode, often with a minimum delay of 5 minutes, making it unsuitable for real-time use cases.

- Scalability Limits: Hevo has restrictions on file sizes, column limits, and API call rates. For instance, MongoDB users may face a 4090 column limit and 25 million row limits on initial ingestion.

- Reliability Concerns: Hevo's CDC operates in batch mode only, which can strain source systems. Additionally, bugs in production have been reported to cause downtime for users.

- Limited DataOps Automation: Hevo does not support "as code" automation or a CLI for automating data pipelines. Schema evolution is only partially automated and can result in data loss if incorrectly handled.

Pricing

Hevo offers a free plan for up to 1 million events per month, with paid plans starting at $239 per month. Costs rise significantly at larger data volumes, particularly if batch intervals and latency are reduced.

Also Read: Hevo vs Fivetran: Key Differences

Portable

Portable is a cloud-based ETL tool built for teams that need quick and straightforward deployment of data pipelines without much configuration. It is especially suited for small to mid-sized businesses with limited technical resources. Portable focuses on integrating a wide range of niche or less common applications, making it unique for businesses with very specific data integration needs.

Pros

- Quick Deployment: Portable is designed for rapid setup, enabling users to create and deploy data pipelines in minutes without extensive technical expertise.

- Cloud-Native: As an entirely cloud-based solution, Portable eliminates the need for on-premises infrastructure, offering scalability and flexibility as your data needs grow.

- Ease of Use: With its intuitive interface, Portable simplifies connecting data sources, transforming data, and loading it into destinations, making it accessible even for non-technical users.

- Very Cost-effective: Portable offers a budget-friendly pricing model, especially for smaller organizations or teams with limited data volumes.

Cons

- Batch-Only Data Processing: Portable is not ideal for real-time data processing needs as it primarily handles batch operations.

- Smaller Catalog for Core Business Applications: While Portable excels in supporting niche applications, it may fall short for companies that require more mainstream connectors and database sources.

- Scalability Concerns: Although great for small to medium businesses, Portable may not scale well for large enterprise workloads involving massive datasets and more complex pipelines.

Pricing

Portable offers a simple, flat-rate pricing model, which is a key selling point compared to other ETL platforms.

Integrate.io

Integrate.io is a general-purpose cloud-based integration platform for analytics and operational integration. It can update data as frequently as every 60 seconds, but it is still batch-based. It was founded in 2012, and while it is cloud-native, it provisions dedicated resources for each account.

Pros

- Visual Interface: Integrate.io provides a drag-and-drop interface for building data pipelines, making it easy to design and manage complex workflows without coding.

- Connectivity: Supports various data sources and targets, including databases, cloud services, and SaaS applications for different use cases.

- Data Transformations: Offers robust data transformation capabilities. This includes filtering, mapping, and aggregation, allowing for complex data manipulations.

- Batch and Real-time Processing: Supports both batch and real-time data processing, providing flexibility depending on the specific requirements of your data pipelines.

- Scalability: Designed to scale with your data needs, Integrate.io can handle both small and large data integration projects efficiently.

Cons

- Limited Connectivity for Analytics: While there are ~120 connectors, they are spread across many use cases. Source connectivity for data warehouse or data lake use cases is narrower than on other platforms focused on ETL or ELT for analytics.

- Cost Escalation: Pricing is based on credits and the cost per credit increases with each plan. Costs are generally higher than modern ELT platforms.

- Learning Curve for Advanced Features: While basic features are user-friendly, more complex workflows and advanced capabilities may take time to master.

- Batch-Only: While you can run at 60-second intervals, this is still not real-time.

- Limited DataOps Automation: Integrate.io lacks comprehensive "as code" automation capabilities, limiting advanced automation for users needing complete control over schema evolution and pipeline management.

Pricing

Integrate.io’s pricing is based on credits for starter, professional, and expert editions and a custom-cost business critical edition. Pricing has changed from connector-based to credit-based. Costs were $15-25K a year.

Rivery

Rivery, established in 2019, has quickly grown to a team of 100 employees, serving over 350 customers globally. As a multi-tenant public cloud SaaS ELT platform, Rivery offers a blend of ETL and ELT functionalities, including inline Python transformations, reverse ETL, and workflow support. It also allows data loading into multiple destinations, offering flexibility in data handling.

Despite its capabilities, Rivery leans more towards a batch ELT approach. While it does offer real-time data handling at the source, particularly through its unique CDC implementation, the overall process remains batch-oriented. This is due to its method of extracting data to files and utilizing Kafka for file streaming, with data being loaded into destinations at intervals of 60, 15, and 5 minutes, depending on whether you're on the Starter, Professional, or Enterprise plan.

Pros

- Modern Data Pipeline Capabilities: Rivery, alongside Estuary, is recognized as a modern data pipeline platform, offering up-to-date solutions for data integration.

- Flexible Transformations: The platform allows users to perform transformations using Python (ETL) or SQL (ELT), though it requires destination-specific SQL for the latter.

- Graphical Workflow Orchestration: Users can quickly build and manage workflows through a graphical interface, simplifying the orchestration process.

- Reverse ETL Functionality: Rivery includes reverse ETL capabilities, allowing data to be pushed back to source systems or other applications.

- Flexible Data Loading: It supports soft deletes (append-only) and multiple update-in-place strategies, including switch merge, delete merge, and a standard merge function.

Cons

- Batch Processing Limitation: Although Rivery performs real-time extraction from CDC sources, it lacks support for messaging-based sources or destinations and restricts data loading to intervals of 60, 15, or 5 minutes, depending on the chosen plan.

- Limited to Public SaaS only: Rivery is only available as a public cloud solution, restricting deployment flexibility for some organizations.

- API Schema Evolution: Lacks robust schema evolution for API-based connectors, which might cause integration challenges with changing API structures.

Pricing

Rivery uses a credit-based pricing model. Credits cost $0.75 for the Starter plan and $1.25 for the Professional plan, with custom pricing for enterprise plans. The amount of data processed determines costs, but large data volumes can lead to high expenses. Estuary, in contrast, offers a more scalable pricing structure that may be more cost-effective for businesses handling large volumes of data or seeking real-time and batch processing in a single platform.

Qlik Replicate

Qlik Replicate, previously known as Attunity Replicate, is a data integration and replication platform that offers real-time data streaming capabilities. As part of Qlik’s data integration suite, it supports both cloud and on-premises deployments for replicating data from various sources. Qlik Replicate stands out for its ease of use, particularly in configuring CDC (Change Data Capture) pipelines via its intuitive UI.

Pros

- User-Friendly CDC Replication: Qlik Replicate simplifies the process of setting up CDC pipelines through a user-friendly interface, reducing the technical expertise needed.

- Hybrid Deployment Support: It supports both on-premises and cloud environments, making it versatile for streaming data to destinations like Teradata, Vertica, SAP Hana, and Oracle Exadata—options that many modern ELT tools do not offer.

- Strong Monitoring Tools: Qlik Replicate integrates with Qlik Enterprise Manager, providing centralized monitoring and visibility across all data pipelines.

Cons

- Older Technology: Although proven, Qlik Replicate is based on older replication technology. Attunity, the original developer, was founded in 1988, and the product was acquired by Qlik in 2019. Its future, especially in relation to other Qlik-owned products like Talend, is unclear.

- Traditional CDC Approach: In case of connection interruptions, Qlik Replicate requires a full snapshot of the data before resuming CDC, which can add overhead to the replication process.

- Limited to Replication: The tool focuses primarily on CDC sources and replicates data mostly into data warehouse environments. It may not be the right fit if broader data integration is required, such as for ETL/ELT or application integration.

Pricing

Qlik Replicate’s pricing is not publicly available and is customized based on organizational needs. This can make it more expensive compared to pay-as-you-go ELT solutions or Confluent Cloud's Debezium-based offerings.

Striim

Striim is a platform for stream processing and replication that also supports data integration. It was created in 2012 by members of the GoldenGate team. Striim uses Change Data Capture (CDC) to move data in real-time and handle analytics. Over time, it has grown to support many connectors for different use cases.

Striim is great for tasks that need complex stream processing and replication. It is well-known for its CDC features and strong support for Oracle databases. Striim competes with tools like Debezium and Estuary, especially in scalability. It is a top choice for environments that need both real-time and batch data processing.

Pros

- Advanced CDC Capabilities: Striim is renowned for its market-leading CDC functionality, particularly for Oracle environments. It can efficiently capture and replicate real-time changes across various data sources and destinations.

- Stream Processing Power: Striim combines stream processing with data integration, enabling organizations to handle both data replication and real-time analytics within the same platform.

- High Scalability: Similar to other top vendors like Debezium and Estuary, Striim is built to handle large-scale data replication, making it a suitable choice for organizations with extensive data processing needs.

- Graphical Flow Design: Striim Flows offers a visual interface for designing complex stream processing pipelines, making real-time data streams easier to manage once users have learned the tool.

Cons

- Steep Learning Curve: Striim’s complexity lies in its origins as a stream processing platform, making it more challenging to learn compared to more straightforward ELT/ETL tools. While powerful, its Tungsten Query Language (TQL) is not as user-friendly as standard SQL.

- Manual Flow Creation: Building CDC flows requires manually designing Striim Flows or writing custom TQL scripts for each data capture scenario, adding complexity compared to ELT tools that offer more automated solutions.

- Limited Data Retention: Striim does not support long-term data storage, meaning there’s no built-in mechanism for backfilling data to new destinations without creating a new snapshot. This can result in losing older change data when adding new destinations.

- Best for Stream Processing Use Cases: While Striim is excellent for real-time stream processing and analytics, it may not be the best fit for organizations seeking a simpler, more straightforward data integration tool.

Pricing

Striim offers enterprise-level pricing, typically customized based on the organization's scale and specific requirements. Its pricing reflects its advanced capabilities in CDC and stream processing, which may be higher than simpler ETL/ELT tools.

Amazon DMS

AWS Database Migration Service (DMS), referred to here as Amazon DMS, is great for data migration, but it is not built for general-purpose ETL or even CDC. Amazon released DMS in 2016 to help migrate on-premises systems to AWS services. It was originally based on an older version of what is now Qlik Replicate (formerly Attunity Replicate). Amazon then released DMS Serverless in 2023.

Pros

- AWS Support: support for moving data from leading databases to Aurora, DynamoDB, RDS, Redshift, or an EC2-hosted database.

- Low Cost: DMS is low cost compared to all other offerings at $0.087 per DCU (see pricing).

Cons

- AWS-Centric: DMS is limited to CDC database sources and Amazon database targets and requires VPC connections for all targets.

- Limited Regional Support: Cross-region connectivity has some limitations, such as with DynamoDB. Serverless has even more restrictions.

- Excessive Locking: The initial snapshot done for CDC requires full table-level locking. Changes to the table are cached and applied before change data streams start. This adds load on the source database and delays the arrival of change data.

- Limited Scalability: Replication instances have 100GB memory limits, which can cause failures with large tables. The only workaround is to partition the table into smaller segments manually.

Pricing

Amazon DMS pricing is $0.087 per DMS Capacity Unit (DCU) hour, 2x for multi-AZ.

Apache Kafka

Apache Kafka is a highly scalable, open-source platform designed for real-time data streaming and event-driven architectures. It is widely used in distributed systems to handle high-throughput, low-latency data streams. It is a vital tool for real-time analytics, data pipelines, and other streaming applications.

Pros

- Real-Time Data Streaming: Kafka allows publishing and subscribing to streams of records in real-time, which is crucial for immediate data processing in industries like finance, telecommunications, and e-commerce.

- Scalability: Kafka is designed to scale horizontally, allowing it to handle massive amounts of data by distributing the load across multiple brokers.

- Integration with ETL Pipelines: Kafka can be used with ETL tools or frameworks like Kafka Connect, Apache Flink, or Apache Storm, which allow you to perform transformations on streaming data in real time.

- Wide Adoption: Kafka is widely used across industries for real-time analytics, event streaming, monitoring, and data integration, making it a well-trusted choice for managing streaming data.

Cons

- Complex Setup and Maintenance: Setting up and maintaining Kafka clusters requires significant expertise.

- Operational Overhead: Due to its distributed nature, managing Kafka at scale can involve higher operational costs and complexities.

- Limited ETL Capabilities: While Kafka excels in streaming, it requires additional tools like Kafka Connect for ETL and data transformation tasks.

Pricing

Apache Kafka is an open-source tool, meaning the software itself is free. However, depending on how it is deployed, costs can accumulate.

Self-Hosted Costs: If you are hosting Kafka, you must factor in infrastructure costs (servers, storage), maintenance, and engineering resources.

Managed Services: Companies offering managed Kafka services (like Confluent Cloud) provide Kafka as a service with pricing typically based on data throughput, storage, and additional features like monitoring and scaling. Managed solutions can be easier to deploy but may lead to higher recurring costs compared to self-hosting.

Debezium

Debezium is an open-source Change Data Capture (CDC) tool that originated at Red Hat. It leverages Apache Kafka and Kafka Connect to enable real-time data replication from databases. Debezium was partly inspired by Martin Kleppmann’s "Turning the Database Inside Out" concept, which emphasized the power of CDC for modern data pipelines.

Debezium is a highly effective solution for general-purpose data replication, offering advanced features like incremental snapshots. It is particularly well-suited for organizations that are heavily committed to open-source technologies and have the technical resources to build and maintain their own data pipelines.

Pros

- Real-time Data Replication: Unlike many modern ELT tools, Debezium performs real-time change data capture. This has made it the de facto open-source CDC framework for modern event-driven architectures.

- Incremental Snapshots: With Debezium's ability to perform incremental snapshots (using DDD-3 rather than whole snapshots), organizations can start to receive change data streams earlier than when using full snapshots.

- Kafka Integration: Since Debezium is built on Kafka Connect, it seamlessly integrates with Apache Kafka, allowing organizations already using Kafka to extend their data pipelines with CDC capabilities.

- Schema Registry: Supports Kafka Schema Registry, which helps keep schema in sync from source to destination.
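
To illustrate what consuming change data looks like, here is a minimal Python sketch that replays Debezium-style change events into an in-memory table. It relies on the `op`, `before`, and `after` fields of the Debezium event envelope (`r` for snapshot read, `c` for create, `u` for update, `d` for delete). The events are hand-written and simplified, the primary key name `id` is an assumption, and real events also carry source metadata and timestamps that are omitted here.

```python
def apply_change(table, event, key="id"):
    """Apply one simplified Debezium-style change event to a dict keyed by primary key."""
    op = event["op"]
    if op in ("r", "c", "u"):
        # Snapshot reads, inserts, and updates all carry the new row state in "after".
        row = event["after"]
        table[row[key]] = row
    elif op == "d":
        # Deletes carry the last known row state in "before".
        table.pop(event["before"][key], None)
    else:
        raise ValueError(f"unsupported op: {op!r}")
    return table

# Hypothetical event stream for illustration only.
events = [
    {"op": "r", "before": None, "after": {"id": 1, "name": "Ada"}},
    {"op": "c", "before": None, "after": {"id": 2, "name": "Grace"}},
    {"op": "u", "before": {"id": 1, "name": "Ada"}, "after": {"id": 1, "name": "Ada L."}},
    {"op": "d", "before": {"id": 2, "name": "Grace"}, "after": None},
]

replica = {}
for event in events:
    apply_change(replica, event)
print(replica)
```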

Cons

- Connector Limitations: While Debezium offers robust CDC connectors, users must add another framework for non-CDC sources or batch and non-Kafka destinations, which may require additional custom development.

- Complex Pipeline Management: While Debezium excels in CDC, it is built on Kafka, which is not easy to use or manage.

- At Least Once Delivery: While Debezium could implement and test exactly once delivery with Kafka, it is currently not guaranteed to work. This really should be fixed.

- No Built-in Backfilling or Replay: Kafka's data retention policies mean that Debezium does not store data indefinitely, and organizations must build their own replay or backfilling mechanisms to handle historical data or “time travel” scenarios.

- Backfilling Multiple Destinations: Since backfilling and CDC use the same Kafka topics, reprocessing data from a snapshot can create redundant loads on all destinations unless separate pipelines are set up for each target.

- Maintenance Overhead: Managing Kafka clusters and connectors can be resource-intensive, requiring ongoing administration and scaling to ensure the system runs efficiently.

Pricing

Debezium is free and open-source, but the true cost lies in the infrastructure and resources required to manage Kafka clusters and build custom data pipelines. For organizations using Kafka as their core messaging system, Debezium can be an economical choice. For others, the engineering and maintenance overhead can add up quickly.

SnapLogic

SnapLogic is a more general application integration platform that combines data integration, iPaaS, and API management features. Its roots are in data integration, which it does very well.

Pros

- Unified Integration Platform: SnapLogic supports application, data, and API integration within a single platform, providing a comprehensive solution for enterprise data management.

- Visual Pipeline Design: Features a visual drag-and-drop interface for building data pipelines, making designing and deploying complex integrations easier.

- Scalability: Designed to scale with enterprise needs, SnapLogic can easily handle large volumes of data across multiple systems.

Cons

- Connectivity: While SnapLogic claims over 700 snaps, these are for building functionality. There are nearly 100 snaps for connecting to different sources and destinations. This may meet your needs, but it is less than some other ELT or ETL tools, so evaluate whether you have all the necessary connectors.

- High Costs: SnapLogic’s pricing model is a little more complex than some others and is designed more for enterprise deals. It is not as well suited for starting small with pay-as-you-go pricing for cloud data warehouse deployments.

- Steep Learning Curve for Advanced Features: Although the basic features are user-friendly, mastering the more advanced functionalities may require time and training.

Pricing

SnapLogic’s pricing model is not transparent and is a little complex. There are two tiers of premium snaps (+$15K, +$45K), which you may need for source app connectivity (e.g., NetSuite, Workday), and add-ons for scalability, recoverability, pushdown (ELT), real-time, and multi-org support. While there is generally no volume-based pricing, there is for API calls.

Singer

Singer is an open-source ETL framework that simplifies data integration by using a standardized format for writing scripts to extract data from sources (known as "taps") and load it into destinations (called "targets"). It’s particularly popular among developers and data engineers who need a flexible, customizable solution for creating data pipelines.
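
As a rough illustration of that standardized format, the sketch below mimics a tap and a target in plain Python without using any Singer library. Taps emit JSON messages, one per line, of type `SCHEMA`, `RECORD`, and `STATE`, and targets read them back. The `users` stream, the `last_id` bookmark, and the in-memory "target" are hypothetical stand-ins.

```python
import io
import json

def run_tap(rows, out):
    """Emit Singer-style messages: one SCHEMA, one RECORD per row, then a STATE bookmark."""
    schema = {
        "type": "object",
        "properties": {"id": {"type": "integer"}, "name": {"type": "string"}},
    }
    out.write(json.dumps({"type": "SCHEMA", "stream": "users",
                          "schema": schema, "key_properties": ["id"]}) + "\n")
    for row in rows:
        out.write(json.dumps({"type": "RECORD", "stream": "users", "record": row}) + "\n")
    if rows:
        # The bookmark lets a later run resume after the last extracted row.
        out.write(json.dumps({"type": "STATE", "value": {"last_id": rows[-1]["id"]}}) + "\n")

def run_target(lines):
    """Collect RECORD messages and remember the most recent STATE value."""
    loaded, state = [], None
    for line in lines:
        message = json.loads(line)
        if message["type"] == "RECORD":
            loaded.append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return loaded, state

rows = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]
buffer = io.StringIO()          # stands in for the pipe between tap and target
run_tap(rows, buffer)
buffer.seek(0)
loaded, state = run_target(buffer)
print(loaded, state)
```

In a real deployment the tap and target are separate programs, and the tap's standard output is piped into the target's standard input.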

Pros

- Modular Architecture: Singer operates on a modular architecture, where each component (tap or target) is a separate script, allowing users to mix and match different taps and targets based on their specific needs.

- Wide Range of Taps and Targets: There are roughly 200 prebuilt taps and targets available, covering popular databases, SaaS applications, and other data sources, which can be easily integrated into your ETL pipeline.

- Customizability: Being open-source, Singer allows developers to create custom taps and targets or modify existing ones to fit their unique data integration requirements.

- Ease of Use: Singer’s simplicity in writing and running ETL scripts makes it an attractive option for developers who prefer to work with Python and want a straightforward, code-based approach to data integration.

- Community-Driven: Singer benefits from a strong community that actively contributes to its library of taps and targets, ensuring ongoing improvements and support for new data sources.

Cons

- Older framework: Singer flourished while Stitch was doing well. But after Stitch was acquired by Talend, which was in turn acquired by Qlik, Singer has been buried as one of three overlapping tools inside Qlik. Meltano is a newer Singer-based framework that continues to grow. If you’re committed to Singer, you should evaluate Meltano.

- Manual Management: While highly customizable, Singer requires manual setup and management, which can increase complexity for organizations without strong engineering resources.

- Limited Automation: Singer lacks the built-in automation features found in other ETL platforms, meaning users must rely on external tools to schedule and monitor jobs.

Pricing

Singer is free to use as an open-source framework. However, organizations that deploy Singer must consider the cost of development, maintenance, and infrastructure required to run and monitor ETL jobs, particularly if running large or complex pipelines.

Key Considerations When Selecting an ETL Tool:

- Ease of Use: Opt for a user-friendly tool that reduces the learning curve, especially for teams with varying technical expertise. Features like drag-and-drop interfaces and robust documentation can speed up deployment and make the tool accessible to a broader audience.

- Pricing Models: Understand the pricing structure to avoid unexpected costs. Whether it's based on data volume, connectors, or compute resources, ensure the model aligns with your budget and offers flexibility as your usage scales.

- Supported Data Sources: Ensure the tool supports all your current and future data sources, including databases, SaaS applications, and file formats. A wide range of reliable connectors is crucial for seamlessly integrating diverse data environments.

- Data Transformation Capabilities: Strong transformation features are essential for cleaning, enriching, and preparing data. Look for tools that support complex transformations, both within the data pipeline (ETL) and in the data warehouse (ELT).

- Integration with Existing Systems: The tool should integrate smoothly with your existing data infrastructure, including data warehouses and analytics platforms. Compatibility with your security protocols, system monitoring, and regulatory and internal compliance requirements is also important.

- Performance: Ensure you understand your end-to-end latency requirements before choosing your data integration platform. Most ELT tools are batch-based and deliver latency in minutes at best.

- Scalability: Choose an ETL tool that can grow with your data needs, handling larger datasets and more sources without sacrificing performance. Look for cloud-native architectures that scale automatically, ensuring your tool can adapt to increasing demands.

- Reliability: Reliability, uptime, and robust error-handling features ensure smooth and consistent data operations. Check for reliability issues during your POC and also online to see what others say.

- Security and Compliance: Ensure the tool offers robust security features like data encryption and role-based access controls and is compliant with standards such as GDPR, HIPAA, and SOC 2.

- Vendor Support and Community: Great support from both the vendor and the community is critical. Make sure you evaluate support. Look for comprehensive documentation, responsive support teams, and active forums that can help you overcome challenges and optimize your ETL processes. Also, check online for issues or comments. They may surprise you in a bad way.

Conclusion

Choosing the right ETL tool is not just a technical choice. It is a strategic decision that can impact your data management and analytics in the future.

In this guide, we have examined ETL tools in 2024. Many options are available, each with unique strengths for different needs.

You may need a cloud-native solution that scales easily. Alternatively, you might want an open-source tool for flexibility. A hybrid option can connect on-premises and cloud systems. The key is to choose a solution that meets your organization's current and future needs.

When making your decision, think about what matters most to your business. Consider scalability, ease of use, pricing, data transformation, and security.

Remember that the best ETL tool does not always have the most features. It should fit well into your current setup. It should also support your long-term data strategy. Most importantly, it should help your team work more efficiently and effectively.

A reliable, scalable, and flexible ETL tool is crucial as data drives business decisions. By choosing the right tool, you will simplify your data processes and open up new opportunities for innovation and growth.

This guide gives you the insights you need. You can now make smart decisions for your organization, which will help it succeed in the changing world of data integration.

The journey doesn’t stop here. Stay informed on the latest advancements in ETL technology and remain agile to meet evolving data requirements.

Your chosen ETL tool is more than just software; it is a critical component of your data infrastructure, driving insights that propel your business forward.

FAQs

- What is the difference between ETL and ELT?

ETL (Extract, Transform, Load) involves extracting data, transforming it in the data pipeline, and then loading it into the data warehouse. ELT (Extract, Load, Transform) involves loading raw data into the data warehouse first, where the transformations are then performed.
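The difference in ordering can be sketched in a few lines of Python, with an in-memory SQLite database standing in for the data warehouse. This is an illustration under assumptions, not how any particular tool works: the table names, the sample rows in `RAW_ORDERS`, and the cleaning rule (drop rows whose amount is not numeric) are all hypothetical.

```python
import sqlite3

# Hypothetical raw source rows: every value arrives as an untyped string.
RAW_ORDERS = [("1", " 19.99 "), ("2", "5.00"), ("3", "n/a")]


def etl(conn, rows):
    """ETL: clean in the pipeline (Python), then load only the finished rows."""
    conn.execute("CREATE TABLE orders_etl (id INTEGER, amount REAL)")
    cleaned = []
    for order_id, amount in rows:
        try:
            cleaned.append((int(order_id), float(amount.strip())))
        except ValueError:
            continue  # drop rows that fail to parse
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", cleaned)


def elt(conn, rows):
    """ELT: load raw strings first, then transform with SQL inside the store."""
    conn.execute("CREATE TABLE orders_raw (id TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", rows)
    conn.execute("""
        CREATE TABLE orders_elt AS
        SELECT CAST(id AS INTEGER) AS id,
               CAST(TRIM(amount) AS REAL) AS amount
        FROM orders_raw
        WHERE TRIM(amount) GLOB '[0-9]*'
    """)


def demo():
    """Run both approaches against the same in-memory database."""
    conn = sqlite3.connect(":memory:")
    etl(conn, RAW_ORDERS)
    elt(conn, RAW_ORDERS)
    etl_rows = conn.execute("SELECT id, amount FROM orders_etl ORDER BY id").fetchall()
    elt_rows = conn.execute("SELECT id, amount FROM orders_elt ORDER BY id").fetchall()
    raw_count = conn.execute("SELECT COUNT(*) FROM orders_raw").fetchone()[0]
    return etl_rows, elt_rows, raw_count
```

Both paths end with the same cleaned table. The practical difference is that the ELT path also keeps the raw rows in the store, so the transformation can be changed and rerun later without extracting the data again.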

- What are the best ETL tools?

The best ETL tools in 2024 are Estuary, Informatica, Talend, and AWS Glue, due to their real-time processing, scalability, and ease of use.

- Are there any free ETL tools available?

Yes, several free ETL tools are available, including Estuary's free plan, which provides up to 2 connectors and 10 GB of data per month. Other options include open-source Airbyte, Meltano, and Singer, though they may require more technical expertise to set up and maintain.

- How do you choose the best ETL tool for your business?

Choose an ETL tool based on your business's specific needs, including scalability, ease of use, pricing, and the types of data sources and destinations you need to integrate. Estuary is a strong option for businesses looking for a scalable, user-friendly solution that supports both real-time and batch processing with minimal overhead.

Ready to Get Started?

- Create Your Free Account: Sign up here to begin your journey with Estuary.

- Explore the Documentation: Dive into the Getting Started guide to familiarize yourself with the platform.

- Join the Community: Connect with others and get support by joining the Estuary Slack community.

- Watch the Walkthrough: Check out the Estuary 101 webinar for a comprehensive introduction.

Need Help? Contact us anytime with your questions or for further assistance.


About the author

Dani is a data professional with a rich background in data engineering and real-time data platforms. At Estuary, Dani focuses on promoting cutting-edge streaming solutions, helping to bridge the gap between technical innovation and developer adoption. With deep expertise in cloud-native and streaming technologies, Dani has successfully supported startups and enterprises in building robust data solutions.
