The traditional ETL (Extract, Transform, Load) process, a common approach used by data integration tools, involves extracting data, transforming it outside the target system, and then loading it into the destination. However, as data volumes grow exponentially, it becomes challenging to transform large datasets within reasonable timeframes. As a result, this approach can be time-consuming and resource-intensive for massive data volumes.

This challenge led to the adoption of ELT (Extract, Load, Transform), a modern data integration approach that allows organizations to quickly collect and load data into the target system as-is without performing any transformation.

There are several ELT tools in the market that allow you to quickly collect data from different sources and store it in a centralized system. In this article, we will discuss the best ELT tools for your modern data stack. But first, let's get a clear understanding of what exactly ELT is.

What is ELT?

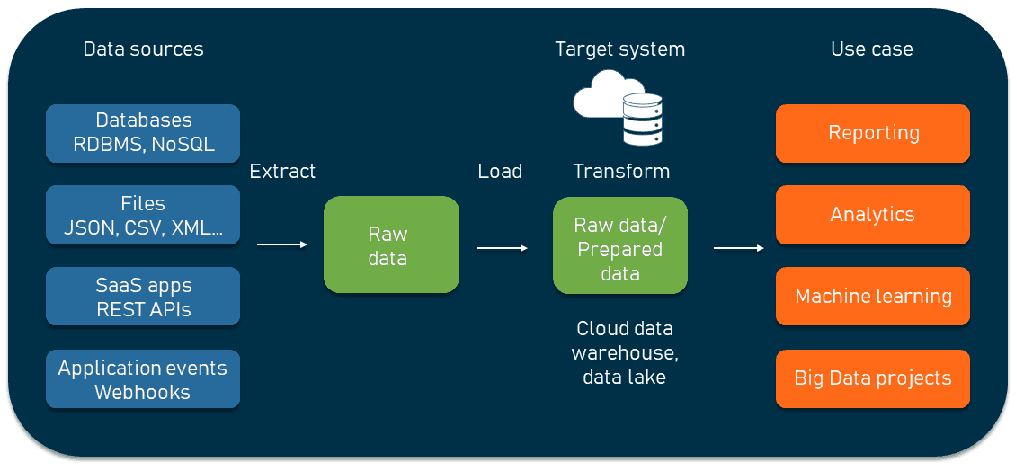

ELT (Extract, Load, and Transform) is a common data integration pattern that became more popular with the growth of cloud data warehouses. It involves extracting data from multiple sources and loading it into the target system. Unlike ETL, where data is transformed before it is loaded into a centralized system, ELT transforms data after it is loaded into the target system.

The ELT process is divided into three different stages:

- Extract: Initially, raw or unstructured data is extracted from multiple sources—spreadsheets, databases, applications, APIs, etc. The extracted data can be in different formats, such as CSV, JSON, or relational data.

- Load: The extracted data is loaded into the desired destination system, like a data lake, without any extensive standardization or cleaning.

- Transform: After data is loaded into the destination system, the transformation phase begins. In this stage, the data is cleaned, aggregated, filtered, and standardized whenever required.
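To make the three stages concrete, here is a minimal Python sketch that uses the standard library's `sqlite3` module as a stand-in for a cloud data warehouse. The table names (`raw_orders`, `orders`) and the sample rows are hypothetical. What it illustrates is the ordering: rows are loaded unchanged into a staging table, and cleaning happens afterward, in SQL, inside the target.

```python
import sqlite3

# Hypothetical source rows (id, email, amount, country), standing in for an
# API or database extract. Values arrive as untyped, inconsistent strings.
SOURCE_ROWS = [
    ("1", " Alice@Example.com ", "19.99", "us"),
    ("2", "bob@example.com", "5.00", "US"),
    ("3", None, "12.50", "de"),
]


def extract():
    """Extract: pull rows from the source exactly as they are."""
    return list(SOURCE_ROWS)


def load(conn, rows):
    """Load: land the rows unchanged in an all-TEXT staging table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_orders "
        "(id TEXT, email TEXT, amount TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", rows)


def transform(conn):
    """Transform: clean, type, and filter inside the target with SQL."""
    conn.execute("DROP TABLE IF EXISTS orders")
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(id AS INTEGER)  AS id,
               lower(trim(email))   AS email,
               CAST(amount AS REAL) AS amount,
               upper(country)       AS country
        FROM raw_orders
        WHERE email IS NOT NULL
        """
    )


conn = sqlite3.connect(":memory:")
load(conn, extract())
transform(conn)
print(conn.execute("SELECT * FROM orders ORDER BY id").fetchall())
```

Because the raw rows stay in `raw_orders`, the `transform` step can be rerun or changed later without touching the source.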

Benefits of the ELT process:

- Faster Data Ingestion: ELT allows faster data loading because the transformation step happens after the data is in the target system. This removes the need to run complex (batch-based) transformations before loading, which enables a simpler EL architecture and lets you use the data warehouse's SQL support to transform data.

- Cost-Effective: ELT leverages the power of the target system to perform the transformations. This lets you use your existing data engineering skillsets and take advantage of the scalability of your data warehouse.

ETL vs ELT

ELT and ETL are two common approaches used in data integration. Let’s understand the fundamental differences between both processes.

| Category | ETL | ELT |
|---|---|---|
| Process Sequence | Extract data from the source system, transform it, and load it into the target data warehouse. | Extract data from source systems, load the raw data directly into the target system, and then transform it as needed. |
| Target System | Data is structured to meet the specific requirements of the target system (usually a data warehouse or database). Once loaded, it is ready to use. | Data is loaded into the target system (usually a data warehouse or data lake) in raw or near-raw form. |
| Pipeline Maintenance | ETL pipelines require changes when source data, transformation rules, or targets change. | ELT pipelines require you to handle changes to sources, transformations, or target data within the target system. |
| Data Compatibility | Modern ETL handles all types of data, even when the targets cannot. | Modern ELT also handles all types of data, but the target must be able to handle them as well. |
| Use Case | Suitable for scenarios with one or more targets where real-time streaming and low latency are important and running transformations mid-stream makes more sense. | Suitable for scenarios with a single target where latency matters less than having all the data in one place, accessible with SQL. |
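One practical consequence of the Process Sequence and Pipeline Maintenance rows is that, assuming the raw data is retained in the target, a changed transformation rule can be applied by redefining SQL over the raw table, with no re-extraction. The sketch below shows this with `sqlite3` as a stand-in warehouse; the `raw_events` table, the `monthly_activity` view, and the refund rule are all hypothetical. In a typical ETL pipeline, only the transformed output would be loaded, so the same change would usually mean re-running extraction and transformation upstream.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# ELT: the raw, untransformed events stay in the target.
conn.execute("CREATE TABLE raw_events (user_id TEXT, event TEXT, ts TEXT)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [
        ("u1", "signup", "2024-01-03"),
        ("u1", "purchase", "2024-01-05"),
        ("u2", "signup", "2024-02-10"),
        ("u2", "refund", "2024-02-11"),
    ],
)


def define_model(conn, select_sql):
    """(Re)define the transformed model as a view over the raw table."""
    conn.execute("DROP VIEW IF EXISTS monthly_activity")
    conn.execute(f"CREATE VIEW monthly_activity AS {select_sql}")


def read_model(conn):
    """Return the model's rows, ordered by month."""
    return conn.execute("SELECT * FROM monthly_activity ORDER BY month").fetchall()


# Version 1 of the business rule: count every event per month.
define_model(conn, """
    SELECT substr(ts, 1, 7) AS month, COUNT(*) AS events
    FROM raw_events GROUP BY month
""")
v1 = read_model(conn)  # [('2024-01', 2), ('2024-02', 2)]

# Version 2: refunds should no longer count. Only the SQL changes;
# the raw rows already in the target are reused and nothing is re-extracted.
define_model(conn, """
    SELECT substr(ts, 1, 7) AS month, COUNT(*) AS events
    FROM raw_events WHERE event != 'refund' GROUP BY month
""")
v2 = read_model(conn)  # [('2024-01', 2), ('2024-02', 1)]
```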

For more key differences, read this blog: ETL vs ELT

Factors to Consider When Choosing ELT Tools

Before finalizing any ELT or ETL tool for your organization, consider the following questions:

- Does the tool provide a pre-built connector to your required data sources?

- Can the ELT tool handle large amounts of data?

- Does it offer real-time data movement?

- Does the tool meet your data security and compliance requirements?

- Does the ELT tool provide an easy-to-use interface?

- Is the pricing based on the number of users, the number of connectors, or data volume?

The above questions will help you gauge various prerequisites and find the perfect ELT tool for your requirements.

Tip: Check how you can break down your data integration costs and minimize them with a realistic strategy.

10 Best ELT Tools for Modern Data Stack in 2024

Here are the top 10 ELT (and ETLT) tools in 2024 for data ingestion that are well-known for their flexibility, compatibility, and robust capabilities.

- Estuary Flow

- Matillion

- Airbyte

- Blendo

- Fivetran

- Informatica

- MuleSoft

- Talend

- Qlik

- Azure Data Factory

Let's explore each of these ELT solutions, looking at their key features and pricing, to give you the insights you need to make an informed decision.

1. Estuary Flow

Estuary Flow is one of the best data integration and transformation tools in the market. With its 150+ real-time and batch connectors built by Estuary and support for 500+ connectors from Airbyte, Meltano, and Stitch, you can quickly establish connections between databases and platforms without writing a single line of code. This allows you to extract data from various sources and move it to the required destination.

Some of the top features of Estuary Flow include:

- Many-to-Many ETL: Unlike the other tools listed, Estuary supports more than ELT. It enables many-to-many real-time or batch ETL, with multiple sources and targets in the same pipeline, as well as streaming transformations.

- Real-Time Data Movement: For real-time data capture, Flow uses Change Data Capture (CDC), which captures change events from the source and moves them to centralized storage systems. This reduces the latency between data updates and their availability to downstream applications.

- Exactly-Once Processing: Flow is built on Gazette (similar to Kafka), which provides exactly-once processing semantics and guarantees de-duplication of real-time data.

- Pricing: Flow offers three pricing tiers—Free, Cloud, and Enterprise, which are suitable for small to large-scale enterprises.
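The general idea behind applying CDC events with de-duplication can be illustrated with a small sketch. It is not how Flow or Gazette are implemented; it simply assumes each change event carries a monotonically increasing sequence number (named `lsn` here, a hypothetical field) and that events arrive in order, possibly with redeliveries. The target keeps a checkpoint of the last applied sequence number and skips anything at or below it, so replaying an event has no additional effect.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int             # hypothetical sequence number, increasing per change
    op: str              # "insert", "update", or "delete"
    key: str             # primary key of the changed row
    row: Optional[dict]  # new row image; None for deletes


class Target:
    """Applies change events so that a redelivered event has no extra effect."""

    def __init__(self) -> None:
        self.rows: Dict[str, dict] = {}
        self.last_applied_lsn = 0  # checkpoint of the last applied event

    def apply(self, event: ChangeEvent) -> bool:
        # Anything at or below the checkpoint was already applied: skip it.
        if event.lsn <= self.last_applied_lsn:
            return False
        if event.op == "delete":
            self.rows.pop(event.key, None)
        else:
            # Inserts and updates both upsert the latest row image.
            self.rows[event.key] = dict(event.row)
        self.last_applied_lsn = event.lsn
        return True


events = [
    ChangeEvent(1, "insert", "42", {"id": "42", "status": "new"}),
    ChangeEvent(2, "update", "42", {"id": "42", "status": "shipped"}),
    ChangeEvent(2, "update", "42", {"id": "42", "status": "shipped"}),  # redelivered
    ChangeEvent(3, "insert", "43", {"id": "43", "status": "new"}),
    ChangeEvent(4, "delete", "43", None),
]
target = Target()
applied = [target.apply(e) for e in events]
print(applied)      # [True, True, False, True, True]
print(target.rows)  # {'42': {'id': '42', 'status': 'shipped'}}
```

In a real system, the checkpoint must be committed atomically with the row changes; otherwise a crash between the two writes can still produce duplicates or gaps.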

2. Matillion

Matillion is a cloud-native tool that supports both ELT and ETL processes and is specifically designed to work with cloud data warehouse platforms. It allows you to extract data, load it into a data warehouse, and transform it as needed for analysis. To integrate seamlessly with different systems, Matillion offers 150+ pre-built source and destination connectors. If you can't find a pre-built connector for your needs, you can build a custom connector using its REST API connector.

Some of the best features of Matillion include:

- Scalability and Performance: Matillion runs on cloud infrastructure designed to handle massive data workloads. It can securely process large data volumes and complex transformations, letting you scale data integration workflows as needed.

- Pricing: Matillion offers three pricing plans: Basic, Advanced, and Enterprise. You can also try it out with a free trial.

3. Airbyte

Airbyte is a robust data integration tool for data movement and transformation. As one of the leading ELT tools, Airbyte allows you to collect, process, and centralize data in the data warehouses, data lakes, or databases of your choice. It offers over 300 connectors to streamline data movement between a wide range of data storage systems, and you can also build custom connectors to support the applications you need.

While Airbyte focuses more on the extract and load steps, you can perform custom transformations with SQL and through its deep integration with dbt. Airbyte automatically generates a dbt project by setting up a dbt Docker instance. By combining Airbyte and dbt, you can build an end-to-end data pipeline that covers data ingestion, transformation, and modeling.

Some of the salient features of Airbyte include:

- Extensive Connectors: In addition to its pre-built connectors, Airbyte lets you quickly build custom connectors with its Connector Development Kit (CDK). The CDK also provides tools and libraries that handle common data integration tasks.

- Monitoring and Analysis: Airbyte allows you to track the status of data sync jobs, monitor the data pipeline, and view detailed logs. It also offers an alert mechanism that notifies you instantly in case of any failure during the data integration process.

- Real-Time and Batch Data Synchronization: Airbyte supports both batch and real-time data synchronization, so you can choose the synchronization mode that fits your needs.

- Pricing: Airbyte is completely free to use if you self-host the open-source version. Other than that, it has two plans—Cloud and Enterprise.

4. Blendo

Blendo is one of the most flexible, cloud-based data integration platforms that offer ELT capabilities. It allows you to seamlessly integrate with various data sources and destinations with its 45+ data connectors. Using Blendo, you can quickly replicate your databases, events, applications, and files into a destination of your choice, such as Redshift, BigQuery, Snowflake, or PostgreSQL.

Some of the best features of Blendo include:

- Fully Managed Data Pipeline: Blendo is designed to handle massive amounts of data and can scale horizontally as data grows. It offers managed data pipeline features, such as scheduling extraction and loading jobs and keeping you informed about your data syncs. This reduces the maintenance and manual intervention burden so that you can focus on data analysis.

- Automated Schema Migration: Blendo creates the necessary tables in your data warehouse by inferring your source schema. It detects changes in the schema or source data without requiring a pre-defined schema. This saves you the time and effort of manually mapping data fields.

- Custom Transformation: With Blendo, you can transform and enrich your data by applying custom transformations, mapping, or aggregations to the data using SQL or custom Python code.

- Pricing: Blendo offers a full-featured 14-day free trial. After that, you can choose from its Starter, Grow, and Scale plans, depending on the number of pipelines and your usage.
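Schema inference of the kind described above can be sketched in a few lines. The code below is a hypothetical illustration, not Blendo's actual logic: it infers a column type from each value, widens conflicting types to `TEXT`, and emits either a `CREATE TABLE` statement for a new table or `ALTER TABLE <table> ADD COLUMN` statements for newly seen fields. The type names are generic SQL and may need adjusting for a specific warehouse.

```python
def infer_type(value):
    """Map a Python value to a SQL column type (a deliberately small mapping)."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "BOOLEAN"
    if isinstance(value, int):
        return "BIGINT"
    if isinstance(value, float):
        return "DOUBLE"
    return "TEXT"


def infer_schema(records):
    """Infer {column: type} from records, widening conflicting types to TEXT."""
    schema = {}
    for record in records:
        for column, value in record.items():
            if value is None:  # nulls carry no type information
                continue
            col_type = infer_type(value)
            if schema.get(column, col_type) != col_type:
                col_type = "TEXT"
            schema[column] = col_type
    return schema


def migration_sql(table, existing, inferred):
    """CREATE TABLE for a new table, or ADD COLUMN for fields not seen before."""
    if not existing:
        cols = ", ".join(f"{c} {t}" for c, t in inferred.items())
        return [f"CREATE TABLE {table} ({cols})"]
    return [
        f"ALTER TABLE {table} ADD COLUMN {c} {t}"
        for c, t in inferred.items()
        if c not in existing
    ]


batch1 = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}]
schema1 = infer_schema(batch1)
print(migration_sql("users", {}, schema1))
# ['CREATE TABLE users (id BIGINT, name TEXT)']

batch2 = [{"id": 3, "name": "Sam", "score": 9.5}]
print(migration_sql("users", schema1, infer_schema(batch2)))
# ['ALTER TABLE users ADD COLUMN score DOUBLE']
```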

5. Fivetran

Fivetran is a cloud-based, fully automated ELT tool used for extracting, loading, and transforming data. Apart from data integration and transformation, it also supports data replication. It rapidly detects all of your data changes and replicates them to your destination, ensuring that the replicated data is always up to date.

With Fivetran’s 300+ easy-to-integrate, pre-built source and destination connectors, you can seamlessly connect with different systems. When Fivetran does not have a pre-built connector for your source, you can build a custom one with a Function connector. A Function connector requires you to write a cloud function that extracts data from your source; Fivetran then takes care of loading and processing the data into your destination.
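A Function connector's cloud function is typically a small handler that reads a saved cursor from the request state, fetches new records, and returns them with an updated cursor. The sketch below follows the request/response shape we understand Fivetran's function connector documentation to describe (`state` and `secrets` in the request; `state`, `insert`, `schema`, and `hasMore` in the response); verify the exact field names against Fivetran's current docs. The `ORDERS` data, the `fetch_orders_since` helper, and the page size are hypothetical stand-ins for your real source.

```python
# Hypothetical in-memory source standing in for the API you would call.
ORDERS = [
    {"order_id": 1, "amount": 30.0, "updated_at": "2024-05-01T10:00:00Z"},
    {"order_id": 2, "amount": 12.5, "updated_at": "2024-05-02T09:30:00Z"},
    {"order_id": 3, "amount": 99.0, "updated_at": "2024-05-03T14:15:00Z"},
]
PAGE_SIZE = 2  # hypothetical page size


def fetch_orders_since(cursor, limit):
    """Hypothetical source call: orders updated after `cursor`, oldest first."""
    # ISO-8601 timestamps in one fixed format compare correctly as strings.
    newer = [o for o in ORDERS if cursor is None or o["updated_at"] > cursor]
    newer.sort(key=lambda o: o["updated_at"])
    return newer[:limit], len(newer) > limit


def handler(request: dict) -> dict:
    """Function body: read the saved cursor, return new rows and the next cursor."""
    state = request.get("state") or {}
    cursor = state.get("cursor")
    rows, has_more = fetch_orders_since(cursor, PAGE_SIZE)
    if rows:
        cursor = rows[-1]["updated_at"]
    return {
        "state": {"cursor": cursor},
        "insert": {"orders": rows},
        "schema": {"orders": {"primary_key": ["order_id"]}},
        "hasMore": has_more,
    }


first = handler({"state": {}, "secrets": {}})
second = handler({"state": first["state"], "secrets": {}})
```

As we understand it, Fivetran keeps calling the function while `hasMore` is true, passing back the returned `state`, so each call picks up where the previous one stopped.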

Some of the top features of Fivetran include:

- Automation: One of the key features of Fivetran is that it automates different processes such as transformation, normalization, de-duplication, governance, and more.

- Private Deployment: Fivetran gives you flexible options to deploy and manage Fivetran connectors either on-premises or in your private cloud environment. This gives you more security and control over the location and environment where your data integration process runs.

- Pricing: Fivetran follows a usage-based pricing model, so you pay only for monthly active rows rather than total rows. It offers five pricing plans: Free, Starter, Standard, Enterprise, and Business Critical. These plans suit everyone from startups to enterprises that need the highest level of data protection and compliance.
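To see why monthly active rows differ from total rows, here is a simplified model: a row counts once per month when it is inserted, updated, or deleted, no matter how many times it changes. The change log below is hypothetical, and Fivetran's actual billing rules (for example, how rows are counted per connection and table) are defined in its pricing documentation; treat this only as an illustration of the counting idea.

```python
from collections import defaultdict

# Hypothetical change log: one (month, table, primary_key) entry per
# insert, update, or delete that the connector synced.
changes = [
    ("2024-06", "orders", 1),
    ("2024-06", "orders", 1),
    ("2024-06", "orders", 1),
    ("2024-06", "orders", 2),
    ("2024-06", "users", 1),
    ("2024-07", "orders", 1),
]


def monthly_active_rows(changes):
    """Count distinct (table, primary key) pairs that changed in each month."""
    active = defaultdict(set)
    for month, table, key in changes:
        active[month].add((table, key))
    return {month: len(keys) for month, keys in sorted(active.items())}


print(monthly_active_rows(changes))  # {'2024-06': 3, '2024-07': 1}
```

Here, six change events produce four monthly active rows in total: order 1 changes three times in June but is counted once for that month.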

6. Informatica

Informatica is a data integration tool that supports both ELT and ETL processes. It enables you to extract data from diverse source systems, load it into target repositories, and transform it as needed.

Data transformations in Informatica are executed within the Informatica PowerCenter, a powerful tool used for data integration operations. You can create complex data transformation processes by designing mappings and workflows within PowerCenter. These mappings contain transformation logic that defines how the data should be modified, cleansed, enriched, or manipulated during the ELT process.

Besides data integration, Informatica also supports data orchestration, monitoring, and error handling, and it offers a range of tools for ensuring data quality and compliance.

Here are some of Informatica’s features:

- Snowflake Data Cloud Connector: Informatica provides over 100 pre-built cloud connectors. Among these, the Snowflake Data Cloud connector is a popular, cost-saving option. This connector is enhanced with AI capabilities, allowing you to create robust data integration mappings. You can seamlessly connect various on-premises and cloud data sources to Snowflake’s data warehouse. If you’re in search of ETL or ELT tools for Snowflake, Informatica is a proven choice.

- Pricing: Informatica provides a 30-day free trial service. To inquire about pricing details and available plans, you can get in touch with their sales team.

7. MuleSoft

MuleSoft’s Anypoint Platform is a comprehensive solution for data integration. It allows you to extract, load, and transform data with a set of 100 pre-built connectors covering databases, cloud services, applications, and more.

Key features of Anypoint Platform:

- Data Transformation: To transform data effectively, you’ll need to use DataWeave, a functional programming language created for data transformation. It’s Mule’s primary language for configuring components and connectors.

- Real-time and Batch Processing: Anypoint Platform caters to both real-time and batch processing requirements. This makes it suitable for multiple data integration scenarios.

8. Talend

Acquired by Qlik, Talend is a versatile data integration solution that allows you to connect and manage data efficiently. It supports a range of data integration processes, including extraction, transformation, and loading. This makes it a flexible solution for diverse data workflows. With its extensive library of connectors, you can simplify data extraction from various sources, like databases, cloud applications, and data warehouses.

Here are the notable features of Talend:

- Data Transformation: Talend’s intuitive visual interface allows you to define data transformations. You can apply business logic and quality rules to data as it moves through the integration process, ensuring data consistency and accuracy.

- Pipeline Monitoring: Talend provides a monitoring feature to track the execution of data jobs. You can view logs, troubleshoot issues, and set up alerts for job failures. It also offers real-time visibility into data integration processes.

- Pricing: Talend offers a range of pricing plans tailored to various data integration and management needs. These include subscription-based pricing models—Stitch, Data Management Platform, Data Fabric, and Big Data Platform.
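Talend defines these rules in its visual interface, but the underlying idea of checking each record against named rules and routing failures aside can be sketched in plain Python. The rule names, the email pattern, and the checks below are hypothetical, and this is not Talend-generated code.

```python
import re
from typing import Callable, List, Tuple

Rule = Tuple[str, Callable[[dict], bool]]

# Hypothetical business and quality rules; each returns True when a record passes.
RULES: List[Rule] = [
    ("email_format",
     lambda r: re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", r.get("email") or "") is not None),
    ("amount_positive",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] > 0),
    ("country_code",
     lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2),
]


def apply_rules(records, rules=RULES):
    """Split records into valid rows and rejects tagged with the rules they failed."""
    valid, rejects = [], []
    for record in records:
        failed = [name for name, check in rules if not check(record)]
        if failed:
            rejects.append({**record, "_failed_rules": failed})
        else:
            valid.append(record)
    return valid, rejects


records = [
    {"email": "a@x.io", "amount": 10, "country": "US"},
    {"email": "bad", "amount": -1, "country": "USA"},
]
valid, rejects = apply_rules(records)
```

Keeping rejects with the names of the rules they failed, rather than silently dropping them, makes it easier to troubleshoot data quality issues later.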

9. Qlik

Qlik Compose is a data integration and transformation platform offered by Qlik, a popular business intelligence and analytics solutions provider. Qlik Compose allows you to efficiently handle data ingestion, synchronization, distribution, and consolidation. With a wide range of connectors, it empowers you to connect with diverse data sources, ensuring a streamlined ELT process.

Qlik is well-known for its following features:

- Flexible Deployment: Qlik provides flexible deployment options, allowing you to choose between on-premises and cloud solutions based on your needs.

- Qlik Replicate: You can seamlessly integrate Qlik Compose with Qlik Replicate, a data ingestion and replication solution. This integration automates data extraction from diverse source systems, eliminating the need for manual coding.

- Pricing: Qlik offers two data integration pricing models, each with its own features. The Qlik Data Integration model gives you control over your data integration processes and automates data streaming, transformation, and cataloging. Qlik Cloud Data Integration, on the other hand, is an iPaaS (integration platform as a service) solution that also provides real-time data movement, transformation, and unification.

10. Azure Data Factory

Azure Data Factory is a cloud-based data integration service offered by Microsoft. It allows you to design and execute both ETL and ELT workflows based on your specific integration requirements. You can create data pipelines that include extraction, transformation, and loading tasks. It also lets you choose whether to perform transformations in Azure Data Factory or within the destination data warehouse.

Here are some of the amazing features included in Azure Data Factory:

- Wide Range of Connectors: With its user-friendly interface and 90+ built-in connectors, you can connect with a variety of data sources and destinations, including Azure services, SaaS applications, relational databases, and cloud-based data warehouses.

- Integration with Azure Services: Azure Data Factory seamlessly integrates with other Azure services, allowing you to leverage services like Azure Synapse Analytics, Azure Data Lake Storage, Azure SQL Data Warehouse, and Azure HDInsight.

- Data Orchestration: It offers a workflow-based approach to creating, scheduling, and managing data pipelines. This simplifies the coordination of data movement processes and makes your data integration workflows more reliable.

- Pricing: Azure Data Factory uses pay-as-you-go pricing, so you pay only for the resources you use, such as pipeline orchestration and data processing.
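Workflow-based orchestration comes down to running each activity only after the activities it depends on have finished. The sketch below models that with Python's standard `graphlib.TopologicalSorter` (Python 3.9+). The activity names are hypothetical, and this is not the Azure Data Factory API. Unlike ADF, which can run independent activities in parallel, this version runs them one at a time.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each activity maps to the set of activities
# that must finish before it can start.
PIPELINE = {
    "copy_sales": set(),
    "copy_customers": set(),
    "transform_sales": {"copy_sales", "copy_customers"},
    "publish_report": {"transform_sales"},
}


def run_pipeline(pipeline, run_activity):
    """Run activities in dependency order and return the order they ran in.

    If run_activity raises, the exception propagates and the run stops,
    so no activity that depends on the failed one is started.
    """
    sorter = TopologicalSorter(pipeline)
    sorter.prepare()  # also raises CycleError if the dependencies form a loop
    completed = []
    while sorter.is_active():
        for activity in sorter.get_ready():
            run_activity(activity)
            completed.append(activity)
            sorter.done(activity)
    return completed


order = run_pipeline(PIPELINE, lambda name: print(f"running {name}"))
```

The two copy activities have no dependencies on each other, so either may run first; `transform_sales` always waits for both, and `publish_report` always runs last.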

Conclusion

ELT tools are designed to handle various data types, which makes them versatile across data integration and processing scenarios. Whether your data is structured, semi-structured, or streaming, ELT tools provide the capabilities to extract, load, and transform it into centralized storage for further analysis. That said, you should evaluate each tool against your use case and select the best fit for your organization.

Key Takeaways:

- ELT streamlines data integration by loading raw data first, then transforming it.

- Top ELT tools offer scalability, automation, and extensive connector libraries.

- Consider factors like data volume, real-time capabilities, and pricing when choosing a tool.

- Estuary Flow, Matillion, Blendo, and Fivetran are among the best ELT tools in the market in 2024.

Are you looking for a fully automated, seamless data integration tool to analyze your data quickly? Get started with Estuary’s free trial today.

About the author

With over 15 years in data engineering, the author is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights that help companies scale efficiently and effectively in an evolving data landscape.
