Data migration is an integral part of data analytics. It lets you harness the stronger features of other systems, access improved resources, boost efficiency, or reduce costs.

DynamoDB is a serverless and highly scalable NoSQL database. However, it has certain drawbacks, like limited querying options and the absence of table joins. For these reasons, you may consider migrating data from DynamoDB to a platform better suited for your needs.

MongoDB is a strong candidate here. It is a developer data platform that offers a rich query language, fast performance, simple installation, and cost-effectiveness, which makes it well suited to building and running applications.

In this tutorial, we’ll show you two ways to reliably migrate your data from DynamoDB to MongoDB to streamline your workflow.

Dive straight into the implementation methods if you're already DynamoDB and MongoDB ninjas. If you're new to the scene, a quick rundown awaits!

Explore our comprehensive guide for the reverse migration from MongoDB to DynamoDB.

DynamoDB Overview

DynamoDB is a NoSQL database service offered by AWS that can be used to develop modern applications of any scale. It is a serverless database with a simple API for interaction, eliminating the need for database management. With this, you can store and retrieve data from the database tables that you have created. You can utilize DynamoDB Auto Scaling to adjust the scale of your database without affecting its performance. To maintain performance while controlling costs, you can track the performance metrics using the AWS Management Console. These metrics are essential for monitoring and optimizing the performance of the database.

DynamoDB can create full backups of your tables, which you can retain for a long time. It also enables point-in-time recovery of your databases to protect them from accidental write or delete operations. With regard to security, DynamoDB protects sensitive data through its encryption feature.

Some key features of DynamoDB are:

- Flexibility: DynamoDB's schema is flexible because it supports both key-value and document data models. Items in the same table can carry different attributes; only the primary key attributes are required.

- Serverless Management: DynamoDB is a fully managed AWS service. You don’t have to perform administration tasks like provisioning, patching, scaling, etc. This results in a cost-effective model where you pay only for the data storage and read and write throughput you use.

- Fast Performance: DynamoDB delivers single-digit millisecond performance even at a massive scale. It is backed by an availability SLA, so your data stays accessible. It also scales automatically with your application's traffic volume without compromising performance.

What Is MongoDB?

MongoDB is a source-available, NoSQL document database that stores data in BSON, a binary encoding of JSON-like documents. It is a fast-performing database capable of storing a high volume of data. The MongoDB architecture organizes data into documents and collections. A document consists of key-value pairs, and a set of documents is called a collection, which is analogous to a table in a relational database.

Because documents are stored as Binary JSON (BSON), they can accommodate a variety of data types. Each document has a primary key, the `_id` field, as its unique identifier. You can change the structure of a document by adding, deleting, or modifying fields. To query, update, and conduct other operations on your data, you can use the MongoDB Shell (mongosh), an interactive JavaScript interface to the database. MongoDB also provides drivers and APIs, allowing operations beyond the shell.

Key features of MongoDB are:

- Data Model: It simplifies data modeling by storing information in JSON-like format. This removes the need for rigid table structures and allows you to group related information.

- Scalability: MongoDB enables sharding, i.e. horizontal scaling, by distributing data across multiple servers. This increases MongoDB's storage capacity and processing speed. It also enables data replication, allowing you to access it even during server outages.

- Performance: It enables indexing of frequently accessed data, improving query performance and providing faster data retrieval. Also, its in-memory storage caches the frequently accessed data, further enhancing retrieval.

How to Migrate From DynamoDB to MongoDB

Here are two methods that can help you easily migrate DynamoDB to MongoDB:

- The Automated Way: Using Estuary Flow for DynamoDB to MongoDB Integration

- The Manual Approach: Using Custom Scripts for DynamoDB to MongoDB Migration

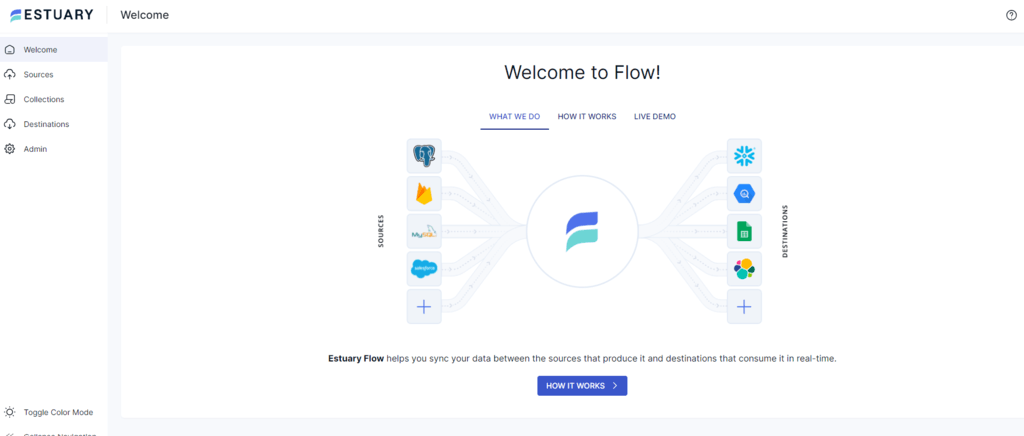

The Automated Way: Using Estuary Flow for DynamoDB to MongoDB Integration

Estuary Flow is a real-time ETL (extract, transform, load) tool that helps you build data pipelines to integrate different sources and destinations. To migrate from DynamoDB to MongoDB using a no-code SaaS tool like Flow, follow the steps below.

Connectors used for this integration: DynamoDB & MongoDB

Prerequisites for Connecting DynamoDB to MongoDB

Step 1: Configure DynamoDB as a Source

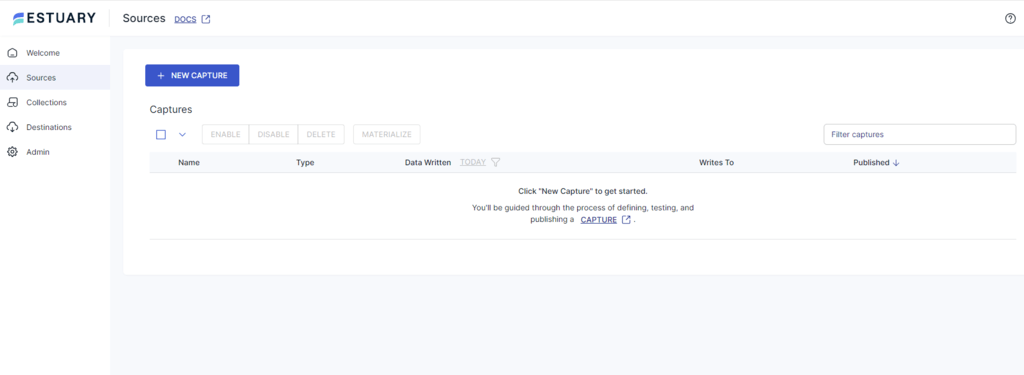

- Sign in to your Estuary account and select Sources from the main dashboard.

- Click on the + NEW CAPTURE button on the Sources page.

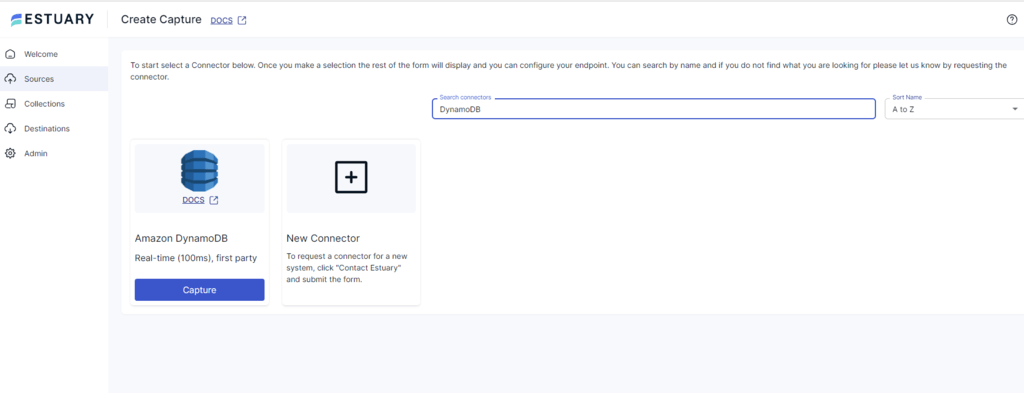

- In the Search connectors box, type DynamoDB. You will see the DynamoDB connector in the search results; click on its Capture button.

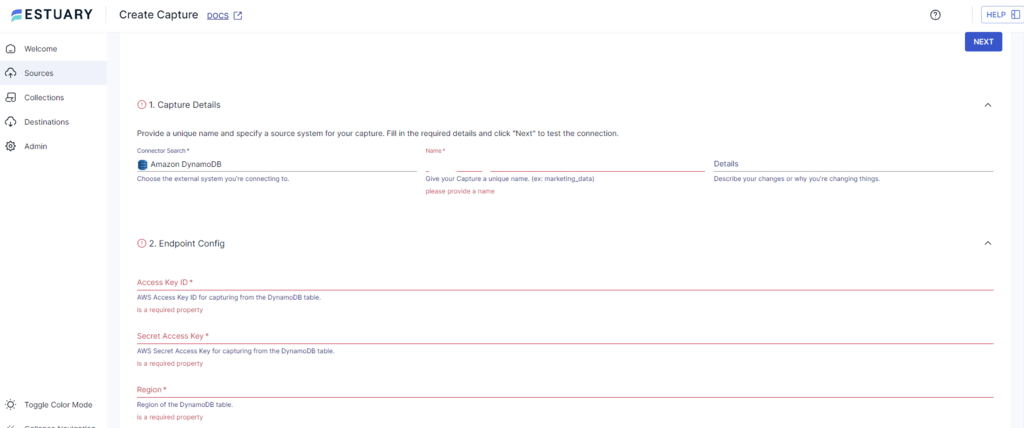

- On the DynamoDB Create Capture page, enter a Name for your capture, Access Key ID, Secret Access Key, and Region. Then, click on NEXT > SAVE AND PUBLISH.

The connector uses DynamoDB Streams to continuously capture updates from DynamoDB tables and convert them into Flow collections.
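To make the change-capture idea more concrete, here is a minimal, illustrative sketch of how stream records could be folded into a keyed collection. It is not Estuary's implementation. The record shape (`eventName`, `Keys`, `NewImage`) follows the DynamoDB Streams record format, and the sketch assumes the stream is configured to include new images. The function names and sample records are hypothetical.

```python
from decimal import Decimal


def from_dynamodb_json(value):
    """Convert one typed DynamoDB attribute value, e.g. {"S": "abc"}, to plain Python."""
    (type_tag, raw), = value.items()
    if type_tag == "S":
        return raw
    if type_tag == "N":
        return Decimal(raw)  # numbers are transmitted as strings
    if type_tag == "BOOL":
        return raw
    if type_tag == "NULL":
        return None
    if type_tag == "L":
        return [from_dynamodb_json(v) for v in raw]
    if type_tag == "M":
        return {k: from_dynamodb_json(v) for k, v in raw.items()}
    # Binary and set types (B, SS, NS, BS) are left out of this sketch
    raise ValueError(f"Unsupported attribute type: {type_tag}")


def apply_stream_records(records, collection):
    """Apply INSERT / MODIFY / REMOVE events to a dict keyed by primary key."""
    for record in records:
        change = record["dynamodb"]
        # Sorted (name, value) pairs of the key attributes form a hashable identifier
        key = tuple(sorted((k, from_dynamodb_json(v)) for k, v in change["Keys"].items()))
        if record["eventName"] == "REMOVE":
            collection.pop(key, None)
        else:
            # Assumption: INSERT and MODIFY records carry the full new item image
            collection[key] = from_dynamodb_json({"M": change["NewImage"]})
    return collection


# Hypothetical sample records
records = [
    {"eventName": "INSERT", "dynamodb": {"Keys": {"id": {"S": "u1"}},
     "NewImage": {"id": {"S": "u1"}, "age": {"N": "30"}}}},
    {"eventName": "MODIFY", "dynamodb": {"Keys": {"id": {"S": "u1"}},
     "NewImage": {"id": {"S": "u1"}, "age": {"N": "31"}}}},
    {"eventName": "INSERT", "dynamodb": {"Keys": {"id": {"S": "u2"}},
     "NewImage": {"id": {"S": "u2"}, "age": {"N": "25"}}}},
    {"eventName": "REMOVE", "dynamodb": {"Keys": {"id": {"S": "u2"}}}},
]
state = apply_stream_records(records, {})
```

After the four events are applied, `state` holds only the latest version of item `u1`, which is the behavior you would expect from a pipeline that replays changes rather than re-reading the whole table.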

Step 2: Configure MongoDB as a Destination

- Following a successful capture, a pop-up window appears with the capture details. To proceed with setting up the destination end of the pipeline, click on the MATERIALIZE COLLECTIONS button.

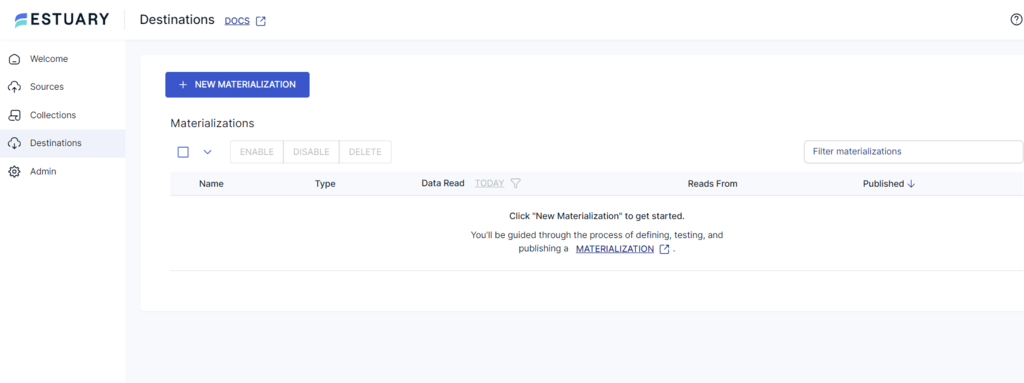

Alternatively, you can navigate to the main dashboard and select Destinations in the left-side panel. Then, click on + NEW MATERIALIZATION on the Destinations page.

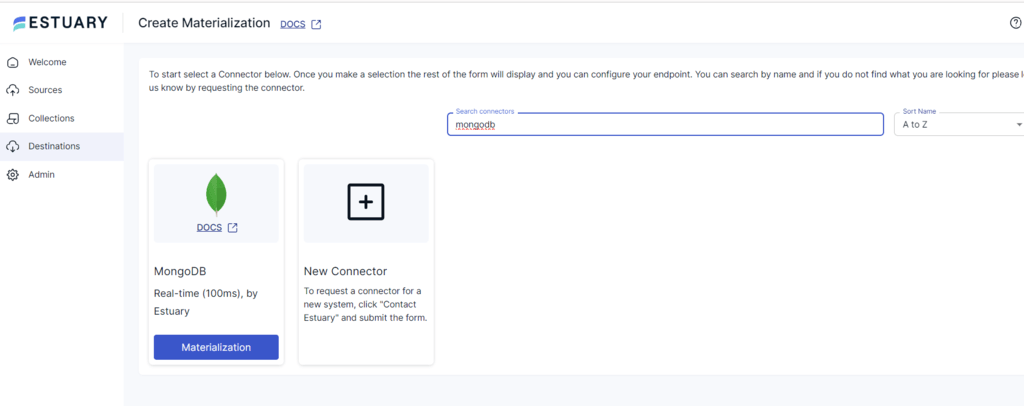

- Type MongoDB in the Search connectors box and click on its Materialization button.

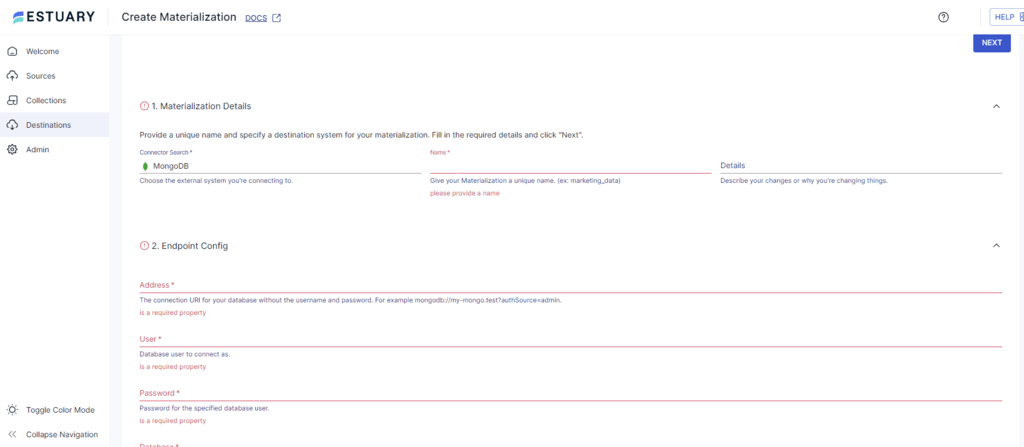

- On the Create Materialization page, enter the Address, User, Password, and Database, among other mandatory details. Then, click NEXT > SAVE AND PUBLISH.

The connector will then materialize Flow collections to MongoDB collections.

Key Features of Estuary Flow

Estuary Flow is one of the prominent SaaS tools for building ETL data pipelines. Some of its key features are:

- Powerful Transformations: Estuary Flow processes data using micro-transactions, which helps maintain data integrity even if the system crashes.

- Stream Processing: Flow offers streaming SQL, enabling you to transform your data on the data stream. You do not need to store and process entire datasets, which helps reduce processing latency.

- Cost-Effective: The platform is based on a pay-as-you-go model. It allows you to process your data at a reasonable cost, irrespective of its volume. This is contrary to traditional ETL tools that are mostly based on fixed licensing.

- Change Data Capture: Estuary supports CDC. Instead of capturing the entire dataset, it only captures the changes that were made in the database since the last update. This improves efficiency by minimizing processing time.

- Materialized Views: These are pre-computed and stored forms of query results. You can specify the destination of your data pipelines in the form of materialized views. This helps improve query performance.

The Manual Approach: Using Custom Scripts for DynamoDB to MongoDB Migration

This method uses a Python script to extract data from DynamoDB and load it into MongoDB. You can run the script as an AWS Lambda function.

Step 1: Setting Up Connections

Make sure you have the DynamoDB table that holds your data and a MongoDB collection to receive the migrated data. Then import boto3 for DynamoDB and PyMongo for MongoDB to establish connections with both databases.

```python
import boto3
from pymongo import MongoClient
import json

# Setting up DynamoDB and MongoDB connections
dynamodb = boto3.resource('dynamodb', region_name='your_dynamodb_region')
dynamodb_table = dynamodb.Table('your_dynamodb_table')

mongo_client = MongoClient('your_mongodb_connection_string')
mongo_db = mongo_client['your_mongodb_database']
mongo_collection = mongo_db['your_mongodb_collection']
```

Step 2: Extracting Data from DynamoDB

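In this step, you extract the data from DynamoDB. The code below defines a reusable extraction function and a handler that AWS Lambda can invoke:

```python
from decimal import Decimal

def extract_dynamodb_data(table_name, json_file_name):
    # Uses the `dynamodb` resource and `json` import from Step 1
    table = dynamodb.Table(table_name)
    response = table.scan()
    items = response['Items']  # Extract items from the response
    # A scan returns at most 1 MB per call, so keep paging until done
    while 'LastEvaluatedKey' in response:
        response = table.scan(ExclusiveStartKey=response['LastEvaluatedKey'])
        items.extend(response['Items'])
    with open(json_file_name, 'w') as json_file:
        # boto3 returns numbers as Decimal; convert them so json can write them
        json.dump(items, json_file, indent=4, default=float)

def lambda_handler(event, context):
    # Lambda entry point; /tmp is the writable directory inside Lambda
    extract_dynamodb_data('YourTableName', '/tmp/output.json')

# Example usage:
table_name = 'YourTableName'  # Replace with your DynamoDB table name
json_file_name = 'output.json'  # Specify the name of the output JSON file
extract_dynamodb_data(table_name, json_file_name)
```

Ensure the Lambda function has appropriate permissions to read from DynamoDB and write to MongoDB. The function is triggered by an event, such as an API Gateway request. It then scans the specified DynamoDB table and writes the retrieved items to a JSON file, whose contents are then inserted into the MongoDB collection.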
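One detail worth handling when writing scanned items to JSON: the boto3 resource interface returns DynamoDB numbers as `Decimal` objects and set attributes as Python sets, and the standard `json` module cannot encode either. The following is a minimal sketch of a `default` hook that handles both cases. The function name `to_json_safe` and the sample item are hypothetical, and binary attributes are not covered.

```python
import json
from decimal import Decimal


def to_json_safe(value):
    """json.dumps `default` hook for types that boto3 returns but json cannot encode."""
    if isinstance(value, Decimal):
        # Keep whole numbers as int so 30 does not become 30.0
        return int(value) if value == value.to_integral_value() else float(value)
    if isinstance(value, (set, frozenset)):
        return sorted(value)  # sets become sorted lists
    raise TypeError(f"Cannot serialize {type(value).__name__}")


# Hypothetical item shaped like a boto3 scan result
item = {"id": "u1", "age": Decimal("30"), "score": Decimal("4.5"), "tags": {"b", "a"}}
encoded = json.dumps(item, default=to_json_safe, sort_keys=True)
print(encoded)
```

Converting fractional values to `float` can lose precision for very large or very precise numbers, so you may prefer to store such values as strings if exactness matters.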

Step 3: Loading Data to MongoDB

You can load the extracted DynamoDB data from the JSON file into MongoDB using the PyMongo library.

```python
import json
from pymongo import MongoClient

def load_data_to_mongodb(json_file_name, mongodb_uri, database_name, collection_name):
    client = MongoClient(mongodb_uri)
    # Access the specified database
    db = client[database_name]
    # Access the specified collection
    collection = db[collection_name]
    # Open JSON file and load data
    with open(json_file_name, 'r') as json_file:
        data = json.load(json_file)
    # Insert data into MongoDB collection (insert_many rejects an empty list)
    if data:
        collection.insert_many(data)
    print("Data loaded into MongoDB successfully!")

json_file_name = 'output.json'
mongodb_uri = 'mongodb://localhost:27017/'
database_name = 'your_database_name'
collection_name = 'your_collection_name'
load_data_to_mongodb(json_file_name, mongodb_uri, database_name, collection_name)
```

This completes the method using a custom script for DynamoDB to MongoDB migration.
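For larger tables, one optional refinement to the loading step is to insert documents in batches and to derive each document's `_id` from the DynamoDB primary key, so that re-running the load cannot silently create duplicate documents. The sketch below prepares and batches documents in plain Python; the writer is passed in as a callable (in a real run this could be `collection.insert_many`). All function names, the key attribute names, and the sample data are hypothetical.

```python
def with_mongo_id(item, key_attributes):
    """Return a copy of the item with `_id` built from its DynamoDB key attributes."""
    doc = dict(item)
    if len(key_attributes) == 1:
        doc["_id"] = item[key_attributes[0]]
    else:
        # A composite key (partition key + sort key) becomes an embedded document
        doc["_id"] = {name: item[name] for name in key_attributes}
    return doc


def batches(documents, size):
    """Yield successive lists of at most `size` documents."""
    for start in range(0, len(documents), size):
        yield documents[start:start + size]


def load_in_batches(items, key_attributes, insert_batch, size=1000):
    """Prepare documents and hand them to `insert_batch` one batch at a time."""
    documents = [with_mongo_id(item, key_attributes) for item in items]
    loaded = 0
    for batch in batches(documents, size):
        insert_batch(batch)
        loaded += len(batch)
    return loaded


# Stand-in for collection.insert_many so the sketch runs without a database
received = []
items = [{"user_id": f"u{n}", "order_id": n, "total": n * 10} for n in range(5)]
count = load_in_batches(items, ["user_id", "order_id"], received.append, size=2)
print(count, [len(batch) for batch in received])
```

With `_id` set explicitly, a second plain insert of the same item fails with a duplicate key error instead of adding a copy. If you want re-runs to overwrite existing documents instead, an upsert-style write would be the more suitable choice.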

Limitations of Using Custom Scripts to Migrate Data from DynamoDB to MongoDB

Using a custom script for DynamoDB to MongoDB migration has some limitations:

- Error-Prone: A custom migration script is susceptible to bugs, and any error in the script can lead to data loss or corruption. Additionally, the debugging process can be time-consuming.

- Security Loopholes: Security issues may arise while transferring sensitive data from one database to another using a custom script. These problems include unauthorized access, the use of outdated libraries, or a lack of expertise. The migrated data may be exposed to potential threats that can compromise data integrity.

- Skilled Developers: An organization has to maintain skilled personnel to write, maintain, and debug custom migration scripts. This can be expensive, and you must also continuously invest in training and development to manage evolving database features and security practices.
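One way to reduce the risk of silent data loss with a custom script is to compare the source and target after the migration. The following is a minimal sketch that works on lists of dictionaries you have already fetched from both databases. The function names and sample data are hypothetical, and the comparison assumes both sides have been normalized to the same Python types (for example, numbers converted from `Decimal` consistently).

```python
import hashlib
import json


def fingerprint(document, ignore=("_id",)):
    """Stable hash of a document's content, skipping the fields listed in `ignore`."""
    content = {k: v for k, v in document.items() if k not in ignore}
    canonical = json.dumps(content, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_datasets(source_items, target_docs, key):
    """Report keys missing from the target, unexpected extras, and content mismatches."""
    source = {item[key]: fingerprint(item) for item in source_items}
    target = {doc[key]: fingerprint(doc) for doc in target_docs}
    shared = source.keys() & target.keys()
    return {
        "missing": sorted(source.keys() - target.keys()),
        "extra": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Hypothetical data: "b" was altered, "c" was lost, "d" appeared unexpectedly
source_items = [{"id": "a", "qty": 1}, {"id": "b", "qty": 2}, {"id": "c", "qty": 3}]
target_docs = [
    {"_id": 1, "id": "a", "qty": 1},
    {"_id": 2, "id": "b", "qty": 5},
    {"_id": 3, "id": "d", "qty": 4},
]
report = compare_datasets(source_items, target_docs, key="id")
print(report)
```

For very large tables, holding every fingerprint in memory may not be practical, so comparing counts plus a sample of documents could be a lighter alternative.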

In Summary

Data migration is essential to streamlining any organization's workflow. Here, you’ve explored two methods to migrate your data from DynamoDB to MongoDB. One way to go about this is to use a custom script. However, it can be a cumbersome and error-prone task.

Powerful, real-time ETL platforms like Estuary Flow can come in handy when you’re looking for an automated, cost-effective, and scalable solution. You can use it to transfer your data from DynamoDB to MongoDB in a few minutes without extensive technical expertise.

With impressive features, such as automation, an easy-to-use interface, readily available connectors, and scalability, it’s one of the most efficient tools for setting up complex data pipelines. Sign up for Estuary Flow today to leverage our fully-managed platform to simplify your workflows.


