If you’ve found your way to this page, you’re probably looking to migrate data from DynamoDB to BigQuery. By combining BigQuery’s analytical strength and warehousing capabilities, along with DynamoDB’s operational efficiency, you can maximize the potential of your data. In return, this helps you to achieve valuable insights and gain a deeper understanding of your products or customer journey. What’s not to love about this integration?

To get things going for you, we’ll explore the two approaches to streamline your DynamoDB to BigQuery data pipeline and cover a few use cases. But, before we start with the replication process, let’s introduce the platforms in question.

DynamoDB Overview

Developed by AWS, DynamoDB is a fully-managed NoSQL database service. It offers a serverless and scalable solution to handle various analytics workloads. With its NoSQL data model, DynamoDB accommodates both structured and semi-structured data for analysis. Its schema design flexibility allows you to store and retrieve data using key-value and document-oriented models.

In addition, DynamoDb offers active-active replication with global tables, allowing you to create globally distributed databases that operate concurrently. With this feature, you gain the flexibility to read and write from any replica, facilitating your globally distributed applications to access data locally within selected regions. This ensures data consistency and real-time access across multiple regions.

BigQuery Overview

BigQuery, developed by Google Cloud, is a serverless data warehousing and analytics solution. It allows you to store, manage, and analyze massive volumes of data efficiently. For superior performance, BigQuery supports parallel processing and columnar storage.

Beyond its powerful querying capabilities, BigQuery distinguishes itself with its real-time data streaming features. You can effortlessly process streaming data, enabling you to achieve immediate insights from changing data sources. This is particularly suitable for applications seeking up-to-date information for decision-making.

For in-depth analysis, BigQuery also supports machine learning functionalities via BigQuery ML. This helps you to develop and deploy ML models within the platform.

2 Ways to Replicate Data From DynamoDB to BigQuery

- The Automated Method: Replicate data from DynamoDB to BigQuery using Estuary Flow

- The Manual Method: Connect DynamoDB to BigQuery using CSV files

The Automated Method: Replicate Data From DynamoDB to BigQuery Using Estuary Flow

Estuary Flow is a real-time ETL (extract, transform, load) and data integration platform that allows you to efficiently complete your data integration tasks. With support for 100+ connectors, it provides an extensive framework for seamless data connectivity without writing extensive codes.

Estuary Flow is a suitable solution for:

Real-Time Analysis: Flow’s near real-time data synchronization and Change Data Capture technology helps you to capture change data. This ensures that your target warehouse is always updated and analysis is based on the most current information.

Codeless Approach: With a wide range of connectors, Estuary eliminates the need for complicated scripts. This allows you to effectively manage the migration process without needing advanced coding skills.

Scalability: Flow efficiently manages large datasets and heavy workloads, achieving data transfer speeds up to 7 GB/s CDC for datasets of any size.

Eliminates Data Disruption: Flow handles changes in data structures, such as adding or modifying columns, ensuring a smooth transition while maintaining data integrity. This feature minimizes the change in data disruption and the need for manual intervention.

Time-Saving: Its intuitive interface and pre-built connectors facilitate quick implementation and deployment. This reduces manual efforts required in the integration process and allows you to focus on more strategies.

Before proceeding with our step-by-step guide on connecting DynamoDB to BigQuery using Estuary Flow, it is important to ensure that you have everything you need to set up the pipeline.

Prerequisites:

Refer to the documentation below to see what prerequisites need to be fulfilled:

Step 1: Login / Register

Step 2: Connect and Configure DynamoDB as Source

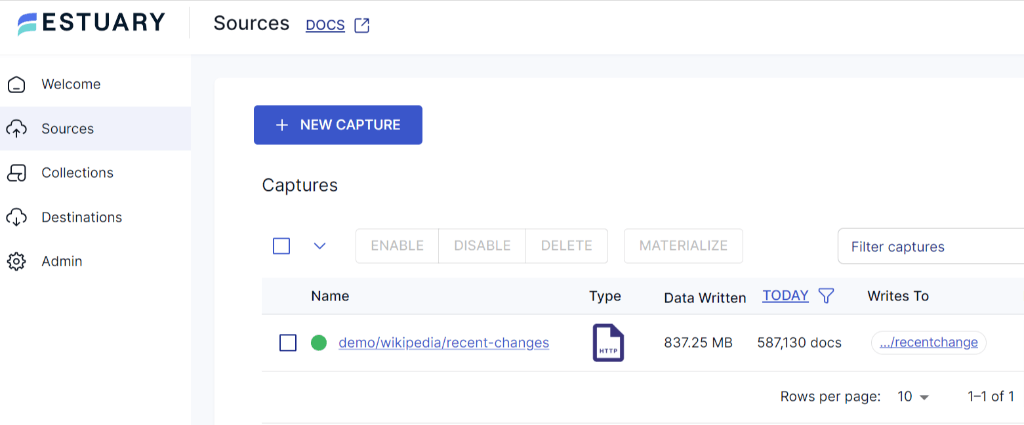

- After logging in, you’ll land on Estuary’s dashboard. Go to the Sources tab.

- Click on the + NEW CAPTURE button on the Sources Page.

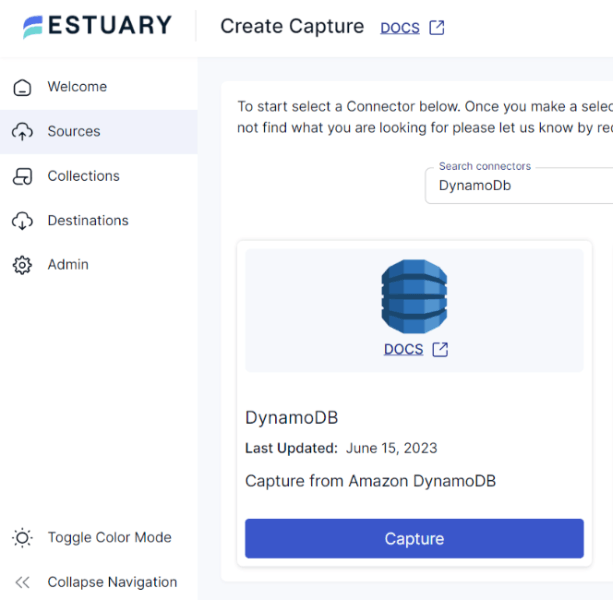

- You’ll be directed to the Create Capture page. Search for DynamoDB in the Search connectors box. Once you find it, click on the Capture button of the connector.

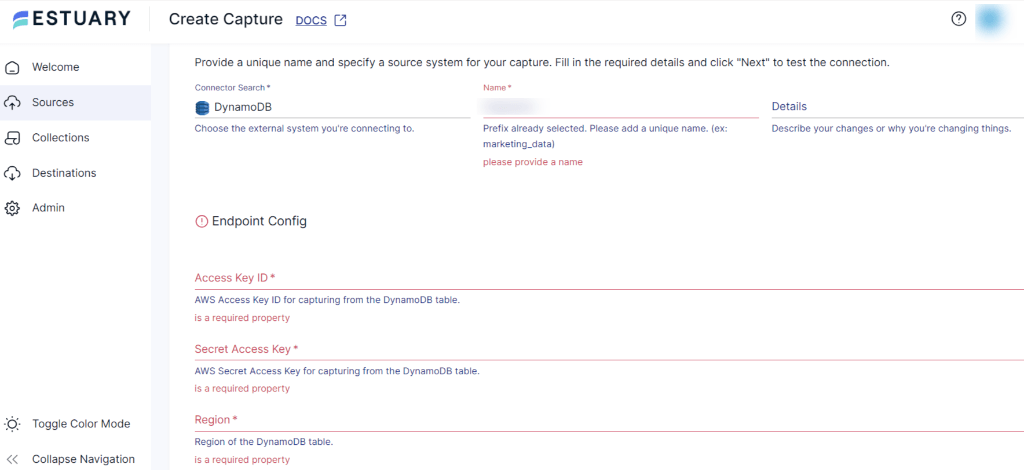

- On the DynamoDB Create Capture page, provide a unique Name for your connector. Fill in the required information, including Access Key ID, Secret Access Key, and Region.

- After filling in all the details, click on NEXT, followed by SAVE AND PUBLISH.

Step 3: Connect and Configure BigQuery as Destination

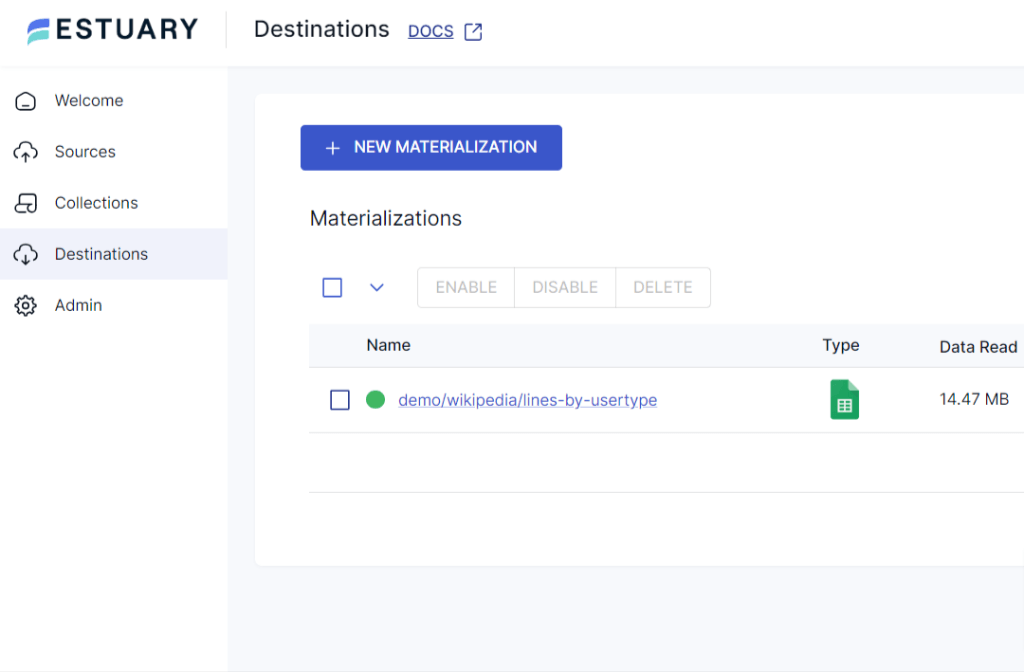

- To connect BigQuery as the Destination of your pipeline, navigate to the Estuary dashboard and click on Destinations.

On the Destinations page, click on the + NEW MATERIALIZATION button.

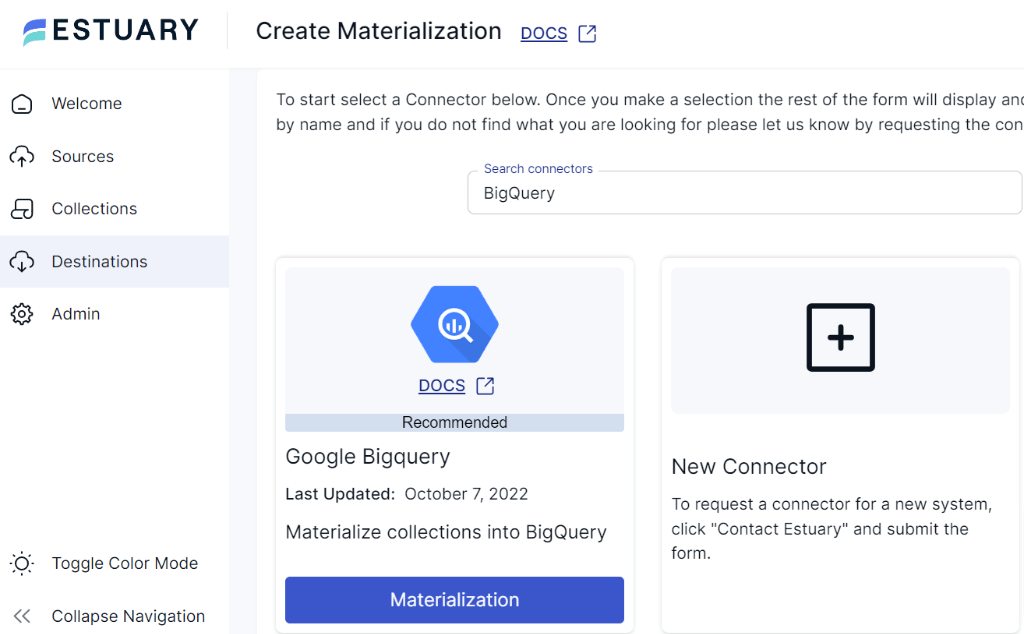

- You’ll be directed to the Create Materialization page. Search for the Google BigQuery connector in the Search connectors box. Once you locate the connector, click on the Materialization button associated with it.

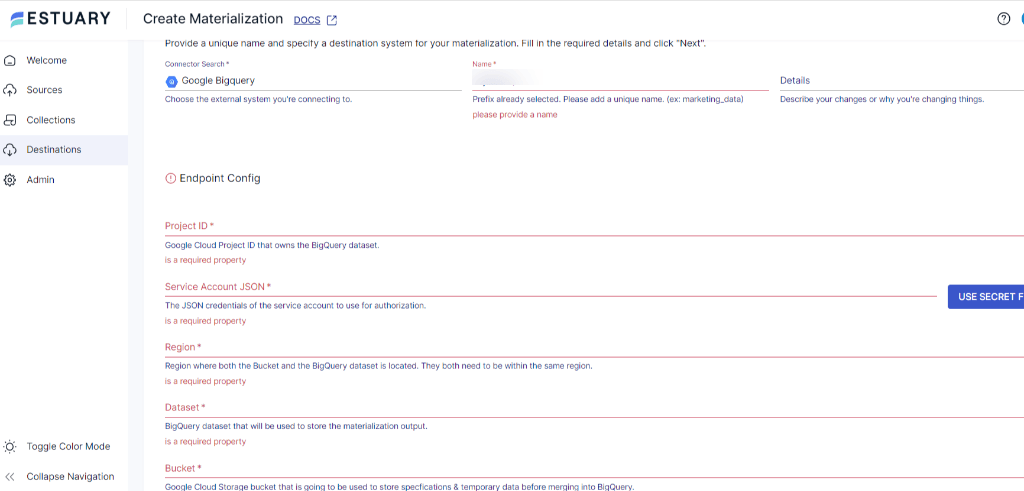

- On the BigQuery Create Materialization page, provide a unique Name for your BigQuery connector. Fill in the necessary Endpoint Config details, such as Project ID, JSON credentials, Region, and Dataset.

- If the data from the DynamoDB is not filled in automatically, you can manually add it from the Source Collections section.

- Once all the important details are provided, click on NEXT > SAVE AND PUBLISH. Estuary Flow will now create a data pipeline from DynamoDB to BigQuery and start replicating data in real time.

For a comprehensive understanding of the above data flow, follow the Estuary’s documentation on:

Unlock real-time insights by connecting DynamoDB to BigQuery with ease. Sign Up for Estuary Flow or Contact Us to start building your pipeline today!

The Manual Method: Connect DynamoDB to BigQuery Using CSV Files

The manual approach for exporting data from DynamoDB to BigQuery involves extracting DynamoDB data into CSV files, which are then uploaded to BigQuery.

Here’s a detailed guide on how to replicate data from DynamoDB to BigQuery, along with the prerequisites.

Prerequisites:

- Access to DynamoDB and Google BigQuery.

- A Google Cloud Platform (GCP) account.

- BigQuery project.

- A BigQuery dataset created in your BigQuery project.

Step 1: Export DynamoDB Data into CSV Files

Exporting data from DynamoDB into CSV files can be achieved using several methods depending on your use case and technical familiarity. Here are some of the ways to migrate DynamoDB data into CSV files:

- Using AWS CLI: Use the aws dynamodb scan command to export data from the DynamoDB table to JSON files. Mention the table name that you want to replicate, the file name, and the file path for the JSON file. Then convert these JSON files into CSV file format with your preferred programming language.

- AWS Management Console: Navigate to the DynamoDB service and select the table you want to export. In the Operation Builder, select the operation of your choice. Choose Export to CSV. Provide the file name and location for your CSV file and click on the Save button. The CSV files will be downloaded to the given path.

- Custom Scripts: If you like coding, you can opt for this method. Use AWS SDKs such as Boto3 for Python in your scripts to read DynamoDB tables, retrieve items, and convert them into CSV format.

- AWS Lambda Function with DynamoDB Streams: Enable a DynamoDB Stream on your table to capture changes in the DynamoDB table in real time. Implement the Lambda function to scan and query the DynamoDB table to fetch data. Within the Lambda function, convert this fetched data into CSV format. Use the AWS SDK to upload CSV file data to an Amazon S3 bucket. You can invoke the Lambda function manually or set up a trigger based on events like table updates. This function will read the DynamoDB table, convert it into the CSV file format, and load it into the S3 bucket. Then with the help of AWS CLI or AWS console, you can download the required CSV files to your local machine.

- Using AWS Data Pipeline: AWS Data Pipeline allows you to streamline data movement and transformation tasks. You can create and configure a data pipeline that reads data from DynamoDB and writes it into CSV file format in the Amazon S3 bucket.

From the above-mentioned list, you can choose the method that best fits your requirements and familiarity with AWS services.

Step 2: Load CSV Files Data to BigQuery

Exporting CSV files to BigQuery can be done through two different approaches:

- Using the Google Cloud Console (Web UI)

- First, log in to the Google Cloud Console and navigate to Google Cloud Storage (GCS).

- Use an existing bucket or create a new one to store your CSV files. Upload your CSV files to the GCS bucket.

- Navigate to BigQuery in the Google Cloud Console and select your project and dataset.

- Click on Create Table.

- Under the Source section, choose Create table from Cloud Storage option and select the CSV file from your GCS bucket. Mention the file format as CSV.

- Specify the schema and other necessary fields for your table.

- Click on Create Table.

- Using the bq Command-Line Tool

- To use this method, you need to install Google Cloud SDK on your local machine.

- Use the gsutil command to upload CSV files to your GCS bucket. Then run the bq command to load the CSV files into the BigQuery table.

plaintextbq load

--autodetect

--source_format=CSV

dataset_name.table_name gs://your-gcs-bucket/csv-file.csvReplace dataset_name.table_name gs://your-gcs-bucket/csv-file with the respective BigQuery dataset, table, bucket, and CSV file name.

For more information, you can use this comprehensive guide for exporting data from CSV to BigQuery.

Conclusion

We’ve seen two different methods for integrating DynamoDB with BigQuery, each offering its unique approach to data synchronization and analysis.

While manually handling the data transfer process grants control, it is suitable for scenarios where custom transformation or manual backups for small datasets are required. However, it can limit you from carrying out real-time analysis and might include human errors.

Automated, fully-managed platforms like Estuary Flow streamline DynamoDB to BigQuery replication, offering efficient automation. Flow’s capabilities ensure continuous real-time data synchronization, making it well-suited for applications where up-to-date insights are essential.

Unlock seamless DynamoDB to BigQuery Data Flow with Estuary Flow today! Sign up now to build your first pipeline

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles