A data warehouse is a repository of large, integrated and transformed data that can be used to generate insights and drive decision-making. It is crucial to the development of accurate forecasting models. The data warehousing industry is large—predicted to exceed $30 Billion by 2025. But using and engaging with data warehouses requires careful planning, design, and implementation. This article explores best practices for data warehousing—including data modeling, ETL (extract, transform, load) processes, data quality management, performance tuning, and security and governance.

How does a Data Warehouse work?

A data warehouse collects and stores data from multiple sources. It offers access to current and historical data, which can be used for ad-hoc querying, decision-making, and reporting. Data engineers source data from many systems—like customer relationship management (CRM) systems, enterprise resource planning (ERP) systems, online transaction processing (OLTP) applications, SaaS tools, and relational databases—and store this data in a data warehouse.

Data warehouses clean collected data for accuracy and consistency, easing data management and analysis. Although similar to databases and data lakes, data warehouses ingest data faster than databases and data lakes, are purpose-built, and have more efficient querying mechanisms.

From an engineering perspective, the architecture of a data warehouse depends on the needs of your organization. Imagine you work in an e-commerce organization that needs to analyze its sales data to identify trends, patterns, and anomalies and use this information to make data-driven decisions to improve sales. You must first define the questions like "What are the top-selling products by region and period?" or "What is the average revenue per customer by product category?". After this, you must identify relevant data sources such as sales, customer, product, and regional data, then extract and load the data into a warehouse for structuring, cleaning, and filtering. The structured data is then pushed into a BI/Data Analysis tool for visualization and analysis.

Depending on the organization, the questions and approach will differ. Therefore, flexibility is key when architecting a data warehouse. To build a flexible data warehouse, use the 3NF model, otherwise called the “whole key,” instead of predefined join paths where every primary key in a dimensional table is a foreign key in the fact table.

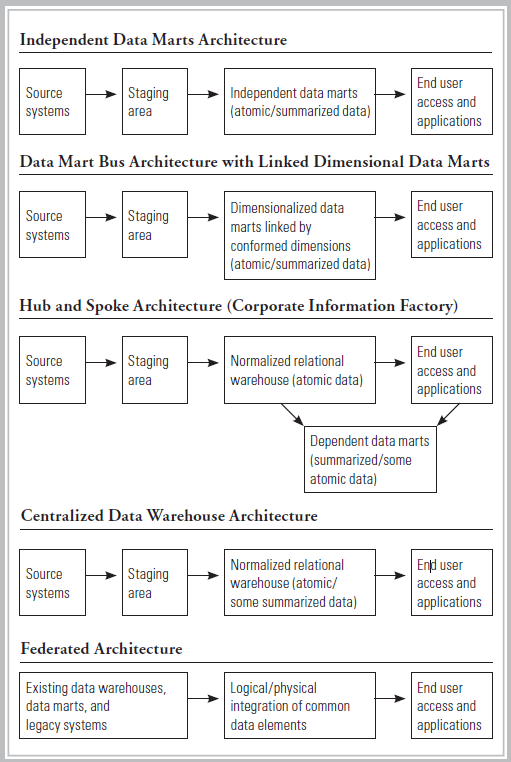

There are five kinds of data warehouse architectures most used by organizations:

- Independent data mart architecture

- Data mart bus architecture with linked dimensional data marts

- Hub-and-spoke architecture

- Centralized data warehouse architecture

- Federated architecture

According to a survey, the predominant architecture is the hub-and-spoke architecture scoring similar scores when assessed according to information quality, system quality, individual impacts, and organizational impacts.

The five architectures cited above have four primary components:

- A central database is the pillar of the data warehouse. It is usually an RDBMS running on-prem or in the cloud.

- Data integration tools like Estuary flow or your choice ELT/ETL pipeline.

- Metadata is the representation of objects defined in the RDBMS—it could be a table, column, query, report, or even a transformational algorithm.

- Data access tools like query, OLAP, application development, or data mining.

Best Practices

Implement these ten best practices when choosing and operating a data warehouse:

Understand your data warehousing needs

You must first identify the relevance of a data warehouse to your specific use case. You may need a data lake or an RDBMS instead of a data warehouse. You can check available data sources and the level of structuring your data sets may need. For example, a data warehouse is your best bet if you need to collect data from different sources and aggregate and study historical data. Otherwise, check twice.

Choose the right data warehouse architecture

Depending on the size of your organization and the scope of its business, it is necessary to know what works best for you, between a cloud data warehouse or an on-premises data warehouse. According to a TDWI 2021 Survey, 53% of companies have an on-premise data warehouse, and 36% operate a cloud-based data warehouse. This data highlights that on-prem data warehouses are more modern than we may assume.

A cloud data warehouse is cost-efficient, time-saving, and scalable. It needs no hardware, server rooms, IT staff, or operational costs. On the other hand, an on-prem data warehouse enables you to exercise additional control and implement strict governance and regulatory compliance.

Many options abound—you may need to combine both on-prem and cloud, employ a managed data warehouse as a service (DWaaS) instead of setting up yours, and make many other decisions specific to your project and organizational needs.

Select befitting data warehouse Platform and Tools

You must consider the tools to be used for feeding data into the data warehouse—between an ETL (extract, transform, and load) or an ELT (extract, load, and transform) tool. You must also decide if the tools should be source-agnostic or not.

ELT tools are flexible and store new, unstructured data easily. They extract data from the source system(s), load the data into the warehouse, and finally transform the data into the best-structured format. It also removes duplicated data and any inconsistencies for ease of analysis. The data is after that pushed through the BI tools.

Another important tool is the business intelligence (BI) tool. Choosing a no-code BI tool to erase complexities and technicalities is important. An efficient BI tool will have a user-friendly interface and be easily integrated with other systems. Additionally, carefully select the data sources based on their relevance to the business objective, quality, and availability.

Implement Master Data Management (MDM) Practice

Data accuracy is vital for your engineering functions. Implementing an MDM practice means developing a controlled process so that only correct, consistent, and validated Master data is streamed and warehoused.

Apply Change Data Capture (CDC)

Change data capture (CDC) is part of an ETL/ELT pipeline. It eases the tracking of changes that occur to the data in the warehouse and ensures that the changes are up-to-date. The changes history is stored in change tables showing how the data has changed over time. For security purposes, layer your CDC tools with data backup and encryption tools that shield sensitive data from unauthorized users, such as warehouse testers and external trainers.

Establish an Operational Data Plan

An operational plan is a mapped-out strategy of protocols you will follow throughout development, testing, and production. The plan gives you foresight and hindsight—you can discover your current and future warehousing needs by analyzing the trends from the plan. It also helps you know how much space your data will take up at a point in time and determine whether your warehouse has the required capacity. With a plan, you can analyze your current technology stack and the limitations of your OLAP, ELT, and BI tools to maximize workflows.

To develop an effective plan, you must consider the kinds of queries you will run for your projects and index the data warehouse schema accordingly. A data plan should equally contain what steps to take during a disaster for recovery purposes, as well as the consideration of governance and regulatory compliance. This helps you implement a GRC—governance, risk, and compliance—approach to your projects.

Define access

Establish governance rules which define who gets access to the system, when, and upon what task the access is granted. This is more important, especially when in a cloud-based warehouse. Compliment access control with other cybersecurity measures like Just-in-Time access, RBAC, ABAC, and many others that enable granular access control and management.

Automate Management and Maintenance

Automating the technical management functions of the data warehouse helps you cut costs on maintenance infrastructures. You can automate data streaming from source using your choice ELT tool and visualization in your choice BI tools by leveraging machine learning and artificial intelligence. You can also automate the monitoring tools that give end-to-end visibility into data distribution within the warehouse. It helps you observe what goes where and the relationships between data sets.

Analyze Data Loading Frequency

The schedule of data processing is important for efficiency. It is important to understand when to process specific data. While batch processing is efficient for some data, real-time processing could be more productive for others. When a volume of data is collected for a while and later processed in batches, you can reduce operational costs and manage time. Real-time processing, which is immediate and continual, is useful when timely action is vital.

Setup reporting

Use reporting tools to extract relevant data from the data warehouse and create reports, dashboards, and visualizations that help end users make informed decisions. You can customize the reports and visualizations to meet the specific needs of different users or departments within your organization. At the end of collecting, organizing, and storing data from the various sources, it is pertinent to query, analyze and present the eventual insights and intelligence for reporting.

Be Agile

Building and standardizing a data warehouse takes months or years, depending on the complexities and volume of work in your organization. Agility eases this complexity by quickening feedback and offering more transparency into the operations and interdependencies within the data warehouse. Follow these three steps for agility in your data warehousing workflow:

- Execute processes in multiple sprints. Work with small, well-defined tasks and develop testing plans.

- Update your data warehouse as new data sources become available or as your organization’s business objectives change. Do not pile up updates.

- Train other teams—engineering and otherwise—on how to access and use the data and how to resolve any issues they encounter. Enlighten them on best practices. You may develop SOPs specific to your organization’s business objectives and share them with your colleagues.

Conclusion

As the amount of data generated continues to grow, it is essential to implement best practices in data warehousing to ensure that the data is accurate, timely, and accessible. In this comprehensive guide, we have explored various best practices, including data modeling, ELT processes, data governance, data security, and performance optimization.

Adhering to these best practices will enable you to operate reliable, efficient, and secure data warehouses. These best practices can also help you to streamline data access, reduce costs, and improve overall business decision-making. To know more about how data engineers in other organizations approach data warehousing, connect with them on Slack.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles