Data streaming is a key component of real-time data processing and has become increasingly popular over the last few years. It allows data to be processed and analyzed as soon as it’s generated, giving organizations a competitive edge. Using data streaming technology, companies can make faster and more accurate decisions, provide better customer service, and ultimately increase their profits.

In this article, we will discuss what data streaming technology is, examine the challenges of using it, and look into some best practices you should adopt to get the most out of it.

By the end of the article, you will have a thorough understanding of data streaming technology and how it is used in different sectors along with its best practices and challenges.

So let’s dive in.

Understanding Data Streaming Technology

Data streaming is reshaping the data industry by making it possible to act on data the moment it is created. It differs from batch processing because it doesn’t require you to collect data and process it at a set interval. Instead, data can be transported and analyzed in real time as it’s generated.

Data streaming technology is what enables many of your favorite websites and apps to process information so quickly: data flows continuously from source to target and is processed in real time along the way.

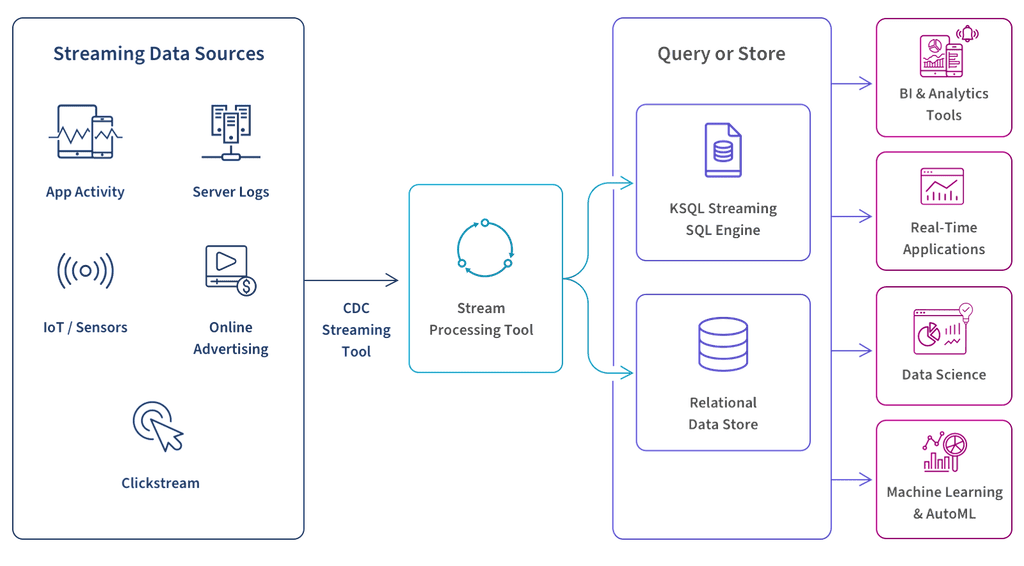

With data streaming, businesses can use data as it’s generated, leading to deeper insights and a competitive advantage. Common sources of streaming data include:

- Social media feeds

- Geospatial services

- Financial transactions

- eCommerce purchases

- Logs from web and mobile apps

Use cases such as real-time fraud detection, social media monitoring, IoT data processing, and eCommerce personalization rely on data streaming technology to adapt rapidly to new conditions and stay ahead of the curve.

Now that we have a clear understanding of data streaming technology, let’s see how it compares with batch processing.

Data Streaming Vs Batch Processing – A Detailed Overview

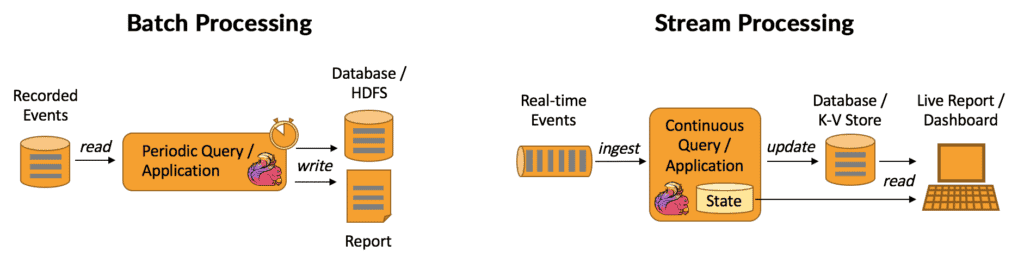

Data streaming and batch processing are two very different methods for processing data.

While data streaming processes and analyzes data as soon as it is generated, batch processing is a data processing technique in which large amounts of data are collected over time and then processed all at once. This process is typically scheduled to occur at a specific time and the results are usually available a few hours or days later.

With that understanding of batch processing, here are some of the key differences between these two methods:

A. Time Sensitivity

Probably the most fundamental difference between batch processing and data streaming is time sensitivity.

Batch processing is meant for processing large volumes of information that are not time-sensitive. On the other hand, data streaming is fast and meant for information that is needed immediately.

B. Data Collection

Batch processing normally deals with a large volume of data collected over a period of time, whereas data streaming processes data continuously, piece by piece, in real time. This means that batch processing can’t begin until the complete batch has been collected, while stream processing lets you process data as it is generated.
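To make the difference concrete, here is a minimal Python sketch under simple assumptions: the events are plain purchase amounts, and `event_source`, `batch_total`, and `streaming_totals` are hypothetical names used only for illustration. The batch function needs the full collection before it can return anything, while the streaming generator produces an updated result after each event.

```python
from typing import Iterable, Iterator, List


def batch_total(batch: List[float]) -> float:
    # Batch style: nothing can be computed until the whole batch has been collected.
    return sum(batch)


def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    # Streaming style: update the result as each event arrives and emit it right away.
    total = 0.0
    for amount in events:
        total += amount
        yield total


def event_source() -> Iterator[float]:
    # Hypothetical source; in practice this could be a message broker, socket, or log file.
    yield from [12.5, 3.0, 7.25]


print(batch_total(list(event_source())))   # one answer, after all data is collected
for running_total in streaming_totals(event_source()):
    print(running_total)                   # a fresh answer after every event
```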

C. Data Size

In batch processing, the time interval can be determined but the data size is unknown and variable, which makes it difficult to estimate how much data will be accumulated over a fixed time interval or window. Therefore, you can predict how frequently the batch data processing will take place but it is not possible to accurately estimate the amount of time it will take for each individual batch to complete processing.

Data streams are the opposite. The size of each individual record generated in real time is usually small and known in advance, but the total volume of data produced over time can be highly unpredictable.

D. Processing Time

The data size difference between batch and stream processing also results in different processing times for the two technologies. It can take several hours or even days to complete a single batch process. Meanwhile, stream processing is capable of processing data on a sub-second timescale.

E. Input Graph

In batch processing, the input graph is static, while in data streaming, the input graph is dynamic. Fixed input graphs work well for processing large, well-defined datasets that are relatively stable over time, which makes them ideal for batch processing.

Stream processors, on the other hand, need to handle data that is continuously changing. Therefore, they use dynamic input graphs, which allow for more flexible and adaptive data processing as requirements change.

F. Data Analysis

Batch processing analyzes a snapshot of data, while data streaming analyzes data continuously, in small time windows, as it arrives. Because of this, the two methods suit different types of data analysis.
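A common way to analyze a stream in small time windows is a tumbling (fixed, non-overlapping) window. The sketch below is illustrative only: it assumes events arrive as `(timestamp_in_seconds, key)` pairs in timestamp order, and the function name is hypothetical. Production stream processors also handle late and out-of-order events, which this version ignores.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Optional, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[float, str]], window_seconds: float
) -> Iterator[Tuple[float, Dict[str, int]]]:
    """Count events per key in fixed, non-overlapping time windows.

    Assumes events arrive in timestamp order: a window is emitted as soon as an
    event from a later window shows up, and the last window is flushed at the end.
    """
    current_start: Optional[float] = None
    counts: Dict[str, int] = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)   # start of the window this event falls in
        if current_start is not None and window_start != current_start:
            yield current_start, dict(counts)       # previous window closed: emit its result
            counts = defaultdict(int)
        current_start = window_start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)           # flush the last open window
```

For example, with `window_seconds=5`, events at 0, 3, 4, 5, and 11 seconds fall into the windows starting at 0, 5, and 10.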

G. Response Time

In batch processing, the response is provided after the entire batch of data has been processed, whereas in data streaming, the response is provided in real time. This immediate response is essential in cases where businesses need to react to events as they happen.

H. Use Cases

Batch processing is primarily used in applications where large amounts of data need to be processed but the output is not immediately required, such as payroll and billing systems.

Data streaming, on the other hand, is used in stock market analysis, eCommerce transactions, social media analysis, and other applications where timely decisions are critical.

I. Required Data Engineering Expertise

In batch processing, data engineering teams can typically build a program using existing tools such as SQL queries or scripts. These tools are designed to process a fixed amount of data at predetermined intervals, which makes the batch processing approach more straightforward and less complex to implement.

Data streaming usually requires managing a stream broker platform such as Kafka or Kinesis, which makes building a streaming framework from scratch more difficult and time-consuming. Setting up and configuring these systems takes specialized data engineering knowledge and experience.


Now that we are familiar with the key differences between batch processing and data streaming, let’s take a look at the best practices of data streaming technology.

Data Streaming Technology – 7 Best Practices To Consider

Data streaming is becoming increasingly popular in the data integration and analytics world because it delivers data and insights in real time.

To maximize the benefit of data streaming technologies, it is important to adhere to best practices for designing, developing, and managing real-time data pipelines.

1. Take A Streaming-First Design Approach

Data streaming should be the primary focus of any organization that wants to leverage real-time or near-real-time insights. Taking a streaming-first approach means having all new sources of data enter through streams rather than batch processes. This lets you capture changes in data sooner and integrate them into existing systems more quickly.

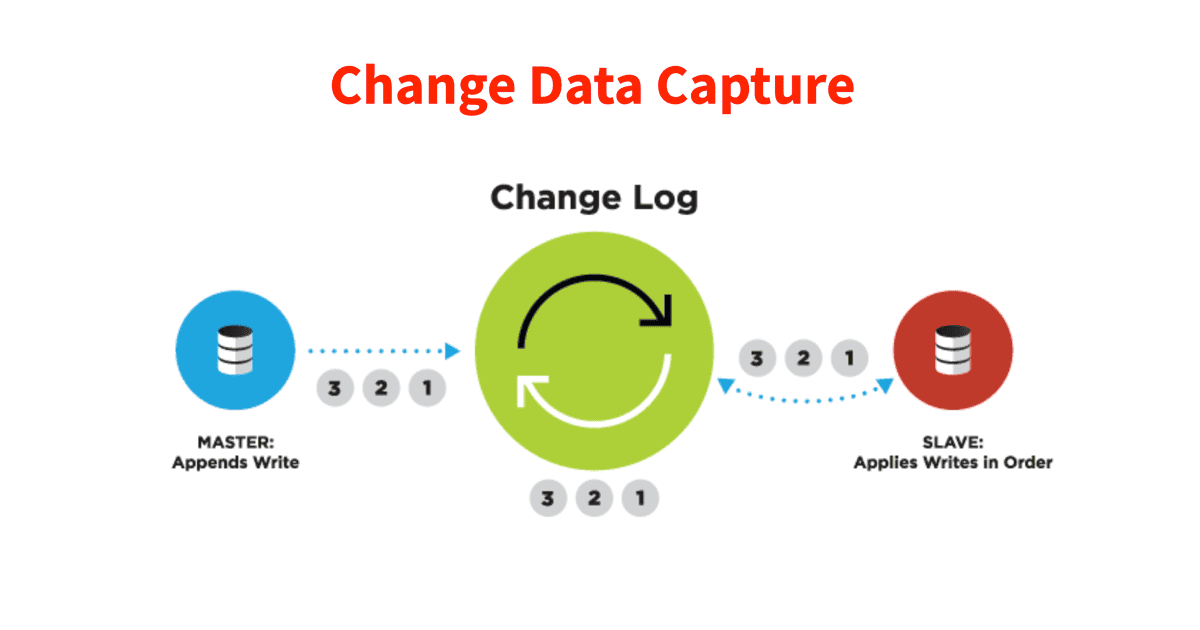

2. Adopt Change Data Capture (CDC) & File Tailing Technologies

CDC is an essential tool for capturing and transferring only changed or new records from one database to another with minimal overhead. Similarly, file tailing technologies enable organizations to monitor log files and watch for changes in real time, allowing them to react faster when changes occur.

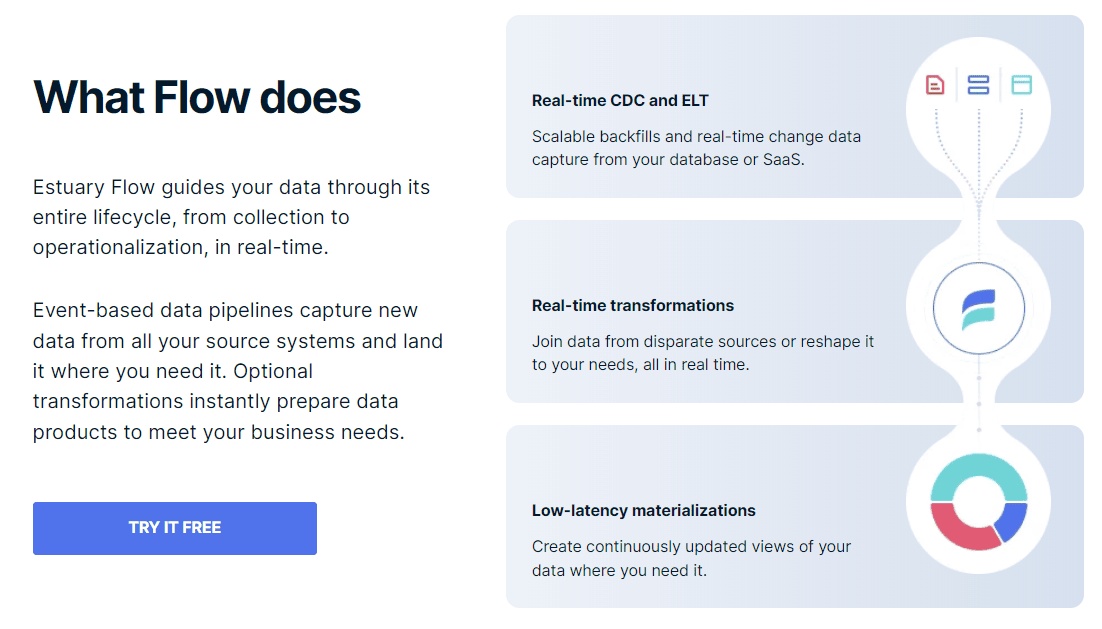
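File tailing can be sketched in a few lines of Python by polling an open file for newly appended lines, much like `tail -f`. This is a simplified illustration, not how any particular product implements it: the function name, the polling interval, and the optional `max_idle_polls` stop condition are assumptions, and log rotation is not handled.

```python
import time
from typing import Iterator, Optional, TextIO


def follow(
    f: TextIO,
    poll_interval: float = 0.5,
    max_idle_polls: Optional[int] = None,
) -> Iterator[str]:
    """Yield complete lines appended to an open file, similar to `tail -f`.

    If max_idle_polls is set, stop after that many consecutive empty polls;
    otherwise keep following the file forever.
    """
    partial = ""
    idle_polls = 0
    while max_idle_polls is None or idle_polls < max_idle_polls:
        chunk = f.readline()
        if not chunk:
            # Nothing new yet: wait briefly, then poll again.
            idle_polls += 1
            time.sleep(poll_interval)
            continue
        idle_polls = 0
        partial += chunk
        if partial.endswith("\n"):
            # Emit a line only once the writer has finished it.
            yield partial.rstrip("\n")
            partial = ""


# Usage (hypothetical file name and handler):
# with open("app.log") as log:
#     log.seek(0, 2)  # whence=2 means "end of file": skip existing entries
#     for line in follow(log):
#         handle(line)
```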

3. Use No-Code Stream Processing Solutions

Specialized data streaming tools like Estuary Flow allow organizations to process data incrementally in real time without extensive engineering work, giving them faster access to up-to-date insights from their data sources. Stream processing engines can be used both for historical analysis and for reacting quickly when changes occur in the source system.

Estuary Flow is a powerful no-code stream processing solution that makes it easy for organizations to ingest, process, and analyze data in real time. With our proprietary tool, organizations can easily create and manage data pipelines without needing to write any code.

Estuary Flow supports a wide range of data sources and formats, including streaming sources such as social media feeds, IoT sensors, and clickstream data, as well as batch sources like databases and file systems.

4. Analyze Data In Real Time With Streaming SQL

Traditional SQL queries have been used for decades, but they run against data at rest and are often too slow when large datasets need immediate results. Streaming SQL queries run continuously over incoming data and update their results as new events arrive, so organizations can take advantage of massive amounts of incoming data without sacrificing the speed or accuracy of their analyses.
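Streaming SQL engines each have their own syntax, so rather than guess at a specific dialect, the following Python sketch shows the underlying idea of a continuous query: a `GROUP BY` sum that is updated incrementally as each row arrives instead of being recomputed over the whole table. The row shape `(region, amount)` and the function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def continuous_sum_by_key(
    rows: Iterable[Tuple[str, float]]
) -> Iterator[Dict[str, float]]:
    """Keep the result of a GROUP BY sum up to date as rows stream in.

    Conceptually similar to a continuous version of
        SELECT region, SUM(amount) FROM orders GROUP BY region
    except that the result is refreshed after every row instead of computed once.
    """
    totals: Dict[str, float] = defaultdict(float)
    for region, amount in rows:
        totals[region] += amount   # incremental update: old rows are never rescanned
        yield dict(totals)         # snapshot of the current query result
```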

5. Support Machine Learning Analysis Of Streaming Data

With the rise of AI technology, machine learning techniques are becoming increasingly popular for predictive analytics tasks such as fraud detection and customer segmentation. Streaming data lets organizations make more accurate predictions by taking recent developments in their data into account sooner.
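One way to learn from streaming data is online learning, where the model is updated with each new event instead of being retrained on a full dataset. The sketch below is deliberately small (a single-feature linear model trained with stochastic gradient descent on a simulated stream); the class name, learning rate, and data are assumptions for illustration, not a recommended fraud or segmentation model.

```python
from typing import Sequence


class OnlineLinearRegression:
    """Linear model trained one example at a time with stochastic gradient descent."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: Sequence[float]) -> float:
        return sum(w * xi for w, xi in zip(self.weights, x)) + self.bias

    def learn_one(self, x: Sequence[float], y: float) -> float:
        """Update the model with a single (x, y) pair; return the error before the update."""
        error = self.predict(x) - y
        # Gradient step on the squared error 0.5 * error**2 for each parameter.
        for i, xi in enumerate(x):
            self.weights[i] -= self.learning_rate * error * xi
        self.bias -= self.learning_rate * error
        return error


# Simulated stream in which the target follows y = 2x + 1.
model = OnlineLinearRegression(n_features=1)
for step in range(5000):
    x = (step % 10) / 10
    model.learn_one([x], 2 * x + 1)
```

Because each update touches only the newest example, the model keeps adapting as the stream evolves, without storing past events.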

6. Minimize Disk I/O

To ensure optimal performance, designers should minimize disk I/O whenever possible, especially when the same real-time data serves multiple purposes.

This may include reading from a single streaming source once and delivering it to multiple endpoints. In addition, developers should consider pre-processing incoming streams to simplify inspection and profiling tasks, or adopting an ingestion tool or framework that does this automatically.
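The idea of reading a stream once and delivering it to several endpoints can be sketched as a simple in-memory fan-out. In this hypothetical example the destinations are plain Python lists standing in for real sinks such as a warehouse or search index; the function and variable names are illustrative.

```python
from typing import Callable, Iterable, List

Sink = Callable[[dict], None]


def fan_out(events: Iterable[dict], sinks: List[Sink]) -> int:
    """Read each event from the source once and hand it to every sink in memory."""
    count = 0
    for event in events:
        for deliver in sinks:
            deliver(event)   # no separate read of the source per destination
        count += 1
    return count


# Hypothetical destinations, modeled as in-memory lists.
warehouse: List[dict] = []
search_index: List[dict] = []
n = fan_out(
    ({"id": i, "status": "ok"} for i in range(3)),
    [warehouse.append, search_index.append],
)
```

Because every sink receives the same object, sinks should treat events as read-only or copy them before modifying them.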

7. Incorporate Continuous Monitoring & Self-Healing Features

Data stream designers should incorporate monitoring capabilities with self-healing features into the streaming data architecture to address any potential reliability issues that may arise with mission-critical flows. Furthermore, systems should be put in place to sanitize the raw data upon ingestion to ensure high-quality consumption-ready output from the system.
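A basic building block of self-healing pipelines is retrying transient failures with exponential backoff and jitter. The sketch below assumes that `ConnectionError` signals a transient problem and that the wrapped operation is safe to repeat; the function and its parameter defaults are illustrative. The injectable `sleep` argument lets the behavior be tested without actually waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with exponential backoff.

    Assumes ConnectionError means "transient, try again" and that the
    operation is safe to repeat (idempotent).
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of retries: surface the error to monitoring and alerting
            # The delay cap doubles each attempt (0.5s, 1s, 2s, ...) up to max_delay;
            # "full jitter" waits a random time in [0, delay] to avoid retry storms.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise RuntimeError("unreachable")
```

When the attempts run out, the exception is re-raised so that monitoring can react instead of the failure being silently swallowed.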

Let’s now examine some of the obstacles associated with data streaming technology.

8 Most Common Challenges Of Data Streaming Technology


Here are some of the most common difficulties that come with using data streaming technology:

I. Scalability

Real-time data streaming applications require systems that can scale quickly in line with the partitioning that common streaming infrastructure typically employs. This calls for a fast data processing and ingestion system that doesn’t degrade performance or increase latency as it scales.
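Streaming platforms typically scale by splitting a stream into partitions and assigning each record to a partition based on its key. The following sketch shows key-based partitioning in Python; CRC32 is an arbitrary stand-in hash chosen because it is stable across processes, and `partition_for` is a hypothetical helper, not any platform’s API.

```python
import zlib
from typing import Dict, List, Tuple


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition deterministically.

    CRC32 is used only because it is stable across processes (Python's built-in
    hash() for strings is randomized per process). Kafka's default partitioner,
    for example, uses murmur2 instead.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


records = [("user-1", "login"), ("user-2", "click"), ("user-1", "logout")]
partitions: Dict[int, List[Tuple[str, str]]] = {p: [] for p in range(4)}
for key, value in records:
    partitions[partition_for(key, 4)].append((key, value))
```

All records with the same key land in the same partition, so consumers can be scaled out per partition while per-key order is preserved. Changing `num_partitions` remaps most keys, which is one reason repartitioning a live stream needs care.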

II. Ordering

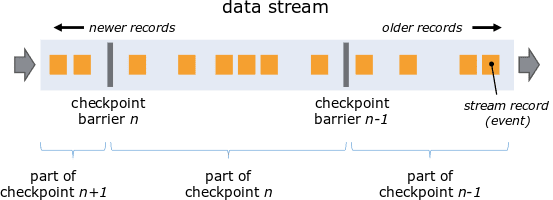

For stream processing systems to work properly, processed data must arrive at its destination in the right order. But in real-time data streaming, the order in which data packets are generated may differ from the order in which they reach their destination. This can result in out-of-order data, and larger systems are especially at risk.
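When each event carries a sequence number, a consumer can restore the original order by buffering early arrivals until the gap before them is filled. This is a minimal sketch assuming consecutive integer sequence numbers starting at 0; the function name is hypothetical. As written, the buffer grows without bound if a message is lost, so real systems pair this idea with timeouts or watermarks.

```python
from typing import Any, Dict, Iterable, Iterator, Tuple


def reorder(
    events: Iterable[Tuple[int, Any]], start: int = 0
) -> Iterator[Tuple[int, Any]]:
    """Re-emit (sequence_number, payload) pairs in sequence order.

    Early arrivals wait in a buffer until every lower sequence number has been seen.
    """
    pending: Dict[int, Any] = {}
    next_seq = start
    for seq, payload in events:
        if seq < next_seq:
            continue  # already emitted: treat as a duplicate and drop it
        pending[seq] = payload
        # Release as many consecutive events as the buffer now allows.
        while next_seq in pending:
            yield next_seq, pending.pop(next_seq)
            next_seq += 1
```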

III. Consistency & Durability

One of the biggest challenges when working with data streaming is ensuring consistency and durability. By the time you read a piece of data, it may already have been modified in another data center, so your copy is stale and different nodes in the system disagree. This makes it hard to ensure that the data is correct and can also lead to data loss or corruption.

IV. Fault Tolerance & Data Guarantees

To make sure your data streaming system never has a single point of failure, implement a fault-tolerant mechanism that can automatically store streams of data with high availability and durability.

You also need data guarantees that ensure that once data is written to a stream, it won’t be changed or deleted. Without these mechanisms, inconsistencies could lead to significant differences in the insights you get.
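The “written once, never changed” guarantee is often provided by modeling a stream as an append-only log read by offset. The in-memory class below only illustrates that interface under this assumption (it is neither durable nor replicated), and the class and method names are hypothetical. Because consumers track the offset they have reached, a consumer that restarts can resume from its last committed position.

```python
from typing import Any, List, Tuple


class AppendOnlyLog:
    """In-memory stand-in for a durable, append-only stream.

    Records can only be appended and read by offset; there is deliberately no
    update or delete method, so a record never changes once written.
    A real system would also persist and replicate the log.
    """

    def __init__(self) -> None:
        self._records: List[Any] = []

    def append(self, record: Any) -> int:
        self._records.append(record)
        return len(self._records) - 1   # offset of the new record

    def read_from(self, offset: int, max_records: int = 100) -> List[Tuple[int, Any]]:
        batch = self._records[offset:offset + max_records]
        return list(enumerate(batch, start=offset))


log = AppendOnlyLog()
for event in ["order-created", "order-paid", "order-shipped"]:
    log.append(event)

# A restarted consumer whose committed position is offset 1 (the next record
# it still needs) resumes exactly there, without skipping or rewriting data.
resumed = log.read_from(1)
```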

V. Latency

In data streaming, low latency is essential. If processing and data delivery take too long, the streaming data can quickly become irrelevant. To keep streaming applications running smoothly and efficiently, minimize latency by ensuring data is processed quickly enough and reaches its destination promptly.

Because data flows continuously, missed data often can’t be recovered later. Therefore, the system must prevent delays and problems that could lead to lost information.
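To keep latency in check, you first need to measure it. A simple approach, sketched below with hypothetical helper names, is to record when each event was created and when it was processed, then look at percentiles rather than the average. The timestamps are illustrative values in milliseconds.

```python
import math
from typing import List, Sequence


def end_to_end_latencies(
    created_at: Sequence[float], processed_at: Sequence[float]
) -> List[float]:
    """Per-event latency: processing time minus creation time (same unit as inputs)."""
    return [done - created for created, done in zip(created_at, processed_at)]


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Illustrative timestamps in milliseconds.
created = [0, 10, 20, 30, 40]
processed = [5, 12, 90, 33, 44]
latencies = end_to_end_latencies(created, processed)   # [5, 2, 70, 3, 4]
p50 = percentile(latencies, 50)
p99 = percentile(latencies, 99)
```

Here the median latency is 4 ms but the p99 is 70 ms, which shows how a single slow event stands out in the tail even when typical latency looks fine.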

VI. Memory & Processing Requirements

Memory and processing are key challenges when working with data streaming. Organizations must make sure they have enough memory to buffer continuously arriving data and hold processing state, as well as the processing power to handle that data in real time. As a result, real-time data streaming systems often need more processing power than systems that handle batch processing tasks.
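Since a stream never ends, processing state has to stay bounded. One common pattern is to keep only the most recent N values, as in this sketch of a sliding-window average built on `collections.deque(maxlen=...)`; the class name and window size are illustrative assumptions.

```python
from collections import deque
from typing import Deque


class SlidingWindowAverage:
    """Average of the most recent `size` values, using constant memory."""

    def __init__(self, size: int) -> None:
        if size < 1:
            raise ValueError("size must be at least 1")
        self.window: Deque[float] = deque(maxlen=size)
        self.total = 0.0

    def add(self, value: float) -> float:
        if len(self.window) == self.window.maxlen:
            # deque(maxlen=...) drops the oldest value on append; remove it from the total.
            self.total -= self.window[0]
        self.window.append(value)
        self.total += value
        return self.total / len(self.window)
```

The running total avoids re-summing the window on every event. Over very long streams, floating-point error can accumulate, so occasionally recomputing the total from the window may be worthwhile.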

VII. Choosing Data Formats, Schemas, & Development Frameworks

For processing to work well, it’s important to select the right tools for processing time-series data, since each data type must be formatted correctly. The right storage schema also ensures that the application handles different data types and scales quickly and easily. Furthermore, development frameworks designed for streaming applications are essential for rolling out upgrades without causing disruptions or latency issues.
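Checking each record against an expected schema as it is ingested catches formatting problems before they spread downstream. The sketch below uses a hypothetical schema for a time-series sensor reading and a hand-rolled `validate` function; in practice you might rely on a schema format such as Avro, Protobuf, or JSON Schema instead.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema for a time-series sensor reading: field -> allowed types.
READING_SCHEMA: Dict[str, Tuple[type, ...]] = {
    "sensor_id": (str,),
    "timestamp": (int,),          # assumed: Unix epoch milliseconds
    "value": (int, float),
}


def validate(record: Dict[str, Any], schema: Dict[str, Tuple[type, ...]]) -> List[str]:
    """Return a list of problems; an empty list means the record matches the schema."""
    errors: List[str] = []
    for field, allowed in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
            continue
        value = record[field]
        # bool is a subclass of int, so reject it unless the schema allows bool explicitly.
        if (isinstance(value, bool) and bool not in allowed) or not isinstance(value, allowed):
            errors.append(f"bad type for {field}: {type(value).__name__}")
    for field in sorted(record.keys() - schema.keys()):
        errors.append(f"unexpected field: {field}")
    return errors
```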

VIII. Security

Real-time data processing systems must be secure, with mechanisms to prevent unauthorized access or manipulation of sensitive data. This includes integrating the streaming data platform with existing security layers within the organization, such as authentication and authorization protocols.

The system should also be designed to identify and prevent malicious attacks like data injection and denial of service. Organizations should also implement strong encryption schemes for data in transit and at rest.
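One way to detect manipulation of messages in transit is to attach a message authentication code. This sketch uses Python’s standard `hmac` module with SHA-256; the key handling is a placeholder assumption, and the helper names are hypothetical. An HMAC protects integrity and authenticity but does not encrypt anything, so it complements encryption such as TLS rather than replacing it.

```python
import hashlib
import hmac


def sign(payload: bytes, key: bytes) -> bytes:
    """Producer side: compute an HMAC-SHA256 tag to send alongside the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify(payload: bytes, tag: bytes, key: bytes) -> bool:
    """Consumer side: recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(payload, key), tag)


# Placeholder key; in practice load it from a secrets manager, never hard-code it.
SECRET_KEY = b"example-key-do-not-use"
message = b'{"account": "A-1", "amount": 250}'
tag = sign(message, SECRET_KEY)
```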

Now that we are aware of the challenges that come with data streaming technology, it’s worth noting that a fully managed streaming data platform such as Estuary Flow can help address them. We take care of the complexities, allowing you to focus on getting value from your streaming data.

Next, let’s examine how it impacts our day-to-day experiences.

Examples Of Data Streaming Technology – How It Affects Our Daily Lives

Here are some real-world applications of data streaming systems.

i. Financial Sector

The financial sector was one of the earliest adopters of streaming data analytics, because real-time analytics is vital to ensuring efficient market operations and reducing risk. Real-time streaming analytics enables:

- Risk analysis

- Faster order fulfillment

- More accurate pricing models

- Timely identification of anomalies

- Compliance with regulatory guidelines

- Real-time monitoring of financial markets

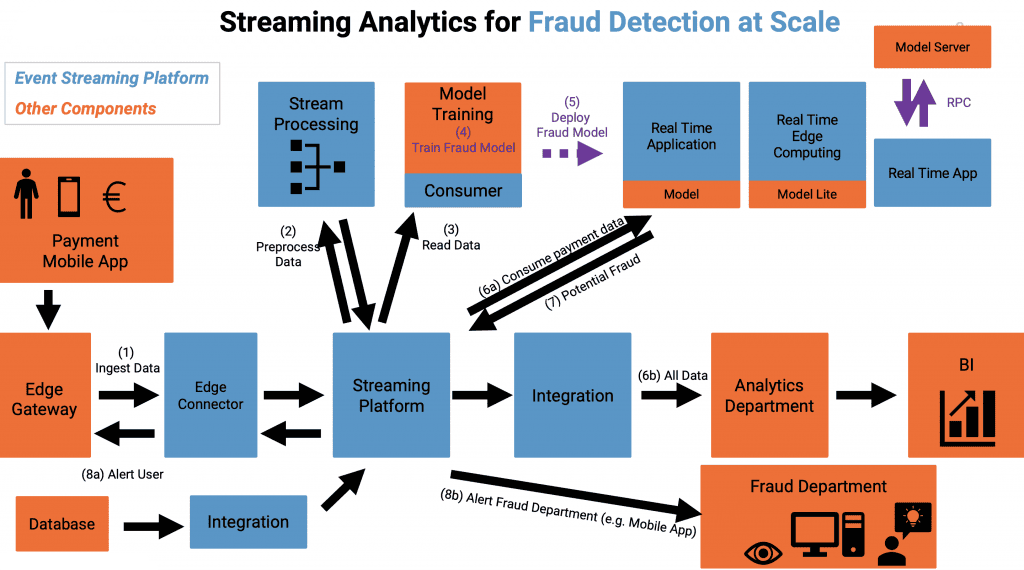

Real-time stream data analytics can be used to detect fraud or money laundering activities by monitoring patterns in customer behavior using techniques such as anomaly detection. This data can then be used to identify suspicious activities that may otherwise go unnoticed.
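A simple form of streaming anomaly detection flags values that are far from the running mean, measured in standard deviations. The sketch below keeps that mean and variance up to date in constant memory with Welford’s online algorithm; the 3-standard-deviation threshold, the warm-up length, and the sample transaction amounts are illustrative assumptions, and real fraud systems combine many more signals.

```python
import math


class RunningZScoreDetector:
    """Flag values far from the running mean, using Welford's online algorithm."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10) -> None:
        self.threshold = threshold   # how many standard deviations count as anomalous
        self.warmup = warmup         # don't score until this many values have been seen
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                # sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Score x against the history so far, then add x to the running statistics."""
        is_anomaly = False
        if self.n >= self.warmup and self.n > 1:
            std = math.sqrt(self.m2 / (self.n - 1))   # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford's update: O(1) time and memory per value.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


detector = RunningZScoreDetector()
amounts = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 99, 5000]
flags = [detector.update(a) for a in amounts]
```

In this sketch, a flagged value is still folded into the statistics afterwards; a production detector might exclude flagged values or use a decaying window so that old behavior fades out.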

Businesses can also use streaming data to predict customer behavior, helping with decision-making regarding marketing campaigns or product offerings. Accessing data quickly and accurately gives businesses an advantage in the competitive financial sector.

ii. Energy Sector

Data streaming technology is an essential component of modern energy grids with many use cases for power producers as well as utility companies.

With data streaming, energy companies can get real-time insights into electricity usage and break that usage down by residential, commercial, and industrial customers. The contribution of renewable energy sources like solar panels or wind turbines can also be actively monitored with the help of real-time data.

By incorporating real-time analytics into their infrastructure, operators can proactively manage grid demand and supply and respond quickly to changing conditions in the electricity market.

Streaming data also helps utility companies understand their customers’ electricity usage patterns so they can refine their offerings accordingly, such as evaluating demand charges across different seasons or providing tailored tariff plans based on usage patterns.

Real-time processing of data from IoT devices enables companies to detect equipment failure before it leads to system outages, giving them time to prepare for emergencies or reduce maintenance costs while keeping system reliability high at all times.


iii. Media & Entertainment Industry

Data streaming technology has had a profound impact on the media and entertainment industry. Thanks to streaming technology, media companies can now deliver content faster than ever before without sacrificing quality in either video or audio formats.

Streaming video content is delivered over the internet rather than through traditional television broadcasts. This gives viewers greater flexibility to consume content on any device, such as smart TVs, smartphones, or gaming consoles.

Similarly, streaming audio gives users on-demand access to huge music libraries, something traditional radio broadcasts could never offer.

Furthermore, media companies have used data streaming technology to create new revenue streams by monetizing digital services on subscription-based platforms like Spotify and Netflix. These companies tailor their offerings to each user’s interests.

Nowadays, these technologies are also used to make traditional shows more interactive. Real-time show elements are combined with fan interactions to create new ways for viewers to engage with program hosts, giving audiences an interactive experience that standard broadcasting techniques could not provide.

iv. Transportation Sector

The application of data streaming technology in transportation gives operators deeper insight into vehicle performance. This enables them to analyze a variety of factors simultaneously, such as location, speed, acceleration, and driver behavior.

With access to all this data, transportation companies can:

- Improve safety

- Reduce emissions

- Increase efficiency

- Reduce operational costs

- Enhance customer service

- Optimize maintenance schedules

Moreover, data streaming is widely applied in automated scheduling systems that use GPS tracking and traffic flow information to redirect vehicles around heavily congested areas. This not only saves time, money, and fuel but also helps reduce overall commute times.
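Rerouting around congestion can be illustrated with a shortest-path search whose edge weights are current travel times fed by traffic data. The following sketch runs Dijkstra’s algorithm on a tiny hypothetical road network, then recomputes the route after a simulated congestion update; the node names and travel times are made up, and real routing systems are far more sophisticated.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbor: current travel time in minutes}


def fastest_route(graph: Graph, start: str, goal: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm over the current travel times."""
    queue: List[Tuple[float, str, List[str]]] = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return float("inf"), []   # goal unreachable


roads: Graph = {
    "depot": {"highway": 5, "side_street": 8},
    "highway": {"customer": 5},
    "side_street": {"customer": 6},
}
before = fastest_route(roads, "depot", "customer")
roads["depot"]["highway"] = 25   # simulated live traffic update: congestion on the highway
after = fastest_route(roads, "depot", "customer")
```

Before the update the route goes via the highway (10 minutes); afterwards the side street (14 minutes) becomes faster, so the vehicle is redirected.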

Conclusion

Data streaming technology holds many benefits for businesses that require insights into real-time data to make timely decisions. With the rise of the Internet of Things (IoT), social media, and other sources of streaming data, the ability to process and analyze data in real time has become increasingly important.

While data streaming technology offers numerous benefits, there are also associated challenges that companies must address to fully leverage its potential. Adopting best practices for deploying real-time streaming systems can help organizations overcome these challenges and unlock the full benefits of this technology.

If you’re interested in exploring this technology further, why not register for Estuary Flow? With our help, you can quickly set up your own real-time data streaming pipeline and experience efficient data integration across platforms.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
