Gone are the days when analyzing historical data provided sufficient insights. Today, businesses thrive on quickly reacting to evolving market dynamics, customer preferences, and operational challenges. With data streaming technologies, organizations gain a dynamic and deep understanding of their environments.

However, the sheer number of options available, coupled with the dynamic nature of technological advancements, can make the search for the perfect data streaming tool an overwhelming task. Choosing the wrong tool can cause operational inefficiencies, missed opportunities, and substantial financial losses.

Today’s guide aims to help you in this quest. We will discuss the 15 best data streaming technologies and tools for 2024 built to unlock the potential of streaming data. By the end of this 10-minute guide, you’ll know the key features of each tool, making it easier to pick the best option.

15 Best Data Streaming Technologies & Tools For Data-driven Decision Making

Here are our top picks for the best data streaming technologies of 2024:

- Estuary Flow - Top Pick

- Amazon Kinesis - Best from a major cloud provider

- Equalum - Intelligent parsing capabilities

Let's take a detailed look at each of the 15 data streaming platforms so you can pick the one that best suits your needs.

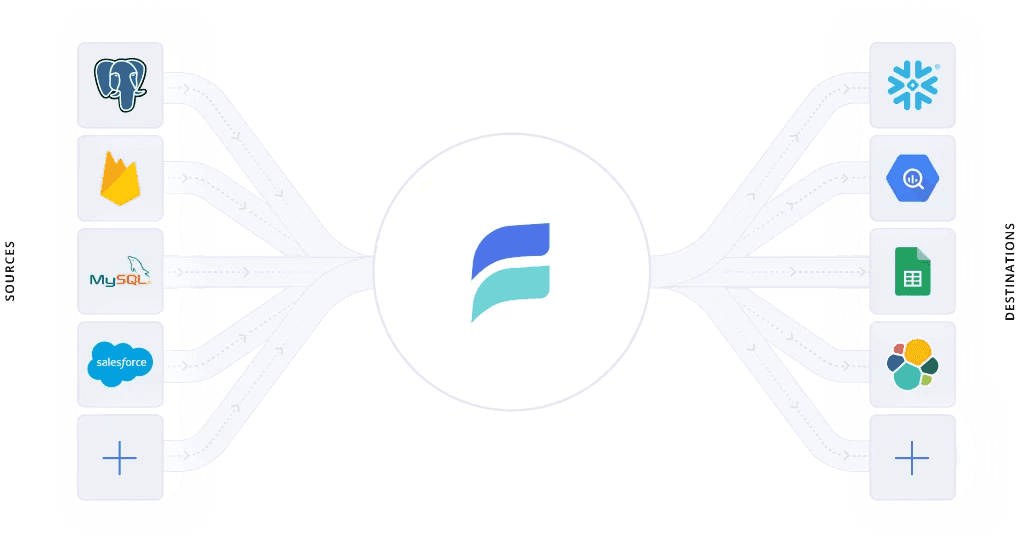

Estuary Flow - Top Pick

Estuary Flow is not just another data streaming tool; it is our managed solution for real-time ETL, designed to change the way data is handled. With its feature set and capabilities, Estuary Flow is our clear frontrunner in the data streaming landscape.

Using an advanced streaming broker and robust data synchronization techniques, Estuary Flow maintains data consistency and integrity throughout the streaming pipeline. Its architecture is built to process data in real time and to minimize latency, so data streams flow without interruption.

Estuary Flow supports event-time processing and windowing, which let you define time-based windows for aggregations, time-sensitive operations, and data analysis. This helps you spot time-bound patterns and trends in temporal data streams.
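
To make event-time windowing concrete, here is a minimal, framework-free Python sketch of a tumbling-window aggregation. It is not Estuary Flow's configuration or API; the Event fields, the sensor keys, and the 60-second window are illustrative assumptions. The key idea is that each event is assigned to a window by the time it happened, not the time it arrived.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    key: str          # hypothetical grouping key, e.g. a sensor ID
    event_time: int   # when the event occurred, in epoch seconds
    value: float


def tumbling_window_sums(events, window_size_s):
    """Sum values per (key, window start).

    Tumbling windows are fixed-size, non-overlapping intervals
    [start, start + window_size_s). Assignment uses event_time only,
    so arrival order does not change the result.
    """
    sums = defaultdict(float)
    for e in events:
        window_start = e.event_time - (e.event_time % window_size_s)
        sums[(e.key, window_start)] += e.value
    return dict(sums)


events = [
    Event("sensor-a", 0, 1.0),
    Event("sensor-a", 59, 2.0),
    Event("sensor-a", 61, 5.0),   # belongs to the next 60 s window
    Event("sensor-b", 30, 4.0),
    Event("sensor-a", 10, 3.0),   # arrives out of order, still lands in window 0
]
result = tumbling_window_sums(events, window_size_s=60)
# {('sensor-a', 0): 6.0, ('sensor-a', 60): 5.0, ('sensor-b', 0): 4.0}
```

A production engine must also decide when a window is complete enough to emit; this sketch sidesteps that question by processing a finite list.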

Estuary Flow intelligently distributes data streams based on predefined rules and conditions. This gives granular control over data flows and ensures that the right data reaches the right destination at the right time. Its fault-tolerant architecture is built to withstand failures and ensure uninterrupted data streaming.

Estuary Flow also goes beyond data streaming with features for streamlined data governance. With built-in data lineage tracking, auditing capabilities, and access control mechanisms, it helps you maintain data integrity, enforce governance policies, and meet regulatory requirements.

Why Choose Estuary Flow

- Unmatched data consistency and integrity

- Seamless scalability to handle growing data loads

- Real-time data processing capabilities for instant insights

- Extensive integration capabilities with diverse data sources

- Advanced analytics and machine learning integration for real-time insights

Estuary Pricing

- Free: 10GB/month (2 Connector Instances)

- Cloud ($100/month): $0.50/GB after initial usage, up to 12 Connector Instances, $100/connector instance

- Enterprise (Custom pricing): For large or custom deployments of Flow, includes SOC2 & HIPAA Reports, 24/7 support, and more.

Unlock real-time data integration with Estuary Flow. Sign up for free today; no credit card required!

Amazon Kinesis - Best from Major Cloud Provider

Amazon Kinesis is a robust and scalable data streaming technology that helps businesses tap the potential of streaming data. It ingests, processes, and analyzes large volumes of streaming data in real time, and it integrates with a variety of data sources so you can capture and process data from diverse streams such as:

- IoT devices

- Server logs

- Social media feeds

- Website clickstreams

Amazon Kinesis offers strong stream processing capabilities. It provides a comprehensive suite of tools and APIs, including the Kinesis Client Library and the AWS SDKs, for building custom applications that process and analyze data streams. Because you can write these applications in popular programming languages, integrating Kinesis into your existing data processing pipelines is straightforward.

Why Choose Amazon Kinesis

- Real-time insights

- Handles any volume of streaming data, from megabytes to terabytes

- Ensures data integrity, reliability, and durability and safeguards against data loss

- Native integration with other AWS services, including AWS Lambda, Amazon OpenSearch Service (formerly Amazon Elasticsearch Service), and Amazon Redshift

Amazon Kinesis Pricing

Pay as you go.

Equalum - Intelligent Parsing Capabilities

Equalum moves you beyond batch-only processing with real-time data streaming. Using stream processing techniques, it lets your organization process and analyze data streams as they flow.

Equalum seamlessly handles both structured and unstructured data streams. Its advanced algorithms and intelligent parsing capabilities integrate and process diverse data formats. This ensures that no nugget of valuable information goes unnoticed and gives you a detailed view of your data ecosystem.

Equalum's distributed architecture allocates resources for optimal performance regardless of the size or complexity of the data stream. It also includes built-in data quality checks: by proactively identifying data anomalies and issues, Equalum helps you make informed decisions based on reliable insights.
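
Equalum configures its quality checks inside the platform, and those internals are not covered here. As a generic sketch of the idea, the Python below validates each record in a stream against a set of rules and quarantines records that fail, along with the reasons. The orders fields, the accepted currencies, and the rules themselves are hypothetical.

```python
# Hypothetical validation rules for an "orders" stream: field -> predicate.
RULES = {
    "order_id": lambda v: isinstance(v, str) and v != "",
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
    "currency": lambda v: v in {"USD", "EUR", "GBP"},
}


def check_record(record):
    """Return a list of problems found in one record (empty if it passes)."""
    problems = []
    for field, is_valid in RULES.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not is_valid(record[field]):
            problems.append(f"invalid value for {field}: {record[field]!r}")
    return problems


def split_stream(records):
    """Pass clean records through; quarantine anomalous ones with their issues."""
    clean, quarantined = [], []
    for r in records:
        issues = check_record(r)
        if issues:
            quarantined.append((r, issues))
        else:
            clean.append(r)
    return clean, quarantined


records = [
    {"order_id": "A1", "amount": 20.0, "currency": "USD"},
    {"order_id": "A2", "amount": -5, "currency": "USD"},  # negative amount
    {"order_id": "A3", "currency": "JPY"},                # missing amount, unknown currency
]
clean, quarantined = split_stream(records)
```

Keeping the failure reasons next to each quarantined record makes it easier to fix the source system rather than silently dropping data.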

Why Choose Equalum

- Effortless scalability

- Processes both structured and unstructured data streams

- A comprehensive suite of tools for data quality checks, data transformation, and data manipulation

- Powerful data transformation capabilities to cleanse, enrich, and manipulate data streams on the fly

Equalum Pricing

Custom pricing plans are available on demand.

Apache Kafka - Trusted By Fortune 100 Companies

Apache Kafka is an open-source, distributed streaming platform that has changed the way streaming data is processed. Its fault-tolerant architecture makes it highly resilient to failures: Kafka replicates each partition across multiple brokers in a cluster, so data survives the loss of individual nodes.

Apache Kafka handles large volumes of streaming data with low latency and can ingest data from multiple sources. Because it is distributed, you can scale it horizontally by adding brokers to the cluster. Kafka also integrates with popular stream processing frameworks such as Apache Flink, Apache Storm, and Apache Samza for real-time data processing and analysis.

Apache Kafka supports both point-to-point (queue-style) and publish-subscribe messaging through consumer groups. This gives you flexibility in designing the data flow architecture best suited to your business. Kafka is a low-level tool, best suited to experienced engineers.
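
Kafka implements both messaging models through consumer groups: consumers that share a group ID split a topic's partitions between them, while each separate group receives the full stream. The toy, in-memory Python model below illustrates that behavior. It is not the Kafka client API; the MiniTopic class, the round-robin partition assignment, and the use of zlib.crc32 in place of Kafka's murmur2-based default partitioner are all simplifying assumptions.

```python
import zlib
from collections import defaultdict


class MiniTopic:
    """Toy in-memory stand-in for a Kafka topic (not the Kafka client API)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key, value):
        # Same key -> same partition, which preserves per-key ordering.
        # crc32 stands in for Kafka's murmur2-based default partitioner.
        p = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p


def consume(topic, groups):
    """Deliver every message once per consumer group.

    Within a group, each partition is owned by exactly one member
    (simple round-robin here), so members split the stream: queue-like,
    point-to-point delivery. Each separate group gets the whole stream:
    publish-subscribe delivery.
    """
    delivered = defaultdict(list)  # "group/member" -> messages received
    for group, members in groups.items():
        for p, messages in enumerate(topic.partitions):
            owner = members[p % len(members)]
            delivered[f"{group}/{owner}"].extend(messages)
    return dict(delivered)


topic = MiniTopic(num_partitions=4)
for i in range(8):
    topic.produce(key=f"user-{i}", value=f"click-{i}")

received = consume(topic, {
    "billing": ["b1", "b2"],  # two members share the work
    "analytics": ["a1"],      # a separate group sees every message
})
```

Here the two billing consumers together see each of the 8 messages exactly once, while the single analytics consumer sees all 8 on its own.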

Why Choose Apache Kafka

- Efficient parallel processing

- Integrates seamlessly with existing data ecosystems

- Ideal for building complex data pipelines and event-driven architectures

- Kafka's publish-subscribe model distributes data across multiple consumers

- Connectors for popular systems like Apache Hadoop, Apache Spark, Elasticsearch, and more

Apache Kafka Pricing

Free to use.

Confluent Cloud - Most Unified & Scalable Solution

Confluent Cloud is a cloud-based platform that offers a suite of tools and services that simplify the complexities of stream data processing and can handle the entire data streaming pipeline. From data ingestion to processing, storage, and analysis, Confluent Cloud provides a unified and scalable solution.

Built on Apache Kafka, Confluent Cloud has a fault-tolerant architecture in which data is replicated across multiple brokers to provide redundancy and protection against failures. It connects to existing Apache Kafka deployments and to data stores such as Apache Cassandra, which simplifies migration and eases the transition to a real-time data streaming architecture.

With its elastic infrastructure, Confluent Cloud dynamically scales resources to accommodate high data ingestion rates and intensive processing workloads. It also provides extensive monitoring and management tools for full visibility into your data streams.

Why Choose Confluent Cloud

- Advanced monitoring and alerting capabilities

- Robust data governance and metadata management

- Automated schema management and compatibility checks

- Multi-region replication for high availability and disaster recovery
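
Confluent Cloud's Schema Registry can reject a new schema version that breaks its configured compatibility rule. As a rough illustration of what a backward-compatibility check means, the Python sketch below applies simplified Avro-style rules to schemas represented as plain dictionaries. The dictionary format and the rules are assumptions for illustration; real Avro schema resolution also permits some type promotions (such as int to long) that this sketch ignores.

```python
def backward_compatible(old, new):
    """Return reasons why readers using `new` could not decode data written with `old`.

    Schemas are dicts of field name -> {"type": ..., optional "default": ...}.
    Simplified rules: a field added in `new` needs a default, and a field
    present in both versions must keep the same type. Removing a field is
    fine because the reader simply ignores it.
    """
    problems = []
    for name, spec in new.items():
        if name not in old:
            if "default" not in spec:
                problems.append(f"new field '{name}' has no default")
        elif old[name]["type"] != spec["type"]:
            problems.append(
                f"field '{name}' changed type {old[name]['type']} -> {spec['type']}"
            )
    return problems


v1 = {"id": {"type": "string"}, "amount": {"type": "double"}}
v2_ok = {**v1, "currency": {"type": "string", "default": "USD"}}
v2_bad = {"id": {"type": "long"}, "amount": {"type": "double"}, "note": {"type": "string"}}

print(backward_compatible(v1, v2_ok))   # []
print(backward_compatible(v1, v2_bad))
```

Running the check before deploying a producer change is what keeps existing consumers from breaking when the schema evolves.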

Confluent Cloud Pricing

Confluent Cloud offers custom pricing plans.

Talend - Ideal For Complex Data Transformations

Talend is designed to handle the challenges of real-time data integration and streaming. It has a user-friendly interface and its drag-and-drop functionality enables you to visually map data sources, transformations, and destinations, significantly reducing the learning curve and accelerating time-to-insights.

Talend has an extensive library of pre-built connectors for integrating with different data sources, such as databases, cloud storage, messaging systems, and social media platforms. Its distributed processing frameworks and optimized data streaming algorithms are designed for efficient parallel processing, fault tolerance, and high throughput.

With Talend's data transformation functionalities, you can cleanse, enrich, aggregate, and analyze data in real time. You can also implement complex data transformations, business rules, and machine learning models within your data streams. It offers enterprise-grade reliability features such as fault tolerance, data lineage, and data quality checks.

Why Choose Talend

- Comprehensive data quality management features

- Advanced analytics and machine learning integration

- Robust support for data governance and compliance

- Efficient data replication and synchronization across distributed systems

Talend Pricing

Tailor-made pricing plans are available on demand.

Azure Stream Analytics - Hybrid Architectures For Stream Processing

Azure Stream Analytics is a fully managed and scalable event-processing engine that ingests, processes, and analyzes streaming data from different sources with high speed and efficiency. Whether it's log monitoring, clickstream analysis, IoT telemetry, or another stream processing requirement, Azure Stream Analytics is one of the strongest solutions available today.

Using a simple yet powerful SQL-like query language, you can effortlessly define the desired data processing logic for quick and efficient development of complex data analysis scenarios. From popular cloud platforms like Azure Blob Storage and Azure Data Lake Storage to on-premises systems such as SQL Server and Oracle Database, Azure Stream Analytics provides comprehensive connectivity options.

Why Choose Azure Stream Analytics

- Supports low-latency processing

- Highly intuitive and user-friendly interface

- Robust scalability to handle sudden spikes in data volume

- Unified programming model for batch and streaming data processing

Azure Stream Analytics Pricing

Pay-as-you-go pricing, billed by the streaming units you provision.

Hitachi Pentaho - End-To-End Data Integration & Analytics Platform

Hitachi Pentaho offers a complete package of tools and functionalities to tackle the challenges posed by high-volume, high-velocity data streams, whether it's analyzing sensor data from IoT devices, monitoring social media feeds, or processing financial transactions in real time.

By blending batch and stream processing, Hitachi Pentaho combines real-time streaming data with historical data for holistic analyses and deeper insights into your operations. It uses advanced algorithms and machine learning models to detect fraudulent transactions as they occur, reducing the impact and financial losses associated with them.

Hitachi Pentaho offers a range of features to explore, visualize, and extract meaningful insights from streaming data. Its drag-and-drop interface makes it easy to design data pipelines, define transformations, and create real-time dashboards for monitoring key metrics.

Why Choose Hitachi Pentaho

- Robust data governance and security features

- Collaborative workflow and data pipeline management

- Scalable architecture for handling high-volume streaming data

- Advanced machine learning capabilities for real-time predictive analytics

Hitachi Pentaho Pricing

You can get in touch with the sales team for a custom quote.

Google Cloud Dataflow - Distributed Processing Capabilities

Google Cloud Dataflow, a managed service built on the Apache Beam programming model, is designed to handle the complexities of processing data in real time. Its flexible, scalable infrastructure lets data scientists and engineers focus on extracting meaningful insights from their data rather than on the intricacies of data streaming architecture.

Google Cloud Dataflow processes data at scale thanks to its distributed processing capabilities. It automatically parallelizes task execution to use resources efficiently and maintain fast processing even for large volumes of streaming data.

Google Cloud Dataflow handles failures by automatically retrying failed tasks, so that data is not lost or left unprocessed. Its tight integration with other Google Cloud services makes it easy to ingest data from sources such as Pub/Sub or Cloud Storage and to deliver real-time analytics results to downstream services like BigQuery or Looker Studio (formerly Data Studio).
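
Dataflow manages retries itself, so you would not normally write this code for a Dataflow job. The sketch below only illustrates the general retry-with-exponential-backoff pattern behind automatic retries; the function names, the four-attempt limit, and the 0.5-second base delay are assumptions, not Dataflow's actual retry policy.

```python
import time


def process_with_retries(process, element, max_attempts=4, base_delay_s=0.5, sleep=time.sleep):
    """Call process(element), retrying failures with exponential backoff.

    Delays grow as base_delay_s * 2**(attempt - 1). After max_attempts
    failures the last exception is re-raised so the caller can, for
    example, route the element to a dead-letter sink.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return process(element)
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(base_delay_s * 2 ** (attempt - 1))


# Usage: a hypothetical transform that fails twice, then succeeds.
calls = []
delays = []


def flaky_transform(x):
    calls.append(x)
    if len(calls) < 3:
        raise ConnectionError("transient failure")
    return x * 10


# Recording delays instead of sleeping keeps the example instant.
value = process_with_retries(flaky_transform, 7, sleep=delays.append)
```

Injecting the sleep function is a design choice that makes the backoff schedule easy to test without real waiting.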

Why Choose Google Cloud Dataflow

- Cost optimization through a pay-per-use pricing model

- Comprehensive monitoring and debugging capabilities

- Dynamic pipeline updates for real-time adjustments and enhancements

- Advanced windowing and event-time processing for complex streaming scenarios

Google Cloud Dataflow Pricing

Usage-based, pay-as-you-go pricing: jobs are billed per second for the worker resources (vCPU, memory, and storage) they consume.

IBM Streams - Highly Fault-Tolerant Streaming Data Architecture

IBM Streams has a highly scalable and fault-tolerant streaming data architecture for handling peak data ingestion loads so that no data is lost or delayed during the streaming process. With its advanced stream processing capabilities, you can apply complex analytical algorithms and business rules to streaming data.

IBM Streams supports a wide range of data types and formats, so you can work with structured, unstructured, and semi-structured data. It integrates with existing internal IT systems to capture and process data from various sources in real time.

IBM Streams’ user-friendly development environment enables data scientists and developers to quickly build and deploy sophisticated stream processing applications and reduce the time to market for new data-driven solutions. It also offers advanced monitoring and management capabilities to monitor the health and performance of the streaming applications and make necessary adjustments in real time.

Why Choose IBM Streams

- Support for multi-cloud and hybrid cloud environments

- Real-time data visualization and monitoring capabilities

- Advanced data analytics and machine learning integration

- Efficient handling of high-volume and high-velocity data streams

- Comprehensive management and operational tools for streamlined administration

IBM Streams Pricing

You can request a custom quote for their pay-as-you-go pricing model.

Striim Cloud - 99.5% Uptime Guarantee

Striim Cloud integrates data from diverse sources, including databases, log files, messaging systems, and more. Its real-time ingestion captures and delivers data with minimal delay, and the service is backed by a 99.5% uptime guarantee.
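
An uptime percentage is easier to evaluate as a downtime budget. As a rough worked example, assuming a 30-day month and ignoring how Striim's SLA actually defines its measurement window and exclusions (not covered here), the arithmetic looks like this:

```python
def allowed_downtime_hours(uptime_pct, period_hours):
    """Maximum downtime permitted by an uptime percentage over a period."""
    return (1 - uptime_pct / 100) * period_hours


HOURS_IN_30_DAY_MONTH = 30 * 24  # assumption: a 30-day measurement window
HOURS_IN_YEAR = 365 * 24

monthly = allowed_downtime_hours(99.5, HOURS_IN_30_DAY_MONTH)  # about 3.6 hours
yearly = allowed_downtime_hours(99.5, HOURS_IN_YEAR)           # about 43.8 hours
```

In other words, 99.5% uptime still allows roughly 3.6 hours of downtime per month, which is worth weighing against your pipeline's tolerance for gaps.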

Striim Cloud's stream processing engine applies complex transformations and enrichments to your data streams in real time. With support for SQL-like queries and a rich set of functions and operators, you can manipulate and aggregate data on the fly and surface insights quickly.

Whether it's performing real-time aggregations, identifying patterns and anomalies, or detecting fraud as it occurs, Striim Cloud's real-time analytics capabilities help you respond swiftly to changing conditions. It can easily integrate with popular cloud platforms, like Amazon Web Services (AWS) and Microsoft Azure.

Why Choose Striim Cloud

- Real-time monitoring and alerting

- Data security and compliance features

- Extensive integration ecosystem and APIs

- Comprehensive support for diverse data sources

- Intelligent data transformation and enrichment capabilities

Striim Cloud Pricing

- Striim Developer (Free): For 10 million events/month

- Data Product Solutions ($4,400/month): For 100 million events/month

- Striim Cloud Enterprise ($4,400/month): For 100 million events/month

Apache Spark - Most Widely Used Engine For Scalable Computing

Apache Spark is known for its performance. Its core engine delivers fast processing for large-scale data analysis, and its Structured Streaming API processes streaming data as a series of small micro-batches for near-real-time results. Spark uses in-memory computing and advanced optimization techniques to reduce data access latency and minimize disk I/O.

Spark has an extensive ecosystem of libraries and APIs that give data scientists and analysts a wide range of tools. Whether it's machine learning, graph processing, or SQL querying, Spark provides specialized libraries such as MLlib, GraphX, and Spark SQL.

Apache Spark benefits from a thriving and vibrant open-source community that actively contributes to its development, improvement, and support. With a large number of developers, data scientists, and domain experts constantly working to enhance Spark's features and performance, you can depend on this active ecosystem for troubleshooting, knowledge sharing, and continuous innovation.

Why Choose Apache Spark

- Unified data processing engine for batch, streaming, and interactive analytics

- Active support for real-time data streaming with low latency and high throughput

- Advanced analytics capabilities, including machine learning and graph processing

- Support for multiple programming languages, including Java, Scala, Python, and R

- Integration with popular big data tools and frameworks, such as Hadoop and Apache Kafka

Apache Spark Pricing

Free to use.

StreamSets - Best For End-To-End Data Integrity & Reliability

With its highly efficient parallel processing and distributed architecture, StreamSets handles large volumes of stream data with ease. The platform prioritizes end-to-end data integrity and reliability and provides a range of built-in mechanisms to ensure the accuracy and consistency of stream data. Its comprehensive error handling and recovery mechanisms help ensure that data quality is never compromised throughout the streaming process.

From popular databases and cloud storage services to message queues and IoT devices, StreamSets provides pre-built connectors and adapters for hassle-free data ingestion and integration across different environments. It also offers monitoring dashboards, alerting mechanisms, and detailed performance metrics that give you insight into pipeline health, latency, and data quality.

Why Choose StreamSets

- Robust data lineage and auditing capabilities

- Integration with popular cloud platforms and data lakes

- Supports data drift detection and automated schema evolution

- Built-in data masking and encryption for enhanced data security
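
StreamSets detects drift inside its pipelines; the Python below is only a generic sketch of what drift detection and naive schema evolution involve, not StreamSets' API. It assumes an expected schema expressed as a mapping from field names to Python types, and the sample record is hypothetical.

```python
def detect_drift(expected, record):
    """Compare one record's shape with the expected schema (field name -> Python type)."""
    shared = set(expected) & set(record)
    return {
        "added": sorted(set(record) - set(expected)),    # new, unexpected fields
        "missing": sorted(set(expected) - set(record)),  # expected fields that vanished
        "retyped": sorted(f for f in shared if not isinstance(record[f], expected[f])),
    }


def evolve_schema(expected, record):
    """Naive automated evolution: adopt newly seen fields with their observed types."""
    evolved = dict(expected)
    for field in set(record) - set(expected):
        evolved[field] = type(record[field])
    return evolved


expected = {"id": int, "email": str}
record = {"id": "42", "email": "a@example.com", "phone": "555-0100"}  # id arrives as a string

drift = detect_drift(expected, record)
# {'added': ['phone'], 'missing': [], 'retyped': ['id']}
schema_v2 = evolve_schema(expected, record)
```

The sketch deliberately evolves only newly added fields: a changed type (here, id arriving as a string) is reported but not adopted automatically, since silently widening a type can break downstream consumers.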

StreamSets Pricing

Custom pricing plans are available on demand.

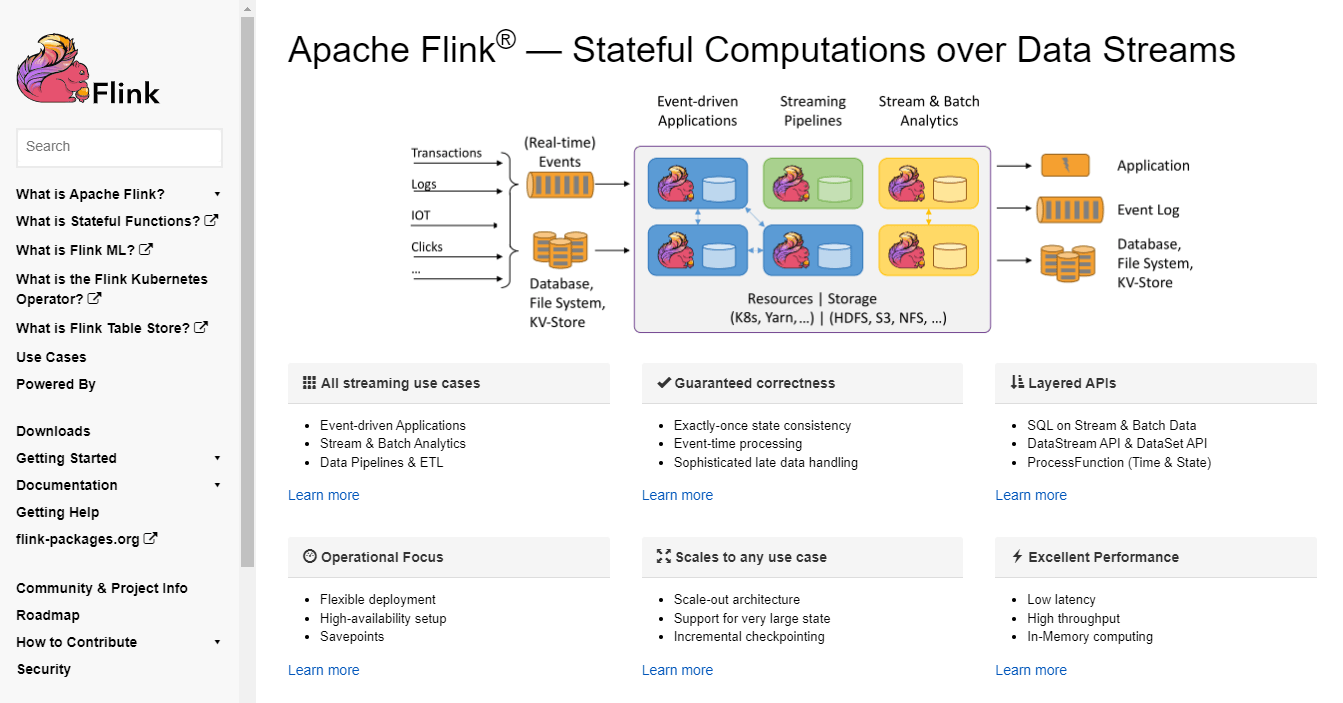

Apache Flink - Ideal For Maintaining Large Stateful Computations

Apache Flink has changed the way real-time data streams are handled. It periodically takes distributed snapshots (checkpoints) of application state, so after a crash or network partition a job can restore a consistent state and resume processing.

With its advanced event time processing, it handles out-of-order events and late arrivals to accommodate real-world scenarios where data arrival may not align with event timestamps. Flink's windowing capabilities enable efficient processing of time-based aggregations for precise insights into streaming data.
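
The interplay between event time, watermarks, and late data is easiest to see in a toy model. The Python sketch below is not Flink's API (in Flink's Java API you would typically configure this through WatermarkStrategy.forBoundedOutOfOrderness). It uses a simplified watermark, the maximum event time seen minus an assumed maximum delay, and counts events per 10-unit tumbling window; the window size, the delay, and the choice to merely record late events are assumptions.

```python
from collections import defaultdict


class EventTimeWindower:
    """Counts events per tumbling event-time window, closed by a watermark.

    Simplified watermark: max event time seen so far minus max_delay.
    A window [start, start + window_size) is emitted once the watermark
    reaches its end. Events for a window that is already closed are
    recorded as late instead of being counted.
    """

    def __init__(self, window_size, max_delay):
        self.window_size = window_size
        self.max_delay = max_delay
        self.max_seen = None
        self.open_windows = defaultdict(int)  # window start -> event count
        self.emitted = []                     # (window start, count), in emit order
        self.late = []                        # event times that arrived too late

    def watermark(self):
        if self.max_seen is None:
            return None
        return self.max_seen - self.max_delay

    def on_event(self, event_time):
        start = event_time - event_time % self.window_size
        wm = self.watermark()
        if wm is not None and start + self.window_size <= wm:
            self.late.append(event_time)  # its window has already closed
            return
        self.open_windows[start] += 1
        if self.max_seen is None or event_time > self.max_seen:
            self.max_seen = event_time
        self._fire_ready_windows()

    def _fire_ready_windows(self):
        wm = self.watermark()
        for start in sorted(self.open_windows):
            if start + self.window_size <= wm:
                self.emitted.append((start, self.open_windows.pop(start)))


w = EventTimeWindower(window_size=10, max_delay=5)
for t in [1, 7, 12, 9, 16, 3, 25]:
    w.on_event(t)
```

Event 9 arrives after 12 but is still counted because the watermark (7) has not yet passed the end of window [0, 10). Event 3 arrives after the watermark has advanced to 11, so that window has already been emitted and the event is flagged as late. A real engine could instead drop such events, send them to a side output, or update results within an allowed lateness.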

Apache Flink simplifies the complexities of state management. It provides fault-tolerant, scalable, and low-latency state storage options, enabling applications to maintain large stateful computations efficiently. It offers a wide range of connectors, libraries, and integrations.

Why Choose Apache Flink

- Extensive support for complex event processing (CEP)

- Streamlined batch and stream processing in a single platform

- Advanced data watermarking and handling of late data arrivals

- Built-in support for machine learning algorithms and model serving

- Native support for processing both bounded and unbounded data streams

Apache Flink Pricing

Free to use.

Apache Storm - Fine-Grained Parallelism

Apache Storm can power complex event processing (CEP), making it a strong choice for applications that require sophisticated event pattern recognition and rule-based analysis. Storm-based CEP applications can detect intricate event correlations, identify anomalies, and trigger immediate actions based on predefined rules.

Where data reliability is paramount, Apache Storm offers strong processing guarantees. Its built-in reliability mechanisms, message acknowledgment and tuple anchoring, provide at-least-once processing: tuples that fail or time out are replayed rather than lost.
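
The replay idea behind Storm's acking can be modeled in a few lines. The Python below is a toy, in-memory sketch, not Storm's API: the class and function names and the simulated crash are hypothetical, though the methods loosely mirror the roles of the nextTuple, ack, and fail methods on a Storm spout.

```python
from collections import deque


class ReplayingSpout:
    """Toy model of at-least-once delivery via acknowledgments.

    Each emitted tuple stays in `pending` until it is acked; a failed
    tuple is put back on the queue and emitted again later.
    """

    def __init__(self, messages):
        self.queue = deque(enumerate(messages))  # (msg_id, payload)
        self.pending = {}

    def next_tuple(self):
        if not self.queue:
            return None
        msg_id, payload = self.queue.popleft()
        self.pending[msg_id] = payload
        return msg_id, payload

    def ack(self, msg_id):
        self.pending.pop(msg_id)

    def fail(self, msg_id):
        self.queue.append((msg_id, self.pending.pop(msg_id)))


def run(spout, bolt):
    """Drive the spout until every tuple has been processed successfully."""
    processed = []
    while (item := spout.next_tuple()) is not None:
        msg_id, payload = item
        try:
            processed.append(bolt(payload))
            spout.ack(msg_id)
        except Exception:
            spout.fail(msg_id)
    return processed


attempts = {}


def flaky_bolt(payload):
    attempts[payload] = attempts.get(payload, 0) + 1
    if payload == "b" and attempts[payload] == 1:
        raise RuntimeError("simulated worker crash")
    return payload.upper()


spout = ReplayingSpout(["a", "b", "c"])
out = run(spout, flaky_bolt)  # "b" is replayed after "c"
```

Because a failed tuple is simply emitted again, any side effects from its first attempt could repeat; that is why this model, like Storm's core acking, gives at-least-once rather than exactly-once processing (Storm's Trident API is the layer aimed at exactly-once state updates).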

Apache Storm's architecture is designed for seamless scalability, letting you handle large volumes of data and scale processing resources dynamically. Its fine-grained parallelism lets you distribute data processing tasks efficiently across multiple worker nodes.

Why Choose Apache Storm

- Language flexibility with support for multiple programming languages

- Dynamic fault tolerance for uninterrupted operation even in the face of failures

- Low-latency real-time data processing for immediate insights and decision-making

- Simplified end-to-end data pipeline construction through seamless integration with diverse data sources and frameworks

Apache Storm Pricing

Free to use.

Conclusion

Data streaming technologies have enormous transformative potential, and you can tap the full power of real-time data only if you embrace them. Monitoring changing trends, detecting anomalies, and responding swiftly to critical events put you ahead in a data-driven market.

Remember, the key lies not only in the selection of the right data streaming technology but also in its perfect integration with existing infrastructure. Enter Estuary, a revolutionary data streaming solution designed to seamlessly merge with your organization's systems.

For organizations looking for efficient and reliable ways to process and stream data, Estuary Flow stands out. It continuously analyzes data streaming patterns, identifies bottlenecks, and automatically optimizes the data processing pipeline for better performance. This intelligent performance optimization minimizes latency, improves throughput, and keeps data streams processing quickly and efficiently.

Take the first step towards transforming your data streaming capabilities with Estuary Flow. Sign up for a free account today and witness the remarkable difference it can make in your data-driven workflows.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing offers insights to help companies scale efficiently and effectively in an evolving data landscape.
