A strong data quality foundation is not a luxury; it is a necessity – one that empowers businesses, governments, and individuals to make informed choices that propel them forward. Can you imagine a world where decisions are made on a shaky foundation? Where information is riddled with inaccuracies, incomplete fragments, and discrepancies?

This is where data quality emerges as the champion of order, clarity, and excellence. However, without a clear understanding of what constitutes high-quality data, you will struggle to identify and address the underlying issues that compromise data integrity.

To help you navigate the complexities of data management and drive better outcomes, we will look into different dimensions of data quality, commonly used data standards in the industry, and real-life case studies. By the end of this 8-minute guide, you'll have a solid grasp of what data quality is and how it can help your business.

What Is Data Quality?

Data quality refers to the overall accuracy, completeness, consistency, reliability, and relevance of data in a given context. It is a measure of how well data meets the requirements and expectations for its intended use.

So why do we care about data quality?

Here are a few reasons:

- Optimizing Business Processes: Reliable data helps pinpoint operational inefficiencies.

- Better Decision-Making: Quality data underpins effective decision-making and gives a significant competitive advantage.

- Boosting Customer Satisfaction: It provides a deeper understanding of target customers and helps tailor your products and services to better meet their needs and preferences.

Enhancing Data Quality: Exploring 8 Key Dimensions For Reliable & Valuable Data

The success of any organization hinges on its ability to harness reliable, accurate, and valuable data. To unlock the full potential of data, it is important to understand the key dimensions that define its quality. Let's discuss this in more detail:

Accuracy

Accuracy is the degree to which your data mirrors real-world situations and aligns with trustworthy references. The fine line between facts and fallacy is crucial in every data analysis. If your data is accurate, the real-world entities it represents can function as anticipated.

For instance, correct employee phone numbers ensure seamless communication. On the flip side, inaccurate data, such as an incorrect date of joining, could deprive employees of certain privileges.

The crux of ensuring data accuracy lies in verification, using credible sources, and direct testing. Industries with stringent regulations, like healthcare and finance, particularly depend on high data accuracy for reliable operations and outcomes.

You can verify and compare data in two main ways:

- Reference Source Comparison: This method checks the accuracy by comparing actual data with a reference or standard values from a reliable source.

- Physical Verification: This involves matching data with physical objects or observations, like comparing the items listed on your grocery bill to what's actually in your cart.
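To make reference source comparison concrete, here is a minimal Python sketch. It assumes records are plain dictionaries and that a trusted reference dataset exists in the same shape; the function name `accuracy_rate`, the `employee_id` key, and the sample values are all hypothetical.

```python
def accuracy_rate(records, reference, key, field):
    """Share of records whose `field` matches the trusted reference value."""
    ref_by_key = {row[key]: row[field] for row in reference}
    # Only records that also exist in the reference can be checked.
    checked = [r for r in records if r[key] in ref_by_key]
    if not checked:
        return 0.0
    matches = sum(1 for r in checked if r[field] == ref_by_key[r[key]])
    return matches / len(checked)


# Hypothetical sample data: an HR system vs. a verified reference list.
hr_records = [
    {"employee_id": 1, "phone": "555-0100"},
    {"employee_id": 2, "phone": "555-0199"},  # differs from the reference
]
verified = [
    {"employee_id": 1, "phone": "555-0100"},
    {"employee_id": 2, "phone": "555-0101"},
]

phone_accuracy = accuracy_rate(hr_records, verified, "employee_id", "phone")
```

In this sample one of the two phone numbers matches the reference, so the rate is 0.5. A real check would also need to decide how to treat records that are missing from the reference entirely.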

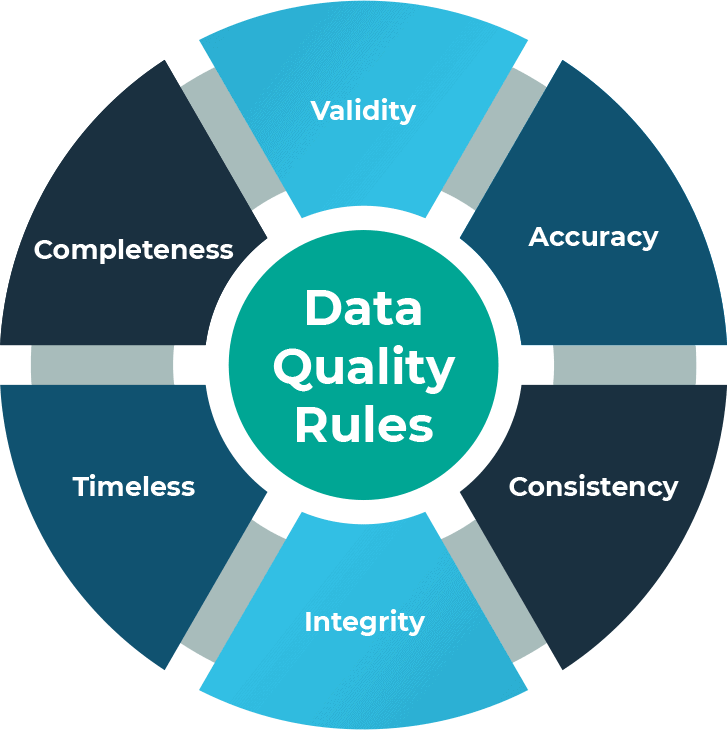

Completeness

Data completeness is a measure of how much essential data you have. It's all about assessing whether all necessary values in your data are present and accounted for.

Think of it this way: in customer data, completeness is whether you have enough details for effective engagement. An example is a customer address – even if an optional landmark attribute is missing, the data can still be considered complete.

Similarly, for products or services, completeness indicates important features that help customers make informed decisions. If a product description lacks delivery estimates, it's not complete. Completeness gauges if the data provides enough insight to make valuable conclusions and decisions.
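A completeness check can be sketched in a few lines of Python. The example below assumes a hypothetical address record where `street`, `city`, and `postal_code` are required and `landmark` is optional; which fields count as required is a business decision, not something this sketch can settle.

```python
REQUIRED_FIELDS = ("street", "city", "postal_code")  # assumed mandatory
# "landmark" is treated as optional and never counts against completeness.


def is_complete(record, required=REQUIRED_FIELDS):
    """True when every required field is present and non-empty."""
    return all(str(record.get(name) or "").strip() for name in required)


def completeness_rate(records, required=REQUIRED_FIELDS):
    """Fraction of records that have all required fields filled in."""
    if not records:
        return 0.0
    return sum(is_complete(r, required) for r in records) / len(records)


addresses = [
    {"street": "1 Main St", "city": "Springfield", "postal_code": "12345"},
    {"street": "2 Oak Ave", "city": "", "postal_code": "67890", "landmark": "Near park"},
]
address_completeness = completeness_rate(addresses)
```

The first address counts as complete even without a landmark, while the second fails because its city is blank.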

Consistency

Data consistency is whether the same data, stored in different places or used in different instances, aligns. It's basically the synchronization between multiple data records. While it might be a bit tricky to gauge, it's a vital sign of high-quality data.

Think about 2 systems using patients’ phone numbers; even though the formatting differs, if the core information remains the same, you have consistent data. But if the fundamental data itself varies, say a patient's date of birth differs across records, you'll need another source to verify the inconsistent data.
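The phone number case can be illustrated with a short Python sketch: strip the formatting first, then compare what is left. The helper names are hypothetical, and the sketch deliberately ignores country codes and extensions, which a real comparison would need to handle.

```python
import re


def normalize_phone(raw):
    """Strip formatting so only the digits are compared."""
    return re.sub(r"\D", "", raw)


def phones_consistent(a, b):
    """Two values are consistent when their digits match, whatever the format."""
    return normalize_phone(a) == normalize_phone(b)


same_number = phones_consistent("(555) 010-0123", "555.010.0123")
different_number = phones_consistent("(555) 010-0123", "555-010-9999")
```

Here `same_number` is true because only the formatting differs, while `different_number` is false because the underlying digits disagree.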

Uniqueness

The uniqueness of data is the absence of duplication or overlaps within or across data sets. It's the assurance that each instance recorded in a data set is unique. A high uniqueness score builds trusted data and reliable analysis.

It's a highly important component of data quality that helps in both offensive and defensive strategies for customer engagement. While it may seem like a tedious task, maintaining uniqueness becomes achievable by actively identifying overlaps and promptly cleaning up duplicated records.
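One simple way to put a number on uniqueness is to divide distinct keys by total records. The Python sketch below assumes that a normalized email address identifies a customer, which is an illustrative choice; real matching often needs fuzzier rules.

```python
from collections import Counter


def _key(record, key_fields):
    """Normalized tuple of the fields that identify a record."""
    return tuple(str(record[f]).strip().lower() for f in key_fields)


def uniqueness_score(records, key_fields):
    """Distinct key combinations divided by total records (1.0 = no duplicates)."""
    if not records:
        return 1.0
    keys = [_key(r, key_fields) for r in records]
    return len(set(keys)) / len(keys)


def duplicate_keys(records, key_fields):
    """Key combinations that appear more than once."""
    counts = Counter(_key(r, key_fields) for r in records)
    return [k for k, count in counts.items() if count > 1]


customers = [
    {"email": "ana@example.com", "name": "Ana"},
    {"email": "Ana@Example.com ", "name": "Ana P."},  # same person, different casing
    {"email": "raj@example.com", "name": "Raj"},
]
score = uniqueness_score(customers, ["email"])
dupes = duplicate_keys(customers, ["email"])
```

With two distinct emails across three records, the score is two thirds, and `dupes` points at the key that needs cleanup.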

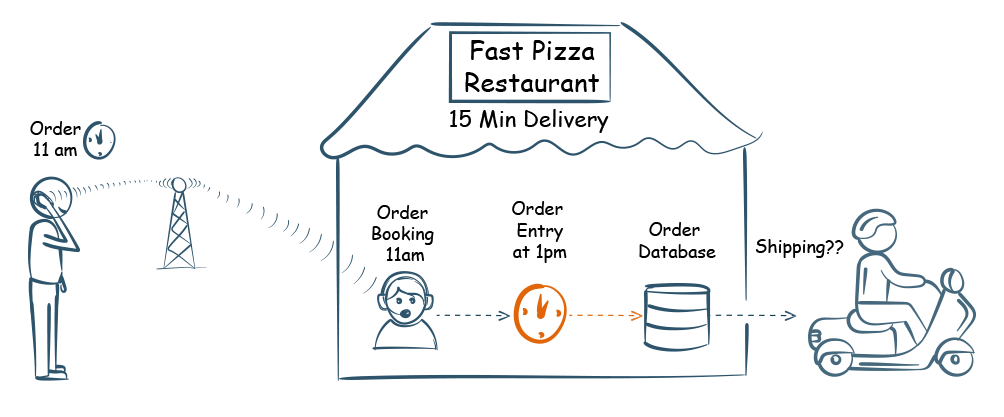

Timeliness

Timeliness measures whether data is readily available and up to date when it is needed. It is a user expectation; if your data isn't ready when required, it falls short of meeting the timeliness dimension.

Timeliness is about reducing latency and ensuring that the correct data reaches the right people at the right time. A general rule of thumb here is that the fresher the data, the more likely it is to be accurate.
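Latency is easy to check once you record both when something happened and when the data became available. The sketch below is a minimal Python example; the timestamps and the five-minute limit are made-up values, and the right threshold depends on what your users expect.

```python
from datetime import datetime, timedelta


def is_timely(event_time, available_time, max_latency):
    """True when data became available within the allowed latency."""
    return (available_time - event_time) <= max_latency


# Hypothetical timestamps for a single order.
order_placed = datetime(2024, 1, 1, 12, 0, 0)
loaded_into_warehouse = datetime(2024, 1, 1, 12, 4, 0)

within_limit = is_timely(order_placed, loaded_into_warehouse, timedelta(minutes=5))
too_slow = is_timely(order_placed, loaded_into_warehouse, timedelta(minutes=1))
```

The same four-minute delay passes a five-minute limit and fails a one-minute limit, which is why timeliness only makes sense relative to a stated requirement.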

Validity

Validity is the extent to which data aligns with the predefined business rules and falls within acceptable formats and ranges. It's about whether the data is correct and acceptable for its intended use. For instance, a ZIP code is valid if it contains the right number of characters for a specific region.

The implementation of business rules helps evaluate data validity. While invalid data can impede data completeness, setting rules to manage or eliminate this data enhances completeness.
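Business rules like these translate naturally into small validation functions. The Python sketch below assumes two illustrative rules: a US-style ZIP code (five digits, optionally followed by a four-digit extension) and an age between 0 and 120. Both rules and the field names are assumptions for the example.

```python
import re

# Assumed rules: a US-style ZIP code (5 digits, optional +4) and a plausible age range.
ZIP_PATTERN = re.compile(r"\d{5}(-\d{4})?")


def validate(record):
    """Return a list of rule violations; an empty list means the record is valid."""
    errors = []
    if not ZIP_PATTERN.fullmatch(record.get("zip", "")):
        errors.append("zip: expected 5 digits or ZIP+4")
    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        errors.append("age: expected an integer between 0 and 120")
    return errors


valid_errors = validate({"zip": "12345-6789", "age": 42})
invalid_errors = validate({"zip": "1234", "age": 130})
```

Returning the list of violations, rather than a bare true or false, makes it easier to decide whether to fix, quarantine, or drop an invalid record.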

Currency

In the context of data quality, currency is how up-to-date your data is. Data should accurately reflect the real-world scenario it represents. For instance, if you once had the right information about an IT asset but it was subsequently modified or relocated, the data is no longer current and needs an update.

These updates can be manual or automatic and occur as needed or at scheduled intervals, all based on your organization's requirements.
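A scheduled currency check can be as simple as flagging records that have not been verified recently. This Python sketch assumes each record carries a hypothetical `last_verified` timestamp and uses an arbitrary 90-day limit.

```python
from datetime import datetime, timedelta


def stale_records(records, now, max_age):
    """Records whose last verification is older than the allowed age."""
    return [r for r in records if now - r["last_verified"] > max_age]


# Hypothetical IT asset inventory.
assets = [
    {"asset": "laptop-01", "last_verified": datetime(2024, 5, 1)},
    {"asset": "server-07", "last_verified": datetime(2023, 1, 15)},
]
needs_refresh = stale_records(assets, now=datetime(2024, 6, 1), max_age=timedelta(days=90))
```

Only `server-07` is flagged here, since it was last verified well over 90 days before the check date.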

Integrity

Integrity refers to the preservation of attribute relationships as data moves through various systems. To ensure accuracy, these connections must stay consistent, forming an unbroken trail of traceable information. If these relationships are damaged along the way, the result can be incomplete or invalid data.
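One common integrity check is looking for orphaned rows, meaning records that point at a parent that no longer exists. The Python sketch below assumes hypothetical `orders` and `customers` tables linked by `customer_id`.

```python
def orphaned_rows(child_rows, parent_rows, foreign_key, parent_key):
    """Child rows whose foreign key has no matching parent row."""
    parent_ids = {p[parent_key] for p in parent_rows}
    return [c for c in child_rows if c[foreign_key] not in parent_ids]


customers = [{"customer_id": 1}, {"customer_id": 2}]
orders = [
    {"order_id": "A", "customer_id": 1},
    {"order_id": "B", "customer_id": 3},  # customer 3 is missing downstream
]
orphans = orphaned_rows(orders, customers, "customer_id", "customer_id")
```

Order `B` is reported because its customer never arrived in the target system, which is exactly the kind of broken relationship this dimension is about.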

Building upon the foundation of the data quality dimensions, let's discuss data quality standards and see how you can use them for data quality assessments and improve your organization’s data quality.

Understanding 6 Data Quality Standards

Here are 6 prominent data quality standards and frameworks.

ISO 8000

When it comes to data quality, ISO 8000 is considered the international benchmark. This series of standards is developed by the International Organization for Standardization (ISO) and provides a comprehensive framework for enhancing data quality across various dimensions.

Here's a quick rundown:

- Applicability: The best thing about ISO 8000 is its universal applicability. It is designed for all organizations, regardless of size or type, and is relevant at every point in the data supply chain.

- Benefits of Implementation: Adopting ISO 8000 brings significant benefits. It provides a solid foundation for digital transformation, promotes trust through evidence-based data processing, and enhances data portability and interoperability.

- Data Quality Dimensions: ISO 8000 emphasizes that data quality isn't an abstract concept. It's about how well data characteristics conform to specific requirements. This means data can be high quality for one purpose but not for another, depending on the requirements.

- Data Governance & Management: The ISO 8000 series addresses critical aspects of data governance, data quality management, and maturity assessment. It guides organizations in creating and applying data requirements, monitoring and measuring data quality, and making improvements.

Total Data Quality Management (TDQM)

Total Data Quality Management (TDQM) is a strategic approach to ensuring high-quality data. It's about managing data quality end-to-end, from creation to consumption. Here's what you need to know:

- Continuous Improvement: TDQM adopts a continuous improvement mindset. It's not a one-time effort but an ongoing commitment to enhancing data quality.

- Root Cause Analysis: A key feature of TDQM is its focus on understanding the root causes of data quality issues. It's not just about fixing data quality problems; it's about preventing them from recurring.

- Organizational Impact: Implementing TDQM can have far-reaching benefits. It boosts operational efficiency, enhances decision-making, improves customer satisfaction, and ultimately drives business success.

- Comprehensive Coverage: TDQM covers all aspects of data processing, including creation, collection, storage, maintenance, transfer, utilization, and presentation. It's about ensuring quality at every step of the data lifecycle.

Six Sigma

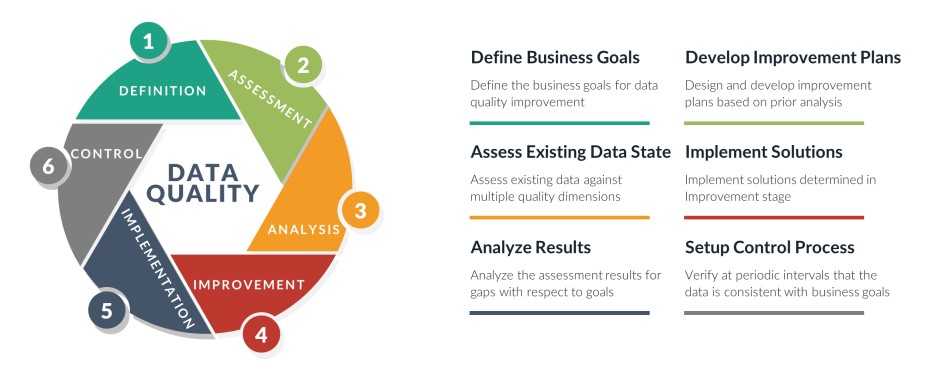

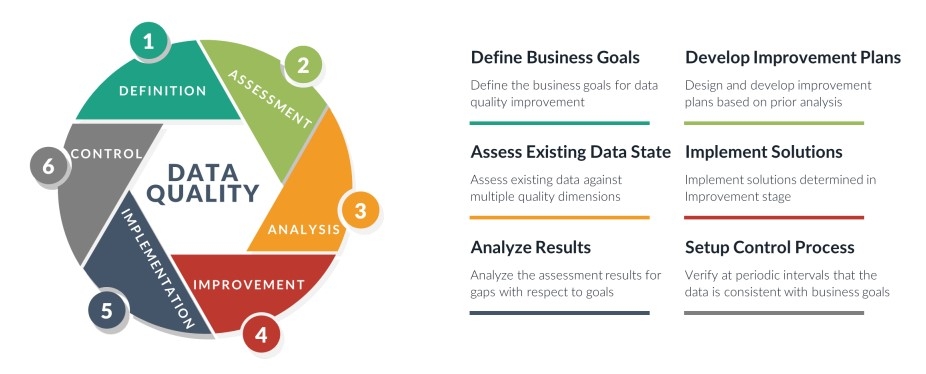

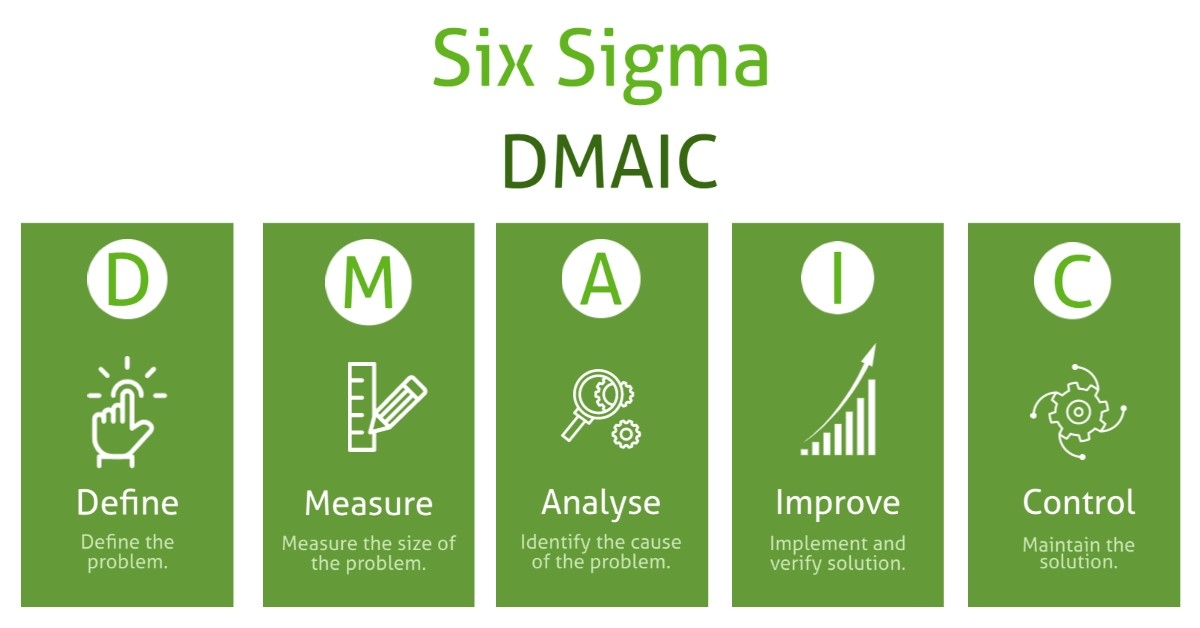

Six Sigma is a well-established methodology for process improvement. It is a powerful tool for enhancing data quality and uses the DMAIC (Define, Measure, Analyze, Improve, Control) model to systematically address data quality issues. The DMAIC phases are:

- Define: Start by identifying the focus area for the data quality improvement process. Understand your data consumers and their needs, and set clear data quality expectations.

- Measure: Assess the current state of data quality and existing data management practices. This step helps you understand where you stand and what needs to change.

- Analyze: Dive deeper into the issues surfaced in the Measure phase and identify their root causes. Design or redesign processes and applications to address data quality challenges.

- Improve: Develop and implement data quality improvement initiatives and solutions. This involves building new systems, implementing new processes, or even a one-time data cleanup.

- Control: Finally, ensure the improvements are sustainable. Monitor data quality continuously using relevant metrics to keep it at the desired level.
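For the Control phase, one way to monitor a data quality metric is a simple control-limit check: derive limits from a baseline period, then flag new measurements that fall outside them. The Python sketch below uses the conventional three standard deviations; the baseline values and the choice of metric (daily share of records failing validation) are assumptions for illustration.

```python
from statistics import mean, stdev


def control_limits(baseline, sigmas=3):
    """Lower and upper control limits from a baseline of past measurements."""
    center = mean(baseline)
    spread = stdev(baseline)
    # An error rate cannot be negative, so the lower limit is clamped at zero.
    return max(0.0, center - sigmas * spread), center + sigmas * spread


def out_of_control(value, baseline, sigmas=3):
    """True when a new measurement falls outside the control limits."""
    lower, upper = control_limits(baseline, sigmas)
    return not (lower <= value <= upper)


# Hypothetical daily share of records failing validation (in percent).
daily_error_rates = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.0]

normal_day = out_of_control(1.1, daily_error_rates)
bad_day = out_of_control(4.0, daily_error_rates)
```

A reading of 1.1 stays inside the limits, while 4.0 is flagged for investigation. Seven data points is a very short baseline; a real control chart would use a longer history.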

DAMA DMBOK

The Data Management Body of Knowledge (DAMA DMBOK) framework is an extensive guide that provides a blueprint for effective data management, including master data management (MDM). Here's how it can help your organization elevate its data quality.

- Emphasis on Data Governance: The framework highlights data governance in ensuring data quality and promotes consistent data handling across the organization.

- Structured Approach to Data Management: The framework offers a systematic way to manage data, aligning data management initiatives with business strategies to enhance data quality.

- The Role of Data Quality in Decision-Making: DAMA DMBOK underscores the importance of high-quality data in making informed business decisions and achieving organizational goals.

- Adaptability of the DAMA DMBOK Framework: DAMA DMBOK is designed to be flexible so you can tailor its principles to your specific needs and implement the most relevant data quality measures.

Federal Information Processing Standards (FIPS)

Federal Information Processing Standards (FIPS) are a set of standards developed by the U.S. federal government for use in computer systems. Here's how they contribute to data quality:

- Compliance: For U.S. federal agencies and contractors, compliance with FIPS is mandatory. This ensures a minimum level of data quality and security.

- Global Impact: While FIPS are U.S. standards, their influence extends globally. Many organizations worldwide adopt these standards to enhance their data security and quality.

- Data Security: FIPS primarily focus on computer security and interoperability. They define specific requirements for encryption and hashing algorithms, ensuring the integrity and security of data.

- Standardization: FIPS promote consistency across different systems and processes. They provide a common language and set of procedures that can significantly enhance data quality.

IMF Data Quality Assessment Framework (DQAF)

The International Monetary Fund (IMF) has developed a robust tool known as the Data Quality Assessment Framework (DQAF). This framework enhances the quality of statistical systems, processes, and products.

It's built on the United Nations Fundamental Principles of Official Statistics and is widely used for assessing best practices, including internationally accepted methodologies. The DQAF is organized around prerequisites and 5 dimensions of data quality:

- Assurances of Integrity: This ensures objectivity in the collection, processing, and dissemination of statistics.

- Methodological Soundness: The statistics follow internationally accepted standards, guidelines, or good practices.

- Accuracy and Reliability: The source data and statistical techniques are sound and the statistical outputs accurately portray reality.

- Serviceability: The statistics are consistent, have adequate periodicity and timeliness, and follow a predictable revisions policy.

- Accessibility: Data and metadata are easily available and assistance to users is adequate.

Now that we are familiar with the data quality standards, let’s look at some real-world examples that will help you implement the best data quality practices within your organization.

3 Real-life Examples Of Data Quality Practices

Let's explore some examples and case studies that highlight the significance of good data quality and its impact in different sectors.

IKEA

IKEA Australia's loyalty program, IKEA Family, aimed to personalize communication with its members to build loyalty and engagement.

To understand and target its customers better, the company recognized the need for data enrichment, particularly regarding postal addresses. Manually entered addresses caused poor data quality with errors, incomplete data, and formatting issues, leading to a match rate of only 83%.

To address the challenges with data quality, IKEA ensured accurate data entry and improved data enrichment for their loyalty program. The solution streamlined the sign-up process and reduced errors and keystrokes.

The following are the outcomes:

- The enriched datasets led to a 7% increase in annual spending by members.

- The improvement in data quality enabled more targeted communications with customers.

- IKEA Australia's implementation of the solution resulted in a significant 12-percentage-point increase in their data quality match rate, rising from 83% to 95%.

- The system of validated address data minimized the risk of incorrect information entering IKEA Australia's Customer Relationship Management (CRM) system.
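The match-rate improvement can be read two ways, and the difference matters when you report it. This short Python calculation uses the 83% and 95% figures from the case study; the variable names are just for illustration.

```python
before, after = 83.0, 95.0  # match rates in percent, from the case study

point_gain = after - before                # absolute change, in percentage points
relative_gain = point_gain / before * 100  # change relative to the starting rate

summary = f"{point_gain:.0f} points, {relative_gain:.1f}% relative"
```

The match rate rose by 12 percentage points, which is roughly a 14.5% improvement relative to where it started.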

Hope Media Group (HMG)

Hope Media Group was dealing with a significant data quality issue because of the continuous influx of contacts from various sources, leading to numerous duplicate records. The transition to a centralized platform from multiple disparate solutions further highlighted the need for advanced data quality tools.

HMG implemented a data management strategy that involved scanning for thousands of duplicate records and creating a 'best record' for a single donor view in their CRM. They automated the process using rules to select the 'best record' and populate it with the best data from other duplicates. This saved review time and created a process for automatically reviewing duplicates. Ambiguous records were sent for further analysis and processing.

HMG has successfully identified, cleansed, and merged over 10,000 duplicate records so far, with the process still ongoing. As they expand their CRM to include more data capture sources, the need for their data management strategy is increasing. This approach allowed them to clean their legacy datasets.
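The 'best record' idea can be sketched in Python as follows. This is not HMG's actual rule set, which the case study does not spell out. It assumes duplicates share a normalized email, that the most recently updated record wins, and that gaps are filled from older duplicates; all field names are hypothetical.

```python
from collections import defaultdict


def merge_duplicates(records, match_key="email"):
    """Group records by a normalized key and build one 'best record' per group."""
    groups = defaultdict(list)
    for record in records:
        groups[record[match_key].strip().lower()].append(record)

    merged = []
    for group in groups.values():
        # Assumed rule: the most recently updated record wins.
        ranked = sorted(group, key=lambda r: r["updated"], reverse=True)
        best = dict(ranked[0])
        # Fill gaps in the best record from the older duplicates.
        for other in ranked[1:]:
            for field, value in other.items():
                if not best.get(field) and value:
                    best[field] = value
        merged.append(best)
    return merged


donors = [
    {"email": "kim@example.org", "phone": "", "city": "Austin", "updated": "2023-03-01"},
    {"email": "Kim@Example.org", "phone": "555-0142", "city": "", "updated": "2024-01-10"},
    {"email": "lee@example.org", "phone": "555-0177", "city": "Houston", "updated": "2022-11-05"},
]
single_view = merge_duplicates(donors)
```

The two Kim records collapse into one that keeps the newer phone number and inherits the city from the older entry. The sketch merges every match automatically; a production process would route ambiguous matches to manual review, as HMG did.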

Northern Michigan University

Northern Michigan University faced a data quality issue when they introduced a self-service technology for students to manage administrative tasks including address changes. This caused incorrect address data to be entered into the school's database.

The university implemented a real-time address verification system within the self-service technology. This system verified address information entered over the web and prompted users to provide missing address elements when an incomplete address was detected.

The real-time address verification system has given the university confidence in the usability of the address data students entered. The system validates addresses against official postal files in real time before a student submits them.

If an address is incomplete, the student is prompted to augment it, reducing the resources spent on manually researching undeliverable addresses and ensuring accurate and complete address data for all students.

Estuary Flow: Your Go-To Solution For Superior Data Quality

Estuary Flow is our no-code DataOps platform that offers real-time data ingestion, transformation, and replication functionality. It can greatly enhance the data quality in your organization. Let's take a look at how Flow plays a vital role in improving data quality.

Capturing Clean Data

Estuary Flow starts by capturing data from various sources like databases and applications. By using Change Data Capture (CDC), Estuary makes sure that it picks up only the fresh and latest data. This means you don't have to worry about your data getting outdated or duplicated. Built-in schema validation ensures that only clean data makes it through the pipeline.

Smart Transformations

Once the data is in the system, Estuary Flow gives you the tools to mold it just like you want. You can use streaming SQL and JavaScript to make changes to your data as it streams in.

Thorough Testing

A big part of maintaining data quality is checking for errors. Estuary has built-in testing that acts like a security guard for your data. It helps keep your data pipelines error-free and reliable. If something doesn't look right, Estuary will help you spot it before it becomes a problem, so corrupt data doesn't land in your destination system.

Keeping Everything Up-to-Date

Estuary Flow makes sure that your data is not just clean but stays clean. It does this by creating low-latency views of your data which are like live snapshots that are always up to date. This means you can trust your data to be consistent across different systems.

Bringing It All Together

Finally, Estuary helps you combine data from different sources to get a full picture. This ensures that you have all the information you need, in high quality, to make better decisions and provide better services.

Conclusion

Achieving high-quality data is an ongoing journey that requires continuous effort. However, when you acknowledge the importance of data quality and invest in improving it, you set yourself on a transformative path toward remarkable success.

By prioritizing data quality, you can make better decisions, drive innovation, and thrive in today's dynamic landscape. It's a journey worth taking as the rewards of trustworthy data are substantial and pave the way for significant positive changes within organizations.

Estuary Flow greatly boosts data quality. It offers real-time data transformations and automated schema management. Its flexible controls, versatile compatibility, and secure data sharing make it an ideal choice for top-notch data management. With Estuary, you're not just handling data, you're enhancing its quality and preparing it for real-time analytics.

If you are looking to abide by the data quality standards in your organization, Estuary Flow is an excellent place to start. Sign up for Flow to start for free and explore its many benefits, or contact our team to discuss your specific needs.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
