Imagine a world where data flows seamlessly, without human intervention or bottlenecks. A world where complex data tasks are executed effortlessly and insights are derived at lightning speed. This is the promise of a well-designed and properly implemented data pipeline automation.

While automating data pipelines is tempting, it’s not a one-size-fits-all solution. Understanding the different types of automated data pipelines and how they affect your operations is crucial to making the right choices for your business.

This comprehensive guide is designed to be your go-to resource for creating an automated data pipeline. We will discuss what data pipeline automation is and why your company needs it. We will also walk you through a step-by-step guide to creating your automated data pipeline.

By the end of this 8-minute power-packed guide, you’ll be well-equipped with the knowledge and insights to efficiently automate your data pipelines and lead your business toward enhanced productivity and resource optimization.

What Is An Automated Data Pipeline?

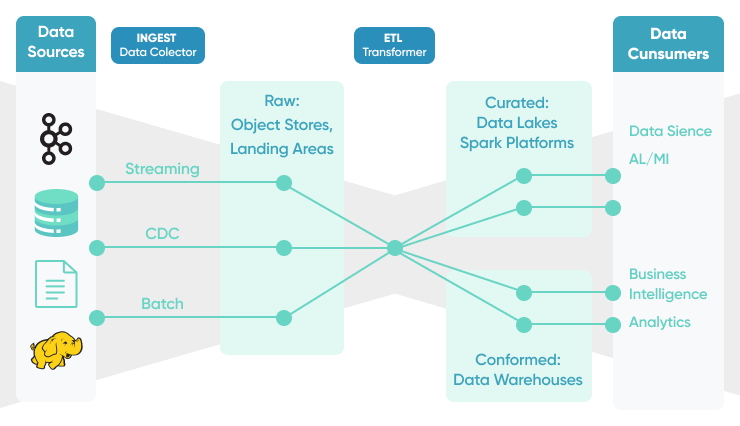

An automated data pipeline is an organized set of processes that takes data from various sources, refines it through transformation, and seamlessly loads it into a destination for further analysis or other uses. Simply put, it’s the backbone that ensures data flows efficiently from its origin to a destination while allowing organizations to derive insights and value from the data.

These pipelines can be highly sophisticated, dealing with massive volumes of data, or fairly simple depending on the use. They play an important role in data analytics, data science, and business intelligence.

Classification Of Automated Data Pipelines

Automated data pipelines can be classified based on several factors, like the timing of data processing, the location of data processing, and the order of operations. Let’s discuss the specifics of these classifications.

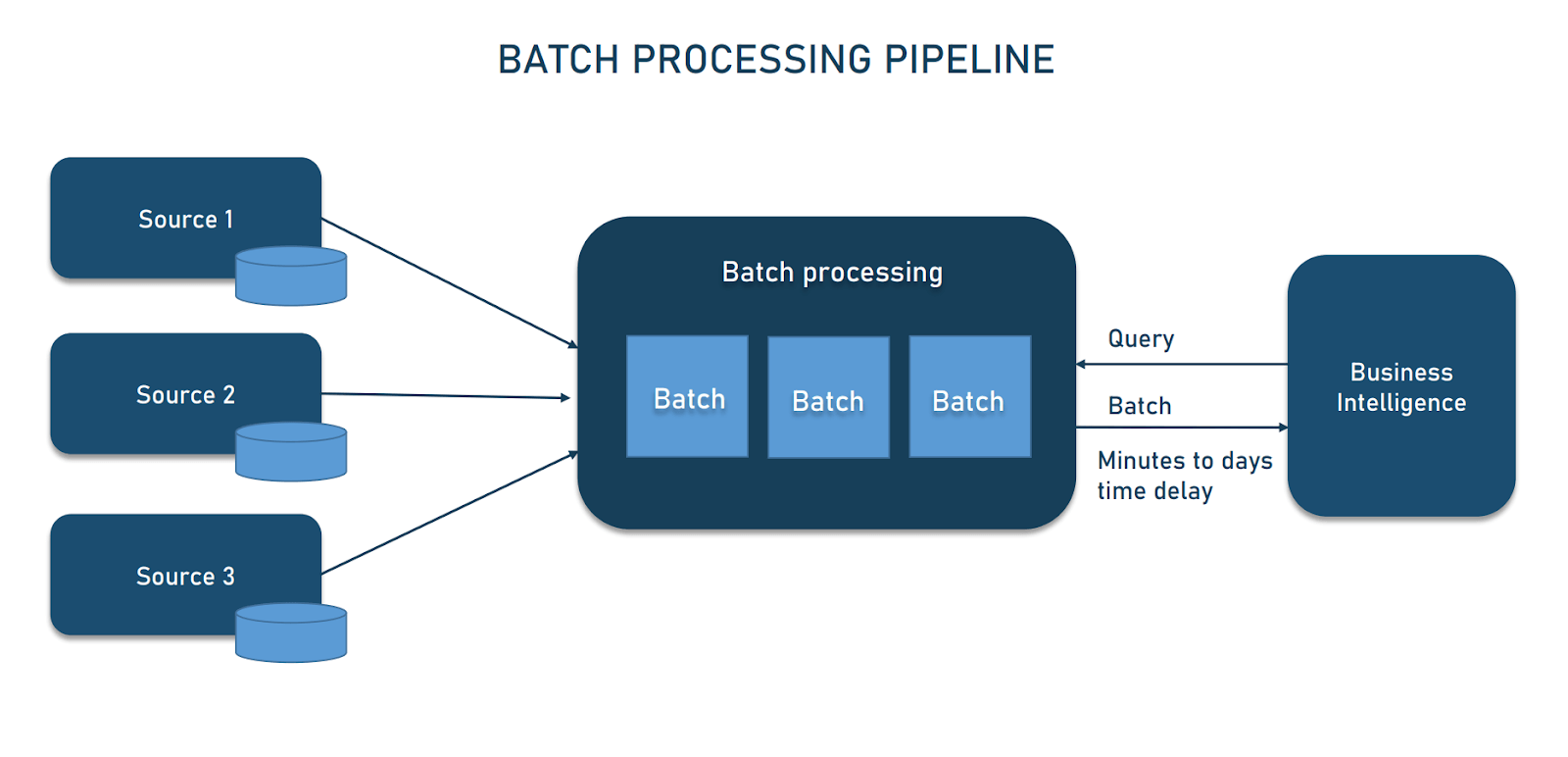

Batch Vs Real-Time Data Pipelines

Batch processing pipelines deal with data in large batches, collecting data over a period and processing it all at once. This type of pipeline is commonly used for historical data analysis and regular reporting.

On the other hand, real-time or streaming pipelines handle data as it arrives, in real-time or near real-time. This type is ideal for applications where immediate insights are critical, like monitoring systems or financial markets.
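To make the difference concrete, here is a minimal Python sketch. It assumes a small list of sensor readings stands in for the data source, and the function names are illustrative rather than taken from any particular tool. The batch function waits for the whole set and computes once, while the streaming function emits an updated result as each record arrives.

```python
from typing import Iterable, Iterator


def process_batch(readings: list[float]) -> float:
    """Batch: wait for the full set of records, then compute once."""
    return sum(readings) / len(readings)


def process_stream(readings: Iterable[float]) -> Iterator[float]:
    """Streaming: emit an updated running average as each record arrives."""
    total = 0.0
    for count, value in enumerate(readings, start=1):
        total += value
        yield total / count


readings = [10.0, 20.0, 30.0]  # hypothetical sensor values
batch_result = process_batch(readings)           # one result after all data is in
stream_results = list(process_stream(readings))  # one result per incoming record
```

In a real streaming pipeline the iterable would be an unbounded source such as a message queue, so the generator would never need the full dataset in memory.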

On-Premises Vs Cloud-Native Data Pipelines

On-premises pipelines are traditionally used in organizations where data is stored and processed within the local infrastructure. These pipelines are set up and maintained on local servers. They offer a high level of control over the data and its security as all the data remains within the organization's physical location.

Cloud-native pipelines, on the other hand, are built and operated in the cloud. They leverage the power of cloud computing to provide scalability and cost-efficiency. These pipelines are ideal for businesses that want to quickly scale their data operations up or down based on demand. They also eliminate the need for upfront hardware investment and ongoing maintenance costs.

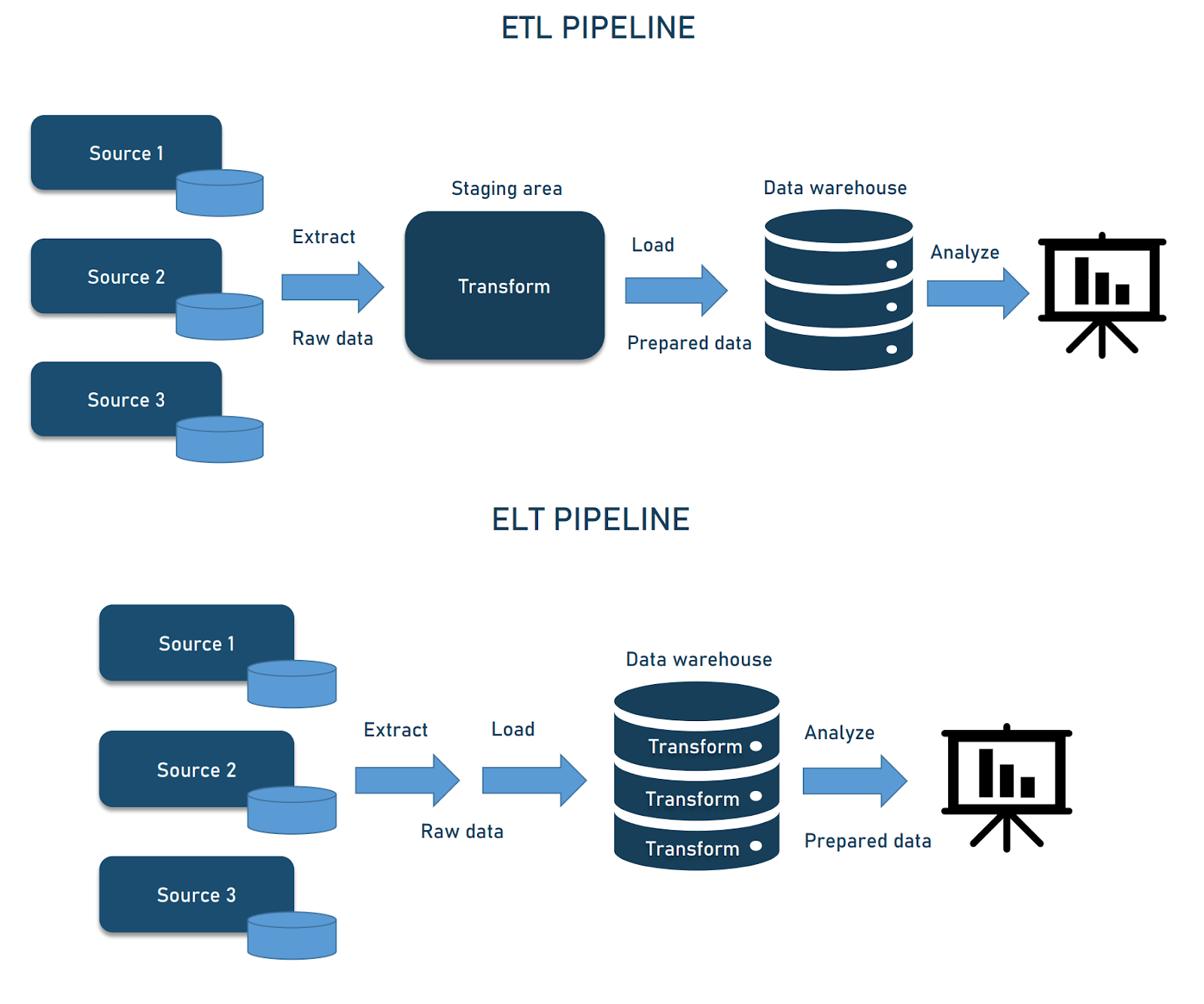

ETL (Extract, Transform, Load) Vs ELT (Extract, Load, Transform)

Lastly, there are ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) pipelines. An ETL pipeline involves extracting data from sources, transforming it into a uniform format, and then loading it into a destination system.

On the other hand, ELT pipelines involve loading raw data into the destination first and then processing it there. The choice between ETL and ELT depends on data volumes, processing needs, and specific use cases.
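The sketch below shows the two orderings side by side. It assumes an in-memory SQLite database stands in for the destination, and the table names and sample rows are made up for illustration. In the ETL path the cleanup happens in Python before loading. In the ELT path the raw rows are loaded first and the cleanup runs as SQL inside the destination.

```python
import sqlite3

raw_rows = [("  Alice ", "42"), ("BOB", "17")]  # hypothetical source records


def run_etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in the pipeline, then load only the cleaned rows."""
    cleaned = [(name.strip().lower(), int(amount)) for name, amount in raw_rows]
    conn.execute("CREATE TABLE orders_etl (name TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", cleaned)


def run_elt(conn: sqlite3.Connection) -> None:
    """ELT: load raw rows first, then transform with SQL inside the destination."""
    conn.execute("CREATE TABLE orders_raw (name TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", raw_rows)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT lower(trim(name)) AS name, CAST(amount AS INTEGER) AS amount "
        "FROM orders_raw"
    )


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_rows = conn.execute("SELECT name, amount FROM orders_etl ORDER BY name").fetchall()
elt_rows = conn.execute("SELECT name, amount FROM orders_elt ORDER BY name").fetchall()
```

Both paths end with the same cleaned table. The practical difference is that ELT also keeps the raw table in the destination, which can be re-transformed later without going back to the source.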

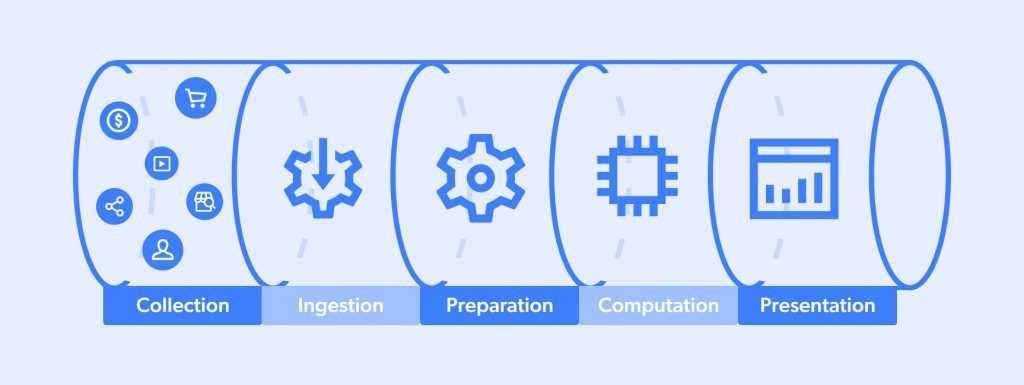

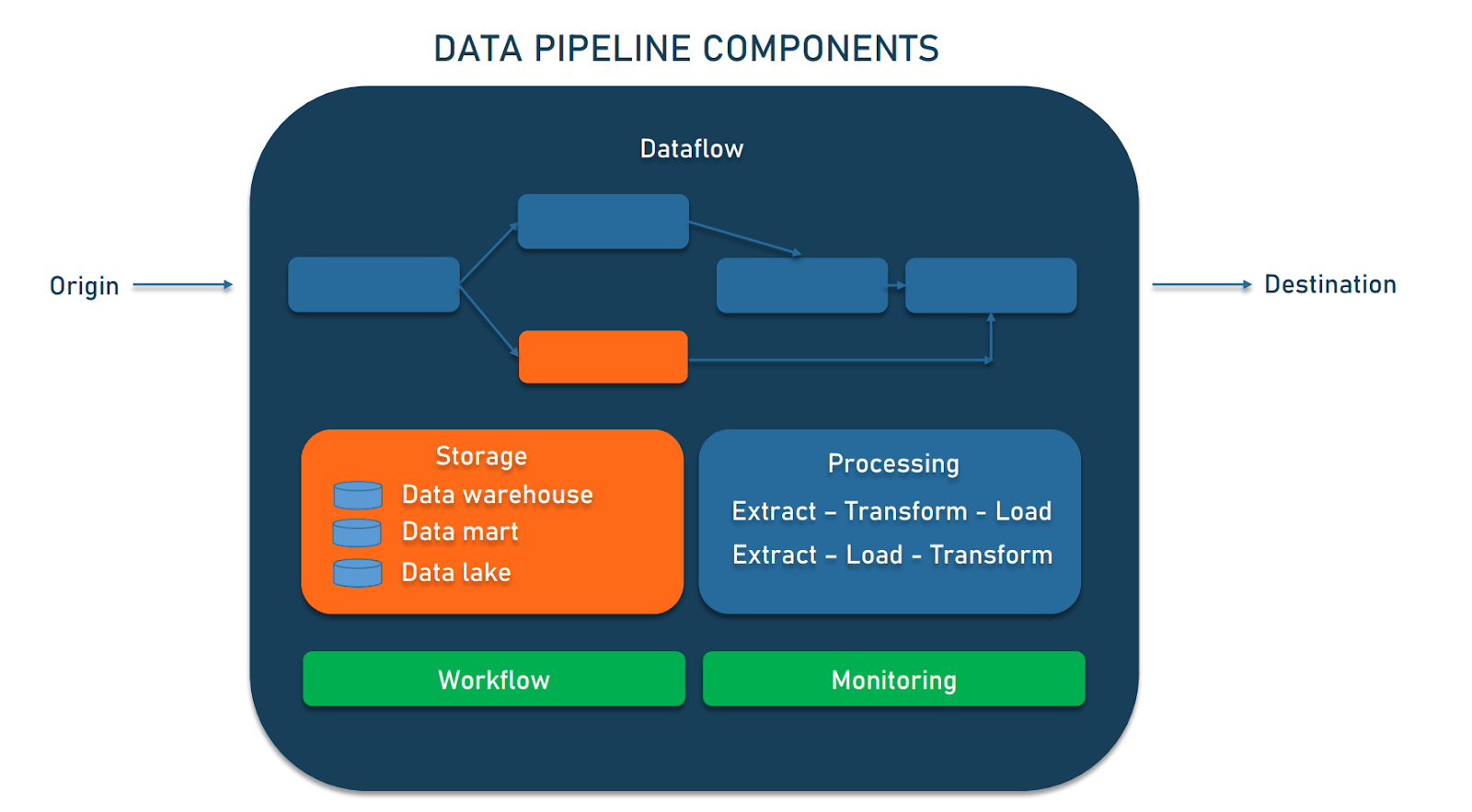

5 Basic Components Of An Automated Data Pipeline

Automatic data pipeline architectures consist of several components that each serve a different purpose. Now, let’s take a closer look at these components:

- The data source is where your data is coming from. It can be anything from databases and files to real-time data streams.

- Data processing is the crucial step where the data is cleaned, transformed, and enriched. This step ensures that the data is useful and in the format that you need for analysis or other applications. It's also where schema evolution is often handled automatically so that the pipeline adapts to changes in the structure of the incoming data without manual intervention.

- The data destination is the endpoint where your processed data is loaded. It could be in databases, data warehouses, or data lakes depending on your use case.

- Workflow management tools are the control system of the data pipeline. They orchestrate how data moves through the pipeline and how it is processed. These tools handle scheduling and error handling and ensure that the pipeline operates efficiently.

- Monitoring and logging services track the health and performance of the data pipeline and record logs for auditing, debugging, and performance tuning.
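As a rough illustration of how these five components fit together, here is a toy Python pipeline. Everything in it is a stand-in: a hard-coded list plays the data source, a plain list plays the destination, and a single function plays the workflow manager. Production pipelines would replace each piece with a real connector, warehouse, or orchestrator.

```python
import logging
from typing import Callable, Iterable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")  # monitoring and logging


def source() -> Iterable[dict]:
    """Data source: a hard-coded list stands in for a database or stream."""
    return [
        {"id": 1, "email": " A@Example.com "},
        {"id": 2, "email": "b@example.com"},
    ]


def process(record: dict) -> dict:
    """Data processing: clean and normalize one record."""
    return {**record, "email": record["email"].strip().lower()}


destination: list[dict] = []  # data destination: a list stands in for a warehouse


def run_pipeline(
    extract: Callable[[], Iterable[dict]],
    transform: Callable[[dict], dict],
    load: Callable[[dict], None],
) -> int:
    """Workflow management: run the stages in order and report what happened."""
    count = 0
    for record in extract():
        load(transform(record))
        count += 1
    log.info("pipeline finished, %d records loaded", count)
    return count


loaded = run_pipeline(source, process, destination.append)
```

Passing the stages in as functions keeps the orchestration logic independent of any particular source or destination, which makes it easier to swap components later.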

8-Step Guide To Create An Automated Data Pipeline

Plan carefully before setting up your data pipeline automation and choose your tools and technologies wisely. Be very clear about your data sources and destination and how the data needs to be transformed. With this in mind, let’s get to the step-by-step guide.

Step 1: Planning & Designing The Pipeline

Outline your goals and requirements for the data pipeline. Identify the data sources, the volume of data to be processed, the final format you need, and the frequency at which data needs to be processed.

Decide the type of data pipeline you need. Consider if batch or real-time processing is more suited, whether the data infrastructure should be on-premise or cloud-based, and if an ETL or ELT approach is preferable. This stage is important for ensuring the effectiveness of the pipeline.
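One way to keep these planning decisions explicit is to write them down as a small, checkable structure. The sketch below is only an illustration: the `PipelinePlan` class, its field names, and the sample values are all assumptions made for this example, not part of any tool.

```python
from dataclasses import dataclass
from enum import Enum


class Timing(Enum):
    BATCH = "batch"
    REAL_TIME = "real_time"


class Approach(Enum):
    ETL = "etl"
    ELT = "elt"


@dataclass(frozen=True)
class PipelinePlan:
    """Design decisions for one pipeline; all field names are illustrative."""
    sources: tuple[str, ...]
    destination: str
    output_format: str
    timing: Timing
    approach: Approach
    cloud_based: bool
    run_every_minutes: int  # only meaningful for batch pipelines

    def problems(self) -> list[str]:
        """Return planning gaps; an empty list means the plan is complete."""
        found = []
        if not self.sources:
            found.append("no data sources identified")
        if not self.destination:
            found.append("no destination chosen")
        if self.timing is Timing.BATCH and self.run_every_minutes <= 0:
            found.append("batch pipelines need a positive run frequency")
        return found


plan = PipelinePlan(
    sources=("orders_db", "web_events"),  # hypothetical source names
    destination="analytics_warehouse",
    output_format="parquet",
    timing=Timing.BATCH,
    approach=Approach.ELT,
    cloud_based=True,
    run_every_minutes=60,
)
```

Calling `plan.problems()` before any build work starts gives the team a quick check that no decision from this step was skipped.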

Step 2: Choosing The Right Tools & Technologies

Next, decide on the tools and technologies. You can choose between low-code/no-code and code-based solutions. Low-code/no-code solutions are easier to use but might lack customization. Code-based solutions offer more control but require programming knowledge. Weigh the pros and cons according to your team’s capabilities and needs.

When choosing, consider the following:

- Use case: Identify your data processing needs and complexity.

- Integrations: Check if it supports essential integrations like databases or cloud services.

- Resources & budget: Consider setup complexity, maintenance, and costs relative to features.

- Data volume & frequency: Ensure the tool handles your data scale and processing speed requirements.

- Team expertise: Check whether the data engineers are equipped to handle code-based or no-code solutions.

Step 3: Setting Up The Data Sources & Destinations

Now connect your data sources to the pipeline. This could include databases, cloud storage, or external APIs. Use connectors available with data pipeline tools and platforms to easily connect to various data sources and destinations. Also, define where the processed data will be stored or sent. Ensure that all connections are secure and comply with data protection regulations.
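If you are wiring connections by hand, a simple habit is to read connection details from the environment rather than hard-coding them, and to reject unencrypted endpoints early. The following sketch assumes a naming convention of `PIPELINE_<NAME>_URL` and a small allow-list of encrypted URL schemes. Both are illustrative choices, so adapt them to your own security policy.

```python
import os
from dataclasses import dataclass
from typing import Mapping
from urllib.parse import urlparse

ENCRYPTED_SCHEMES = {"https", "sftp"}  # assumption: adjust to your own policy


@dataclass(frozen=True)
class Endpoint:
    name: str
    url: str


def load_endpoint(name: str, env: Mapping[str, str] = os.environ) -> Endpoint:
    """Read PIPELINE_<NAME>_URL from the environment and reject unencrypted URLs."""
    key = f"PIPELINE_{name.upper()}_URL"
    url = env.get(key)
    if not url:
        raise KeyError(f"missing required setting {key}")
    if urlparse(url).scheme not in ENCRYPTED_SCHEMES:
        raise ValueError(f"{key} must use one of {sorted(ENCRYPTED_SCHEMES)}")
    return Endpoint(name, url)


# Example with an explicit mapping; in production you would rely on os.environ.
settings = {
    "PIPELINE_SOURCE_URL": "https://api.example.com/orders",
    "PIPELINE_DESTINATION_URL": "sftp://files.example.com/exports",
}
source = load_endpoint("source", settings)
destination = load_endpoint("destination", settings)
```

Failing at startup when a setting is missing or insecure is usually cheaper than discovering the problem halfway through a pipeline run.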

Step 4: Implementing Data Transformations

At this point, structure the data transformation processes. This means cleaning to remove errors, sorting in a logical and meaningful order, and converting data into the desired formats. Establish error handling procedures to address any issues that may arise during transformation. Errors could include missing data, incompatible formats, or unexpected outliers.
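Here is a small Python sketch of that idea, assuming order records arrive as dictionaries of strings. The field names are hypothetical. Rows that fail cleaning are set aside with the reason for the failure instead of stopping the whole run, and the rows that pass are converted to proper types and sorted by date.

```python
from datetime import date


def transform(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Clean, convert, and sort rows; return (good_rows, rejected_rows)."""
    good, rejected = [], []
    for row in rows:
        try:
            cleaned = {
                "customer": row["customer"].strip().title(),
                "amount": float(row["amount"]),
                "day": date.fromisoformat(row["day"]),
            }
        except (KeyError, ValueError, AttributeError) as exc:
            # Keep the bad row and the reason so it can be reviewed later.
            rejected.append({"row": row, "error": repr(exc)})
            continue
        good.append(cleaned)
    good.sort(key=lambda r: r["day"])
    return good, rejected


rows = [
    {"customer": " ada ", "amount": "19.99", "day": "2024-03-02"},
    {"customer": "grace", "amount": "n/a", "day": "2024-03-01"},  # incompatible format
    {"customer": "linus", "day": "2024-03-01"},                    # missing data
    {"customer": "alan", "amount": "5", "day": "2024-03-01"},
]
good, rejected = transform(rows)
```

Keeping the rejected rows in a separate collection means one malformed record does not block the rest of the batch, and the error messages give you a starting point for fixing the source.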

Step 5: Automating The Data Flow

Next, automate the flow of data through the pipeline. Automation reduces the risk of human error and keeps the processing steps consistent. It also lets you manage larger volumes of data efficiently because it handles repetitive tasks more quickly and accurately than manual processing.

If using a low-code/no-code solution, this involves configuring the tool to automate tasks. For code-based solutions, write scripts to automate the process.
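For the code-based route, a script usually needs two things: something to trigger it on a schedule (cron or an orchestrator, not shown here) and a way to recover from transient failures without a person stepping in. The sketch below covers the second part with a retry helper that uses exponential backoff. The `flaky_job` function is a made-up stand-in for a real pipeline run.

```python
import time
from typing import Callable


def run_with_retries(
    job: Callable[[], int],
    attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run job, waiting 1x, 2x, 4x... base_delay between failed attempts."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts):
        try:
            return job()
        except Exception:
            sleep(base_delay * 2 ** (attempt - 1))
    return job()  # final attempt: let any exception propagate to the caller


calls = {"n": 0}


def flaky_job() -> int:
    """Hypothetical pipeline run that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return 42  # rows processed


delays: list[float] = []
result = run_with_retries(flaky_job, sleep=delays.append)  # record delays instead of sleeping
```

The `sleep` parameter is injectable so the example and any tests run instantly. In production you would leave it at the default `time.sleep`.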

Step 6: Testing & Validation

Before deployment, run the pipeline with representative sample data that simulates real-world scenarios so you can identify any issues, errors, or bugs that could affect data processing.

During the testing phase, closely monitor the pipeline's performance and output. Pay attention to any discrepancies, unexpected results, or deviations from the expected outcomes. If you encounter any issues, document them and investigate their root causes to determine the appropriate fixes.
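A lightweight way to do this is to keep a small set of sample inputs next to the outputs you expect and compare them automatically. The sketch below assumes a single transformation called `normalize_order`, which is invented for this example. The checker returns a list of discrepancies that you can document and investigate.

```python
def normalize_order(order: dict) -> dict:
    """Transformation under test: tidy the SKU and convert cents to a decimal amount."""
    return {"sku": order["sku"].strip().upper(), "amount": order["cents"] / 100}


def check_pipeline(sample: list[dict], expected: list[dict]) -> list[str]:
    """Run the transformation on sample data and describe every discrepancy."""
    actual = [normalize_order(order) for order in sample]
    issues = []
    if len(actual) != len(expected):
        issues.append(f"expected {len(expected)} rows, got {len(actual)}")
    for index, (got, want) in enumerate(zip(actual, expected)):
        if got != want:
            issues.append(f"row {index}: expected {want}, got {got}")
    return issues


sample = [{"sku": " ab-1 ", "cents": 1250}, {"sku": "cd-2", "cents": 300}]
expected = [{"sku": "AB-1", "amount": 12.5}, {"sku": "CD-2", "amount": 3.0}]
issues = check_pipeline(sample, expected)  # an empty list means the sample run passed
```

The same checker can be run in a CI job so that every change to the transformation is validated against the sample data before it reaches production.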

Step 7: Documentation & Knowledge Sharing

When creating the documentation, provide clear and easy-to-understand explanations of how the data pipeline operates. Describe the different components, their roles, and how they interact with each other.

Include step-by-step instructions on how to set up, configure, and maintain the pipeline. This documentation should cover all relevant aspects, like:

- Data sources

- Transformation processes

- Error-handling procedures

- Automation configurations

Once the documentation is complete, share it with your team. This can be done through a centralized knowledge-sharing platform, such as a shared drive, a wiki, or a collaboration tool.

Step 8: Continuous Monitoring & Optimization

Regularly monitor the operation and output of your data pipeline to catch unexpected errors, data inconsistencies, processing delays, and bottlenecks as they arise. This helps you maintain the reliability and accuracy of the pipeline.

Regularly assess the effectiveness of the pipeline. Look for any areas where improvements can be made to enhance its performance. This can involve optimizing the data transformation processes, refining the automation configurations, or upgrading the hardware infrastructure if necessary.
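As a starting point, you can record a few numbers for every run and compare them against thresholds. The sketch below is a simplified illustration: the metric names and the default thresholds (60 seconds, 1% dropped rows) are assumptions that you would tune for your own workloads, and a real setup would send alerts to a monitoring system rather than return strings.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class RunMetrics:
    rows_in: int
    rows_out: int
    seconds: float

    @property
    def drop_rate(self) -> float:
        """Fraction of input rows that did not reach the destination."""
        return 0.0 if self.rows_in == 0 else 1 - self.rows_out / self.rows_in


def measure(job: Callable[[], int], rows_in: int) -> RunMetrics:
    """Time one pipeline run; job must return the number of rows it loaded."""
    start = time.perf_counter()
    rows_out = job()
    return RunMetrics(rows_in, rows_out, time.perf_counter() - start)


def check_health(
    metrics: RunMetrics, max_seconds: float = 60.0, max_drop_rate: float = 0.01
) -> list[str]:
    """Return one alert message per threshold that the run exceeded."""
    alerts = []
    if metrics.seconds > max_seconds:
        alerts.append(f"run took {metrics.seconds:.1f}s (limit {max_seconds:.1f}s)")
    if metrics.drop_rate > max_drop_rate:
        alerts.append(f"{metrics.drop_rate:.1%} of rows dropped (limit {max_drop_rate:.1%})")
    return alerts


healthy = check_health(RunMetrics(rows_in=1000, rows_out=1000, seconds=12.0))
degraded = check_health(RunMetrics(rows_in=1000, rows_out=900, seconds=75.0))
```

Tracking these values over time also shows gradual slowdowns, which are often the first sign that a transformation or the underlying infrastructure needs optimizing.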

Why Automate Data Pipelines?

Manually transferring data between systems is not only time-consuming, but also prone to errors. As your business expands, the volume of data increases, and along with it, the complexity of managing this data escalates. Manually handling it becomes inefficient and causes inconsistencies and inaccuracies.

This is where automating data pipelines comes in. Automation streamlines the process of transferring and transforming data from its source to the destination system. By automating data pipelines, you can focus on analyzing data and deriving insights, rather than struggling with data collection and preparation.

Let’s take a look at some of the benefits of data pipeline automation.

5 Proven Benefits Of Data Pipeline Automation

Here are five key advantages for your wider data system when you transition to automated data pipelines:

Real-Time Analytics

Automated data pipelines streamline the integration of data with cloud-based databases and reduce latency through high-volume data streaming. This gives you access to fresh, consistent data, which is vital for real-time analytics.

Removing manual data coding and formatting lets you analyze data as it arrives, so you can extract valuable insights and detect anomalies or potentially fraudulent activity more efficiently.

Building A Complete Customer 360-Degree View

As data collection accelerates through automation, data quality improves and you get deeper insights from the data you collect. This plays a significant role in nurturing a better data culture. Automated data pipelines also help extract data from fragmented customer touchpoints and consolidate it. This gives you a real-time, comprehensive view of each customer’s journey.

This 360-degree view is important for:

- Improving customer service

- Efficient stock management

- Matching real-time inventory

- Improving digital conversion rates

With access to this in-depth and real-time data, you gain valuable insights that empower you to make informed decisions.

Database Replication Using Change Data Capture

Automated data pipelines can detect changes in data and even database schemas as application and business requirements evolve. They are also efficient in replicating data between databases by using CDC technology.

CDC moves data with minimal latency and enables real-time data replication, especially from legacy databases to analytics environments or storage systems like a data warehouse or a data lake. This helps maintain data consistency and integrity while avoiding data loss and downtime during replication.
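To show the core idea behind CDC, the sketch below replays a list of change events against an in-memory replica. It is deliberately simplified. Real CDC tools typically read changes from the database's transaction log, and the event shape used here (`op`, `key`, `row`) is a hypothetical format chosen for this example.

```python
from typing import Any


def apply_changes(
    replica: dict[int, dict[str, Any]], events: list[dict[str, Any]]
) -> None:
    """Apply ordered insert/update/delete events to a replica keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge changed columns into the existing row, creating it if needed.
            replica[key] = {**replica.get(key, {}), **event["row"]}
        elif op == "delete":
            replica.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")


events = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "plan": "free"}},
    {"op": "insert", "key": 2, "row": {"name": "Bob", "plan": "free"}},
    {"op": "update", "key": 1, "row": {"plan": "pro"}},
    {"op": "delete", "key": 2},
]
replica: dict[int, dict[str, Any]] = {}
apply_changes(replica, events)
```

Because only the changes are shipped, the replica stays current without re-copying the full table, which is what makes CDC attractive for large or busy source databases.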

Increased Data Utilization, Mobility, & Seamless Database Migration

Automation allows you to handle vast amounts of data generated through digitization and transform it into a form better suited for analysis. It also moves data rapidly across systems and applications, ensuring faster data mobility.

If you decide to transition from on-premises storage to cloud storage, automated data pipelines can help you with the database migration process as well. Many automatic data pipeline tools integrate efficiently with leading legacy databases and cloud warehouses, which ensures that data migration is swift, error-free, and cost-effective.

Streamlined Data Integration Across Legacy & Cloud-Based Systems

Automated data pipelines facilitate the integration of old, legacy systems with modern, cloud-native technologies for higher scalability and flexibility in data management.

This way, you can plan your system migration more efficiently without compromising functionality. With this hybrid system, you also get the added advantage of easily managing and analyzing large datasets in the cloud while continuing to use your old applications.

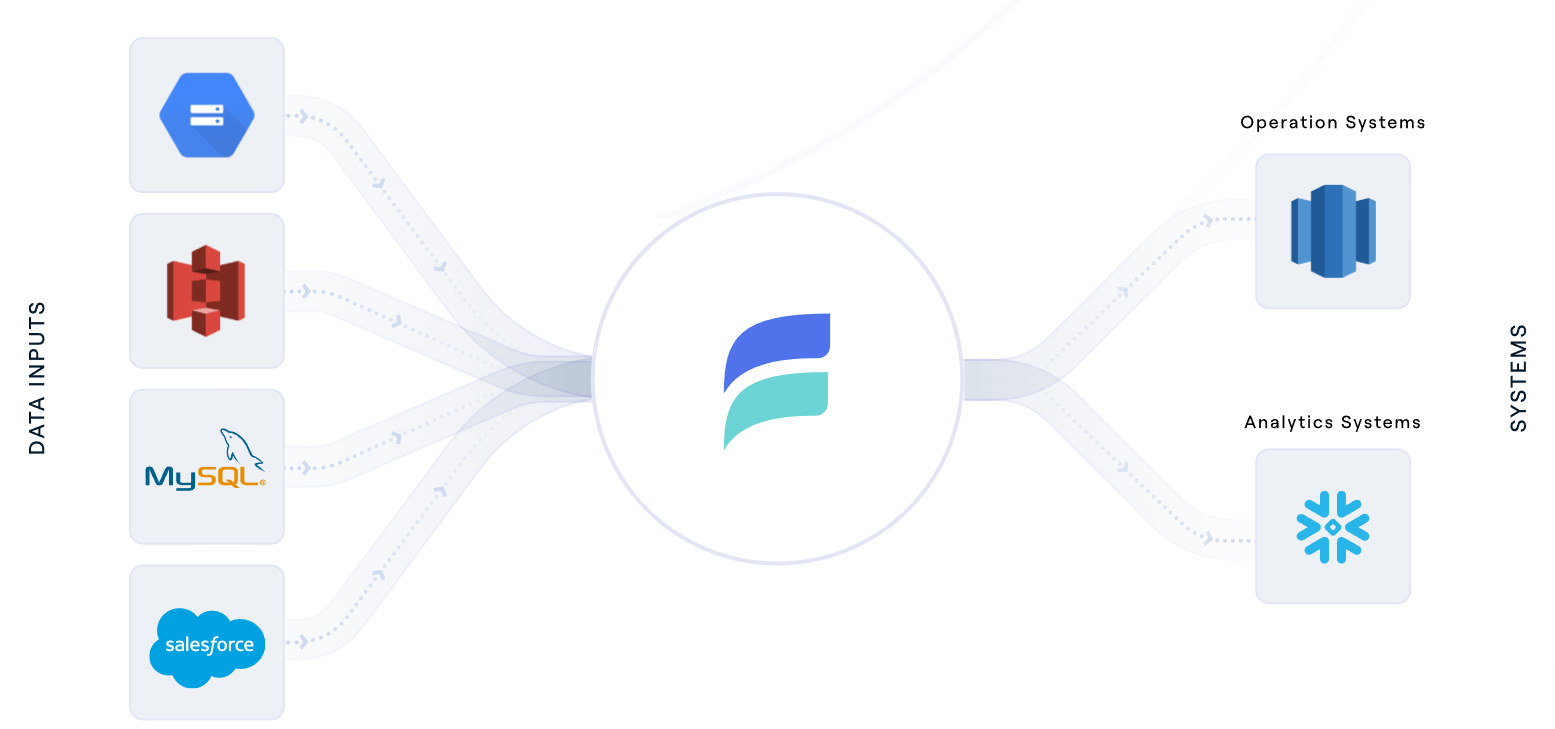

Automating Real-Time Data Pipelines Using Estuary Flow

Estuary Flow is our state-of-the-art platform tailored for data pipeline automation. Whether you are working with data from cloud services, databases, or SaaS applications, Flow has been built on a fault-tolerant architecture to handle it all. It is also highly accessible and user-friendly, which makes it suitable for teams with varying degrees of technical proficiency.

Flow has been built to scale and it can handle large data workloads of up to 7GB/s. In terms of accuracy and control, our platform gives you the ability to change schema controls and keeps data integrity by using exactly-once semantics.

Let's dive into the details of how Flow can assist you through the steps of data pipeline automation.

Supports Both Batch & Real-time Data

Estuary Flow can handle real-time as well as batch data in its streaming ETL pipelines. This simplifies the process of choosing the appropriate data pipeline for your needs.

Seamlessly Connects With Hundreds Of Data Sources & Destinations

Flow makes connecting data sources very easy. It comes with over 100 built-in connectors for various data sources like databases and SaaS applications. You just need to configure them and you’re all set.

Facilitates Real-Time Transformations

Flow allows you to create real-time transformations of your data. With its powerful streaming SQL and TypeScript transformations, you can clean, sort, and format your data the way you need it.

Offers Tools For Automating Data Flow

With features such as automated schema management and data deduplication, Flow simplifies the automation process of data pipelines. It also allows you to make updates via an intuitive user interface or a CLI. The CLI is especially good for embedding and white labeling pipelines seamlessly.

Provides Automatic Unit-testing

With Estuary Flow, validating and testing your data pipelines is straightforward and efficient. The platform is equipped with unit tests that ensure data accuracy so you can confidently develop and evolve your pipelines.

Includes Real-Time Pipeline Monitoring

Flow’s built-in monitoring features make it possible to keep an eye on how your data pipeline is doing at all times. This feature lets you see how real-time analytical workloads affect performance, allowing you to make changes quickly for the best results.

Conclusion

Automating your data pipelines is a transformative step toward efficiency and productivity in managing your data processes. When automation takes the wheel, data is processed the same way every time, which greatly reduces human error and inconsistencies in data processing.

Your automated system ensures that every piece of information is handled uniformly, laying the foundation for trustworthy insights that pave the way for confident decision-making.

However, the main issue when implementing data pipeline automation is the initial setup and maintenance. Invest time and effort in designing a well-structured pipeline architecture, establishing strong monitoring and error-handling mechanisms, and selecting the right tools and technologies.

With Estuary Flow, you can easily set up your automatic data pipelines and make sure they run smoothly. Flow simplifies the entire process and excels in handling real-time data.

If you are ready to set up automated data pipelines and want to make your data work for you, Estuary Flow is the perfect tool to get you started. Start exploring Flow for free by signing up here, or reach out to our team for more information and assistance.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
