“Data pipeline architecture” may sound like another deliberately vague tech buzzword, but don’t be fooled.

Well-thought-out architecture is what differentiates slow, disorganized, failure-prone data pipelines from efficient, scalable, reliable pipelines that deliver the exact results you want.

As the digital world expands to encompass businesses and governments alike — and the data volume that is produced each day grows exponentially — good architecture is more important than ever.

In this world, there’s more room for mistakes, massive failures, and even compliance issues than ever before. Thankfully, thoughtful planning of your data pipeline architecture can help you prevent these issues.

In this article, we’ll go over everything you need to know to get started designing data pipeline architecture, including:

- What exactly “data pipeline architecture” means.

- Common patterns at different scales, and their advantages and disadvantages.

- Important considerations that are often overlooked.

By the end, you’ll have a better sense of the complexities involved in data pipeline architecture, what’s required, and who is involved.

Let’s begin by discussing what data pipeline architecture is, and why we should care about it.

What is Data Pipeline Architecture?

A data pipeline is an automated system for transferring and transforming data. It is a series of steps that allows data to flow from one system to another in the correct format.

Data pipelines keep large sets of information in sync across an organization’s many systems. The data in each system changes constantly, so every copy of it must be refreshed continually to stay accurate. Data pipelines allow all your data-driven programs to function correctly and give accurate results.

But there isn’t just one way to build a data pipeline. That’s where data pipeline architecture comes in.

A data pipeline architecture is the design and implementation of the code and systems that copy, cleanse, transform, and route data from data source systems to destination systems.
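To make the four verbs in that definition concrete, here is a minimal sketch of a pipeline that copies, cleanses, transforms, and routes a handful of records. The record fields, the function names, and the use of a plain list as the destination are all assumptions made for illustration; a real pipeline would read from and write to actual systems.

```python
from __future__ import annotations

from typing import Iterable

# Hypothetical source records; in practice these would come from a database or API.
SOURCE_ROWS = [
    {"id": 1, "email": " Ada@Example.com ", "amount": "19.99"},
    {"id": 2, "email": None, "amount": "5.00"},
    {"id": 3, "email": "grace@example.com", "amount": "42.50"},
]


def extract(rows: Iterable[dict]) -> list[dict]:
    """Copy rows out of the source so the source itself is never mutated."""
    return [dict(row) for row in rows]


def cleanse(rows: list[dict]) -> list[dict]:
    """Drop rows that are missing a required field."""
    return [row for row in rows if row["email"]]


def transform(rows: list[dict]) -> list[dict]:
    """Normalize values into the format the destination expects."""
    return [
        {
            "id": row["id"],
            "email": row["email"].strip().lower(),
            "amount": float(row["amount"]),
        }
        for row in rows
    ]


def route(rows: list[dict], destination: list[dict]) -> None:
    """Deliver rows to the destination (a plain list stands in for a real system)."""
    destination.extend(rows)


def run_pipeline(source: Iterable[dict], destination: list[dict]) -> None:
    """Run the four steps in order: copy, cleanse, transform, route."""
    route(transform(cleanse(extract(source))), destination)


warehouse: list[dict] = []
run_pipeline(SOURCE_ROWS, warehouse)
```

Keeping each step as its own function is one possible design choice: it makes the order of the steps explicit, which matters for the ETL vs ELT discussion later in this article.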

Common Data Pipeline Architecture Patterns

Data pipeline architecture is applicable on multiple levels. You need to think not only about the architecture of each individual pipeline but also about how they all interconnect as part of the wider data infrastructure.

To get started, look at the architecture patterns that are common in the data industry. Different patterns apply to different aspects of your architecture. We’ll discuss three different aspects, each with two or three possible approaches:

- ETL vs ELT (the order of data processing steps)

- Batch vs Real-time (the timing of the data pipeline)

- Siloed vs Data Monolith vs Data Mesh (overall data infrastructure design)

None of these decisions are actually binary — there is a middle ground. They can also be mixed and matched in different ways. But they’re a great place to start the complex planning process.

ETL vs ELT

The first way we can look at data pipelines is in terms of the order of their steps.

Data pipelines begin with raw data from an outside source. They must load that data into a destination system, where it’ll need to be in a specific format: ready for data analytics, for example, or in a format that’s compatible with an important SaaS app.

This means the data doesn’t just need to be moved. It must also be transformed. So, do you move or transform it first?

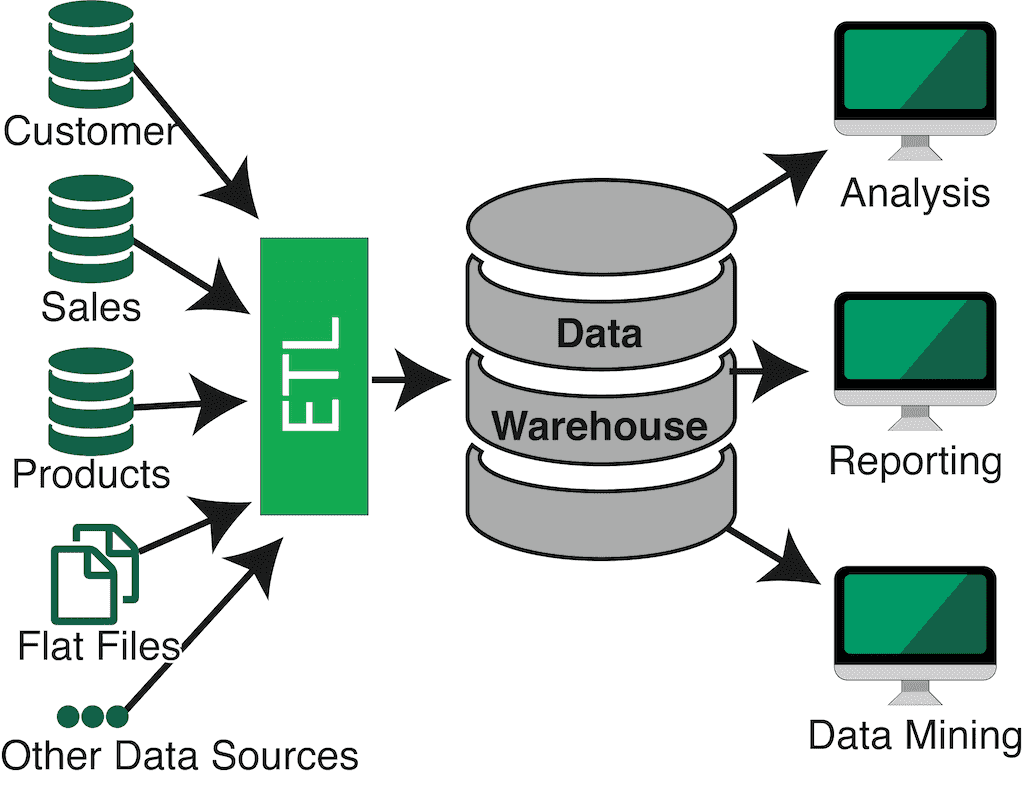

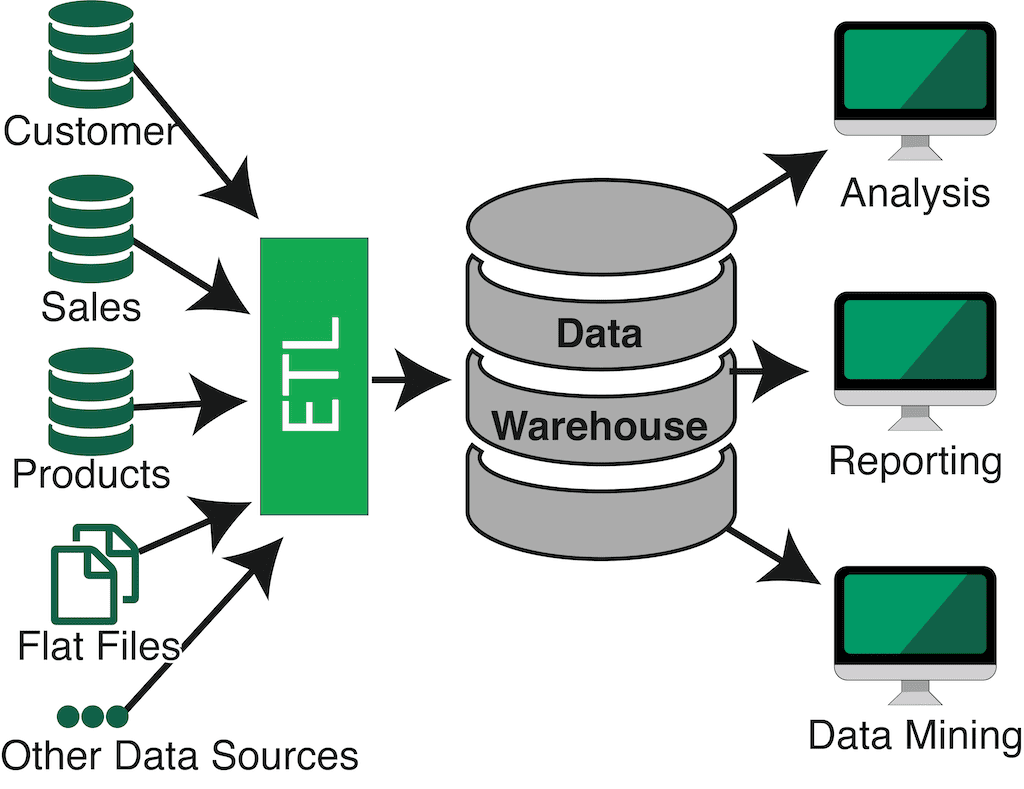

ETL and ELT are two models that describe this decision. ETL stands for “extract, transform, load.” ELT stands for “extract, load, transform.”

The answer might depend on the pipeline. For example, if you are loading the data to a SaaS app with a strict schema, you’ll need an ETL pipeline. But if you’re loading into a data store like a data warehouse or data lake, you’ll have more flexibility, so ELT might be acceptable.

In the real world, there’s not such a clear division between ETL and ELT. Most pipelines include different data transformations at various steps, both before and after loading. For example, you might perform only basic data validation and cleaning at first, so your options for data analysis remain flexible.
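The difference in ordering can be sketched in a few lines. In this hypothetical example, an in-memory SQLite database stands in for the destination system, and the table names and sample records are invented for illustration. The ETL function transforms the data in pipeline code before loading it, while the ELT function loads the raw values first and then transforms them with SQL inside the destination.

```python
import sqlite3

# Hypothetical raw records from a source system: everything arrives as text.
RAW_ORDERS = [("1", " 19.99 "), ("2", "5"), ("3", " 42.5")]


def etl(conn: sqlite3.Connection) -> None:
    """Extract, transform in the pipeline, then load the finished rows."""
    cleaned = [(int(order_id), float(amount.strip())) for order_id, amount in RAW_ORDERS]
    conn.execute("CREATE TABLE orders_etl (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", cleaned)


def elt(conn: sqlite3.Connection) -> None:
    """Extract, load the raw rows as-is, then transform inside the destination."""
    conn.execute("CREATE TABLE orders_raw (id TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", RAW_ORDERS)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT CAST(id AS INTEGER) AS id, CAST(TRIM(amount) AS REAL) AS amount "
        "FROM orders_raw"
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
etl_rows = conn.execute("SELECT id, amount FROM orders_etl ORDER BY id").fetchall()
elt_rows = conn.execute("SELECT id, amount FROM orders_elt ORDER BY id").fetchall()
```

Both paths end with the same cleaned rows. What differs is where the transformation work runs and whether the raw data remains available in the destination (here, in `orders_raw`) for other transformations later.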

To home in on the right approach, consider:

- How disorganized is the data coming in, and what transformations will it require?

- Are those required transformations always the same, or will they vary over time and by use case?

- Could performing those transformations after loading endanger the destination system, for example by bogging it down or causing errors?

The ELT and ETL acronyms are traditionally used to refer to batch data pipelines, but the principles of process ordering also apply to real-time pipelines. Let’s talk about batch and real-time next.

Batch Data Pipelines vs Real-Time Data Pipelines

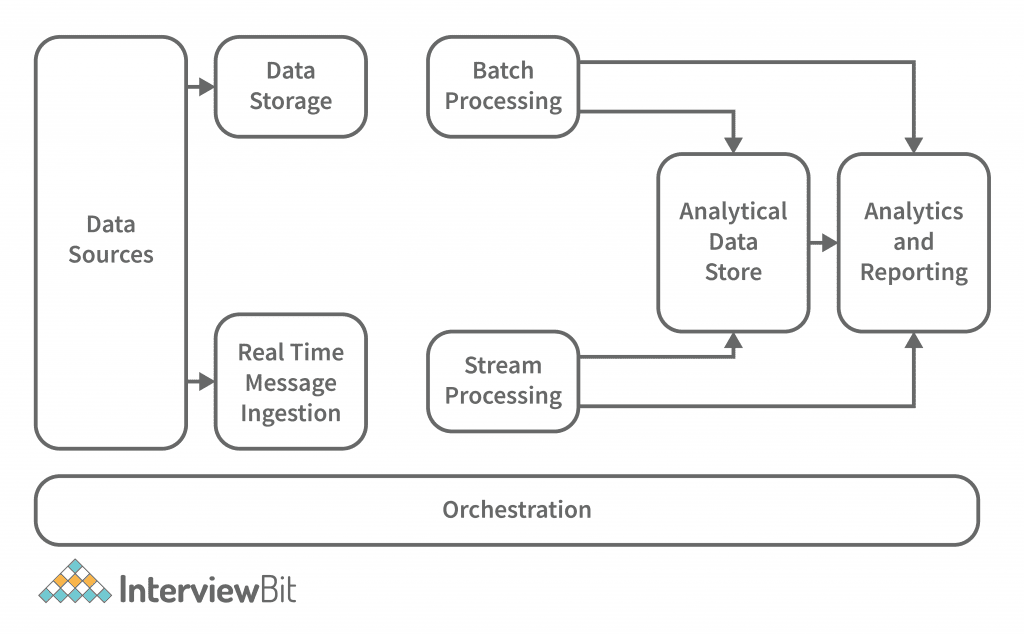

The next decision you must make has to do with the technical foundation of each pipeline: batch or real-time (also known as streaming).

Essentially, a pipeline can process data in two ways:

- In chunks, or batches, by checking in at a set interval.

- In real-time, by reacting as soon as any change occurs.

The most obvious difference between batch and real-time is the resulting latency.

Real-time data pipelines transport data virtually instantaneously, so they have extremely low latency. A batch pipeline only scans the source data at a set interval. For example, if that interval is hourly, there can be up to an hour of latency between when a new data event occurs and when the pipeline processes it.

In addition to the rate of data movement, choosing between a batch or real-time data pipeline architecture will have major effects on performance and cost. This is because they process data in fundamentally different ways.

Many batch pipelines have to periodically scan the full source dataset to look for changes. This can take a lot of computational power for large datasets. Real-time pipelines, by contrast, react to change events, and may not ever need to look at the entire source dataset.
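The contrast in work done can be sketched with two small functions. This is a simplified illustration, not how any particular framework operates: plain dictionaries stand in for the source and destination systems, and the shape of the change event is an assumption.

```python
from __future__ import annotations

# Hypothetical in-memory source table keyed by row id.
source = {1: "a", 2: "b", 3: "c"}


def batch_sync(source: dict, destination: dict) -> int:
    """Full-scan batch run: compare every source row against the destination.

    Returns the number of rows examined, which grows with the size of the source.
    """
    examined = 0
    for key, value in source.items():
        examined += 1
        if destination.get(key) != value:
            destination[key] = value
    return examined


def apply_change_event(event: dict, destination: dict) -> int:
    """Streaming-style update: apply one change event without scanning the source.

    Returns the number of rows examined, which is always one.
    """
    destination[event["key"]] = event["value"]
    return 1


# Assume both destinations already hold an initial snapshot of the source.
batch_destination = dict(source)
stream_destination = dict(source)

# A single row changes in the source.
source[2] = "B"

rows_examined_batch = batch_sync(source, batch_destination)
rows_examined_stream = apply_change_event({"key": 2, "value": "B"}, stream_destination)
```

Both destinations end up identical, but the batch run examined every row in the source to find one change, while the event-driven update touched only the row that changed.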

Large-scale streaming data pipelines can also be cumbersome and costly if they’re not architected well. While a batch pipeline can often be built from scratch with modest effort, that’s rarely the case for real-time pipelines. A common pattern is to start with an open-source event streaming platform like Apache Kafka and build the pipeline on top of it, but even this can be extremely challenging.

A big part of optimizing your pipeline for its desired rate and throughput is choosing between batch and streaming, and from there, homing in on the exact processing framework. This requires either a data pipeline vendor you trust or a knowledgeable team of data engineers. Often, the answer is both.

Many vendors offer products that make batch pipelines easy, including Airbyte and Fivetran. Estuary Flow is an alternative that makes real-time pipelines simple.

Using a product like this to simplify batch or real-time pipeline setup allows data engineers to spend more time on the next step: your wider data infrastructure design.

Siloed Data Domains vs Data Monolith vs Data Mesh

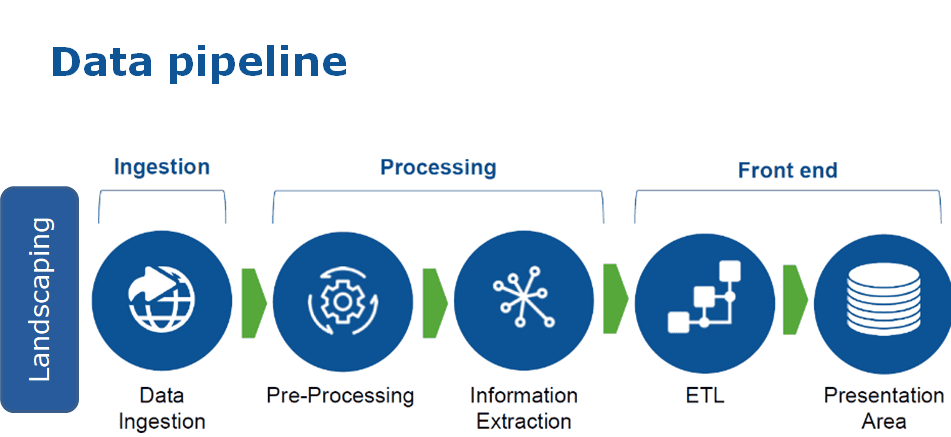

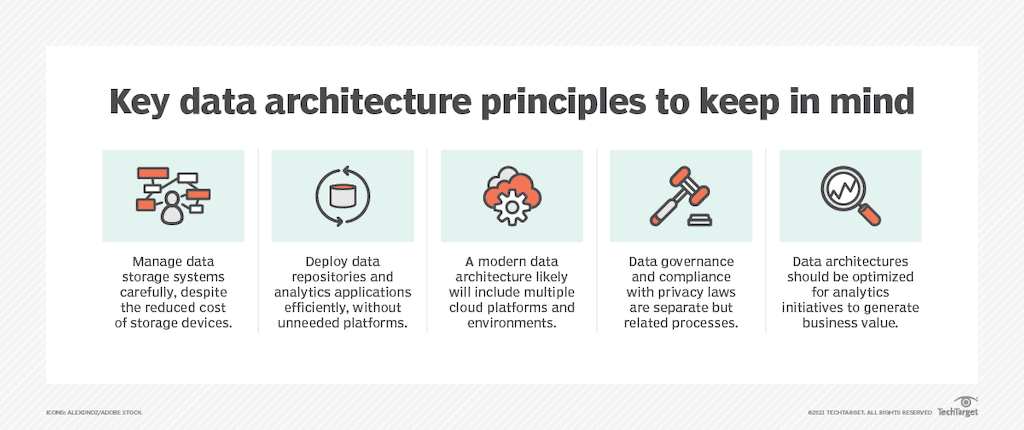

As you zoom out within an organization, data pipeline architecture becomes hard to distinguish from data architecture more broadly. Data architecture is a blueprint that describes how data is managed in an organization. This includes data ingestion, data transformation, storage, distribution, and consumption.

Data pipelines provide the data integration component of the data architecture: they are what holds everything together.

In any given organization, various business domains rely on data infrastructure to support vastly different workflows:

- Business leaders rely on business intelligence applications and dashboards.

- Marketers and salespeople need an accurate view of customers in their CRMs.

- Data scientists need access to company-wide data to perform analysis and machine learning.

Each needs its own pipelines for its specific use cases. This is where things get complicated.

The least sophisticated solution is for each business domain to have its entire data lifecycle in a silo. This means different pipelines, different storage, and different types of data.

With siloed data, things get out of control fast. Different departments have different views of reality because they’re working with different data. And the transfer of data products between business domains becomes next to impossible.

On the other extreme, you can have a data monolith. In this type of data architecture, different types of data from all different domains are combined in a huge, centralized data warehouse or data lake. All data pipelines either come from or lead to this central data store.

A monolith keeps all the data in sync, but people at different steps of the data life cycle — for example, data engineers and data users — become distant from each other and can have conflicting interests.

Somewhere in the middle is a data mesh. With a data mesh, the central monolith is eliminated, and pipeline sources and destinations are driven by business domains. But the infrastructure itself is centralized. This means that every domain must use the same technology, follow the same guidelines, and keep its data in sync.

Considerations For Data Pipeline Architecture

Planning your data pipeline architecture can take lots of thought and time. But once that’s done, it should be easy to implement, maintain, and extend as your business grows. Here are some considerations that will help you avoid common pitfalls.

1. Remember That Cloud Storage Isn’t Always Cheap

In the old days of on-premises servers, data storage resources were limited. This kept data architecture small. Today, in the age of big data, cloud storage is the standard. On paper, it’s extremely affordable. This can tempt us to treat it as if it’s unlimited, but that’s not the case.

Your entire data architecture should be designed with cost efficiency in mind — cheap and expensive systems alike. So even if you have data in a cheap cloud storage bucket, or your data pipeline provider is super affordable, you should optimize things there too.

Not only is this a good habit that will lead to savings in the long run, but it also becomes critical when you move data into other, more expensive systems. Cloud data warehouses like Snowflake and BigQuery have pricing models that can be surprising at times, for both computation and storage. You don’t want to land data into these systems without a plan.

To avoid being hit by higher-than-necessary bills:

- Read the fine print of your agreement with every platform you use and do research about other customers’ experiences. Use your data pipeline architecture to move data into each system in a format that will be most efficient.

- Store your data as efficiently as possible wherever you can, so that when you move it into more expensive systems, you won’t need to scramble to save yourself from a huge bill.
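As one small illustration of storing data efficiently, the sketch below compresses a batch of newline-delimited JSON records with gzip and compares the sizes. The records are invented and highly repetitive, so the ratio it prints will not match your own data; treat it as a way to measure, not a prediction.

```python
import gzip
import json

# Hypothetical event records; real pipelines would read these from a source system.
records = [{"user_id": i, "event": "page_view", "path": "/pricing"} for i in range(1000)]

# Newline-delimited JSON is a common interchange format for pipelines.
raw_bytes = "\n".join(json.dumps(r) for r in records).encode("utf-8")
compressed_bytes = gzip.compress(raw_bytes)

compression_ratio = len(raw_bytes) / len(compressed_bytes)
print(
    f"raw: {len(raw_bytes)} bytes, gzip: {len(compressed_bytes)} bytes, "
    f"ratio: {compression_ratio:.1f}x"
)
```

Compression is only one lever. Depending on the destination, choices such as a columnar file format or dropping unused fields before loading may matter more, so it is worth measuring against your own data and your platform’s pricing model.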

2. Spend Time On Security And Encryption

Security and encryption are top priorities for data infrastructure, including pipelines. Because pipelining involves data in motion, data should be protected while it travels between systems as well as where it is stored at the source and destination.

There are two aspects of encryption you must think about:

- Encryption at rest: This means that either the source or destination systems contain encrypted data. Your data pipeline doesn’t control this, but it will need to securely integrate with the encrypted endpoints.

- Encryption in motion (also called encryption in transit): The data is encrypted as it moves through the pipeline. TLS is a popular protocol used to achieve this.
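For encryption in motion, a minimal sketch using Python’s standard `ssl` module might look like the following. The helper name `open_encrypted_connection` and its `host` and `port` parameters are placeholders for your own endpoint, and the TLS 1.2 minimum is an illustrative policy choice rather than a universal requirement. Most managed tools handle this for you, so this is mainly useful for understanding what they do under the hood.

```python
import socket
import ssl

# create_default_context() enables certificate verification and hostname checking.
context = ssl.create_default_context()
# Refuse protocol versions older than TLS 1.2 (an assumed policy for this sketch).
context.minimum_version = ssl.TLSVersion.TLSv1_2


def open_encrypted_connection(host: str, port: int = 443) -> ssl.SSLSocket:
    """Open a TCP connection and wrap it in TLS before any data is sent.

    `host` and `port` are placeholders for your own source or destination endpoint.
    """
    raw_socket = socket.create_connection((host, port), timeout=10)
    try:
        return context.wrap_socket(raw_socket, server_hostname=host)
    except Exception:
        # Do not leak the underlying socket if the TLS handshake fails.
        raw_socket.close()
        raise
```

The important design choice is that verification stays on: a connection that is encrypted but does not verify the server’s certificate can still be intercepted.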

The different tools you use (think data warehouses, cloud storage, and data pipeline providers) will likely have encryption mechanisms built in. Check these to make sure that they meet the needs of your data and that they work well together. For complex data architectures, you’ll likely need to enlist a security expert.

3. Think About Compliance Early

If your data pipeline is part of a commercial product, you’ll need to meet regulatory compliance standards. These standards have to do with data security and encryption, but likely go beyond what you would think to implement on your own. You’ll need dedicated engineering and planning to meet the requirements.

Compliance law varies by region, so you should take into account not only where you are now, but how your company might expand. For instance, let’s say you’re in the United States. The US doesn’t have a single, overarching law that protects all consumer data. But some states do, so if you have customers in California, you may need to comply with the CCPA. And if your five-year roadmap includes expansion to European markets, you’ll want to build pipelines that comply with the GDPR starting today.

If you have any questions about how these bodies of law are interpreted, it’s best to consult with a lawyer.

4. Plan Performance And Scale With The Future In Mind

In data pipeline design, two of the most important considerations are performance and scale.

Performance requirements refer to how quickly the pipeline can process a certain amount of data. For example, if your destination system expects 100 rows per second, you should ensure that your pipeline can handle this.
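One way to check a requirement like this is to measure it. The sketch below times a per-row function over sample data and compares the observed rate with the 100 rows per second target from the example. The transformation and the sample rows are hypothetical stand-ins, and a measurement on one machine with synthetic data is only a rough indicator of production behavior.

```python
import time
from typing import Callable, Iterable

REQUIRED_ROWS_PER_SECOND = 100  # the example target from the text above


def measure_throughput(process_row: Callable[[dict], None], rows: Iterable[dict]) -> float:
    """Return the observed processing rate in rows per second."""
    started = time.perf_counter()
    count = 0
    for row in rows:
        process_row(row)
        count += 1
    elapsed = time.perf_counter() - started
    return count / elapsed if elapsed > 0 else float("inf")


def example_transform(row: dict) -> None:
    """Hypothetical stand-in for a real per-row transformation."""
    row["total"] = row["price"] * row["quantity"]


sample_rows = [{"price": 2.5, "quantity": i} for i in range(10_000)]
observed_rate = measure_throughput(example_transform, sample_rows)
meets_requirement = observed_rate >= REQUIRED_ROWS_PER_SECOND
```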

Scale refers to how much data can pass through the pipeline at any given time without causing any slowdowns or failures in service delivery.

Make sure your team is on the same page about what is realistic and what is possible within your budget. You may find that some goals need to be adjusted.

A well-designed architecture will distribute the load across multiple cloud-based servers or clusters. Distributed architecture allows you to intelligently use resources like memory, storage, and CPU. Perhaps more importantly, it protects against failure in the event that the system does get overloaded.

Finally, consider the fact that the volume and throughput requirements of your data pipeline, and your entire data infrastructure, will inevitably scale up over time. Make sure you are well-positioned to continue to scale in the future.

Some common approaches to handle scaling are:

- Using application containers (such as Docker) to distribute and manage workloads across a cluster of servers

- Adding more servers to become more fault-tolerant in case one goes down due to an outage or hardware failure

- Continuously rebalancing the load of incoming requests across multiple servers

- Using auto-scaling to increase the number of servers when there is a surge in traffic and decrease it when there is not

- Using automation tools like AWS CloudFormation, Terraform, and Ansible to automate the process of adding new servers to your cluster when needed
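The auto-scaling idea from the list above can be sketched as a simple rule: run enough servers to cover the current load, but never fewer than a floor (for fault tolerance) or more than a ceiling (for cost). The function name, the capacity figure, and the limits below are all illustrative assumptions; managed auto-scaling services apply their own, more sophisticated policies.

```python
import math


def desired_server_count(
    requests_per_second: float,
    capacity_per_server: float,
    min_servers: int = 2,
    max_servers: int = 20,
) -> int:
    """Return how many servers are needed for the current load.

    Keeps at least `min_servers` running for fault tolerance and never exceeds
    `max_servers`, which caps cost. All thresholds are illustrative assumptions.
    """
    needed = math.ceil(requests_per_second / capacity_per_server)
    return max(min_servers, min(max_servers, needed))


quiet_period = desired_server_count(requests_per_second=150, capacity_per_server=500)
traffic_surge = desired_server_count(requests_per_second=4200, capacity_per_server=500)
extreme_surge = desired_server_count(requests_per_second=50_000, capacity_per_server=500)
```

With these numbers, the quiet period stays at the two-server floor, the surge scales up to nine servers, and the extreme surge is capped at twenty.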

Conclusion

Your organization likely has a large and ever-growing amount of data. High-quality data pipelines help you get value from that data in the form of better and faster decisions.

There’s no “right” way to architect data pipelines — it completely depends on your use case and business needs. You may require batch or real-time pipelines; they may be part of a data mesh or a monolith. The important thing is that the architecture is well-thought-out.

Planning and implementing data pipeline architecture across a company is a multi-step process that involves data engineers, business stakeholders from other domains, and sometimes even cybersecurity and legal experts. But putting in the effort up front to do this well will ensure that your data pipelines continue to meet your team’s needs with no nasty surprises.

Check out the rest of the blog to learn more about real-time data and data pipelines.

If your data pipeline architecture vision involves real-time pipelines, Estuary Flow can make this planning process quite a bit easier. Try Flow for free.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
