As data keeps growing, you might find that you’re struggling to leverage all the collected data for decision-making. In many organizations today, data is collected faster than it is consumed. As a result, data silos often form across the organization. To eliminate them, you need a robust data architecture that democratizes access to data.

This is where data mesh comes in. Many organizations are adopting data mesh principles to improve operational efficiency. Although it is a relatively new concept, data mesh is being embraced by a growing number of organizations to improve data collection, management, and consumption across departments.

So, let’s understand what a data mesh is.

What is Data Mesh?

Data mesh is a modern logical data architecture introduced by Zhamak Dehghani. It decentralizes data ownership, even when the data lives in shared storage systems such as a data warehouse or a data lake. In other words, data may still be stored in one location, but it is organized and owned by business line or domain, such as marketing, sales, or operations.

Data mesh is a domain-oriented architecture that aims to bring data producers and consumers closer together.

In data mesh, each domain or business line is responsible for collecting, processing, organizing, and managing its own data. This gives the respective domain owner clear ownership of each dataset. When domain owners take full responsibility for their data, they can set and follow governance standards specific to it, which in turn tends to produce higher-quality data.

Data mesh uses a federated governance model. It addresses several limitations of centralized systems, such as unclear accountability and a lack of self-service data access. Data mesh separates data by domain to give each domain autonomy, while linking the domains together through global standards.

Data mesh treats data as a product: a usable, feasible, and deliverable dataset. As a result, product-related concepts apply to data in a data mesh, including data security, ease of access, data management, and collaboration between cross-functional teams.

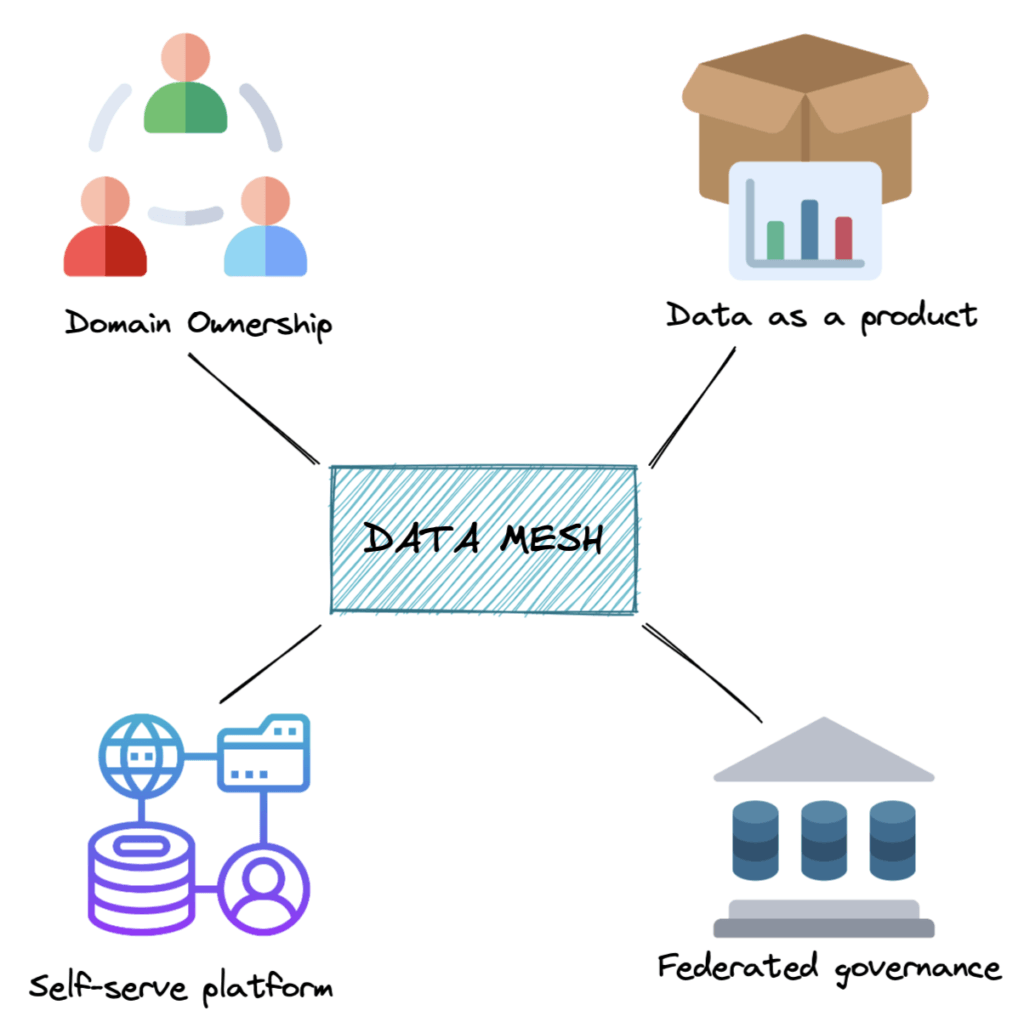

Image Credit: Dremio

When and Why to Use Data Mesh?

Many organizations follow centralized data storage practices using a data warehouse, data lake, cloud data platform, or lakehouse. These models collect data from various sources and store it in one place. Managing this centralized data typically requires a single data team that is responsible for handling all of it.

Unlike centralized data storage, data mesh organizes data management around domains to ease the operational flow. Because data is organized by department, it is easier to find, use, and manage.

Why use the Data Mesh model:

- Quick Access to Data: Data is readily available because no single central team becomes a bottleneck by having to prepare data for every business operation.

- Designed for all Types of Users: This model is designed for more than just technical roles; people from non-technical backgrounds can also use it.

- Better Clarity: Because data is managed by the domain owners who understand it best, it tends to be more reliable than data prepared by a central team.

- Compliance-Ready & Superior Governance: Because domain experts manage the sensitivity of their own data, compliance and governance are easier to enforce.

What are the Data Mesh Principles?

There are four principles of data mesh that you should know before implementing it in your organization.

Domain Ownership

Domain ownership is the first data mesh principle and its fundamental pillar: each domain should fully own its data. Because data mesh is a decentralized architecture, data is organized according to its domains. This domain orientation can save a considerable amount of time when discovering, sharing, or sorting datasets. The domain owner manages the data end to end, enabling agility and scalability.

When the respective domain owner processes data, it is clear who is accountable for that data. This helps ensure the data is contextually accurate. Domain owners and teams should be fully responsible for both their output and the data used to produce insights. In a centralized repository, by contrast, it is often unclear who owns the data and who is responsible for producing it.

Domain ownership also means data should be cleaned and enriched in a way that others in the organization can understand. This ensures data is generated not only for operational purposes but also for analytical ones. As a result, data mesh helps you make informed decisions.
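To make the accountability idea concrete, here is a minimal sketch, assuming Python 3.9+, of a registry that maps every dataset to exactly one owning domain and can answer "who is accountable for this data?". The `Domain` and `OwnershipRegistry` classes, the domain names, and the team names are all hypothetical illustrations, not part of any data mesh standard or product API.

```python
from dataclasses import dataclass, field


@dataclass
class Domain:
    """A business domain that owns its data end to end (hypothetical model)."""
    name: str
    owner: str  # the accountable person or team
    datasets: set[str] = field(default_factory=set)


class OwnershipRegistry:
    """Hypothetical registry mapping each dataset to one accountable domain."""

    def __init__(self) -> None:
        self._domains: dict[str, Domain] = {}
        self._owning_domain: dict[str, str] = {}  # dataset name -> domain name

    def add_domain(self, domain: Domain) -> None:
        self._domains[domain.name] = domain

    def assign(self, dataset: str, domain_name: str) -> None:
        # Each dataset gets exactly one owning domain; silently reassigning it
        # would blur accountability, so a second assignment is rejected.
        if domain_name not in self._domains:
            raise KeyError(f"unknown domain {domain_name!r}")
        if dataset in self._owning_domain:
            current = self._owning_domain[dataset]
            raise ValueError(f"{dataset!r} is already owned by {current!r}")
        self._owning_domain[dataset] = domain_name
        self._domains[domain_name].datasets.add(dataset)

    def accountable_for(self, dataset: str) -> str:
        domain = self._domains[self._owning_domain[dataset]]
        return f"{domain.name} ({domain.owner})"


registry = OwnershipRegistry()
registry.add_domain(Domain("sales", owner="sales-data-team"))
registry.add_domain(Domain("marketing", owner="marketing-analytics"))
registry.assign("orders", "sales")
registry.assign("campaign_clicks", "marketing")
print(registry.accountable_for("orders"))  # sales (sales-data-team)
```

Rejecting a second owner is a deliberate design choice in this sketch: it reflects the principle that, unlike in a centralized repository, there should never be doubt about who is responsible for a dataset.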

Data as a Product

Why should data be recognized as a product? Is it really a product?

The second data mesh principle stresses the importance of treating data as a product. Just like a product, your data should provide a good experience to its users, who in this case are other data consumers in the organization. In other words, the analytical data provided by a domain team should be treated as a product, and the people who consume it should be treated as customers.

One goal of this principle is to make data reusable by all other domain teams. To achieve this, data should be stored with proper metadata descriptions. Well-described data lets other departments find and use it easily.

The domain should ensure that each data product is:

- Discoverable

- Self-describing

- Addressable

- Trustworthy

- Understandable

- Interoperable

- Secure

- Valuable
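As an illustration of how some of these characteristics can be expressed in metadata, here is a minimal sketch, assuming Python 3.9+, of a data product descriptor and a catalog. `DataProduct`, `Catalog`, the `dataproduct://` address scheme, and the example dataset are hypothetical; real catalogs and data product specifications differ, and qualities such as "secure" and "valuable" are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class DataProduct:
    """Hypothetical descriptor that packages a dataset as a product."""
    domain: str                 # owning domain
    name: str
    description: str            # self-describing: what the data means
    schema: dict[str, str]      # understandable/interoperable: column -> type
    owner: str                  # trustworthy: a named, accountable contact
    tags: tuple[str, ...] = ()  # discoverable: search keywords

    @property
    def address(self) -> str:
        # Addressable: a stable, unique identifier. The "dataproduct://" scheme
        # is an illustrative convention, not a standard.
        return f"dataproduct://{self.domain}/{self.name}"


class Catalog:
    """Hypothetical catalog where domains publish products for others to find."""

    def __init__(self) -> None:
        self._products: dict[str, DataProduct] = {}

    def publish(self, product: DataProduct) -> str:
        # Refuse products that cannot describe themselves to consumers.
        if not product.description or not product.schema:
            raise ValueError("a data product needs a description and a schema")
        self._products[product.address] = product
        return product.address

    def search(self, keyword: str) -> list[str]:
        # Case-insensitive match on name, description, or tags.
        kw = keyword.lower()
        return sorted(
            p.address
            for p in self._products.values()
            if kw in p.name.lower()
            or kw in p.description.lower()
            or kw in (t.lower() for t in p.tags)
        )


catalog = Catalog()
catalog.publish(DataProduct(
    domain="sales",
    name="daily_orders",
    description="One row per order, refreshed daily",
    schema={"order_id": "string", "amount": "decimal", "ordered_at": "timestamp"},
    owner="sales-data-team",
    tags=("orders", "revenue"),
))
print(catalog.search("revenue"))  # ['dataproduct://sales/daily_orders']
```

Because every product must carry a description, a schema, and an owner before it can be published, consumers in other domains can discover and evaluate it without contacting the producing team first.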

Self-Serve Data Infrastructure as a Platform

Self-serve data infrastructure as a platform is the technical pillar of data mesh. It provides a robust infrastructure that gives different domains autonomy in a self-serving manner. This means the platform should be domain-agnostic, designed with a generalist approach, and able to support autonomous teams.

Domain teams should not have to rely on a central data team; they should be able to build, deploy, execute, monitor, and access their data products on their own.

Managing the data life cycle requires infrastructure that supports domain owners. With data mesh, however, you need to ensure that domain owners do not have to worry about the underlying infrastructure.

The idea behind data mesh is a self-serve approach that democratizes data for both technical and non-technical users. Building a common, self-serve infrastructure platform reduces the technical expertise that domain owners need.

If self-serve data infrastructure is not in place, it would be difficult for domain experts to manage data, which would lead them to rely on centralized sources again.

The third principle of data mesh is designed to support autonomous teams and all users, not just technologists, so that cross-functional teams can exchange information.

Ultimately, the goal is that data should be easily accessible, understandable, and consumable by all users.
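The division of labor described above can be sketched as follows, assuming Python 3.9+. A domain team declares only business-level intent, and a domain-agnostic platform derives the infrastructure details. `ProductSpec`, `Deployment`, `SelfServePlatform`, the bucket name, and the reader groups are hypothetical, and the sketch only computes a plan; it does not talk to any real storage system or scheduler.

```python
from dataclasses import dataclass


@dataclass
class ProductSpec:
    """What a domain team declares: business-level intent only (hypothetical)."""
    domain: str
    name: str
    refresh: str = "daily"


@dataclass
class Deployment:
    """What the platform derives on the team's behalf (hypothetical)."""
    storage_path: str
    cron_schedule: str
    readers: list[str]
    monitored: bool


class SelfServePlatform:
    """Domain-agnostic: the same code path serves every domain."""

    # Standard five-field cron expressions for the supported refresh options.
    SCHEDULES = {"hourly": "0 * * * *", "daily": "0 2 * * *"}

    def __init__(self, bucket: str) -> None:
        self.bucket = bucket
        self.deployments: dict[str, Deployment] = {}

    def deploy(self, spec: ProductSpec) -> Deployment:
        if spec.refresh not in self.SCHEDULES:
            raise ValueError(f"unsupported refresh {spec.refresh!r}")
        # Storage layout, scheduling, access, and monitoring are decided by the
        # platform, so domain teams never configure them by hand.
        deployment = Deployment(
            storage_path=f"{self.bucket}/{spec.domain}/{spec.name}/",
            cron_schedule=self.SCHEDULES[spec.refresh],
            readers=[f"{spec.domain}-team", "analytics-readers"],
            monitored=True,
        )
        self.deployments[f"{spec.domain}.{spec.name}"] = deployment
        return deployment


infra = SelfServePlatform(bucket="s3://company-data")
d = infra.deploy(ProductSpec(domain="marketing", name="campaign_clicks"))
print(d.storage_path)   # s3://company-data/marketing/campaign_clicks/
print(d.cron_schedule)  # 0 2 * * *
```

Note that `SelfServePlatform` contains no marketing- or sales-specific logic: keeping the platform domain-agnostic is what lets every team use it without a central data team in the loop.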

Federated Computational Governance

In this data mesh principle, data governance standards are defined centrally, but local domain teams have the freedom to apply these standards in their preferred environment. In simple terms, each domain can maintain its own set of rules, but it must also follow the global rules.

When data is decentralized, collaboration can become inefficient if each domain adopts widely different standards. Data mesh handles this by having a central team devise the governance framework, so that all data is managed under a shared set of global rules.

The fourth principle enforces strict compliance and governance policies to secure domain-specific data, ensuring the business follows governance regulations when maintaining data. The word "computational" reflects the idea that these global policies are automated and enforced through the platform rather than checked manually.
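Here is a minimal "policies as code" sketch of federated governance, assuming Python 3.9+. A central group defines global policies that run for every domain, and each domain can append its own local rules without being able to drop the global ones. The policy functions, the metadata keys (`owner`, `columns`, `pii`, `masked`, `min_amount`), and `DomainGovernance` are hypothetical examples, not a specific governance tool's API.

```python
from dataclasses import dataclass, field
from typing import Callable

# A policy inspects a data product's metadata and returns any violations.
Policy = Callable[[dict], list[str]]


def requires_owner(meta: dict) -> list[str]:
    return [] if meta.get("owner") else ["missing owner"]


def pii_must_be_masked(meta: dict) -> list[str]:
    return [
        f"unmasked PII column {col!r}"
        for col, info in meta.get("columns", {}).items()
        if info.get("pii") and not info.get("masked")
    ]


# Defined once by the central governance group and applied to every domain.
GLOBAL_POLICIES: list[Policy] = [requires_owner, pii_must_be_masked]


@dataclass
class DomainGovernance:
    domain: str
    local_policies: list[Policy] = field(default_factory=list)

    def check(self, meta: dict) -> list[str]:
        # Global policies always run first; local policies can add
        # domain-specific rules but cannot remove the global ones.
        violations: list[str] = []
        for policy in GLOBAL_POLICIES + self.local_policies:
            violations.extend(policy(meta))
        return violations


def amounts_non_negative(meta: dict) -> list[str]:
    # Example of a local rule chosen only by the sales domain.
    return [] if meta.get("min_amount", 0) >= 0 else ["negative order amounts"]


sales = DomainGovernance("sales", [amounts_non_negative])
meta = {
    "owner": "sales-data-team",
    "columns": {"email": {"pii": True, "masked": False}, "amount": {}},
    "min_amount": -5,
}
print(sales.check(meta))
# ["unmasked PII column 'email'", 'negative order amounts']
```

Running such checks automatically, for example whenever a domain publishes or updates a data product, is one way to make governance "computational" rather than a manual review step.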

Conclusion

Data mesh is an innovative approach to organizing and maintaining data that aims to overcome the bottlenecks of centralized storage systems. Its four principles show how decentralized, domain-oriented data ownership, supported by modern data infrastructure, can drive business growth. This approach can help businesses reduce the complexity around data and gain a competitive advantage.

Estuary Flow is a platform for flexible, accessible, real-time ETL. As a self-serve platform with strong data QA, Flow can help tie together your data mesh strategy. Try Flow today to get started.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
