Data Integration vs ETL: Comprehensive Comparison Guide

Uncover the key differences between data integration and ETL and learn how these two approaches can streamline your data management processes.

In today's data-driven world, organizations have more data at their fingertips than ever before, and that's both a blessing and a bit of a curse. While having tons of data can provide better insights, it also means dealing with the headache of integrating data from different systems. To deal with this complexity effectively, it is crucial to understand the difference between two key approaches: data integration and ETL.

With an estimated 80-90% of all digital data being unstructured, the importance of these methods becomes even more obvious. For businesses to fully use this data, they need more than basic strategies to integrate and consolidate their various enterprise-level data sources. But which route should they take? Should they go with the ETL approach or is data integration the smarter move?

Our guide will answer all these questions. We'll get into the major differences between data integration and ETL and help you identify when to use each methodology based on different factors.

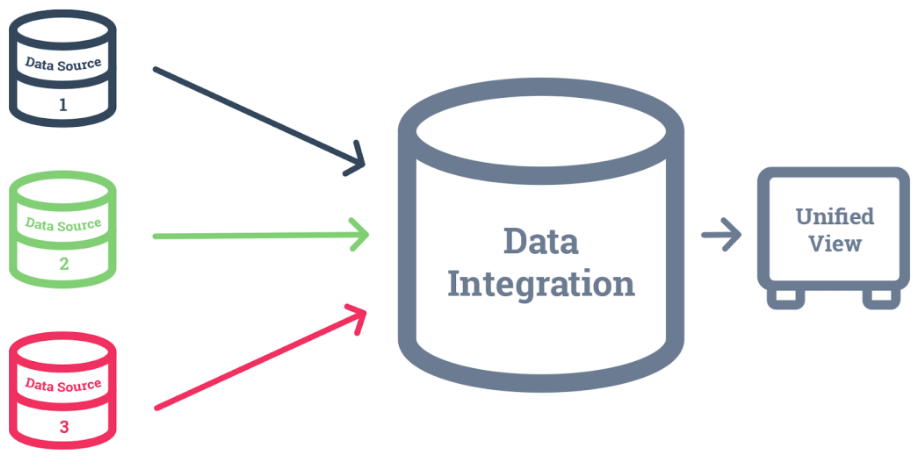

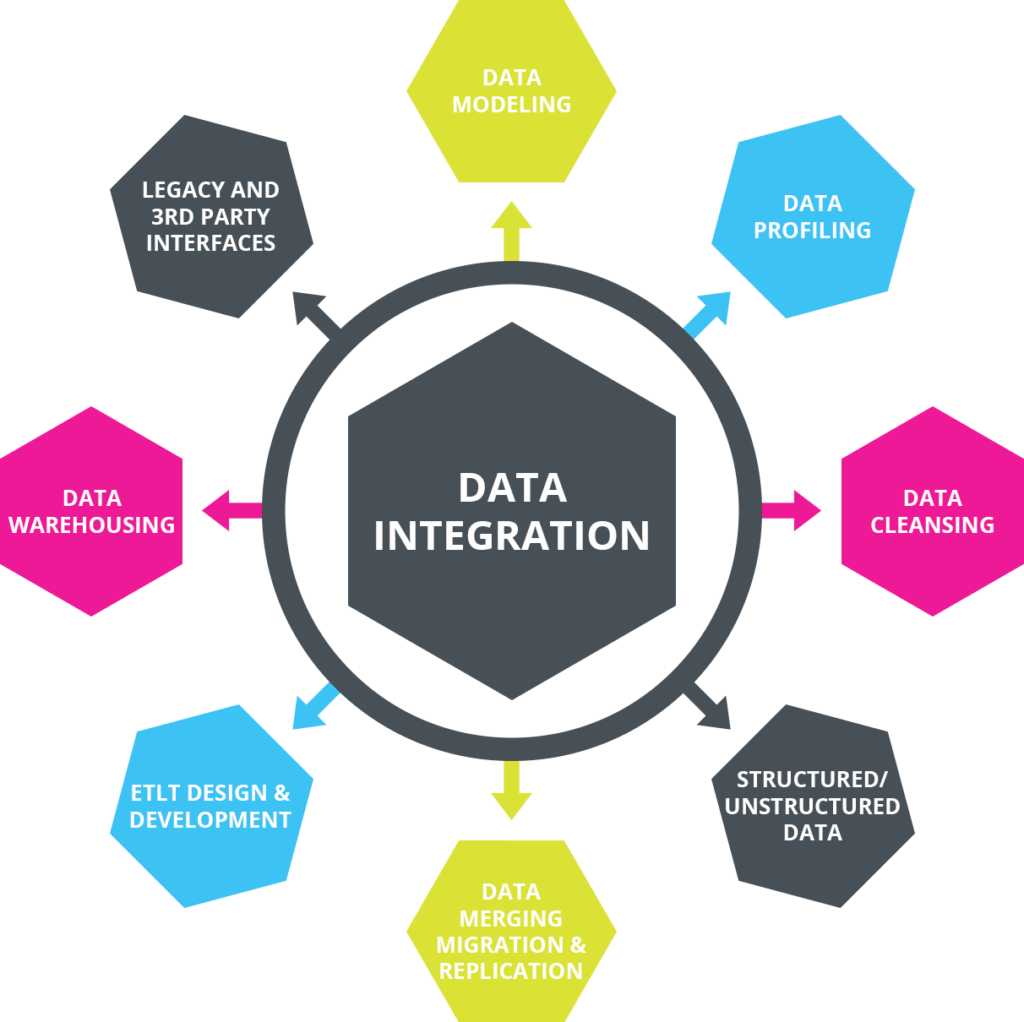

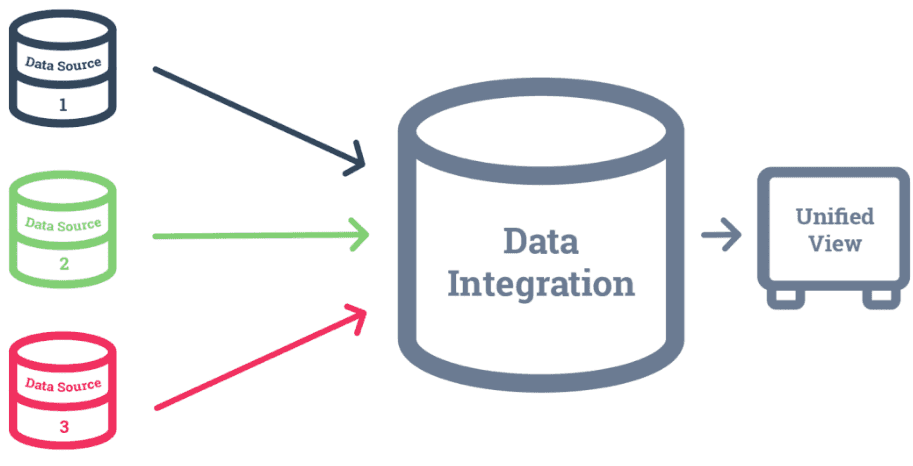

What Is Data Integration?

Data integration combines data from different sources into a unified view. It gives you consolidated data even when that data is stored in multiple locations and formats. The major goal of data integration is to create a ‘single version of the truth’ across the enterprise, which is where defining master reference data becomes important.

Companies today frequently interact with many systems, databases, and applications. Each of these systems houses valuable data that offers limited insights when isolated. However, when it is integrated, it gives a clearer picture of the organization’s operations, strengths, and areas of improvement.

Centralizing data through integration helps you to streamline processes and make better decisions. But if you want to keep this advantage, regular maintenance and updates are needed to keep the integrated system accurate and in sync with the business’s changing requirements.

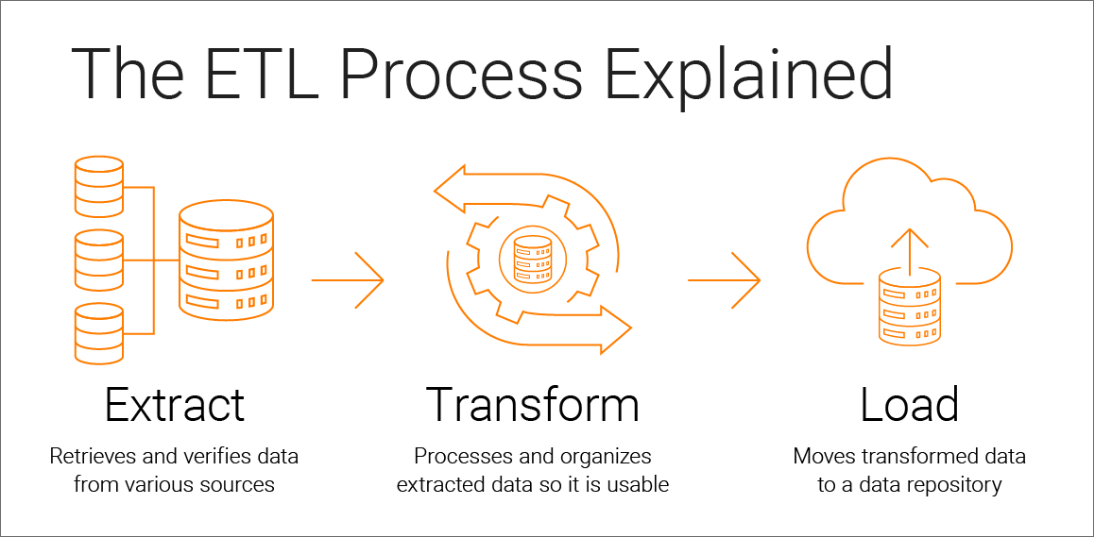

What Is Extract, Transform, & Load (ETL)?

ETL is a key data integration process commonly used to consolidate and prepare data for analytics and reporting needs. ETL involves moving data from various sources, processing it, and loading it into a destination data store like a data warehouse or data mart. For a better understanding of ETL processes, let's discuss them in detail.

Extract

The extract step retrieves data from various sources including relational databases, files, web APIs, SaaS applications, IoT devices, and social media. Data can be extracted using SQL, APIs, connectors, scripts, etc.

Common extraction patterns are:

- Full extraction of entire datasets: In this pattern, the complete dataset is pulled from the source system on every run. This is suitable when data volumes are manageable and changes are infrequent, but it can be resource-intensive for large datasets.

- Partitioned extraction to split data for parallelism: Data is divided into partitions or segments that can be extracted concurrently. This approach increases extraction speed and uses the capabilities of parallel processing systems to effectively reduce the overall extraction time.

- Incremental extraction of only changed data: This pattern involves identifying and extracting only the data added or modified since the last extraction. It optimizes resource usage and reduces processing time, making it ideal for large datasets with frequent updates.

The extracted data is then moved to a staging area for further processing.
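
To make the incremental pattern concrete, here is a minimal Python sketch that uses a "high-water mark" timestamp. The `customers` table, its `updated_at` column, and the `extract_incremental` helper are all hypothetical, chosen only for illustration, and an in-memory SQLite database stands in for a real source system.

```python
import sqlite3

def extract_incremental(conn, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark only when something was actually extracted.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source table, populated for demonstration only.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Ada", "2024-01-01T00:00:00"),
        (2, "Grace", "2024-01-02T00:00:00"),
        (3, "Linus", "2024-01-03T00:00:00"),
    ],
)

# First run: an empty watermark behaves like a full extraction.
first_batch, watermark = extract_incremental(source, "")

# A row changes in the source system after the first run.
source.execute(
    "UPDATE customers SET name = 'Ada L.', updated_at = '2024-01-04T00:00:00' "
    "WHERE id = 1"
)

# Second run: only the row modified after the stored watermark is returned.
second_batch, watermark = extract_incremental(source, watermark)
```

The sketch relies on ISO 8601 timestamps sorting correctly as plain strings. A timestamp watermark cannot see deleted rows, which is one reason log-based change data capture is often preferred for busy sources.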

Transform

In the staging area, the raw extracted data goes through processing and transformation to make it suitable for the intended analytical use case. This phase involves filtering, cleansing, de-duplicating, and validating the data to remove errors and inconsistencies.

To further transform data, other activities are performed that include:

- Aggregations: Combine multiple data rows into summarized or grouped results.

- Calculations: Perform mathematical operations on data fields to derive new values or insights.

- Editing text strings: Modify textual content within data fields to correct errors or enhance uniformity.

- Changing headers for consistency: Renaming column headers to ensure uniformity and ease of data interpretation.

Audits are conducted to check data quality and compliance with any regulatory requirements. Certain data fields can also be encrypted or masked for security and privacy reasons. Finally, the data is formatted into structured tables or joined schemas to match the target data warehouse format.
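
The transformation activities described above can be sketched in a few lines of plain Python. The column names, the `HEADER_MAP` mapping, and the sample rows are assumptions made up for this example; a real pipeline would normally rely on an ETL tool or a dataframe library instead.

```python
from collections import defaultdict

# Hypothetical mapping from source headers to warehouse column names.
HEADER_MAP = {"Cust Name": "customer_name", "Region ": "region", "Amt": "amount"}

def transform(raw_rows):
    """Rename headers, clean strings, validate amounts, and drop duplicates."""
    cleaned, seen = [], set()
    for row in raw_rows:
        # Change headers for consistency.
        rec = {HEADER_MAP[key]: value for key, value in row.items()}
        # Edit text strings: trim whitespace and normalize case.
        rec["customer_name"] = rec["customer_name"].strip().title()
        rec["region"] = rec["region"].strip().upper()
        # Validate: skip rows whose amount is not numeric.
        try:
            rec["amount"] = float(rec["amount"])
        except (TypeError, ValueError):
            continue
        # De-duplicate on the full cleaned record.
        key = (rec["customer_name"], rec["region"], rec["amount"])
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(rec)
    return cleaned

def aggregate_by_region(rows):
    """Aggregation: total amount per region."""
    totals = defaultdict(float)
    for rec in rows:
        totals[rec["region"]] += rec["amount"]
    return dict(totals)

raw = [
    {"Cust Name": " ada lovelace ", "Region ": "emea", "Amt": "100.50"},
    {"Cust Name": "Ada Lovelace", "Region ": "EMEA", "Amt": "100.5"},  # duplicate once cleaned
    {"Cust Name": "grace hopper", "Region ": "amer", "Amt": "n/a"},    # fails validation
    {"Cust Name": "linus torvalds", "Region ": "emea", "Amt": "50"},
]

staged = transform(raw)
region_totals = aggregate_by_region(staged)
```

Cleansing runs before de-duplication on purpose: the first two sample rows only become identical once whitespace and letter case are normalized.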

Load

In this last step, the transformed data is moved from the staging area and loaded into the target data warehouse or data mart. The load process handles aspects like:

- Monitoring: Involves real-time or periodic tracking of the loading process, performance metrics, and resource utilization.

- Notifications: Sends alerts or notifications to relevant stakeholders when specific events or conditions are met during the loading process.

- Auditing: Tracks and records activities related to data loading, providing a detailed record of changes, transformations, and user interactions.

- Error handling: Manages and addresses errors that occur during the loading process, like data format issues, constraints violations, or connectivity problems.

For most organizations, this extract-transform-load process is automated and runs as scheduled batches, usually during off-peak hours. The load phase typically starts with an initial bulk load that populates the empty data warehouse with historical data. After that, periodic incremental loads bring in new or updated data from the sources. Occasionally, a full refresh is performed to replace the existing warehouse data.
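
As a rough illustration of an initial bulk load followed by an incremental load, the sketch below upserts rows into an in-memory SQLite table, records rejected rows for error handling, and writes one audit row per run. The `dim_customer` and `load_audit` tables and the `load` helper are hypothetical, and the `ON CONFLICT ... DO UPDATE` syntax assumes SQLite 3.24 or newer.

```python
import sqlite3

def load(conn, rows):
    """Upsert rows into the target table; return (loaded_count, rejected_rows)."""
    loaded, rejected = 0, []
    for row in rows:
        try:
            conn.execute(
                "INSERT INTO dim_customer (id, name, region) VALUES (?, ?, ?) "
                "ON CONFLICT(id) DO UPDATE SET "
                "name = excluded.name, region = excluded.region",
                row,
            )
            loaded += 1
        except sqlite3.IntegrityError as exc:
            # Error handling: keep the bad row and the reason, continue loading.
            rejected.append((row, str(exc)))
    # Auditing: record what happened in this run.
    conn.execute(
        "INSERT INTO load_audit (loaded, rejected) VALUES (?, ?)",
        (loaded, len(rejected)),
    )
    conn.commit()
    return loaded, rejected

# Hypothetical target warehouse tables.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE dim_customer ("
    "id INTEGER PRIMARY KEY, name TEXT NOT NULL, region TEXT NOT NULL)"
)
warehouse.execute(
    "CREATE TABLE load_audit ("
    "run_id INTEGER PRIMARY KEY, loaded INTEGER, rejected INTEGER)"
)

# Initial bulk load of historical data.
first = load(warehouse, [(1, "Ada", "EMEA"), (2, "Grace", "AMER")])

# Incremental load: one updated row and one row that violates a constraint.
second = load(warehouse, [(2, "Grace H.", "AMER"), (3, "Linus", None)])
```

Row-by-row inserts keep the error handling easy to follow here. Production warehouses usually provide bulk-load paths that are far faster for large batches.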

Data Integration vs ETL: 6 Key Differences

While data integration and ETL have some overlap, there are also major differences between them. Let’s discuss what they are.

Scope

Data integration has a broader scope that includes use cases beyond just data warehousing. It is about combining data for various end uses like application integration, cloud data consolidation, real-time analytics, etc. ETL is specific to data warehousing workflows and is mainly used for populating and managing enterprise data warehouses.

Methods Used

Data integration employs ETL (extract, transform, load) but can also make use of other methods like ELT (extract, load, transform), data virtualization, data replication, etc. as per your different integration needs. ETL, by definition, relies on the traditional extract, transform, load approach for populating data warehouses.
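
The practical difference between ETL and ELT is where the transformation runs. The following sketch, with made-up order data and hypothetical table names, produces the same result both ways: once by transforming in application code before loading, and once by loading raw strings and transforming inside the target with SQL.

```python
import sqlite3

# Hypothetical raw records: every field arrives as an unclean string.
raw_orders = [
    ("1001", " emea ", "1999"),
    ("1002", "AMER", "500"),
    ("1003", "emea", "1001"),
]

def run_etl(conn, rows):
    """ETL: transform in application code, then load only the finished rows."""
    shaped = [
        (int(order_id), region.strip().upper(), int(cents))
        for order_id, region, cents in rows
    ]
    conn.execute(
        "CREATE TABLE orders_etl (order_id INTEGER, region TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", shaped)

def run_elt(conn, rows):
    """ELT: load raw strings untouched, then transform inside the target."""
    conn.execute(
        "CREATE TABLE orders_raw (order_id TEXT, region TEXT, amount_cents TEXT)"
    )
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", rows)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT CAST(order_id AS INTEGER) AS order_id, "
        "UPPER(TRIM(region)) AS region, "
        "CAST(amount_cents AS INTEGER) AS amount_cents "
        "FROM orders_raw"
    )

target = sqlite3.connect(":memory:")
run_etl(target, raw_orders)
run_elt(target, raw_orders)

etl_rows = target.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = target.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
```

With ELT the raw table stays available in the target, so transformations can be rerun or changed later without extracting from the source again.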

Frequency

Data integration workflows can be real-time, scheduled batch, or ad-hoc on-demand as per the requirements. ETL is traditionally associated with periodic and scheduled batch workflows for data warehouse loading.

Output Location

Data integration can deliver integrated data to any target system including databases, data lakes, cloud storage, applications, etc. ETL is closely associated with loading data into data warehouses and data marts for analytical use.

Tools Used

Data integration uses specialized integration platforms, data virtualization tools, and middleware designed to handle diverse integration needs. On the other hand, ETL relies heavily on traditional ETL tools optimized specifically for data warehousing tasks like bulk loading.

Data Volume

Data integration can handle all sorts of data volumes – from small to extremely large big data scenarios. ETL, however, typically handles high volumes of data since data warehouses aggregate large historical datasets from multiple data sources.

Beyond Traditional ETL: The Evolution of Data Management through Modern Integration Tools

As data management keeps changing, data integration tools are crucial for moving and orchestrating data across systems. Among these tools, Estuary Flow stands out as a specialized real-time data integration platform designed for efficient data migration and integration. Using real-time streaming SQL and TypeScript transformations, it transfers and refines data across various platforms.

While some platforms focus mainly on the Extract and Load components, Estuary Flow introduces a transformative layer, enhancing data in transit. Its unique transaction model upholds data integrity to reduce the risks of potential data losses or corruption.

Designed with a streaming-based architecture, it adapts to workloads of any size. Its collaboration features let team members work together on the platform regardless of their technical background.

6 Key Features Of Estuary Flow

- User control and precision: Maintains data quality with built-in schema controls.

- Expandability: Capable of managing Change Data Capture (CDC) up to 7 GB/s.

- Top-notch security: Implements encryption and authentication to fortify the data integration process.

- Legacy system compatibility: Smoothly integrates traditional systems with modern hybrid cloud platforms.

- Integrated connectors: With over 200 pre-established connectors, it easily amalgamates data from diverse sources.

- Automated operations: Features like autonomous schema management remove the need for manual intervention and help prevent data duplication.

Data Integration Use Cases: Understanding Practical Applications for Better Insights

Data integration has become important in many industries as organizations work to break down data silos and get a full picture of their business processes. Some of the top data integration examples are:

- Application Integration: Different applications can exchange data seamlessly through data integration. It can connect cloud and on-premise apps like ERP, CRM, HCM, etc. for cross-functional processes.

- Data Lake Ingestion: To perform advanced analytics on big data, you need to ingest and organize varied data types into data lakes. Data integration helps you acquire, transform, and load streaming and batch data into data lakes.

- Master Data Management: Data integration consolidates data from sources and resolves conflicts to create definitive master records. Master data like customers, products, and accounts is referenced across systems. This results in unified reference data.

- Real-Time Data Analytics: Data integration streams live transactional data and events into analytics systems for real-time monitoring, alerting, and reporting to support timely actions. It brings real-time data from databases, apps, and messaging into analytics tools.

- Self-Service Business Intelligence: When you centralize transactional data from the entire organization into a data warehouse, you get direct access to it without relying on IT assistance. This lets you independently assess financial indicators, evaluate customer metrics for segmentation, and explore other key data points.

- Unified Customer Profile: Building a complete view of customers requires bringing together data from sources like customer relationship management systems, website interactions, purchases, support tickets, etc. Data integration consolidates data across these channels to create a unified customer profile. This powers personalized marketing and experiences.

- Legacy Modernization: Data integration makes legacy system data accessible through APIs and integrates it into contemporary platforms. This way, the value of the data can be realized without a potentially risky overhaul. It also supports a phased approach to modernization, which makes the transition smoother and reduces risk.
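
To show what consolidating records into a unified customer profile can look like, here is a minimal Python sketch. The three sources, their field names, and the survivorship rule (the most recent non-empty value wins) are all assumptions for illustration; real master data management tools apply much richer matching and conflict-resolution logic.

```python
def build_profiles(*sources):
    """Merge records from several sources into one profile per customer email."""
    profiles = {}
    # Process the oldest records first so newer non-empty values overwrite them.
    records = sorted(
        (rec for source in sources for rec in source),
        key=lambda rec: rec["updated_at"],
    )
    for rec in records:
        # Match records on a normalized email address.
        key = rec["email"].strip().lower()
        profile = profiles.setdefault(key, {"email": key})
        for field, value in rec.items():
            if field in ("email", "updated_at") or value in (None, ""):
                continue  # skip the match key, metadata, and empty values
            profile[field] = value
    return profiles

# Hypothetical records for the same customer in three systems.
crm = [{"email": "Ada@Example.com", "name": "Ada Lovelace", "phone": "",
        "updated_at": "2024-01-10"}]
web = [{"email": "ada@example.com", "last_page": "/pricing",
        "updated_at": "2024-02-01"}]
support = [{"email": "ada@example.com ", "name": "A. Lovelace",
            "phone": "555-0100", "updated_at": "2023-12-01"}]

profiles = build_profiles(crm, web, support)
```

Here the CRM name replaces the older support-ticket name, while the phone number survives from the support system because the CRM value is empty.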

Examples of Data Integration in Various Industries

Here are industry-specific examples of data integration:

Retail Industry

- Inventory Management: Retailers integrate data from point-of-sale (POS) systems, inventory databases, and supply chain data to optimize stock levels, reduce overstocking or understocking, and improve order fulfillment.

- Customer Analytics: Combining data from customer loyalty programs, online and offline sales, and social media lets retailers create comprehensive customer profiles, tailor marketing campaigns, and enhance the overall shopping experience.

Healthcare Industry

- Electronic Health Records (EHR): Healthcare providers integrate patient data from EHR systems, medical devices, and laboratory results to provide a comprehensive view of a patient's medical history.

- Research & Clinical Trials: Integration of data from various clinical trials, research studies, and patient databases enables researchers to analyze large datasets and develop new treatments or therapies.

Financial Services Industry

- Trading & Investment: Investment firms integrate market data, financial news, and historical trading data to inform algorithmic trading strategies and portfolio management.

- Risk Management: Banks and financial institutions integrate data from various sources, including transaction data, credit scores, and external market data, to assess credit risk, detect fraud, and make informed lending decisions.

Manufacturing Industry

- Quality Control: Integration of data from sensors and quality control processes helps manufacturers detect defects and improve product quality.

- Supply Chain Optimization: Manufacturers integrate data from production systems, suppliers, and logistics to optimize supply chain operations, reduce lead times, and minimize production bottlenecks.

eCommerce Industry

- Fraud Detection: Integration of transaction data and user behavior patterns helps detect fraudulent activities and protect against online payment fraud.

- Recommendation Engines: eCommerce companies integrate customer behavior data, product catalog information, and historical purchase data to build recommendation engines that suggest personalized products to customers.

Energy & Utilities Industry

- Asset Management: Integration of sensor data from equipment and machinery enables predictive maintenance to reduce downtime and maintenance costs.

- Smart Grids: Energy companies integrate data from smart meters, weather forecasts, and grid sensors to optimize energy distribution, reduce outages, and improve energy efficiency.

Transportation & Logistics Industry

- Fleet Management: Logistics companies integrate data from GPS tracking, vehicle sensors, and traffic information to optimize routes and reduce fuel consumption.

- Inventory Tracking: Integration of data from warehouses, suppliers, and transportation systems helps maintain accurate inventory levels and streamline the supply chain.

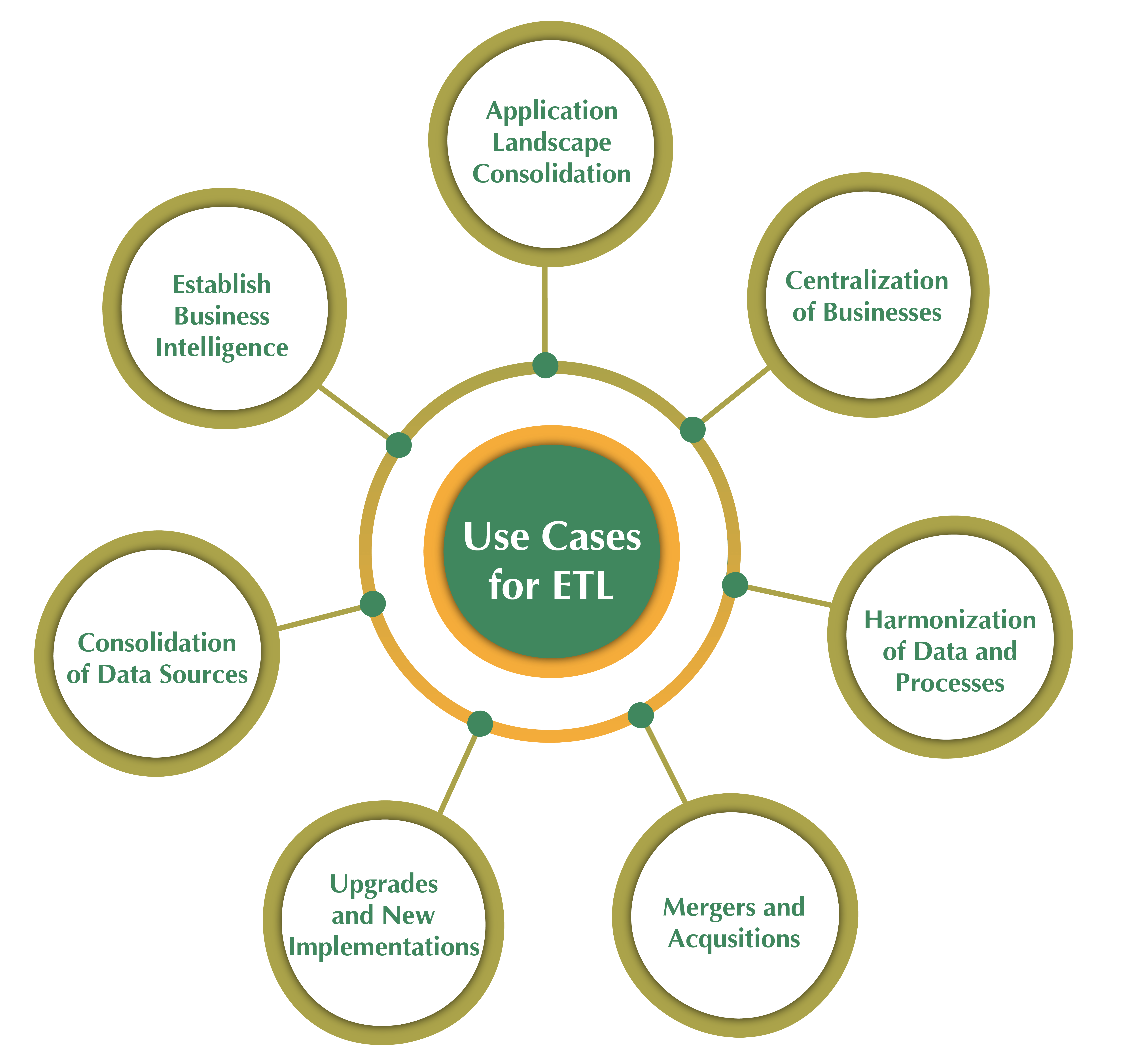

ETL Use Cases: Extracting Value for Streamlined Data Transformations

You can streamline operations and make more informed decisions if you understand the diverse applications of ETL. Here are major use cases where ETL offers business value:

- Cloud Data Warehouse Migration: Migrating an on-prem data warehouse like Teradata to a cloud option like Snowflake can be achieved via ETL.

- Data Quality & Security: ETL tools provide data masking, validation, and auditing capabilities for high-quality and data-compliant integration into warehouses.

- Testing & QA Datasets: ETL provides a quick and reusable way to load the datasets required for testing, QA, and application development by integrating data from operational systems.

- Database Administration: ETL workflows help automate repetitive DBA tasks like statistics gathering, index creation, archiving, etc. that are vital for high database performance.

- Operational Data Integration: ETL workflows can move real-time data from transaction systems into operational dashboards and analytics platforms to monitor business events.

- Enterprise Data Warehousing: ETL loads large volumes of historical data from multiple sources into enterprise data warehouses and manages incremental updates. This builds the foundation for BI.

Examples of ETL in Various Industries

Let’s look at the application of ETL in different sectors.

Media & Entertainment

- Audience Analytics: ETL processes are employed to collect data from streaming platforms, social media, and user interactions. This data is transformed and loaded for audience segmentation, content recommendations, and advertising targeting.

- Content Metadata Management: Media companies use ETL to extract metadata from various sources, transform it for consistency and accuracy, and load it into content management systems. This helps in organizing and categorizing digital assets, improving content discovery, and enhancing user experiences.

Agriculture

- Crop Monitoring: In agriculture, ETL is used to extract data from IoT sensors, satellite imagery, and weather forecasts. This data is transformed to monitor crop conditions, predict yields, and optimize irrigation and fertilization practices.

- Supply Chain Traceability: ETL processes help trace the origin and journey of agricultural products by extracting data from various checkpoints, transforming it into standardized formats, and loading it into a traceability system. This ensures food safety and quality control.

Government & Public Sector

- Census Data Processing: Government agencies use ETL to extract data from national census surveys, transform it to anonymize personally identifiable information, and load it for demographic analysis, policy planning, and resource allocation.

- Emergency Response: ETL processes are employed to gather data from emergency services, weather stations, and traffic management systems. This data is transformed and loaded to support real-time decision-making during disasters and emergencies.

Pharmaceuticals & Life Sciences

- Genomic Data Processing: ETL processes are crucial for managing and analyzing genomic data generated from DNA sequencing machines. Data extraction, transformation, and loading are necessary to identify genetic variations and disease markers.

- Clinical Trials Data Integration: In pharmaceuticals, ETL is used to extract data from different clinical trial systems, transform it into a common format, and load it into a central repository. This helps in data analysis, drug development, and regulatory compliance.

Education

- Admissions and Enrollment: ETL processes are used to manage student admissions and enrollment data, ensuring accurate and up-to-date information for decision-making, reporting, and compliance.

- Learning Analytics: Educational institutions extract data from learning management systems, student records, and online assessments. This data is transformed for analyzing student performance, identifying learning trends, and improving teaching methods.

Real Estate

- Property Valuation: In real estate, ETL is employed to extract data from property databases, transform it by incorporating market trends and comparables, and load it for property valuation and investment analysis.

- Customer Relationship Management (CRM): ETL processes help real estate agencies manage customer data by extracting it from various communication channels, transforming it for unified profiles, and loading it into CRM systems for better customer engagement and sales.

ETL vs. Data Integration: Choosing the Right Approach for Your Needs

The decision to use ETL or data integration depends on your particular use case and the nature of your data environment. Data integration platforms generally offer greater scalability and user-friendliness, whereas ETL needs more niche expertise and often comes with heftier setup and maintenance costs.

However, ETL performs better at managing high-volume batch workflows like filling enterprise data warehouses. On the other hand, data integration is more suitable for processing low-latency, real-time data streams.

| ETL Works Best For | Data Integration Works Best For |
| --- | --- |
| Initial historical loads into a data lake | Consolidating siloed data across the organization |
| Aggregating data for business intelligence dashboards | Data transfer between cloud services and on-prem systems |
| Bulk loading of data warehouses on a scheduled basis | Enabling cross-platform data exchange via RESTful APIs and message queues |
| Migrating data from legacy systems into a new data warehouse | Implementing microservices architecture with event-driven data synchronization |
| Enabling real-time data synchronization between applications | Near real-time data movement using APIs and change data capture |
| Streamlining data ingestion from IoT devices for real-time analytics | Supporting data federation for federated querying across heterogeneous sources |

Conclusion

In the data integration vs ETL debate, it's not a matter of ETL being better than data integration or vice versa – it's more about figuring out what works best for you. Understanding when to deploy data integration's versatile bridge-building capabilities or when to opt for ETL's powerful data transformation and movement abilities is the key here.

Even though they share similarities, their differences in scope, frequency, implementation, and use cases set them up for specific jobs. The best strategy is to combine these approaches for different integration needs.

With its real-time streaming data platform, Estuary Flow provides a modern way to tackle ETL and data integration challenges. Its automated schema management and transformation capabilities seamlessly move and refine data for analytics. Flow enables you to handle data integration vs ETL use cases in a simple, cost-effective way.

Sign up for free or connect with our team to explore how Flow can answer your data needs.
