Peek behind the curtain of modern businesses and you'll find a busy marketplace where data-driven decisions rule the roost. Today, the efficiency of decision-making depends heavily on the quality and accessibility of integrated data. Data integration in data mining can turn chaotic information into valuable insights – insights that drive innovation, streamline processes, and help companies become the best at what they do.

But here's the twist: a Forrester report revealed that 60-70% of all data goes untouched, lying dormant without ever being analyzed for insights. Many brands still lack the technology required to extract value from their data. The result is untapped potential and missed opportunities – a gap that comprehensive data integration in data mining can help close.

If your business is looking to harness the power of data integration, you're on the right page. This guide covers the essentials of data integration in data mining – from why it matters to the different types of tools and techniques and how to handle the issues that come with it.

What Is Data Integration?

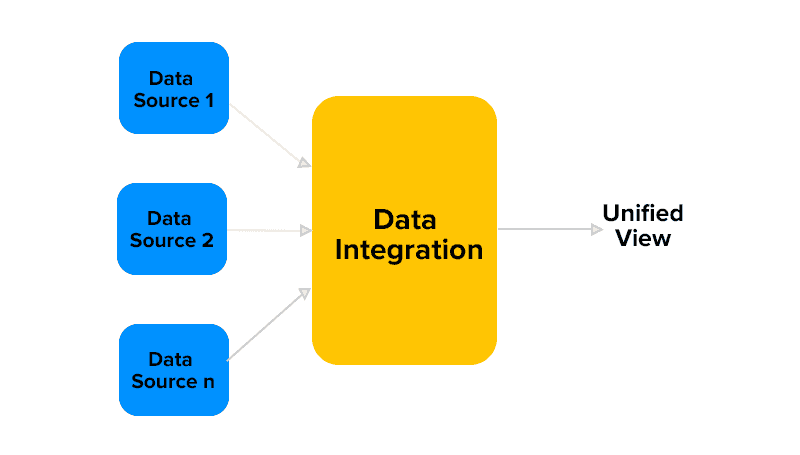

Data integration is, essentially, the process of consolidating data from multiple sources into a unified and consistent view. It accesses multiple data sources and transforms their data into a standard format so it is easier to interpret.

Data integration becomes important when data is spread across different platforms or systems. It simplifies data access and analysis and improves efficiency.

Enterprise data integration has many uses, mainly in:

- Analytics

- Data warehousing

- Business intelligence

- Master data management

Considering how complex and varied data sources can be, it becomes difficult to maintain data reliability and value when combining data. You need to carefully plan and execute the whole process. Fortunately, there are different data integration solutions to make this whole task smoother.

What Is Data Integration in Data Mining?

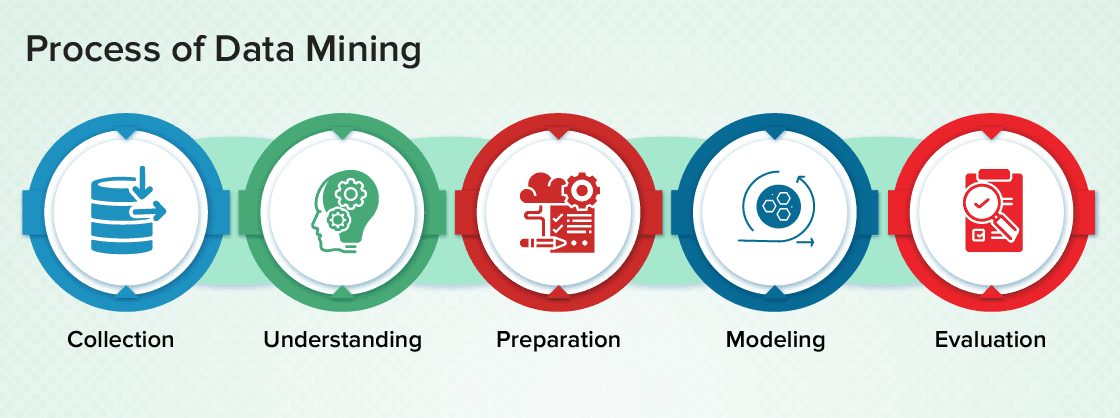

Data integration plays a big role in data mining. It reconciles differences in format and meaning (semantics) across sources so that advanced mining techniques can be applied to a broad, high-value body of data. Through data integration, the processes of wrangling, cleansing, and standardizing data come together to create integrated datasets suitable for mining.

Data mining is about extracting valuable patterns and insights from large datasets.

Imagine having loads of data in different formats and structures; combining this data becomes crucial to make sense of it all. This combination and transformation of data for analysis is what data integration is all about in data mining.

With properly integrated data as its foundation, data mining delivers deep insights through predictive modeling, pattern discovery, and knowledge extraction.

The (G, S, M) Approach

In data mining, data integration is commonly formalized as the (G, S, M) approach, which has three components:

- G (Global Schema): This is a unified structure that defines how the combined data will look after integration.

- S (Heterogeneous Source): Refers to the diverse data sources which could be databases, file systems, or other repositories.

- M (Mapping): It serves as the bridge between G and S. It maps the data from its source format into the global schema.

For instance, when integrating employee data from 2 different HR databases:

- Our global schema (G) defines a standard structure for the data, like EmployeeID and Name.

- The heterogeneous data sources (S) would represent the individual database structures which can vary in their naming and data formatting conventions.

- The mapping (M) then serves as a guide and indicates how data from these different sources should be transformed and aligned within the global schema.
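To make the (G, S, M) idea concrete, here is a minimal Python sketch of the HR example. The source field names (`emp_no`, `full_name`, `ID`, `first`, `last`), the sample rows, and the `A-` prefix used to keep IDs from the two systems distinct are all hypothetical; real integration tools usually express mappings declaratively rather than as hand-written functions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


# G: the global schema every integrated record must follow.
@dataclass(frozen=True)
class Employee:
    employee_id: str
    name: str


# S: two heterogeneous sources (hypothetical sample rows) with their own conventions.
hr_system_a = [{"emp_no": 101, "full_name": "Ada Lovelace"}]
hr_system_b = [{"ID": "B-7", "first": "Alan", "last": "Turing"}]

# M: one mapping per source, translating a source row into the global schema.
# System A's numeric IDs get an "A-" prefix so they cannot collide with system B's IDs.
MAPPINGS: Dict[str, Callable[[dict], Employee]] = {
    "hr_system_a": lambda r: Employee(employee_id=f"A-{r['emp_no']}", name=r["full_name"]),
    "hr_system_b": lambda r: Employee(employee_id=r["ID"], name=f"{r['first']} {r['last']}"),
}


def integrate(sources: Dict[str, List[dict]]) -> List[Employee]:
    """Apply each source's mapping and return all records in the global schema."""
    return [MAPPINGS[name](row) for name, rows in sources.items() for row in rows]


employees = integrate({"hr_system_a": hr_system_a, "hr_system_b": hr_system_b})
```

Adding a third HR system would mean adding one entry to `MAPPINGS`; the global schema and any downstream mining code stay unchanged, which is the main payoff of keeping G, S, and M separate.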

What Makes Data Integration Important in Data Mining?

Here are some of the major benefits of data integration in data mining.

Detailed Analysis

Data mining’s main objective is to extract actionable insights from comprehensive datasets. When data is integrated from different sources, analysts get a broader, more comprehensive canvas to work on.

Isolated datasets, no matter how large, can miss or obscure important patterns and correlations that only emerge when data sources are combined. Bringing data together from different sources both deepens the analysis and reveals broader insights.

Quality of Insights

Data is the foundation on which insights are built. The clearer and more consistent this foundation, the more dependable the insights become. Through data integration, discrepancies are minimized, redundancies are removed, and a more unified view emerges. This process helps generate insights that are not only reliable but also actionable.

Optimization & Productivity

The integration process serves as a funnel that directs multiple data streams into a single, unified channel. This optimization means that rather than going through different datasets individually, you can focus on a single, more comprehensive dataset.

This consolidation not only saves time but also reduces the computational work of processing each dataset separately, making the analysis process more streamlined and productive.

Scalability

As businesses expand, both data diversity and volume increase. Data integration provides a flexible framework for adding new data streams while keeping mining methodologies consistent and results reliable.

Operational Efficiency

Data integration goes beyond simply combining datasets. Queries against the global schema combine data from different sources for in-depth mining, and the integration process equally enforces consistent data quality standards. This shapes the resulting insights and builds a consistent data narrative that resonates throughout different organizational levels.

Better Insights

Today, any information that goes unexamined is a potential missed opportunity. The sheer scope of integrated datasets, combined with advanced data mining techniques, lets us discover highly granular, detailed insights.

Types of Data Integration Tools

Let’s explore the 2 types of data integration tools and see what each has to offer.

On-Premise Data Integration Tools

On-premise data integration tools are installed directly on an organization's own servers and infrastructure, rather than relying on an external cloud provider. This gives the organization complete control over the entire integration process, architecture, and security.

On-premise tools are highly customizable and can be adjusted to fit your existing IT infrastructure. Because the tools sit within the organization's controlled environment, you get strict control over data security, privacy, and governance policies. However, you need to actively manage and maintain the integration platform yourself.

Here are 5 scenarios and use cases where on-premise data integration tools should be preferred over cloud-based tools:

- Legacy Systems Integration: If your organization heavily relies on legacy systems or older technology that is not easily compatible with cloud services, on-premise tools offer a smoother integration process.

- Data Security & Compliance: If your organization operates in a highly regulated industry with strict data security and compliance requirements, on-premise tools offer greater control over sensitive data. They can reduce the risk of data breaches and make it easier to comply with industry regulations.

- Data Sensitivity & Privacy: When dealing with extremely sensitive data like proprietary research, trade secrets, or classified information, organizations prefer to keep data within their physical infrastructure to minimize exposure to potential cloud vulnerabilities or unauthorized access.

- Large Data Volumes & Latency: Processing and transferring large volumes of data to and from the cloud can introduce latency and bandwidth constraints. On-premises tools allow for quicker data movement and integration within your local network which can be beneficial when dealing with time-sensitive processes or massive datasets.

- Data Residency & Geographic Restrictions: Some industries or regions have strict regulations regarding where data can be stored and processed. On-premises solutions provide more control over data residency and ensure that data stays within specific geographical boundaries. This addresses compliance concerns related to data sovereignty.

Cloud-Based Data Integration Tools

Cloud-based integration platforms have gained popularity in recent years because they offer scalable, accessible integration across applications and modern systems. With the cloud delivery model, you can deploy these tools quickly without extensive on-premise infrastructure, which is attractive for businesses that want to get started fast and cut operational costs.

One great thing about cloud integration platforms is how easily they connect systems across locations. Their integration capabilities support smooth data movement and uniform access integration no matter where the systems are hosted, which makes it easy to link interfaces and exchange data between on-premise, SaaS, and cloud setups.

Here are 5 situations and examples of when cloud-based data integration tools are preferable to on-premise tools:

- Cost Efficiency: Opt for cloud-based solutions when you want to pay only for what you use, avoiding upfront hardware costs and ongoing maintenance expenses.

- Scalability & Adaptability: Choose cloud-based tools when you need to handle variable workloads and data volumes as cloud platforms allow for easy resource scaling.

- Speedy Deployment: If you need quick data integration setup, go for cloud-based options as they allow faster provisioning and implementation compared to on-premise solutions.

- Remote Access: When data integration needs to happen across different locations or teams, cloud-based tools are the way to go because of their accessibility from anywhere with an internet connection.

- Managed Services: If you prefer to focus your IT resources on strategic tasks rather than routine maintenance, cloud-based tools are ideal as they often include managed services for updates, security, and upkeep.

Simplify Data Integration With Estuary Flow

Estuary Flow is our comprehensive ETL tool designed for seamless data migration and integration. It uses real-time streaming SQL and TypeScript transformations for fast, continuous transfer and transformation of your data. Flow integrates data from different cloud platforms, databases, and SaaS applications, making it a versatile tool for different operational needs.

- Integrated connectors: Over 100 pre-built connectors simplify integration from different sources.

- Real-Time CDC: Supports incremental data loading to optimize costs and integration performance.

- Security measures: Encryption, authentication, and authorization features protect the integration process.

- Safety protocols: In-built measures prevent unauthorized access or misuse and maintain data quality.

- Efficient data management: Real-time ETL capabilities accommodate various data formats and structures.

- Monitoring & reporting: Live updates provide an overview of the integration process for proactive management.

- Automated operations: Autonomous schema management and data deduplication eliminate redundancies.

- Comprehensive customer views: Provides real-time insights enriched by historical data for personalized interactions.

- Expandability: A distributed system that can handle change data capture (CDC) up to 7GB/s, adjusting to data volume needs.

- Legacy system connectivity: Facilitates seamless transitions between traditional systems and modern hybrid cloud setups.

- User control & precision: Built-in schema controls to maintain data quality and consistency throughout the integration process.

Data Integration Approaches In Data Mining

To effectively integrate and analyze data from different sources, you should first understand the 2 main data integration approaches. Let’s discuss each in detail.

Tight Coupling Approach

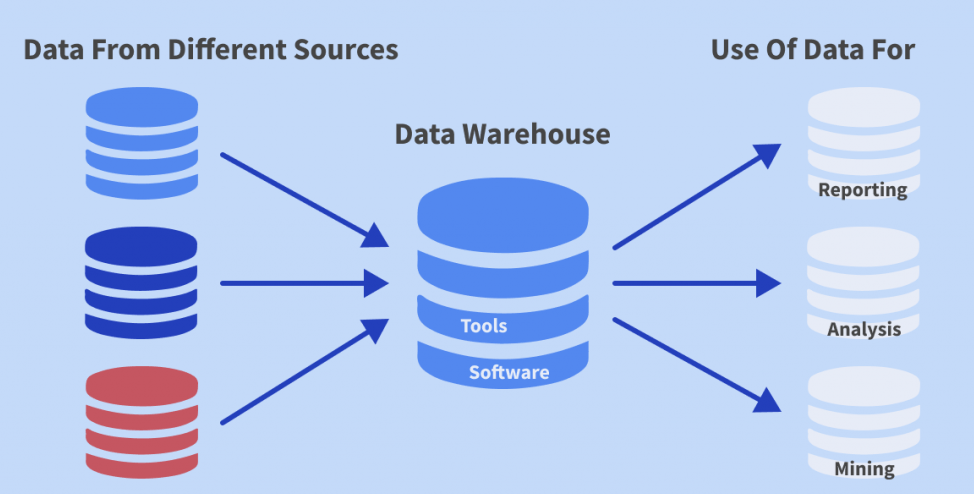

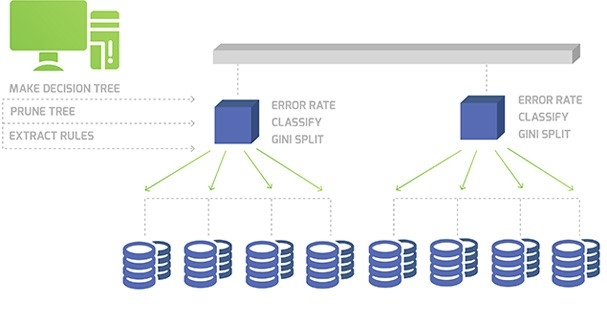

In this method, a centralized repository, often a data warehouse, is created to unify and store data from different sources.

- Centralized Repository: Data is brought into a unified data warehouse.

- Integration Mechanism: Integration happens at a coarse level, involving entire datasets or schemas.

- Process Involved: The ETL processes – Extraction, Transformation, and Loading – are used for data collection and integration.

- Strengths & Weaknesses: It provides excellent data consistency and integrity but is less flexible when sources change, since updates must pass through the ETL pipeline before they reach the warehouse.
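Here is a minimal sketch of the tight coupling flow, assuming two made-up sources (a CRM CSV export and a list of web orders) and using Python's built-in `sqlite3` in-memory database as a stand-in for a real data warehouse. Each function corresponds to one ETL step; the table and column names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source extracts: a CSV export and records from a web-orders API.
CRM_CSV = "customer_id,country,revenue\n1,US,120.5\n2,DE,80\n"
WEB_ORDERS = [{"cust": 3, "country_code": "us", "total": "42.00"}]


def extract():
    """E: pull raw rows from each source."""
    return list(csv.DictReader(io.StringIO(CRM_CSV))), WEB_ORDERS


def transform(crm_rows, web_rows):
    """T: convert both sources into one (customer_id, country, revenue) shape."""
    rows = [(int(r["customer_id"]), r["country"].upper(), float(r["revenue"])) for r in crm_rows]
    rows += [(int(r["cust"]), r["country_code"].upper(), float(r["total"])) for r in web_rows]
    return rows


def load(conn, rows):
    """L: write the unified rows into the central warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer_id INTEGER, country TEXT, revenue REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(warehouse, transform(*extract()))

# Mining and reporting queries now run against one consolidated table.
totals = dict(
    warehouse.execute(
        "SELECT country, SUM(revenue) FROM sales GROUP BY country ORDER BY country"
    ).fetchall()
)
```

Note that the web order's lowercase `us` only lines up with the CRM's `US` because the transform step normalizes it; this up-front cleanup is where tight coupling gets its consistency, and also why a source change means touching the pipeline.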

Loose Coupling Approach

This approach promotes data integration at granular levels without the need for central storage. Data remains in its initial location and is accessed when needed.

- Repository Requirement: No centralized storage is necessary – a major differentiator that sets it apart from the tight coupling approach.

- Integration Mechanism: Integration happens at the level of individual records or specific data elements.

- Query Processing: Queries from users are transformed to make them compatible with source databases, fetching data directly from their original location.

- Strengths & Weaknesses: It offers flexibility and ease of updates but staying consistent and maintaining data quality across multiple sources can be challenging.
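The sketch below illustrates loose coupling with a tiny mediator, assuming two hypothetical source databases (simulated with in-memory `sqlite3`) that use different table and column names. Instead of copying data into a central store, the mediator rewrites one global request into each source's own SQL and merges the answers at query time. A real mediator would parse arbitrary queries; here the rewrite rules are fixed templates for simplicity.

```python
import sqlite3


def make_source(ddl, insert_sql):
    """Create an in-memory database standing in for one autonomous source system."""
    conn = sqlite3.connect(":memory:")
    conn.execute(ddl)
    conn.execute(insert_sql)
    return conn


# Two sources with different schemas; their data stays where it is.
eu_db = make_source(
    "CREATE TABLE clients (client_name TEXT, nation TEXT)",
    "INSERT INTO clients VALUES ('Acme GmbH', 'DE'), ('Bolt SA', 'FR')",
)
us_db = make_source(
    "CREATE TABLE customer (name TEXT, country_code TEXT)",
    "INSERT INTO customer VALUES ('Cobalt Inc', 'US')",
)

# Rewrite rules: how the global "customers by country" query maps onto each source schema.
SOURCES = [
    (eu_db, "SELECT client_name FROM clients WHERE nation = ?"),
    (us_db, "SELECT name FROM customer WHERE country_code = ?"),
]


def customers_in(country):
    """Mediator: run the rewritten query against every source and merge the live results."""
    results = []
    for conn, source_sql in SOURCES:
        results.extend(row[0] for row in conn.execute(source_sql, (country,)))
    return sorted(results)


before = customers_in("US")
us_db.execute("INSERT INTO customer VALUES ('Delta LLC', 'US')")  # update at the source only
after = customers_in("US")
```

Because nothing is copied, the row added to `us_db` shows up in `after` without any reload step, which is the flexibility described above; the trade-off is that every query depends on all sources being reachable and mutually consistent.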

The 5 Different Data Integration Techniques in Data Mining

Let’s now discuss different data integration methods and understand the role they play.

Manual Integration

The most straightforward data integration approach is to manually gather, clean, and integrate data. While this method can be precise, it is best suited for businesses with smaller datasets.

Manual integration gives analysts a hands-on approach with complete control over the process. However, scalability is its major issue: as datasets grow, manual integration becomes tedious and time-consuming, which makes it inefficient for larger datasets.

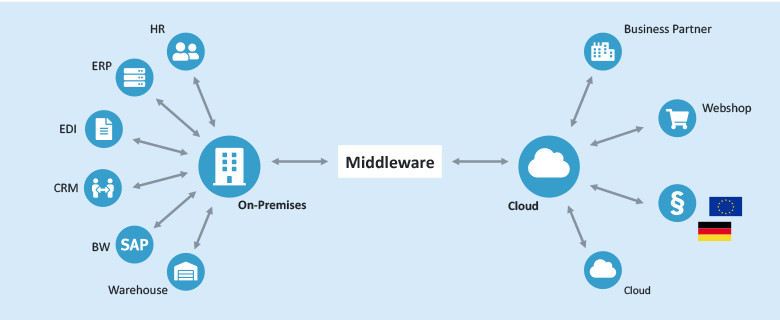

Middleware Integration

Middleware integration acts as an intermediary link that eases data flow between older legacy systems and newer, modern databases. Using middleware software, you can collect, normalize, and transport data to the target dataset.

Its biggest advantage is smoothing transitions between systems. But remember, it might be system-specific and may not cater to all integration needs.

Application-Based Integration

This technique uses software applications to automate the extraction, transformation, and loading (ETL) of data. It not only streamlines the entire data integration process but is also a more efficient way to make data from different sources compatible.

The major benefit here is the significant reduction in time and effort. However, designing and implementing such applications can be complex and requires a specialized technical skill set.

Uniform Access Integration

Uniform Access Integration is unique in its approach to data integration. While it combines data from various sources, it keeps the data in its original location. The end-user gets a unified view, representing the integrated data without any physical movement of the data itself.

This technique can be highly beneficial for businesses that require a comprehensive view without additional storage overheads. The challenge, however, is that it might not be as efficient for operations that demand intensive data manipulations.

Data Warehouse Services

Data warehousing goes a step further than uniform access integration. While it provides a unified view of data, this view is stored in a specific repository known as a data warehouse. It helps in situations where high-speed query responses are needed.

Data warehousing provides a consolidated storage solution for business data and helps you manage complex queries with ease. However, while the technique provides faster query speeds, it does mean higher storage and maintenance costs.

What Are the Main Data Integration Issues in Data Mining?

While data integration in data mining is valuable, it comes with its set of challenges. Let’s look at the most common challenges that businesses face.

Entity Identity Troubles

Integrating data from heterogeneous sources often makes it difficult to match real-world entities. For instance, one source may call an attribute ‘customer identity’ while another calls the same attribute ‘customer number’. Thorough metadata analysis can resolve many of these discrepancies so that attributes from different sources align accurately within the target system.
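One simple way to act on metadata is a synonym dictionary that maps each source's attribute names to a canonical name before records are matched. The sketch below assumes a hand-maintained dictionary (`SYNONYMS`) and hypothetical records; production systems often layer fuzzy string matching or data-type and value-distribution checks on top of this.

```python
import re

# Hypothetical metadata dictionary: canonical attribute name -> known source spellings.
SYNONYMS = {
    "customer_id": {"customer id", "customer identity", "customer number", "cust no"},
}


def canonical(attr):
    """Normalize an attribute name, then map it to its canonical form if it is a known synonym."""
    key = re.sub(r"[^a-z0-9]+", " ", attr.lower()).strip()
    for target, aliases in SYNONYMS.items():
        if key in aliases:
            return target
    return key.replace(" ", "_")


def align(record):
    """Rename every attribute of a record to its canonical form."""
    return {canonical(k): v for k, v in record.items()}


crm = align({"Customer Identity": "C-17", "Email": "ada@example.com"})
billing = align({"customer_number": "C-17", "Balance": 250})

# Once attribute names agree, records describing the same entity can be merged on the shared key.
merged = {**crm, **billing} if crm["customer_id"] == billing["customer_id"] else None
```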

Redundancy & Correlation Analysis

Redundancy is a major challenge. For example, if the combined data contains both age and date of birth, age is redundant because it can be derived from the birth date. The issue gets trickier when attributes are not directly derivable but are still strongly interdependent across datasets; correlation analysis (such as Pearson's correlation coefficient for numeric attributes or the chi-square test for categorical ones) helps detect these cases. Dealing with these complexities requires careful examination so that the integrated data is compact and free of unnecessary redundancy.
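The sketch below shows both kinds of check on hypothetical merged rows: an exact test of whether `age` can be recomputed from `dob`, and a hand-written Pearson correlation coefficient that flags strongly correlated numeric attributes. The fixed `today` date and the sample data are assumptions chosen to make the example reproducible.

```python
from datetime import date
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def age_on(dob, today):
    """Derive age in whole years; subtract one if the birthday hasn't happened yet this year."""
    return today.year - dob.year - ((today.month, today.day) < (dob.month, dob.day))


# Hypothetical merged rows that carry both a date of birth and a stored age.
rows = [
    {"dob": date(1990, 5, 1), "age": 34},
    {"dob": date(1985, 1, 20), "age": 39},
    {"dob": date(2000, 12, 31), "age": 23},
]
today = date(2024, 6, 1)

# 1) Exact redundancy: age can be recomputed from dob, so storing it adds nothing.
age_is_derivable = all(age_on(r["dob"], today) == r["age"] for r in rows)

# 2) Statistical redundancy: a coefficient near +1 or -1 flags strongly correlated attributes.
birth_years = [r["dob"].year for r in rows]
r_value = pearson(birth_years, [r["age"] for r in rows])
```

Here `age_is_derivable` is `True` and `r_value` is very close to -1, so either check would suggest dropping `age` from the integrated dataset.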

Data Conflicts

Merging data from different sources can sometimes cause conflicts. These arise when the same attribute has different values or representations across datasets. For example, a hotel’s price might be stored in different currencies by sources in different regions. Resolving these discrepancies is essential to keep the integrated data reliable.
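A common fix for representation conflicts is to convert every value into one agreed unit before comparing or merging. The sketch below assumes a fixed, hypothetical exchange-rate table and uses `Decimal` to avoid floating-point rounding errors in prices; a real pipeline would use dated rates from an authoritative source.

```python
from decimal import ROUND_HALF_UP, Decimal

# Hypothetical, fixed conversion rates to USD (illustrative only).
RATES_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10"), "GBP": Decimal("1.25")}


def to_usd(amount, currency):
    """Resolve a representation conflict by converting a price into the common currency."""
    value = Decimal(amount) * RATES_TO_USD[currency]
    return value.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# The same hotel room as reported by two regional sources.
listings = [
    {"hotel": "Harbor Inn", "price": "200", "currency": "EUR", "source": "eu_site"},
    {"hotel": "Harbor Inn", "price": "176", "currency": "GBP", "source": "uk_site"},
]

normalized = [{**row, "price_usd": to_usd(row["price"], row["currency"])} for row in listings]

# After normalization, any remaining disagreement is a genuine value conflict.
prices = {row["price_usd"] for row in normalized}
conflict = len(prices) > 1
```

With these assumed rates, both listings resolve to 220.00 USD, so `conflict` is `False`; a real mismatch would surface as more than one distinct value and could be routed for review.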

Data Quality & Heterogeneity

Inconsistencies or missing values can compromise the accuracy of the integrated dataset. Also, integrating data from sources with different formats, terminologies, or structures can be complex and requires advanced data integration techniques to align the data.
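The sketch below shows one way to handle heterogeneous formats and missing values together: try a list of known date formats, normalize matches to ISO 8601, and record anything missing or unrecognized as `None` so it can be counted and reviewed. The format list is an assumption; note that `%d/%m/%Y` reads `05/03/2024` as 5 March, which is only correct if that source is known to use day-first dates.

```python
from datetime import datetime

# Date formats assumed to appear across the (hypothetical) sources.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def parse_date(raw):
    """Return an ISO 8601 date string, or None when the value is missing or unrecognized."""
    if raw is None:
        return None
    text = str(raw).strip()
    if not text:
        return None
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # not this format; try the next one
    return None


raw_values = ["2024-03-05", "05/03/2024", "Mar 05, 2024", "", None, "03.05.24"]
cleaned = [parse_date(v) for v in raw_values]
missing = sum(v is None for v in cleaned)
```

For this sample input, three values normalize to `2024-03-05`, and three (the empty string, `None`, and the unrecognized `03.05.24`) are counted as missing.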

Data Security, Privacy, & Governance

As data is gathered from various sources, worries about security and privacy increase. Following regulatory standards becomes vital to prevent breaches and maintain trust. Also, managing the accuracy, consistency, and timeliness of the integrated data becomes both challenging and important.

Technical & Scalability Issues

Integrating large datasets is resource-intensive. The process should remain scalable as data volumes grow. Also, the technical demands of data integration, from data modeling to employing ETL tools, require specialized expertise to be carried out effectively.

The Takeaway

Data integration in data mining isn't just some tool you use on the side. It's the backbone holding up the whole structure of efficient and effective data analysis. It keeps us safe from the hazards of data fragmentation and the danger of not seeing the full picture. At the same time, it acts like a bridge connecting raw data to insights, turning numbers into actionable knowledge.

With its real-time ETL capabilities, Estuary Flow facilitates swift and continuous data migration and integration. Its automated schema management eliminates redundancies while built-in controls maintain consistency. This makes Flow an efficient, scalable, and secure way to integrate data from different sources for enriched data mining and analytics.

To learn more about how Flow can support your data integration needs, try it out for free or contact our team for further assistance.

About the author

With over 15 years in data engineering, the author is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles