Data integration isn't a new process, but it has become more relevant as the volume of data generated today keeps growing. It has evolved from a conventional process into a mission-critical activity for organizations dealing with vast amounts of data. But as simple as it may sound, data integration presents many challenges on the way to successful data management and utilization.

Social media platforms, IoT devices, mobile applications, and countless other digital touchpoints all contribute to data generation – a staggering 328.77 million terabytes of data each day. Only with data integration can you combine different pieces of this massive amount of data in a way that helps you get meaningful insights and tap into its full potential.

However, every business is unique, and so are its data integration needs. That makes it all the more important to understand the challenges involved. This guide covers the 12 most common data integration challenges and how to overcome them.

What Is Data Integration?

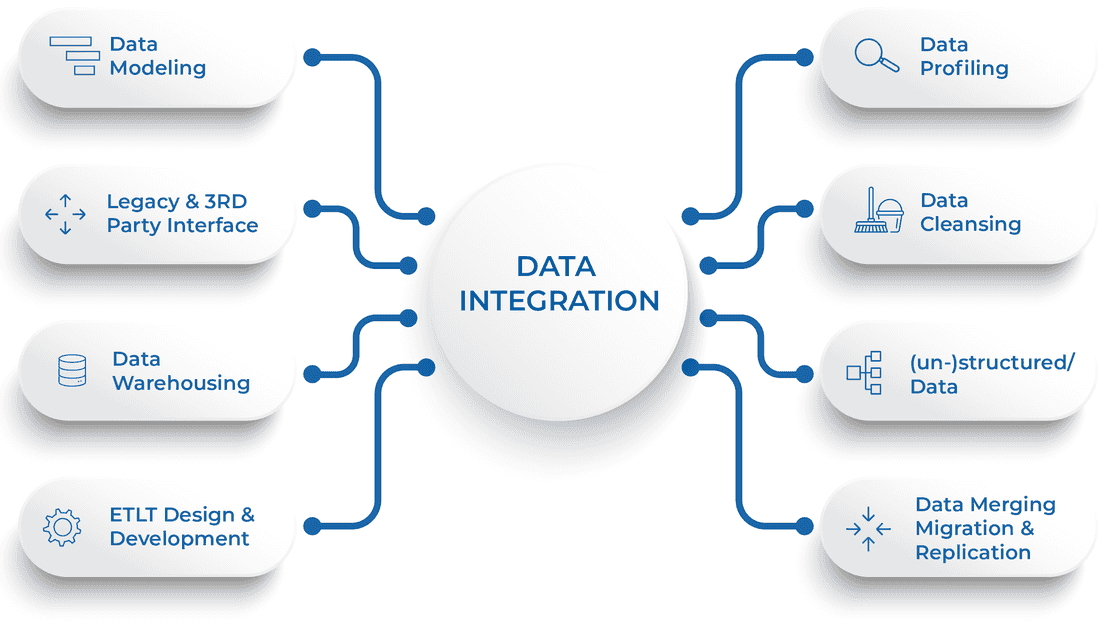

Data integration is a process that involves merging data from various sources to provide a unified view. This process is important in making sense of the vast amounts of data that businesses generate and collect from different systems, both internally and externally.

The real advantage of data integration is its flexibility: it can be adapted to the unique needs of each business. Any data integration solution includes three common elements: a network of data sources, a master server, and clients that access data from this server.

Key steps in the data integration process include:

- Gathering and assessing business and technical requirements

- Generating data profiling or assessment reports of the data to be integrated

- Identifying the gap between integration requirements and assessment reports

- Designing the key elements of the integration, such as the architecture, trigger criteria, new data model, data cleansing rules, and the technology to be used

- Implementing the designed integration process

- Verifying, validating, and monitoring the accuracy and efficiency of the data integration process
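To make the profiling step more concrete, here is a minimal sketch of a data profiling report using only Python's standard library. The `profile_records` function and the sample records are hypothetical; dedicated profiling tools compute far richer statistics, but the core idea is the same: count nulls, distinct values, and observed types per column so gaps between the data and the integration requirements become visible.

```python
from collections import Counter
from typing import Any


def profile_records(records: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Build a simple per-column profile: null count, distinct count, observed types."""
    columns = {key for record in records for key in record}
    report: dict[str, dict[str, Any]] = {}
    for column in sorted(columns):
        # A missing key is treated the same as an explicit None.
        values = [record.get(column) for record in records]
        non_null = [v for v in values if v is not None]
        report[column] = {
            "nulls": len(values) - len(non_null),
            # repr() keeps 1 and "1" distinct and works for unhashable values.
            "distinct": len(set(map(repr, non_null))),
            "types": dict(Counter(type(v).__name__ for v in non_null)),
        }
    return report


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "a@example.com", "signup": "2024-01-05"},
        {"id": 2, "email": None, "signup": "05/01/2024"},
        {"id": "3", "email": "c@example.com"},
    ]
    for column, stats in profile_records(sample).items():
        print(column, stats)
```

Mixed types (such as an `id` stored as both integer and string) or unexpected null counts in a report like this are exactly the gaps the next step is meant to identify.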

While data integration offers immense benefits, it also presents a host of challenges. Overcoming them requires a combination of robust data integration strategies, efficient data processing tools, and stringent data governance policies.

For a better understanding of these challenges and how you can overcome them, let’s look at them in detail.

12 Data Integration Challenges & Their Tested Solutions

Data integration challenges arise from the inherent complexity of dealing with diverse data sources, large volumes of data, and the need for real-time data processing. On top of that, problems with data quality, security, and privacy make these challenges even more complicated.

Let’s dive right into 12 of the most common data integration challenges and their solutions.

Establishing Common Data Understanding

While data is crucial in any business, different teams can interpret and use data differently, which causes inconsistencies. For effective data integration, there must be a common understanding of the data for consistent usage across teams.

Creating a shared understanding of data can be tough. It requires clear communication, teamwork, and alignment among teams, along with well-defined data standards and usage policies. Without it, data integration runs into errors, inefficiencies, and potential disputes between teams.

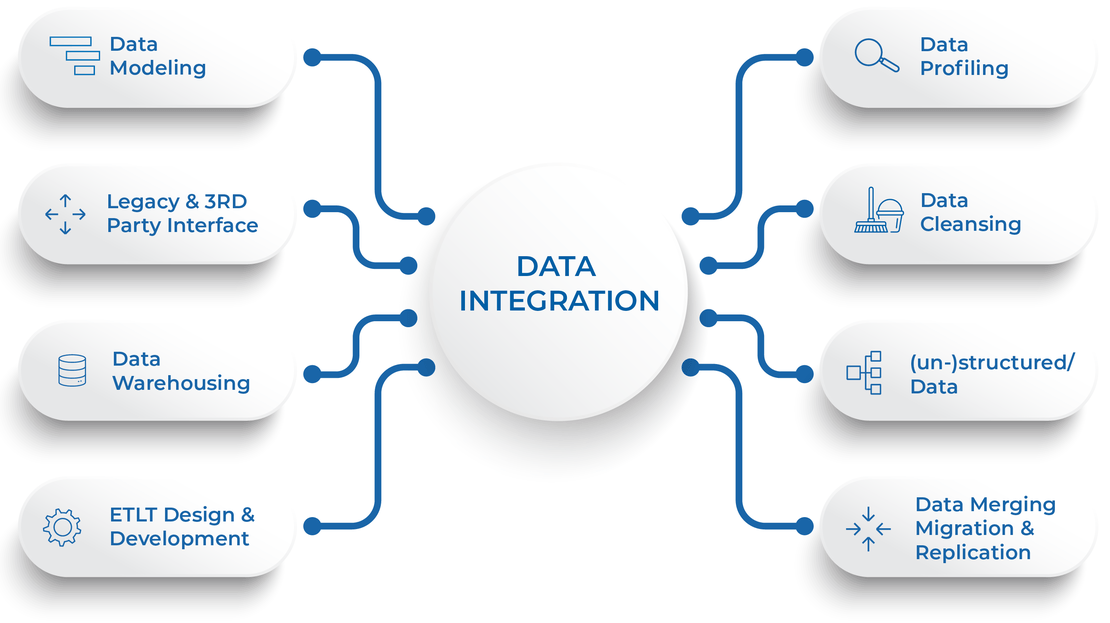

Solution: Data Governance & Data Stewardship

To create a shared understanding of data for integration, combine data governance with data stewardship.

- Implement data governance and data stewardship: Together, they create a common data language that ensures data is interpreted and used consistently, which makes data integration more efficient.

- Assign data stewards: Use data stewards to guide data strategy, implement policies, and connect the IT team with business planners. This ensures compliance with data standards and policies across teams.

Understanding Source & Target Systems

Understanding source and target systems is a major challenge that organizations face. The source system is the original location where the data is stored, while the target system, such as a data warehouse, is the destination where the data needs to be transferred.

The challenge arises because data can come from a wide variety of data storage systems. Also, data in these systems might change at varying speeds, which makes the integration process even harder.

Solution: Training, Documentation, & Data Mapping Tools

To overcome this challenge, a multi-faceted approach is necessary.

- Conduct training: Train your team on the specifics of source and target systems, including data creation, storage, and change protocols to equip them for handling data integration complexities.

- Create thorough documentation: Maintain detailed records of source and target systems, including information about data structures, formats, change protocols, and change rates to help in understanding and problem-solving.

- Leverage data mapping tools: Employ data mapping tools to visualize data structure and relationships. This makes it easier to understand data storage and changes, and to automate data mapping, saving time and reducing the risk of errors.
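At its core, a data mapping tool maintains a field-by-field correspondence between source and target. The hypothetical sketch below expresses such a mapping as plain Python data so it can be reviewed, versioned, and applied automatically. The source field names (`CustID`, `Name`, `Created`), the target names, and the `MM/DD/YYYY` date format are assumptions for illustration only.

```python
from datetime import datetime
from typing import Any, Callable

# Hypothetical mapping spec: target field -> (source field, conversion function).
FieldMapping = dict[str, tuple[str, Callable[[Any], Any]]]

CRM_TO_WAREHOUSE: FieldMapping = {
    "customer_id": ("CustID", int),
    "full_name": ("Name", str.strip),
    # Assumption: the source stores dates as MM/DD/YYYY; the target expects ISO 8601.
    "signup_date": ("Created", lambda s: datetime.strptime(s, "%m/%d/%Y").date().isoformat()),
}


def apply_mapping(source_row: dict[str, Any], mapping: FieldMapping) -> dict[str, Any]:
    """Translate one source record into the target schema, failing loudly on missing fields."""
    target_row = {}
    for target_field, (source_field, convert) in mapping.items():
        if source_field not in source_row:
            raise KeyError(f"source field {source_field!r} missing for {target_field!r}")
        target_row[target_field] = convert(source_row[source_field])
    return target_row


if __name__ == "__main__":
    row = {"CustID": "42", "Name": "  Ada Lovelace ", "Created": "03/15/2024"}
    print(apply_mapping(row, CRM_TO_WAREHOUSE))
    # {'customer_id': 42, 'full_name': 'Ada Lovelace', 'signup_date': '2024-03-15'}
```

Keeping the mapping as data rather than scattering it through code also makes it easy to document alongside the source and target systems.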

Mapping Heterogeneous Data Structures

Data structures often differ because each system is developed independently, with its own rules for storing and changing data. This challenge becomes even more complex when systems use different data formats, schemas, and languages. Turning this data into a uniform format for integration can eat up time and cause errors.

Without a proper plan to handle these diverse data structures, there's a higher risk of data loss or corruption, which in turn leads to inaccurate analysis and poor business decisions.

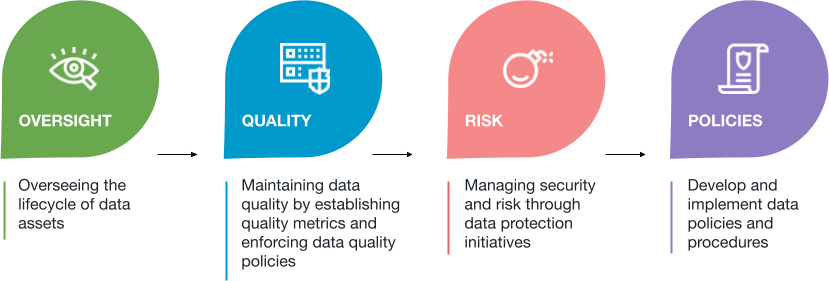

Solution: ETL Tools & Managed Solutions

To effectively handle the challenge of varied data structures, you need a combination of tools and strategies.

- Use ETL tools: Use Extract, Transform, Load (ETL) tools for managing different data formats and structures. They streamline the process of data extraction, transformation into a standard format, and loading into the target system, which reduces errors.

- Use managed integration solutions: Use managed solutions to handle complex integration tasks. They provide a comprehensive data integration platform with features like data mapping, transformation, and loading, which helps keep mapping and integration accurate.
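The extract, transform, load flow that ETL tools automate can be illustrated in miniature. This sketch assumes two hypothetical sources, a CSV export that stores amounts in dollars and a JSON API that nests amounts in cents, and uses an in-memory SQLite database as a stand-in for the target system. It shows the pattern only and is not how any particular ETL product works internally.

```python
import csv
import io
import json
import sqlite3

# Two hypothetical sources describing the same kind of entity with different structures.
CSV_SOURCE = "order_no,amount_usd\nA-1,19.99\nA-2,5.00\n"
JSON_SOURCE = '[{"orderId": "B-7", "total": {"value": 1250, "currency": "USD", "unit": "cents"}}]'


def extract() -> list[tuple[str, dict]]:
    """Extract raw records, tagging each with the system it came from."""
    rows = [("csv", r) for r in csv.DictReader(io.StringIO(CSV_SOURCE))]
    rows += [("json", r) for r in json.loads(JSON_SOURCE)]
    return rows


def transform(source: str, record: dict) -> tuple[str, int]:
    """Convert each source's structure into one standard shape: (order_id, amount_cents)."""
    if source == "csv":
        return record["order_no"], round(float(record["amount_usd"]) * 100)
    return record["orderId"], int(record["total"]["value"])


def load(rows: list[tuple[str, int]], conn: sqlite3.Connection) -> None:
    """Load standardized rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id TEXT PRIMARY KEY, amount_cents INTEGER)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load([transform(src, rec) for src, rec in extract()], conn)
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
    # [('A-1', 1999), ('A-2', 500), ('B-7', 1250)]
```

Converting both sources to integer cents in the transform step means the target never has to reconcile dollars against cents or flat against nested structures.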

Handling Large Data Volumes

As your company creates and gathers more and more data, processing and integrating it becomes complex. Large data volumes can overwhelm traditional data integration methods, causing longer processing times and greater resource use. They also increase the risk of errors during integration, which can lead to data loss or corruption.

Solution: Modern Data Management Platforms & Incremental Loading

To manage large data volumes effectively in data integration, use modern tools and strategies.

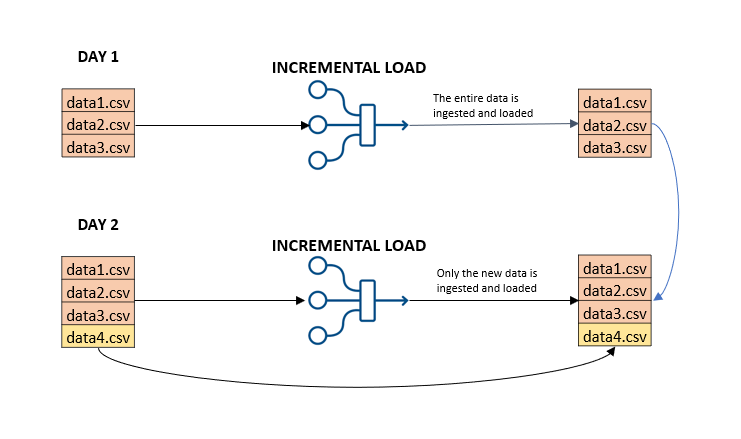

- Adopt modern data management platforms: Modern data management platforms streamline handling large volumes of data with features like parallel processing, distributed storage, and automated data management.

- Apply incremental data loading: Use an incremental data loading strategy to manage large data volumes. Rather than attempting to load all data at once, this approach loads only new or changed data, in smaller segments.
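A common way to implement incremental loading is a high-water mark: remember the latest change timestamp already loaded, then fetch only rows changed after it, in batches. This minimal sketch assumes a hypothetical `customers` table with an integer `updated_at` column, with in-memory SQLite connections standing in for the source and target databases.

```python
import sqlite3


def incremental_load(source: sqlite3.Connection, target: sqlite3.Connection, batch_size: int = 1000) -> int:
    """Copy only rows changed since the last run, in batches, and advance the watermark."""
    target.execute("CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)")
    target.execute("CREATE TABLE IF NOT EXISTS watermark (last_seen INTEGER)")
    row = target.execute("SELECT last_seen FROM watermark").fetchone()
    last_seen = row[0] if row else 0

    cursor = source.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    )
    copied = 0
    while batch := cursor.fetchmany(batch_size):
        # Upsert so that re-delivered rows overwrite instead of duplicating.
        target.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", batch)
        last_seen = batch[-1][2]
        copied += len(batch)

    # Committing once at the end keeps the loaded rows and the watermark consistent.
    target.execute("DELETE FROM watermark")
    target.execute("INSERT INTO watermark VALUES (?)", (last_seen,))
    target.commit()
    return copied


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)")
    src.executemany("INSERT INTO customers VALUES (?, ?, ?)", [(1, "Ada", 100), (2, "Grace", 105)])
    dst = sqlite3.connect(":memory:")
    print(incremental_load(src, dst))  # first run copies both rows
    src.execute("UPDATE customers SET name = 'Grace H.', updated_at = 110 WHERE id = 2")
    print(incremental_load(src, dst))  # second run copies only the changed row
```

Timestamp watermarks are simple, but they can miss a row that commits late with a timestamp at or below the stored watermark. Change data capture, which reads changes from the database log, avoids that limitation.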

Infrastructure Management

The infrastructure for data integration should be strong and dependable for smooth data extraction, transformation, and loading. Plan for issues like system outages, network disruptions, or hardware failures that can disrupt integration and cause data loss, delays, or inaccuracies.

Besides the hardware, data integration infrastructure also includes software tools, platforms, and systems for data extraction, transformation, and loading. Changes or updates to these tools can affect the integration process and require workflows to be adjusted.

Solution: Ecosystem Verification & Robust Solutions

Effective infrastructure management for data integration requires a proactive and robust strategy.

- Conduct comprehensive ecosystem verification: Before beginning the integration process, thoroughly verify the entire ecosystem. This includes checking the reliability and performance of hardware systems, network connectivity, and data integration software tools.

- Opt for robust solutions: Select flexible, scalable, and automated data integration tools and platforms. These solutions should scale and adapt to changes in your requirements as well as the source and target systems.

Managing Unforeseen Costs

Data integration's complexity can lead to unexpected situations that raise costs. These situations often result from changes in data or systems after the integration has been set up. For example, a change in data format or structure requires modifying workflows, which costs additional time and resources.

These unforeseen costs can burden your budget and resources and potentially affect other projects or operations. If not managed effectively, these costs detract from the value of data integration and make it less cost-effective.

Solution: Contingency Planning & Regular Monitoring

To manage unexpected costs in data integration effectively, deploy policies for proactive planning and regular monitoring of systems.

- Implement contingency planning: Include a contingency budget for unexpected costs during data integration. This budget can cover additional costs because of changes in data or systems, unexpected issues or delays, or other unforeseen circumstances.

- Perform regular monitoring: Regularly monitor the data integration process to identify and address issues before they become costly problems. This includes tracking data integration workflow performance, checking integrated data quality and accuracy, and monitoring data integration tool usage and performance.

Data Accessibility

Data accessibility is the ability to access data in the required location for integration, regardless of where it is stored or how it is managed. However, with data often spread across different systems, databases, and locations, it becomes difficult to ensure that all necessary data is accessible for integration.

When data is scattered like this, teams often fall back on manual data curation, which relies heavily on human effort. This dependency causes delays, inconsistencies, and errors in the data integration process. And as the volume and complexity of data grow, data curation becomes even more challenging and time-consuming.

Solution: Centralized Data Storage & Data Governance Policies

Improving data accessibility requires a combination of centralized data storage and data governance policies.

- Adopt centralized data storage: A centralized data storage system ensures data accessibility when needed. It consolidates data from various sources into a single location, simplifying access and management.

- Establish data governance policies: Implementing data governance policies can facilitate data accessibility. These policies define how data should be stored, managed, and accessed, providing explicit guidelines for data handling.

Data Quality Management

High-quality data is accurate, consistent, and reliable. But when data comes from different places, each with its own rules and formats, keeping data quality high is hard.

Poor data quality results from entry errors, variations in data formats or structures, and outdated or missing data. Such issues produce incorrect data, which leads to false insights and bad decisions.

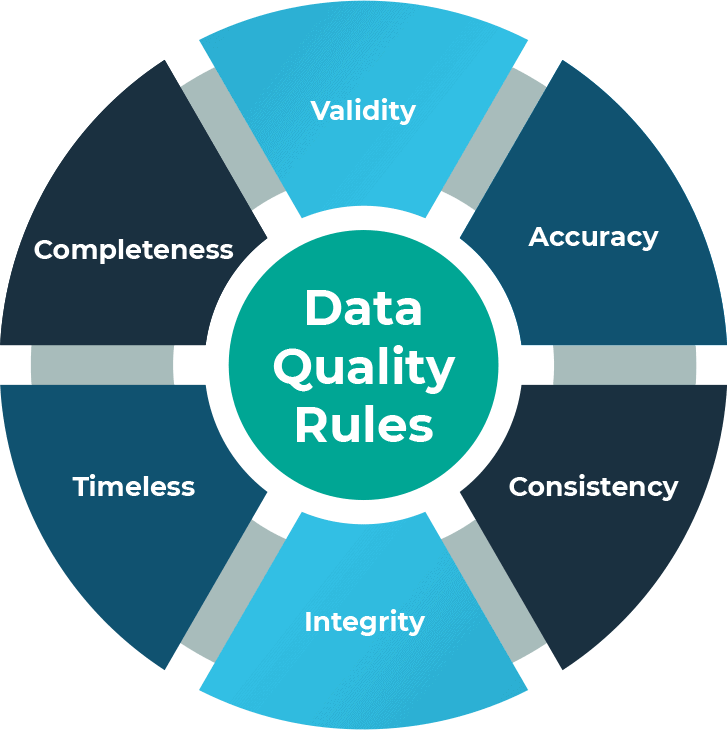

Solution: Data Quality Management Systems & Proactive Validation

A combination of data quality management systems, regular monitoring, and proactive validation is needed to resolve this issue.

- Use data quality management systems: Quality management systems help in data cleansing and standardization. They identify and rectify errors and discrepancies in the data and enhance its accuracy and consistency.

- Proactively validate data: Check for errors or inconsistencies soon after collecting data, before integrating it into the system. This stops bad-quality data from getting into your systems and ensures that your integrated data is good quality and reliable.
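Proactive validation can start as a list of simple rules applied to each record before it is loaded. The sketch below is a hypothetical example: the fields (`id`, `email`, `age`), the rules, and the deliberately loose email pattern are assumptions chosen for illustration, not a recommended rule set.

```python
import re
from typing import Any, Callable, Optional

# Hypothetical rules: each returns an error message, or None when the record passes.
Rule = Callable[[dict], Optional[str]]
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose check

RULES: list[Rule] = [
    lambda r: None if r.get("id") is not None else "missing id",
    lambda r: None if EMAIL_PATTERN.match(r.get("email") or "") else "invalid email",
    lambda r: None if isinstance(r.get("age"), int) and 0 <= r["age"] <= 130 else "age out of range",
]


def validate(records: list[dict[str, Any]]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into (valid, rejected) before they are loaded into the target system."""
    valid, rejected = [], []
    for record in records:
        # Run every rule so a rejected record carries all of its problems, not just the first.
        errors = [e for e in (rule(record) for rule in RULES) if e is not None]
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


if __name__ == "__main__":
    good, bad = validate([
        {"id": 1, "email": "ada@example.com", "age": 36},
        {"id": None, "email": "not-an-email", "age": 200},
    ])
    print(len(good), bad)
```

Rejected records keep their error messages, so they can be routed to a quarantine table and fixed at the source instead of silently entering the integrated data.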

Data Security & Privacy

Make sure that your data is protected at all stages of the integration process. This is very important when dealing with sensitive data like personal information, financial data, or proprietary business information. Any data leak or misuse can harm your reputation and make customers and stakeholders lose trust.

As cyber threats continue to evolve and data protection regulations get stricter in many jurisdictions, make sure that your data integration processes are compliant with relevant laws and standards.

Solution: Protection Of Sensitive Data & Advanced Security Measures

Here’s how you can protect sensitive data with the help of strong security measures.

- Ensure protection of sensitive data: Use security measures like encryption, pseudonymization, and access controls to keep the data safe from unauthorized access or misuse.

- Use data integration solutions with robust security measures: They should have features like secure data transmission, data encryption, user authentication, and intrusion detection to protect against unauthorized data access and corruption.
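Pseudonymization can be done in several ways. One common approach is keyed hashing with HMAC-SHA256: each identifier is replaced with a value that stays consistent across datasets (so joins still work) but that nobody without the secret key can recompute. This minimal sketch uses only Python's standard library; the `PSEUDONYM_KEY` environment variable and the field names are assumptions, and in practice the key would be kept in a secrets manager, separate from the data.

```python
import hashlib
import hmac
import os

# Assumption: the key is supplied via an environment variable; the fallback is for local testing only.
PSEUDONYM_KEY = os.environ.get("PSEUDONYM_KEY", "dev-only-key").encode()


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash that is stable across datasets."""
    normalized = value.strip().lower()  # so "Ada@Example.com" and "ada@example.com" match
    return hmac.new(key, normalized.encode(), hashlib.sha256).hexdigest()


def protect_record(record: dict, sensitive_fields: set[str]) -> dict:
    """Return a copy of the record with the sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(val)) if field in sensitive_fields and val is not None else val
        for field, val in record.items()
    }


if __name__ == "__main__":
    print(protect_record({"email": "Ada@Example.com", "plan": "pro"}, {"email"}))
```

Because the same input always maps to the same output under a given key, pseudonymized records from different systems can still be matched during integration.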

Duplicate Data Management

When the same record appears more than once in a dataset, it creates problems during data integration. Duplicates stem from data entry mistakes, system errors, or differences in data formats or structures.

Duplicate data makes the integrated data inaccurate and hampers your decision-making. Handling duplicates also takes a lot of time and resources, which makes data integration more complex and costly.

Solution: Data Sharing Culture, Collaborative Technology, & Data Lineage Tracking

Here’s how you can effectively manage duplicate data in data integration.

- Track data lineage: Data lineage is the life cycle of data, including where it comes from, how it is used and changed, and where it is stored. Tracking it helps ensure data accuracy and completeness and makes it easier to trace where duplicates enter your pipelines.

- Implement team collaboration technology: Deploy technology that promotes team collaboration and adherence to rules. These technologies facilitate data sharing and teamwork and help prevent duplicate data.

- Create a culture of data sharing: Promote open communication and teamwork in your company and encourage teams to work together. This helps find and handle duplicate data and makes the integrated data more accurate and consistent.
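The practices above are organizational, but at the technical level deduplication usually comes down to matching records on a normalized key and keeping one survivor. The sketch below does that and also records which sources were merged into each survivor, a lightweight form of lineage tracking. The matching rule (email plus the last 10 phone digits), the "latest update wins" policy, and the record fields are assumptions; real matching rules depend on your data.

```python
from typing import Any


def normalize_key(record: dict[str, Any]) -> tuple[str, str]:
    """Build a match key that ignores formatting differences between systems."""
    email = (record.get("email") or "").strip().lower()
    phone = "".join(ch for ch in (record.get("phone") or "") if ch.isdigit())
    return email, phone[-10:]  # assumption: the last 10 digits identify a phone number


def deduplicate(records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Keep the most recently updated record per key and note which sources contributed."""
    survivors: dict[tuple[str, str], dict[str, Any]] = {}
    for record in records:
        key = normalize_key(record)
        current = survivors.get(key)
        sources = (current["merged_from"] if current is not None else []) + [record["source"]]
        if current is None or record["updated_at"] > current["updated_at"]:
            survivors[key] = {**record, "merged_from": sources}
        else:
            current["merged_from"] = sources
    return list(survivors.values())


if __name__ == "__main__":
    rows = [
        {"source": "crm", "email": "Ada@Example.com", "phone": "+1 (555) 010-0000", "updated_at": 1},
        {"source": "billing", "email": "ada@example.com ", "phone": "555-010-0000", "updated_at": 2},
    ]
    print(deduplicate(rows))
```

The `merged_from` list lets a data steward see which systems produced the duplicate, which is useful input for fixing the problem at its origin.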

Performance Optimization

Your data integration solution should be able to handle large volumes of data and complex tasks without significantly affecting system performance. As the volume and complexity of data grow, data integration processes can become more resource-intensive and slow down other systems.

Without improving performance, data integration can slow everything else down, making it hard for your organization to get timely and accurate insights from your data.

Solution: Efficient Data Processing, Hardware Upgrades, & Load Balancing

Let’s see how you can overcome this challenge.

- Upgrade hardware: This can involve augmenting the processing power, memory, or storage of systems, or establishing faster network connections.

- Balance load: Distribute the data processing load across multiple servers. Load balancing ensures no single server is overloaded with data processing tasks. This prevents slowdowns and improves overall data integration performance.

- Implement efficient data processing: Employ efficient algorithms and data processing techniques to handle high data volumes. These methodologies can speed up data extraction, transformation, and loading processes, reducing data integration's time and resource needs.
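Load balancers can use several policies, such as round-robin or least connections. As one illustration, assuming the size of each batch is known up front, the sketch below assigns batches greedily to whichever server currently has the least work, handling the largest batches first. The server and batch names are hypothetical, and real balancers usually react to live load rather than planning in advance.

```python
import heapq


def assign_batches(batch_sizes: dict[str, int], servers: list[str]) -> dict[str, list[str]]:
    """Greedy least-loaded assignment: biggest batches first, each to the currently lightest server."""
    heap = [(0, name) for name in servers]  # (rows assigned so far, server name)
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {name: [] for name in servers}
    for batch, size in sorted(batch_sizes.items(), key=lambda item: item[1], reverse=True):
        load, server = heapq.heappop(heap)  # server with the smallest current load
        plan[server].append(batch)
        heapq.heappush(heap, (load + size, server))
    return plan


if __name__ == "__main__":
    sizes = {"orders": 70, "customers": 50, "events": 40, "invoices": 30, "refunds": 10}
    print(assign_batches(sizes, ["worker-1", "worker-2"]))
```

Placing the largest batches first tends to produce a more even split than assigning batches in arbitrary order.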

Real-Time Data Integration

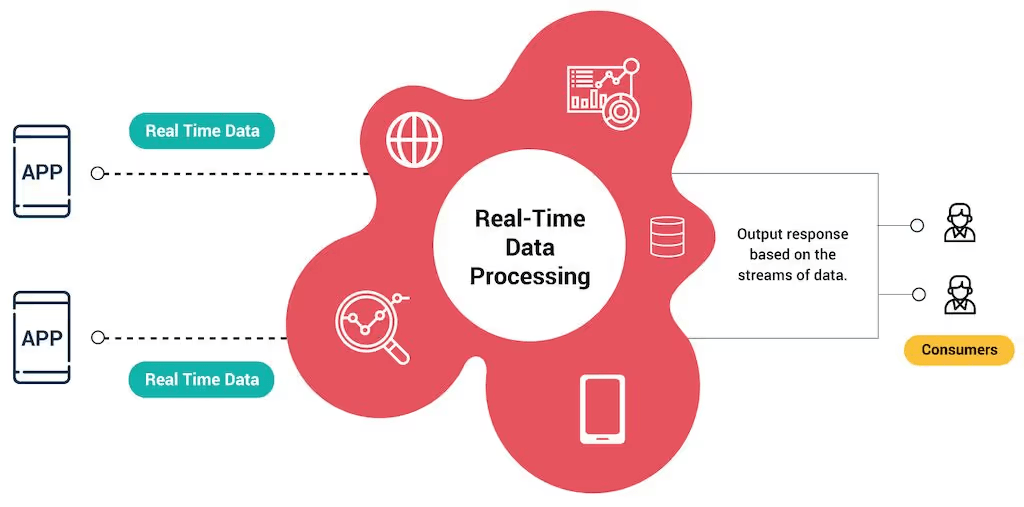

Some business processes need real-time or near-real-time data, and delays can disrupt them. However, integrating data in real time is complex. It requires capturing, processing, and integrating data as soon as it's generated, without causing delays or disruptions.

Real-time data integration should also manage the high volume and speed of real-time data. Without an effective data integration strategy for handling real-time data, you’ll find it difficult to get timely insights, affecting your decision-making.

Solution: Real-Time Data Integration Tools, Stream Processing, & Event-Driven Architecture

You can implement the following strategies to address the issue:

- Implement event-driven architecture: In an event-driven architecture, actions are triggered by data events as they occur, which enables real-time data integration.

- Employ real-time data integration tools: These tools capture and integrate data as soon as it is generated, so the latest data is always available for analysis.

- Use stream processing: Stream processing techniques process data as it arrives. They can handle large volumes of real-time data quickly and efficiently, delivering timely insights.
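Stream processors typically aggregate events over time windows and emit each result as soon as its window closes, instead of waiting for a nightly batch. The generator below is a minimal, single-process sketch of a tumbling-window aggregation. The `(timestamp, key, amount)` event shape is an assumption, and production stream processors also handle late and out-of-order events, which this sketch does not.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_window_totals(
    events: Iterable[tuple[int, str, float]], window_secs: int = 60
) -> Iterator[tuple[int, dict[str, float]]]:
    """Aggregate (timestamp, key, amount) events into fixed windows, emitting each window once it closes.

    Assumes events arrive in timestamp order; late or out-of-order events are not handled.
    """
    current_window = None
    totals: dict[str, float] = defaultdict(float)
    for ts, key, amount in events:
        window = ts - ts % window_secs  # start of the window this event falls into
        if current_window is not None and window != current_window:
            yield current_window, dict(totals)  # window closed: emit its result downstream
            totals.clear()
        current_window = window
        totals[key] += amount
    if current_window is not None:
        yield current_window, dict(totals)  # flush the final (possibly partial) window


if __name__ == "__main__":
    events = [(0, "web", 10.0), (30, "app", 5.0), (61, "web", 2.0)]
    for window_start, totals in tumbling_window_totals(events):
        print(window_start, totals)
```

Because the function is a generator, it can consume an unbounded event source and hand each window's result to downstream consumers the moment it is ready, which is the event-driven behavior described above.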

How Estuary Can Help Overcome Data Integration Challenges

Estuary Flow is a robust DataOps platform for building and managing streaming data pipelines. You can work with it through a user-friendly UI or a CLI, and its programmatic access makes it easy to embed and white-label pipelines.

But Flow is not just about moving data. It also ensures the accuracy and consistency of your data as it evolves. It offers built-in testing with unit tests and exactly-once semantics to ensure transactional consistency. Here’s how Flow can help you solve common data integration challenges:

- Flow handles large volumes of data, with proven throughput of up to 7 GB/s.

- It provides real-time ETL capabilities for the efficient handling of different data formats and structures.

- It uses security measures like encryption and access controls to keep the data safe from unauthorized access or misuse.

- Flow offers live reporting and monitoring. This lets you keep track of your data integration process and identify any issues early on.

- It supports 200+ data sources and destinations. This makes it easy to consolidate data from many different sources into a single location.

- Flow provides real-time change data capture from many database systems. This lets you incrementally load data to save costs and improve the overall performance of integration.

- Its automated schema management and data deduplication features help deal with duplicate and raw data that can cause problems during integration.

Conclusion

Data integration challenges can act as hurdles on your path to effective data management and utilization. As data volumes continue to increase and new data sources emerge, you should employ scalable technologies that can handle large data volumes while adapting to changing requirements.

Estuary Flow can help you tackle these challenges easily and efficiently. With its ability to process data in real time and handle large volumes, Flow makes sure that your data integration keeps up with the pace of your business. It also simplifies consolidating data from over 200 sources, all while providing stringent data security, quality assurance, and efficient data management.

Take the next step in overcoming your data integration challenges by signing up for Flow. You can also contact our team to discuss your specific needs.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, with a focus on strategies that attract customers and users. Their extensive writing offers insights to help companies scale efficiently and effectively in an evolving data landscape.
