Imagine a busy online store managing vast amounts of customer orders, a hospital gathering patient records, or even a weather forecasting agency collecting meteorological data. They might seem worlds apart, but one common thread binds them – the need to efficiently and reliably transport, process, and store data. This critical task is achieved through a core component of data management – the data ingestion pipeline.

Data ingestion goes beyond merely collecting data. It's about collecting data intelligently, securely, and at scale. The importance of a robust data ingestion pipeline becomes clear when you consider the consequences of an inefficient one or, worse, of not having one at all. Without it, data remains fragmented, disorganized, and largely unusable.

In this article, we will delve deeper into the significance of data ingestion pipelines, exploring their different types, the concepts that drive them, and how they are used in various industries.

What Is A Data Ingestion Pipeline?

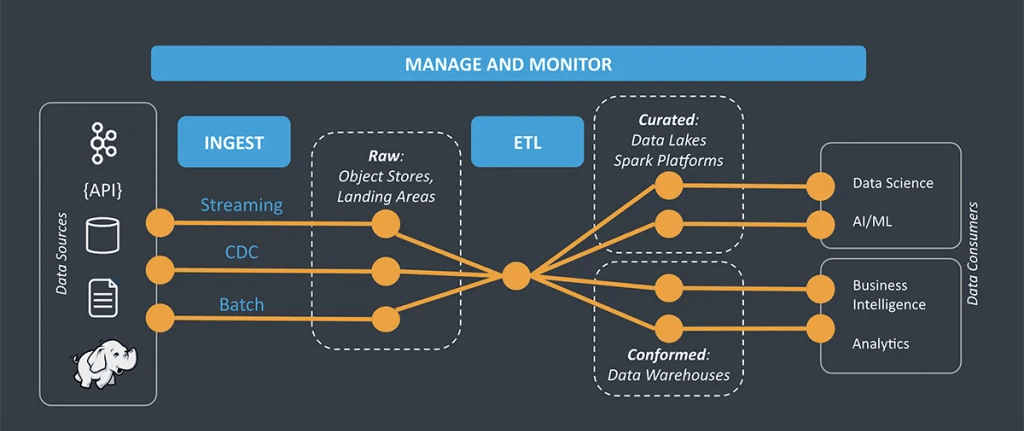

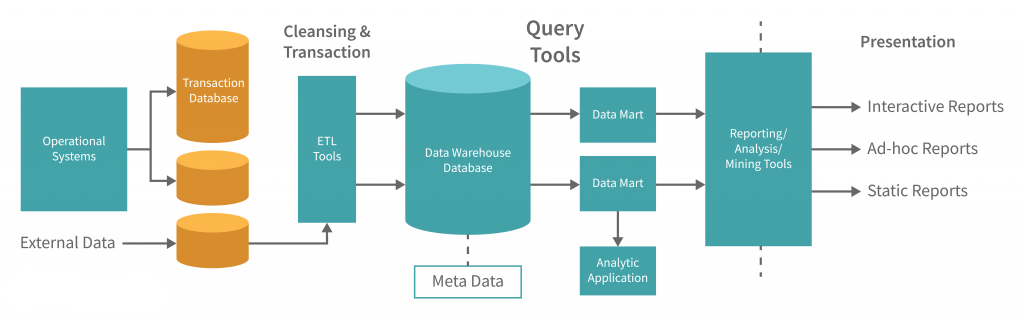

A data ingestion pipeline is a structured system that collects, processes, and imports data from various sources into a central storage or processing location, like a database or data warehouse. Its primary purpose is to efficiently and reliably transfer data from different origins, including databases, logs, APIs, and external applications, into a unified and accessible format for further processing.

This helps organizations to centralize, manage, and analyze their data for various purposes, like reporting, analytics, and decision-making. Data ingestion pipelines facilitate the movement of data, ensuring it is clean, transformed, and available for downstream applications.

SaaS tools like Estuary Flow streamline the process of establishing data pipelines to ensure swift and agile data ingestion from various sources. This helps you harness valuable insights from both historical and real-time data seamlessly. But before we look at how this can be effortlessly implemented, it’s important to get a better idea of how it actually works.

What Are The Types Of A Data Ingestion Pipeline?

Data ingestion pipelines come in three types to accommodate different data requirements. Let’s explore them in detail.

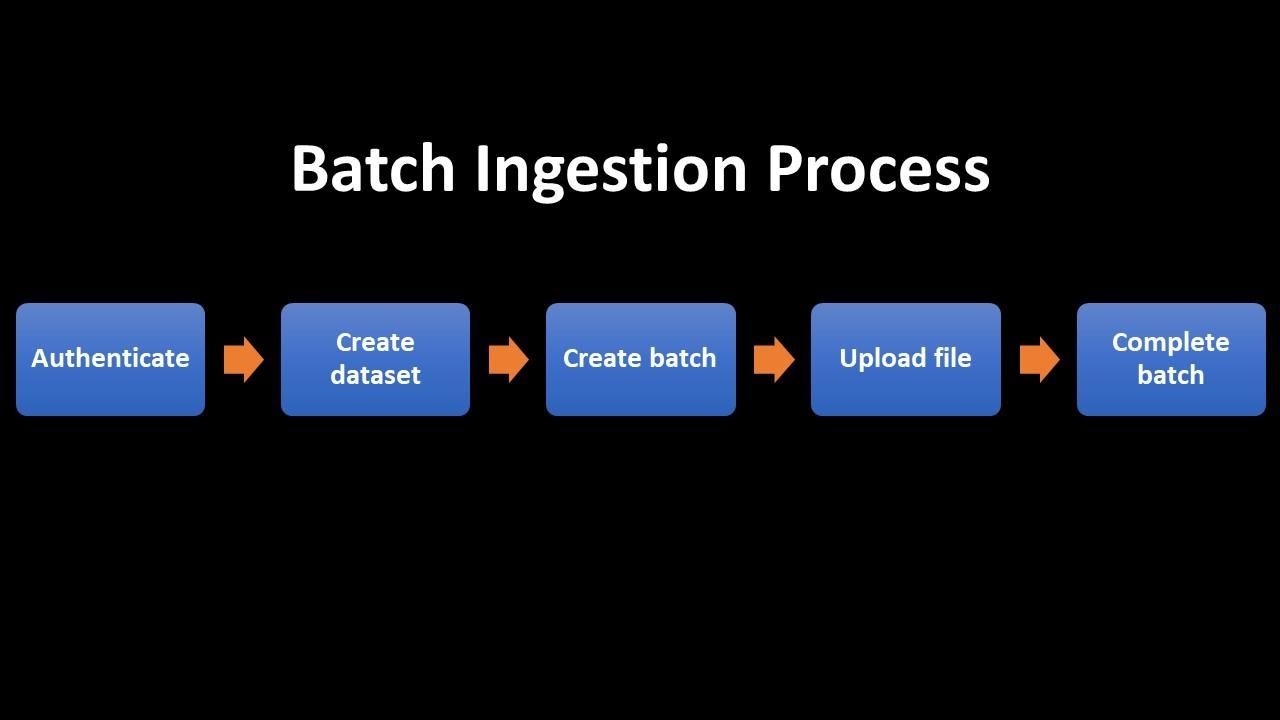

Batch Data Ingestion Pipeline

Batch data ingestion pipelines are designed for processing data in discrete chunks, or batches. Raw data is collected from various sources at predefined intervals, like hourly, daily, or weekly.

Collected data is then processed in batches. During this stage, the data may undergo various transformations, including cleansing, enrichment, aggregation, and filtering. Data quality checks can be implemented to ensure data accuracy. Processed data is transferred to a centralized data storage, like a data warehouse, data lake, or other storage systems, in bulk.

Batch ingestion pipelines are scheduled to run at specific times or intervals, which makes them predictable and suitable for non-real-time use cases. They are well-suited for scenarios where low latency is not critical, like historical data analysis, reporting, and periodic data updates. They also process large volumes of data cost-effectively.
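To make the batch flow above more concrete, here is a minimal Python sketch. It assumes hypothetical order records with `ts` and `amount` fields and an hourly window; a real pipeline would bulk-load the result into a warehouse instead of returning a dictionary.

```python
from collections import defaultdict
from datetime import datetime


def batch_key(ts: str) -> str:
    """Bucket an ISO-8601 timestamp into its hourly batch window."""
    return datetime.fromisoformat(ts).strftime("%Y-%m-%dT%H:00")


def run_batches(records):
    """Group raw records by hour, then cleanse and aggregate each batch."""
    batches = defaultdict(list)
    for rec in records:
        batches[batch_key(rec["ts"])].append(rec)

    results = {}
    for window, batch in sorted(batches.items()):
        # Cleansing: drop records with a missing amount.
        valid = [r for r in batch if r.get("amount") is not None]
        # Aggregation: one summary row per batch window.
        results[window] = {
            "orders": len(valid),
            "revenue": sum(r["amount"] for r in valid),
        }
    return results


# Hypothetical sample data, for illustration only.
raw_orders = [
    {"ts": "2024-01-01T09:15:00", "amount": 20.0},
    {"ts": "2024-01-01T09:45:00", "amount": None},
    {"ts": "2024-01-01T10:05:00", "amount": 35.5},
]
hourly = run_batches(raw_orders)
```

In this sketch the window size is the only scheduling decision; switching to daily batches would only change the format string in `batch_key`.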

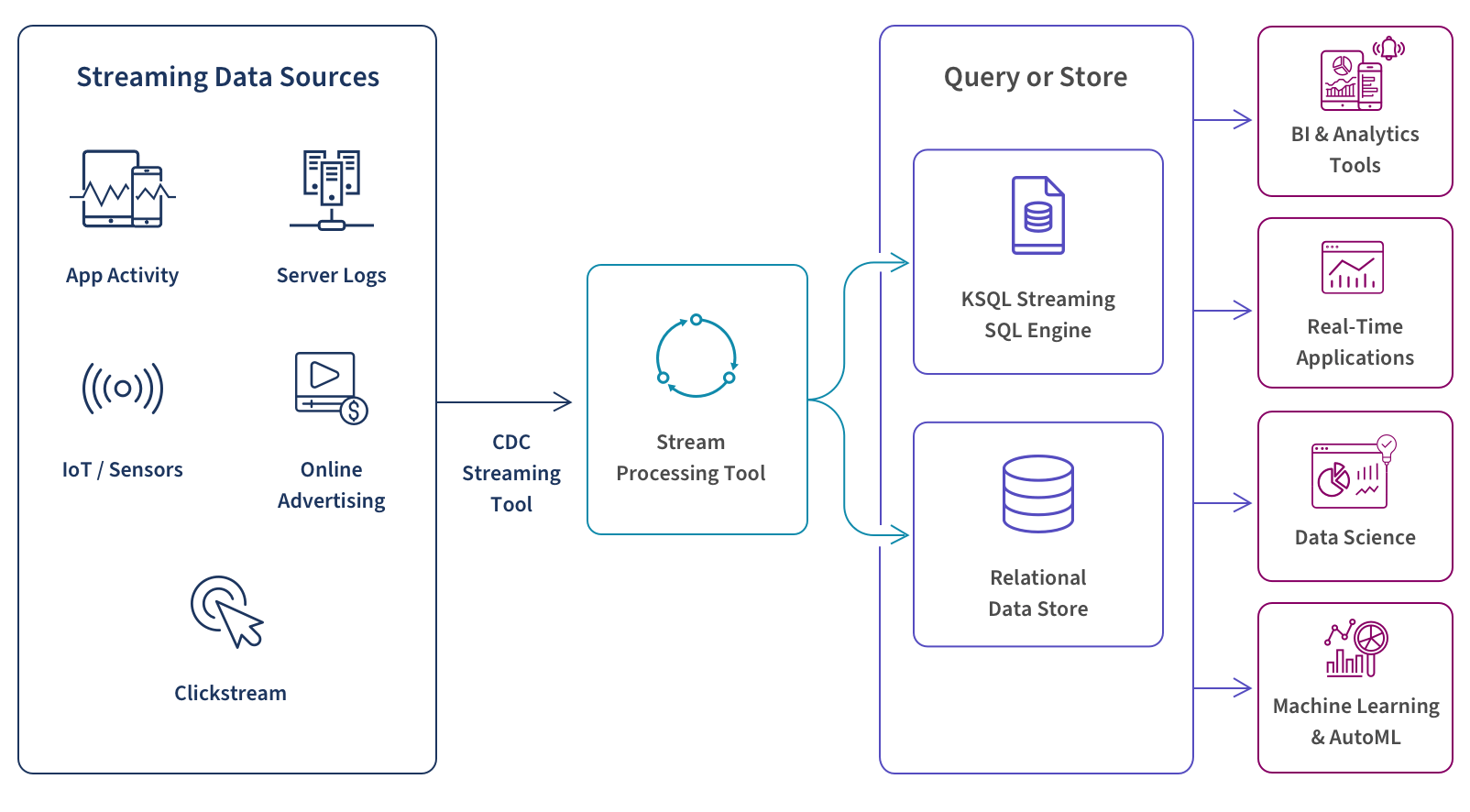

Real-Time Data Ingestion Pipeline

Real-time ingestion pipelines ingest streaming data continuously as it is generated by various sources, including sensors, IoT devices, social media feeds, and transaction systems. This real-time data is streamed to the pipeline.

Data is then processed as soon as it arrives, often in micro-batches or individually. Stream processing technologies are commonly used for real-time data processing. Processed data is then stored in real-time data stores, like NoSQL databases or in-memory databases, to make it readily available for real-time queries and analytics.

Real-time ingestion pipelines offer low latency, which makes them ideal for use cases where immediate insights are crucial, like fraud detection, real-time monitoring, and recommendation systems.
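The micro-batch style of stream processing described above can be sketched in a few lines of Python. The event shape, the `limit` threshold, and the function names are assumptions made for illustration; production systems would normally rely on a dedicated stream processing framework.

```python
from typing import Iterable, Iterator


def micro_batches(events: Iterable[dict], size: int) -> Iterator[list]:
    """Yield small batches as events arrive, without waiting for the stream to end."""
    buffer = []
    for event in events:
        buffer.append(event)
        if len(buffer) >= size:
            yield buffer
            buffer = []
    if buffer:  # flush whatever is left when the stream closes
        yield buffer


def flag_suspicious(batch, limit=1000.0):
    """Toy fraud rule: flag any transaction above `limit`."""
    return [e["id"] for e in batch if e["amount"] > limit]


# A generator stands in for a live transaction stream.
amounts = [50.0, 2500.0, 12.0, 990.0, 4000.0]
stream = ({"id": i, "amount": amt} for i, amt in enumerate(amounts))

alerts = []
for batch in micro_batches(stream, size=2):
    alerts.extend(flag_suspicious(batch))
```

Setting `size=1` turns the same loop into per-event processing, which trades throughput for lower latency.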

Hybrid Data Ingestion Pipeline

A hybrid data ingestion pipeline is a combination of both batch and real-time data ingestion techniques. The batch-processing component handles data that doesn't require real-time analysis. This component can ingest data in larger, predefined batches and is suitable for historical analysis, reporting, and periodic updates.

Simultaneously, a real-time processing component is responsible for ingesting data with low latency. This component can handle events that require immediate action, real-time monitoring, and quick decision-making.

In a hybrid data pipeline, the processed data from both batch and real-time components can be stored in a combination of storage systems. Historical data processed in the batch component can be stored in data warehouses, data lakes, or traditional databases, while real-time data is often stored in in-memory databases, NoSQL databases, or distributed data stores.

Hybrid data pipelines can be configured with scheduling mechanisms and triggers to determine when batch processes run and when real-time ingestion occurs.
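As a rough illustration of the hybrid approach, the sketch below routes each event either to a low-latency path or to a queue that waits for the next scheduled batch run. The class name, the event `type` field, and the in-memory lists standing in for storage systems are all hypothetical.

```python
class HybridPipeline:
    """Route events to a real-time path or a batch path based on event type."""

    def __init__(self, realtime_types):
        self.realtime_types = set(realtime_types)
        self.realtime_store = []  # stand-in for an in-memory or NoSQL store
        self.batch_queue = []     # events waiting for the next batch run
        self.warehouse = []       # stand-in for a data warehouse

    def ingest(self, event):
        if event["type"] in self.realtime_types:
            self.realtime_store.append(event)  # available immediately
        else:
            self.batch_queue.append(event)     # handled on a schedule

    def run_batch(self):
        """Bulk-load queued events; a scheduler or trigger would call this."""
        loaded = len(self.batch_queue)
        self.warehouse.extend(self.batch_queue)
        self.batch_queue.clear()
        return loaded


pipeline = HybridPipeline(realtime_types={"payment"})
pipeline.ingest({"type": "payment", "id": 1})
pipeline.ingest({"type": "page_view", "id": 2})
loaded_count = pipeline.run_batch()
```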

Key Concepts In A Data Ingestion Pipeline

A robust data ingestion pipeline plays a critical role in the data management process. But to make the best out of it, you should have a strong understanding of its core concepts. Let’s discuss these concepts in detail.

Data Ingestion

Data ingestion is dedicated to the efficient and reliable movement of raw data from various source systems into a central repository. Here are the key aspects of data ingestion:

Data Formats & Protocols

Data ingestion systems need to support a wide range of data formats and communication protocols to interface with diverse source systems. These formats can include structured data (e.g., databases), semi-structured data (e.g., JSON or XML), and unstructured data (e.g., log files). The choice of protocols and formats depends on the source system's nature and the destination system's capabilities.

Data Validation & Error Handling

Data ingestion processes should include validation and error-handling mechanisms. This ensures that only valid data is ingested and any errors are identified, logged, and addressed. Robust error handling is crucial for maintaining data quality and ensuring data integrity.
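A minimal sketch of this idea in Python is shown below. The required fields and their types are assumptions chosen for the example; rejected records are logged and kept in a separate list so they can be inspected or replayed later.

```python
import logging

logger = logging.getLogger("ingestion")

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"order_id": int, "email": str, "total": float}


def validate(record):
    """Return a list of validation errors; an empty list means the record is valid."""
    errors = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            errors.append(f"{field}: expected {expected.__name__}")
    return errors


def ingest(records):
    """Split records into accepted ones and rejected ones (with reasons)."""
    accepted, rejected = [], []
    for rec in records:
        errors = validate(rec)
        if errors:
            logger.warning("rejected record %r: %s", rec, errors)
            rejected.append({"record": rec, "errors": errors})
        else:
            accepted.append(rec)
    return accepted, rejected


accepted, rejected = ingest([
    {"order_id": 1, "email": "a@example.com", "total": 9.99},
    {"order_id": "2", "email": "b@example.com", "total": 5.0},
    {"order_id": 3, "total": 1.0},
])
```

Keeping rejected records instead of silently dropping them is a design choice: it preserves the evidence needed to fix the source or the validation rule.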

Data Collection

Data collection is the first step of the data ingestion process. It focuses on identifying, retrieving, and transporting data from source systems to a staging area or processing layer.

Source System Compatibility

To effectively collect data, the data ingestion system must be compatible with various source systems. This means having connectors, drivers, or APIs that can communicate with databases, web services, file systems, sensors, and other sources. Ensuring compatibility minimizes friction in data acquisition.

Data Extraction Methods

The methods for extracting data depend on the source. Common extraction methods include querying databases using SQL, pulling data from APIs, parsing log files, or listening to data streams. ETL (Extract, Transform, Load) processes may be used to transform the data into a suitable format during extraction.
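As one example of these extraction methods, the following sketch parses log lines with a regular expression. The log layout (timestamp, level, service, message) is an assumed format, so the pattern would need to be adapted to whatever the source application actually writes.

```python
import re

# Assumed layout: "<timestamp> <LEVEL> <service>: <message>"
LOG_PATTERN = re.compile(
    r"^(?P<ts>\S+) (?P<level>[A-Z]+) (?P<service>[\w-]+): (?P<message>.*)$"
)


def parse_log_lines(lines):
    """Yield one dict per line that matches the pattern; skip everything else."""
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match:
            yield match.groupdict()


sample = [
    "2024-01-01T09:15:00Z INFO checkout: order 42 created",
    "not a log line",
    "2024-01-01T09:15:02Z ERROR payments: card declined",
]
parsed = list(parse_log_lines(sample))
```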

Data Transport & Security

Data must be securely transported from the source to the ingestion system. This often involves encryption and secure communication protocols to protect sensitive data during transmission. Security measures are essential to protect data privacy and confidentiality.
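For encrypted transport, one common building block in Python is a TLS context from the standard library `ssl` module. The sketch below only builds the context; the choice of TLS 1.2 as the minimum version is an assumption, and your own security policy may require something stricter.

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname checks on."""
    # create_default_context() enables certificate verification and
    # hostname checking by default.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (assumed policy).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_tls_context()
```

The resulting context can then be passed to a client, for example `urllib.request.urlopen(url, context=ctx)`, so that data pulled from a source API is encrypted in transit.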

Data Synchronization & Scheduling

For batch data collection, scheduling mechanisms are employed to ensure data is collected at the right times and intervals. This includes defining schedules, triggers, and dependencies to coordinate data collection effectively.
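The core of an interval schedule is working out when the next run is due. The helper below is a small sketch of that calculation; `anchor` (the moment the schedule starts counting from) is an assumed parameter, and real schedulers add triggers and dependency handling on top.

```python
from datetime import datetime, timedelta


def next_run(now: datetime, interval: timedelta, anchor: datetime) -> datetime:
    """Return the first scheduled time strictly after `now`."""
    elapsed = now - anchor
    # Dividing one timedelta by another with // gives a whole number of periods.
    periods = elapsed // interval + 1
    return anchor + periods * interval


anchor = datetime(2024, 1, 1)  # schedule starts at midnight
hourly_next = next_run(datetime(2024, 1, 1, 9, 15), timedelta(hours=1), anchor)
daily_next = next_run(datetime(2024, 1, 1, 9, 15), timedelta(days=1), anchor)
```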

Data Processing

Data processing, within the data ingestion pipeline, follows data collection and is responsible for preparing the data for storage and analysis. This phase involves several key elements:

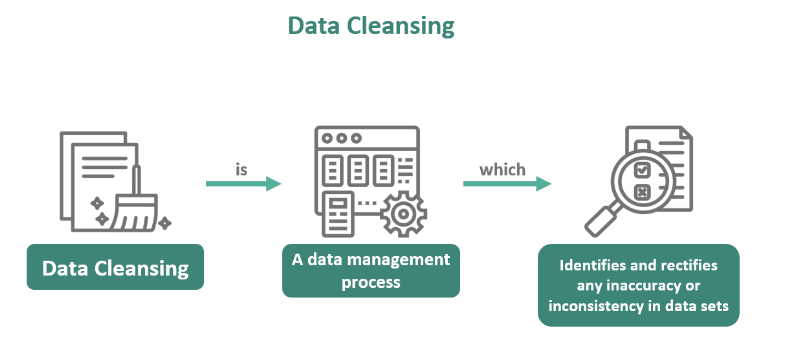

Data Cleansing

Data often arrives with inconsistencies, inaccuracies, or missing values. Data cleansing/cleaning involves the identification and correction of these issues to ensure data quality. This might include deduplication, data validation, and the removal of outliers.
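Here is a small Python sketch of the three cleansing steps mentioned above. It assumes hypothetical sensor readings keyed by `sensor` and `ts`, and it uses a simple "distance from the median" rule for outliers, which is only one of many possible approaches.

```python
from statistics import median


def cleanse(readings, max_deviation=10.0):
    """Drop missing values, remove duplicates, then filter out outliers."""
    seen, deduped = set(), []
    for r in readings:
        if r["value"] is None:        # missing value
            continue
        key = (r["sensor"], r["ts"])  # assumed uniqueness key
        if key in seen:               # duplicate
            continue
        seen.add(key)
        deduped.append(r)

    if not deduped:
        return []
    mid = median(r["value"] for r in deduped)
    # Outlier rule (assumption): keep values within max_deviation of the median.
    return [r for r in deduped if abs(r["value"] - mid) <= max_deviation]


readings = [
    {"sensor": "t1", "ts": 1, "value": 20.1},
    {"sensor": "t1", "ts": 1, "value": 20.1},  # duplicate
    {"sensor": "t1", "ts": 2, "value": None},  # missing
    {"sensor": "t1", "ts": 3, "value": 20.4},
    {"sensor": "t1", "ts": 4, "value": 95.0},  # outlier
    {"sensor": "t1", "ts": 5, "value": 19.8},
]
clean = cleanse(readings)
```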

Data Transformation

Data collected from various sources may have different structures and formats. Data transformation involves reshaping the data to conform to a common schema or format and make it suitable for the destination storage system and analytical tools.
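The sketch below shows what this can look like for two hypothetical sources, a CRM export and a webshop API, whose field names and date formats differ. Both mapping functions produce the same target shape, so downstream code only has to handle one schema.

```python
from datetime import datetime, timezone


def from_crm(row):
    """Map a CRM export row (assumed field names) to the common schema."""
    signed_up = datetime.strptime(row["SignupDate"], "%m/%d/%Y").date()
    return {
        "customer_id": str(row["CustomerID"]),
        "email": row["Email"].strip().lower(),
        "signed_up": signed_up.isoformat(),
    }


def from_webshop(row):
    """Map a webshop API record (assumed field names) to the common schema."""
    signed_up = datetime.fromtimestamp(row["created"], tz=timezone.utc).date()
    return {
        "customer_id": str(row["id"]),
        "email": row["contact"]["email"].strip().lower(),
        "signed_up": signed_up.isoformat(),
    }


unified = [
    from_crm({"CustomerID": 7, "Email": " Ana@Example.com ", "SignupDate": "01/15/2024"}),
    from_webshop({"id": "w-9", "contact": {"email": "Bo@example.com"}, "created": 1704067200}),
]
```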

Data Enrichment

In some cases, it is necessary to augment the data with additional information to enhance its value. Data enrichment can involve adding geospatial data, social media sentiment analysis, or other external data sources to provide context and insights.
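In its simplest form, enrichment is a lookup against reference data. The example below adds a region to each order based on its store; the `REGION_BY_STORE` table and the field names are hypothetical stand-ins for an external data source.

```python
# Hypothetical reference data; in practice this might come from another system.
REGION_BY_STORE = {"S1": "EMEA", "S2": "APAC"}


def enrich(order, regions=REGION_BY_STORE):
    """Return a copy of the order with a `region` field added."""
    return {**order, "region": regions.get(order["store_id"], "UNKNOWN")}


orders = [{"id": 1, "store_id": "S1"}, {"id": 2, "store_id": "S9"}]
enriched = [enrich(o) for o in orders]
```

Returning a new dictionary instead of mutating the input keeps the raw record intact, which helps when a batch needs to be reprocessed.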

Real-Time Stream Processing

In real-time data ingestion scenarios, data processing happens on the fly as data streams in. Real-time stream processing systems analyze and enrich the data as it arrives, making it immediately available for real-time analytics, monitoring, and decision-making.

Data Loading

Data loading is the step where processed data is transferred from the staging or processing layer to the final destination, typically a data warehouse, data lake, or other storage infrastructure. Here are key aspects of data loading:

Batch Loading

In batch-oriented data loading, data is collected and processed in predefined intervals and then loaded into the destination system. This loading method is suitable for scenarios where data can be periodically refreshed or batch processing is acceptable.

Stream Loading

For real-time data ingestion, data is loaded continuously or in micro-batches as it arrives for instant access to the most up-to-date information. Stream loading is crucial for applications that require low-latency data availability.

Data Loading Optimization

Loading data efficiently is important to minimize the impact on system performance and ensure data is available for analysis promptly. Optimization techniques may include parallel loading, data partitioning, and compression to speed up the process.
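The three techniques named above can be combined, as in this rough Python sketch: records are partitioned by a key, each partition is serialized and gzip-compressed, and partitions are handled in parallel by a thread pool. The function names are illustrative, and the actual write to a destination is left as a comment because it depends on the target system.

```python
import gzip
import json
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def partition_by(records, key):
    """Group records into partitions based on the value of `key`."""
    parts = defaultdict(list)
    for rec in records:
        parts[rec[key]].append(rec)
    return dict(parts)


def load_partition(item):
    """Serialize one partition as newline-delimited JSON and compress it."""
    name, rows = item
    payload = "\n".join(json.dumps(r) for r in rows).encode("utf-8")
    compressed = gzip.compress(payload)
    # A real loader would write `compressed` to the destination here.
    return name, len(rows), compressed


def parallel_load(records, key, workers=4):
    """Load all partitions concurrently; results keep partition order."""
    parts = partition_by(records, key)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(load_partition, parts.items()))


events = [
    {"day": "2024-01-01", "v": 1},
    {"day": "2024-01-02", "v": 2},
    {"day": "2024-01-01", "v": 3},
]
loaded = parallel_load(events, key="day")
```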

Data Storage

Data storage is the final destination where ingested data is persistently stored. The choice of storage system depends on data volume, access patterns, and cost considerations:

Data Warehouses

Data warehouses are optimized for analytical queries and are often used for structured data. They enable efficient data retrieval and support complex SQL queries for reporting and business intelligence.

Data Lakes

Data lakes, often built on Hadoop HDFS or on cloud object storage like Amazon S3, are versatile storage repositories that can handle structured, semi-structured, and unstructured data. They are commonly used for big data and machine learning applications.

NoSQL Databases

For semi-structured or unstructured data, NoSQL databases like MongoDB or Cassandra provide flexible and scalable storage solutions. These databases are well-suited for real-time applications and for document-oriented or wide-column data.

Use Cases Of Data Ingestion Pipelines: How It Works When Properly Implemented

Data ingestion pipelines are used in different sectors and their purpose differs based on their complexity, scale, and technologies. To understand their role better, let’s discuss some common use cases for them:

Log & Event Data Ingestion

Ingest logs generated by software applications to monitor performance, identify issues, and troubleshoot problems.

Data Warehousing

Ingest data from various transactional databases, applications, and external sources into a data warehouse for analytical and reporting purposes. This helps organizations make data-driven decisions.

IoT Data Ingestion

Collect and process data from sensors, devices, and IoT platforms. This has different applications like smart cities, industrial automation, and predictive maintenance.

Social Media & Web Scraping

Ingest data from social media platforms, websites, and web APIs for sentiment analysis, market research, competitive intelligence, and content aggregation.

Streaming Data Processing

Real-time data pipelines can ingest data from different sources and process it in real-time for applications like fraud detection, recommendation systems, and monitoring.

Data Migration

Migrate data from one data store or system to another, whether it's transitioning from an on-premises system to the cloud, changing databases, or consolidating multiple data sources.

ETL (Extract, Transform, Load)

Extract data from source systems, transform it into a suitable format, and load it into a target system or data warehouse. ETL pipelines are fundamental for data integration and data cleansing.
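A compact way to see the three ETL steps together is with two in-memory SQLite databases from Python's standard library. The table names, columns, and the cents-to-currency conversion are assumptions made for this sketch.

```python
import sqlite3

# Source system with raw rows (amounts stored as integer cents).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, customer TEXT, cents INTEGER)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, " ana ", 2050), (2, "BO", 500)],
)

# Target system standing in for a data warehouse table.
target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE fact_orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
)


def run_etl(src, dst):
    # Extract: read the raw rows from the source.
    rows = src.execute("SELECT id, customer, cents FROM orders").fetchall()
    # Transform: tidy up names and convert cents to currency units.
    cleaned = [(i, name.strip().title(), cents / 100) for i, name, cents in rows]
    # Load: the connection context manager commits on success
    # and rolls back if an error is raised.
    with dst:
        dst.executemany("INSERT OR REPLACE INTO fact_orders VALUES (?, ?, ?)", cleaned)
    return len(cleaned)


rows_loaded = run_etl(source, target)
```

Because the load uses `INSERT OR REPLACE` on a primary key, running the job twice leaves the target unchanged, which makes retries safe.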

Machine Learning & AI Data Ingestion

Collect training data for machine learning models, including structured data, images, text, and other types of data.

Media & Content Ingestion

Ingest multimedia content like images, audio, and video for content management, streaming, or analysis.

Financial Data Ingestion

Collect financial market data, including stock prices, currency exchange rates, and economic indicators for analysis and trading.

Healthcare Data Ingestion

Ingest and process electronic health records (EHRs), medical images, and patient data for healthcare analytics, patient monitoring, and research.

Supply Chain & Inventory Management

Collect data related to inventory levels, shipping, and demand to optimize supply chain operations.

Geospatial Data Ingestion

Collect geographical and location-based data, including maps, satellite imagery, and GPS information for applications like navigation, geospatial analysis, and urban planning.

Telemetry & Monitoring Data

Ingest data from infrastructure components (e.g., servers, network devices) to monitor system health and performance.

Estuary Flow: Simplify Your Data Ingestion Pipeline

Estuary Flow is our advanced DataOps platform that is purpose-built for processing high-throughput data swiftly and with minimal delay. It is designed to revolutionize your data management strategy.

Flow boasts real-time streaming SQL and TypeScript functionalities for smooth data ingestion and transformation between different databases, cloud-based platforms, and software applications. Our tool prioritizes user experience and offers advanced features for data integrity and consistency.

One of Estuary Flow's standout features is its scalability. Whether you're dealing with a small data stream of 100MB/s or a massive inflow of 5GB/s, Flow efficiently manages the workload. What's more, it excels at rapidly backfilling historical data from your source systems, completing the process in a matter of minutes.

It can also handle multiple data formats and structures with ease. Through real-time ETL capabilities, Flow ensures that data is consistently processed, eliminating compatibility issues that can often arise in complex data ecosystems. Thanks to its incremental data update capabilities, which use real-time Change Data Capture (CDC) features, you can access the most current data at any given moment.

Estuary Flow also places a strong emphasis on efficiency. It automates schema governance and incorporates data deduplication features to streamline your operations and reduce redundancies to make sure that your data remains clean and concise.

The Takeaway

Well-optimized data ingestion pipelines provide the foundation for data reliability and consistency. They act as the gateway, efficiently ushering data into the system and laying the groundwork for meaningful insights. Without them, data would remain untapped potential and businesses would struggle to keep up with the ever-evolving demands of the digital age.

You can elevate your data management with Flow. From seamless data ingestion to real-time processing, it empowers your business with efficiency and reliability. With Estuary Flow, effortlessly set up data pipelines, scale to your needs, and enjoy the benefits of automated data handling. Don't miss out – register for free today and experience the difference firsthand. For more details, contact our support team.

Frequently Asked Questions About Data Ingestion Pipeline

We understand that data ingestion can be a complex process and, despite our best efforts to provide a comprehensive guide, you may still have some unanswered questions. This section answers the most commonly asked ones.

What are the common challenges in data ingestion and how can they be addressed?

Common challenges in data ingestion and their solutions include:

- Data Variety: Diverse data formats can cause compatibility issues. Use connectors and parsers that support multiple formats, and normalize incoming data to a common schema.

- Data Volume: Large data volumes can overwhelm ingestion pipelines. Employ distributed processing and scalable infrastructure.

- Data Velocity: Real-time data streaming requires low-latency ingestion. Implement stream processing frameworks.

- Data Security: Protect sensitive data from breaches during ingestion. Encrypt data in transit and at rest, and implement access controls.

- Data Monitoring: Lack of visibility into data pipelines can cause issues. Utilize monitoring tools to track data flow and performance.

- Data Source Changes: Data sources often evolve, causing disruptions in data pipelines. Employ version control, change management, and automated schema evolution handling to adapt to source changes.

What best practices should be followed when designing and implementing data ingestion pipelines?

For efficient data ingestion pipelines, focus on these best practices:

- Verify and validate data sources for accuracy and completeness.

- Implement incremental data loading to minimize data transfer and processing.

- Handle changes in data schema gracefully for backward compatibility.

- Set up robust error handling and retry mechanisms for fault tolerance.

- Utilize parallel processing to ingest data efficiently, taking advantage of available resources.

- Apply throttling and rate limiting to avoid overloading source systems.

- Convert data into a standardized format for downstream processing.

- Use CDC techniques when possible to capture only changed data.

- Keep track of metadata, like timestamps and data source details.

- Implement monitoring and alerting for pipeline health and data anomalies.
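One of the practices listed above, error handling with retries, can be sketched as follows. The helper retries an operation with exponential backoff; the parameter names, the choice of `ConnectionError` as the retryable error, and the delay values are assumptions for illustration.

```python
import time


def with_retries(operation, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call `operation`, retrying on ConnectionError with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of attempts: surface the error to the caller
            # Wait 0.5s, 1s, 2s, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))


# Hypothetical flaky source that fails twice before succeeding.
calls = {"count": 0}


def fetch_page():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return ["row-1", "row-2"]


delays = []
rows = with_retries(fetch_page, sleep=delays.append)  # record delays instead of sleeping
```

Passing `sleep` as a parameter is a small design choice that lets the backoff be tested without actually waiting.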

What are the various data sources that data ingestion pipelines can connect to and collect data from?

Data ingestion pipelines can connect to a wide range of data sources, including:

- Databases (SQL, NoSQL)

- Cloud storage (AWS S3, Azure Blob Storage)

- Web services (REST APIs, SOAP)

- Log files

- IoT devices

- Messaging systems (Kafka, RabbitMQ)

- External applications

- Data streams

They can also collect data from on-premises systems, social media platforms, and external partners.

Can data ingestion pipelines be used for migrating data?

Yes, data ingestion pipelines can be used for migrating data between different cloud platforms or providers. These pipelines are capable of extracting data from a source, transforming it as needed, and then loading it into the target cloud environment.

You can configure the source and target connections so that data transitions seamlessly between cloud providers. This approach keeps the migration efficient and minimizes downtime. Data ingestion tools also automate the transfers, which makes them an effective choice for migrating data between cloud platforms.

About the author

With over 15 years in data engineering, the author is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
