Corporate networks dealing with heavy online transactions, user analytics, and other tech-related activities are always handling huge amounts of different data. To manage this, it’s critical to understand what data ingestion is and how you can use it to your benefit.

Data ingestion is a critical first step for just about every data process, from analytics to well-governed data storage. However, the complexity and diversity of the data sources and formats that organizations encounter make ingestion difficult.

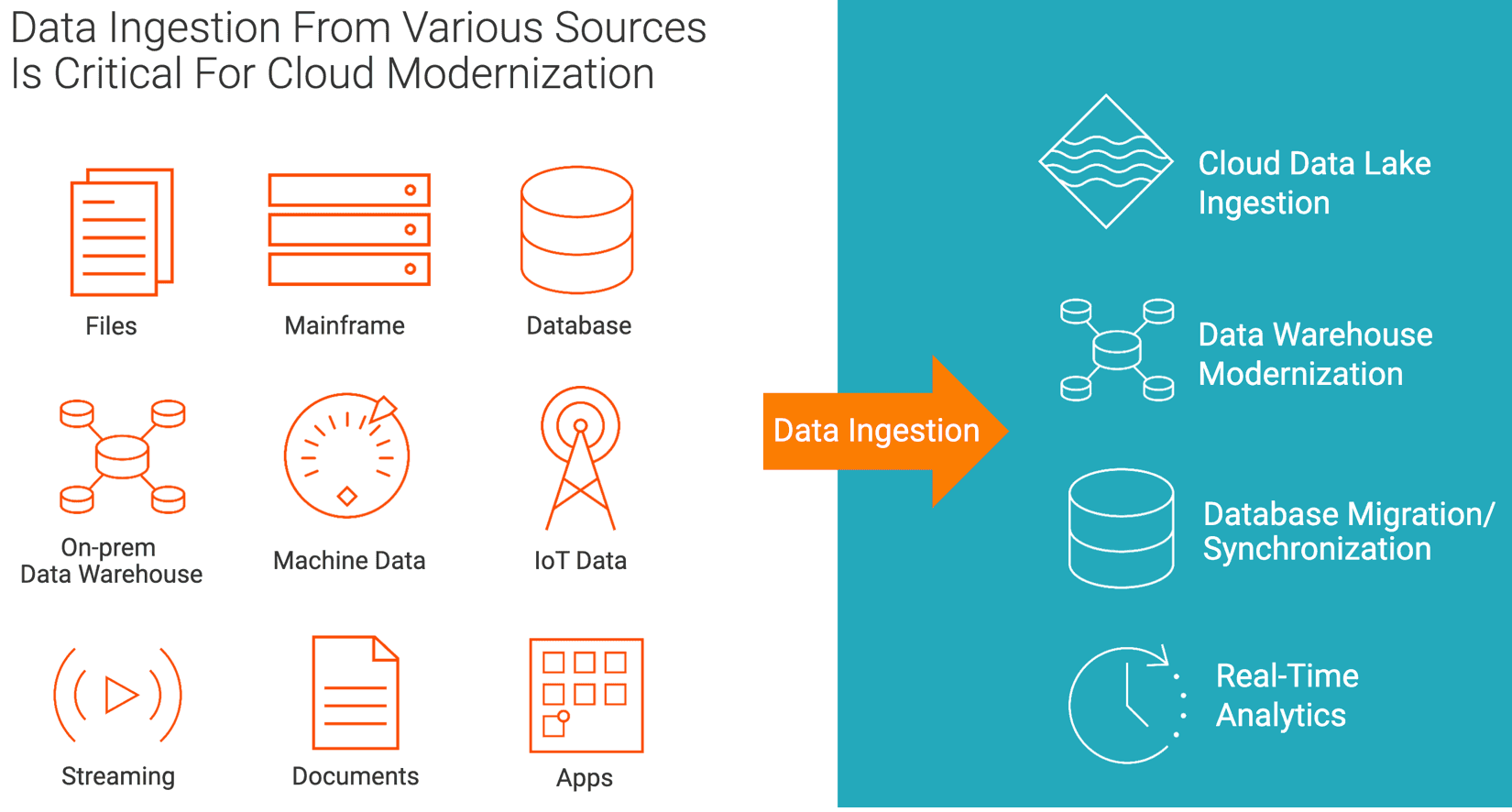

With data originating from structured databases, unstructured documents, APIs, IoT devices, social media platforms, and more, businesses struggle to bring all this data together for further analysis.

Our value-packed article will help you discover the world of data ingestion, its practical applications across a range of industries, and the right tools that aid in transporting data without leaving any valuable information behind.

By the end of this read, you will have a complete grasp of what data ingestion is, its different types, and how you can pick the right data ingestion tool for your business.

What Is Data Ingestion?

Data ingestion is the process of collecting, importing, and loading data from various sources into a system for storage or analysis. It’s the first step in the data analytics pipeline, and it ensures that the correct data is available at the right time.

The data ingestion pipeline, often called the data ingestion layer, can be broken down into:

- Data capture: This is the process of gathering data from its sources, which can include sensors, machines, applications, and social media.

- Data preparation: In this phase, the data is cleaned and transformed so that it is ready for analysis. This includes removing duplicates, correcting errors, and converting data into a consistent format.

- Data loading: This process involves transferring the ingested data to a storage location or database. The data can be loaded in real-time or in batches.
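The three stages can be sketched as plain Python functions. This is a minimal illustration, assuming two in-memory sources and an in-memory list standing in for the storage target; the function names `capture`, `prepare`, and `load` and the email-based deduplication rule are hypothetical choices, not any particular tool's API.

```python
from typing import Iterable, Iterator

def capture(sources: Iterable[Iterable[dict]]) -> Iterator[dict]:
    """Data capture: pull raw records from every source in turn."""
    for source in sources:
        yield from source

def prepare(records: Iterable[dict]) -> list[dict]:
    """Data preparation: normalize fields, drop incomplete rows and duplicates."""
    seen_emails: set = set()
    cleaned: list[dict] = []
    for rec in records:
        email = rec.get("email", "").strip().lower()  # consistent format
        if not email:
            continue  # skip records missing the key field
        if email in seen_emails:
            continue  # remove duplicates (assumed key: email)
        seen_emails.add(email)
        cleaned.append({"email": email, "name": rec.get("name", "").strip()})
    return cleaned

def load(records: list[dict], target: list) -> int:
    """Data loading: append prepared records to the storage target."""
    target.extend(records)
    return len(records)

# Hypothetical sources: a CRM export and an application log.
crm = [{"email": " Ana@Example.com ", "name": "Ana"}]
app = [
    {"email": "ana@example.com", "name": "Ana"},
    {"email": "bo@example.com", "name": "Bo"},
    {"name": "No Email"},
]
warehouse: list = []
n = load(prepare(capture([crm, app])), warehouse)
print(n, warehouse)
```

Because `capture` is a generator, records stream through preparation without the whole source being held in memory first.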

Now that we have the basics down, let’s explore why data ingestion matters so much.

Why Is Data Ingestion Important?

Here’s how data ingestion benefits you and your business.

- Real-Time Insights: With data ingestion, you can quickly access and analyze data as it’s generated. You can better respond to changing conditions, identify emerging trends, and take advantage of new opportunities – all in real time.

- Improved Data Quality: Data ingestion isn’t just about collecting data – it’s also about cleaning, validating, and transforming it. This process ensures that your data is accurate, reliable, and ready for analysis. Better data = better insights.

- Staying Competitive: When you have access to tons of data from multiple sources, you can make better decisions and act quickly. Data ingestion helps you stay competitive by providing you with the insights you need to innovate and grow.

- Enhanced Data Security: Data ingestion processes can incorporate security measures to protect sensitive information. By consolidating data into a single, secure location, you can control access and protect your data from unauthorized use.

- Scalability: Modern data ingestion tools and processes are designed to handle massive amounts of data. They can scale to accommodate growing data volumes, so you can keep up with the increasing demand for data analysis.

- Single Source of Truth: Consolidating all your data into one place ensures that everyone in the organization is working with the same, up-to-date information. This unified view of data reduces inconsistencies and makes it easier for teams to collaborate.

Now that we have established why data ingestion matters, let's look at the key concepts you need to use your data effectively and unlock its full potential.

Mastering the Foundations: 4 Key Concepts Of Data Ingestion

These four concepts lay the groundwork for effective data management.

Data Sources

You can’t have data ingestion without data sources, right? These are where you get your data: databases, files, APIs, or even data scraped from websites. Drawing on more diverse sources usually gives you a more complete picture, and that big picture is what leads to better insights.

Data Formats

Data comes in all shapes and sizes, and you must be prepared to handle them all. You’ve got structured data (think CSV files and relational tables), semi-structured data (like JSON and XML), and unstructured data (text, images). Knowing your data formats is key to processing them smoothly during ingestion.
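To make the format distinction concrete, here is a sketch that reads customer records from CSV, JSON, and XML with Python's standard library and normalizes them into one list of dictionaries. The field names and sample payloads are illustrative assumptions; unstructured data such as free text or images would need a separate extraction step and is left out.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

def from_csv(text: str) -> list[dict]:
    # Structured: every row has the same fixed columns.
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]

def from_json(text: str) -> list[dict]:
    # Semi-structured: each object carries its own keys and may nest.
    return [{"id": str(o["id"]), "name": o["name"]} for o in json.loads(text)]

def from_xml(text: str) -> list[dict]:
    # Semi-structured: tags and attributes describe the data.
    root = ET.fromstring(text)
    return [
        {"id": c.get("id"), "name": c.findtext("name")}
        for c in root.iter("customer")
    ]

csv_text = "id,name\n1,Ana\n"
json_text = '[{"id": 2, "name": "Bo"}]'
xml_text = '<customers><customer id="3"><name>Cy</name></customer></customers>'

records = from_csv(csv_text) + from_json(json_text) + from_xml(xml_text)
print(records)
```

Converting every ID to a string is one way to reconcile the CSV reader (which always yields strings) with JSON (which keeps numbers as numbers).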

Data Transformation

You have collected a bunch of data from different sources, but it’s all messy and inconsistent. You need to give it a makeover. That’s where data transformation comes in: cleansing, filtering, and aggregating your data so it is consistent and fits the target system’s requirements.
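The three transformation steps can be sketched on a handful of raw order rows. This is a minimal example under assumed inputs: the `region` and `amount` fields are hypothetical, and the rule that drops non-positive amounts is an illustrative business choice.

```python
from collections import defaultdict
from typing import Optional

raw_orders = [
    {"region": " EU ", "amount": "19.99"},
    {"region": "us", "amount": "5"},
    {"region": "EU", "amount": "n/a"},   # unparseable value
    {"region": "US", "amount": "-3"},    # refund, filtered out below
    {"region": "eu", "amount": "0.01"},
]

def cleanse(order: dict) -> Optional[dict]:
    """Normalize the region code and parse the amount; None if the row is broken."""
    try:
        return {
            "region": order["region"].strip().upper(),
            "amount": float(order["amount"]),
        }
    except (KeyError, ValueError, AttributeError):
        return None

def transform(orders: list[dict]) -> dict[str, float]:
    totals: dict[str, float] = defaultdict(float)
    for order in orders:
        clean = cleanse(order)
        # Filter: drop broken rows and non-positive amounts.
        if clean is None or clean["amount"] <= 0:
            continue
        # Aggregate: total order value per region.
        totals[clean["region"]] += clean["amount"]
    return {region: round(total, 2) for region, total in totals.items()}

print(transform(raw_orders))
```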

Data Storage

After your data’s been through the ingestion process, it’s time to find a storage place. That’s usually a database or data warehouse where it is held for further analysis and processing. Choosing the right storage solution is crucial so your data stays organized, accessible, and secure.
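As a small illustration of the loading step, the sketch below uses an in-memory SQLite database as a stand-in for a real database or warehouse (an assumption for the sake of a runnable example). Declaring a primary key and using `INSERT OR REPLACE` keeps a retried load from creating duplicate rows.

```python
import sqlite3

rows = [("ana@example.com", "Ana"), ("bo@example.com", "Bo")]

conn = sqlite3.connect(":memory:")  # stand-in for a real database or warehouse
conn.execute(
    "CREATE TABLE customers (email TEXT PRIMARY KEY, name TEXT NOT NULL)"
)

for _ in range(2):  # load the same batch twice, e.g. after a retried job
    with conn:  # commit on success, roll back on error
        conn.executemany(
            "INSERT OR REPLACE INTO customers (email, name) VALUES (?, ?)", rows
        )

count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(count)  # 2: the primary key keeps re-loads from duplicating rows
conn.close()
```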

3 Types Of Data Ingestion

There are a few options when choosing the type of data ingestion and it’s important to know which one works best for your business needs. Let’s break them down for you.

Batch Ingestion

This method is about collecting and processing data in chunks or batches at scheduled intervals. Here’s what you need to know:

- It’s ideal for large volumes of data that don’t need real-time processing.

- Batch ingestion reduces the load on your system as it processes data at specific times.

- On the flip side, you might have to wait a bit for the latest data to be available for analysis.
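A batch job can be sketched as a function that runs on a schedule, drains everything that accumulated since the last run, and loads it in fixed-size chunks. The scheduling itself (for example, a cron entry) is assumed and not shown; `run_batch_job` and the list-based sink are hypothetical stand-ins for bulk inserts into a real store. Python 3.12 also ships `itertools.batched`, which does the same chunking as the helper below.

```python
from itertools import islice
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")

def batched(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield consecutive chunks of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk

def run_batch_job(pending: list[dict], size: int, sink: list[list[dict]]) -> None:
    """One scheduled run: load everything accumulated since the last run."""
    for chunk in batched(pending, size):
        sink.append(chunk)  # stand-in for one bulk insert per chunk
    pending.clear()

pending = [{"id": i} for i in range(5)]
loaded: list[list[dict]] = []
run_batch_job(pending, size=2, sink=loaded)
print([len(c) for c in loaded])  # [2, 2, 1]
```

Loading in chunks rather than row by row is what keeps the per-run load on the target system low.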

Real-Time Ingestion

In this type, the data is captured and processed as it is generated or received in real time without any significant delay. It is:

- Perfect for situations where you need up-to-the-minute insights.

- Best for applications like fraud detection, where speed is crucial.

- More resource-intensive as your system constantly processes incoming data.
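By contrast, real-time ingestion handles each event the moment it arrives. In this sketch, an in-process `queue.Queue` stands in for a real event stream (an assumption), a worker thread blocks until the next event is available, and the recorded lag shows how long each event waited before processing.

```python
import queue
import threading
import time

def consume(events: queue.Queue, processed: list) -> None:
    """Handle each event as soon as it arrives instead of waiting for a batch."""
    while True:
        event = events.get()  # blocks until an event is available
        if event is None:     # sentinel: the producer is done
            break
        lag = time.monotonic() - event["created"]
        processed.append((event["id"], lag))

events: queue.Queue = queue.Queue()
processed: list = []
worker = threading.Thread(target=consume, args=(events, processed))
worker.start()

for i in range(3):  # producer: emit events as they are "generated"
    events.put({"id": i, "created": time.monotonic()})
    time.sleep(0.01)
events.put(None)
worker.join()

print([event_id for event_id, _ in processed])  # [0, 1, 2]
```

The worker is always running and waiting, which is the source of the extra resource cost mentioned above.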

Hybrid Ingestion

Now let’s talk about hybrid ingestion. It’s the best of both worlds – combining batch and real-time ingestion to meet your unique needs.

- This approach gives you the flexibility to adapt your data ingestion strategy as your needs evolve.

- You can process data in real-time for critical applications and in batches for less time-sensitive tasks.
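A hybrid setup can be sketched as a router that sends critical events straight to a real-time path and buffers everything else for batch loading. The `critical` flag and the size-based flush trigger are illustrative assumptions; in practice a scheduler could also call `flush` at fixed intervals.

```python
from dataclasses import dataclass, field

@dataclass
class HybridRouter:
    """Route critical events to a real-time path and buffer the rest for batches."""
    batch_size: int
    realtime_out: list = field(default_factory=list)
    batch_out: list = field(default_factory=list)
    buffer: list = field(default_factory=list, init=False)

    def ingest(self, event: dict) -> None:
        if event.get("critical"):
            self.realtime_out.append(event)  # handle immediately
        else:
            self.buffer.append(event)        # defer to the next batch
            if len(self.buffer) >= self.batch_size:
                self.flush()

    def flush(self) -> None:
        """Load buffered events as one batch."""
        if self.buffer:
            self.batch_out.append(list(self.buffer))
            self.buffer.clear()

router = HybridRouter(batch_size=2)
for e in [{"id": 1, "critical": True}, {"id": 2}, {"id": 3}, {"id": 4}]:
    router.ingest(e)
router.flush()  # e.g. the end-of-interval flush
print([e["id"] for e in router.realtime_out])                  # [1]
print([[e["id"] for e in b] for b in router.batch_out])        # [[2, 3], [4]]
```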

Having discussed the various types of data ingestion, we now turn our attention to an equally important aspect - the tools that aid in this process.

Driving Data Efficiency: Tools For Efficient Data Ingestion

Let’s look at the leading tools for streamlining data ingestion, enhancing data quality, and simplifying data management.

Estuary Flow - A User-Friendly Data Ingestion Powerhouse

Estuary Flow, our managed service, is a UI-driven platform for building real-time data pipelines. Flow serves different team members through two interfaces: a web application for users who prefer a UI, and a robust command-line interface that gives backend engineers finer control over data integration. This not only enhances engineers' productivity but also enables them to handle complex data pipelines with ease.

Some key features of Estuary Flow are:

- Fully Integrated Pipelines: Flow simplifies data integration by enabling you to create, test, and adapt pipelines that gather, modify, and consolidate data from multiple sources.

- Powerful Transformations: Flow processes data using stable micro-transactions, guaranteeing committed outcomes remain unaltered despite crashes or machine failures.

- Connectors Galore: With pre-built connectors for popular data sources and sinks, such as databases and message queues, Flow reduces the need for custom connectors. This speeds up data pipeline deployment and ensures consistency across systems.

Why Estuary Flow Stands Out

Estuary Flow offers unique benefits that make it an attractive option for businesses of all sizes, especially SMBs with limited resources.

Here's why it's a top pick:

- Flexibility and Scalability: Flow can be easily extended and customized, making it adaptable to your organization’s changing needs.

- Time and Resource Savings: By providing pre-built connectors and an easy-to-use interface, Flow reduces the time and effort needed to set up and maintain complex streaming pipelines.

- User-Friendly Interface: Flow’s web application allows data analysts and other team members to manage data pipelines without needing advanced engineering skills.

Other Data Ingestion Tools To Consider

While Estuary Flow is an impressive option, let’s take a look at some popular data ingestion tools that are worth considering.

Apache Kafka

Apache Kafka is a distributed, high-throughput, and fault-tolerant platform for streaming data between applications and systems. It is widely used by big companies like LinkedIn, Netflix, and Uber.

Kafka is ideal for large-scale, real-time data ingestion, and offers the following benefits:

- High Performance: Kafka can handle millions of events per second, thanks to its efficient message passing and distributed architecture.

- Scalability: Kafka’s distributed design allows for easy horizontal scaling. So as your data processing needs grow, you can add more broker nodes to your cluster.

- Integration & Ecosystem: Kafka integrates with stream processing frameworks like Apache Flink, as well as its own Kafka Streams library, allowing you to perform complex event processing and data enrichment in real time. Kafka also benefits from strong community support, with plenty of resources to help you get started.
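Kafka scales horizontally by splitting each topic into partitions spread across brokers; records with the same key go to the same partition, which preserves their relative order. The sketch below shows that keyed-partitioning idea only. It uses CRC32 as a stand-in hash, whereas the Java client's default partitioner uses murmur2, so real partition numbers will differ; `partition_for` and `distribute` are hypothetical helpers, not Kafka APIs.

```python
import zlib
from collections import defaultdict

def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition. CRC32 stands in for Kafka's murmur2."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def distribute(records: list[tuple[str, str]], num_partitions: int) -> dict[int, list[str]]:
    """Append each record's value to the partition chosen by its key."""
    partitions: dict[int, list[str]] = defaultdict(list)
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append(value)
    return dict(partitions)

records = [
    ("user-1", "login"),
    ("user-2", "view"),
    ("user-1", "purchase"),
    ("user-1", "logout"),
]
layout = distribute(records, num_partitions=3)
print(layout)
```

Adding partitions (and brokers to host them) spreads the keys over more machines, while each key's events still stay together in order.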

Amazon Kinesis

Amazon Kinesis is a cloud-based solution for real-time data ingestion. It’s an excellent option for businesses already using AWS services as it integrates seamlessly with the AWS ecosystem. Kinesis has several key features:

- Fully Managed Service: As an AWS-managed service, Kinesis takes care of infrastructure, scaling, and maintenance.

- Security: Kinesis offers various security features like data encryption, IAM roles, and VPC endpoints to protect your data streams and comply with industry-specific requirements.

- Kinesis Data Streams: Capture, store, and process data streams from various sources like logs, social media feeds, and IoT devices. Kinesis Data Streams can handle terabytes of data per hour.

Google Cloud Pub/Sub

Google Cloud Pub/Sub is a scalable messaging and event streaming service that ensures at-least-once delivery of messages and events. Pub/Sub is a great choice for organizations already operating within the Google Cloud Platform.

Its main features include:

- At-least-once delivery: Pub/Sub ensures the delivery of messages to subscribers even when transmission failures occur.

- Ordering Guarantees: Pub/Sub doesn’t guarantee message ordering by default, but it supports ordering keys: messages that share the same ordering key are delivered in the order they were published. This is useful for applications that require strict ordering among related messages.

- Integration: Pub/Sub integrates seamlessly with other popular Google Cloud services like Dataflow and BigQuery, making it easy to build end-to-end data processing and analytics applications on Google Cloud.
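Because at-least-once delivery means a message can occasionally arrive more than once, subscribers are usually written to be idempotent. The sketch below deduplicates by message ID in memory; it does not use the Google Cloud client library, the message IDs are made up, and a production subscriber would keep the seen-ID set in durable storage.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Message:
    message_id: str
    ordering_key: str
    data: str

class IdempotentHandler:
    """Apply each message's effect once, even if it is delivered more than once."""

    def __init__(self) -> None:
        self.seen_ids: set = set()
        self.applied: list = []

    def handle(self, msg: Message) -> bool:
        if msg.message_id in self.seen_ids:
            return False  # duplicate redelivery: acknowledge, but skip
        self.seen_ids.add(msg.message_id)
        self.applied.append(msg.data)
        return True

deliveries = [
    Message("m1", "order-42", "created"),
    Message("m2", "order-42", "paid"),
    Message("m2", "order-42", "paid"),  # redelivered after a missed ack
    Message("m3", "order-42", "shipped"),
]
handler = IdempotentHandler()
results = [handler.handle(m) for m in deliveries]
print(handler.applied)  # ['created', 'paid', 'shipped']
```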

AWS Glue

AWS Glue is a fully managed, serverless data integration service that simplifies the process of discovering, preparing, and combining data for analytics, machine learning, and application development.

Some of the main features of AWS Glue include:

- Data Crawlers: Glue’s data crawlers automatically discover the structure and schema of your data, saving you time and effort in defining and maintaining schemas.

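- Development Endpoints: With Glue development endpoints, you can interactively develop and debug ETL scripts, improving development speed and efficiency. Note that development endpoints are not supported for AWS Glue 2.0 and later, and AWS recommends Glue interactive sessions for this purpose instead.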

- Data Catalog: Glue’s data catalog serves as a centralized metadata repository for your data. This makes it easy to discover, understand, and use your data across different AWS services.

- Serverless ETL Data Ingestion: AWS Glue provides a serverless environment for running ETL (Extract, Transform, Load) jobs so you don’t have to worry about managing the underlying infrastructure.

- Integration: It integrates with other AWS services like Amazon S3, Amazon RDS, Amazon Redshift, and Amazon Athena for building end-to-end data processing and analytics pipelines on the AWS platform.
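To give a feel for what schema discovery involves, here is a toy type-inference routine over JSON Lines records. It is not how Glue crawlers work internally (they rely on classifiers); the type names merely resemble the ones Glue's Data Catalog displays, and the widening rules are illustrative assumptions.

```python
import json

TYPE_NAMES = {bool: "boolean", int: "bigint", float: "double", str: "string"}

def infer_schema(lines: list[str]) -> dict[str, str]:
    """Infer a flat column -> type mapping from JSON Lines records."""
    schema: dict[str, str] = {}
    for line in lines:
        for column, value in json.loads(line).items():
            if value is None:
                continue  # nulls say nothing about the column type
            # Nested lists/objects are treated as strings for simplicity.
            observed = TYPE_NAMES.get(type(value), "string")
            previous = schema.get(column)
            if previous is None or previous == observed:
                schema[column] = observed
            elif {previous, observed} == {"bigint", "double"}:
                schema[column] = "double"  # widen int + float to double
            else:
                schema[column] = "string"  # conflicting types fall back to string
    return schema

sample = [
    '{"id": 1, "price": 9, "tags": "a"}',
    '{"id": 2, "price": 9.5, "active": true}',
]
print(infer_schema(sample))
# {'id': 'bigint', 'price': 'double', 'tags': 'string', 'active': 'boolean'}
```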

So how do you go about selecting the right tool for your organization?

5 Factors To Consider When Selecting The Right Data Ingestion Tool For Your Business

When choosing the data ingestion tool for your organization, consider the following factors:

- Compatibility: Look for a tool that integrates seamlessly with your existing infrastructure and technology stack.

- Scalability: Ensure the tool can handle your current data ingestion needs and can easily scale as your business grows.

- Budget: Keep in mind the cost of the tool, including any additional resources or expertise required for setup and maintenance.

- Support and community: A strong community and support network can be invaluable when troubleshooting issues or seeking guidance.

- Ease of use: User-friendly tools let team members with varying technical skills collaborate on and manage data ingestion pipelines.

Maximizing Data Potential: Real-Life Use Cases Of Data Ingestion Across Industries

Data ingestion plays a crucial role in various industries and helps optimize their operations. Let's check out some examples to see how data ingestion is used in real life.

Healthcare

Here’s how the healthcare sector is benefiting from data ingestion.

Electronic Health Records (EHR)

Healthcare providers use data ingestion to consolidate patient records and get a complete view of each patient’s medical history. This helps physicians make better decisions and deliver personalized care.

Remote Patient Monitoring

Data from wearable devices and telemedicine platforms is ingested and analyzed to monitor patient health, which supports early intervention and improved health outcomes.

Finance

Let’s look at how data ingestion helps the finance sector.

Fraud Detection

Banks and financial institutions collect transaction data from multiple sources and ingest it into a centralized system for analysis. By identifying unusual patterns, they can detect and prevent fraudulent activities.
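One of the simplest ways to spot "unusual patterns" is a z-score check against a customer's own history. Real fraud systems use far richer models; this sketch is only an illustration, and the 3-standard-deviation threshold and sample amounts are assumptions.

```python
from statistics import mean, stdev

def flag_unusual(history: list[float], new_amounts: list[float],
                 threshold: float = 3.0) -> list[float]:
    """Return amounts more than `threshold` standard deviations from the mean."""
    mu = mean(history)
    sigma = stdev(history)  # requires at least two historical values
    if sigma == 0:
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]

history = [20.0, 25.0, 22.0, 30.0, 18.0, 27.0]
print(flag_unusual(history, [24.0, 31.0, 950.0]))  # [950.0]
```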

Risk Management

Data ingestion enables organizations to gather and analyze data related to market trends, customer behavior, and economic factors, helping them manage financial risks.

Manufacturing

Now let’s discuss the application of data ingestion in manufacturing.

Supply Chain Optimization

Manufacturers ingest data from suppliers, production lines, and inventory systems to gain insights into their supply chain operations. This helps them optimize processes, reduce costs, and improve efficiency.

Predictive Maintenance

By ingesting data from sensors and IoT devices, manufacturers can monitor equipment health, predict failures, and schedule maintenance proactively, reducing downtime and operational costs.
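A basic form of this monitoring compares a rolling average of sensor readings against a limit. The vibration values, window size, and limit below are illustrative assumptions; production systems typically combine many signals with learned failure models.

```python
from collections import deque
from typing import Iterable, Iterator

def rolling_alerts(readings: Iterable[float], window: int, limit: float) -> Iterator[int]:
    """Yield the index of each reading where the rolling mean exceeds `limit`."""
    recent: deque = deque(maxlen=window)  # oldest reading drops out automatically
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > limit:
            yield i

vibration = [1.0, 1.1, 0.9, 1.0, 1.8, 2.2, 2.5, 2.6]
print(list(rolling_alerts(vibration, window=3, limit=2.0)))  # [6, 7]
```

Averaging over a window smooths out single noisy spikes, so alerts fire on a sustained upward trend rather than one odd reading.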

Transportation

Here’s how the transportation sector benefits from data ingestion.

Traffic Management

Data ingestion enables cities to collect real-time data from traffic sensors, cameras, and connected vehicles. Analysts use this data to optimize traffic flow, reduce congestion, and improve public transportation services.

Autonomous Vehicles

Self-driving cars ingest and process data from various sensors, such as LiDAR, cameras, and GPS, in real time to navigate safely and efficiently.

Energy

Let’s explore how data ingestion positively impacts the energy sector.

Smart Grids

Utility companies ingest data from smart meters, weather stations, and energy generation systems to monitor and manage the distribution of electricity. This enables them to balance supply and demand, reduce energy waste, and integrate renewable energy sources.
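Balancing supply and demand starts with knowing demand per interval. This sketch groups smart meter readings into tumbling (non-overlapping) 15-minute windows and sums the energy used in each; the meter IDs, kWh values, and 15-minute interval are illustrative assumptions.

```python
from collections import defaultdict
from datetime import datetime

def window_start(ts: datetime, minutes: int = 15) -> datetime:
    """Floor a timestamp to the start of its tumbling window (minutes must divide 60)."""
    return ts.replace(minute=ts.minute - ts.minute % minutes, second=0, microsecond=0)

def demand_per_window(readings: list, minutes: int = 15) -> dict:
    """Sum kWh across all meters for each window, ordered by window start."""
    totals: dict = defaultdict(float)
    for ts, _meter_id, kwh in readings:
        totals[window_start(ts, minutes)] += kwh
    return dict(sorted(totals.items()))

readings = [
    (datetime(2024, 1, 1, 8, 2), "meter-1", 0.4),
    (datetime(2024, 1, 1, 8, 9), "meter-2", 0.6),
    (datetime(2024, 1, 1, 8, 16), "meter-1", 0.5),
    (datetime(2024, 1, 1, 8, 29), "meter-2", 0.7),
]
for start, kwh in demand_per_window(readings).items():
    print(start.strftime("%H:%M"), round(kwh, 2))
```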

Predictive Maintenance For Wind Turbines

Operators use data ingestion to collect data from sensors installed on wind turbines, which allows them to monitor performance, predict potential failures, and perform maintenance proactively.

Conclusion

Without a clear understanding of its target audience, your business may miss opportunities. Real-time decisions based on valuable, customer-driven insights give you a competitive advantage and foster innovation and growth. Understanding what data ingestion is and how to use it effectively helps you achieve all this.

There is no denying that data ingestion enables automation, process optimization, and resource allocation based on accurate insights, but the vast variety of data sources and formats can make it a significant challenge.

This is where Estuary Flow can help. Our cutting-edge data ingestion platform streamlines the process of collecting, organizing, and integrating data from many sources, regardless of their formats. So if you want to make your data ingestion process smoother and more efficient, Estuary Flow is a great option. Sign up to try Estuary Flow.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
