Start from scratch and understand the introductory concepts behind a Data Flow

Derivation (noun):

1- Something that originates from something else, something derived

2- An act or process of deriving

Introduction

In this tutorial, you will learn the basic concepts behind Estuary’s Data Flows: their building blocks, how those building blocks work together to form a Data Flow, and how to write Derivations in the Estuary Flow web application.

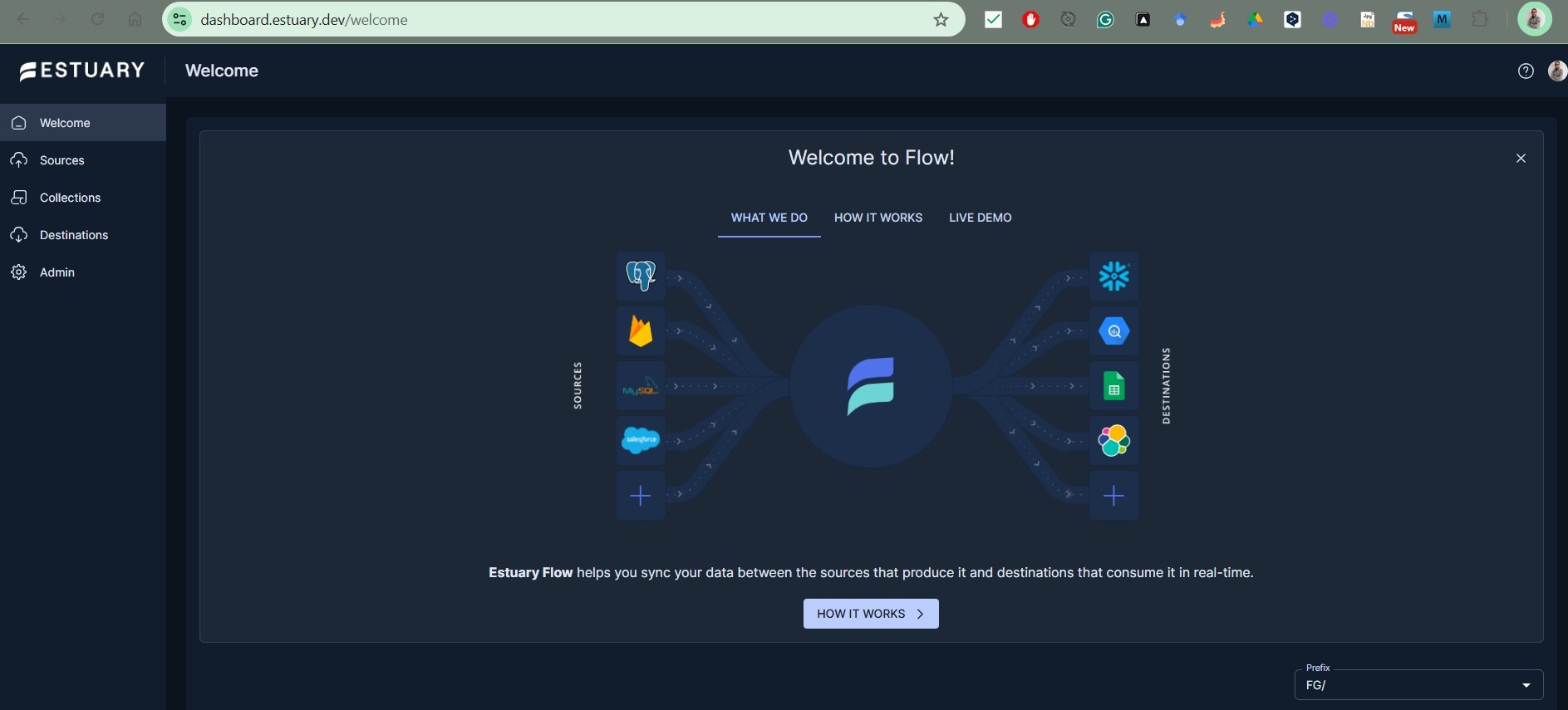

What is Estuary Flow?

Estuary Flow is a real-time data integration platform that enables organizations to move and transform data across various systems, supporting both streaming and batch data processes. It allows users to connect sources and destinations, create data pipelines, and manage data in a highly efficient, unified manner.

Built for enterprise needs, Estuary Flow offers features like high scalability, reliability, and governance, which make it suitable for complex data workflows and real-time analytics.

Key highlights of Estuary Flow include:

- Unified Data Integration: Handles both real-time streaming and batch data in a single platform, which simplifies data operations by reducing the need for multiple tools.

- Real-Time Processing: Supports low-latency data processing for real-time analytics and decision-making, helping organizations respond to business events as they happen.

- Declarative Pipelines: Allows users to define data flows in a declarative manner, making pipeline setup and maintenance more straightforward.

- Flexible Deployment Options: Offers SaaS, self-hosted, and hybrid deployment models, providing flexibility to meet different security and operational requirements.

- Connectors and Integrations: Integrates with a wide array of data sources and destinations, including cloud databases, streaming systems, and data lakes.

Estuary Flow is particularly designed for enterprise users, decision-makers, and data professionals who require robust, scalable, and secure data integration solutions that can support modern, real-time data-driven applications.

Foundational Concepts of Estuary Flow

In Flow, transformations are implemented declaratively as Derivations, written in SQL (SQLite) or TypeScript.

To start working with Derivations and understand how they behave within Estuary Flow, you first need a basic understanding of the elements that make up a Data Flow.

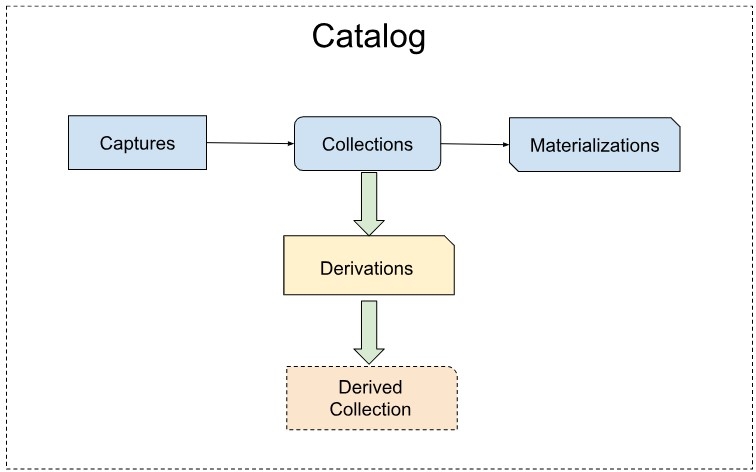

Data Flows are defined by writing specification files, either manually or through the Flow web app. Every end-to-end Data Flow between systems A and B has three main components, described in detail below: Captures, Collections, and Materializations.

Estuary Flow carries some DNA from the data tools that we data practitioners are already familiar with. Some of them are:

- Apache Beam and Google Cloud Dataflow

- Kafka

- Spark

- dbt

- Fivetran, Airbyte, and other ELT solutions

…and a few others.

Estuary’s Data Flow can be compared with other ETL and streaming platforms, but unlike most of them it offers a unified approach that combines real-time and batch ingestion. For example, Kafka is an infrastructure component that supports streaming applications, whereas Flow is a framework that works with real-time data behind the scenes. Its infrastructure differs slightly from Kafka’s: Flow is built on top of Gazette, a highly scalable streaming broker similar to log-oriented pub/sub systems.

Data Flow Essential Concepts

This section covers introductory concepts so that you can understand how a Data Flow works under the hood in Estuary Flow and what its different layers are made of.

Catalog: A catalog comprises all of the elements of a Data Flow described below, mainly Captures, Collections, and Materializations.

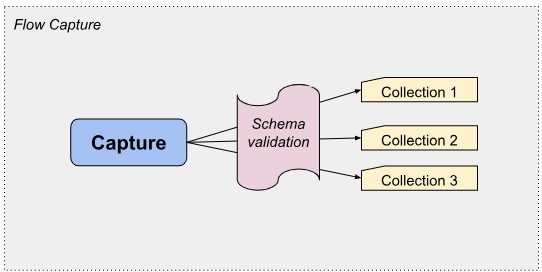

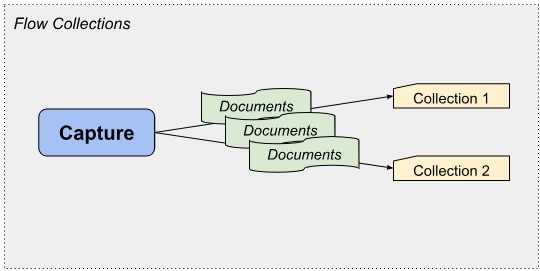

Captures: In the Capture phase, a Flow task ingests data from a specified external source into one or more Collections, mainly using connectors. As documents continuously move from the source into Flow, they are validated against the schema and added to their corresponding collection.
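To make the capture step more concrete, here is a minimal Python sketch of the idea. It is not Flow's actual implementation: the `SCHEMA` shape, the `validate` and `capture` helpers, and the sample documents are all hypothetical, and how Flow itself reacts to an invalid document is not modeled here.

```python
# Hypothetical, simplified model of a capture that checks documents
# against a schema before adding them to a collection. Not Flow's real API.

SCHEMA = {
    "required": ["Nation"],
    "properties": {"Nation": str, "Titles": int},
}


def validate(doc: dict, schema: dict) -> bool:
    """Return True if doc has every required field and the expected types."""
    for field in schema["required"]:
        if field not in doc:
            return False
    for field, expected_type in schema["properties"].items():
        if field in doc and not isinstance(doc[field], expected_type):
            return False
    return True


def capture(source_docs, schema):
    """Split incoming documents into accepted and rejected lists."""
    accepted, rejected = [], []
    for doc in source_docs:
        (accepted if validate(doc, schema) else rejected).append(doc)
    return accepted, rejected


# Made-up sample rows, for illustration only.
source = [
    {"Nation": "Spain", "Titles": 1},
    {"Titles": 3},                          # missing required "Nation"
    {"Nation": "Italy", "Titles": "many"},  # wrong type for "Titles"
]
collection, rejected = capture(source, SCHEMA)
```

Only the first document ends up in `collection`; the other two fail the required-field and type checks respectively.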

Collections: Inside Estuary Flow, Collections represent datasets. Captured documents are written to a collection, and all materialized documents are read from a collection. Collections have some similarities with traditional data lakes in terms of storage, as their documents are saved as JSON files in your preferred cloud storage bucket.

Because you can bring your own object storage, you are able to configure whatever retention and security policy fits your organization best.

Materializations: While captures ingest data from an external source into one or more Flow collections, materializations do just the opposite, pushing data from one or more collections to an external destination. Materializations are continuously synced with destinations through connectors, which are plugin components that allow Flow to interface with endpoint data systems.
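The three components can be pictured with a small in-memory Python sketch. Everything here is an assumption made for illustration: the `Collection` class, the `run_capture` and `run_materialization` functions, the collection name, and the sample rows are hypothetical, and the upsert-by-key behaviour of the destination is a simplification rather than a description of any particular connector.

```python
# Hypothetical in-memory model of a Data Flow:
# capture -> collection -> materialization. Not Flow's real API.


class Collection:
    """An append-only list of JSON-like documents."""

    def __init__(self, name):
        self.name = name
        self.documents = []

    def write(self, doc):
        self.documents.append(doc)


def run_capture(source_rows, collection):
    """Capture: ingest rows from an external source into a collection."""
    for row in source_rows:
        collection.write(dict(row))


def run_materialization(collection, destination, key):
    """Materialization: push documents to a destination, upserting by key."""
    for doc in collection.documents:
        destination[doc[key]] = doc


# Made-up rows standing in for an external source such as a spreadsheet.
sheet_rows = [
    {"Nation": "Spain", "Titles": 1},
    {"Nation": "Italy", "Titles": 1},
    {"Nation": "Spain", "Titles": 2},  # a later update for the same key
]
winners = Collection("example/winners")
destination_table = {}

run_capture(sheet_rows, winners)
run_materialization(winners, destination_table, key="Nation")
```

In this sketch the collection keeps all three documents, while the destination ends up with one row per `Nation`, holding the latest document for each key.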

Understanding Derivations

You can think of a Derivation as the transformation step that any other data integration platform performs after it has captured data and before it delivers that data somewhere else. All data transformations that happen between those two steps are considered Derivations in Flow.

In plain words, Derivations are collections built by applying filter, aggregation, calculation, and unnesting operations to another collection.
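As a rough illustration of those operations, the Python sketch below derives a new list of documents from a source list by filtering, calculating, and unnesting. It is only a conceptual model: real Flow derivations are written in SQLite or TypeScript, and the `derive` function, the field names, and the sample documents are hypothetical.

```python
# Conceptual sketch of a derivation: filter, calculate, and unnest.
# Field names and data are made up for illustration.

source_collection = [
    {"Season": 2001, "Finalists": [{"Club": "Club A", "Goals": 3},
                                   {"Club": "Club B", "Goals": 1}]},
    {"Season": 1999, "Finalists": [{"Club": "Club C", "Goals": 2},
                                   {"Club": "Club D", "Goals": 0}]},
]


def derive(documents, since):
    """Build derived documents from source documents."""
    derived = []
    for doc in documents:
        if doc["Season"] < since:  # filter: drop older seasons
            continue
        total = sum(f["Goals"] for f in doc["Finalists"])  # calculation
        for finalist in doc["Finalists"]:  # unnesting: one output per finalist
            derived.append({
                "Season": doc["Season"],
                "Club": finalist["Club"],
                "GoalsInFinal": total,
            })
    return derived


derived_collection = derive(source_collection, since=2000)
```

The 1999 document is filtered out, and the single 2001 document is unnested into two derived documents, each carrying the calculated `GoalsInFinal` value.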

Three main elements comprise a Derivation:

- A collection that stores the output.

- A catalog task (captures, derivations, and materializations) that applies transformations to source documents as they become available and writes the resulting documents into the derived collection.

- An internal task state that enables stateful aggregations, joins, and windowing.
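The role of the internal task state can be sketched in Python as an object that remembers values between documents. This is a simplified mental model, not how Flow stores state: the `RunningTotal` class, its `transform` method, and the sample documents are hypothetical.

```python
# Hypothetical sketch of a stateful transformation: the task keeps
# per-key state so each new document can update a running aggregate.


class RunningTotal:
    """Counts documents per nation across calls to transform()."""

    def __init__(self):
        self.state = {}  # stands in for the derivation's internal task state

    def transform(self, doc):
        key = doc["Nation"]
        self.state[key] = self.state.get(key, 0) + 1
        # Emit a derived document reflecting the state so far.
        return {"Nation": key, "TitlesSoFar": self.state[key]}


task = RunningTotal()
incoming = [{"Nation": "Spain"}, {"Nation": "Italy"}, {"Nation": "Spain"}]
derived = [task.transform(doc) for doc in incoming]
```

Because the state persists between calls, the second `Spain` document produces `TitlesSoFar: 2`, which a stateless transformation looking at one document at a time could not compute.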

So far, Flow enables developers to write derivations in two languages: SQLite and TypeScript. Support for more languages is in the works.

Writing Derivations

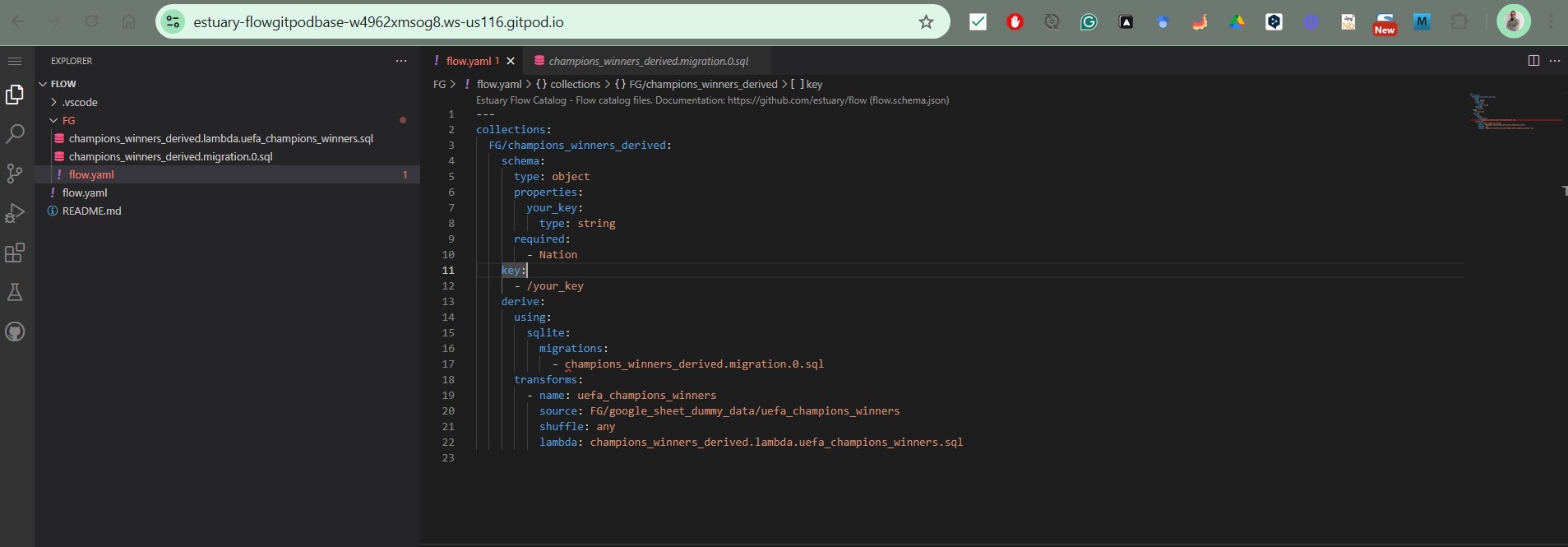

Developers write derivations locally or through the Flow UI when adding Transformations. Derivations are specified in plain YAML files that state the name of the derived collection, the settings of the derivation, and the language used to write it.

A sample YAML file that defines a Derivation can be found in Estuary Flow's official documentation. For this tutorial, I’ve set up a new sample collection that takes data from a dataset stored in Google Sheets.

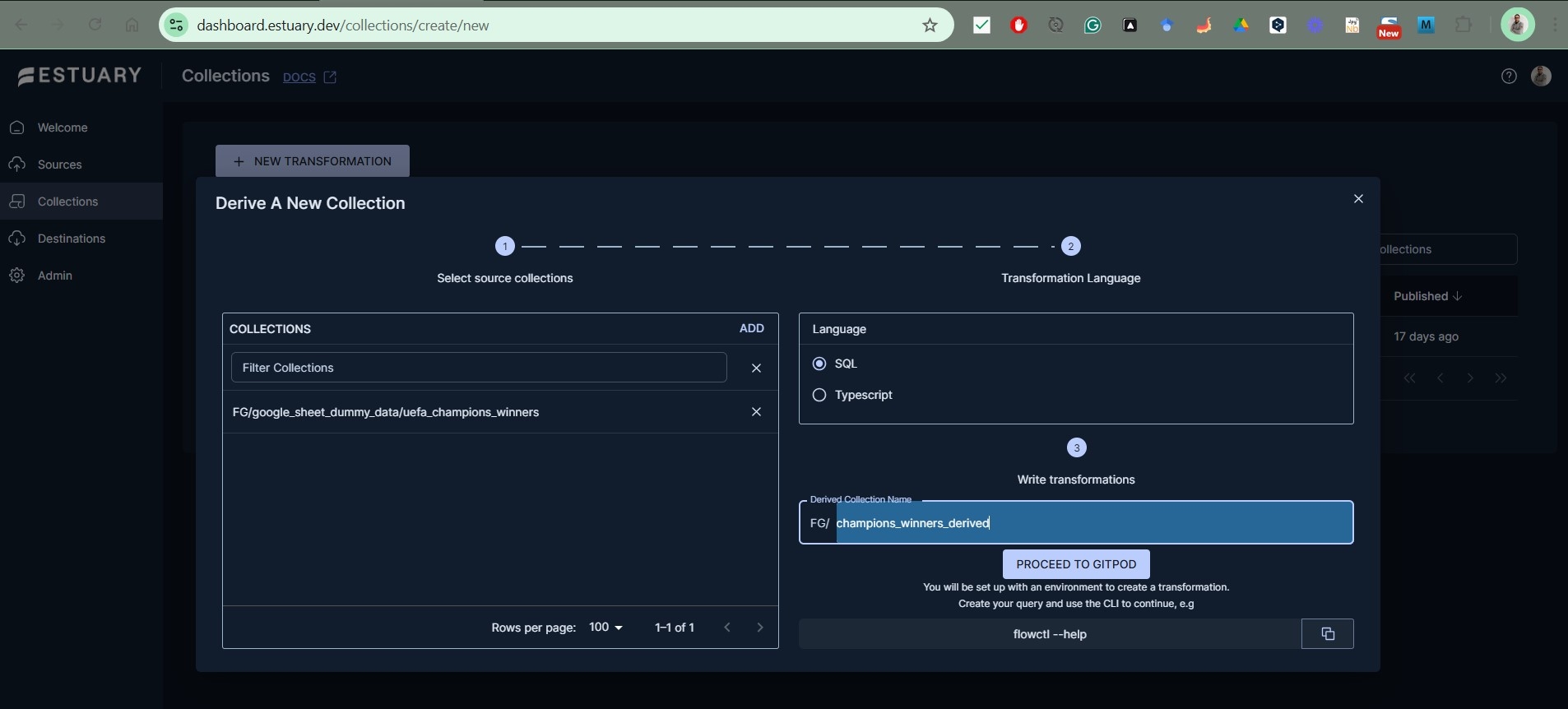

Once you have set up a Collection in the web application, you can add a new Transformation and select the language you want to write it in.

You will then be redirected to Gitpod, a developer platform that provides pre-configured, ready-to-use dev environments. There you will find all the configuration files you need to start writing your Derivations.

    ---
    collections:
      FG/champions_winners_derived:
        schema:
          type: object
          properties:
            your_key:
              type: string
          required:
            - Nation
        key:
          - /your_key
        derive:
          using:
            sqlite:
              migrations:
                - champions_winners_derived.migration.0.sql
          transforms:
            - name: uefa_champions_winners
              source: FG/google_sheet_dummy_data/uefa_champions_winners
              shuffle: any
              lambda: champions_winners_derived.lambda.uefa_champions_winners.sql

This example derivation declares a SQL migration, which creates a new table, and a transformation that is performed through a SQL lambda.
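To get a feel for how a migration and a SQL lambda relate to each other, the sketch below runs both against an in-memory SQLite database using Python's standard `sqlite3` module. The SQL shown is an assumption: the tutorial does not show the contents of the migration or lambda files, so the `titles_by_nation` table, its columns, and the queries are hypothetical. The `:Nation` placeholders follow Python's `sqlite3` conventions, and the placeholder syntax Flow expects in lambda files may differ.

```python
import sqlite3

# Assumed migration: runs once and creates a table the lambda can use.
MIGRATION = """
CREATE TABLE titles_by_nation (
    nation TEXT PRIMARY KEY,
    titles INTEGER NOT NULL
);
"""

# Assumed lambda: runs once per source document.
LAMBDA_STATEMENTS = [
    "INSERT OR IGNORE INTO titles_by_nation (nation, titles) VALUES (:Nation, 0);",
    "UPDATE titles_by_nation SET titles = titles + 1 WHERE nation = :Nation;",
]
LAMBDA_SELECT = (
    "SELECT nation AS your_key, titles FROM titles_by_nation "
    "WHERE nation = :Nation;"
)

conn = sqlite3.connect(":memory:")
conn.executescript(MIGRATION)  # apply the migration


def apply_lambda(doc):
    """Update the table for one document and return the derived row."""
    for statement in LAMBDA_STATEMENTS:
        conn.execute(statement, doc)
    return conn.execute(LAMBDA_SELECT, doc).fetchone()


# Made-up source documents.
results = [apply_lambda({"Nation": n}) for n in ["Spain", "Italy", "Spain"]]
```

The migration sets up the table once, and each call to `apply_lambda` plays the part of the lambda being invoked for one source document, returning a row keyed by `your_key`.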

As stated before, Connectors are one of the elements that act as a bridge between a Data Flow’s sources and destinations. They are generally built by Estuary and open-sourced, dual-licensed under Apache 2.0 or MIT. They power captures and materializations, and all of the Connectors currently included in Flow are available to use in the web application.

Conclusion

In this brief tutorial, we have gotten to know the key components of Estuary Flow and how they work together to generate reliable and scalable Data Flows. We have also learned how Collections and Derivations (the transformations within an Estuary Data Flow) are specified: as plain YAML files that you can create through the Flow UI.

In the next tutorial, we’ll take a look at how you can write your own derivations and some of the powerful use cases they unlock. Stay tuned!

If you’re interested in trying out Estuary Flow, you can register for free here.

If you want to learn more, join Estuary’s Slack community!

About the author

Felix is a Data Engineer with experience in multiple data-related roles. He's building his professional identity not only on a tool stack, but also on being an adaptable professional who can navigate constantly changing technological environments.
