Data integrations are part of every business’ tech stack, especially those that capture and process vast amounts of data. MySQL to BigQuery data pipelines are common, but not always easy to set up correctly.

Relational databases like MySQL provide the transactional foundation used to deploy websites and power applications of all sizes. MySQL backs content management systems, web applications, and e-commerce sites, while BigQuery serves data warehousing.

So why might a company want to connect MySQL to a second type of data storage system (BigQuery)?

The simple answer is: “for data analytics.”

Data warehouses like BigQuery are a necessity if you care about analytics at scale. Whether your team is building classic SQL or Python-based data models or working on a complex machine learning project, you’ll need a data warehouse in addition to your relational database.

But connecting MySQL and BigQuery is never a one-and-done process: as data continues to be updated in the relational database, it must be copied to the data warehouse.

Deploying a real-time data pipeline is the best way to sync the same data for use in both operational and analytical workflows, but you can also hand-code ETL pipelines and get similar, though less instantaneous, results.

In today’s article, we’ll provide step-by-step tutorials to do both. By the end of this post, you will have two workable methods to connect your MySQL database to BigQuery.

Let’s get started.

Understanding the MySQL to BigQuery Connection

Before we move on to the steps for connecting MySQL and Google BigQuery, let’s go over some fundamentals and advantages.

Rather skip to the tutorials? Click here.

What is BigQuery?

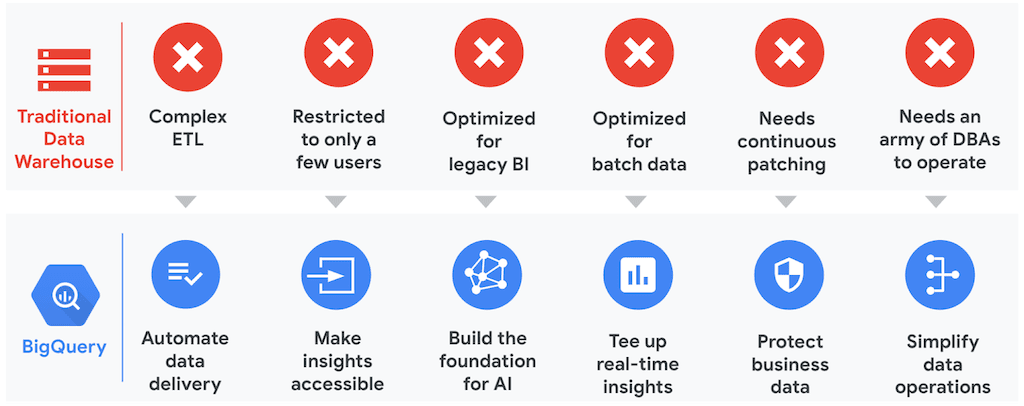

Part of the Google Cloud Platform (GCP), BigQuery is Google’s entry into the enterprise data warehouse space. Today it is mostly used for complex analytical queries, but it can also take heavy query loads off relational databases such as MySQL.

BigQuery is known for its serverless architecture, perfect for businesses that want to offload their work to the cloud with zero infrastructure management. For this reason, it’s a popular destination for data pipelines. It excels in handling large-scale data analytics, making it ideal for processing and querying massive datasets in real-time.

Plus, it can easily synchronize with other Google services like Google Drive and Google Sheets.

The platform helps companies maximize flexibility by separating the compute engine that analyzes data from storage systems. Users have the option to store and analyze data within BigQuery or use the data warehouse platform to access the data from the source.

Reasons To Use BigQuery for Large Scale Data Analytics

Google BigQuery stands out among data warehouse solutions in several ways:

1. It is Serverless

BigQuery automatically scales up and down based on the data volume and query complexity. Because of this advantage, companies do not have to manually set up cluster scaling or sizing. It is also very secure since all data is encrypted.

2. Provides Streaming And Machine Learning Support

Google BigQuery supports the seamless insertion of streaming data into tables without affecting query performance. And since Google has added machine learning capabilities (BigQuery ML), users can create and train ML models using SQL.

3. Has a Flexible Payment System

At the time of writing, storage on BigQuery runs at about $0.020/GB/month, comparable to other cloud storage, and on-demand queries cost $5/TB of data processed. This makes BigQuery one of the more affordable data warehouse options available.

An important detail here is that BigQuery charges queries by the amount of data processed rather than the amount of time the compute engine is running. This makes it much easier to manage your bill size compared to other data warehouses that charge by the minute of active warehouse time.
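To make the pay-per-data-processed model concrete, here is a small worked sketch in Python. It uses the rates quoted in this article and assumes decimal units (1 TB = 10^12 bytes); the function names are illustrative, and actual billing units and prices should be checked against Google's current pricing.

```python
# Rates quoted in this article; treat them as illustrative assumptions.
STORAGE_USD_PER_GB_MONTH = 0.020
QUERY_USD_PER_TB = 5.0

# Assumption: decimal units, for simple arithmetic.
BYTES_PER_GB = 10**9
BYTES_PER_TB = 10**12


def estimate_query_cost(bytes_processed: int) -> float:
    """Estimated USD cost of one query that scans `bytes_processed` bytes."""
    return bytes_processed / BYTES_PER_TB * QUERY_USD_PER_TB


def estimate_monthly_storage_cost(bytes_stored: int) -> float:
    """Estimated USD cost of keeping `bytes_stored` bytes for one month."""
    return bytes_stored / BYTES_PER_GB * STORAGE_USD_PER_GB_MONTH


if __name__ == "__main__":
    # A query scanning 250 GB, and 2 TB of stored data.
    print(f"query:   ${estimate_query_cost(250 * BYTES_PER_GB):.2f}")
    print(f"storage: ${estimate_monthly_storage_cost(2 * BYTES_PER_TB):.2f}")
```

Under these assumptions, the cost of a query depends only on how many bytes it scans, not on how long it runs, so selecting fewer columns or filtering on partitions lowers the bill directly.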

What Data Can You Migrate to BigQuery from MySQL?

MySQL, first released in 1995, has long been one of the most popular open-source relational database management systems (RDBMS) and is currently used by companies of all sizes.

At its core, MySQL is a relational database. It organizes and queries data in systems of rows and columns and is known for its stability and quick performance. You can migrate various types of data, including MySQL tables, to BigQuery.

To facilitate this migration, you can use the mysqldump command for exporting MySQL data.

Here’s what you can expect to integrate when shifting to BigQuery:

Tables

MySQL and BigQuery both store data in tables. When you port a table from MySQL to BigQuery, it persists in BigQuery as a standard, or managed table.

Both MySQL and BigQuery use SQL, but support different data types, so you’ll need to map the MySQL data types to equivalent BigQuery data types. There are different ways to handle this, depending on the data pipeline you use.
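As a rough illustration of what such a mapping can look like, here is a minimal Python sketch. The table below is an assumption made for this example, not an official or complete mapping, and `to_bigquery_type` is a hypothetical helper; your pipeline or tool may make different choices (for instance, for unsigned `BIGINT` values that exceed the `INT64` range).

```python
import re

# Illustrative mapping from MySQL base types to BigQuery types.
# An assumption for this sketch, not an exhaustive or official table.
MYSQL_TO_BIGQUERY = {
    "tinyint": "INT64",
    "smallint": "INT64",
    "mediumint": "INT64",
    "int": "INT64",
    "bigint": "INT64",
    "float": "FLOAT64",
    "double": "FLOAT64",
    "decimal": "NUMERIC",
    "char": "STRING",
    "varchar": "STRING",
    "text": "STRING",
    "date": "DATE",
    "time": "TIME",
    "datetime": "DATETIME",
    "timestamp": "TIMESTAMP",
    "blob": "BYTES",
    "json": "JSON",
}


def to_bigquery_type(mysql_type: str) -> str:
    """Map a MySQL column type such as 'varchar(255)' to a BigQuery type."""
    normalized = mysql_type.strip().lower()
    # Common convention: TINYINT(1) columns hold boolean flags.
    if normalized == "tinyint(1)":
        return "BOOL"
    # Keep only the base type name, dropping lengths and modifiers.
    match = re.match(r"[a-z]+", normalized)
    if match is None or match.group(0) not in MYSQL_TO_BIGQUERY:
        raise ValueError(f"no mapping defined for MySQL type {mysql_type!r}")
    return MYSQL_TO_BIGQUERY[match.group(0)]
```

Raising an error for unmapped types, rather than silently defaulting to `STRING`, makes gaps in the mapping visible before any data is loaded.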

Once the table has landed in BigQuery, it is stored in encrypted form in Google’s managed storage. Users can run complex queries on it or complete any other task BigQuery supports.

The Benefits Of Connecting MySQL To BigQuery

Today, MySQL ranks as the second most popular database according to the DB-Engines ranking, and BigQuery is Google’s foray into the multi-cloud, serverless, cost-effective enterprise data warehouse space.

Therefore, replicating data from MySQL to BigQuery will be an important part of your overall data integration strategy. Doing so can provide you with the following benefits:

- BigQuery is designed for efficient and quick analytics and it does this without affecting operational workloads, which you’ll likely continue to handle in MySQL. It excels in large-scale data processing, making it suitable for working with massive datasets in real-time, high-performance analytics.

- It optimizes workloads and creates a single source of truth. Analysts can find it tricky and time-consuming to shuffle from one platform to another. Keeping BigQuery up-to-date with MySQL ensures that both data storage platforms are united around the same source of truth and that other platforms, whether operational or analytical, are always pulling in correct data.

- BigQuery improves data security. By replicating data from MySQL to BigQuery, users remove the need to grant permissions to other data engineers on operational systems.

- BigQuery handles Online Analytical Processing (OLAP), whereas MySQL is built for Online Transaction Processing (OLTP). Because of this difference, BigQuery is better suited to turning large volumes of data into insights, and it does so as a cost-effective, serverless, multi-cloud data warehouse.

Connecting MySQL To BigQuery: 2 Methods

To move data from MySQL to BigQuery, you’ll need a data pipeline.

There are many ways to create one. Here are two of the best methods for setting up your MySQL to BigQuery integration:

- Method 1: Manual ETL Method to Connect MySQL to BigQuery

- Method 2: Use Estuary, a no-code data pipeline tool, to Connect MySQL to BigQuery

The right strategy for you will depend on your expertise and needs. Examples and steps for both strategies are detailed below.

Method 1: Manual ETL Method to Connect MySQL to BigQuery

The manual method of connecting MySQL to BigQuery requires writing a custom ETL pipeline. Such a pipeline must include three main steps.

- Extract data from MySQL.

- Transform data into a format compatible with BigQuery.

- Load data into BigQuery.
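The three steps can be sketched in Python as follows. This is a minimal illustration, not a production pipeline: the function names are hypothetical, `extract` works with any DB-API cursor (the demo uses the standard library's `sqlite3` as a stand-in for MySQL so it runs anywhere), and the final step only serializes rows as newline-delimited JSON, one format BigQuery can load, without performing the upload.

```python
import base64
import json
import sqlite3
from datetime import date
from decimal import Decimal


def extract(cursor, table):
    """Extract: read every row of `table` as a dict via a DB-API cursor.

    `table` is interpolated into the SQL, so it must be a trusted name.
    """
    cursor.execute(f"SELECT * FROM {table}")
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


def transform(row):
    """Transform: convert values into JSON-friendly forms."""
    out = {}
    for key, value in row.items():
        if isinstance(value, date):  # also covers datetime, a subclass of date
            out[key] = value.isoformat()
        elif isinstance(value, Decimal):
            out[key] = str(value)  # keep exact decimal digits
        elif isinstance(value, bytes):
            out[key] = base64.b64encode(value).decode("ascii")
        else:
            out[key] = value
    return out


def to_ndjson(rows):
    """Prepare for load: one JSON object per line."""
    return "\n".join(json.dumps(transform(row)) for row in rows)


if __name__ == "__main__":
    # sqlite3 stands in for MySQL here; the table and data are made up.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, total TEXT)")
    conn.execute("INSERT INTO orders VALUES (1, '9.50')")
    print(to_ndjson(extract(conn.cursor(), "orders")))
```

Keeping each step as a separate function makes it easier to swap the source driver or the output format later without touching the rest of the pipeline.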

This method is well-suited for one-off data transfers, particularly when you only need to move one table rather than the entire contents of a database. It also requires at least basic programming skills.

This method gets tricky when you require frequent updates, real-time insights, or are syncing multiple tables. You can certainly build such pipelines, but they require more sophisticated engineering. It’s also not recommended if you have no software development or data engineering experience.

Because this type of pipeline is built from scratch, there’s no single, authoritative set of steps you can follow. Below, we’ll summarize two documented methods, with links to step-by-step tutorials that you can adapt to your specific environment and goal.

Most hand-coded methods (like the ones included here) will not ensure that both systems are continuously in-sync and authoritative sources of truth. For that, we recommend a real-time pipeline.

Manual ETL: Dump and Load

This simple manual process is called a “dump and load.” It entails manually extracting, or dumping, a table from MySQL, transforming it, and uploading it to BigQuery. If the table already exists in BigQuery, it’ll be dropped and replaced or completely overwritten.

You can use a SQL query or MySQL’s built-in mysqldump utility to do so. You’ll need to convert the resulting data to CSV format. You may then need to clean data further to comply with BigQuery’s expectations. Once that’s done, upload the file to BigQuery.

Most of this process can be completed with command line tools and SQL.
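If you would rather script the same idea, the CSV conversion and the overwrite-on-load behavior might look like the Python sketch below. It assumes the `google-cloud-bigquery` client library is installed and that application default credentials are configured; `rows_to_csv` and `load_csv_to_bigquery` are hypothetical helper names, and the table ID you pass in is your own.

```python
import csv
import io


def rows_to_csv(header, rows):
    """Render rows as CSV text with a header row; None becomes an empty field."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    for row in rows:
        writer.writerow(["" if value is None else value for value in row])
    return buffer.getvalue()


def load_csv_to_bigquery(csv_path, table_id):
    """Overwrite `table_id` (project.dataset.table) with the CSV at `csv_path`."""
    # Imported lazily so the CSV helper works without the client library.
    from google.cloud import bigquery

    client = bigquery.Client()  # uses application default credentials
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        skip_leading_rows=1,  # skip the header row
        autodetect=True,  # let BigQuery infer the schema
        # Replace any existing table contents, matching "dump and load".
        write_disposition=bigquery.WriteDisposition.WRITE_TRUNCATE,
    )
    with open(csv_path, "rb") as source_file:
        job = client.load_table_from_file(
            source_file, table_id, job_config=job_config
        )
    job.result()  # block until the load job finishes
```

Schema autodetection is convenient for a one-off transfer; for repeated loads, passing an explicit schema is usually safer because inferred types can change with the data.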

See the full tutorial from Hevo here.

Manual ETL: Use Python and Google Cloud Functions for Data Replication

With this more advanced method, you can set up a pipeline to run on a set cadence and apply richer transformations with Python’s Pandas library. If you’re familiar with Python, this may be for you.

Additionally, you can use Google Cloud SQL for exporting tables from MySQL to BigQuery.

You’ll use Google Cloud Functions, Google’s serverless script execution environment, to orchestrate this pipeline.

After configuring the Cloud Function environment, you’ll use Python to:

- Create a function that logs into your MySQL database via SSH tunneling and extracts data from a table with an SQL query.

- Use Pandas to transform the data to meet your requirements.

- Load the transformed data into BigQuery.

Finally, you’ll use Google Cloud Scheduler to run this job regularly.
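The outline above might translate into a Cloud Function along the lines of the sketch below. Everything specific here is an assumption for illustration: the environment variable names, the `orders` table, and the example transformation are hypothetical, and the code assumes `pymysql`, `pandas`, `pyarrow`, and `google-cloud-bigquery` are listed in the function's requirements. SSH tunneling is left out to keep the sketch short; the connection is made directly.

```python
import os


def extract_from_mysql(query, **conn_params):
    """Run `query` against MySQL and return the result as a DataFrame."""
    import pandas as pd
    import pymysql

    conn = pymysql.connect(**conn_params)
    try:
        with conn.cursor() as cursor:
            cursor.execute(query)
            columns = [col[0] for col in cursor.description]
            return pd.DataFrame(cursor.fetchall(), columns=columns)
    finally:
        conn.close()


def transform(df):
    """Example transformation: lowercase column names, drop duplicate rows."""
    return df.rename(columns=str.lower).drop_duplicates()


def load_to_bigquery(df, table_id):
    """Overwrite `table_id` with the DataFrame and return the row count."""
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.LoadJobConfig(write_disposition="WRITE_TRUNCATE")
    client.load_table_from_dataframe(df, table_id, job_config=job_config).result()
    return len(df)


def run_pipeline(extract, transform, load):
    """Run the stages in order; they are injected so each can be swapped or tested."""
    return load(transform(extract()))


def main(request):
    """HTTP entry point; Cloud Scheduler can call this URL on a cadence."""
    row_count = run_pipeline(
        extract=lambda: extract_from_mysql(
            "SELECT * FROM orders",  # hypothetical table
            host=os.environ["MYSQL_HOST"],
            user=os.environ["MYSQL_USER"],
            password=os.environ["MYSQL_PASSWORD"],
            database=os.environ["MYSQL_DATABASE"],
        ),
        transform=transform,
        load=lambda df: load_to_bigquery(df, os.environ["BQ_TABLE_ID"]),
    )
    return f"loaded {row_count} rows"
```

Because each run reloads the whole table, this design suits modest table sizes and relaxed freshness requirements; larger tables usually call for incremental extraction instead.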

See the full tutorial on Towards Data Science.

Method 2: Use Estuary, a no-code data pipeline tool, to Connect MySQL to BigQuery

As data pipeline architectures get more sophisticated, they get harder and harder to create and manage on your own. Fortunately, data pipeline services are proliferating, allowing businesses of all sizes to create high-quality pipelines without a large engineering team.

These services take care of engineering the core of the pipeline and its components. All you have to do is supply credentials and configuration details for MySQL and BigQuery, and the service takes care of the rest.

One of these platforms is Estuary Flow. Flow is a real-time data integration platform that allows you to ingest, transform, and load data between a variety of data systems in milliseconds.

You can use Flow’s low-code UI to connect MySQL to BigQuery in minutes as described in the steps below.

- Step 1: Set up MySQL and BigQuery

- Step 2: Select and Capture MySQL Data for Replication

- Step 3: Map and Materialize Data into BigQuery in Real-Time

When you use this method:

- Minimal technical expertise is required.

- Your data pipeline is backed up securely.

- Data is kept accurate with schema validation and exactly-once semantics.

- A single data pipeline can sync many (or all) tables in your MySQL database into equivalent BigQuery tables.

- You’re using change data capture to capture data from MySQL instantly — there’s no delay, and changes appear in BigQuery immediately.

Before you begin, head to the web app and sign up for a free trial if you don’t have an account.

Step 1: Set up MySQL and BigQuery

Before you begin, configure both systems to meet the prerequisites.

Estuary’s MySQL integration is a change data capture connector. It reads change events from the MySQL binary log and uses a watermarks table to protect against data loss. You’ll need to run a few SQL queries against your database to get things working.

The MySQL prerequisites can be found here.
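To give a feel for what change data capture does conceptually, the Python sketch below replays an ordered list of change events onto an in-memory copy of a table. The event shape (`op`, `key`, `row`) is invented for this illustration and is not the MySQL binary log format or Flow's internal representation.

```python
def apply_change_events(table, events):
    """Apply ordered change events to `table`, a dict keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Inserts and updates both leave the latest row version in place.
            table[key] = event["row"]
        elif op == "delete":
            table.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return table


if __name__ == "__main__":
    events = [
        {"op": "insert", "key": 1, "row": {"id": 1, "status": "new"}},
        {"op": "update", "key": 1, "row": {"id": 1, "status": "paid"}},
        {"op": "insert", "key": 2, "row": {"id": 2, "status": "new"}},
        {"op": "delete", "key": 2},
    ]
    print(apply_change_events({}, events))
```

Because every change is replayed in order, the copy converges on the same state as the source without re-reading whole tables, which is what makes log-based capture well suited to continuous sync.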

Estuary’s BigQuery integration uses a Google Cloud Storage bucket as a temporary staging area, so you’ll need to create a new bucket. It also requires credentials for a Google service account with appropriate permissions.

The BigQuery prerequisites can be found here.

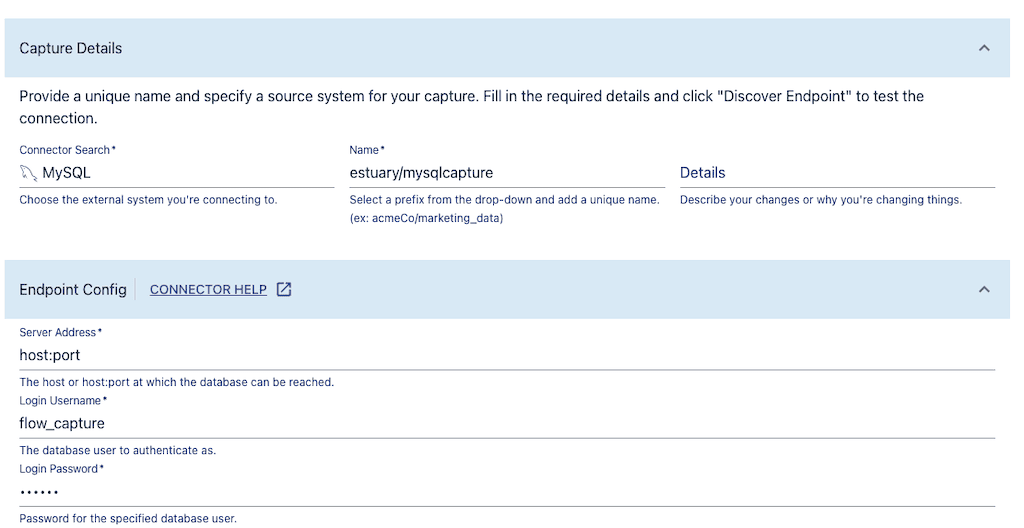

Step 2: Select and Capture MySQL Data for Replication

- Go to the create a new capture page of the Estuary web app and select the MySQL connector.

- Add a unique name for the capture. Provide the MySQL server address, database username (this should be “flow_capture” if you followed the prerequisite steps), and a password.

- Click the Next button. Flow lists all the tables in your database, which it will convert into Flow data collections. You can remove any tables you don’t want to capture.

- Click Save and Publish.

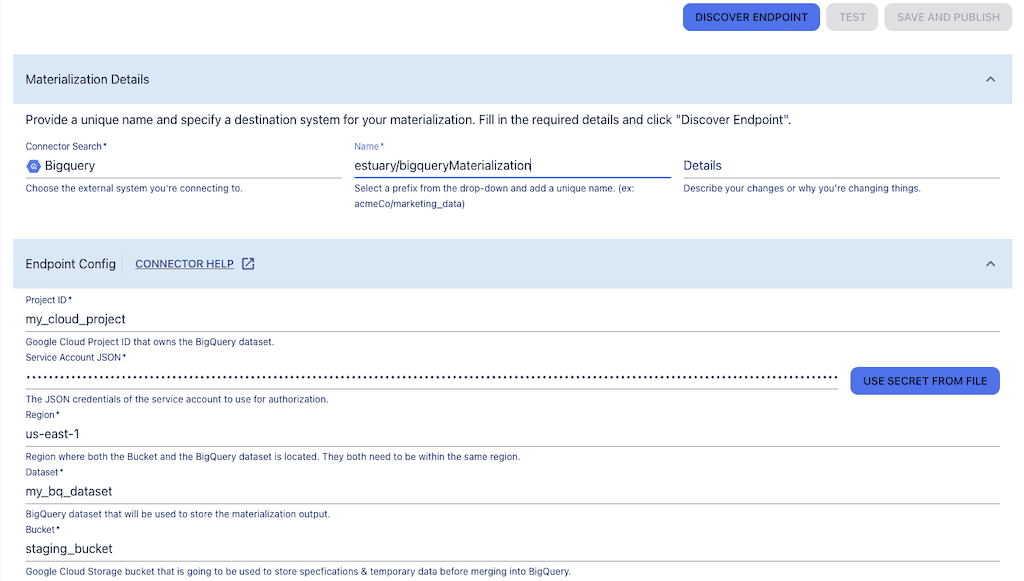

Step 3: Map and Materialize Data into BigQuery in Real-Time

- Click the Materialize Collections button to continue.

- Choose the BigQuery connector.

- Add a unique name for the materialization.

- Provide the following details for your BigQuery dataset:

  - Google Cloud project ID

  - Service account JSON credentials (which you generated per the prerequisites)

  - The project’s Google Cloud Region

  - Dataset name

  - Staging Google Cloud Storage bucket name

- Scroll down to the Collection Selector. Each table you just captured from MySQL will be mapped to a new table in BigQuery. Provide a name for each (you might choose to use the same names).

- Click Next.

- Click Save and Publish.

All historical data from your MySQL database will be copied to BigQuery. Any new data that appears in MySQL will also be copied to BigQuery in real-time.

For more help with this method, see the Estuary Flow documentation.

Conclusion

Connecting MySQL to BigQuery is critical for companies that want to power operational and analytical workflows off the same data.

There are many ways to accomplish this. The two we’ve covered today — hand-coded ETL and using a managed real-time pipeline service — are just two ends of a large spectrum.

While hand-coded methods offer complete freedom, managed services give you access to more reliable, scalable, and efficient data pipelining capabilities with less engineering effort.

You can try Estuary Flow for free or get in touch with our team to discuss your production use case.

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
