To stay relevant in a digital-first world, it’s pivotal for organizations to set their data in motion.

56% of IT leaders report that modernizing their data infrastructure with real-time data streams has led to higher revenue growth than their competitors have achieved. Streaming data makes this possible by powering personalized, on-demand customer experiences.

That’s where Confluent comes in. It lets you connect, process, and react to your data in real time with a complete platform for data in motion, available both as a self-managed platform and as a fully managed cloud service.

While Confluent is a great platform with powerful features, it isn’t always the most suitable solution for a given project. In this article, we’ll discuss some strong alternatives to Confluent that are worth considering. From cloud-based services that scale with your needs to open-source options that are both powerful and free, there are plenty of options data engineers should be aware of.

What is Confluent?

Confluent is a data streaming platform, built around Apache Kafka, that organizations use to process, store, and analyze data in real time. Because it offers Kafka as a managed service, Confluent simplifies connecting disparate data sources to Kafka.

You can develop applications with Confluent’s suite of tools, client libraries, and services for stream processing.

It also provides an array of connectors for integrating with other data sources, including databases and cloud services.

Here are a few limitations of Confluent Kafka that might make it unsuitable for some projects:

- Scaling Issues: A scale-out won’t complete if the cluster lacks the resources it needs. To prevent accidental data loss, Confluent Kafka also doesn’t let you decrease the number of pods.

- Virtual Networks: If you deploy Confluent Kafka to a virtual network, you may not be able to switch to host networking without completely reinstalling it. The same applies if you want to switch from host networking to a virtual network.

- Out-of-band Configuration: Confluent Kafka doesn’t support out-of-band configuration changes. Instead, it deploys and maintains the service with a specified configuration, and to do so it assumes ownership of the task configuration. If an end user changes individual tasks through out-of-band operations, the service will later override those changes: if a task crashes, it won’t be restarted with the out-of-band configuration, and if you initiate a configuration update, the rolling update will overwrite all out-of-band changes.

- Disk Changes: Confluent Kafka doesn’t support changing volume requirements after the initial deployment. This restriction prevents accidental data loss during reallocation.
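The out-of-band behavior above is typical of a declarative, desired-state service: the stored configuration is the source of truth, and anything that drifts from it gets reset. The Python sketch below models that pattern with a hypothetical Task class, a hypothetical reconcile function, and made-up settings; it illustrates the general idea and is not Confluent’s implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A running task and the configuration it was last started with."""
    name: str
    config: dict = field(default_factory=dict)


def reconcile(desired: dict, tasks: list) -> list:
    """Restart every task with the service's declared configuration.

    Settings changed directly on a task (out of band) are discarded,
    because the declared configuration is the single source of truth.
    """
    return [Task(name=t.name, config=dict(desired)) for t in tasks]


# Declared configuration owned by the service (hypothetical values).
desired = {"log.retention.hours": 168, "num.io.threads": 8}
tasks = [Task("broker-0", dict(desired)), Task("broker-1", dict(desired))]

# An operator edits broker-1 directly, bypassing the service.
tasks[1].config["log.retention.hours"] = 24

# A crash restart or a rolling configuration update triggers reconciliation.
tasks = reconcile(desired, tasks)
print(tasks[1].config["log.retention.hours"])  # 168: the out-of-band edit is gone
```

The point of the design is predictability: every restart produces the same configuration, at the cost of silently discarding manual tweaks.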

With these limitations in mind, you may want to look for Confluent Kafka alternatives that better meet your needs. In the next section, we’ll go over seven popular alternatives to Confluent that you can consider for your workflow.

Primary Confluent Alternatives in the Market

Estuary Flow

Confluent Kafka and Estuary Flow are both managed services built on top of event streaming platforms. However, the event streaming platforms used are different. Confluent Kafka is built on top of Apache Kafka, while Estuary Flow is built on top of Gazette.

Gazette simplifies building platforms that mix batch, SQL, and millisecond-latency streaming paradigms, letting teams work from a common repository of data in whichever way suits them. Gazette’s core abstraction is the “journal”: an append-only log, roughly analogous to a Kafka partition.

Here are a few more analogies between Estuary Flow/Gazette and Kafka:

- Flow Collections → Kafka Streams

- Flow Tasks → Kafka Stream Processors
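To make the journal abstraction more concrete, here is a toy, in-memory Python model of an append-only log whose records are sealed into contiguous fragments. The class names, the fixed fragment size, and the methods are all hypothetical; this is not Gazette’s API, only an illustration of append-only writes, offset-based reads, and fragments.

```python
from dataclasses import dataclass, field


@dataclass
class Fragment:
    """A sealed, contiguous chunk of a journal covering offsets [begin, end)."""
    begin: int
    end: int
    records: list


@dataclass
class Journal:
    """Toy append-only log that seals records into fixed-size fragments."""
    fragment_size: int = 3
    fragments: list = field(default_factory=list)
    pending: list = field(default_factory=list)  # records not yet sealed
    next_offset: int = 0

    def append(self, record: bytes) -> int:
        """Append a record and return its offset; existing records never change."""
        offset = self.next_offset
        self.pending.append(record)
        self.next_offset += 1
        if len(self.pending) == self.fragment_size:
            # Seal the pending records as an immutable fragment. In Gazette,
            # fragments are what usually end up in cloud storage.
            begin = self.next_offset - len(self.pending)
            self.fragments.append(Fragment(begin, self.next_offset, self.pending))
            self.pending = []
        return offset

    def read(self, offset: int) -> list:
        """Return every record from offset onward, oldest first."""
        out = []
        for frag in self.fragments:
            if frag.end > offset:
                out.extend(frag.records[max(0, offset - frag.begin):])
        pending_begin = self.next_offset - len(self.pending)
        out.extend(self.pending[max(0, offset - pending_begin):])
        return out


journal = Journal()
for i in range(5):
    journal.append(f"event-{i}".encode())

print(len(journal.fragments))  # 1 sealed fragment (offsets 0-2), 2 records pending
print(journal.read(2))         # [b'event-2', b'event-3', b'event-4']
```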

Here are a few key features of Estuary Flow that make it a strong Confluent alternative:

- Data Modeling: Flow models data in collections, which are split into journals. Confluent Kafka, on the other hand, models data in topics, which can be split into partitions.

Collections always store JSON and have an associated JSON schema, which you can easily edit in the Flow web app. This gives you an easy way to enforce data validation and tighter control over your data in a streaming context.

- Scalability: Estuary Flow scales dynamically, provisioning compute resources as needed without data loss. There’s no need to disable your pipelines to scale up.

- Data Connectors: Similar to Confluent, Estuary Flow includes a variety of connectors for source and destination systems. It emphasizes facilitating connections to high-scale technologies like databases, cloud storage, and pub/sub systems. All Flow connectors are open-sourced.

- Historical Data Handling Efficiency: Gazette journals store data in contiguous chunks known as fragments, which usually live in cloud storage. Flow uses each journal’s storage configuration to let users bring their own cloud storage buckets. Gazette can also give clients pre-signed cloud storage URLs so they can read historical fragments directly from storage, which lets it handle historical data more efficiently than Kafka and Kafka-based systems.

- Pricing: Estuary Flow provides three pricing plans: Cloud, Open Source, and Enterprise. Flow’s pricing is more flexible because it charges for the amount of data processed, whereas Confluent charges by the hour.
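Schema enforcement is easiest to see in code. The Python sketch below checks documents against a hypothetical orders schema before appending them to an in-memory stand-in for a collection. It uses a deliberately tiny, hand-written subset of JSON Schema (required properties and primitive types); a real system would use a complete JSON Schema validator, and none of these function names come from Flow.

```python
import json

# Hypothetical schema for an "orders" collection, written in a tiny subset of
# JSON Schema: only "required" and per-property "type" are checked here.
ORDERS_SCHEMA = {
    "type": "object",
    "required": ["id", "amount"],
    "properties": {
        "id": {"type": "string"},
        "amount": {"type": "number"},
    },
}

# JSON Schema type names mapped to the Python types json.loads produces.
PY_TYPES = {"string": str, "number": (int, float), "object": dict}


def validate(doc, schema: dict) -> list:
    """Return a list of validation errors; an empty list means the doc is valid."""
    if not isinstance(doc, dict):
        return ["document must be a JSON object"]
    errors = [f"missing required property {key!r}"
              for key in schema.get("required", []) if key not in doc]
    for key, rule in schema.get("properties", {}).items():
        if key not in doc:
            continue
        value = doc[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, PY_TYPES[rule["type"]]):
            errors.append(f"{key!r} should be of type {rule['type']}")
    return errors


def append_to_collection(collection: list, raw: str, schema: dict) -> None:
    """Parse a JSON document and append it only if it satisfies the schema."""
    doc = json.loads(raw)
    errors = validate(doc, schema)
    if errors:
        raise ValueError("; ".join(errors))
    collection.append(doc)


orders = []
append_to_collection(orders, '{"id": "a1", "amount": 19.99}', ORDERS_SCHEMA)
try:
    append_to_collection(orders, '{"id": 42}', ORDERS_SCHEMA)
except ValueError as err:
    print(err)  # missing required property 'amount'; 'id' should be of type string
```

Rejecting the bad document at write time means downstream consumers never see it, which is the practical benefit of attaching a schema to the collection itself.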

AWS Kinesis

AWS Kinesis is used to process, collect, and analyze data in real time. The serverless streaming data service lets you stream and process data at a scale of your choice.

Kinesis is similar to Kafka in that both are designed to handle huge volumes of data in real time, making them suitable for use cases like real-time analytics, event-driven architectures, and log aggregation.

Here are a few key features of AWS Kinesis that make it a strong Confluent alternative:

- Dedicated Throughput per Consumer: With enhanced fan-out, you can attach up to 20 consumers to a Kinesis data stream, each with its own dedicated read throughput.

- Highly Available and Durable: AWS Kinesis synchronously replicates your streaming data across three Availability Zones (AZs) in an AWS Region. You can also retain this data for up to a year as an additional layer of protection against data loss.

- Low Latency: AWS Kinesis can make your streaming data available to real-time analytics applications within 70 milliseconds of collecting it.
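Dedicated per-consumer throughput matters most when several applications read the same stream. The Python sketch below estimates shard count and per-consumer read throughput using the per-shard quotas commonly documented for Kinesis Data Streams in provisioned mode (1 MB/s or 1,000 records/s of writes and 2 MB/s of reads per shard). Treat those constants as assumptions to check against current AWS documentation, and the function names as hypothetical.

```python
import math

# Commonly documented per-shard quotas for Kinesis Data Streams
# (provisioned capacity mode); verify against current AWS documentation.
WRITE_MB_PER_SHARD = 1.0        # ingest: up to 1 MB/s per shard
WRITE_RECORDS_PER_SHARD = 1000  # ingest: up to 1,000 records/s per shard
READ_MB_PER_SHARD = 2.0         # egress: up to 2 MB/s per shard
MAX_EFO_CONSUMERS = 20          # enhanced fan-out consumers per stream


def shards_needed(write_mb_s: float, records_s: float) -> int:
    """Smallest shard count that satisfies both ingest limits."""
    return max(1,
               math.ceil(write_mb_s / WRITE_MB_PER_SHARD),
               math.ceil(records_s / WRITE_RECORDS_PER_SHARD))


def read_mb_s_per_consumer(shards: int, consumers: int, enhanced_fan_out: bool) -> float:
    """Read throughput each consumer can expect across the whole stream."""
    if enhanced_fan_out:
        if consumers > MAX_EFO_CONSUMERS:
            raise ValueError("too many enhanced fan-out consumers for one stream")
        # Each registered consumer gets its own 2 MB/s per shard.
        return shards * READ_MB_PER_SHARD
    # Standard consumers share the per-shard read limit.
    return shards * READ_MB_PER_SHARD / consumers


shards = shards_needed(write_mb_s=5, records_s=3000)
print(shards)                                    # 5
print(read_mb_s_per_consumer(shards, 4, False))  # 2.5
print(read_mb_s_per_consumer(shards, 4, True))   # 10.0
```

With four readers, shared reads leave each consumer a quarter of the stream’s read capacity, while enhanced fan-out gives every consumer the full amount.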

Measured against common attributes of stream processing systems, here’s how AWS Kinesis fares against Confluent Kafka:

- Scalability: With Confluent Kafka, you can add nodes to the cluster for more capacity. AWS Kinesis scales well via sharding, but it limits the number of shards you can work with. One workaround is to spread the workload across multiple Kinesis streams.

- Security/Monitoring: AWS Kinesis integrates with Amazon CloudWatch for alerting and monitoring, while Confluent Kafka can use Confluent Control Center for enhanced monitoring.

- Performance: AWS Kinesis has higher latency and moderate throughput compared to Confluent Kafka. Kafka supports stream processing through ksqlDB and Kafka Streams, while AWS Kinesis supports it through Kinesis Data Analytics.

- Learning Curve: The learning curve for AWS Kinesis is typically shorter than for Kafka. However, teams still need to learn the ropes and understand how it integrates with other AWS services.
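Sharding works by hashing each record’s partition key: Kinesis uses MD5 to map partition keys to 128-bit integers and routes each record to the shard whose hash key range contains that value. The Python sketch below models that mapping under an idealized assumption that the hash key space is divided evenly among shards; the helper names are hypothetical, and a real stream’s ranges should be read from its shard metadata.

```python
import hashlib

HASH_KEY_SPACE = 2 ** 128  # MD5 digests are 128-bit values


def hash_key(partition_key: str) -> int:
    """Map a partition key to a 128-bit integer with MD5."""
    return int.from_bytes(hashlib.md5(partition_key.encode("utf-8")).digest(), "big")


def shard_for(partition_key: str, shard_count: int) -> int:
    """Index of the shard whose hash key range contains the key.

    Assumes the 128-bit hash key space is split into equal, contiguous
    ranges, one per shard (an idealized layout, not read from a real stream).
    """
    return hash_key(partition_key) * shard_count // HASH_KEY_SPACE


# The same key always maps to the same shard, which preserves per-key ordering.
# Splitting every shard in two keeps each key inside its old shard's range:
# its new index divided by 2 equals its old index.
for key in ("user-1", "user-2", "user-3"):
    print(key, shard_for(key, 4), shard_for(key, 8))
```

Because keys, not records, determine placement, a few very hot partition keys can still overload a single shard no matter how many shards the stream has.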

Aiven

With Aiven, you can set up fully managed Kafka as a service directly from the web console or programmatically through Aiven’s CLI, API, Kubernetes operator, or Terraform provider.

Aiven lets you connect to external data sources through its fully managed Kafka Connect service and various built-in connectors. You can integrate with your authentication solution using Google OAuth or SAML, and with observability tools such as Prometheus and Datadog.

Here’s how Aiven for Apache Kafka fares as a Confluent alternative:

- Quality of Support: Aiven stands out as a Confluent alternative for the quality of its support. Regular subscriptions include a basic level of email support at no additional fee, covering problems related to accessing and using Aiven’s cloud services. Beyond this basic tier, Aiven offers three support tiers: Priority, Enterprise, and Business.

- Flexibility: Aiven lets you use your existing cloud account, an option known as Bring Your Own Account.

- Consistent Availability: Aiven offers a 99.99% SLA across its pricing plans. Confluent Kafka also promises consistent availability, but it offers a 99.99% SLA only on multi-AZ clusters; for basic and single-AZ clusters, you can expect a 99.95% SLA.

- Cost: Aiven’s price includes both storage and networking costs. Confluent Kafka starts at a low price, but costs rise as you add features.

- Monitoring: Both Aiven and Confluent Kafka offer out-of-the-box monitoring in addition to plug-in monitoring solutions. Aiven’s observability stack combines reliable building blocks that work together seamlessly: Grafana (for alerts and visualization), M3 (a highly scalable metrics engine), and OpenSearch (for examining logs, with OpenSearch Dashboards as the search UI).
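The gap between 99.99% and 99.95% is easier to judge as downtime. The Python sketch below converts an SLA percentage into the maximum downtime it allows, assuming a 30-day month: roughly 4.3 minutes per month at 99.99% versus 21.6 minutes at 99.95%. Each vendor’s SLA terms define how availability is actually measured, so treat the 30-day month as a simplifying assumption.

```python
from decimal import Decimal

# Assumes a 30-day month; real SLAs define their own measurement periods.
MINUTES_PER_MONTH = Decimal(30 * 24 * 60)  # 43,200 minutes


def allowed_downtime_minutes(sla_percent: str) -> Decimal:
    """Maximum downtime per month permitted by an availability SLA."""
    # Decimal avoids float rounding noise such as 100 - 99.99 != 0.01.
    unavailable = (Decimal(100) - Decimal(sla_percent)) / Decimal(100)
    return MINUTES_PER_MONTH * unavailable


for sla in ("99.99", "99.95"):
    print(f"{sla}% SLA allows {allowed_downtime_minutes(sla)} minutes of downtime per month")
```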

Strimzi

Strimzi is an open-source project that provides Kubernetes operators and containers that let you manage and configure Kafka on Kubernetes. These operators simplify the process of managing Kafka components, clusters, topics, access, and users.

Here are a few factors to keep in mind for the Strimzi vs Confluent Kafka discussion:

- User and Topic Management: For managing topics and users, along with internal Kafka RBAC configuration, the Strimzi operator has an edge over Confluent Kafka. You can configure users and topics easily in Strimzi, and you can use kubectl get commands (for example, kubectl get kafkatopics) to retrieve information about all topics.

- Security: Strimzi provides basic cluster security configuration, covering certificate management and TLS settings. Its Topic and User Operators offer more granular access configuration than the Confluent operator does.

- External Cluster Access: Strimzi exposes clusters externally through configurable listener types, including node ports, load balancers, Kubernetes Ingress, and (on OpenShift) routes, so you can pick the access method that fits your environment.

- Alerting and Monitoring: Strimzi provides Grafana dashboards out of the box and exports Kafka metrics to Prometheus. Confluent Kafka, on the other hand, uses its proprietary Control Center for alerting and monitoring. Confluent also exports alerts and metrics through sink connectors to third-party software like PagerDuty, Datadog, and Prometheus.

- Cost: Strimzi is an open-source project, so there are no licensing costs. That said, you need considerable Kubernetes and Kafka expertise to get the most out of it, and unlike Confluent, Strimzi doesn’t offer professional services.
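Declarative topic management is a large part of why Strimzi is convenient to drive from kubectl. The Python sketch below builds a KafkaTopic custom resource as a plain dictionary and prints it as JSON. The topic name, the cluster name my-cluster, and the settings are hypothetical, and kafka.strimzi.io/v1beta2 is the API version used by recent Strimzi releases; confirm it matches the version you run.

```python
import json


def kafka_topic_manifest(name: str, cluster: str, partitions: int,
                         replicas: int, retention_ms: int) -> dict:
    """Build a Strimzi KafkaTopic custom resource as a plain dict."""
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "KafkaTopic",
        "metadata": {
            "name": name,
            # Tells the Topic Operator which Kafka cluster owns this topic.
            "labels": {"strimzi.io/cluster": cluster},
        },
        "spec": {
            "partitions": partitions,
            "replicas": replicas,
            "config": {"retention.ms": retention_ms},
        },
    }


# Hypothetical topic: 3 partitions, 3 replicas, 7 days of retention.
manifest = kafka_topic_manifest("orders", "my-cluster", partitions=3,
                                replicas=3, retention_ms=7 * 24 * 60 * 60 * 1000)
print(json.dumps(manifest, indent=2))
```

kubectl accepts JSON as well as YAML, so the output saved to a file such as orders-topic.json can be applied with kubectl apply -f orders-topic.json, after which kubectl get kafkatopics should list the topic once the Topic Operator picks it up.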

Azure Event Hubs

Azure Event Hubs is a fully managed, real-time data streaming platform on Microsoft Azure. It enables users to easily build efficient, secure, reliable, real-time data streaming applications and services.

Event Hubs provides an Apache Kafka endpoint on an event hub, so users can connect to the event hub using the Kafka protocol.

You can often point existing Kafka applications at an event hub’s Kafka endpoint by changing only their configuration, without any code changes.
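Switching a Kafka client to Event Hubs typically comes down to the bootstrap server and authentication settings. The Python sketch below builds those settings as a dictionary; the namespace and connection string are placeholders, and the keys assume the librdkafka-style names used by the confluent-kafka Python client (Java clients express the same credentials through sasl.jaas.config).

```python
def event_hubs_kafka_config(namespace: str, connection_string: str) -> dict:
    """Kafka client settings that point an existing client at Event Hubs.

    Keys follow librdkafka naming, as used by the confluent-kafka Python client.
    """
    return {
        # The Kafka endpoint listens on port 9093 of the namespace's host name.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The literal user name "$ConnectionString" tells Event Hubs that the
        # password field carries a connection string.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Hypothetical namespace; never hard-code a real connection string.
config = event_hubs_kafka_config("my-namespace", "<your Event Hubs connection string>")
print(config["bootstrap.servers"])  # my-namespace.servicebus.windows.net:9093
```

With the confluent-kafka package installed, a dictionary like this can be passed to its Producer or Consumer constructor, and the event hub’s name is used as the Kafka topic name.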

Here are a few key features that make it a great Confluent Kafka alternative:

- Scalability: Scale in Azure Event Hubs is controlled by the throughput units (TUs) or processing units you purchase. If you enable the Auto-Inflate feature on a Standard-tier namespace, Event Hubs automatically scales up TUs when you hit the throughput limit.

- Idempotency: Azure Event Hubs for Kafka supports both idempotent producers and idempotent consumers. Its at-least-once delivery approach ensures that events are always delivered, although the same event may be delivered more than once.

- Easy to Use: With Azure Event Hubs, you don’t have to set up, manage, or configure ZooKeeper and Kafka clusters, or adopt a separate Kafka-as-a-service offering, because Event Hubs gives you a PaaS (platform-as-a-service) Kafka experience.

- Real-time and Batch Processing: Azure Event Hubs uses a partitioned consumer model that lets multiple applications process a stream simultaneously and quickly.

- Flexibility: If you need specific Kafka features that Event Hubs doesn’t support, you can run a native Apache Kafka cluster in Azure HDInsight.
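Sizing Event Hubs comes down to finding the first limit you hit. The Python sketch below assumes the commonly documented Standard-tier limits per TU (1 MB/s or 1,000 events/s of ingress and 2 MB/s or 4,096 events/s of egress) and models Auto-Inflate as scaling up to demand within a configured maximum. The function names and traffic figures are hypothetical; check current Azure quotas before relying on the numbers.

```python
import math

# Standard-tier limits per throughput unit (TU), whichever is reached first.
INGRESS_MB_PER_TU = 1.0       # 1 MB/s in
INGRESS_EVENTS_PER_TU = 1000  # or 1,000 events/s in
EGRESS_MB_PER_TU = 2.0        # 2 MB/s out
EGRESS_EVENTS_PER_TU = 4096   # or 4,096 events/s out


def throughput_units_needed(in_mb_s: float, in_events_s: float,
                            out_mb_s: float, out_events_s: float) -> int:
    """Smallest TU count that covers every ingress and egress limit."""
    return max(1,
               math.ceil(in_mb_s / INGRESS_MB_PER_TU),
               math.ceil(in_events_s / INGRESS_EVENTS_PER_TU),
               math.ceil(out_mb_s / EGRESS_MB_PER_TU),
               math.ceil(out_events_s / EGRESS_EVENTS_PER_TU))


def auto_inflate(current_tus: int, needed_tus: int, max_tus: int) -> int:
    """Model of Auto-Inflate: scale up to demand, capped at a configured maximum.

    Auto-Inflate only scales up; it does not lower the TU count on its own.
    """
    return min(max_tus, max(current_tus, needed_tus))


needed = throughput_units_needed(in_mb_s=3, in_events_s=2500, out_mb_s=8, out_events_s=10000)
print(needed)                       # 4
print(auto_inflate(2, needed, 10))  # 4
print(auto_inflate(6, needed, 10))  # 6: no automatic scale-down
```

In this example the egress bandwidth (8 MB/s at 2 MB/s per TU) is the binding constraint, not the ingress event rate.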

Quix

Quix is a fully managed platform that works with the Quix Streams library. Designed for ML training from the start, the Quix platform lets you easily isolate resource consumption by running stream processing tasks as serverless functions.

To create and deploy a service or job on the Quix platform, developers specify their dependencies in a requirements file, and Quix installs them automatically.

Here’s how Quix squares up with Confluent Kafka:

- Architecture: Since Quix is a unified platform, data processing, sourcing, and analyzing are all done in one place. You can use the Quix Streams library as an external source to send data from Python-based data producers. You can even deploy connectors within the Quix platform to ingest data from external APIs like IoT message hubs and WebSockets.

- Monitoring: Quix has a set of monitoring tools like deployment logs, build logs, and infrastructure monitoring. It also has a monitoring API that’s primarily meant for internal use.

- Learning Curve: There is no domain-specific language to learn, so developers can get started right away. Quix has a gentle learning curve because it removes most infrastructure hurdles, and its focus on Python as a first-class language makes it especially approachable for Python developers.

- Ecosystem: The Quix Streams library was open-sourced in 2023, so it doesn’t yet have the extensive tooling that Confluent Kafka enjoys.

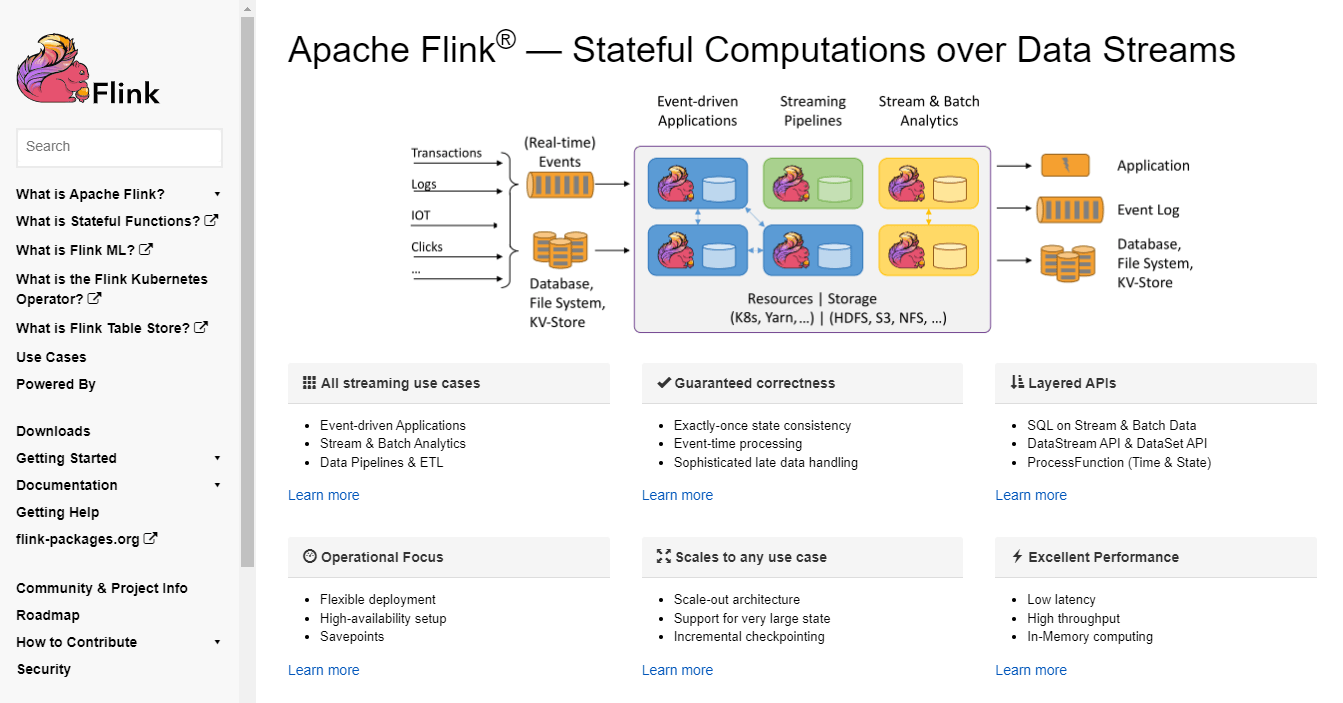

Apache Flink

Apache Flink provides high-throughput, low-latency stateful stream processing. It’s widely used for event-driven applications, real-time data analytics, and complex transformations.

As a framework, Flink is designed to run separately from your main pipeline or application, in its own container or cluster.

Here is a list of the primary differences between Apache Flink and Kafka Streams:

- Data Sources: Kafka Streams is limited to Kafka topics as its source. Apache Flink, on the other hand, can ingest data from multiple sources, such as message queues and external files.

- Stream Type: Apache Flink supports both unbounded and bounded streams whereas Kafka Streams only supports unbounded streams.

- Organizational Management and Deployment: A Flink streaming program is modeled as an independent stream processing computation and is called a job. On the other hand, Kafka Streams is a library in Kafka that can be embedded inside any standard Java application.

- Accessibility and Complexity: Kafka Streams usually requires less expertise for developers to get started with and manage over time. It works out of the box, so teams don’t have to integrate any cluster management, which reduces its overall complexity. However, it can still present a steep learning curve for non-technical users. Because Apache Flink is deployed on its own cluster, it’s somewhat more complex, but its flexible nature lets you customize it to support a wide variety of data sources. Since the infrastructure team typically manages that cluster, much of the setup complexity is handled for the data scientists and developers who use Flink.
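The bounded/unbounded distinction is easiest to see with a windowed aggregation. The plain-Python sketch below (not Flink or Kafka Streams code) counts events in tumbling windows; the same function handles a finite list, where the last window is flushed when input ends, and an endless generator, where results must be emitted window by window. It assumes events arrive in timestamp order; engines like Flink use watermarks to cope with out-of-order events, which this sketch ignores.

```python
from collections import Counter
from itertools import count, islice


def tumbling_counts(events, window_s: int):
    """Count events per key in fixed, non-overlapping time windows.

    Expects (timestamp_s, key) pairs in timestamp order. Each window is
    emitted as soon as an event from a later window arrives, so the same
    function works on bounded and unbounded input.
    """
    window_start = None
    counts = Counter()
    for ts, key in events:
        start = ts - ts % window_s
        if window_start is not None and start != window_start:
            yield window_start, counts
            counts = Counter()
        window_start = start
        counts[key] += 1
    # Reached only when the input ends, i.e. for a bounded stream.
    if window_start is not None:
        yield window_start, counts


# Bounded stream: a finite list, so every window, including the last, is emitted.
bounded = [(0, "a"), (3, "b"), (5, "a"), (12, "a")]
print(list(tumbling_counts(bounded, window_s=5)))

# Unbounded stream: one reading per second forever; consume only three windows.
unbounded = ((t, "sensor-1") for t in count())
print(list(islice(tumbling_counts(unbounded, window_s=10), 3)))
```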

FAQs

What is stream processing, and why is it important?

Stream processing is a way of processing data in real time as it enters a system. Instead of waiting for a batch of data to accumulate before processing it, stream processing handles data as it arrives, enabling faster analysis and decision-making.

It's like acting on what is happening right now instead of waiting to find out what happened yesterday.
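The difference shows up even in something as simple as an average. In the Python sketch below, the batch version can only answer once all readings are in, while the streaming version keeps a running total and has a current answer after every event. The readings are made-up values for illustration.

```python
def batch_average(values: list) -> float:
    """Batch: wait until all data has arrived, then compute once."""
    return sum(values) / len(values)


def streaming_average(values):
    """Streaming: update the result incrementally as each value arrives."""
    total = 0.0
    n = 0
    for value in values:
        total += value
        n += 1
        yield total / n  # an up-to-date answer after every event


readings = [10, 20, 30, 40]
print(list(streaming_average(readings)))  # [10.0, 15.0, 20.0, 25.0]
print(batch_average(readings))            # 25.0
```

Both arrive at the same final value; the streaming version simply has a usable answer at every step along the way.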

What are the key features to consider when evaluating stream processing platforms?

The key features to consider when evaluating stream processing platforms include scalability, fault tolerance, latency, and throughput.

Scalability refers to how well the platform can handle large amounts of data.

Fault tolerance ensures that any failure or interruption won't cause data to be lost.

Latency is the amount of time it takes for data to be processed. Throughput is the amount of data that can be processed in a given period.
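Both metrics are straightforward to measure once events carry timestamps. The Python sketch below computes median and worst-case latency from hypothetical creation and processing timestamps, and throughput as events per second over a measurement window; the function names and numbers are illustrative only.

```python
from statistics import median


def latency_stats_ms(events):
    """events: (created_ms, processed_ms) pairs. Returns (median, max) latency in ms."""
    latencies = [processed - created for created, processed in events]
    return median(latencies), max(latencies)


def throughput_per_s(events_processed: int, window_ms: int) -> float:
    """Events processed per second over a measurement window."""
    return events_processed / (window_ms / 1000)


# Hypothetical timestamps (ms) for four events processed within one second.
events = [(0, 50), (100, 120), (200, 350), (300, 340)]
print(latency_stats_ms(events))             # (45.0, 150)
print(throughput_per_s(len(events), 1000))  # 4.0
```

Reporting the worst case alongside the median matters because a single slow event (150 ms here) is invisible in the median.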

What are the main challenges in implementing custom stream processing solutions?

The main challenges that you might face when trying to implement custom stream processing solutions are as follows:

- Complexity: Custom stream processing solutions are difficult to set up and maintain, as they require a deep understanding of the underlying technologies and architecture.

- Scalability: Stream processing solutions must be able to scale with increasing data volumes and number of users while ensuring that the processing is still reliable and efficient.

- Fault Tolerance: Custom stream processing solutions must be able to handle server downtime and other errors without affecting the output of the stream processing.

- Performance: Stream processing solutions must be able to process data in real-time and ensure that the output is accurate and up to date.

- Security: They need to be secure and able to protect sensitive data from unauthorized access.
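For fault tolerance, many platforms (Azure Event Hubs, for example) deliver events at least once, so a retry after a failure can hand your code the same event twice. A common remedy is an idempotent consumer that remembers which event IDs it has already applied. The Python sketch below is a minimal, in-memory version with hypothetical event IDs; a production system would need to persist the processed IDs atomically with the side effect and bound how many it keeps.

```python
from dataclasses import dataclass, field


@dataclass
class IdempotentConsumer:
    """Applies each event's side effect once, even if the event is redelivered."""
    processed_ids: set = field(default_factory=set)
    balance: int = 0

    def handle(self, event_id: str, amount: int) -> bool:
        """Return True if the event was applied, False if it was a duplicate."""
        if event_id in self.processed_ids:
            return False  # redelivery after a retry: skip the side effect
        self.balance += amount
        self.processed_ids.add(event_id)
        return True


consumer = IdempotentConsumer()
# "evt-1" arrives twice, as can happen with at-least-once delivery.
deliveries = [("evt-1", 100), ("evt-2", -30), ("evt-1", 100)]
results = [consumer.handle(event_id, amount) for event_id, amount in deliveries]
print(results, consumer.balance)  # [True, True, False] 70
```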

What industries benefit the most from stream processing platforms?

Industries that benefit the most from stream processing platforms include banking, telecommunications, healthcare, and logistics.

Stream processing allows these industries to process large amounts of data quickly and accurately, enabling them to make better, more informed decisions in real time.

Are there any free or open-source alternatives to Confluent?

Confluent has several open-source alternatives in the market. Some examples of open-source alternatives to Confluent are:

- Apache Kafka

- Strimzi

- Apache Flink

- Apache Samza

Confluent alternatives like Quix, Aiven, Azure Event Hubs, and Amazon Kinesis offer free plans that you can try before picking a commercial solution for your use case.

Summary

Stream processing is a powerful way to process large volumes of data in real-time.

Confluent is a popular choice for stream processing, but several alternatives on the market offer additional features and benefits. Estuary Flow, AWS Kinesis, Aiven, Strimzi, Azure Event Hubs, Quix, and Apache Flink are some of the top solutions to consider when evaluating stream processing platforms.

If your organization is held back by a data engineering bottleneck, with less technical stakeholders blocked from contributing by a high barrier to entry, or if you manage continuous processing workflows with tools like Flink, Spark, or Google Cloud Dataflow and you’d like a faster alternative that scales to your liking, then you should opt for Estuary Flow.

It integrates with an ecosystem of free, open-source connectors that extract data from the sources of your choice and deliver it with low latency to the destinations of your choice. This lets you replicate that data to various systems in real time for both operational and analytical purposes.

For more comparison guides, check out these related blogs:

Confluent Kafka Vs. Apache Kafka Vs. Estuary: 2024 Comparison

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
