Change data capture (CDC) is a crucial component of data management and analytics. Its ability to identify and capture changes made in a database is a vital factor for many processes like data replication, data warehousing, and data integration. For implementing change data capture, Kafka has emerged as a powerful tool.

But implementing CDC with Apache Kafka isn’t always straightforward. There are challenges in understanding the complex architecture, selecting the right tools for implementation, and successfully deploying and maintaining the CDC service.

Today’s guide has been specially curated to address these issues and simplify the implementation of change data capture with Apache Kafka. We will cover the fundamentals of Change Data Capture and how it works with Apache Kafka. We will also introduce you to Debezium, a distributed platform that enables CDC with Kafka, and Estuary Flow, our tool designed to streamline your CDC pipeline.

By the end of this guide, you will not only understand how to implement CDC using Apache Kafka but also learn how to select the right tool for your specific needs.

What Is Apache Kafka?

Apache Kafka is a powerful, open-source event-streaming platform you can use to handle real-time data feeds reliably. It was originally developed at LinkedIn and later open-sourced. According to the Apache Kafka project, more than 80% of Fortune 100 companies use it for their streaming data.

Core Functionalities Of Apache Kafka

Kafka handles data streams seamlessly through a handful of key functions. These include:

- Publish: Kafka collects data streams from data sources. It then publishes or sends these data streams to specific topics that categorize the data.

- Consume: On the other side, Kafka allows applications to subscribe to these topics to process the data that is flowing into them. This ensures that each application has access to the data it needs when it needs it.

- Store: Lastly, Kafka stores data reliably. It can distribute data across multiple nodes to ensure high availability and prevent data loss.

To make all this happen, Kafka relies on several different parts. These include:

- Brokers: These are the servers in Kafka that hold event streams. A Kafka cluster usually has several brokers.

- Topics: Kafka organizes and stores streams of events in named categories called topics.

- Producers: These are the entities that write events to Kafka. They determine which topics they write to and manage how events are assigned to partitions within a topic.

- Consumers: On the other hand, consumers are the entities that read events from Kafka. They read records in order within each partition and manage their own position (offset) in a topic, so they can rewind to re-read earlier events.

- Partitions: Topics are split up into partitions which are like sub-folders within a topic folder. This allows the work of storing and processing messages to be shared among many nodes in the cluster.
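To make the relationship between producers, keys, and partitions concrete, here is a minimal Python sketch of key-based partition assignment. It is a simulation only: `assign_partition` and `publish` are hypothetical helpers, and the CRC32 hash is a stand-in chosen for illustration (Kafka's own default partitioner uses a different hash function). The principle it shows is the same, though: events that share a key land in the same partition, which preserves their relative order.

```python
import zlib
from collections import defaultdict


def assign_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index.

    CRC32 is an illustrative stand-in; it is NOT the hash that
    Kafka's default partitioner uses.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def publish(events, num_partitions=3):
    """Group (key, value) events into per-partition lists, preserving order."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[assign_partition(key, num_partitions)].append((key, value))
    return dict(partitions)


events = [
    ("customer-1", "created"),
    ("customer-2", "created"),
    ("customer-1", "updated"),
]
topic = publish(events)
```

Both `customer-1` events end up in one partition, in the order they were published, while other keys may be spread across the remaining partitions.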

Change Data Capture (CDC): The Basics

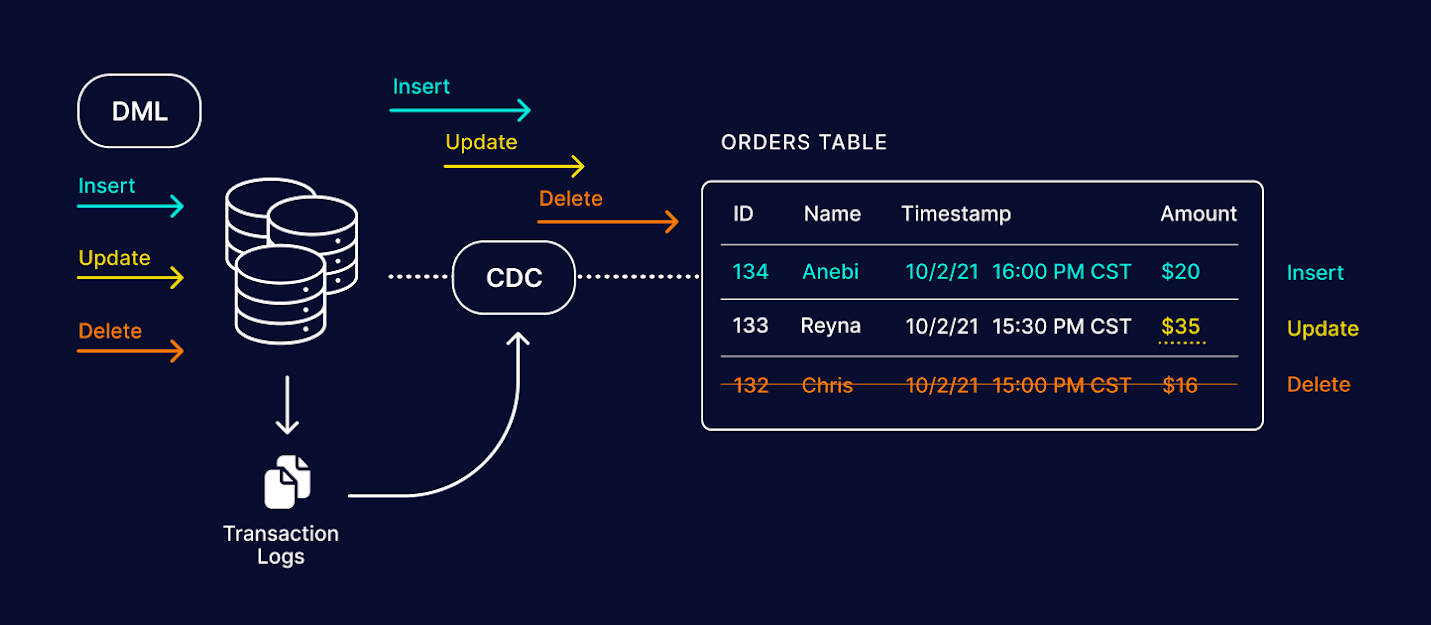

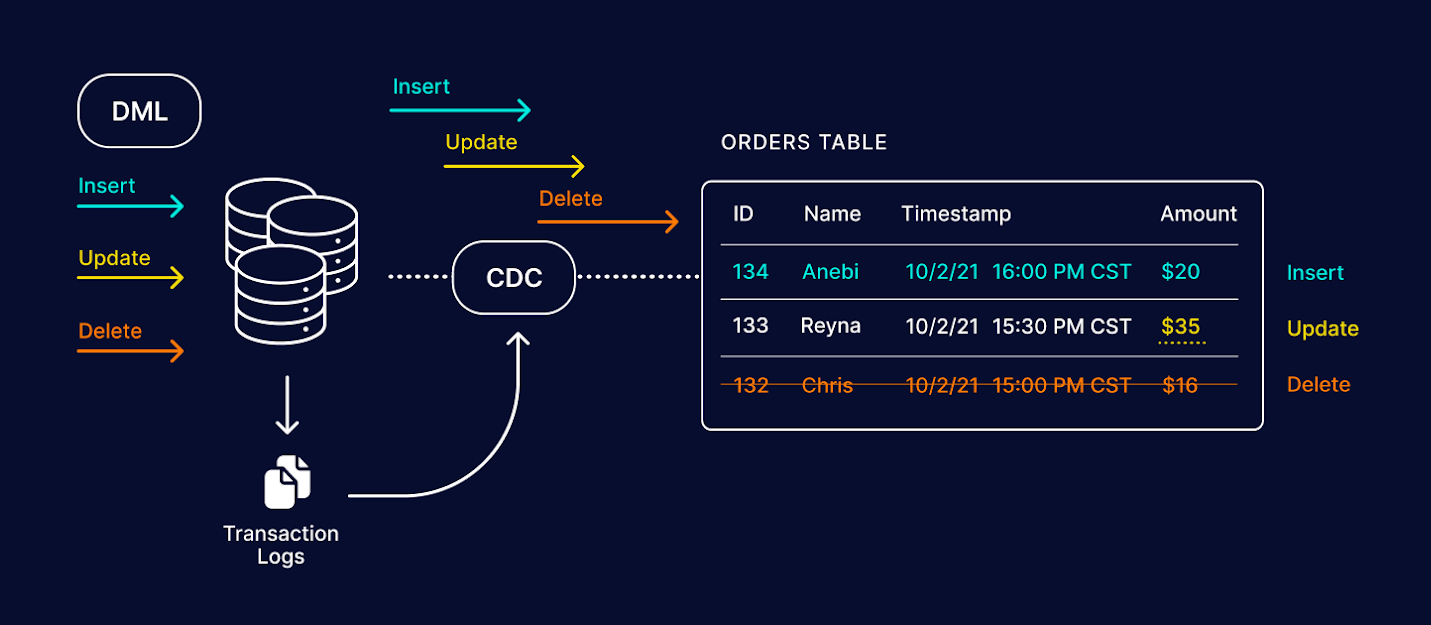

At its core, Change Data Capture (CDC) is the process of identifying and capturing changes in a database and delivering those changes in real time to a downstream system. In your database, data changes constantly: new data comes in, and old data gets updated or removed. CDC keeps tabs on these changes as they happen.

So how does CDC actually work? It follows 3 simple steps:

- First, CDC keeps a lookout on your database for any insertions, updates, or deletions.

- When a change does happen, CDC takes note. It captures that change including what was changed and when it happened.

- Once captured, the changes are then stored in a target data storage system.
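The three steps above can be sketched in a few lines of plain Python. This is a toy model under stated assumptions: `ChangeEvent`, `capture_changes`, and `apply_event` are hypothetical names, and changes are detected by comparing two in-memory snapshots, which is a simplification (real CDC tools run queries or read the database log instead).

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ChangeEvent:
    op: str              # "insert", "update", or "delete"
    key: int             # primary key of the affected row
    row: Optional[dict]  # new row contents; None for deletes
    captured_at: datetime


def capture_changes(before: dict, after: dict) -> list:
    """Steps 1 and 2: detect inserts, updates, and deletes between two states."""
    now = datetime.now(timezone.utc)
    events = []
    for key, row in after.items():
        if key not in before:
            events.append(ChangeEvent("insert", key, row, now))
        elif before[key] != row:
            events.append(ChangeEvent("update", key, row, now))
    for key in before:
        if key not in after:
            events.append(ChangeEvent("delete", key, None, now))
    return events


def apply_event(target: dict, event: ChangeEvent) -> None:
    """Step 3: deliver a captured change to the target store."""
    if event.op == "delete":
        target.pop(event.key, None)
    else:
        target[event.key] = event.row


source_before = {1: {"name": "Ada"}, 2: {"name": "Grace"}}
source_after = {1: {"name": "Ada L."}, 3: {"name": "Edsger"}}

target = dict(source_before)
captured = capture_changes(source_before, source_after)
for event in captured:
    apply_event(target, event)
```

After the loop, `target` matches `source_after`: the target has been brought up to date by applying only the changes, not by copying the whole dataset.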

Next, let’s look at how the process of CDC works with Apache Kafka.

Leveraging Change Data Capture With Apache Kafka

Kafka is fundamentally designed to handle streaming data and can effectively turn databases into real-time sources of information. Here’s why Kafka excels at CDC:

- Kafka stores data for a configurable period which makes it possible to access historical change data. This storage capability enables advanced analytics and auditing operations.

- Kafka provides robustness and reliability. With CDC, you can capture data change and ensure no data is lost. Kafka’s ability to distribute data across multiple nodes means a high level of data durability and reliability.

- Kafka’s publish-subscribe system is ideally suited for CDC. The events in CDC, which are simply the changes in data, are in themselves a stream of information that can be published and consumed. With Kafka’s capabilities, data changes are transmitted in real-time, enabling applications to respond to changes as they happen.

But not all Kafka CDC implementations are the same. Let’s talk about this next.

2 Types Of Kafka CDC

Broadly, two types of CDC can be carried out using Apache Kafka: query-based and log-based. Let’s go through both.

Query-Based Kafka CDC

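In query-based CDC, changes are identified by periodically running a query against the database, typically one that filters on a timestamp or an incrementing ID column. Confluent's JDBC source connector for Kafka Connect, for instance, uses this technique to turn new and updated rows into a stream of structured Kafka messages. Because a query only sees rows that still exist, this approach generally misses hard deletes.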
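As a rough illustration of the query-based approach, the sketch below polls a table for rows whose `updated_at` value is newer than the last one seen. Everything here is an assumption made for the example: the `customers` table, its columns, the integer timestamps, and the `poll_changes` helper. It uses an in-memory SQLite database so it runs without any setup.

```python
import sqlite3


def poll_changes(conn, last_seen):
    """Return rows modified after `last_seen`, plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    ).fetchall()
    new_mark = rows[-1][2] if rows else last_seen
    return rows, new_mark


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"
)
conn.execute("INSERT INTO customers VALUES (1, 'Ada', 100)")
conn.execute("INSERT INTO customers VALUES (2, 'Grace', 105)")

first_batch, mark = poll_changes(conn, last_seen=0)

# An update bumps updated_at, so the next poll picks it up.
conn.execute("UPDATE customers SET name = 'Ada L.', updated_at = 110 WHERE id = 1")
# A hard delete leaves no row behind, so the polling query cannot see it.
conn.execute("DELETE FROM customers WHERE id = 2")

second_batch, mark = poll_changes(conn, mark)
```

The second poll returns only the updated row. The deleted row simply disappears from the results, which is the main limitation of polling with a query.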

Log-Based Kafka CDC

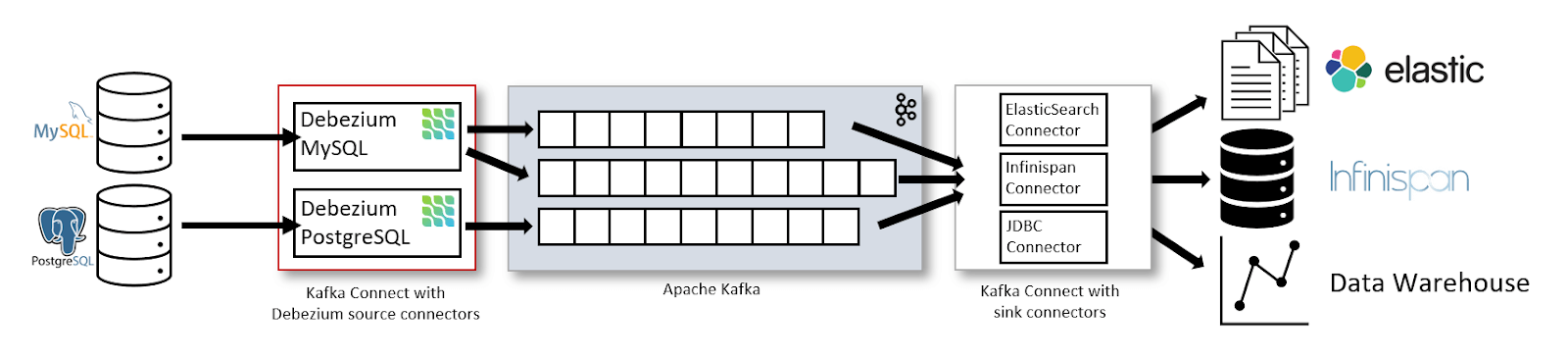

In log-based CDC, a connector reads changes from the database's own log, such as MySQL's binary log or SQL Server's transaction log. It is an efficient method because the changes are tracked automatically by the database system itself. The Debezium connectors use this approach to capture and stream database changes.
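The idea behind log-based capture can be shown with a toy append-only log and a reader that remembers its position. This is only a sketch: the list-based `transaction_log`, the entry layout, and the `read_log` helper are invented for illustration and do not reflect any real database's log format.

```python
def read_log(log, position):
    """Return entries appended at or after `position`, plus the next position."""
    entries = log[position:]
    return entries, position + len(entries)


# A toy, append-only transaction log. A real database writes this itself.
transaction_log = [
    {"op": "insert", "table": "customers", "id": 1, "row": {"name": "Ada"}},
    {"op": "insert", "table": "customers", "id": 2, "row": {"name": "Grace"}},
]

batch_1, position = read_log(transaction_log, 0)

transaction_log.append(
    {"op": "update", "table": "customers", "id": 1, "row": {"name": "Ada L."}}
)
transaction_log.append({"op": "delete", "table": "customers", "id": 2, "row": None})

# Resuming from the saved position yields only the new entries, deletes included.
batch_2, position = read_log(transaction_log, position)
```

Because every change is an entry in the log, the reader sees deletes as well as inserts and updates, and it can resume from its saved position after a restart.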

To implement these methods, you need to utilize Kafka Connect, a tool specifically designed to link Kafka with various data systems. This is where Kafka Connectors come into play. There are 2 types of connectors in Kafka Connect:

- Source Connectors: These source connectors work as the link between the database and Kafka. They extract the change data, whether it’s via query-based or log-based CDC, and publish it to Kafka as an event stream.

- Sink Connectors: Sink connectors take this event stream from Kafka and deliver it to a destination system. These could be anything from another database to a big data analytics tool, depending on what you need to do with the change data.

Meet Debezium: The Kafka-Enabled CDC Solution

Debezium is an open-source distributed platform designed for CDC. Its primary role is to monitor and record all the row-level changes occurring in your databases in real time and transfer them to Apache Kafka or some other messaging infrastructure.

It can be configured to work with many different databases, in which it tails the transaction logs and produces a stream of change events. This lets applications react to data changes no matter where they originate from.

The core strength of Debezium is its ability to enhance the efficiency and real-time capabilities of the CDC in various environments. This makes it a flexible and comprehensive solution for capturing and streaming data changes.

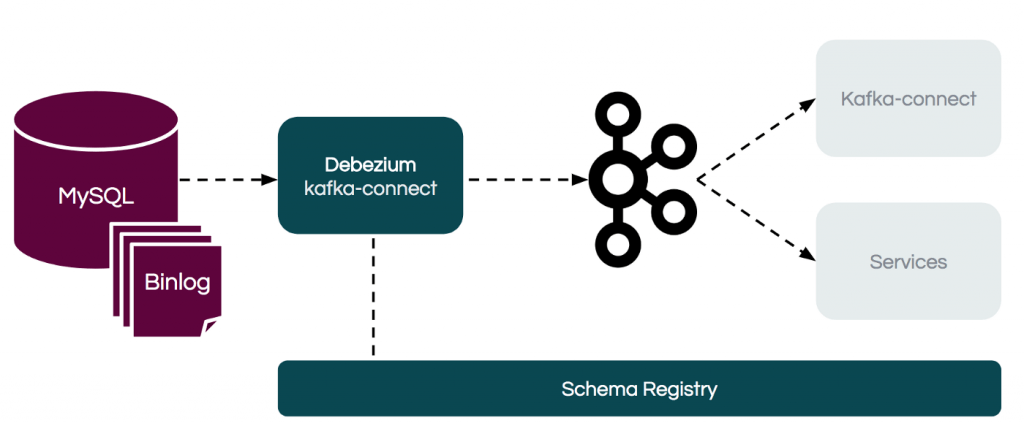

The Debezium Architecture & Its Implementation

Debezium architecture revolves around the central concept of ‘connectors’. These connectors can be configured with a source database of your choice where they can tail its transaction log, detect changes, and produce a stream of change events.

What Are Connectors In Debezium?

Debezium currently ships connectors for various databases, including MySQL, PostgreSQL, SQL Server, Oracle, Db2, and MongoDB.

In essence, Debezium is just a collection of connectors, without a central entity controlling them. Each connector encompasses Debezium’s logic for change detection and conversion into events.

While the connectors are different, they produce events with very similar structures, simplifying the consumption and response to events by your applications regardless of the change origin.
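To show what that shared structure looks like in practice, here is a small Python sketch that reads a Debezium-style change event. The fields `before`, `after`, `source`, `op`, and `ts_ms` are part of Debezium's event envelope; the `summarize_event` helper and the sample event are hypothetical, and the sample is trimmed (real events carry more source metadata).

```python
import json

OPERATIONS = {"c": "create", "u": "update", "d": "delete", "r": "read (snapshot)"}


def summarize_event(raw_value: str) -> dict:
    """Extract the table, operation, and row images from a change event value."""
    event = json.loads(raw_value)
    # With schemas enabled in the JSON converter, the fields sit under "payload".
    payload = event.get("payload", event)
    return {
        "table": payload["source"]["table"],
        "operation": OPERATIONS.get(payload["op"], payload["op"]),
        "before": payload.get("before"),
        "after": payload.get("after"),
    }


# Illustrative, trimmed event; real events carry more source metadata.
sample = json.dumps({
    "before": {"id": 1, "name": "Ada"},
    "after": {"id": 1, "name": "Ada L."},
    "source": {"connector": "mysql", "db": "inventory", "table": "customers"},
    "op": "u",
    "ts_ms": 1700000000500,
})

summary = summarize_event(sample)
```

The same function would work for an event from a different Debezium connector, since the envelope fields it reads are common across them.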

Deploying Debezium: 3 Main Choices

Debezium offers 3 primary deployment choices. You can:

- Deploy it as a standalone server

- Embed it into your application as a library

- Utilize it as an Apache Kafka Connect service for enterprise use cases

Regardless of the chosen option, Debezium delivers its core functionality consistently.

Since our core focus here is CDC with Apache Kafka, let’s see how Debezium works with Kafka Connect.

Using Debezium With Kafka Connect

For enterprise use cases that require fault-tolerant storage, scalability, and high performance, Debezium should be deployed as a service on Apache Kafka Connect. Each Debezium connector is deployed as a separate Kafka Connect service, making Debezium a truly distributed system.

Once deployed, the connector transcribes the changes from each database table to a Kafka topic whose name is derived from the source table (by default, a configured prefix followed by the schema or database name and the table name). Other connectors within the Kafka Connect ecosystem then consume that topic to stream records to other systems, like relational databases, data warehouses, or data lakes.

Kafka Connect enhances Debezium with fault tolerance and scalability. It can schedule multiple connectors across numerous nodes. If a connector crashes, Kafka Connect will reschedule it, allowing it to resume operations.

Implementing CDC With Apache Kafka Using Debezium

You need to run several Apache services, install the right Debezium connectors and set up a Kafka consumer to build a real-time CDC pipeline using Kafka and Debezium. Here are the main steps you need to follow:

Step 0: Pre-requisites

Before getting started, make sure you have a running instance of Apache Kafka and your chosen database. You need at least a Kafka broker, plus a ZooKeeper server if your Kafka version runs in ZooKeeper mode rather than KRaft mode. For databases, the required services vary based on the database you're using.

Step 1: Running Apache Services

The first step is to start the ZooKeeper server (not needed if your Kafka cluster runs in KRaft mode) and the Kafka broker. ZooKeeper helps manage the distributed nature of the system. The Kafka broker, on the other hand, is the heart of the Kafka system and is responsible for receiving messages from producers, assigning offsets to them, and committing them to storage on disk.

Step 2: Installing Debezium

Next, install Debezium. Although there are other installation options, it is recommended to use it as a Kafka Connect service. For detailed installation steps, refer to the official Debezium documentation.

Step 3: Configuring Debezium Connector

Next, configure your Debezium connector. Provide the necessary details such as database host, port, username, password, and database server id. Also, don’t forget to include the Debezium connector jar in Kafka Connect’s classpath and register the connector with Kafka Connect.
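As a concrete but hedged example, the sketch below builds a Debezium MySQL connector definition and the HTTP request that would register it through the Kafka Connect REST API (`POST /connectors`). The hostnames, credentials, connector name, and topic names are placeholders, not values from this guide. The property names follow Debezium 2.x; older releases used `database.server.name` and `database.history.*` instead of `topic.prefix` and `schema.history.internal.*`, so check the documentation for your version.

```python
import json
import urllib.request

# Hypothetical address of a Kafka Connect worker's REST API.
CONNECT_URL = "http://localhost:8083/connectors"

connector = {
    "name": "inventory-connector",  # hypothetical connector name
    "config": {
        "connector.class": "io.debezium.connector.mysql.MySqlConnector",
        "database.hostname": "mysql.example.internal",  # placeholder
        "database.port": "3306",
        "database.user": "debezium",       # placeholder
        "database.password": "change-me",  # placeholder
        "database.server.id": "184054",    # must be unique among replication clients
        "topic.prefix": "inventory",
        "table.include.list": "inventory.customers",
        "schema.history.internal.kafka.bootstrap.servers": "kafka:9092",
        "schema.history.internal.kafka.topic": "schema-changes.inventory",
    },
}


def build_registration_request(definition: dict, url: str = CONNECT_URL):
    """Build (but do not send) the POST request that registers a connector."""
    return urllib.request.Request(
        url,
        data=json.dumps(definition).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_registration_request(connector)
# To actually register, with a Connect worker running:
# urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the example runnable without a live cluster and makes the payload easy to inspect or store in version control.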

Step 4: Starting Change Data Capture

Once the connector is configured and registered, it will start monitoring the database’s transaction log which records all database transactions. Any changes to the database will trigger the connector to generate a change event.

Step 5: Streaming Change Events To Kafka

Each change event generated by the Debezium connector is sent to a Kafka topic. The name of the topic is derived from the source table name, by default with a configured prefix and the schema or database name in front of it. The event consists of a key and a value. These are serialized into a binary form using the configured Kafka Connect converters.

Step 6: Creating A Downstream Consumer

You should now set up a downstream consumer to retrieve the data from the Kafka topics and store it in a relational database, data warehouse, or data lake.

Step 7: Consuming Change Events

The change events are consumed by applications or other Kafka Connect connectors from the corresponding Kafka topics. These events provide a real-time feed of all changes happening in your database so that your applications react immediately to these changes.
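One practical concern when consuming change events is that the same event may be delivered more than once, so the code that applies events should be safe to re-run. The sketch below is one way to do that, assuming Debezium-style payloads (`op`, `before`, `after`) and a hypothetical `customers` table in SQLite. The `apply_change` function is illustrative; in a real pipeline it would be called from your Kafka consumer loop or replaced by a sink connector.

```python
import sqlite3


def apply_change(conn, payload: dict) -> None:
    """Apply one change event to the `customers` table idempotently."""
    if payload["op"] == "d":
        conn.execute(
            "DELETE FROM customers WHERE id = ?", (payload["before"]["id"],)
        )
    else:  # "c" (create), "u" (update), or "r" (snapshot read)
        row = payload["after"]
        # INSERT OR REPLACE overwrites an existing row with the same primary key.
        conn.execute(
            "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)",
            (row["id"], row["name"]),
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

events = [
    {"op": "c", "before": None, "after": {"id": 1, "name": "Ada"}},
    {"op": "u", "before": {"id": 1, "name": "Ada"}, "after": {"id": 1, "name": "Ada L."}},
    {"op": "c", "before": None, "after": {"id": 2, "name": "Grace"}},
    {"op": "d", "before": {"id": 2, "name": "Grace"}, "after": None},
]

# Replaying the whole batch simulates redelivery; the end state is unchanged.
for payload in events + events:
    apply_change(conn, payload)

rows = conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
```

Keying writes on the primary key means a replayed batch converges to the same final table rather than producing duplicate rows.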

Step 8: Performance Tuning

To optimize your CDC setup, consider adjusting parameters such as batch size, poll interval, and buffer sizes based on the performance and resource utilization of your Kafka and Debezium setup.
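For Debezium connectors, these settings map to the `max.batch.size`, `max.queue.size`, and `poll.interval.ms` properties. The helper below is a hypothetical convenience, not part of Debezium: it copies a connector config, applies the overrides, and checks the documented recommendation that the queue be larger than the batch. The numbers are arbitrary examples, not tuning advice.

```python
def with_tuning(config: dict, batch_size: int, queue_size: int,
                poll_interval_ms: int) -> dict:
    """Return a copy of a connector config with throughput settings applied."""
    if queue_size <= batch_size:
        raise ValueError("max.queue.size should be larger than max.batch.size")
    tuned = dict(config)
    tuned.update({
        "max.batch.size": str(batch_size),
        "max.queue.size": str(queue_size),
        "poll.interval.ms": str(poll_interval_ms),
    })
    return tuned


base = {"connector.class": "io.debezium.connector.mysql.MySqlConnector"}
tuned = with_tuning(base, batch_size=4096, queue_size=16384, poll_interval_ms=250)
```

Larger batches and queues tend to raise throughput at the cost of memory, while a shorter poll interval lowers latency at the cost of more frequent polling, so it is worth changing one setting at a time and measuring.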

Step 9: Monitoring & Maintenance

For system health, monitor metrics such as latency, throughput, and error rates. Regular maintenance tasks include checking log files, verifying backups, and monitoring resource usage.
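One latency figure you can derive directly from the events themselves is capture lag. In a Debezium event, `source.ts_ms` records when the change was made in the database and the top-level `ts_ms` records when the connector processed it, so the difference approximates how far behind the connector is. The helpers below are a hypothetical sketch that assumes the database and connector clocks are reasonably in sync and that the batch is not empty.

```python
def capture_lag_ms(payload: dict) -> int:
    """Milliseconds between the database change and the connector processing it."""
    return payload["ts_ms"] - payload["source"]["ts_ms"]


def summarize_lag(payloads: list) -> dict:
    """Aggregate lag over a non-empty batch of events for a dashboard or alert."""
    lags = [capture_lag_ms(p) for p in payloads]
    return {"count": len(lags), "max_ms": max(lags), "avg_ms": sum(lags) / len(lags)}


# Trimmed, illustrative payloads containing only the timestamp fields.
batch = [
    {"ts_ms": 1700000000500, "source": {"ts_ms": 1700000000100}},
    {"ts_ms": 1700000001200, "source": {"ts_ms": 1700000001000}},
]
stats = summarize_lag(batch)
```

A steadily growing maximum lag is usually a sign that the connector or the Kafka cluster is not keeping up with the rate of change in the source database.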

Estuary Flow: Streamline Your CDC Pipeline

Estuary Flow is our DataOps platform engineered specifically to enable real-time data pipelines. This powerful tool offers our custom-built real-time CDC functionality which ensures scalability and ‘exactly-once’ semantics.

Flow supports many data storage systems and real-time SaaS integrations. It also integrates Airbyte connectors to access 200+ batch-based endpoints. This makes sure that you never miss out on any data.

What sets Estuary Flow apart is its:

- No Kafka Dependency: With Estuary Flow, you don’t have to worry about managing Kafka or compute resources. This frees up your team to focus on more pressing tasks.

- Gazette Streaming Framework: Built on the robust Gazette distributed pub-sub streaming framework, Estuary Flow efficiently handles your data, offering low latency and powerful transformations.

- Robust and Resilient Architecture: Flow is built to survive cross-region and data center failures, minimizing downtime. Its ‘exactly-once’ semantics guarantee transactional consistency and data integrity.

- Open-source and Managed Connectors: Estuary Flow boasts open-source connectors for each supported database, making it easy to pull data from write-ahead logs or transaction logs without overburdening the production database. Plus, the fully managed connectors offer a hands-off approach to real-time data ingestion.

- CLI and No-Code Web GUI: Flow serves users of all technical abilities with its command-line interface and no-code web GUI. The CLI is perfect for those comfortable with coding, allowing for powerful script automation and deep control. Alternatively, the no-code web GUI enables even non-coders to manage data pipelines effortlessly.

Estuary Flow vs. Debezium For CDC

Before deciding on a CDC tool, you need to evaluate options. So let’s take a closer look at how two CDC tools, Estuary Flow and Debezium, compare.

- Backfills: With Estuary Flow, backfilling data is a breeze. Any data backfill from a point in time to a specific consumer will be fully backfilled by default in a cloud data lake, with exactly-once semantics.

- Transformations: Estuary Flow facilitates more complex transformations than Debezium. It allows stateful joins or transformations in-flight using SQL and can handle both real-time and historical data.

- Data Delivery: Estuary Flow guarantees exactly-once data delivery. This ensures your data is processed accurately, without repetition. On the other hand, Debezium offers at-least-once delivery, which could lead to duplicate entries in your log and downstream consumers.

- Handling Large-scale Data Changes: Estuary Flow shines when it comes to processing large-scale data changes. It’s designed to avoid the common pitfalls that Debezium encounters, like running out of memory during the extraction of massive data from database logs.

- Schema Changes: Estuary Flow takes a proactive approach to schema changes. It validates incoming data against the defined JSON schema, flagging any discrepancies for the user. It can also manage schema changes and end-to-end migrations, reducing the manual workload.

Setting Up A Real-Time CDC Pipeline With Estuary Flow

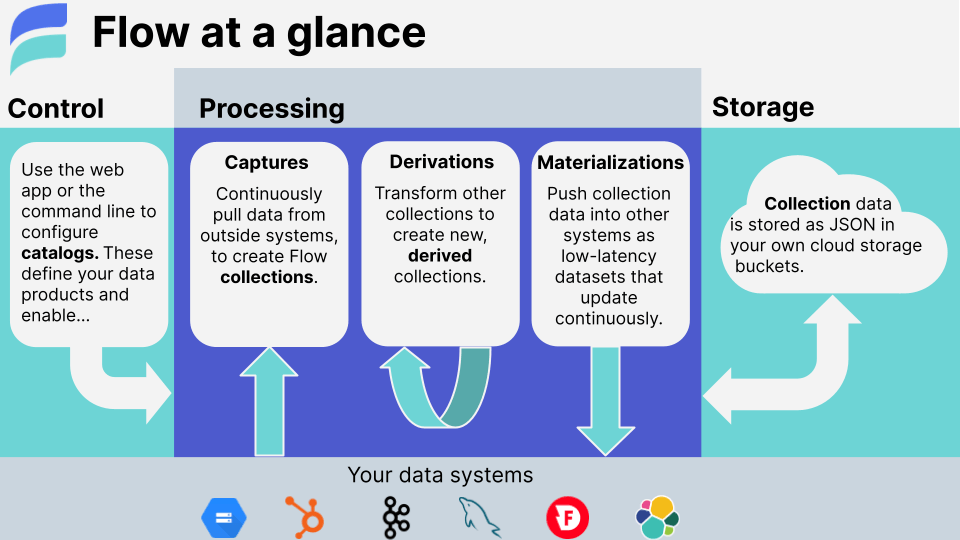

Getting a real-time CDC pipeline up and running with Estuary Flow can be broken down into 3 main steps:

- Create a Data Capture: Start by logging into Estuary’s dashboard where you’ll initiate a new capture. This process involves selecting your data source connector, providing necessary details, and specifying the data collections you want to include.

- Carry out Transformations: After your capture is set, you can create derived collections by applying transformations like aggregation, filtering, and joins to the data using TypeScript or SQL.

- Set up a Materialization: Finally, choose your data destination by selecting the appropriate connector. Provide necessary configuration details, and hit ‘Save and publish’.

Conclusion

Implementing change data capture with Kafka and Debezium provides powerful functionalities to make real-time data pipelines that capture changes in your databases. Debezium, especially, allows you to translate all your data changes into event streams with connectors designed to handle various database systems.

But if you’re looking for a more streamlined solution that not only takes care of your CDC requirements but also ensures efficient data operations, Estuary Flow is just the platform for you. Unlike a Debezium deployment on Kafka Connect, which requires you to run and manage Kafka, Flow has no such dependency.

If you are eager to see how Estuary Flow can make a difference to your data operations, you can start for free by signing up here, or get in touch with our team to discuss your specific requirements.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
