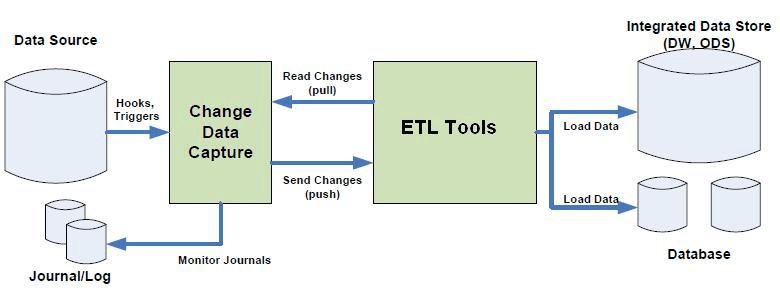

Traditional Extract, Transform, Load (ETL) processes, while effective in their own right, often fall short when it comes to real-time data integration and scalability. A CDC ETL integration identifies and captures data changes as they occur, eliminating the need for full data loads and providing near real-time updates.

But integrating ETL processes with CDC often comes with challenges like complexity in implementation, data consistency issues, and managing the performance of data pipelines. Addressing these challenges requires a clear understanding of CDC and ETL processes and knowledge of key steps needed for incorporating CDC into ETL workflows.

This guide is designed to do just that. In the next 10 minutes, you will learn what CDC and ETL really do, the steps to make them work well together, and the benefits of incorporating CDC in your ETL pipelines.

By the end of this guide, you’ll be all set to use CDC to optimize your ETL pipelines and make your data management more efficient and effortless.

Understanding Change Data Capture & How It Works

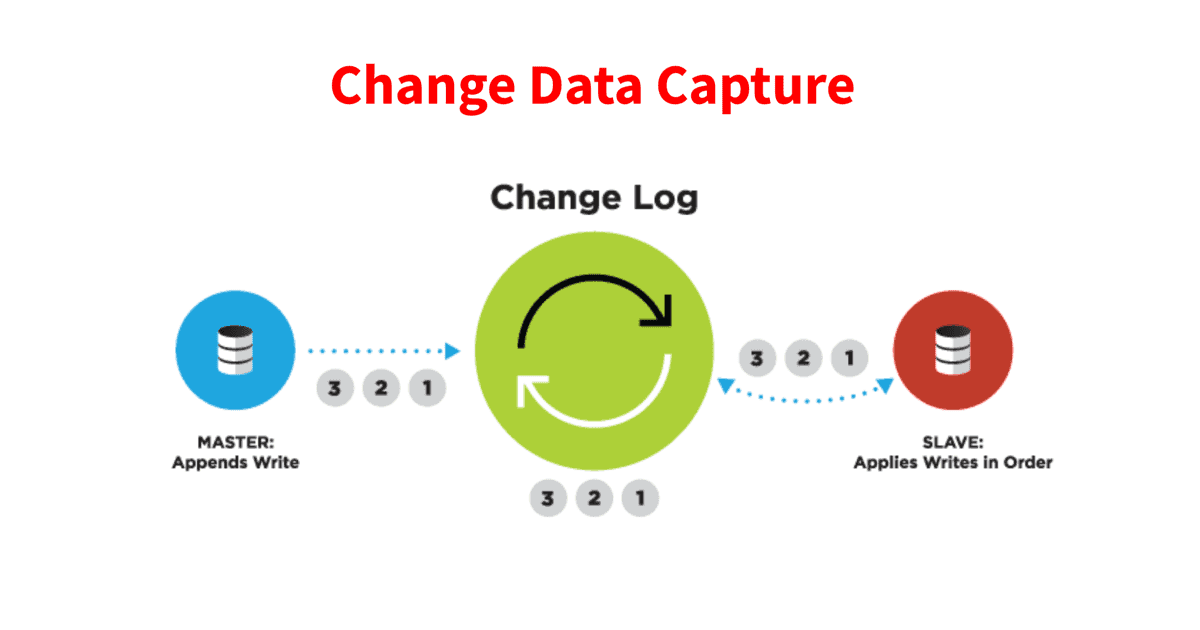

Change Data Capture (CDC) identifies and records the changes made to data in a source system. When a change is detected, CDC captures its details and delivers them to the data warehouses, data lakes, or other systems that consume the data.

CDC helps keep your data up-to-date for business analytics and decision-making. Traditional methods of data integration, like batch processing, can struggle to keep up with the constant flow of changes. CDC, on the other hand, operates in near real time, so downstream data stays current even when changes are frequent.

How Does CDC Work?

Now let’s break down how CDC operates:

- Change Detection: CDC tools monitor your database. In a log-based setup, they watch the transaction log, where the database records every change, and pick up each change as it is written.

- Change Recording: Once a change is detected, the CDC tool captures the details. It records what was changed, how it was changed, and when it was changed.

- Change Delivery: After recording the change, the CDC tool reflects it in your data warehouse or other systems that you use.
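The three stages above can be sketched in a few lines of Python. This is an illustration only: the `ChangeEvent` shape, the in-memory `log`, and the `deliver` helper are hypothetical stand-ins, not the format of any particular CDC tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One recorded change: what changed, how, and when."""
    op: str              # "insert", "update", or "delete"
    key: int             # primary key of the affected row
    row: Optional[dict]  # new row contents (None for deletes)
    ts: float            # when the change was committed


def deliver(log, target, offset=0):
    """Apply every event at or after `offset` to `target`; return the new offset."""
    for event in log[offset:]:
        if event.op == "delete":
            target.pop(event.key, None)
        else:  # insert and update both store the latest row image
            target[event.key] = event.row
    return len(log)


# Hypothetical change log, standing in for what a CDC tool would record.
log = [
    ChangeEvent("insert", 1, {"name": "Ada"}, 1.0),
    ChangeEvent("update", 1, {"name": "Ada L."}, 2.0),
    ChangeEvent("insert", 2, {"name": "Alan"}, 3.0),
    ChangeEvent("delete", 2, None, 4.0),
]
warehouse = {}
offset = deliver(log, warehouse)
```

Keeping the returned offset lets the next run resume where the last one stopped instead of replaying the whole log.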

CDC isn’t a one-size-fits-all process. There are different methods to capture changes in data. Let’s take a look at the most common ones:

- Table Delta CDC: This method uses a tool or script to compare the data in two tables and spot the differences.

- Timestamp-based CDC: This method relies on timestamp columns that record when each row was created and last modified. The CDC tool queries for rows whose timestamps are newer than its previous run. Deleted rows leave no timestamp behind, so this method cannot detect hard deletes by itself.

- Log-based CDC: This method involves reading the transaction logs of the source database. The transaction log contains a complete list of all data changes in their exact order of application. The CDC tool reads this log to track all changes.

- Trigger-based CDC: In this method, database triggers are used to identify changes. For every insert, update, and delete operation, a trigger is fired, capturing the change. The trigger then writes the changes into another table, typically called a shadow or staging table.
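To make the trigger-based method concrete, here is a minimal sketch using SQLite through Python's built-in `sqlite3` module. The `customers` table, the `customers_changes` shadow table, and the trigger names are made up for illustration; a production database would use its own trigger syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

-- Shadow table that accumulates one row per captured change.
CREATE TABLE customers_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT NOT NULL,
    id INTEGER NOT NULL,
    email TEXT
);

CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('insert', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('update', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('delete', OLD.id, NULL);
END;
""")

# Ordinary writes against the source table fire the triggers.
conn.execute("INSERT INTO customers VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

changes = conn.execute(
    "SELECT op, id, email FROM customers_changes ORDER BY change_id"
).fetchall()
```

Every write to `customers` now costs an extra write to the shadow table, which is the main overhead of this approach.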

Each method has its strengths and weaknesses. Some are easier to implement, while others offer more flexibility. The choice of method depends on your specific needs and the nature of your data. However, log-based CDC is often regarded as the most efficient method, especially for large volumes of data, because it reads changes the database already records and adds little extra load to the source.
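One such trade-off is easy to demonstrate. The sketch below polls a hypothetical `orders` table by its `updated_at` column (integer timestamps, chosen for readability) and shows that an update is picked up while a delete goes unnoticed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 100), (2, "new", 100)]
)


def poll_changes(conn, last_seen):
    """Return rows modified after `last_seen` plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at, id",
        (last_seen,),
    ).fetchall()
    new_mark = max((r[2] for r in rows), default=last_seen)
    return rows, new_mark


first_batch, mark = poll_changes(conn, 0)  # initial load sees both rows

conn.execute("UPDATE orders SET status = 'shipped', updated_at = 200 WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 2")  # leaves no timestamp behind

second_batch, mark = poll_changes(conn, mark)  # only the update is visible
```

After the second poll the target would still hold order 2, even though the source no longer does. Log-based and trigger-based capture avoid this gap because they record the delete itself.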

Understanding The ETL Process

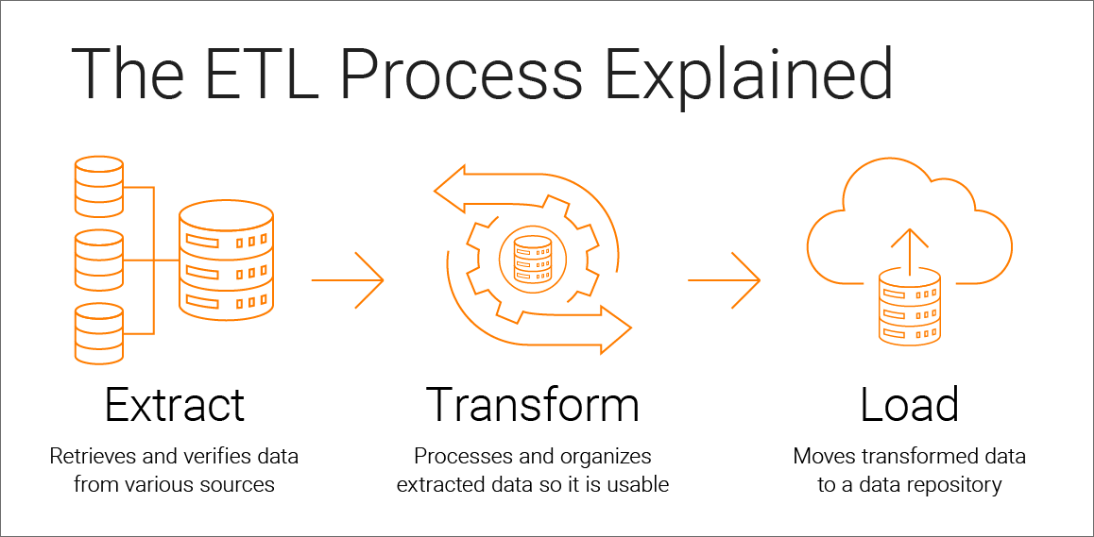

ETL, short for Extract, Transform, and Load, is a fundamental process in data management. It’s crucial for businesses that rely on data analysis to uncover insights, identify trends, and detect patterns that guide strategic decision-making.

It’s a structured procedure that involves 3 separate stages. Let’s understand each of these and talk about the common challenges you might face when you set up ETL in your data system.

Key Stages Of The ETL Process

The ETL process comprises 3 main stages:

Extraction

The first step in the ETL process is to extract data. Here, data is collected from various sources which could include databases, CRM systems, files, and more. The objective is to gather all the necessary data for further processing.

Transformation

After extraction, the raw data is transformed. This stage involves cleaning, validating, and modifying the data to ensure it’s in the right format and quality for analysis. Standardization, removal of duplicates, and addressing inconsistencies are all parts of this stage.

Loading

The final stage is loading where the transformed data is stored in a target system or data warehouse. This data is then ready for analysis and reporting.
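As a rough illustration of the three stages, here is a small batch pipeline in Python. The CSV sample, the `users` table, and the cleaning rules are invented for this example; real pipelines would read from live sources and apply their own rules.

```python
import csv
import io
import sqlite3

RAW_CSV = """id,email,signup_date
1, Ada@Example.com ,2024-01-05
2,alan@example.com,2024-02-10
2,alan@example.com,2024-02-10
3,,2024-03-01
"""


def extract(source_text):
    """Extract: read raw records from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(source_text)))


def transform(records):
    """Transform: normalize emails, drop rows without an email, drop duplicates."""
    seen, clean = set(), []
    for rec in records:
        email = rec["email"].strip().lower()
        if not email or rec["id"] in seen:
            continue
        seen.add(rec["id"])
        clean.append((int(rec["id"]), email, rec["signup_date"]))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), warehouse)
loaded = warehouse.execute("SELECT id, email FROM users ORDER BY id").fetchall()
```

Note that every run of this pipeline re-reads the entire source, which is the cost that CDC is meant to reduce.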

Common Challenges In ETL

The ETL process, while crucial, is not without its challenges. Some of the common issues include:

- Ensuring the quality of extracted data

- Optimizing the performance of the data pipeline

- Handling the integration of data from diverse sources

- Dealing with inefficiencies caused by unoptimized code

- Mitigating the impact of network latency on data transfer

- Adapting to the evolving needs and expectations of data consumers

- Addressing resource constraints that can slow down ETL workloads

Why Use CDC In Your ETL Processes

As we discussed, traditional ETL processes, while essential, have their limitations. They can be resource-intensive and slow, and they can’t provide real-time data updates. Change Data Capture can help overcome these challenges and significantly enhance the efficiency and effectiveness of your ETL process.

Let’s discuss how CDC addresses the limitations of traditional ETL processes:

Resource Efficiency

Traditional ETL processes often involve processing entire databases or tables which can be resource-intensive. CDC, on the other hand, focuses only on the changes, reducing the amount of data that needs to be processed. This reduces database load and speeds up data integration.
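The difference in volume is easy to see with a toy example. The sketch below compares two hypothetical snapshots of a 1,000-row table and counts how many rows a full reload would move versus how many actually changed. The table contents and the `table_delta` helper are assumptions made for illustration.

```python
def table_delta(old, new):
    """Compare two snapshots keyed by primary key and return only the differences."""
    inserts = {k: v for k, v in new.items() if k not in old}
    deletes = [k for k in old if k not in new]
    updates = {k: v for k, v in new.items() if k in old and old[k] != v}
    return inserts, updates, deletes


# Hypothetical snapshots taken before and after a handful of changes.
before = {i: {"qty": 1} for i in range(1000)}
after = dict(before)
after[7] = {"qty": 5}      # one update
after[1000] = {"qty": 2}   # one insert
del after[42]              # one delete

inserts, updates, deletes = table_delta(before, after)
rows_full_reload = len(after)
rows_incremental = len(inserts) + len(updates) + len(deletes)
```

Here a full reload moves 1,000 rows while the incremental path moves 3. Comparing snapshots like this still reads both tables in full, which is one reason log-based capture tends to be preferred at scale.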

Real-time Updates

With traditional ETL, data updates are typically batch-processed, so the warehouse may not reflect the most recent changes. CDC supports continuous data integration, delivering each change from the database to the data warehouse shortly after it occurs.

Handling Complex Tasks

Traditional ETL processes can struggle with complex tasks and diverse data sources. Modern Change Data Capture tools, however, are designed to handle these complexities. They can stream data from a wide range of sources in different formats and even provide in-flight data correlation, all without the need for manual coding.

Data Quality Assurance

CDC also helps with the quality of extracted data. Because it moves individual changes rather than repeatedly re-extracting whole tables, it reduces the risk of the duplicated or missed records that can creep into traditional ETL loads. This results in more accurate and reliable data in your warehouse.
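Duplicates can still appear if the same change event is delivered more than once, for example after a retry. A minimal sketch of one common safeguard follows, assuming each event carries a sequence number that increases per key. The `seq` field and the `apply_once` helper are hypothetical and not taken from any specific tool.

```python
def apply_once(target, applied_seq, event):
    """Apply `event` unless its sequence number was already applied for that key."""
    key, seq = event["key"], event["seq"]
    if seq <= applied_seq.get(key, -1):
        return False  # duplicate or stale delivery: skip it
    if event["op"] == "delete":
        target.pop(key, None)
    else:
        target[key] = event["row"]
    applied_seq[key] = seq
    return True


target, applied_seq = {}, {}
events = [
    {"seq": 1, "op": "insert", "key": "sku-1", "row": {"price": 10}},
    {"seq": 2, "op": "update", "key": "sku-1", "row": {"price": 12}},
    {"seq": 2, "op": "update", "key": "sku-1", "row": {"price": 12}},  # redelivered
    {"seq": 1, "op": "insert", "key": "sku-1", "row": {"price": 10}},  # stale replay
]
results = [apply_once(target, applied_seq, e) for e in events]
```

Because redelivered and stale events are skipped, replaying part of the stream leaves the target unchanged.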

Now that we know the benefits of using CDC with ETL processes, let’s discuss how you can achieve this integration to unlock new possibilities in data management and drive informed decision-making.

How To Incorporate CDC In The ETL Process: 6 Steps

Adding CDC to your ETL processes takes careful planning that accounts for the intricacies of your data ecosystem. The integration should ensure that CDC not only captures changes efficiently but also works in step with your ETL pipeline to maintain data integrity and system performance.

The aim is to create a streamlined and dynamic environment where data changes are seamlessly captured and integrated into your data warehouse, enhancing the reliability and timeliness of your data analytics. Let’s discuss the steps for effectively implementing CDC within your ETL process:

Step 1: Assess Your Current Data Architecture

Start by assessing your current data architecture and ETL processes. Understand where data is coming from, where it’s going, and how it’s currently managed. This will give you a better understanding of how CDC can be incorporated into your existing workflows.

Step 2: Choose The Right CDC Tool Or Solution

There are various CDC tools and solutions available in the market, like Estuary Flow, Debezium, or AWS DMS. Evaluate the tools based on your specific requirements, like scalability, support for different databases, performance, etc., and choose the one that best fits your needs.

Step 3: Set Up Your Database For CDC

Next, configure your source database for CDC. This involves setting up necessary user privileges, enabling CDC at the database level, and specifying which tables or columns you want to monitor. Remember to allocate adequate disk space for the CDC logs as these can grow quickly depending on the volume of data changes.
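What this looks like depends on the database. As one example, the sketch below builds the kind of statements a DBA might run to prepare PostgreSQL for log-based capture through logical replication. PostgreSQL is an assumption here, and the role, password, and publication names are placeholders. Your CDC tool's documentation is the authority on what it actually requires.

```python
def postgres_cdc_setup(role, password, publication, tables):
    """Build SQL statements that prepare PostgreSQL for log-based CDC."""
    table_list = ", ".join(tables)
    return [
        # Logical decoding needs wal_level = logical (takes effect after a restart).
        "ALTER SYSTEM SET wal_level = logical;",
        # A dedicated login role with replication privileges.
        f"CREATE ROLE {role} WITH REPLICATION LOGIN PASSWORD '{password}';",
        # Read access to the monitored tables.
        f"GRANT SELECT ON {table_list} TO {role};",
        # A publication that scopes capture to specific tables.
        f"CREATE PUBLICATION {publication} FOR TABLE {table_list};",
    ]


statements = postgres_cdc_setup(
    role="cdc_reader",               # hypothetical role name
    password="change-me",            # placeholder, not a real secret
    publication="cdc_publication",   # hypothetical publication name
    tables=["public.orders", "public.customers"],
)
```

The helper only formats strings, so pass it trusted identifiers. Listing specific tables in the publication is how capture is limited to the tables you want to monitor.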

Step 4: Integrate CDC With Your ETL Pipeline

After your database is ready for CDC, it is time to integrate the CDC process into your ETL pipeline. Configure your ETL tool to accept data from the CDC solution and apply transformations if necessary. For example, you might need to use an appropriate CDC connector for your database.
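Here is a sketch of what the receiving side might do, using SQLite as a stand-in for the target. The event shape (`op`, `id`, `after`) is loosely modeled on what CDC connectors tend to emit but is an assumption, as are the `customers` table and the `transform` rule.

```python
import sqlite3


def transform(row):
    """Example transformation: normalize the email and derive a domain column."""
    email = row["email"].strip().lower()
    return {"id": row["id"], "email": email, "domain": email.split("@")[-1]}


def apply_changes(conn, events):
    """Apply a batch of change events to the target table in one transaction."""
    with conn:  # commits on success, rolls back on error
        for event in events:
            if event["op"] == "delete":
                conn.execute("DELETE FROM customers WHERE id = ?", (event["id"],))
            else:
                row = transform(event["after"])
                conn.execute(
                    "INSERT OR REPLACE INTO customers (id, email, domain) "
                    "VALUES (?, ?, ?)",
                    (row["id"], row["email"], row["domain"]),
                )


target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, domain TEXT)"
)

# Hypothetical events in commit order.
events = [
    {"op": "insert", "id": 1, "after": {"id": 1, "email": " Ada@Example.com"}},
    {"op": "insert", "id": 2, "after": {"id": 2, "email": "alan@example.org"}},
    {"op": "update", "id": 1, "after": {"id": 1, "email": "ada@newmail.com"}},
    {"op": "delete", "id": 2},
]
apply_changes(target, events)
rows = target.execute(
    "SELECT id, email, domain FROM customers ORDER BY id"
).fetchall()
```

Inserts and updates share one upsert path, so the loader does not need to know whether a row already exists in the target.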

Step 5: Test Your Implementation

Test your CDC implementation to ensure that it’s correctly capturing and processing changes. Set up a testing environment that mimics your production data infrastructure and create test cases that cover various scenarios.
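One simple test is a reconciliation check that compares the source and target after a batch of changes has been replayed. The sketch below fingerprints a table by row count and checksum. The `orders` table and helper names are illustrative, and both sides are SQLite databases purely to keep the example self-contained.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, checksum) for a table, reading rows in key order."""
    # `table` and `key` are trusted identifiers here; never interpolate user input.
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def make_db(rows):
    """Create an in-memory database holding the given orders rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn


source = make_db([(1, "shipped"), (2, "new")])
in_sync_target = make_db([(1, "shipped"), (2, "new")])
stale_target = make_db([(1, "new"), (2, "new")])  # missed an update

source_fp = table_fingerprint(source, "orders", "id")
matches = source_fp == table_fingerprint(in_sync_target, "orders", "id")
drifted = source_fp != table_fingerprint(stale_target, "orders", "id")
```

A row count alone would not catch the stale target here, since both tables hold two rows. The checksum is what reveals the missed update.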

Step 6: Monitor & Optimize Performance

After deploying your CDC-enhanced ETL process, continuously monitor its performance. This will help you understand if there are any bottlenecks or performance issues. Based on this analysis, you may need to optimize your CDC configurations, ETL transformations, or data-loading processes.
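Replication lag is a useful first metric to watch. A minimal sketch, assuming you can obtain the commit time of the newest source change and of the newest change applied to the target. The thresholds below are arbitrary placeholders, not recommendations.

```python
from datetime import datetime, timedelta, timezone


def replication_lag(last_source_commit, last_applied_commit):
    """Lag = how far the target trails the newest change committed at the source."""
    return max(last_source_commit - last_applied_commit, timedelta(0))


def check_lag(lag, warn_after=timedelta(seconds=30), alert_after=timedelta(minutes=5)):
    """Classify lag against thresholds (the defaults are placeholders)."""
    if lag >= alert_after:
        return "alert"
    if lag >= warn_after:
        return "warn"
    return "ok"


now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
healthy = check_lag(replication_lag(now, now - timedelta(seconds=2)))
slow = check_lag(replication_lag(now, now - timedelta(seconds=45)))
stalled = check_lag(replication_lag(now, now - timedelta(minutes=10)))
```

Lag that grows steadily usually points to a bottleneck in transformation or loading rather than in capture, which helps narrow down where to optimize.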

Now that we know how to incorporate CDC in the ETL process, let's turn our attention to an invaluable tool, Estuary Flow, and see how we can use it to effortlessly establish an ETL pipeline enriched with CDC capabilities.

Using Estuary Flow To Easily Set Up An ETL Pipeline With CDC

When setting up your ETL pipelines with CDC, choose a solution that offers CDC data ingestion and replication on a single platform, which simplifies both setup and ongoing management. Estuary Flow is built for exactly this job.

Flow is our powerful tool that quickly creates and manages CDC and ETL pipelines. It provides an impressive suite of features that empower you to move and transform your data in real time, sometimes in milliseconds.

With Flow, you can capture data from various sources, like cloud platforms, databases, and SaaS apps. It offers CDC connectors for in-demand databases like MySQL, PostgreSQL, and MS SQL Server.

Estuary Flow also provides other essential features including automated schema management, data deduplication, and a fault-tolerant architecture with real-time functionalities.

Now let’s see how easy it is to set up an ETL pipeline with CDC using Estuary Flow.

Step 1: Taking Care Of The Prerequisites

Before you start capturing data, make sure that your database meets the prerequisites needed for Flow CDC connectors. These prerequisites differ based on the database you are using. You can find the detailed steps in Flow’s documentation for connectors.

Step 2: Capturing CDC Data

The next step is to configure Flow to capture change data from your database. To do this:

- Start by signing up for Estuary Flow and logging into the dashboard.

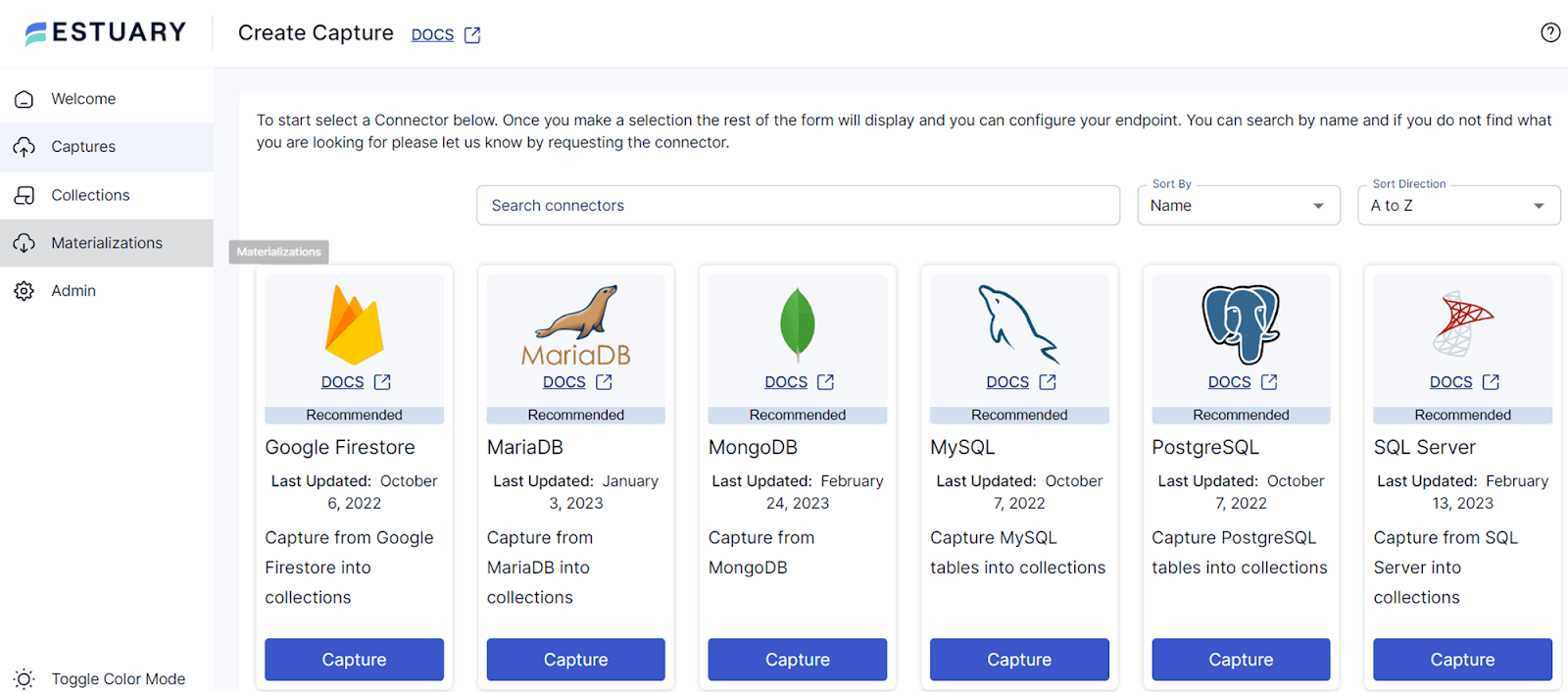

- In the “Captures” tab, click on the “New Capture” option and select the CDC connector for your database.

- Enter the connection details and credentials for your database.

- Flow will then show you a list of tables in your database. Select the tables you want to track and capture data from, name your new capture, and click “Save and Publish”.

Step 3: Creating Transformations

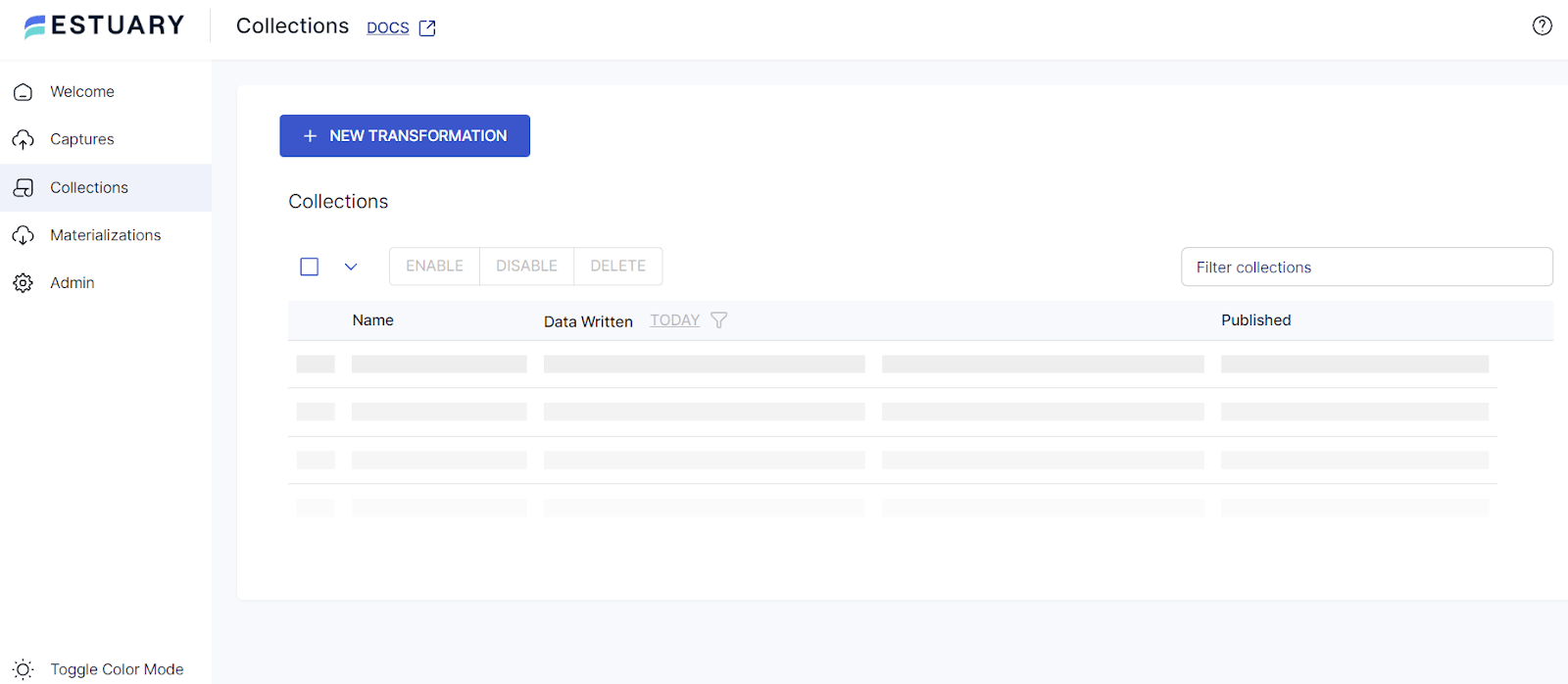

Once data is captured, move on to the transformation stage. Go to the “Collections” tab, click on “New Transformation”, choose the source collections you want to transform, and write the transformation logic. In Flow, you can employ streaming SQL or TypeScript for transformations.

Step 4: Materializing Data To A Destination

Finally, to move the transformed data to a destination, you need to set up a materialization. Use the following steps to set up a materialization in Flow:

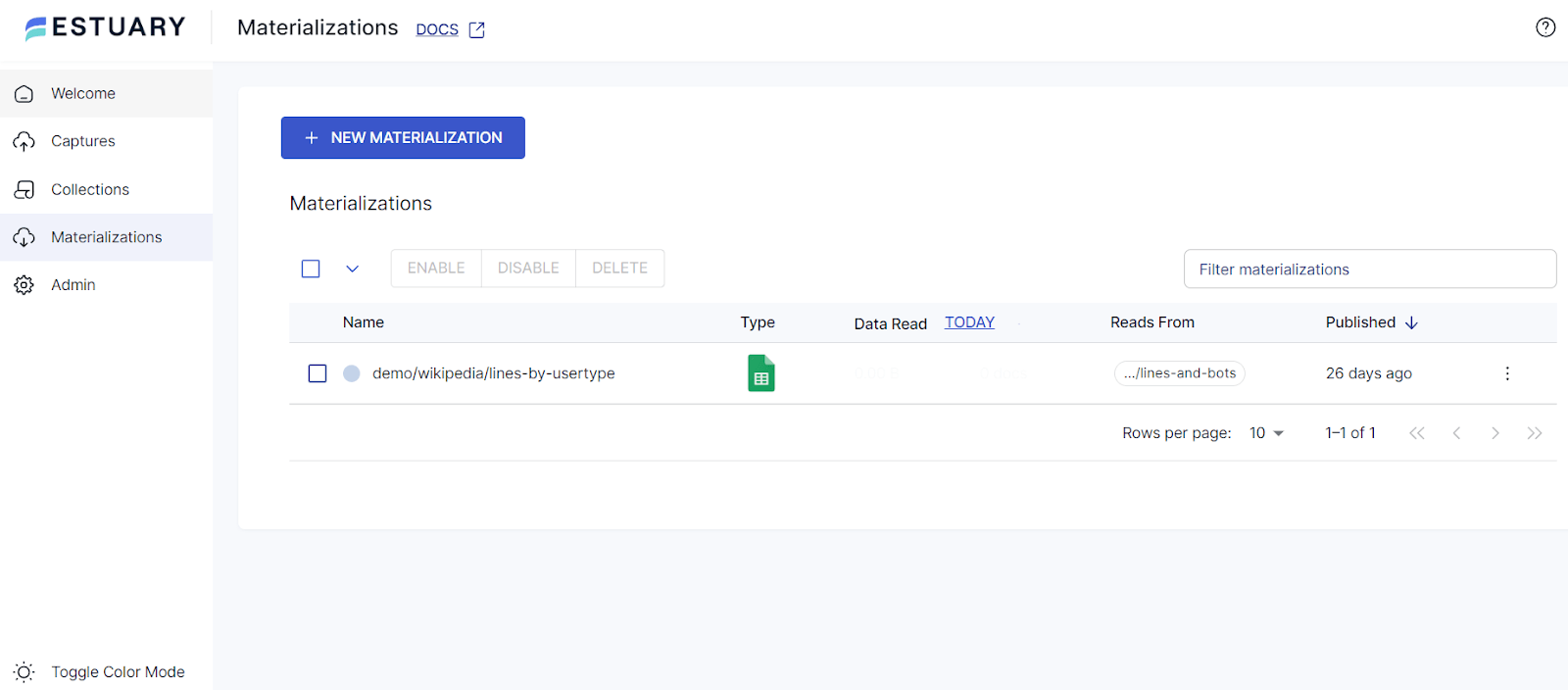

- Select the “Materializations” tab and select the “New Materialization” option.

- Next, select the appropriate connector for the data destination you want to use.

- Configure the connector, and click “Save and Publish”.

Step 5: Monitoring & Management

After setting up the pipeline, monitor its status and performance through the dashboard. Make adjustments to configurations or add new transformations if necessary.

Conclusion

Using Change Data Capture and ETL processes together to build CDC-ETL pipelines is a must for creating flexible and efficient data systems. These pipelines are especially important for real-time analytics because they speed up data processing by minimizing the resources needed to handle massive data sets.

Nevertheless, combining CDC and ETL can be complex and demands careful planning. Here, Estuary Flow can be really useful. It significantly reduces the complexity of CDC-ETL integration, making it easier to roll out ETL pipelines with CDC functionality. This gives you more time to focus on data insights without worrying about complicated configurations.

So if you want to take advantage of the benefits of integrating CDC into your ETL process, choosing Estuary Flow is a no-brainer. Take the first step by signing up for a free Estuary account, or reach out to our team to discuss your particular requirements.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.