As organizations continue to grow and adapt, reliable and efficient data extraction becomes increasingly crucial. One challenge for data engineers is finding the best Change Data Capture (CDC) method to capture data from MySQL reliably.

This is where things can get complex, given the many extraction techniques and CDC methods to choose from. Not all teams have the knowledge and skills to implement CDC effectively, which leads to inefficient extraction methods and data integrity problems.

In this guide, we'll explore various methods of capturing data from MySQL. We'll also discuss how to use Estuary Flow, our data integration platform, to streamline capturing and streaming data from MySQL databases.

By the time you finish this guide, you'll understand both basic data extraction strategies and advanced CDC mechanisms for real-time data updates.

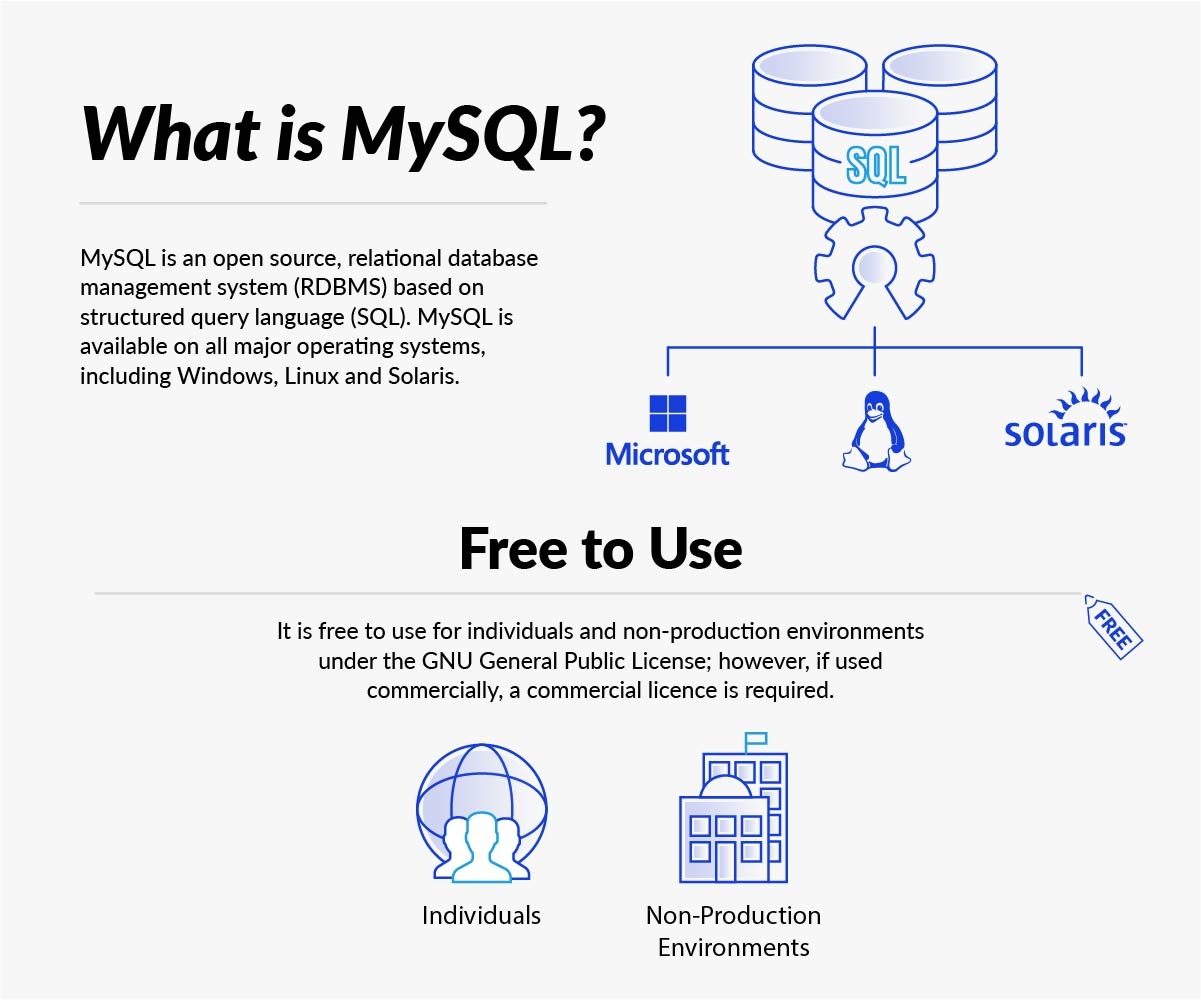

What Is MySQL?

MySQL is an open-source relational database management system (RDBMS) known for its high performance, reliability, and rich feature set. It is built on a client-server model and is a favorite of developers and businesses all around the world.

MySQL is versatile because it allows multiple users to access and work with data at the same time. The MySQL server runs as a separate process that handles database tasks, while clients connect to it to query and modify data. This architecture lets MySQL scale to projects of very different sizes, from small web apps to massive enterprise systems.

Features Of MySQL

MySQL has earned its reputation as a popular choice for database management thanks to the features it offers. Some of the most notable are:

- Open-source: Tweak the source code to fit your unique needs.

- Scalable: Handles everything from small-scale data to big-time enterprise operations.

- Character sets support: Works with many character sets, such as latin1 and Unicode, along with language-specific collations (for example, German).

- Strong community support: Get help and resources from a dedicated community of developers and users.

- Data Types: Offers a wide range of data types such as INTEGER, VARCHAR, TIMESTAMP, and more.

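- Large databases support: Handles large databases; the MySQL manual mentions deployments with 50 million records and users running 200,000 tables. These are examples from real deployments, not upper limits.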

- Cross-platform compatibility: Runs smoothly on different operating systems like Windows, macOS, Linux, and UNIX.

Benefits Of MySQL

Apart from its impressive feature set, MySQL also provides numerous advantages that make it a top choice for developers and businesses alike. Some key benefits include:

- Flexibility: Works with a wide range of applications, programming languages, and frameworks.

- Secure: Offers solid security features, such as privilege-based access control and encrypted connections, to protect your data.

- Ease of use: Jump right into MySQL with just basic SQL knowledge.

- Frequent updates: Keeps up with new features and security improvements.

- Fast and reliable: Provides speedy data access and consistent performance.

With such an extensive range of features and benefits, MySQL is an excellent platform for managing your data.

Now, let's dive into how you can leverage its capabilities for Change Data Capture (CDC) in MySQL.

Benefits Of Change Data Capture (CDC) In MySQL

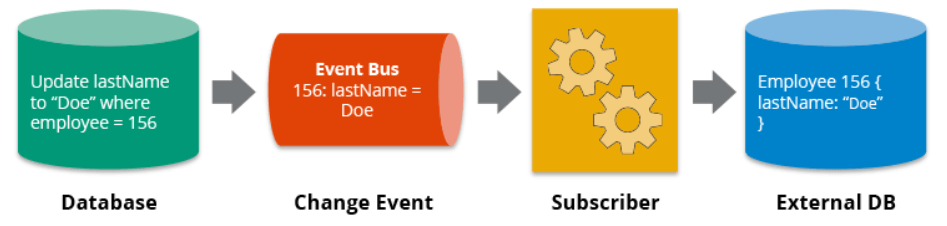

CDC in MySQL offers a real-time, efficient alternative to traditional batch-based methods. It monitors your database and replicates changes (such as INSERT, UPDATE, and DELETE operations) to downstream systems like a data warehouse. Using CDC in MySQL brings several benefits for managing your data:

- Decreased load on systems: Because CDC captures and processes only the rows that changed, rather than re-reading entire tables, it reduces the load on both source and target systems.

- Improved data consistency: CDC helps ensure that all your data-dependent applications and processes work with accurate, up-to-date information.

- Real-time data synchronization: Keeps downstream systems like data warehouses and analytics platforms always updated with the latest data. This helps businesses make smarter decisions based on current information.

- Simplified implementation: Makes it easy to implement audit logs, change tracking, and event-driven data pipelines without modifying application code. This saves time and reduces errors.

- Enhanced recovery options: CDC comes to the rescue when recovering from system failures or data corruption. By continuously capturing changes, it lets you restore data up to the point of failure, minimizing data loss and keeping data integrity intact.

As we have now gone through the basic information on CDC and MySQL, let’s put this knowledge to use and explore the most prominent CDC methods next.

4 Change Data Capture (CDC) Methods For Capturing Changes In MySQL

When it comes to keeping your data in sync and up-to-date, you need a reliable and efficient method for capturing changes in your MySQL database. Let's discuss 4 popular techniques for change data capture.

Using Estuary Flow

Estuary Flow is our cloud-based data integration platform that lets you easily export data from SQL databases like MySQL, even if you don't have extensive SQL experience. It connects a variety of source and destination systems through open-source connectors and provides an event-driven runtime for true, real-time CDC.

When you use the MySQL source connector, Flow acts as a log-driven, real-time CDC pipeline.

Here are some major use cases:

- Streaming data to target systems with millisecond latency.

- Syncing data in real-time between source and destination systems.

- Automatically backing up data collections to a cloud-based data lake.

Let's talk about some advantages of Flow as a MySQL CDC pipeline:

- No extra tools for transformations: Perform aggregations, joins, and stateful stream transformations right within Flow.

- Minimized backfill impact: Flow writes checkpoints to avoid performance impacts and errors like data duplication during backfills.

- Excellent customization: Use GitOps workflow or UI to add data sources and destinations or modify data streams without the help of an engineer.

Implementation Process

Excited to try Flow? Here's how to get started. In the following example, we'll use Estuary Flow to capture data from a self-hosted MySQL database. Start by making sure the following prerequisites are in place:

- The binlog_format system variable set to ROW (the default value)

- A binary log expiration period (binlog_expire_logs_seconds) of MySQL's default 30 days (2592000 seconds), and no less than 7 days

- A watermarks table (default name: "flow.watermarks")

- A database user with the appropriate permissions (created in the steps below)

- If you capture tables with DATETIME columns, the time_zone system variable set to an IANA zone name or numerical offset
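To verify the prerequisites above before connecting Flow, you could read the relevant server variables (for example, with SHOW VARIABLES) and check them programmatically. The following is a minimal Python sketch, assuming you have already collected the variables into a dictionary of name-to-string values. The check_flow_prereqs function is a hypothetical helper of our own, not part of Flow, and it treats a binlog_expire_logs_seconds value of 0 as "no automatic expiration".

```python
from __future__ import annotations

# Hypothetical helper: checks server variables against the Flow MySQL
# prerequisites listed above. `variables` maps variable names to string
# values, e.g. rows collected from SHOW VARIABLES.
SEVEN_DAYS_SECONDS = 7 * 24 * 60 * 60  # 604800


def check_flow_prereqs(variables: dict[str, str], has_datetime_columns: bool) -> list[str]:
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []

    # Row-based logging records every changed row in the binary log.
    if variables.get("binlog_format", "").upper() != "ROW":
        problems.append("binlog_format must be ROW")

    # Retention should be at least 7 days. A value of 0 is treated here
    # as "no automatic expiration", which satisfies the check.
    raw_expire = variables.get("binlog_expire_logs_seconds")
    if raw_expire is None:
        problems.append("binlog_expire_logs_seconds is not set")
    elif 0 < int(raw_expire) < SEVEN_DAYS_SECONDS:
        problems.append("binlog_expire_logs_seconds is below 7 days")

    # DATETIME values carry no zone, so the server zone must be explicit.
    if has_datetime_columns and variables.get("time_zone", "SYSTEM").upper() == "SYSTEM":
        problems.append("time_zone must be an IANA zone name or numerical offset")

    return problems
```

For example, passing {"binlog_format": "ROW", "binlog_expire_logs_seconds": "86400", "time_zone": "SYSTEM"} with has_datetime_columns=True reports two problems: the retention period and the time zone.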

Next, let's discuss the detailed steps to achieve CDC:

- Ensure prerequisites are met: Check your MySQL database configuration.

- Create watermarks table: Use these SQL commands:

```sql
CREATE DATABASE IF NOT EXISTS flow;

CREATE TABLE IF NOT EXISTS flow.watermarks (slot INTEGER PRIMARY KEY, watermark TEXT);
```

- Create flow_capture user: Set up a user with the right permissions, like this:

```sql
-- Replace 'secret' with a strong password.
CREATE USER IF NOT EXISTS flow_capture
  IDENTIFIED BY 'secret'
  COMMENT 'User account for Flow MySQL data capture';

GRANT REPLICATION CLIENT, REPLICATION SLAVE ON *.* TO 'flow_capture';
GRANT SELECT ON *.* TO 'flow_capture';
GRANT INSERT, UPDATE, DELETE ON flow.watermarks TO 'flow_capture';
```

- Configure MySQL binary log and time zone (if not already set):

```sql
SET PERSIST binlog_expire_logs_seconds = 2592000;
-- Example offset; use your server's IANA zone name or offset.
SET PERSIST time_zone = '-05:00';
```

- Integrate with Flow: Connect your MySQL database to the Flow platform and set up the MySQL source connector.

- Monitor and tweak: Keep an eye on your Flow data collections and adjust your CDC pipeline as needed.

At this point, you can connect the data captured from MySQL to a variety of destinations by adding a materialization. With Flow, your self-hosted MySQL data synchronization will be smoother and more efficient than ever before.

Binary Logs In MySQL

Binary logs are a series of log files that record all the changes made to the database. They're important for tasks like data recovery, replication, and CDC.

Some common use cases are:

- Analyzing changes in data over time.

- Copying data from a master server to a replica server.

Each event in binary logs represents a single database modification. By processing these events, you can accurately replicate changes made in your MySQL database. Most real-time CDC tools (like Flow) work by connecting to the binary log. But you can also create your own solution by accessing the binary log directly.
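Conceptually, replaying binary log events means applying each row change, in log order, to the target. The Python sketch below models that with a hypothetical RowEvent type and an in-memory dictionary standing in for the target table. It assumes every table has a primary key and that events have already been decoded from the binary log (a real pipeline would use a binlog client library or mysqlbinlog for that step).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RowEvent:
    """Hypothetical decoded row event: one INSERT, UPDATE, or DELETE."""
    op: str                      # "insert", "update", or "delete"
    key: int                     # primary key of the affected row
    after: dict | None = None    # row image after the change (None for deletes)


@dataclass
class Replica:
    """In-memory stand-in for a target table, keyed by primary key."""
    rows: dict[int, dict] = field(default_factory=dict)

    def apply(self, event: RowEvent) -> None:
        if event.op in ("insert", "update"):
            # Upsert: applying the same event twice leaves the same state.
            self.rows[event.key] = dict(event.after or {})
        elif event.op == "delete":
            # pop() with a default tolerates deleting an already-missing row.
            self.rows.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")


def replay(events: list[RowEvent]) -> Replica:
    """Apply events strictly in binlog order; reordering could corrupt state."""
    replica = Replica()
    for event in events:
        replica.apply(event)
    return replica
```

Treating inserts and updates as upserts keeps replay idempotent, which matters when a pipeline restarts and re-reads events it has already applied.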

Using binary logs for MySQL CDC offers several advantages, as highlighted below:

- Data recovery: Helps recover data after a crash or accidental deletion.

- Low overhead: Binary logs need minimal resources for recording and processing changes.

- Real-time replication: Captures changes as they happen, allowing for real-time data synchronization.

However, using binary logs for MySQL CDC also comes with some drawbacks:

- Log growth: Binary log files can grow large so you need to manage and store them properly.

- Potential performance impact: Writing to logs may affect database performance, especially with a high volume of transactions.

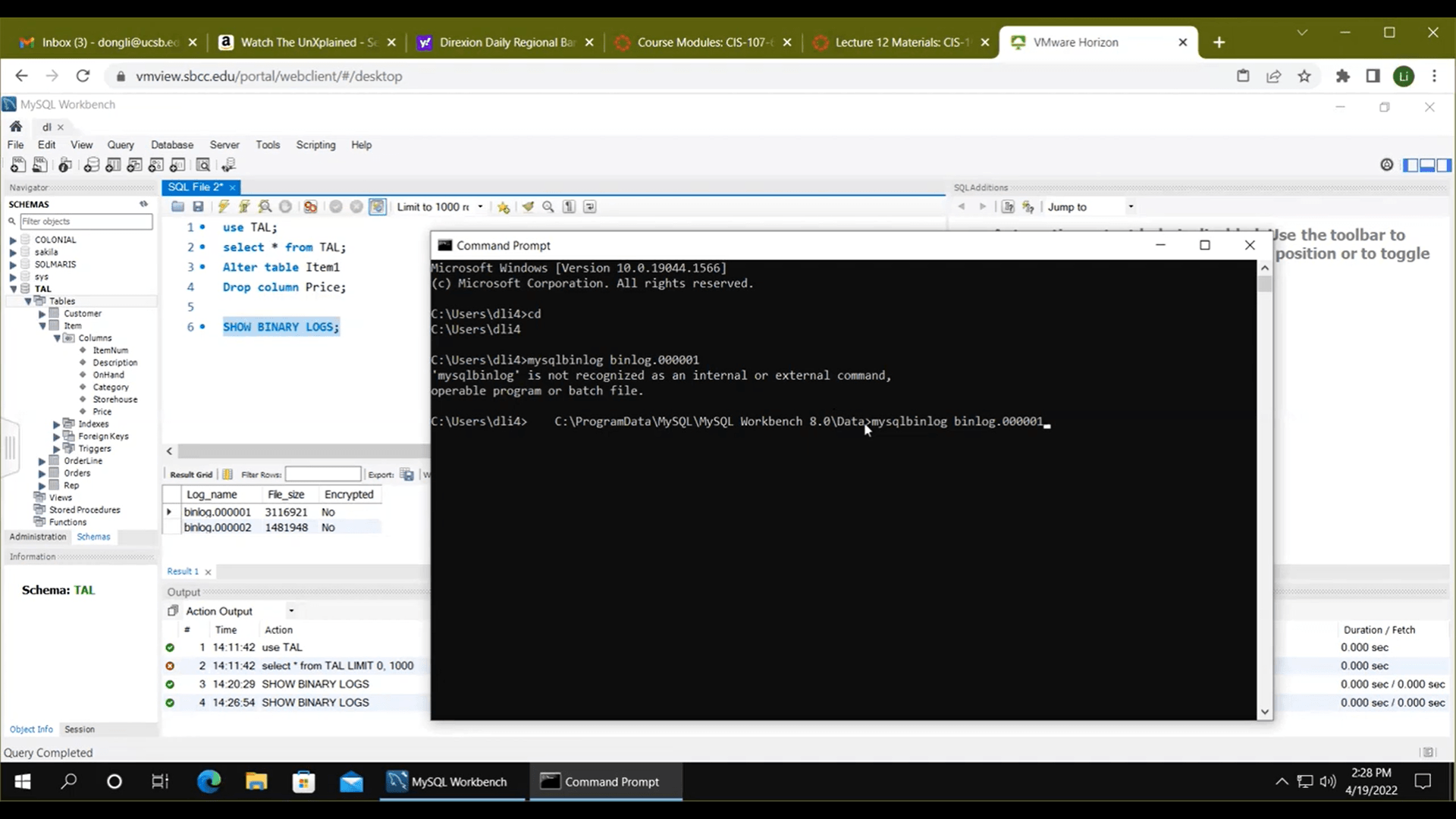

Implementation Process

To set up MySQL binary logs for CDC, just follow these simple steps:

- Enable binary logging: Edit the MySQL configuration file (my.cnf or my.ini) by adding these lines under the [mysqld] section:

```ini
[mysqld]
log-bin = mysql-bin
server_id = 1
# Row-based logging is required for CDC (already the default in recent versions).
binlog_format = ROW
```

Set server_id to a unique integer for each server involved in replication.

- Restart MySQL: Apply the changes by giving your MySQL server a quick restart.

- Monitor log files: You'll find binary logs in the data directory of your MySQL server. Use the SHOW BINARY LOGS; command to list the binary log files.

- Process binary logs: Use tools like mysqlbinlog to process binary logs and extract change events. Apply these events to the target system to keep it up-to-date.

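Don't forget to manage your binary log files: rotate or purge them regularly (for example, by setting binlog_expire_logs_seconds or running PURGE BINARY LOGS) to avoid storage issues, but only after your CDC process has read them.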

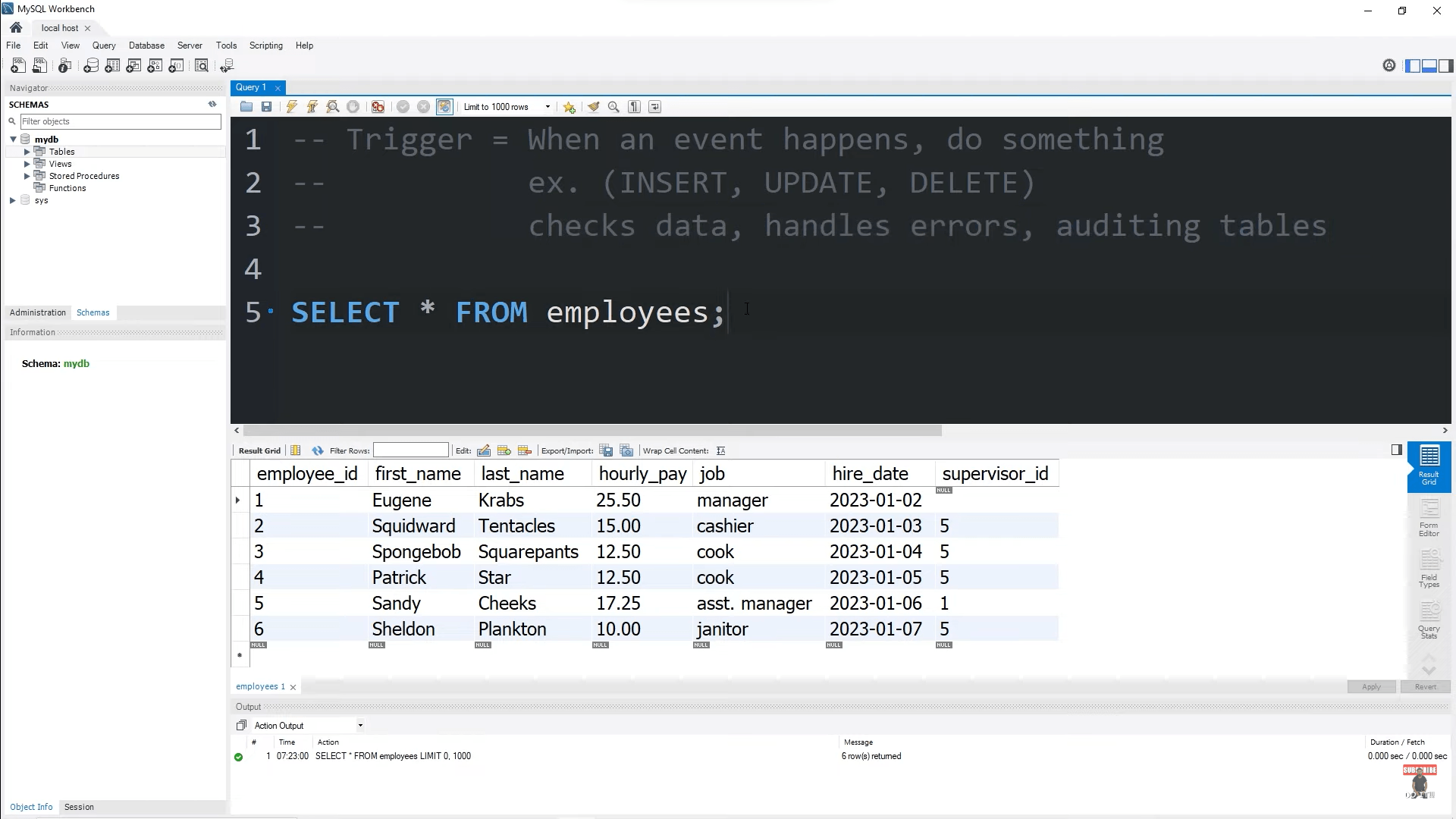

MySQL Triggers

MySQL triggers are automatic actions that occur in response to specific events like INSERT, UPDATE, or DELETE. They help maintain data consistency and integrity, automating tasks that would otherwise be manual. For instance, you might use a trigger to update an inventory count whenever a new order is placed.

Triggers can be great for audit logging and data validation. By using triggers, you can:

- Track changes in a MySQL table.

- Maintain referential integrity between tables in a database.

- Enforce specific constraints, such as preventing negative values in a "quantity" field.

Triggers in MySQL CDC have some major advantages:

- Triggers are perfect for audit logging and tracking changes.

- They ensure data consistency and integrity by automating actions.

- Triggers help enforce business rules and maintain referential integrity.

Despite their benefits, triggers used for MySQL CDC also have some limitations, such as:

- Debugging and troubleshooting can be challenging with triggers.

- They can introduce performance overhead and slow down database operations.

- Triggers may cause unintended side effects if not designed and implemented carefully.

Implementation Process

First, you need to identify the specific event you want the trigger to respond to, such as INSERT or DELETE. Next, decide when the trigger should fire: before or after the event. Here's a simple example of how to create a trigger:

```sql
DELIMITER //

CREATE TRIGGER update_inventory
AFTER INSERT ON orders
FOR EACH ROW
BEGIN
    UPDATE inventory
    SET quantity = quantity - NEW.quantity_ordered
    WHERE product_id = NEW.product_id;
END //

DELIMITER ;
```

This trigger updates the inventory count after a new order is placed. It fires after an INSERT event on the orders table and updates the inventory table accordingly.

Don't forget to test the trigger to ensure it works as intended and always document your triggers to make maintenance easier for other developers.
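The inventory trigger enforces a business rule; for CDC, triggers typically copy each change into a separate change (audit) table that a downstream process reads. Below is a minimal, runnable sketch of that pattern in Python. To keep it self-contained, it uses the standard library's sqlite3 module as a stand-in for MySQL, so the trigger syntax is SQLite's; in MySQL you would write one AFTER INSERT, AFTER UPDATE, and AFTER DELETE trigger per table in the same way, using NEW and OLD. The table names products and products_changes are hypothetical.

```python
from __future__ import annotations

import sqlite3

# SQLite stands in for MySQL so the example runs anywhere; table names are hypothetical.
SCHEMA = """
CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, quantity INTEGER);

-- Change table: one row per captured modification, in capture order.
CREATE TABLE products_changes (
    change_id  INTEGER PRIMARY KEY AUTOINCREMENT,
    op         TEXT NOT NULL,          -- I = insert, U = update, D = delete
    product_id INTEGER NOT NULL,
    name       TEXT,
    quantity   INTEGER,
    changed_at TEXT DEFAULT CURRENT_TIMESTAMP
);

CREATE TRIGGER products_ai AFTER INSERT ON products
BEGIN
    INSERT INTO products_changes (op, product_id, name, quantity)
    VALUES ('I', NEW.id, NEW.name, NEW.quantity);
END;

CREATE TRIGGER products_au AFTER UPDATE ON products
BEGIN
    INSERT INTO products_changes (op, product_id, name, quantity)
    VALUES ('U', NEW.id, NEW.name, NEW.quantity);
END;

CREATE TRIGGER products_ad AFTER DELETE ON products
BEGIN
    -- Deletes have no NEW row, so only the key from OLD is recorded.
    INSERT INTO products_changes (op, product_id) VALUES ('D', OLD.id);
END;
"""


def read_changes_since(conn: sqlite3.Connection, last_change_id: int) -> list[tuple]:
    """Return captured changes after a given change_id, oldest first."""
    return conn.execute(
        "SELECT change_id, op, product_id, name, quantity FROM products_changes "
        "WHERE change_id > ? ORDER BY change_id",
        (last_change_id,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO products (id, name, quantity) VALUES (1, 'widget', 10)")
conn.execute("UPDATE products SET quantity = 7 WHERE id = 1")
conn.execute("DELETE FROM products WHERE id = 1")
conn.commit()
changes = read_changes_since(conn, 0)
```

Because a trigger runs inside the same transaction as the write that fired it, each change is recorded atomically with that write; the trade-off is extra work on every write, which is the performance overhead noted above. A downstream consumer remembers the last change_id it processed and passes it to read_changes_since on the next run.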

Queries In MySQL

Queries are the key to extracting and processing data from your MySQL database. With the right queries, you can effectively capture changes in data and apply them to downstream systems. This approach to CDC involves using SQL queries to fetch changes from your source database and apply them to the target database.

With queries, you can do the following:

- Track data changes in specific tables: You can use queries to monitor specific tables for changes and then process the updates accordingly.

- Data synchronization: By using queries, you can synchronize data between two MySQL databases to ensure that they remain consistent.

- Incremental data extraction: Queries can be used to extract only the changed data from a source database. This reduces the amount of data transferred and improves efficiency.

Using queries for MySQL CDC offers several advantages, as highlighted below:

- Flexibility: Queries can be tailored to meet specific requirements which allows you to capture changes at a granular level.

- Lower complexity: Writing SQL queries can be more straightforward than implementing other CDC methods like triggers or binlog replication.

- Reduced load on the source database: Compared with extracting full tables, fetching only the changed rows reduces the performance impact on the source database.

However, using queries for MySQL CDC also comes with some drawbacks:

- Increased latency: Changes are only picked up on the next poll, so latency depends on the polling interval and grows further if queries take a long time to execute.

- Manual intervention: Queries may require manual updates and maintenance, increasing the risk of human error and operational complexity.

- Performance impact: Continuously polling the database for changes increases load and reduces performance, especially in large-scale environments.

Implementation Process

Setting up CDC using queries in MySQL involves the following steps:

- Identify the changes: Determine the best way to capture changes in your source database. This may involve using a timestamp column or tracking changes through unique identifiers.

- Write the queries: Craft SQL queries to fetch changes from the source database. For example:

```sql
SELECT * FROM table_name WHERE last_modified >= '2023-05-12 00:00:00';
```

- Apply the changes: Use the extracted data to update your target database or downstream system.

- Schedule the process: Automate the CDC process by running your queries at regular intervals.

With these steps, you can set up a SQL query solution that keeps your data up-to-date and maintains consistency across your systems.
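Here is a minimal sketch of the query-based steps above, in Python with the standard library's sqlite3 module standing in for MySQL so it runs without a server. It assumes a hypothetical orders table whose last_modified column is updated on every write (in MySQL, a TIMESTAMP column declared with DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP is a common way to do this), and it keeps the highest timestamp seen so far as a cursor. One limitation to keep in mind: rows removed with DELETE simply stop appearing in query results, so polling alone cannot capture hard deletes.

```python
from __future__ import annotations

import sqlite3


def poll_changes(conn: sqlite3.Connection, cursor: str) -> tuple[list[tuple], str]:
    """Fetch rows modified at or after `cursor`; return them and the new cursor.

    Using >= (not >) re-reads rows that share the boundary timestamp, so a row
    written in the same second as the last poll is not missed. The target must
    therefore apply rows idempotently (upsert by primary key).
    """
    rows = conn.execute(
        "SELECT id, status, last_modified FROM orders "
        "WHERE last_modified >= ? ORDER BY last_modified, id",
        (cursor,),
    ).fetchall()
    new_cursor = rows[-1][2] if rows else cursor
    return rows, new_cursor


def apply_to_target(target: dict, rows: list[tuple]) -> None:
    """Upsert each fetched row into an in-memory target keyed by id."""
    for row_id, status, last_modified in rows:
        target[row_id] = (status, last_modified)


# Demo: 'YYYY-MM-DD HH:MM:SS' strings sort chronologically, so >= works on them.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, last_modified TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, "new", "2023-05-12 09:00:00"),
    (2, "new", "2023-05-12 10:00:00"),
])
target = {}
rows, cursor = poll_changes(conn, "2023-05-12 00:00:00")
apply_to_target(target, rows)

# Later, an update bumps last_modified, and the next poll picks it up.
conn.execute("UPDATE orders SET status = 'shipped', last_modified = '2023-05-12 11:00:00' WHERE id = 1")
rows, cursor = poll_changes(conn, cursor)
apply_to_target(target, rows)
```

In practice, a scheduler such as cron (or a loop with a sleep) would call poll_changes at regular intervals and persist the cursor between runs.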

Understanding the different methods for capturing changes in MySQL is important, but to get the most out of them you should also follow some industry best practices. Let's take a look.

3 Best Practices for CDC in MySQL

When it comes to change data capture (CDC) in MySQL, the right practices can make all the difference in tracking and managing changes within your database effectively. Here are 3 best practices that will streamline your approach to CDC in MySQL.

Security Considerations

Keeping your data safe is a top priority. So let's take a look at some security practices when using MySQL Change Data Capture:

- Encrypt connections: Use SSL/TLS to encrypt communication between MySQL and the CDC solution. This prevents your data from being read or tampered with in transit.

- Strong authentication: Implement strong authentication methods like two-factor authentication or client certificate authentication to protect your MySQL server.

- Limit access: Only grant necessary permissions to the CDC user like REPLICATION CLIENT and REPLICATION SLAVE. You should also restrict SELECT permissions to just the tables that need to be captured.

By following these steps, you'll ensure a more secure environment for your data.
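As a sketch of the access and encryption advice above, the following Python helper generates MySQL statements for a CDC account that can read only the listed tables and must connect over TLS. The function names, account name, host, and table names are hypothetical, and the helper assumes the account was already created with CREATE USER. REQUIRE SSL and the REPLICATION privileges are standard MySQL syntax, but review the generated SQL against your own security policy before running it.

```python
from __future__ import annotations


def quote_ident(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def quote_account(user: str, host: str) -> str:
    """Quote a MySQL account name as 'user'@'host' (embedded quotes doubled)."""
    return "'{}'@'{}'".format(user.replace("'", "''"), host.replace("'", "''"))


def cdc_grants(user: str, host: str, tables: list[tuple[str, str]]) -> list[str]:
    """Build least-privilege statements for a CDC account (hypothetical helper).

    Replication privileges are global in MySQL, so they are granted ON *.*;
    SELECT is limited to the (schema, table) pairs that will be captured.
    """
    account = quote_account(user, host)
    statements = [f"GRANT REPLICATION CLIENT, REPLICATION SLAVE ON *.* TO {account};"]
    for schema, table in tables:
        statements.append(
            f"GRANT SELECT ON {quote_ident(schema)}.{quote_ident(table)} TO {account};"
        )
    # Reject unencrypted connections for this account.
    statements.append(f"ALTER USER {account} REQUIRE SSL;")
    return statements
```

Generating the statements for review, rather than executing them directly, keeps a person in the loop for permission changes.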

Monitoring & Maintenance

To keep your CDC pipeline running smoothly, consider these monitoring and maintenance tips:

- Monitor logs: Watch logs for issues such as replication lag or errors. Use log aggregation tools to analyze logs and identify patterns or trends.

- Keep software updated: Regularly update your MySQL server and CDC solution to benefit from the latest features, bug fixes, and security patches.

- Track performance: Regularly check the MySQL server's CPU, memory, and I/O usage to detect potential bottlenecks. Set up alerts that notify you if any metrics exceed specific thresholds.

By staying proactive, you can address any issues before they escalate into bigger problems.
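Threshold-based alerting can start as simply as comparing the latest metric readings against limits. The Python sketch below is a hypothetical helper: the metric names and threshold values are illustrative assumptions, and in practice the readings would come from your monitoring system or MySQL status queries. It also shows one common way to measure CDC lag: the time elapsed since the source committed the most recently applied change.

```python
from __future__ import annotations

from datetime import datetime, timezone

# Illustrative thresholds (assumptions); tune them for your workload.
THRESHOLDS = {
    "cdc_lag_seconds": 60.0,      # how far the pipeline trails the source
    "cpu_percent": 85.0,
    "disk_used_percent": 80.0,    # binary logs can fill the disk if not purged
}


def cdc_lag_seconds(last_applied_commit: datetime, now: datetime | None = None) -> float:
    """Seconds since the source committed the most recently applied change."""
    if now is None:
        now = datetime.now(timezone.utc)
    return max(0.0, (now - last_applied_commit).total_seconds())


def check_thresholds(metrics: dict[str, float],
                     thresholds: dict[str, float] = THRESHOLDS) -> list[str]:
    """Return an alert message for every metric above its threshold."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is not None and value > limit:
            alerts.append(f"{name}={value:g} exceeds threshold {limit:g}")
    return alerts
```

One caveat with this lag measure: when the source is idle, no new commits arrive, so the reported lag keeps growing even though nothing is pending. Some pipelines address this by writing to a heartbeat table at a fixed interval.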

Ensuring Data Integrity

Finally, make sure your data stays accurate and reliable. Here are some proven ways to do it:

- Test thoroughly: Always validate the output of your CDC pipeline to ensure it matches the source data. Set up automated tests to compare source and destination data regularly.

- Error handling and recovery: Implement robust error handling and recovery mechanisms in your CDC pipeline. If an issue arises, your solution should recover gracefully and continue processing.

- Handle schema changes: Plan for schema changes in your MySQL database and update your CDC solution accordingly. Make sure your solution can handle changes like adding or dropping columns without losing data.

With these practices in place, you'll maintain data integrity throughout your CDC process for reliable insights.
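One way to automate the source-versus-destination comparison is to fingerprint each row and compare the fingerprints by primary key. The Python sketch below assumes both sides have already been fetched into dictionaries mapping primary key to a tuple of column values; the function names are hypothetical. For large tables, you would typically compare checksums per chunk of keys instead of per row.

```python
from __future__ import annotations

import hashlib


def row_fingerprint(row: tuple) -> str:
    """Stable hash of a row's values; repr() keeps None distinct from 'None'."""
    return hashlib.sha256(repr(row).encode("utf-8")).hexdigest()


def diff_tables(source: dict, destination: dict) -> dict[str, list]:
    """Report keys missing from, extra in, or different in the destination."""
    src = {key: row_fingerprint(row) for key, row in source.items()}
    dst = {key: row_fingerprint(row) for key, row in destination.items()}
    return {
        "missing": sorted(src.keys() - dst.keys()),
        "extra": sorted(dst.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }
```

Because the fingerprint depends on Python's repr() of each value, normalize types first (for example, decimals versus floats, or differently formatted timestamps) when source and destination drivers return values differently; otherwise identical data can show up as mismatched.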

Conclusion

Capturing data from MySQL is not merely a technical task; it requires careful consideration and strategic implementation. It is also important to keep learning and stay current with advancements in MySQL and data capture techniques. The data management landscape is always evolving, and staying informed lets you adapt and optimize your approach to data capture.

Using Estuary Flow to capture data from MySQL significantly streamlines the entire process and enables you to capture change data from MySQL in real time while ensuring data integrity and consistency.

So if you are ready to transform your data capture process and harness the full potential of your data, give Estuary Flow a try by signing up, or contact our team to discuss your unique needs.

About the author

With over 15 years in data engineering, the author is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
