It’s pretty rare to find a dataset that’s actually useful in its raw form. And in a world where virtually every business seems to be drowning in a sea of raw data, it’s not surprising that we’re constantly looking for the best way to process it. One method that has consistently proven its worth is batch data processing.

However, like any other technique, batch data processing has its challenges, which makes choosing between it and its real-time alternative tricky. Finding the right tools for effective batch processing can be just as challenging.

In today’s guide, we look into the world of data processing with a particular focus on batch data processing. We will contrast it with real-time processing, discuss scenarios where batch processing is the optimal choice, and explore the tools that can help you quickly set up your batch processing system.

By the end of this guide, you’ll understand what batch data processing is, where it has advantages over real-time processing, and how to choose the right approach for your specific needs.

Unmasking Data Processing: The What, Why, & How

Let’s start by setting a baseline: what exactly is data processing?

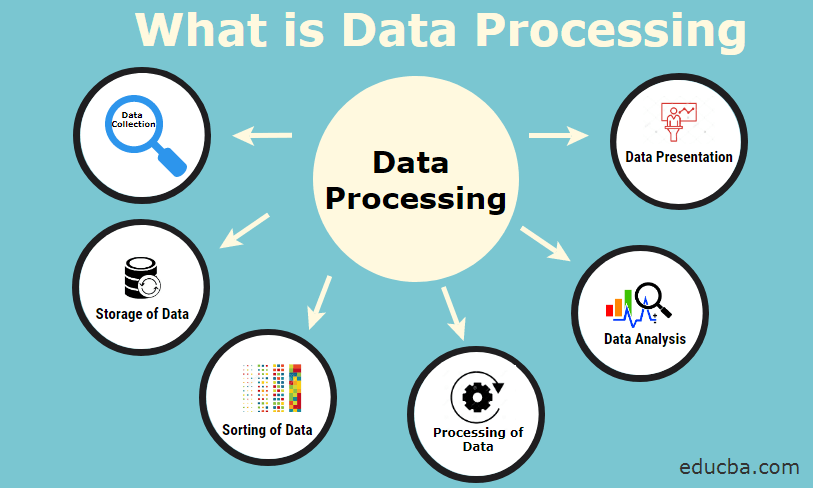

Data processing is the act of converting raw data into useful information. It’s a deceptively simple concept with huge real-world implications.

Raw data is rarely useful for making decisions or developing business insight. To turn it into information, you need to process it in some way. Your approach to data processing will depend on several factors, which include:

- Raw data state: Where is the data collected from? What format is it in? How much of it is there?

- Goals: What information do you need to gain from this data? This represents the end state of data processing.

- Business domain: From finance to marketing, different business domains use data differently. This influences how it needs to be processed.

You can break data processing down into these high-level steps:

Step 1: Data collection. Also known as data ingestion or data capture, this is the step where data enters the processing pipeline. Data can be collected from applications, IoT sensors, SaaS APIs, external databases, or other storage systems.

Step 2: Data cleaning or transformation. Next, the processing pipeline needs to get the data into a usable state. The definition of “usable” will vary, but at minimum, the data will need to be in a consistent, known format that can be described by a schema.

The pipeline might:

- Validate incoming data against an established schema and reject data that doesn’t conform.

- Apply basic transformations so that data fits a schema. For example, it might add a required field or replace disallowed characters.

- Apply complex transformations to unlock more sophisticated workflows. For example, it could join several datasets together to arrive at an important business metric.
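To make the first two bullets concrete, here is a minimal Python sketch of a cleaning step. The schema, the field names (`order_id`, `customer`, `amount`), and the rule for which characters are disallowed are all assumptions made up for illustration; a real pipeline would take them from its own data contract.

```python
import re

# Hypothetical schema: field name -> required Python type.
SCHEMA = {"order_id": int, "customer": str, "amount": float}

def conforms(record: dict) -> bool:
    """Return True if the record has every schema field with the expected type."""
    return all(
        name in record and isinstance(record[name], expected)
        for name, expected in SCHEMA.items()
    )

def basic_transform(record: dict) -> dict:
    """Apply simple fixes so a record has a better chance of fitting the schema."""
    fixed = dict(record)
    fixed.setdefault("customer", "unknown")  # add a missing required field
    if isinstance(fixed["customer"], str):
        # Replace disallowed characters (assumed: anything except
        # letters, digits, underscore, space, and hyphen).
        fixed["customer"] = re.sub(r"[^\w \-]", "_", fixed["customer"])
    amount = fixed.get("amount")
    if isinstance(amount, int) and not isinstance(amount, bool):
        fixed["amount"] = float(amount)  # normalize whole numbers to float
    return fixed

def clean(records):
    """Transform each record, then split the results into accepted and rejected."""
    accepted, rejected = [], []
    for record in records:
        candidate = basic_transform(record)
        (accepted if conforms(candidate) else rejected).append(candidate)
    return accepted, rejected

raw = [
    {"order_id": 1, "customer": "Ada/Lovelace", "amount": 20},
    {"order_id": 2, "amount": 5.5},
    {"order_id": "three", "customer": "Bob", "amount": 1.0},
]
accepted, rejected = clean(raw)
```

Here the first two records are repaired and accepted, while the third is rejected because its `order_id` is not an integer. Rejected records are returned rather than dropped so that they can be inspected later.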

Step 3: Operationalization

At this step, it’s time to use the data to power business outcomes. For example:

- Use the data to power a live dashboard or BI platform tracking KPIs, progress toward a goal, or a team’s workload.

- Configure alerts when an action is needed — anything from deploying emergency personnel to replacing a piece of equipment on a factory floor.

- Create tailored customer experiences in e-commerce apps or streaming platforms.

Step 4: Data storage

It’s a waste to only use data once and let it disappear! In addition to operationalization, you’ll also want to store your data. This allows you to continue to analyze your data for years to come.

With this in mind, it’s time to choose a data processing framework. This is where things get tricky: you have many options. But a great place to start is by figuring out whether you need batch or real-time processing.

Batch Data Processing: A Closer Look

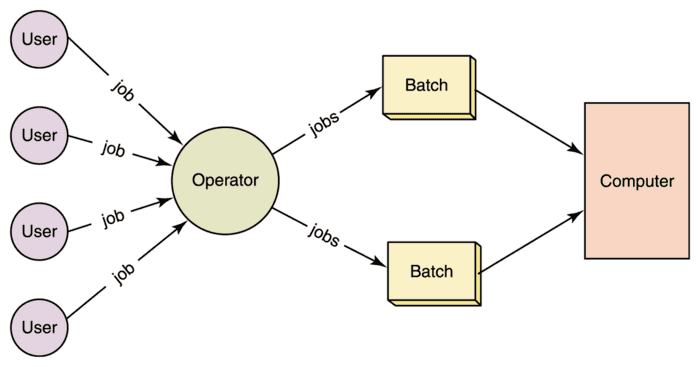

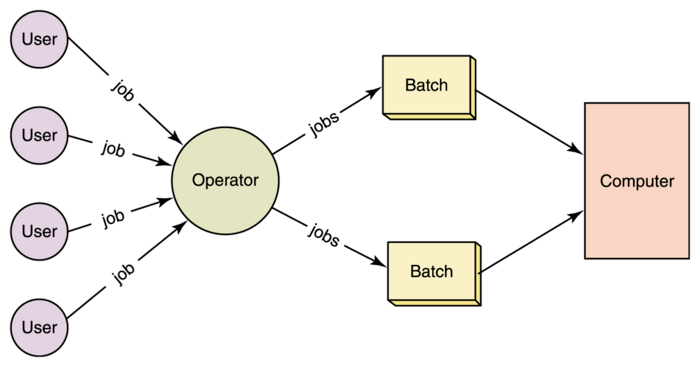

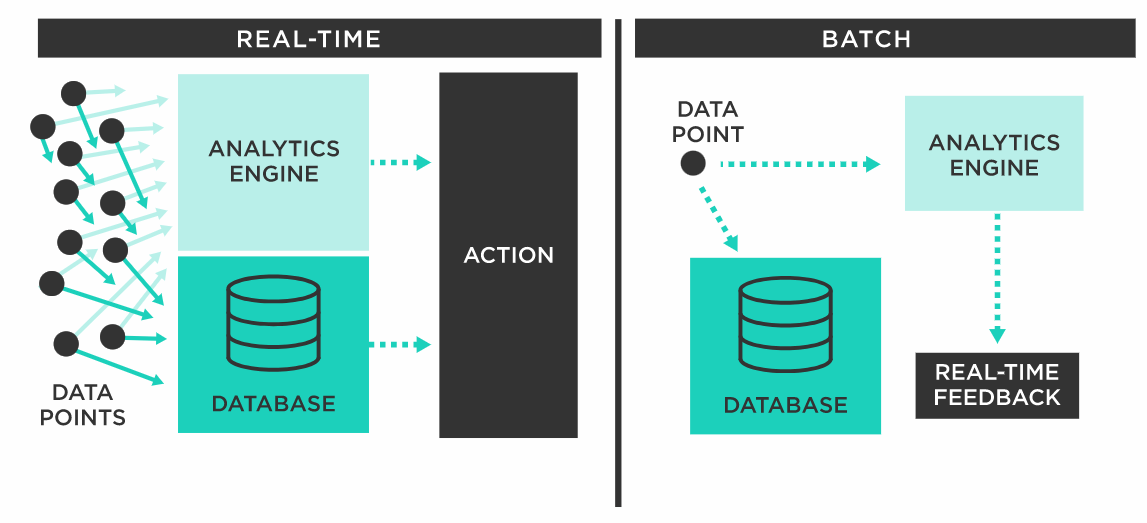

In batch data processing, the data pipeline collects data over an interval of time and processes it all at once. This window of time is called the “batch interval,” and it repeats over and over.

In other words, the data collection step is not ongoing. The pipeline stays idle for a while as new data builds up in the source. Then, when the batch interval ends, the pipeline process begins. Usually, data is collected by querying the source for changes.

Once collected, all the new data is processed at once, operationalized at once, and stored at once.
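As a rough illustration of "querying the source for changes," the sketch below keeps a watermark (the highest row ID seen so far) and pulls everything newer on each run. It uses Python’s built-in `sqlite3` module with an in-memory database as a stand-in source; the `events` table, its columns, and the `run_batch` function are hypothetical names, and in practice a scheduler such as cron would call the function once per batch interval.

```python
import sqlite3

def run_batch(source: sqlite3.Connection, last_seen_id: int):
    """Collect every row added since the previous run and process them together."""
    rows = source.execute(
        "SELECT id, amount FROM events WHERE id > ? ORDER BY id",
        (last_seen_id,),
    ).fetchall()
    if not rows:
        return last_seen_id, None  # nothing new built up during this interval
    total = sum(amount for _, amount in rows)  # process the whole batch at once
    return rows[-1][0], total  # new watermark, batch result

# Stand-in source system with two rows waiting.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, amount REAL)")
source.executemany("INSERT INTO events (amount) VALUES (?)", [(10.0,), (15.0,)])

watermark, total = run_batch(source, last_seen_id=0)  # first batch interval

# New data accumulates while the pipeline is idle.
source.execute("INSERT INTO events (amount) VALUES (?)", (4.0,))
watermark, next_total = run_batch(source, watermark)  # second batch interval
```

The first run processes both waiting rows in one go, and the second run only sees the row that arrived afterwards. A watermark on an auto-incrementing ID is just one simple way to detect changes; timestamps or change logs are common alternatives.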

Real-time vs Batch Data Processing

Real-time data processing, often referred to as stream data processing or simply streaming, is a method that processes data as soon as it comes into the system. There’s no waiting around while data accumulates. The system is always on: it continuously watches for new data and processes it right away.
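The difference in timing can be shown with a toy Python example. This is only a sketch: the list of amounts stands in for a live event source, and `stream_totals` and `batch_total` are hypothetical names. A real streaming system would block on a queue, socket, or change feed instead of looping over a list.

```python
from typing import Iterable, Iterator

def stream_totals(events: Iterable[float]) -> Iterator[float]:
    """Emit an updated running total immediately after each event arrives."""
    running = 0.0
    for amount in events:  # a real system would wait on a queue or socket here
        running += amount
        yield running  # a result is available per event, not per batch

def batch_total(events: Iterable[float]) -> float:
    """Batch equivalent: wait for the full set, then compute once."""
    return sum(events)

incoming = [10.0, 15.0, 4.0]
streamed = list(stream_totals(incoming))  # one result per event
final = batch_total(incoming)             # one result per batch
```

Both approaches end at the same total, but the streaming version produces an up-to-date answer after every event, while the batch version produces a single answer once the whole batch is in.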

So how does real-time processing compare to batch processing? Let’s break down the differences between them:

| | Batch Processing | Real-Time Processing |
|---|---|---|
| Age | Legacy: the first type of data processing. | Modern: became a realistic option in recent years. |
| Mechanism | Querying data source and processing chunks of data all at once. | Watching source for change events and processing them as they arise. |
| Engineering difficulty | Easier. | Challenging. |
| Timeliness | Latency from seconds to days. | Instant. |
| Managed solutions available? | Yes. | Yes. |
| Price | Depends on details, but generally affordable for small legacy setups and expensive at scale. | Affordable when well optimized. Avoid the cost of large queries on source systems. |

Stream processing can be a great option, especially as it has become more efficient over time. It works well in the cloud and doesn’t put as much pressure on data source systems as batch processing does. But it can be overkill if you’re dealing with smaller amounts of data, using older systems, or running a small setup.

Batch processing, while it can be slower, is simpler to build and keep running than a real-time processing system. It is a reliable choice for smaller teams who want to keep control of their systems. In some cases it is also more efficient, especially if you don’t have a lot of data or if you’re sharing resources.

Let’s look at the advantages and disadvantages of batch processing vs stream processing in more detail.

5 Advantages Of Batch Data Processing Over Stream Processing

Let’s look at the compelling reasons why batch data processing is often a preferred choice for many businesses, especially those who prefer to build data processing frameworks in house:

Efficiency

Batch data processing makes good use of idle resources. Batch jobs can be scheduled to run during off-peak hours when the system isn’t as busy. Real-time processing systems, on the other hand, have to run continuously, and getting them to be efficient takes more work.

Cost-Effectiveness

Batch processing is often economical because it doesn’t need high-end hardware and software. It can run on less powerful servers and typically doesn’t need the always-on, high-security infrastructure that real-time data processing requires, which saves on costs.

Accuracy

Because batch jobs often run once a day or once a week, data scientists have ample time to thoroughly examine the data and rectify any errors. Real-time data processing, on the other hand, leaves less time for data review because results are produced as the data arrives.

Scalability

Batch data processing is easily scalable in response to data volume fluctuations. The jobs can be executed on multiple servers and the system can be scaled up or down as needed. Real-time data processing, which is often (but not always!) limited to a single server, can only scale up to a certain limit.

Reliability

Batch processing is reliable because it can be made resistant to system failures. When a job is distributed across multiple servers, it can still complete even if one server fails. In contrast, real-time data processing, when deployed on a single server, is vulnerable to complete job failure if that server fails.
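The scalability and reliability points can be sketched together in Python. In this simplified example, threads from the standard library’s `ThreadPoolExecutor` stand in for separate servers, and a failure is simulated on purpose; `process_chunk`, `run_with_retry`, and `run_batch_job` are hypothetical names rather than part of any particular framework.

```python
from concurrent.futures import ThreadPoolExecutor

def process_chunk(index: int, chunk: list, attempt: int) -> int:
    """Hypothetical unit of work. Chunk 1 fails on its first attempt
    to mimic a worker that goes down mid-job."""
    if index == 1 and attempt == 0:
        raise RuntimeError("simulated worker failure")
    return sum(chunk)

def run_with_retry(index: int, chunk: list, max_attempts: int = 3) -> int:
    """Re-run a failed chunk instead of failing the whole batch."""
    for attempt in range(max_attempts):
        try:
            return process_chunk(index, chunk, attempt)
        except RuntimeError:
            continue  # reschedule the same chunk
    raise RuntimeError(f"chunk {index} failed after {max_attempts} attempts")

def run_batch_job(data: list, chunk_size: int, workers: int = 4) -> int:
    """Split the batch into chunks and spread them across a pool of workers."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(run_with_retry, range(len(chunks)), chunks)
        return sum(partials)

result = run_batch_job(list(range(100)), chunk_size=25)
```

Because the batch is split into independent chunks, the number of workers can be raised or lowered with the data volume, and a failed chunk is retried on its own without redoing the rest of the job.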

3 Potential Drawbacks Of Batch Processing

While batch processing provides numerous advantages, it comes with certain challenges that may influence an organization’s decision to use it. Here are some key disadvantages:

Training & Deployment Issues

Implementing in-house batch processing systems requires significant training and planning. Managers and system designers should understand what batch size to use, what triggers a batch, and how to schedule processing.
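To show what "batch size" and "trigger" can look like in practice, here is a small Python sketch of a buffer that closes a batch when it reaches a size limit or when its oldest record gets too old. The `Batcher` class and its `max_size` and `max_age` settings are hypothetical, and timestamps are passed in explicitly to keep the example deterministic. Note that in this simplified version the age trigger is only checked when a new record arrives.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Batcher:
    max_size: int    # size trigger: flush when this many records are buffered
    max_age: float   # time trigger: flush when the batch has been open this many seconds
    buffer: list = field(default_factory=list)
    opened_at: Optional[float] = None

    def add(self, record, now: float):
        """Buffer a record; return the completed batch if a trigger fired, else None."""
        if self.opened_at is None:
            self.opened_at = now  # the first record opens a new batch
        self.buffer.append(record)
        size_hit = len(self.buffer) >= self.max_size
        age_hit = now - self.opened_at >= self.max_age
        if size_hit or age_hit:
            batch, self.buffer, self.opened_at = self.buffer, [], None
            return batch
        return None

batcher = Batcher(max_size=3, max_age=60.0)
arrivals = [("a", 0.0), ("b", 1.0), ("c", 2.0), ("d", 10.0), ("e", 75.0)]
results = [batcher.add(record, now) for record, now in arrivals]
```

The first batch closes on the size trigger when the third record arrives, and the second closes on the time trigger because 65 seconds have passed since it was opened.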

Continuous Monitoring

Because results only become available after each batch runs, batch processing can reduce the precision of your processing metrics. This could mean you spot issues and exceptions late, making your work less efficient. To avoid this, you need to manage your system actively and make sure it reports when events are brought in and sent out as they happen.

Increased Employee Downtime

Depending on data size, job type, and other factors, processed data may not be available when your employees need it to do their jobs effectively. This may increase employee downtime and limit output.

Between real-time processing and batch processing, what you eventually choose depends on what you need to do with your data and the available resources. Let’s talk about this next.

When To Opt For Batch Processing

Batch processing shines under certain circumstances but there’s no absolute rule dictating its use. Batch processing is best suited for tasks that don’t demand real-time processing. When deciding whether batch processing is suitable for an organization, ask yourself the following questions:

- Does any job in the system have to wait for other jobs to finish? How is the completion of one job and the start of the next monitored?

- Does the organization manually check for new files? Is there an automated script that checks for files frequently enough to be efficient?

- Does the existing system retry jobs at the server level? Does this slow down operations or require task reprioritization? Could the server be utilized more effectively?

- Does the organization have a large volume of manual tasks? What mechanisms ensure their accuracy? Is there a system guaranteeing they are processed in the right order?

If your answer to one or more of these questions is ‘yes’, batch data processing is likely a good fit for you.
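The first question, about jobs that must wait for other jobs, is essentially a dependency-ordering problem. As a minimal sketch, Python’s standard `graphlib.TopologicalSorter` (available from Python 3.9) can work out a safe run order. The job names and the `run_in_order` helper below are invented for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical nightly jobs: each key depends on the jobs in its set.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_orders_customers": {"extract_orders", "extract_customers"},
    "build_report": {"join_orders_customers"},
}

def run_in_order(deps: dict, run_job) -> list:
    """Run each job only after all of its prerequisites have finished."""
    completed = []
    for job in TopologicalSorter(deps).static_order():
        run_job(job)           # placeholder for launching the real job
        completed.append(job)  # record completion so it can be monitored
    return completed

order = run_in_order(dependencies, run_job=lambda name: None)
```

Dedicated batch schedulers and workflow tools do the same thing at a larger scale, with monitoring and retries added on top.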

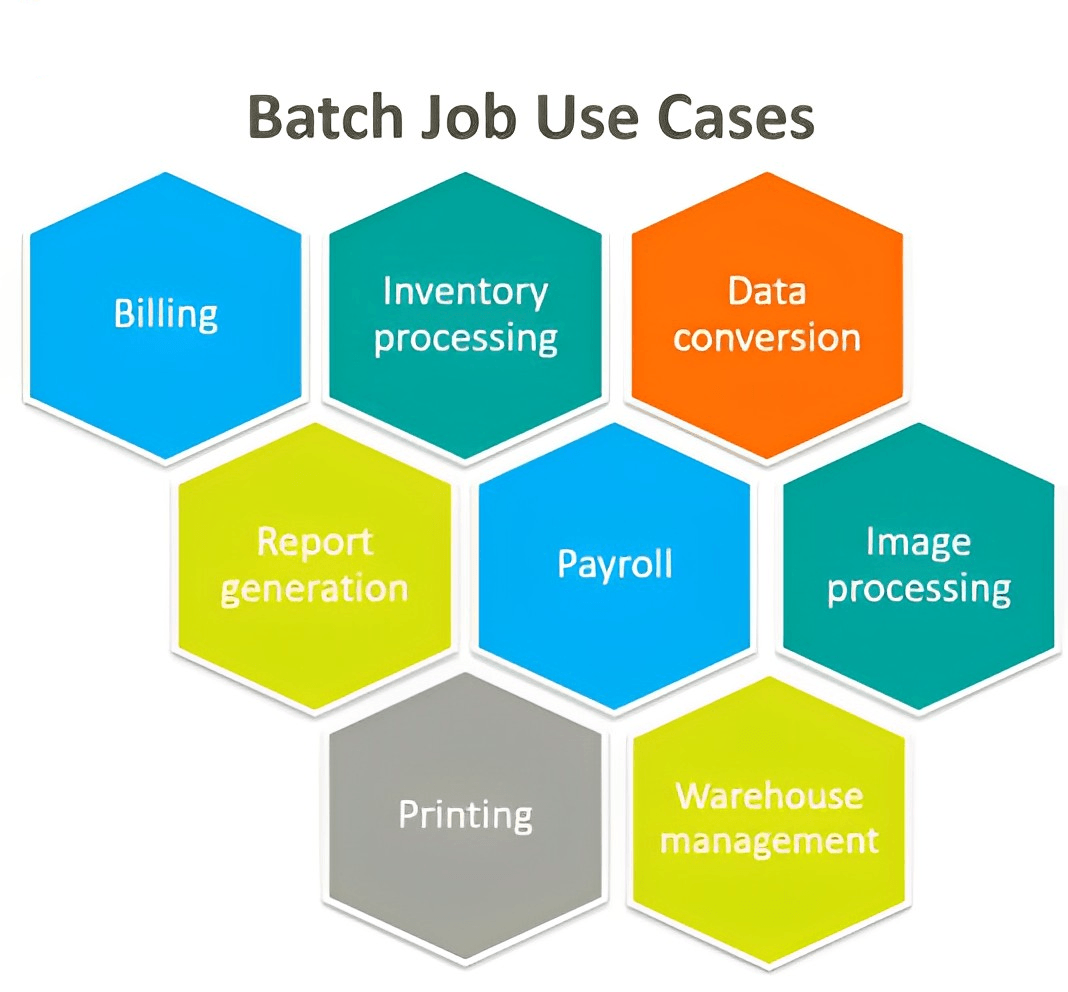

Diverse Applications Of Batch Processing Across Different Fields

Studies reveal that while 90% of business executives view data analytics as key to their organization's digital transformation, they only utilize 12% of their data. Batch processing can bridge this gap.

Let’s see some ways batch data processing can be used by real businesses:

General Use Cases

- Report Generation: Batch processing collects, sorts, and analyzes data to generate detailed reports that offer financial, operational, or performance-based insights for critical decision-making.

- Backup and Recovery: Using batch processing, you can implement automated backup schedules and manage backup files automatically. It also assists in data restoration, ensuring data integrity and accessibility.

- Integration and Interoperability: By automating data exchange and synchronization between various systems and departments, batch processing can help break data silos and promote better communication and integration.

Applications In Sales

- Lead Management: Batch processing can enrich and help prioritize leads, allowing sales personnel to target high-value prospects effectively.

- Sales Reporting: It can be set up to automatically process data related to sales for any period to provide insightful and timely sales reports.

- Order Processing: Batch processing can also accelerate the management of the order fulfillment process, inventory tracking, and customer information management.

- Campaign Management: It can enhance sales campaign management by analyzing large batches of leads or prospects, enabling sales reps to focus on specific segments.

- Customer Intelligence: You can even use batch data processing to uncover customer behavior, buying patterns, and trends. With this information, you can adapt your business to better respond to consumer needs.

Applications In Marketing

- Social Media Marketing: It can simplify the scheduling and publishing of posts on social media platforms, ensuring consistency and timely engagement.

- Email Marketing: You can use batch processing to quickly and efficiently deliver large volumes of emails to a list of subscribers to improve your business outreach and engagement.

- Marketing Analytics: All the emails you send to prospects and the ads you run on search engines and social media generate a huge amount of data. Batch processing can be used to process all the data generated during your marketing campaigns to provide valuable insights into customer behavior, engagement, and campaign ROI.

Applications In Finance

- Risk Management: It assists the finance sector in identifying and mitigating risks through data analysis.

- Fraud Detection: By analyzing large volumes of transactional data, batch processing detects patterns and anomalies indicative of fraudulent activities.

- End-of-Day Processing: Financial services companies can use the batch processing method to perform automatic and accurate end-of-day processing which includes reconciling transactions and generating reports.
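As an example of the last item, an end-of-day reconciliation can be as simple as comparing two sets of transactions collected during the day. The sketch below assumes each side is a dictionary of transaction ID to amount; the `reconcile` function, the IDs, and the amounts are made up for illustration. `Decimal` is used instead of floats so that currency comparisons are exact.

```python
from decimal import Decimal

def reconcile(ledger: dict, bank: dict) -> dict:
    """Compare two {transaction_id: amount} maps collected over the day."""
    return {
        "missing_from_bank": sorted(ledger.keys() - bank.keys()),
        "missing_from_ledger": sorted(bank.keys() - ledger.keys()),
        "amount_mismatch": sorted(
            tx for tx in ledger.keys() & bank.keys() if ledger[tx] != bank[tx]
        ),
    }

ledger = {"t1": Decimal("10.00"), "t2": Decimal("25.50"), "t3": Decimal("7.25")}
bank = {"t1": Decimal("10.00"), "t2": Decimal("25.00"), "t4": Decimal("3.00")}
report = reconcile(ledger, bank)
```

In this sample data, `t3` never reached the bank, `t4` is missing from the ledger, and `t2` has different amounts on each side, so all three would be flagged in the end-of-day report.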

Estuary Flow - Top Choice For Batch & Streaming Data Processing

Estuary Flow is our data pipeline tool known for its real-time data processing capabilities. But don’t be mistaken - it’s also equipped to handle batch data processing efficiently. This comes in handy when dealing with large volumes of data or when real-time processing isn’t required. Here’s how it excels at batch processing:

- Reliability: Flow is based on cloud storage and has real-time capabilities, making it dependable for critical tasks.

- Scalability: Estuary Flow can scale with your data to handle larger volumes which is ideal for batch data processing.

- Schema Inference: Unstructured data is not a problem for Estuary Flow, which uses schema inference to organize it into structured data.

- Transformations: It allows data shaping with streaming SQL and TypeScript transformations, making your data ready for analysis.

- Integration: Estuary Flow works well with Airbyte connectors, giving you access to more than 300 batch-based endpoints. That means you can pull data from many different sources.

Flow truly shines when it comes to real-time data processing. Its real-time capabilities were designed to solve some of the common challenges of older real-time data systems, which we discussed above. They include:

- Fault Tolerance: With its fault-tolerant architecture, Estuary Flow can continuously process data streams even during system failures.

- Real-time Data Integration: It has built-in real-time Salesforce integration and connects with other databases for immediate data integration.

- Real-time Materializations: It provides instant updates on your data as new business events happen, maintaining low-latency views across systems.

- Real-time Data Capture: Estuary Flow grabs data from various sources like databases and data streams from SaaS applications as soon as it arrives.

- Real-time Database Replication: Estuary Flow can maintain exact copies of your data in real time. This feature supports databases larger than 10TB.

Conclusion

Batch data processing is a smart pick when you're dealing with large volumes of data that don't need immediate results. But keep in mind that it might cause more downtime, risks of errors, and inactive periods. Consider these aspects alongside your business needs to make the right choice.

With Estuary Flow, you get seamless and efficient data processing. Our platform offers a wide range of capabilities that handle both batch and streaming data.

Whether it's large-scale data processing tasks or real-time data streaming, Estuary Flow provides a versatile solution that caters to diverse data processing needs. To see how Estuary Flow can help you manage data, sign up for Estuary Flow for free, or reach out to our team to discuss your unique requirements.

About the author

The author has over 15 years of experience in data engineering and is a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Their extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.
