What I’m about to say shouldn’t have to be a bold stance. It should be a no-brainer. But you’re going to be skeptical of it. Ready?

It is possible for a data system’s pricing model to be a win-win for the vendor and its users.

Obviously, it’s impossible to please everyone when money is involved, and a data stack will always be a major investment.

But it’s possible to meet in the middle — to price data infrastructure in a way that allows the vendor to profit without forcing customers to choose between performance and budget.

You’re probably raising your eyebrow right about now, and rightfully so. Looking at major Modern Data Stack vendors, it’s hard to find examples of pricing structures that strike this balance.

That’s because we’re in the midst of an unfortunate trend: one in which predatory pricing models have become the norm. Vendors know what they can get away with and are under pressure to turn a profit; users are frustrated but often find their hands are tied.

About a month ago, dbt Labs became the latest company to announce a massive change to their pricing model. This has re-ignited the discussion around pricing data systems.

Though positioned as an upgrade from a less-than-ideal seat-based model, dbt’s new model is yet another example of how the Modern Data Stack taxes low-latency data.

Unfortunately, this is causing “fast” to become synonymous with “pricey,” and “modern data stack” companies are exploiting that preconception.

Low Latency Data Doesn’t Have to Be Expensive

I’m sure you remember the days when low latency was expensive for a very real reason.

Low-latency data can refer to either fast-paced incremental batch processing or stream processing. Either way, it used to be challenging.

Without getting into the nitty-gritty details: repeated queries were inefficient, and the alternative, an event-driven streaming architecture, was hard to manage. Before the heyday of the cloud, low-latency workflows didn’t scale. On top of that, systems like Kafka, ZooKeeper, and Debezium are notoriously finicky, and hiring a whole team of people who are great with these systems is expensive.

But the days of on-prem architecture and self-managed event brokers are mostly over.

Today, we live in a world where low-latency data can be:

- Managed

- Intuitive

- Affordable

I say this with confidence because we built a system designed for managed, intuitive, and affordable real-time data movement — Estuary Flow.

In many cases, it’s cheaper than batch data movement because we can avoid large backfills, scale reactively, and statefully reduce data.
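To make "statefully reduce data" more concrete, here is a minimal sketch of the general idea, assuming a simple merge-by-key strategy where later fields overwrite earlier ones. The `ChangeEvent` shape and `reduce_by_key` function are hypothetical illustrations, not Flow’s implementation or API. The point is that many change events for the same key collapse into a single write downstream.

```python
from dataclasses import dataclass


@dataclass
class ChangeEvent:
    key: str       # primary key of the source row (hypothetical schema)
    payload: dict  # columns changed by this event


def reduce_by_key(events):
    """Collapse a stream of change events into one merged document per key.

    Fields from later events overwrite earlier ones, while fields an event
    doesn't mention are carried forward, so downstream systems receive one
    write per key instead of one write per event.
    """
    reduced = {}
    for event in events:
        merged = dict(reduced.get(event.key, {}))
        merged.update(event.payload)
        reduced[event.key] = merged
    return reduced


events = [
    ChangeEvent("order-1", {"status": "created", "total": 40}),
    ChangeEvent("order-1", {"status": "paid"}),
    ChangeEvent("order-2", {"status": "created", "total": 15}),
    ChangeEvent("order-1", {"status": "shipped"}),
]

reduced = reduce_by_key(events)
print(f"{len(events)} events -> {len(reduced)} writes")  # 4 events -> 2 writes
print(reduced["order-1"])  # {'status': 'shipped', 'total': 40}
```

Real systems support richer reduction strategies (sums, set unions, and so on), but even this simple merge shows why frequent updates don’t have to mean proportionally more downstream work.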

And we’re not the only ones who have cracked the code for making low-latency data competitively priced and easy to use. Confluent and Google PubSub, for example, are also breaking down barriers in the streaming world.

Even beyond streaming, low-latency batch workflows have become more manageable. The whole data landscape should be shifting toward low-latency workflows, because they simply make more sense.

And it would be… if the Modern Data Stack didn’t tax low-latency data.

How Vendor Pricing Models Artificially Inflate The Cost of Speed

In the data industry, we’re seeing many pricing models that charge based on changes to your data, often through an arbitrary proxy for data volume like credits or rows.

At first blush, this might seem reasonable, but it can manifest in nonsensical charges like:

- An upcharge to see the output of a CDC pipeline you built elsewhere

- A per-query price tag that doesn’t consider data volume

- Outright charges for updating your own data

Just because it’s a cultural shift — a chain reaction throughout the data industry — doesn’t mean it’s the right move. As vendors, we can do better.

Let’s look at a few examples.

Fivetran’s MARs and CDC Upcharge

We’ve previously written about Fivetran’s pricing model, which has several flaws that cause unexpected price hikes as companies scale their data infrastructure.

- Monthly Active Rows as a proxy for data volume. The amount of data contained in a “row” varies widely from system to system. And because Fivetran owns the destination schemas for its SaaS connectors, you can’t control how many rows are generated for every data event. In practice, one event can trigger up to 10 new rows. This is compounded at low latency.

- Low-latency upsells. Fivetran has multiple arbitrary upsell tiers for fast data movement. If you enabled CDC on your database, it wouldn’t cost Fivetran anything to give you much faster access to your data feed. Still, they keep your data behind a paywall and release it to you incrementally — unless you pay for an upgrade.

dbt’s Transition to Consumption-Based Pricing

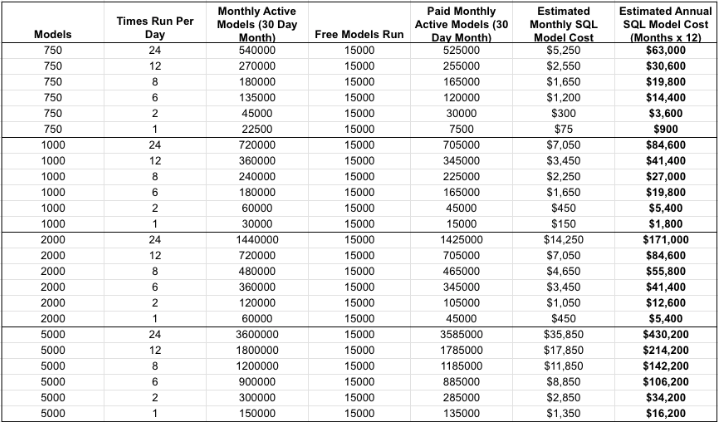

On August 8, dbt Labs announced that their dbt Cloud product would go through a major change. No longer are customers going to be billed on platform and seat costs. Their new consumption-based model includes costs based on the number of SQL models run each month.

Though dbt positions this change as a shift away from a model that didn’t map well to their product value, it’s ultimately a tax on the highly normalized SQL that dbt itself has promoted.

The lower your data’s latency, the harder you’ll be hit: this pricing is based on the number of times an object is updated as part of dbt each month.

For example, if you run hourly updates, you’ll be billed for 24 times as many model runs as someone who runs daily updates.
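The scaling is easy to see with a back-of-the-envelope sketch. This assumes every model is rebuilt on every scheduled run and that the bill is a flat unit price per model run; the project size and `PRICE_PER_RUN` are placeholders, not dbt’s published pricing.

```python
def monthly_model_runs(num_models: int, runs_per_day: int, days: int = 30) -> int:
    """Model builds in a month if every model is rebuilt on every scheduled run."""
    return num_models * runs_per_day * days


def monthly_bill(model_runs: int, price_per_run: float) -> float:
    """Hypothetical consumption charge: a flat unit price per model run."""
    return model_runs * price_per_run


NUM_MODELS = 200       # hypothetical project size
PRICE_PER_RUN = 0.01   # placeholder rate, not dbt's published pricing

daily = monthly_model_runs(NUM_MODELS, runs_per_day=1)
hourly = monthly_model_runs(NUM_MODELS, runs_per_day=24)

print(daily, hourly, hourly // daily)  # 6000 144000 24
print(monthly_bill(daily, PRICE_PER_RUN), monthly_bill(hourly, PRICE_PER_RUN))
```

Whatever the actual unit price, the ratio between the two bills is fixed by the schedule alone: moving from daily to hourly multiplies the charge by 24, even though the warehouse is doing the heavy lifting.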

We ran some quick calculations to show you how this could affect you over the course of a year, based on public information provided by dbt.

Running a model more frequently costs dbt pennies; the real work is done in your warehouse. Yet these upcharges can instantly push you over your annual data budget.

In other words, the fact that low-latency processing itself has become affordable is completely negated downstream.

Handling Pricing Changes

The notion of low-latency data being an exclusive privilege is outdated. But culturally, we haven’t arrived at that consensus, and big-name vendors are perpetuating the old paradigm through their pricing.

As a data professional, you may feel less inclined to push back against vendors that tell you real-time data should be expensive.

You might…

- Eat the extra cost (not always an option).

- Feel incentivized to hack together a clever way to reduce cost based on the pricing model.

- Feel incentivized to reduce the cadence of data throughput, ultimately adding latency to your data product.

Most often, it’s a combination of the second and third options.

The result? You pay more to go slower. And instead of focusing on modeling and solving business problems, you spend extra time and resources defensively managing around the pricing model.

But there’s a better way.

What To Do Instead

You could always self-host dbt Core and carry on as normal, but we’re in this position in the first place because of the overuse of ELT. A simple solution is to use the right types of compute in the right places:

- Do transformations further upstream via EtLT

- If you must keep a platform that has a sub-optimal pricing model (like dbt), reduce its role to the minimum and farm out its other functions to different platforms

How, you ask? Lots of data pipelining tools offer transformations. If you haven’t already, check out Estuary (yes, that’s my company) or Narrator. Both offer ways to massage your data up front and do less crunching of models downstream, saving time, tech debt, and complexity.

How to Spot a Fairly Priced Data Tool

No matter what you do, you’ll still be working with at least a few vendors. It’s up to you to choose the ones with fair pricing models.

This takes a bit of calculation, but is pretty straightforward.

The costs to run a data platform come purely from the amount of data transferred, servers used, and machine uptime. Any pricing model should roughly track these very tangible costs. If it doesn’t, it’s arbitrary, and often not to your benefit as the customer.

If it helps, calculate how much you would spend on infrastructure with an open source equivalent to the product you’re evaluating. Then, factor in convenience: how many team members would it take you to manage this open-source alternative? What is their time worth?

If the SaaS is dramatically more expensive than the number you come up with, the pricing model is off.
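Here is a minimal sketch of that comparison. Every input is a hypothetical number you would fill in yourself, and the `tolerance` threshold for "dramatically more expensive" is an arbitrary choice for illustration, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class SelfHostedEstimate:
    infra_per_month: float          # servers, storage, and transfer for the open-source equivalent
    engineers_needed: float         # people needed to operate it (can be fractional)
    cost_per_engineer_month: float  # fully loaded monthly cost of one engineer

    def total_per_month(self) -> float:
        return self.infra_per_month + self.engineers_needed * self.cost_per_engineer_month


def markup_ratio(saas_quote_per_month: float, estimate: SelfHostedEstimate) -> float:
    """How many times more the SaaS costs than running the open-source equivalent."""
    return saas_quote_per_month / estimate.total_per_month()


def looks_fairly_priced(saas_quote_per_month: float, estimate: SelfHostedEstimate,
                        tolerance: float = 1.5) -> bool:
    """Flag quotes far above the self-hosted baseline (tolerance is arbitrary)."""
    return markup_ratio(saas_quote_per_month, estimate) <= tolerance


estimate = SelfHostedEstimate(
    infra_per_month=2_000,           # hypothetical cloud bill
    engineers_needed=0.5,            # half of one engineer's time
    cost_per_engineer_month=15_000,  # hypothetical fully loaded cost
)
print(estimate.total_per_month())            # 9500.0
print(looks_fairly_priced(12_000, estimate))  # True
print(looks_fairly_priced(40_000, estimate))  # False
```

Some markup over the self-hosted baseline is expected and fair, since you’re paying for convenience; the check only flags quotes that land far above it.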

Data Integration Priced on Volume

We’ve talked a lot about pricing models, so what about Estuary Flow’s pricing?

Flow is a streaming platform. It doesn’t store data, but it handles data in motion.

In our case, narrowing in on a fair pricing model was pretty straightforward:

Estuary Flow is priced strictly on monthly data volume.

More specifically, the monthly data volume processed by a “task.” Tasks can move data to and from your systems, or transform it on the fly. It costs us roughly the same amount to do any of those things per GB of data. So, that’s how we calculate what to charge you.
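A minimal sketch of that billing rule, assuming a single placeholder per-GB rate (not a published price) and hypothetical task names. It also shows why volume-based pricing doesn’t tax latency: the same data costs the same whether it arrives in one batch or in hundreds of small syncs.

```python
HOURS_PER_MONTH = 24 * 30
PRICE_PER_GB = 1.00  # placeholder rate for illustration, not a published price


def task_volume_gb(deliveries_gb: list[float]) -> float:
    """Total GB a task processed, no matter how many deliveries it was split into."""
    return sum(deliveries_gb)


def monthly_charge(volume_by_task_gb: dict[str, float], price_per_gb: float) -> float:
    """Charge = total GB processed across all tasks times one per-GB rate."""
    return sum(volume_by_task_gb.values()) * price_per_gb


# The same 180 GB, moved as one monthly batch or as 720 hourly syncs of 0.25 GB.
batch = task_volume_gb([180.0])
streaming = task_volume_gb([0.25] * HOURS_PER_MONTH)
print(batch == streaming)  # True

# Hypothetical tasks: one moving data in, one moving the same data out.
print(monthly_charge({"capture": streaming, "materialization": streaming}, PRICE_PER_GB))  # 360.0
```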

We don’t charge you on any other benefit you happen to get from using Flow. That value is yours to keep.

Data Management for 2023 and Beyond

If you’re facing higher bills than ever from your data vendors — if you get sticker shock every month no matter how much optimization you try to perform — I’m willing to bet that your infrastructure is not the problem.

Instead, you’ve probably fallen into a pricing model that favors the vendor far more than it does you. And it’s undoing the efficiency you’ve worked hard to create.

Low-latency data does affect downstream systems. But usually that effect is minimal, and it shouldn’t cost you tens of thousands of dollars.

You can design your infrastructure to get around some of these unwarranted costs. (If you’d like help creating an affordable, streamlined data architecture, reach out to our team on Slack.)

Ultimately, my hope is that our industry can shift back to pricing that’s fair for both vendors and customers.

About the author

David Yaffe is a co-founder and the CEO of Estuary. He previously served as the COO of LiveRamp and the co-founder/CEO of Arbor, which was sold to LiveRamp in 2016. He has an extensive background in product management, serving as head of product for DoubleClick Bid Manager and Invite Media.
