Drawing useful insights from data spread across many sources is essential in the fast-moving fields of data management and analytics. AlloyDB and BigQuery are two powerful Google Cloud services with effective data management capabilities. For enterprises that use both, moving data from AlloyDB to BigQuery opens up a wide range of analytical opportunities.

This article explains how to load data from AlloyDB to BigQuery so that you can take advantage of both platforms for data analysis and reporting. We begin with an overview of AlloyDB and BigQuery.


AlloyDB – The Source

AlloyDB, available on Google Cloud Platform (GCP), is a fully managed, PostgreSQL-compatible database service. Its performance and scalability are built into the system, which makes it a good fit for business-critical applications. Because it is compatible with PostgreSQL, it works with familiar PostgreSQL tools and drivers, which has helped make it a popular choice for organizations across industries.

Some of the key features of AlloyDB include:

- High-Performance Transactions and Queries: AlloyDB is optimized to handle both read and write workloads efficiently. It scales to meet the needs of demanding applications, ensuring high-performance transactions and queries.

- Automatic Storage Management: AlloyDB eliminates the need for manual storage provisioning. The solution provides flexibility and reduces the complexity of storage management by automatically scaling storage based on the needs of the application.

- Built-in Replication and Disaster Recovery: AlloyDB continuously replicates data across multiple zones to provide data resiliency. This built-in replication reduces the impact of outages on the system by providing a level of disaster recovery and improving high availability.

- Seamless Integration with GCP Services: AlloyDB connects easily with other Google Cloud Platform services. You can combine it with BigQuery, Cloud Functions, and Dataflow to build rich, efficient data pipelines.

Google BigQuery – The Destination

Launched in May 2010, Google BigQuery is a fully managed, serverless data warehouse that is part of Google Cloud Platform (GCP). Its ability to process and analyze large volumes of data quickly makes it an effective tool for organizations dealing with big data.

It operates as a platform as a service (PaaS), giving you a stable environment for scalable data analysis across large datasets. Several key features make it an attractive choice for managing data in the cloud.

Let’s look into some of BigQuery’s key features.

- Data Management: Google BigQuery makes it easy to create and delete objects such as tables, views, and user-defined functions. It can also import data from Google Cloud Storage in a variety of formats, including CSV, Parquet, Avro, and JSON, giving you the flexibility to work with many data sources and structures.

- Querying: Queries are written in a dialect of SQL, so anyone familiar with standard SQL syntax can use BigQuery. Results are returned in JSON with a maximum response length of 128 MB, or with no size limit when large query results are enabled, which gives you flexibility for varying data volumes and complexities.

- Machine Learning: With BigQuery ML, you can create and run machine learning models using SQL queries. Because no additional tools or platforms are needed, this integrated approach simplifies adding machine learning to data analysis workflows.

- In-Memory Analysis Service (BI Engine): BigQuery includes BI Engine, an in-memory analysis service that delivers sub-second query response times with high concurrency, so users can interactively analyze large, complex datasets. This improves the speed and effectiveness of data exploration and analysis.

How to Load Data From AlloyDB to BigQuery

To migrate data from AlloyDB to BigQuery, you can use one of the following two methods.

- Method 1: Using Estuary Flow to connect AlloyDB to BigQuery

- Method 2: Manually connecting AlloyDB to BigQuery

Method 1: Using Estuary Flow to Connect AlloyDB to BigQuery

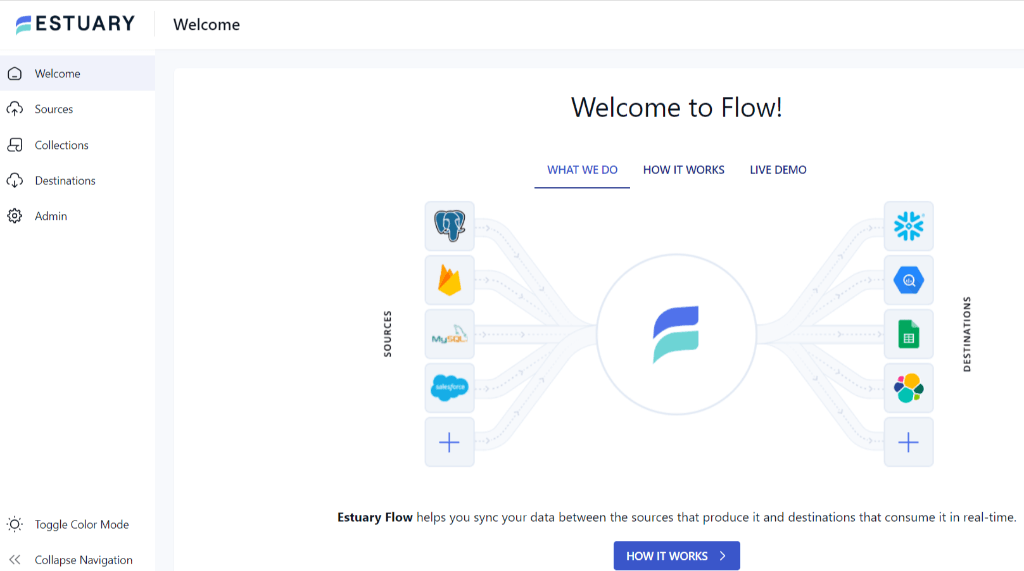

Here is a step-by-step tutorial on replicating data from AlloyDB to BigQuery using a real-time, no-code ETL (Extract, Transform, Load) platform called Estuary Flow.

Step 1: Configure AlloyDB as the Source

- Go to Estuary’s official website, and sign in to your existing account or create a new account.

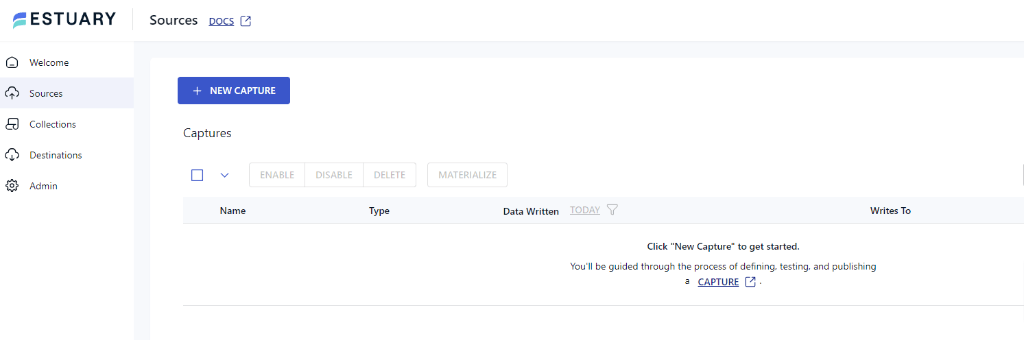

- Navigate to the main dashboard and click the Sources option on the left-side pane.

- On the Sources page, click on the + NEW CAPTURE option located at the top left.

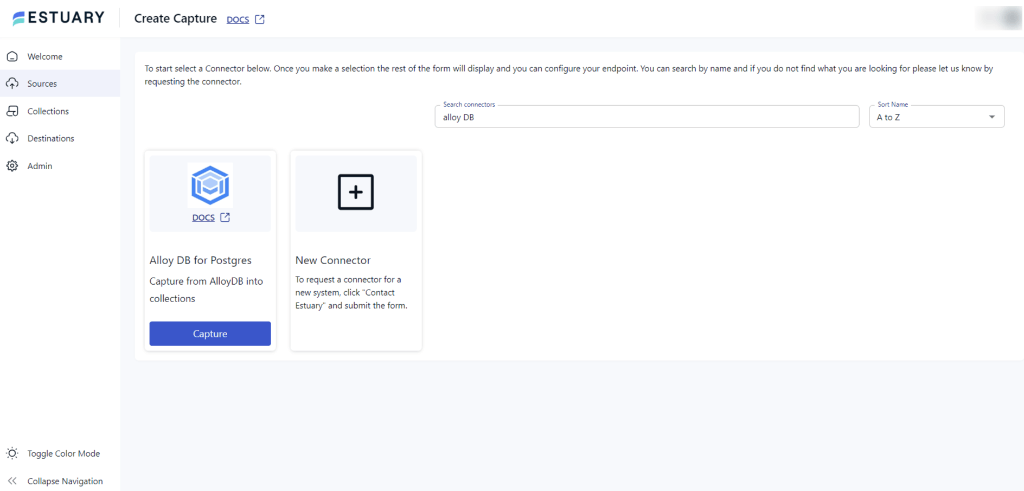

- Type AlloyDB in the Search connectors box to locate the connector. When you see the AlloyDB connector in the search results, click on its Capture button.

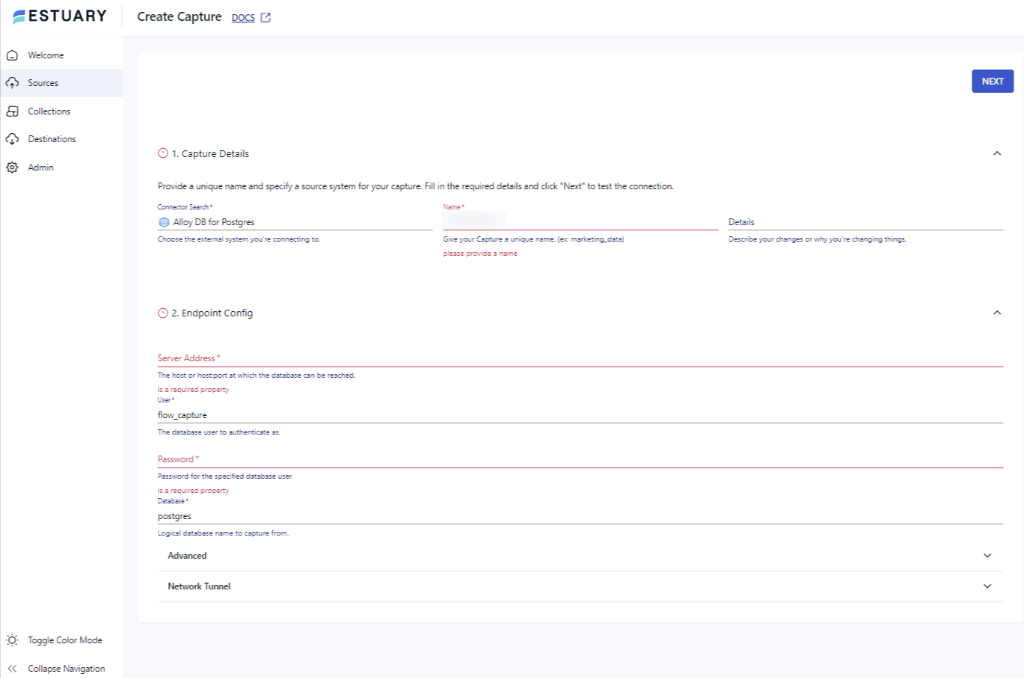

- Enter the Name, Server Address, User, and Password on the Create Capture page.

- Click on NEXT > SAVE AND PUBLISH to finish configuring AlloyDB as your Source.

Step 2: Configure Google BigQuery as the Destination

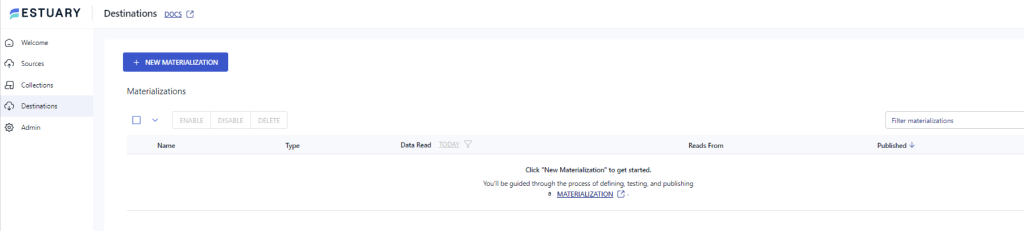

- After setting up the source, click the Destinations option on the left-side pane of the dashboard.

- Find and click the + NEW MATERIALIZATION button on the Destinations page.

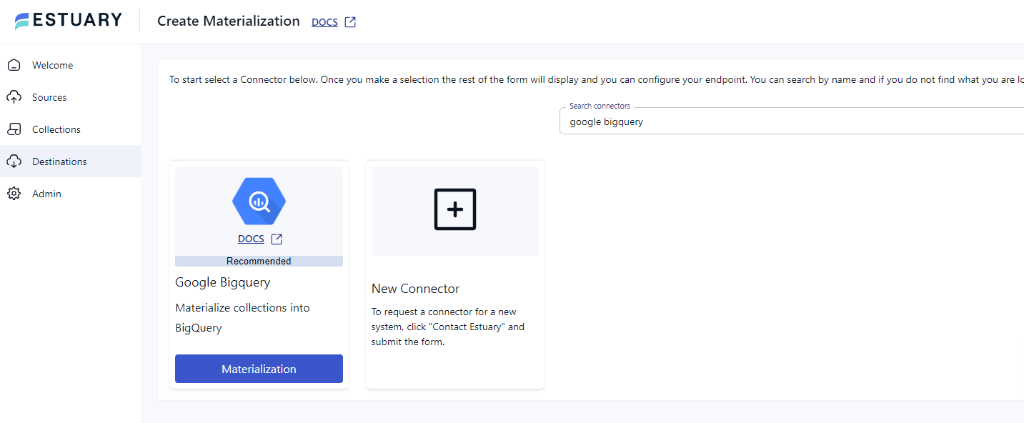

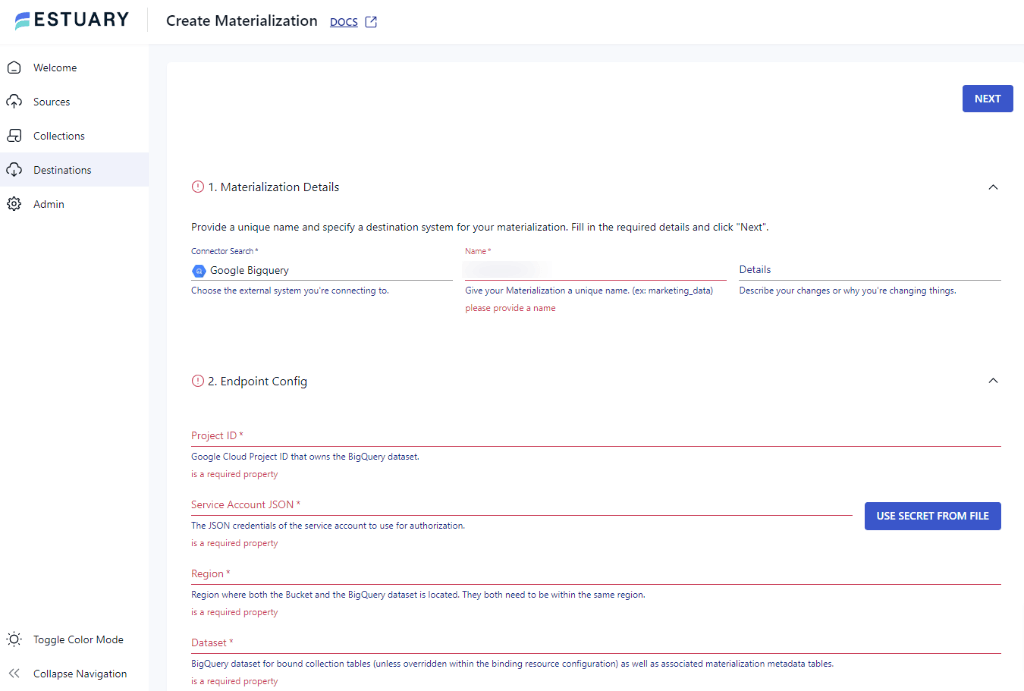

- Use the Search connectors field to look for the Google BigQuery connector. When it appears in the search results, click on its Materialization button.

- You’ll be redirected to the Create Materialization page. Fill in the essential fields, such as Name, Project ID, Service Account JSON, and Region, then click NEXT > SAVE AND PUBLISH to finish configuring BigQuery as your destination.

This final step completes the data loading from AlloyDB to BigQuery with Estuary Flow. To understand Estuary Flow better, refer to the Estuary Flow documentation.

Key Features of Estuary Flow

- Extensive Connector Library: Estuary Flow provides a diverse library of 150+ pre-built connectors, so you can move data from multiple sources into targets without writing code.

- Data Cleansing: Flow can cleanse data in flight, which helps maintain data integrity and quality by ensuring that only accurate data reaches the target database.

- Minimal Technical Knowledge: With Flow, you can complete the migration from AlloyDB to BigQuery in just a few clicks, making it suitable for professionals at any level of technical expertise.

- User-Friendly Interface: Flow offers a straightforward and easy-to-use interface for data migration tasks.

- Complete Data History Tracking: Flow keeps track of the entire history of your data during the migration process.

Method 2: Manually Connecting AlloyDB to BigQuery

You can also choose to manually load data from AlloyDB to Google BigQuery by following these steps:

Step 1: Export Data from AlloyDB

First, export the data from your AlloyDB database. Using AlloyDB's export capability, you can generate data files in a format BigQuery can load, such as CSV or newline-delimited JSON.
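Because AlloyDB is PostgreSQL-compatible, a standard client-side `COPY ... TO STDOUT` is one way to produce such a CSV file without touching the server's file system. The following is a minimal sketch, not AlloyDB's built-in export feature; it assumes the `psycopg2` driver, network access to the instance (for example through the AlloyDB Auth Proxy), and hypothetical names such as `export_table_to_csv` and `public.orders`.

```python
from typing import Optional, Sequence


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def build_copy_sql(table: str, columns: Optional[Sequence[str]] = None) -> str:
    """Build a COPY statement that streams a table as CSV with a header row.

    `table` may be schema-qualified ("public.orders"). Each part is quoted
    exactly as given, so pass names in their stored (usually lowercase) form.
    """
    target = ".".join(quote_ident(part) for part in table.split("."))
    if columns:
        target += " (" + ", ".join(quote_ident(c) for c in columns) + ")"
    return f"COPY {target} TO STDOUT WITH (FORMAT csv, HEADER true)"


def export_table_to_csv(dsn: str, table: str, out_path: str) -> None:
    """Stream `table` from AlloyDB into a local CSV file at `out_path`."""
    import psycopg2  # imported lazily so the SQL helpers work without the driver

    conn = psycopg2.connect(dsn)
    try:
        with conn.cursor() as cur, open(out_path, "w", encoding="utf-8", newline="") as f:
            # copy_expert runs the COPY and writes the streamed CSV rows into `f`.
            cur.copy_expert(build_copy_sql(table), f)
    finally:
        conn.close()
```

For example, `export_table_to_csv(os.environ["ALLOYDB_DSN"], "public.orders", "orders.csv")` would write `orders.csv` with a header row, which the BigQuery load can skip with its header-row option. `ALLOYDB_DSN` is a hypothetical environment variable holding a libpq connection string.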

Step 2: Create a New BigQuery Dataset

Navigate to the Google Cloud Console (console.cloud.google.com), open BigQuery, and create a new dataset to hold the imported data.
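The dataset can also be created programmatically. This sketch assumes the `google-cloud-bigquery` client library and Application Default Credentials; the project ID, dataset ID, and the `US` location are placeholders. Choose the location deliberately: a dataset's location cannot be changed later, and BigQuery expects the Cloud Storage bucket used for a load to be in a compatible location.

```python
import re

# Dataset IDs may contain letters, digits, and underscores, up to 1,024 characters.
_DATASET_ID = re.compile(r"[A-Za-z0-9_]{1,1024}")


def is_valid_dataset_id(dataset_id: str) -> bool:
    """Check a dataset ID against BigQuery's naming rules before calling the API."""
    return _DATASET_ID.fullmatch(dataset_id) is not None


def create_dataset(project_id: str, dataset_id: str, location: str = "US"):
    """Create the dataset if it does not already exist and return it."""
    if not is_valid_dataset_id(dataset_id):
        raise ValueError(f"invalid BigQuery dataset ID: {dataset_id!r}")

    from google.cloud import bigquery  # lazy import: only needed for the API call

    client = bigquery.Client(project=project_id)
    dataset = bigquery.Dataset(f"{project_id}.{dataset_id}")
    dataset.location = location  # fixed once the dataset exists
    # exists_ok=True makes re-running the script harmless.
    return client.create_dataset(dataset, exists_ok=True)
```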

Step 3: Upload Data to Cloud Storage

Upload the exported AlloyDB data file to Google Cloud Storage. Create a new bucket in Cloud Storage or use an existing one, then upload the file there.
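Uploading can be scripted with the Cloud Storage client library. The sketch assumes `google-cloud-storage`, an existing bucket you can write to, and an optional, hypothetical object prefix (such as `alloydb-exports`) for organizing files; it returns the `gs://` URI that a BigQuery load job expects as its source.

```python
import os
import posixpath


def object_name_for(local_path: str, prefix: str = "") -> str:
    """Derive the Cloud Storage object name for a local file under an optional prefix."""
    base = os.path.basename(local_path)
    prefix = prefix.strip("/")
    return posixpath.join(prefix, base) if prefix else base


def upload_to_gcs(bucket_name: str, local_path: str, prefix: str = "") -> str:
    """Upload `local_path` to the bucket and return its gs:// URI."""
    from google.cloud import storage  # lazy import: only needed for the upload

    name = object_name_for(local_path, prefix)
    bucket = storage.Client().bucket(bucket_name)  # a local handle; no API call yet
    bucket.blob(name).upload_from_filename(local_path)
    return f"gs://{bucket_name}/{name}"
```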

Step 4: Create a BigQuery Table

Within the dataset you created in Step 2, create a new BigQuery table and define its schema based on the structure of the exported data. You can create the table with the BigQuery web UI, the bq command-line tool, or the API.
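Because the export comes from PostgreSQL, the BigQuery schema can be derived from AlloyDB's `information_schema.columns`. The mapping below is a simplified, hypothetical starting point rather than an official conversion table: unknown types (including `json`, `jsonb`, and arrays) fall back to `STRING`, and `numeric` values beyond BigQuery `NUMERIC` precision would need `BIGNUMERIC` or `STRING`. Only `create_table` needs the `google-cloud-bigquery` library.

```python
from typing import Dict, Iterable, List, Tuple

# Simplified PostgreSQL -> BigQuery type mapping (an assumption; extend as needed).
PG_TO_BQ: Dict[str, str] = {
    "smallint": "INT64",
    "integer": "INT64",
    "bigint": "INT64",
    "numeric": "NUMERIC",
    "real": "FLOAT64",
    "double precision": "FLOAT64",
    "boolean": "BOOL",
    "text": "STRING",
    "character varying": "STRING",
    "character": "STRING",
    "uuid": "STRING",
    "date": "DATE",
    "time without time zone": "TIME",
    "timestamp without time zone": "DATETIME",
    "timestamp with time zone": "TIMESTAMP",
}

# Run this against AlloyDB (with schema and table name parameters) to get
# the rows that schema_from_columns() expects.
COLUMNS_SQL = """
SELECT column_name, data_type, is_nullable
FROM information_schema.columns
WHERE table_schema = %s AND table_name = %s
ORDER BY ordinal_position
"""


def to_bigquery_type(pg_type: str) -> str:
    """Map an information_schema data_type to a BigQuery type, defaulting to STRING."""
    return PG_TO_BQ.get(pg_type.strip().lower(), "STRING")


def schema_from_columns(columns: Iterable[Tuple[str, str, str]]) -> List[dict]:
    """Turn (column_name, data_type, is_nullable) rows into BigQuery schema dicts."""
    return [
        {
            "name": name,
            "type": to_bigquery_type(data_type),
            "mode": "NULLABLE" if is_nullable == "YES" else "REQUIRED",
        }
        for name, data_type, is_nullable in columns
    ]


def create_table(table_id: str, schema: List[dict]):
    """Create `project.dataset.table` with the given schema if it does not exist."""
    from google.cloud import bigquery  # lazy import: only needed for the API call

    fields = [bigquery.SchemaField(c["name"], c["type"], mode=c["mode"]) for c in schema]
    table = bigquery.Table(table_id, schema=fields)
    return bigquery.Client().create_table(table, exists_ok=True)
```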

Step 5: Load Data into BigQuery

Start the load from Cloud Storage into BigQuery with the BigQuery web UI, the bq command-line tool, or the API. Specify the Cloud Storage location of the source data file, the destination BigQuery table, and any other required parameters, such as the file format and whether to skip a header row.
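With the `bq` command-line tool, the load could look like the sketch below, which only assembles the command and runs it. It assumes the Google Cloud CLI (which ships `bq`) is installed and authenticated, a CSV file with one header row, and placeholder dataset, table, and bucket names. `--replace` overwrites the table's existing rows; leave it out to append instead.

```python
import subprocess
from typing import List, Optional


def build_bq_load_command(
    table: str,
    source_uri: str,
    schema_file: Optional[str] = None,
    location: Optional[str] = None,
    replace: bool = True,
) -> List[str]:
    """Assemble a `bq load` command for a CSV file with a header row."""
    cmd = ["bq"]
    if location:
        cmd.append(f"--location={location}")  # global flag: must precede `load`
    cmd += ["load", "--source_format=CSV", "--skip_leading_rows=1"]
    if replace:
        cmd.append("--replace")  # overwrite existing rows instead of appending
    cmd += [table, source_uri]
    if schema_file:
        # Optional when the table already exists with the right schema.
        cmd.append(schema_file)
    return cmd


def run_load(cmd: List[str]) -> None:
    """Run the command; check=True raises CalledProcessError if bq fails."""
    subprocess.run(cmd, check=True)
```

For example, `run_load(build_bq_load_command("alloydb_export.orders", "gs://my-bucket/orders.csv", location="US"))` would load one exported file into an existing table.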

Step 6: Monitor the Data Load Process

Monitor the load job to verify that it succeeds. You can view the job's progress and status in the BigQuery web UI or with the monitoring tools in the Google Cloud Console.
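Load jobs can also be checked from code. This sketch assumes the `google-cloud-bigquery` library and a job ID copied from the `bq` output or the console; `describe_status` is a hypothetical helper that turns a job's state, error, and loaded row count into a one-line summary. In BigQuery, a job that reaches `DONE` with an error result has failed.

```python
from typing import Optional


def describe_status(job_id: str, state: str, error_result: Optional[dict],
                    output_rows: Optional[int]) -> str:
    """Summarize a BigQuery job's state, error, and loaded row count in one line."""
    if state != "DONE":
        return f"{job_id}: {state}"  # still PENDING or RUNNING
    if error_result:
        return f"{job_id}: FAILED ({error_result.get('message', 'unknown error')})"
    rows = "unknown" if output_rows is None else output_rows
    return f"{job_id}: DONE, {rows} rows loaded"


def check_load_job(job_id: str, location: Optional[str] = None) -> str:
    """Fetch a job by ID and describe its current status."""
    from google.cloud import bigquery  # lazy import: only needed for the API call

    job = bigquery.Client().get_job(job_id, location=location)
    # output_rows exists only on load jobs; getattr keeps other job types working.
    return describe_status(job.job_id, job.state, job.error_result,
                           getattr(job, "output_rows", None))
```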

When the data load process is finished, your AlloyDB data will be available in BigQuery for additional analysis and querying.
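A quick sanity check after loading is to compare row counts on both sides. This sketch assumes `psycopg2` and `google-cloud-bigquery`, a full-snapshot load (so the counts should match exactly), and hypothetical helper names; it reads the BigQuery count from table metadata instead of running a query.

```python
def counts_report(table: str, source_rows: int, dest_rows: int) -> str:
    """Compare AlloyDB and BigQuery row counts for one table."""
    if source_rows == dest_rows:
        return f"{table}: OK ({source_rows} rows)"
    return f"{table}: MISMATCH (AlloyDB {source_rows}, BigQuery {dest_rows})"


def alloydb_row_count(dsn: str, table: str) -> int:
    """Count rows in an AlloyDB table; each name part is quoted as given."""
    import psycopg2  # lazy import: only needed for the database call

    # Quoting (with embedded quotes doubled) keeps the identifier safe to interpolate.
    quoted = ".".join('"' + p.replace('"', '""') + '"' for p in table.split("."))
    conn = psycopg2.connect(dsn)
    try:
        with conn.cursor() as cur:
            cur.execute(f"SELECT count(*) FROM {quoted}")
            return cur.fetchone()[0]
    finally:
        conn.close()


def bigquery_row_count(table_id: str) -> int:
    """Read the row count from BigQuery table metadata."""
    from google.cloud import bigquery  # lazy import: only needed for the API call

    return bigquery.Client().get_table(table_id).num_rows
```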

Limitations of Manual Data Loading

- Time-Consuming: Manual data loading can be time-consuming, especially for large datasets or many tables, because every export, upload, and load step must be run by hand and repeated whenever the source data changes.

- Prone to Errors: Manual processes are more prone to human errors, such as a mistyped table name, a mismatched schema, or a skipped file, which can jeopardize data integrity.

- Data Integration Difficulties: Manually loaded data can be hard to integrate with other sources or databases, limiting your ability to combine disparate datasets seamlessly.

- Data Inconsistency Risk: Without automated systems, guaranteeing consistency across several data sources becomes difficult, sometimes leading to discrepancies in the loaded data.

- Possibility of Data Security Issues: Exported files handled manually can be exposed to unauthorized access or accidental disclosure, especially if suitable security measures are not followed.

Takeaways

As businesses navigate the complexity of data management, integrating AlloyDB with BigQuery shows how data-driven solutions keep evolving. As this article has shown, importing data from AlloyDB to BigQuery bridges your operational database and your analytics warehouse, enabling a seamless and reliable exchange of information.

No-code SaaS tools like Estuary Flow speed up the migration process and let you connect AlloyDB to BigQuery in a few clicks. You can also transfer the data manually, which is more involved but reliable.

For hassle-free AlloyDB to BigQuery migration, we recommend using Estuary. Its comprehensive functionalities and interactive user interface automate the majority of the procedure. Sign in or register for free to get started with Estuary Flow today!

FAQs

What is the difference between AlloyDB and BigQuery?

AlloyDB is a fully managed, PostgreSQL-compatible database designed for high-throughput transactional workloads, with fast data loading and parallel processing capabilities. BigQuery, on the other hand, is a fully managed, serverless cloud data warehouse whose automatically scaling architecture makes it well suited to processing large-scale datasets and running complex analytics.

How to load data into BigQuery?

Common methods for loading data into BigQuery include loading files from Google Cloud Storage, using no-code ETL tools such as Estuary Flow, and uploading CSV or JSON files directly through the BigQuery web UI.
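As an illustration of the direct-upload route, a local CSV or newline-delimited JSON file can be loaded with the BigQuery client's `load_table_from_file`. This is a sketch assuming `google-cloud-bigquery`, schema auto-detection, a header row in CSV files, and a hypothetical `source_format_for` helper that picks the format from the file extension.

```python
import os

# Extension -> BigQuery source format (JSON files must be newline-delimited).
_FORMATS = {
    ".csv": "CSV",
    ".json": "NEWLINE_DELIMITED_JSON",
    ".jsonl": "NEWLINE_DELIMITED_JSON",
    ".ndjson": "NEWLINE_DELIMITED_JSON",
}


def source_format_for(path: str) -> str:
    """Pick a BigQuery source format from the file extension."""
    ext = os.path.splitext(path)[1].lower()
    try:
        return _FORMATS[ext]
    except KeyError:
        raise ValueError(f"unsupported file type: {path}") from None


def load_local_file(path: str, table_id: str):
    """Upload a local file into `project.dataset.table` and wait for the job."""
    from google.cloud import bigquery  # lazy import: only needed for the API call

    fmt = source_format_for(path)
    config = bigquery.LoadJobConfig(source_format=fmt, autodetect=True)
    if fmt == "CSV":
        config.skip_leading_rows = 1  # assumes the file has a header row
    client = bigquery.Client()
    with open(path, "rb") as f:  # the client expects a binary file object
        job = client.load_table_from_file(f, table_id, job_config=config)
    return job.result()  # blocks until done; raises if the load fails
```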

When to use AlloyDB?

AlloyDB is ideal for demanding workloads that require superior performance, scalability, and availability. It suits applications that need high transactional throughput, large data volumes, or multiple read replicas.

About the author

Dani is a data professional with a rich background in data engineering and real-time data platforms. At Estuary, Dani focuses on promoting cutting-edge streaming solutions, helping to bridge the gap between technical innovation and developer adoption. With deep expertise in cloud-native and streaming technologies, Dani has successfully supported startups and enterprises in building robust data solutions.
