Businesses rely on different platforms for payment processing to facilitate seamless transactions and provide customers with varied payment options. However, understanding and analyzing payment-related information obtained from the different payment processing platforms will not only provide insights into your customers but also support a comprehensive analysis of your business performance.

Stripe is one of the leading payment processing platforms that can handle anything from one-time transactions to subscription billing. Moving data from Stripe to BigQuery can uncover the true potential of your payment data by seamlessly integrating these two platforms, allowing you to gain a real-time view of your finances.

In this guide, we'll cover an overview of both platforms and explore two methods for integrating Stripe with BigQuery, including a step-by-step process for each.

What is Stripe?

Stripe is a payment processing platform that enables you to accept and manage online payments. It offers different services for online payments, invoices, among others. However, Stripe primarily acts as a payment processing service, allowing your business to accept different payment methods. This includes credit and debit cards, Google Pay, Apple Pay, and more across web and mobile applications. Stripe helps ensure secure payment processing with any of these payment methods by using its ML algorithms and advanced fraud detection mechanisms.

Organizations that want to launch faster often use Stripe to manage the entire payment workflow end-to-end. To integrate the Stripe payment gateway with your website or application, you can leverage its well-documented API or plugins.

What is BigQuery?

Google BigQuery is a fully-managed, serverless data warehouse and analytics platform that can handle petabyte-scale, real-time data processing.

BigQuery separates storage and compute resources, allowing you to scale storage and processing power independently for a cost-effective solution. Unlike traditional database systems that store their data in rows, BigQuery stores its data in columns. As a result, BigQuery will only read the relevant columns for query execution, processing each column independently and in parallel. This can significantly reduce response times and provide faster insights.

With parallel processing, a distributed architecture, and columnar storage, BigQuery can deliver fast query results even on large datasets.

Methods to Move Data from Stripe to BigQuery

There are two different methods to load data from Stripe to BigQuery:

- Method #1: Move data from Stripe to BigQuery using custom scripts

- Method #2: Use no-code integration tools like Estuary

Method #1: Moving Data from Stripe to BigQuery Using Custom Scripts

This integration method involves the manual extraction of Stripe data using its API and then loading it into BigQuery.

You can use Stripe’s REST API to access, store, and retrieve data. The API supports core resources, including balance, charges, customers, events, and tokens. All of these resources support CRUD operations by using HTTP verbs on the endpoints. You can use CURL, Postman, or an HTTP client to access the Stripe API.

Here are the different steps involved in this method:

Step 1: Extract Data from Stripe

Let’s use CURL for this tutorial and assume you want to perform some churn analysis for your company. To do this, you require customer data that indicates when they have canceled their subscriptions. You can access the customer objects in Stripe with the following command:

plaintextcurl https://api.stripe.com/v1/charges?limit=3 -u sk_test_BQokikJOvBiI2HlWgH4olfQ2:A typical response to this command will look like this:

plaintext{ "object": "list", "url": "/v1/charges", "has_more": false, "data": [ { "id": "ch_17SY5f2eZvKYlo2CiPfbfz4a", "object": "charge", "amount": 500, "amount_refunded": 0, "application_fee": null, "balance_transaction": "txn_17KGyT2eZvKYlo2CoIQ1KPB1", "captured": true, "created": 1452627963, "currency": "usd", "customer": null, "description": "thedude@grepinnovation.com Account Credit", "destination": null, "dispute": null, "failure_code": null, "failure_message": null, "fraud_details": { }, …….The customer object, with a list of subscription objects, in the JSON document will look like this:

plaintext{ "id": "sub_7hy2fgATDfYnJS", "object": "subscription", "application_fee_percent": null, "cancel_at_period_end": false, "canceled_at": null, "current_period_end": 1455306419, "current_period_start": 1452628019, "customer": "cus_7hy0yQ55razJrh", "discount": null, "ended_at": null, "metadata": { }, "plan": { "id": "gold2132", "object": "plan", "amount": 2000, "created": 1386249594, "currency": "usd", "interval": "month", "interval_count": 1, "livemode": false, "metadata": { }, "name": "Gold ", "statement_descriptor": null, "trial_period_days": null }, "quantity": 1, "start": 1452628019, "status": "active", "tax_percent": null, "trial_end": null, "trial_start": null }You can save the JSON document to your local machine.

Step 2: Prepare the Stripe Data for BigQuery

Google BigQuery supports CSV and JSON data formats. If the API used to extract Stripe data returns XML, you will need to transform it into CSV or JSON to load it into BigQuery.

Additionally, you must ensure the data types you’re using are ones supported by BigQuery, including:

- Integer

- String

- Float

- Boolean

- Record

- Timestamp

Step 3: Load Data into BigQuery

To load the data from your local machine, you can use the Google Cloud console. Here are the steps to follow:

- On the BigQuery page of the Google Cloud console, expand your project in the Explorer panel, and select a dataset.

- Click on the three vertical dots (View Actions) and select Open.

- Click on Create table in the details panel.

- In the Source section of the Create table page,

- Select Upload for Create table from.

- For Select file, click on Browse.

- After locating the file, click Open.

- For File format, select JSON.

- In the Destination section of the Create table page,

- Choose the appropriate project for Project.

- Choose the appropriate dataset for Dataset.

- In the Table field, provide a name for the table you’re creating.

- Verify that the Table type is Native table.

- In the Schema section, you can check Auto-detect.

- Click Create Table.

Alternatively, if you choose to load data into Google Cloud Storage (GCS) before loading it into BigQuery, you will need to provide the Cloud Storage URI. You can then use a POST request or create a ‘Load Job’ in BigQuery pointing to your source data in Cloud Storage. You can also refer to the documentation for more information on how to load data into BigQuery for different data formats.

This successfully loads your data from Stripe to Google BigQuery.

Limitations of Using Custom Script Method

- Custom scripts require continual maintenance, especially when there are updates or changes to any of the APIs.

- Writing custom scripts requires technical expertise and dedicated resources for maintenance.

- You must be able to handle errors, like network issues, failed API requests, and other inconsistencies in custom scripts. This is essential for data integrity and preventing data loss.

Method #2: Using a No-Code Integration Tool like Estuary Flow

You can overcome the limitations associated with custom scripts by leveraging ETL (Extract, Transform, Load) tools like Estuary Flow.

Estuary simplifies the process of creating a data integration pipeline with an easy-to-use interface and in-built connectors. Setting up a data pipeline using Estuary Flow will only take a few minutes, enabling seamless batch and streaming data ingestion, while enhancing data automation in your operations.

To use Flow for integrating Stripe to BigQuery, register for an Estuary account. If you’re already a registered user, sign in to your account and follow these steps:

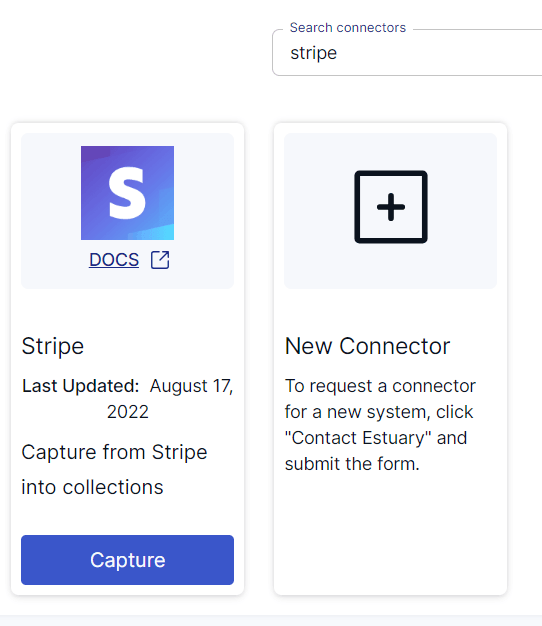

Step 1: Configure Stripe as the Source End of the Pipeline

Click on Sources on the Estuary dashboard. Now, click on the + NEW CAPTURE button. In the Search Connectors box, search for Stripe. The Stripe connector will appear in the search results. Click on the Capture button of the connector.

Image Source: Estuary

Before using the Stripe connector to capture data, there are a few prerequisites you need to complete. Read about the prerequisites here.

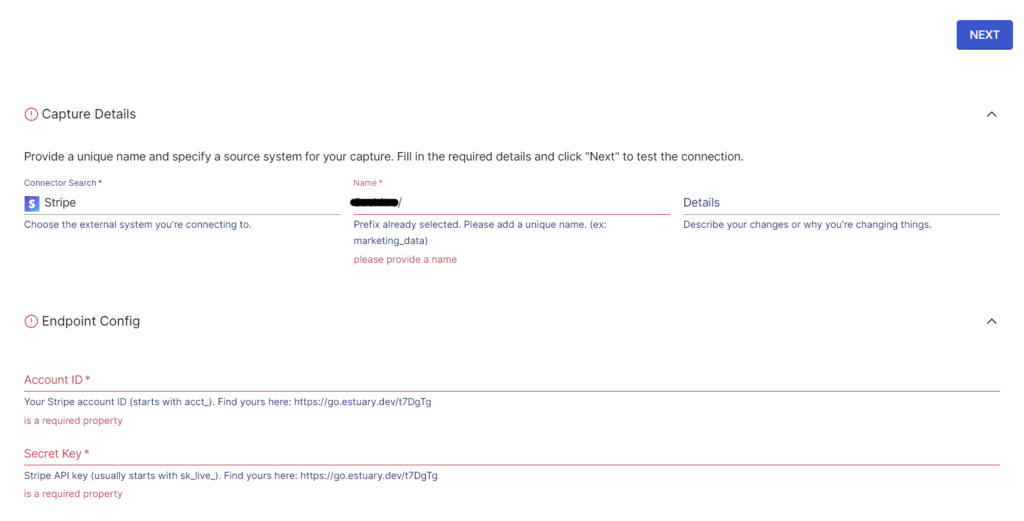

On the Stripe connector page, specify a Name for the connector, Account ID, Secret Key, and Replication start date. Then, click on NEXT, followed by Save and Publish.

Image Source: Estuary

The Stripe API supports multiple data resources, including:

- Balance transactions

- Checkout sessions

- Disputes

- Invoices

- Payment intents

- Subscriptions

- Transfers

By default, Flow maps each resource to a collection through a separate binding.

Step 2: Configure BigQuery as the Destination End of the Pipeline

Setting up BigQuery to connect with Flow involves a few prerequisites. Ensure you fulfill the prerequisites before proceeding.

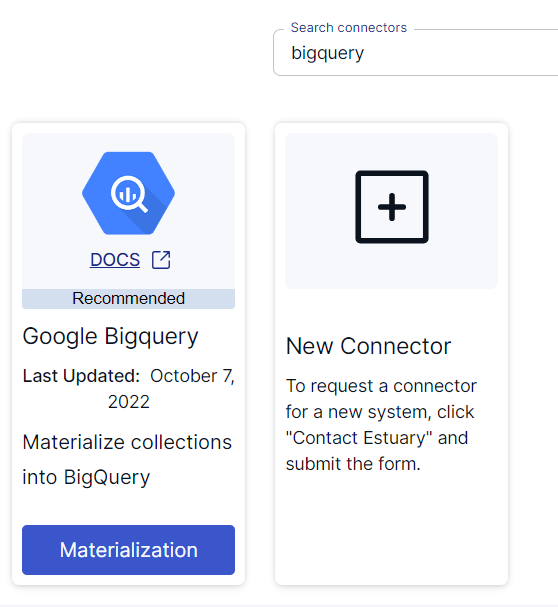

To set up the destination to load the data, click on Materialize Connection in the pop-up that follows a successful capture. Alternatively, you can navigate to the Estuary dashboard and click on Destinations, then click on + NEW MATERIALIZATION.

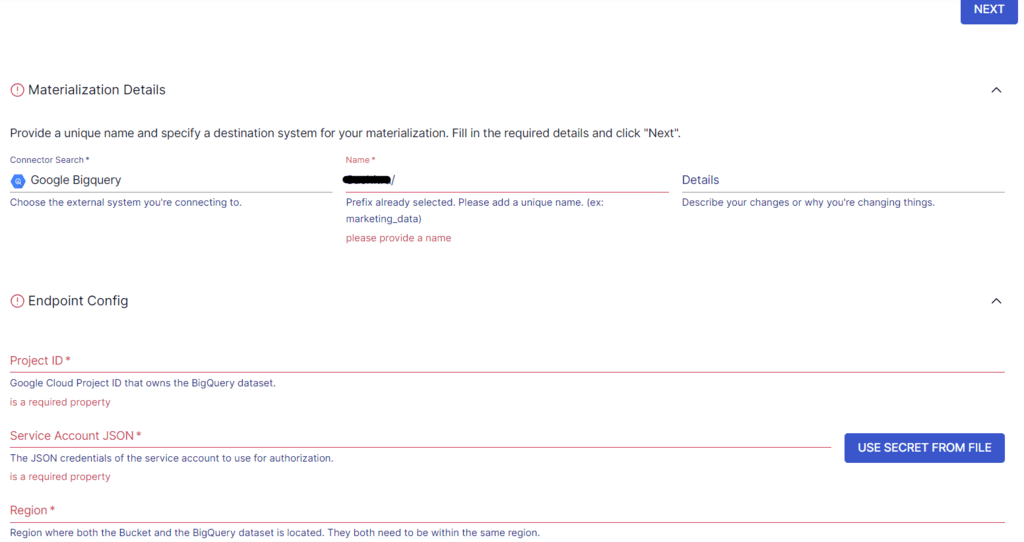

Search for BigQuery in the Search Connectors box, then click on the Materialization button of the Google Bigquery connector.

Image Source: Estuary

This will redirect you to the BigQuery connector page, where you must fill in the required fields. Some of the necessary details include a Name for the connector, Project ID, Region, Dataset, and Bucket.

Tip: You can use the Source Collections feature to select the Flow collections that you want to materialize.

Then, click on Next, followed by Save and Publish, once you’re done filling in the details.

The BigQuery connector will materialize Flow collections of the captured Stripe data into tables within a Google BigQuery dataset. It uses your GCS account to materialize to BigQuery tables and a GCS bucket as a temporary staging area for data storage and retrieval.

To learn more about the process, read the Estuary documentation below:

Conclusion

Integrating Stripe to BigQuery helps extract actionable insights from payment-related data. Combining Stripe’s robust payment processing capabilities and BigQuery’s advanced analytics will vastly improve data-driven decision-making. With real-time access to valuable payment information, you can tailor your marketing strategies, optimize pricing, and promote enhanced customer experiences.

The two different methods to move data from Stripe to BigQuery include using a custom script approach and using SaaS alternatives like Flow. There are, however, a few limitations associated with the custom script approach include resource-intensive workflows. Powerful no-code solutions like Estuary Flow help overcome such limitations, thanks to in-built connectors, low-latency processing, and real-time capabilities.

Estuary Flow provides automated and real-time integration capabilities to migrate data between any two platforms. Register for an Estuary account to experience the easier way of building data integration pipelines. Your first pipeline is free!

Interested in exploring more ways to seamlessly move your Stripe data into other destinations? Check out these guides:

About the author

With over 15 years in data engineering, a seasoned expert in driving growth for early-stage data companies, focusing on strategies that attract customers and users. Extensive writing provides insights to help companies scale efficiently and effectively in an evolving data landscape.

Popular Articles